WebRTC CreateOffer Source Code Analysis

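I. Preface

An earlier post covered the SDP protocol. WebRTC groups SDP fields into a session description and media descriptions (media information, network description, security description, and quality of service). Using CreateOffer as the example, this article walks through the source code to show how WebRTC generates SDP.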

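II. WebRTC SDP Class Structure

The diagram above shows how the WebRTC SDP classes relate to each other. Each SDP maps to one SessionDescription object in the WebRTC source, whose key members are of type ContentInfos, TransportInfos, and ContentGroups.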
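As a rough mental model of how these three containers relate to SDP text, the sketch below uses simplified stand-in structs. Every name ending in Sketch is hypothetical and is not the real WebRTC class. The serialization is heavily reduced (for example, the payload type list on the m= line is omitted). It only shows which container feeds which kind of line.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Simplified stand-ins for illustration only; the real WebRTC classes carry
// far more state than this.
struct ContentInfoSketch {
  std::string mid;         // content name, e.g. "0"
  std::string media_type;  // "audio" or "video"
  std::string protocol;    // e.g. "UDP/TLS/RTP/SAVPF"
};

struct TransportInfoSketch {
  std::string content_name;  // matches ContentInfoSketch::mid
  std::string ice_ufrag;
  std::string ice_pwd;
};

struct ContentGroupSketch {
  std::string semantics;  // e.g. "BUNDLE"
  std::vector<std::string> content_names;
};

struct SessionDescriptionSketch {
  std::vector<ContentInfoSketch> contents;
  std::vector<TransportInfoSketch> transport_infos;
  std::vector<ContentGroupSketch> content_groups;
};

// Emits a reduced SDP-like text: groups at session level, then one media
// section per content with the transport attributes that belong to it.
std::string ToSdpSketch(const SessionDescriptionSketch& desc) {
  std::ostringstream out;
  for (const auto& group : desc.content_groups) {
    out << "a=group:" << group.semantics;
    for (const auto& name : group.content_names) out << ' ' << name;
    out << "\r\n";
  }
  for (const auto& content : desc.contents) {
    // The payload type list that normally ends the m= line is omitted here.
    out << "m=" << content.media_type << " 9 " << content.protocol << "\r\n";
    for (const auto& transport : desc.transport_infos) {
      if (transport.content_name != content.mid) continue;
      out << "a=ice-ufrag:" << transport.ice_ufrag << "\r\n";
      out << "a=ice-pwd:" << transport.ice_pwd << "\r\n";
    }
    out << "a=mid:" << content.mid << "\r\n";
  }
  return out.str();
}
```

The point of the sketch is the lookup by content name: a TransportInfo is tied to its media section through the name it shares with the ContentInfo, and a ContentGroup refers to contents by the same names. The real member declarations follow.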

class SessionDescription {
  // ...
 private:
  ContentInfos contents_;
  TransportInfos transport_infos_;
  ContentGroups content_groups_;
  bool msid_supported_ = true;
  int msid_signaling_ = kMsidSignalingSsrcAttribute;
  bool extmap_allow_mixed_ = false;
};

ContentInfos (vector<ContentInfo>)

class RTC_EXPORT ContentInfo {
  // ...
  std::string name;
  MediaProtocolType type;
  bool rejected = false;
  bool bundle_only = false;

 private:
  friend class SessionDescription;
  std::unique_ptr<MediaContentDescription> description_;
};

class MediaContentDescription {
  // ...
 protected:
  bool rtcp_mux_ = false;
  bool rtcp_reduced_size_ = false;
  bool remote_estimate_ = false;
  int bandwidth_ = kAutoBandwidth;
  std::string protocol_;
  std::vector<CryptoParams> cryptos_;
  std::vector<webrtc::RtpExtension> rtp_header_extensions_;
  bool rtp_header_extensions_set_ = false;
  StreamParamsVec send_streams_;
  bool conference_mode_ = false;
  webrtc::RtpTransceiverDirection direction_ =
      webrtc::RtpTransceiverDirection::kSendRecv;
  rtc::SocketAddress connection_address_;
  // Mixed one- and two-byte header not included in offer on media level or
  // session level, but we will respond that we support it. The plan is to add
  // it to our offer on session level. See todo in SessionDescription.
  ExtmapAllowMixed extmap_allow_mixed_enum_ = kNo;
  SimulcastDescription simulcast_;
  std::vector<RidDescription> receive_rids_;
  absl::optional<std::string> alt_protocol_;
};

TransportInfos (vector<TransportInfo>)

struct TransportInfo {
  TransportInfo() {}
  TransportInfo(const std::string& content_name,
                const TransportDescription& description)
      : content_name(content_name), description(description) {}

  std::string content_name;
  TransportDescription description;
};

struct TransportDescription {
  // ...
  std::vector<std::string> transport_options;
  std::string ice_ufrag;
  std::string ice_pwd;
  IceMode ice_mode;
  ConnectionRole connection_role;
  std::unique_ptr<rtc::SSLFingerprint> identity_fingerprint;
  absl::optional<OpaqueTransportParameters> opaque_parameters;
};

ContentGroups (vector<ContentGroup>)

class ContentGroup {
  // ...
 private:
  std::string semantics_;
  ContentNames content_names_;
};

III. Generating SDP in WebRTC

WebRTC generates SDP in the following steps: capture local media, create a PeerConnection, add the media tracks to the PeerConnection with AddTrack, then call createOffer to produce the offer SDP (or createAnswer for an answer SDP).

CreatePeerConnection

The SDP that WebRTC generates lists the audio and video codecs it supports, for example a=rtpmap:111 opus/48000/2, a=rtpmap:103 ISAC/16000, and a=rtpmap:9 G722/8000. So how does it collect this codec information?

peer_connection_factory_ = webrtc::CreatePeerConnectionFactory(
    nullptr /* network_thread */, nullptr /* worker_thread */,
    nullptr /* signaling_thread */, nullptr /* default_adm */,
    webrtc::CreateBuiltinAudioEncoderFactory(),
    webrtc::CreateBuiltinAudioDecoderFactory(),
    webrtc::CreateBuiltinVideoEncoderFactory(),
    webrtc::CreateBuiltinVideoDecoderFactory(), nullptr /* audio_mixer */,
    nullptr /* audio_processing */);

Creating the PeerConnectionFactory takes the audio and video codec factories as arguments. Take the audio encoder factory as an example: CreateBuiltinAudioEncoderFactory calls the variadic template function CreateAudioEncoderFactory and passes in the encoder types this build supports (Opus, Isac, G722, Ilbc, G711, and so on, as shown below).

rtc::scoped_refptr<AudioEncoderFactory> CreateBuiltinAudioEncoderFactory() {
  return CreateAudioEncoderFactory<
#if WEBRTC_USE_BUILTIN_OPUS
      AudioEncoderOpus, NotAdvertised<AudioEncoderMultiChannelOpus>,
#endif
      AudioEncoderIsac, AudioEncoderG722,
#if WEBRTC_USE_BUILTIN_ILBC
      AudioEncoderIlbc,
#endif
      AudioEncoderG711, NotAdvertised<AudioEncoderL16>>();
}

template <typename... Ts>
rtc::scoped_refptr<AudioEncoderFactory> CreateAudioEncoderFactory() {
  // There's no technical reason we couldn't allow zero template parameters,
  // but such a factory couldn't create any encoders, and callers can do this
  // by mistake by simply forgetting the <> altogether. So we forbid it in
  // order to prevent caller foot-shooting.
  static_assert(sizeof...(Ts) >= 1,
                "Caller must give at least one template parameter");
  return rtc::scoped_refptr<AudioEncoderFactory>(
      new rtc::RefCountedObject<
          audio_encoder_factory_template_impl::AudioEncoderFactoryT<Ts...>>());
}

template <typename... Ts>
class AudioEncoderFactoryT : public AudioEncoderFactory {
 public:
  std::vector<AudioCodecSpec> GetSupportedEncoders() override {
    std::vector<AudioCodecSpec> specs;
    Helper<Ts...>::AppendSupportedEncoders(&specs);
    return specs;
  }

  absl::optional<AudioCodecInfo> QueryAudioEncoder(
      const SdpAudioFormat& format) override {
    return Helper<Ts...>::QueryAudioEncoder(format);
  }

  std::unique_ptr<AudioEncoder> MakeAudioEncoder(
      int payload_type,
      const SdpAudioFormat& format,
      absl::optional<AudioCodecPairId> codec_pair_id) override {
    return Helper<Ts...>::MakeAudioEncoder(payload_type, format, codec_pair_id);
  }
};

CreateAudioEncoderFactory returns an rtc::scoped_refptr<AudioEncoderFactory>. Inside CreatePeerConnectionFactory, this factory and the other codec factories are moved into a cricket::MediaEngineDependencies, which is then used to create the media engine.
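To make the variadic-template mechanism more concrete, here is a minimal, self-contained sketch of how a Helper-style class can walk a template parameter pack and let each encoder type append its own specs. All names ending in Sketch are hypothetical stand-ins. The real Helper also forwards QueryAudioEncoder and MakeAudioEncoder, which this sketch leaves out.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for AudioCodecSpec: just a name and a clock rate.
struct CodecSpecSketch {
  std::string name;
  int clockrate_hz;
};

// Hypothetical encoder types. Each one knows how to describe itself.
struct OpusSketch {
  static void AppendSupportedEncoders(std::vector<CodecSpecSketch>* specs) {
    specs->push_back({"opus", 48000});
  }
};
struct G722Sketch {
  static void AppendSupportedEncoders(std::vector<CodecSpecSketch>* specs) {
    specs->push_back({"G722", 8000});
  }
};
struct PcmuSketch {
  static void AppendSupportedEncoders(std::vector<CodecSpecSketch>* specs) {
    specs->push_back({"PCMU", 8000});
  }
};

// Primary template, specialized below.
template <typename... Ts>
struct HelperSketch;

// Base case: no types left, nothing to append.
template <>
struct HelperSketch<> {
  static void AppendSupportedEncoders(std::vector<CodecSpecSketch>*) {}
};

// Recursive case: handle the first type, then recurse on the rest, so the
// output order matches the order of the template arguments.
template <typename T, typename... Ts>
struct HelperSketch<T, Ts...> {
  static void AppendSupportedEncoders(std::vector<CodecSpecSketch>* specs) {
    T::AppendSupportedEncoders(specs);
    HelperSketch<Ts...>::AppendSupportedEncoders(specs);
  }
};

template <typename... Ts>
std::vector<CodecSpecSketch> SupportedEncodersSketch() {
  static_assert(sizeof...(Ts) >= 1,
                "Caller must give at least one template parameter");
  std::vector<CodecSpecSketch> specs;
  HelperSketch<Ts...>::AppendSupportedEncoders(&specs);
  return specs;
}
```

Calling SupportedEncodersSketch<OpusSketch, G722Sketch, PcmuSketch>() returns three specs in the order the types were listed. That ordering is why the template argument list doubles as a preference order.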

cricket::MediaEngineDependencies media_dependencies;
media_dependencies.task_queue_factory = dependencies.task_queue_factory.get();
media_dependencies.adm = std::move(default_adm);
media_dependencies.audio_encoder_factory = std::move(audio_encoder_factory);
media_dependencies.audio_decoder_factory = std::move(audio_decoder_factory);
if (audio_processing) {
  media_dependencies.audio_processing = std::move(audio_processing);
} else {
  media_dependencies.audio_processing = AudioProcessingBuilder().Create();
}
media_dependencies.audio_mixer = std::move(audio_mixer);
media_dependencies.video_encoder_factory = std::move(video_encoder_factory);
media_dependencies.video_decoder_factory = std::move(video_decoder_factory);

dependencies.media_engine =
    cricket::CreateMediaEngine(std::move(media_dependencies));

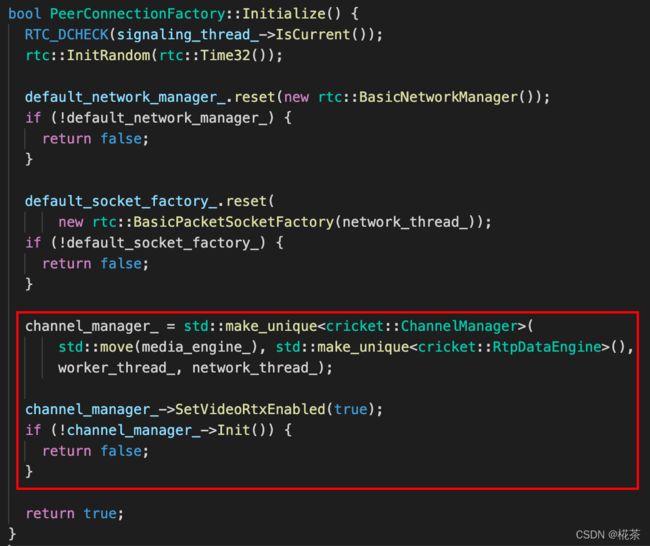

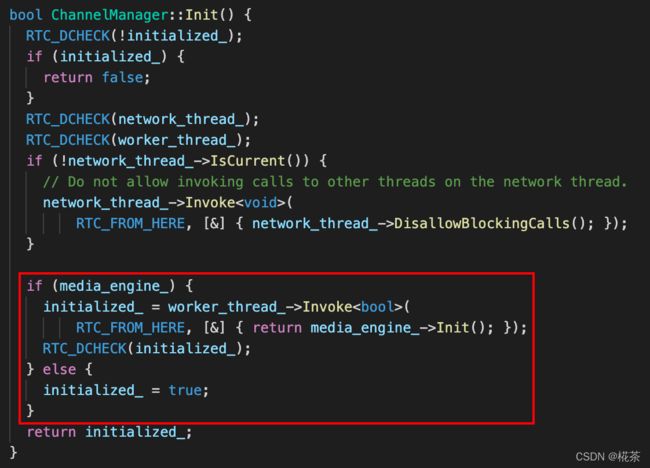

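return CreateModularPeerConnectionFactory(std::move(dependencies));

After the PeerConnectionFactory is created, PeerConnectionFactory::Initialize is called to create and initialize the ChannelManager. ChannelManager::Init initializes the media engine, which calls GetSupportedEncoders/GetSupportedDecoders on the audio and video codec factories to obtain the codec parameters supported by the codec types we passed in.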

void WebRtcVoiceEngine::Init() {
  // ...
  // Load our audio codec lists.
  RTC_LOG(LS_VERBOSE) << "Supported send codecs in order of preference:";
  send_codecs_ = CollectCodecs(encoder_factory_->GetSupportedEncoders());
  for (const AudioCodec& codec : send_codecs_) {
    RTC_LOG(LS_VERBOSE) << ToString(codec);
  }

  RTC_LOG(LS_VERBOSE) << "Supported recv codecs in order of preference:";
  recv_codecs_ = CollectCodecs(decoder_factory_->GetSupportedDecoders());
  for (const AudioCodec& codec : recv_codecs_) {
    RTC_LOG(LS_VERBOSE) << ToString(codec);
  }
  // ...
}

GetSupportedEncoders calls AppendSupportedEncoders, which appends the parameters of each supported encoder. For Opus, the SdpAudioFormat and AudioCodecInfo are built in AudioEncoderOpusImpl::AppendSupportedEncoders.
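To connect these codec specs back to the a=rtpmap lines mentioned earlier, the sketch below formats a codec description into rtpmap and fmtp attribute lines. AudioFormatSketch is a hypothetical stand-in for SdpAudioFormat, and both helper functions are illustrative rather than WebRTC's serializer. The payload type is passed in as a plain argument because it is assigned elsewhere and is not part of the format itself.

```cpp
#include <map>
#include <sstream>
#include <string>

// Hypothetical stand-in for webrtc::SdpAudioFormat.
struct AudioFormatSketch {
  std::string name;
  int clockrate_hz;
  int num_channels;
  std::map<std::string, std::string> parameters;
};

// Builds "a=rtpmap:<pt> <name>/<clockrate>[/<channels>]". The channel count
// is written only when there is more than one channel.
std::string RtpmapLineSketch(int payload_type, const AudioFormatSketch& fmt) {
  std::ostringstream out;
  out << "a=rtpmap:" << payload_type << ' ' << fmt.name << '/'
      << fmt.clockrate_hz;
  if (fmt.num_channels > 1) out << '/' << fmt.num_channels;
  return out.str();
}

// Builds "a=fmtp:<pt> key=value;key=value", or an empty string when the
// format has no parameters. std::map iterates keys in sorted order.
std::string FmtpLineSketch(int payload_type, const AudioFormatSketch& fmt) {
  if (fmt.parameters.empty()) return "";
  std::ostringstream out;
  out << "a=fmtp:" << payload_type << ' ';
  bool first = true;
  for (const auto& kv : fmt.parameters) {
    if (!first) out << ';';
    out << kv.first << '=' << kv.second;
    first = false;
  }
  return out.str();
}
```

With the Opus values from the AudioEncoderOpusImpl::AppendSupportedEncoders excerpt that follows (name "opus", 48000 Hz, 2 channels, minptime=10 and useinbandfec=1) and payload type 111, the first helper yields a=rtpmap:111 opus/48000/2 and the second yields a=fmtp:111 minptime=10;useinbandfec=1.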

void AudioEncoderOpusImpl::AppendSupportedEncoders(
    std::vector<AudioCodecSpec>* specs) {
  const SdpAudioFormat fmt = {"opus",
                              kRtpTimestampRateHz,
                              2,
                              {{"minptime", "10"}, {"useinbandfec", "1"}}};
  const AudioCodecInfo info = QueryAudioEncoder(*SdpToConfig(fmt));
  specs->push_back({fmt, info});
}

Once the PeerConnectionFactory has been initialized, a PeerConnection can be created through CreatePeerConnection. PeerConnection::Initialize creates a WebRtcSessionDescriptionFactory object, and createOffer later uses this object to build the SDP.

bool PeerConnection::Initialize(
    const PeerConnectionInterface::RTCConfiguration& configuration,
    PeerConnectionDependencies dependencies) {
  // ...
  webrtc_session_desc_factory_.reset(new WebRtcSessionDescriptionFactory(
      signaling_thread(), channel_manager(), this, session_id(),
      std::move(dependencies.cert_generator), certificate, &ssrc_generator_));
  webrtc_session_desc_factory_->SignalCertificateReady.connect(
      this, &PeerConnection::OnCertificateReady);

  if (options.disable_encryption) {
    webrtc_session_desc_factory_->SetSdesPolicy(cricket::SEC_DISABLED);
  }

  webrtc_session_desc_factory_->set_enable_encrypted_rtp_header_extensions(
      GetCryptoOptions().srtp.enable_encrypted_rtp_header_extensions);
  webrtc_session_desc_factory_->set_is_unified_plan(IsUnifiedPlan());
  // ...
}

The flow described above is summarized in the following diagram.

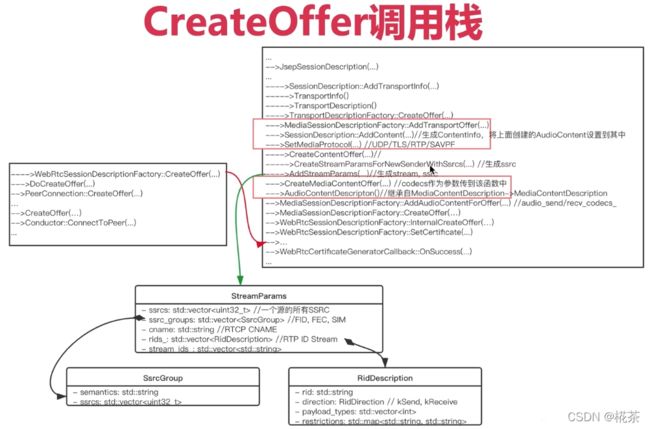

CreateOffer

When the upper layer calls PeerConnection::CreateOffer, generation of the offer SDP begins. It calls PeerConnection::DoCreateOffer, which in turn calls WebRtcSessionDescriptionFactory::CreateOffer to create the offer SDP.

void PeerConnection::DoCreateOffer(
    const RTCOfferAnswerOptions& options,
    rtc::scoped_refptr<CreateSessionDescriptionObserver> observer) {
  // ...
  cricket::MediaSessionOptions session_options;
  GetOptionsForOffer(options, &session_options);
  webrtc_session_desc_factory_->CreateOffer(observer, options, session_options);

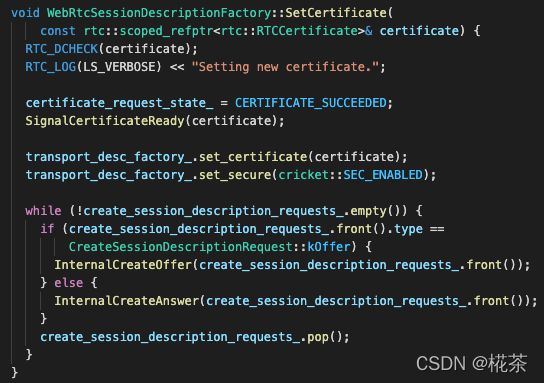

}

WebRtcSessionDescriptionFactory::CreateOffer pushes a request to create an offer-type SDP onto create_session_description_requests_. Once the worker thread has finished generating the certificate, WebRtcSessionDescriptionFactory::SetCertificate is called back; it pops the request and runs WebRtcSessionDescriptionFactory::InternalCreateOffer to create the offer SDP.
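The "queue the request, run it once the certificate is ready" pattern described above can be sketched in a few lines. This is a simplified, single-threaded model under stated assumptions: OfferFactorySketch and its members are hypothetical names, the callback stands in for the observer, and the real factory tracks more states and also handles answers and failures.

```cpp
#include <cstddef>
#include <functional>
#include <queue>
#include <string>
#include <utility>

// Hypothetical, simplified model of deferring offer creation until a
// certificate is available. Not a WebRTC API.
class OfferFactorySketch {
 public:
  using Callback = std::function<void(const std::string& sdp_type)>;

  // Queues the request; if the certificate is already available, the queue
  // is drained right away, otherwise the request waits.
  void CreateOffer(Callback on_done) {
    pending_.push(std::move(on_done));
    if (certificate_ready_) Flush();
  }

  // Called when certificate generation has finished.
  void SetCertificate() {
    certificate_ready_ = true;
    Flush();
  }

  std::size_t pending() const { return pending_.size(); }

 private:
  // Pops every queued request and "creates" the description for it.
  void Flush() {
    while (!pending_.empty()) {
      Callback done = std::move(pending_.front());
      pending_.pop();
      done("offer");
    }
  }

  bool certificate_ready_ = false;
  std::queue<Callback> pending_;
};
```

In this model, a CreateOffer call made before SetCertificate stays in the queue and completes only when the certificate arrives, while a call made afterwards completes immediately.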

WebRtcSessionDescriptionFactory::InternalCreateOffer calls MediaSessionDescriptionFactory::CreateOffer.

MediaSessionDescriptionFactory::CreateOffer adds the audio and video SDP information. AddAudioContentForOffer is shown here as an example.

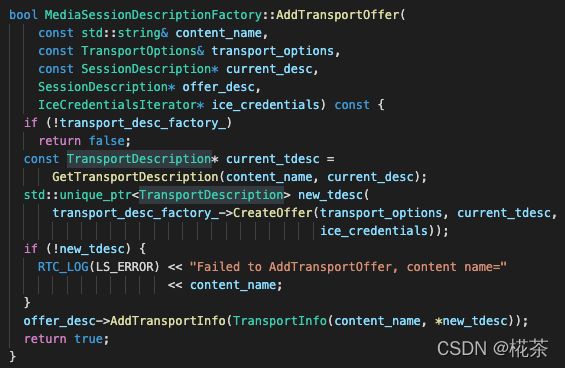

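After the media information has been added, AddTransportOffer is called to create the transport-related information.

The flow described above is summarized in the following diagram.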