HAProxy High Availability with keepalived, and Split-Brain Monitoring


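Contents

- Split brain
  - Causes of split brain
  - Common solutions to split brain
  - Monitoring for split brain
- Deploying keepalived for HAProxy high availability and split-brain monitoring
  - HAProxy high availability with keepalived
    - Deploying the web service on the RSs
    - Deploying HAProxy load balancing on the LBs
    - Deploying keepalived high availability on the LBs
    - Configuring keepalived with a check script for semi-automatic master/backup switchover
  - Monitoring split brain with Zabbix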


For an introduction to keepalived and high availability, see my earlier post "keepalived high availability".

Let's start with split brain.

Split brain

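In a high-availability (HA) system, when the "heartbeat link" connecting the two nodes breaks, what used to be a single, coordinated HA system splits into two independent parts. Having lost contact, each node assumes the other has failed. Like a "split-brain" patient, the HA software on the two nodes fights over the "shared resources" and the "application services", with serious consequences: either the shared resources are carved up and neither side's service can start, or both sides' services start and read and write the "shared storage" at the same time, corrupting data (a common example is errors in a database's cyclically used online redo logs).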

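The generally agreed countermeasures against split brain in HA systems are roughly the following:

- Add redundant heartbeat links, for example two heartbeat cables (making the heartbeat itself highly available), to minimize the chance of split brain.

- Enable disk locking. The node that is serving locks the shared disk so that, when split brain occurs, the other node cannot take the shared disk away. Disk locking has a sizable problem of its own, though: if the node holding the shared disk never releases the lock, the other node can never obtain the disk. In practice, a serving node that suddenly hangs or crashes cannot run the unlock command, so the standby node cannot take over the shared resources and application services. Some HA designs therefore use a "smart" lock: the serving node only enables the disk lock when it detects that all heartbeat links are down (it can no longer see its peer), and does not lock the disk otherwise.

- Set up an arbitration mechanism, for example a reference IP such as the gateway IP. When the heartbeat links are completely down, each node pings the reference IP. A node that cannot reach it knows the break is on its own side: its local network link, which carries both the heartbeat and the external service traffic, is down, so starting (or continuing to run) the application service there is pointless. That node should give up and let the node that can ping the reference IP run the service. To be even safer, the node that cannot ping the reference IP can simply reboot itself to fully release any shared resources it may still hold.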
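The reference-IP arbitration in the last item can be sketched as a small shell function. This is a minimal illustration, not part of the setup built later in this article: `REF_IP` (a hypothetical gateway address for this lab network) and the function names `probe_ref_ip` and `arbitrate` are assumptions.

```shell
#!/bin/bash
# Minimal sketch of reference-IP arbitration (hypothetical, not from the original setup).
# REF_IP is an assumed reference address, e.g. the gateway; override it via the environment.
REF_IP="${REF_IP:-192.168.92.2}"

# probe_ref_ip: succeeds if REF_IP answers ping.
# Kept as a separate function so it can be replaced (e.g. for testing).
probe_ref_ip() {
    ping -c 3 -W 1 "$REF_IP" >/dev/null 2>&1
}

# arbitrate: prints "keep" if this node can still reach the reference IP,
# or "yield" if it cannot (the broken link is most likely on this side).
arbitrate() {
    if probe_ref_ip; then
        echo "keep"
    else
        echo "yield"
    fi
}
```

A node that prints `yield` after losing its peer's heartbeat would then stop competing, for example with `systemctl stop keepalived`, or reboot if you follow the more aggressive variant described above.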

Causes of split brain

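Generally speaking, split brain has the following causes:

- The heartbeat link between the HA server pair fails, so the nodes cannot communicate normally, for example because:
  - the heartbeat cable is faulty (broken or aged)
  - the NIC or its driver is faulty, or the IP configuration is wrong or conflicting (with directly connected NICs)
  - a device on the heartbeat path fails (NIC or switch)
  - the arbitration machine fails (when an arbitration scheme is used)

- An iptables firewall enabled on the HA servers blocks the heartbeat messages

- The heartbeat NIC address or related settings on the HA servers are misconfigured, so heartbeats cannot be sent

- Other misconfigured services, such as mismatched heartbeat methods, heartbeat broadcast conflicts, or software bugs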

Note:

If the two ends of the same VRRP instance in the Keepalived configuration use different virtual_router_id values, split brain can also occur.
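Since a `virtual_router_id` mismatch is easy to overlook, a quick consistency check between the two nodes' configuration files can help. The sketch below is an illustration, not part of the original setup: `vrid_of` and `same_vrid` are hypothetical helper names, and it assumes each file contains a single `vrrp_instance` (only the first `virtual_router_id` found is compared).

```shell
#!/bin/bash
# vrid_of FILE: print the first virtual_router_id value found in FILE.
# awk's default field splitting ignores the leading indentation.
vrid_of() {
    awk '$1 == "virtual_router_id" { print $2; exit }' "$1"
}

# same_vrid FILE_A FILE_B: exit 0 if both files declare the same (non-empty) VRID.
same_vrid() {
    local a b
    a=$(vrid_of "$1")
    b=$(vrid_of "$2")
    [[ -n "$a" && "$a" == "$b" ]]
}
```

For example, after copying LB02's file to LB01 with `scp root@192.168.92.128:/etc/keepalived/keepalived.conf /tmp/lb02.conf`, running `same_vrid /etc/keepalived/keepalived.conf /tmp/lb02.conf && echo match || echo MISMATCH` shows whether the IDs agree.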

Common solutions to split brain

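In production, split brain can be prevented in the following ways:

- Connect the nodes with both a serial cable and an Ethernet cable, i.e. use two heartbeat links at the same time, so that if one link fails the other can still carry heartbeat messages.

- When split brain is detected, forcibly shut down one of the nodes (this requires special device support, such as STONITH or fencing devices). In effect, when the backup node stops receiving heartbeat messages, it sends a shutdown command over a separate link to cut the master node's power.

- Set up monitoring and alerting for split brain (email, SMS, or on-call staff) so that a person can step in and arbitrate as soon as the problem occurs, reducing losses. Baidu's alert SMS, for example, is two-way: the alert is sent to the administrator's phone, the administrator replies with a number or a short string, and the reply is passed back to the server, which then handles the fault automatically according to that instruction. This shortens the time needed to resolve the fault.

Of course, when implementing an HA solution, decide based on the actual business requirements whether such losses are tolerable. For ordinary website workloads they usually are.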

Monitoring for split brain

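Split brain is monitored on the backup server by adding a Zabbix custom item.

What do we monitor? Whether the VIP is present on the backup.

The VIP can appear on the backup in two situations:

- split brain has occurred

- a normal master/backup switchover has taken place

This check therefore only signals that split brain may have occurred; it cannot prove it, because the VIP also moves to the backup during a normal switchover.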
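Because of this ambiguity, a more specific signal is to look at both nodes: during split brain both of them hold the VIP at the same time, while after a normal switchover only the backup does. The sketch below only encodes that decision. It assumes you can collect the VIP status of both LB01 and LB02 (for example by deploying the same check script on both hosts as two Zabbix items, which this article does not do), and the function name `classify_vip` is hypothetical. If the master is unreachable its status cannot be collected, so this complements rather than replaces the backup-side check.

```shell
#!/bin/bash
# classify_vip MASTER_HAS_VIP BACKUP_HAS_VIP
# Each argument is 1 (the node holds the VIP) or 0 (it does not),
# e.g. the output of the check script run on LB01 and on LB02.
classify_vip() {
    case "$1$2" in
        10) echo "normal" ;;       # VIP only on the master
        01) echo "failover" ;;     # VIP moved to the backup
        11) echo "split-brain" ;;  # both nodes hold the VIP
        00) echo "no-vip" ;;       # neither node holds the VIP
        *)  echo "unknown"; return 1 ;;
    esac
}
```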

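The monitoring script is as follows:

When configuring the Zabbix trigger, it is advisable for the script to echo 1 or 0, so that the value can easily be compared to fire an alert.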

[root@slave ~]# mkdir -p /scripts && cd /scripts

[root@slave scripts]# vim check_keepalived.sh

#!/bin/bash

if [ `ip a show ens32 |grep 192.168.92.200|wc -l` -ne 0 ]

then

echo "keepalived is error!"

else

echo "keepalived is OK !"

fi

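When writing the script, change the NIC name and the VIP to your own. Finally, don't forget to make the script executable and to change the owner and group of the /scripts directory to zabbix.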
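The grep above treats the VIP as a regular expression and matches it anywhere in the output, so with a VIP such as 192.168.92.20 it would also match an address 192.168.92.200. A stricter variant is sketched below; the function names `vip_present` and `check_vip` are hypothetical, and `IFACE`/`VIP` default to this lab's values. It prints 1 or 0 as recommended for the Zabbix trigger.

```shell
#!/bin/bash
# Sketch of a stricter VIP check for the Zabbix item (an alternative to the grep above).
# IFACE and VIP default to this lab's values; override them for your environment.
IFACE="${IFACE:-ens32}"
VIP="${VIP:-192.168.92.200}"

# vip_present ADDR: read `ip addr` output on stdin and succeed only if it
# contains an "inet ADDR/prefix" entry. -F makes grep match a fixed string,
# so the dots in ADDR are not regex wildcards.
vip_present() {
    grep -qF "inet $1/"
}

# check_vip: print 1 if IFACE currently holds VIP, otherwise 0.
check_vip() {
    if ip -4 addr show dev "$IFACE" 2>/dev/null | vip_present "$VIP"; then
        echo 1
    else
        echo 0
    fi
}
```

To use it as /scripts/check_keepalived.sh, append a final line that calls `check_vip`.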

Deploying keepalived for HAProxy high availability and split-brain monitoring

Environment:

| Hostname | IP address | Installed software | OS |
|---|---|---|---|
| zabbix | 192.168.92.139 | LAMP stack, zabbix server, zabbix agent | CentOS 8 |
| LB01 | 192.168.92.130 | HAProxy, keepalived | CentOS 8 |
| LB02 | 192.168.92.128 | zabbix agent, HAProxy, keepalived | CentOS 8 |
| RS01 | 192.168.92.129 | apache | CentOS 8 |
| RS02 | 192.168.92.132 | nginx | CentOS 8 |

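Requirements for each host:

The zabbix host monitors LB01 and LB02 and emails the administrator when a master/backup switchover or split brain occurs.

LB01 and LB02 run HAProxy as load balancers, and keepalived makes the load balancers (LB) highly available (HA) in a one-master, one-backup setup.

LB01 is the master and LB02 is the backup. The VIP here is 192.168.92.200; in an enterprise production environment a public IP would be used as the VIP.

RS01 and RS02 are the backend real servers, i.e. the application servers that actually serve users.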

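Tip:

In this lab, the firewall and SELinux are disabled on all hosts and a YUM repository (a mirror inside China) is configured. These basic steps are not shown.

You need to prepare the Zabbix server yourself beforehand and make sure its web management interface is accessible.

To deploy Zabbix, see my other post "Zabbix deployment".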

HAProxy high availability with keepalived

Deploying the web service on the RSs

RS01 host configuration

[root@RS01 ~]# dnf -y install httpd

[root@RS01 ~]# echo 'RS1' > /var/www/html/index.html

[root@RS01 ~]# systemctl enable --now httpd

RS02 host configuration

[root@RS02 ~]# dnf -y install nginx

[root@RS02 ~]# echo 'RS2' > /usr/share/nginx/html/index.html

[root@RS02 ~]# systemctl enable --now nginx.service

Test whether the web services are reachable

#Access RS02 from RS01

[root@RS01 ~]# curl 192.168.92.132

RS2

#Access RS01 from RS02

[root@RS02 ~]# curl 192.168.92.129

RS1

Deploying HAProxy load balancing on the LBs

LB01 host configuration

[root@LB01 ~]# dnf -y install make gcc pcre-devel bzip2-devel openssl-devel systemd-devel

[root@LB01 ~]# useradd -Mrs /sbin/nologin haproxy

[root@LB01 ~]# cd /usr/local/src/

[root@LB01 src]# wget https://www.haproxy.org/download/2.6/src/haproxy-2.6.6.tar.gz

[root@LB01 src]# tar -xf haproxy-2.6.6.tar.gz

[root@LB01 src]# cd haproxy-2.6.6/

[root@LB01 haproxy-2.6.6]# make clean

[root@LB01 haproxy-2.6.6]# make -j $(grep 'processor' /proc/cpuinfo | wc -l) \

TARGET=linux-glibc \

USE_OPENSSL=1 \

USE_ZLIB=1 \

USE_PCRE=1 \

USE_SYSTEMD=1

[root@LB01 haproxy-2.6.6]# make install PREFIX=/usr/local/haproxy

[root@LB01 haproxy-2.6.6]# cp haproxy /usr/sbin/

[root@LB01 ~]# echo 'net.ipv4.ip_nonlocal_bind = 1' >> /etc/sysctl.conf

[root@LB01 ~]# echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf

[root@LB01 ~]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

[root@LB01 ~]# mkdir /etc/haproxy

[root@LB01 ~]# vim /etc/haproxy/haproxy.cfg

global

daemon

maxconn 256

defaults

mode http

timeout connect 5000ms

timeout client 50000ms

timeout server 50000ms

frontend http-in

bind *:80

default_backend servers

backend servers

server web1 192.168.92.129:80

server web2 192.168.92.132:80

[root@LB01 ~]# cat > /usr/lib/systemd/system/haproxy.service <<'EOF'

[Unit]

Description=HAProxy Load Balancer

After=syslog.target network.target

[Service]

ExecStartPre=/usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q

ExecStart=/usr/local/haproxy/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /var/run/haproxy.pid

ExecReload=/bin/kill -USR2 $MAINPID

[Install]

WantedBy=multi-user.target

EOF

[root@LB01 ~]# systemctl daemon-reload

[root@LB01 ~]# systemctl start haproxy.service

[root@LB01 ~]# ss -anlt

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

[root@LB01 ~]# curl 192.168.92.130

RS1

[root@LB01 ~]# curl 192.168.92.130

RS2

[root@LB01 ~]# curl 192.168.92.130

RS1

[root@LB01 ~]# curl 192.168.92.130

RS2
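Four requests show the alternation, but counting a larger sample makes the round-robin distribution easier to see. The sketch below is an illustration rather than part of the original steps: `fetch` and `tally` are hypothetical helper names, the default URL assumes LB01's address in this lab, and the counting assumes each response is a single word on its own line, as with the RS1/RS2 pages created above.

```shell
#!/bin/bash
# fetch N [URL]: send N requests to the HAProxy frontend and print each response body.
# The default URL is LB01's address in this lab; pass another URL to test a different node.
fetch() {
    local n="$1" url="${2:-http://192.168.92.130}" i
    for ((i = 0; i < n; i++)); do
        curl -s --max-time 2 "$url"
    done
}

# tally: read response bodies (one per line) on stdin and print "body count" pairs.
tally() {
    sort | uniq -c | awk '{ print $2, $1 }'
}
```

For example, run `fetch 10 | tally` on LB01. With HAProxy's default round-robin balancing and both backends healthy, the two counts should come out roughly equal.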

LB02 host configuration

[root@LB02 ~]# dnf -y install make gcc pcre-devel bzip2-devel openssl-devel systemd-devel

[root@LB02 ~]# useradd -Mrs /sbin/nologin haproxy

[root@LB02 ~]# cd /usr/local/src/

[root@LB02 src]# wget https://www.haproxy.org/download/2.6/src/haproxy-2.6.6.tar.gz

[root@LB02 src]# tar -xf haproxy-2.6.6.tar.gz

[root@LB02 src]# cd haproxy-2.6.6/

[root@LB02 haproxy-2.6.6]# make clean

[root@LB02 haproxy-2.6.6]# make -j $(grep 'processor' /proc/cpuinfo | wc -l) \

TARGET=linux-glibc \

USE_OPENSSL=1 \

USE_ZLIB=1 \

USE_PCRE=1 \

USE_SYSTEMD=1

[root@LB02 haproxy-2.6.6]# make install PREFIX=/usr/local/haproxy

[root@LB02 haproxy-2.6.6]# cp haproxy /usr/sbin/

[root@LB02 ~]# echo 'net.ipv4.ip_nonlocal_bind = 1' >> /etc/sysctl.conf

[root@LB02 ~]# echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf

[root@LB02 ~]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

[root@LB02 ~]# mkdir /etc/haproxy

[root@LB02 ~]# vim /etc/haproxy/haproxy.cfg

global

daemon

maxconn 256

defaults

mode http

timeout connect 5000ms

timeout client 50000ms

timeout server 50000ms

frontend http-in

bind *:80

default_backend servers

backend servers

server web1 192.168.92.129:80

server web2 192.168.92.132:80

[root@LB02 ~]# cat > /usr/lib/systemd/system/haproxy.service <<'EOF'

[Unit]

Description=HAProxy Load Balancer

After=syslog.target network.target

[Service]

ExecStartPre=/usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q

ExecStart=/usr/local/haproxy/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /var/run/haproxy.pid

ExecReload=/bin/kill -USR2 $MAINPID

[Install]

WantedBy=multi-user.target

EOF

[root@LB02 ~]# systemctl daemon-reload

[root@LB02 ~]# systemctl start haproxy.service

[root@LB02 ~]# ss -anlt

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

[root@LB02 ~]# curl 192.168.92.130

RS1

[root@LB02 ~]# curl 192.168.92.130

RS2

[root@LB02 ~]# curl 192.168.92.130

RS1

[root@LB02 ~]# curl 192.168.92.130

RS2

[root@LB02 ~]# systemctl stop haproxy.service

Deploying keepalived high availability on the LBs

LB01 host configuration

[root@LB01 ~]# strings /dev/urandom |tr -dc A-Za-z0-9 | head -c8; echo

avUSem2K

[root@LB01 ~]# dnf -y install keepalived

[root@LB01 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb01

}

vrrp_instance VI_1 {

state MASTER

interface ens32

virtual_router_id 81

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass avUSem2K

}

virtual_ipaddress {

192.168.92.200

}

}

virtual_server 192.168.92.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.92.130 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.92.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[root@LB01 ~]# systemctl enable --now keepalived.service

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

[root@LB01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:9e:e3:c1 brd ff:ff:ff:ff:ff:ff

inet 192.168.92.130/24 brd 192.168.92.255 scope global dynamic noprefixroute ens32

valid_lft 936sec preferred_lft 936sec

inet 192.168.92.200/32 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe9e:e3c1/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@LB01 ~]# curl 192.168.92.200

RS1

[root@LB01 ~]# curl 192.168.92.200

RS2

[root@LB01 ~]# curl 192.168.92.200

RS1

[root@LB01 ~]# curl 192.168.92.200

RS2
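The `auth_pass` value above was generated with the `strings /dev/urandom | tr -dc A-Za-z0-9 | head -c8` command at the start of this step. With `auth_type PASS`, keepalived only uses the first 8 characters of `auth_pass`, so 8 is the right length. A slightly simpler variant is sketched below (the function name `gen_pass` is hypothetical); it lets `tr` read `/dev/urandom` directly and forces the C locale so that `tr` treats the input as plain bytes.

```shell
#!/bin/bash
# gen_pass [LEN]: print a random alphanumeric string of LEN characters (default 8).
# keepalived uses only the first 8 characters of auth_pass with auth_type PASS.
gen_pass() {
    local len="${1:-8}"
    LC_ALL=C tr -dc 'A-Za-z0-9' </dev/urandom | head -c "$len"
    echo
}
```

Run `gen_pass` once and paste the same value into `auth_pass` on both LB01 and LB02.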

LB02 host configuration

[root@LB02 ~]# dnf -y install keepalived

[root@LB02 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb02

}

vrrp_instance VI_1 {

state BACKUP

interface ens32

virtual_router_id 81

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass avUSem2K

}

virtual_ipaddress {

192.168.92.200

}

}

virtual_server 192.168.92.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.92.130 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.92.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

Test keepalived high availability

[root@LB01 ~]# systemctl stop keepalived.service

[root@LB01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:9e:e3:c1 brd ff:ff:ff:ff:ff:ff

inet 192.168.92.130/24 brd 192.168.92.255 scope global dynamic noprefixroute ens32

valid_lft 1527sec preferred_lft 1527sec

inet6 fe80::20c:29ff:fe9e:e3c1/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@LB02 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:e4:f5:9d brd ff:ff:ff:ff:ff:ff

inet 192.168.92.128/24 brd 192.168.92.255 scope global dynamic noprefixroute ens32

valid_lft 1589sec preferred_lft 1589sec

inet 192.168.92.200/32 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fee4:f59d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Configuring keepalived with a check script for semi-automatic master/backup switchover

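Semi-automatic switchover here means: when HAProxy fails on the master keepalived (ka) node, the check script detects it and stops keepalived, and the backup ka automatically becomes the new master. When the old master has recovered and should become the master again, the system administrator has to switch it back manually.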
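The check script below stops keepalived itself. A common alternative, sketched here as a different design rather than the author's method, is to let the script only report health through its exit status: while it exits non-zero, keepalived subtracts the `weight` configured in `vrrp_script` from the priority (here 100 - 20 = 80, below LB02's 90), so the VIP moves to LB02. Note that this variant fails back automatically once HAProxy recovers, because LB01's priority returns to 100, so it is no longer semi-automatic. The function names `haproxy_alive` and `check_haproxy` are hypothetical.

```shell
#!/bin/bash
# Sketch of an exit-status-based health check (alternative design, not the article's script).
# While this exits non-zero, keepalived applies the vrrp_script "weight" (e.g. -20).

# haproxy_alive: succeed if a process named exactly "haproxy" appears in the
# process-name list read on stdin (one name per line, as printed by `ps -eo comm=`).
# Surrounding whitespace is tolerated; names like "haproxy-wrapper" do not match.
haproxy_alive() {
    grep -qE '^[[:space:]]*haproxy[[:space:]]*$'
}

# check_haproxy: exit status 0 if HAProxy is running, 1 otherwise.
check_haproxy() {
    ps -eo comm= | haproxy_alive
}
```

Saved as a script whose last line is `check_haproxy`, it could be referenced from the `vrrp_script` block instead of check_haproxy.sh; no `systemctl stop keepalived` is needed, because the priority drop alone moves the VIP.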

LB01 host configuration

Write the check scripts

[root@LB01 ~]# mkdir /scripts

[root@LB01 ~]# cd /scripts/

[root@LB01 scripts]# vim check_haproxy.sh

#!/bin/bash

haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)

if [ $haproxy_status -lt 1 ];then

systemctl stop keepalived

fi

[root@LB01 scripts]# chmod +x check_haproxy.sh

[root@LB01 scripts]# vim notify.sh

#!/bin/bash

case "$1" in

master)

haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)

if [ $haproxy_status -lt 1 ];then

systemctl start haproxy

fi

;;

backup)

haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)

if [ $haproxy_status -gt 0 ];then

systemctl stop haproxy

fi

;;

esac

[root@LB01 scripts]# chmod +x notify.sh
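The decision that notify.sh makes can be isolated into a pure function, which makes it easy to check without touching systemd. This is a hypothetical refactoring (the name `notify_action` is not from the original): it takes the new VRRP state and whether HAProxy is currently running, and prints the action the script would take.

```shell
#!/bin/bash
# notify_action STATE RUNNING
#   STATE:   "master" or "backup" (the argument notify.sh receives from keepalived)
#   RUNNING: "1" if HAProxy is currently running, "0" otherwise
# Prints the action notify.sh takes: "start", "stop" or "none".
notify_action() {
    case "$1" in
        master) if [ "$2" = 1 ]; then echo none; else echo start; fi ;;
        backup) if [ "$2" = 1 ]; then echo stop; else echo none; fi ;;
        *)      echo none ;;
    esac
}
```

Inside notify.sh this could be used as `action=$(notify_action "$1" "$running")`, followed by `systemctl "$action" haproxy` whenever the action is not `none`.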

Add the scripts to the keepalived configuration file

[root@LB01 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb01

}

# add the following five lines

vrrp_script haproxy_check {

script "/scripts/check_haproxy.sh"

interval 1

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface ens32

virtual_router_id 81

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass avUSem2K

}

virtual_ipaddress {

192.168.92.200

}

# add the following four lines

track_script {

haproxy_check

}

notify_master "/scripts/notify.sh master"

}

virtual_server 192.168.92.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.92.130 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.92.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[root@LB01 ~]# systemctl restart keepalived.service

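LB02 host configuration

The backup does not need to check whether HAProxy is healthy: it starts HAProxy when promoted to MASTER and stops it when demoted to BACKUP.

Write the notify script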

[root@LB02 ~]# mkdir /scripts

[root@LB02 ~]# cd /scripts/

[root@LB02 scripts]# vim notify.sh

#!/bin/bash

case "$1" in

master)

haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)

if [ $haproxy_status -lt 1 ];then

systemctl start haproxy

fi

;;

backup)

haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)

if [ $haproxy_status -gt 0 ];then

systemctl stop haproxy

fi

;;

esac

[root@LB02 scripts]# chmod +x notify.sh

Add the scripts to the keepalived configuration file

[root@LB02 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb02

}

vrrp_instance VI_1 {

state BACKUP

interface ens32

virtual_router_id 81

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass avUSem2K

}

virtual_ipaddress {

192.168.92.200

}

notify_master "/scripts/notify.sh master"

notify_backup "/scripts/notify.sh backup"

}

virtual_server 192.168.92.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.92.130 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.92.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[root@LB02 ~]# systemctl restart keepalived.service

Test: stop the load balancer manually to simulate a failure

#The VIP is currently on LB01, so it is still the master

[root@LB01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:9e:e3:c1 brd ff:ff:ff:ff:ff:ff

inet 192.168.92.130/24 brd 192.168.92.255 scope global dynamic noprefixroute ens32

valid_lft 1135sec preferred_lft 1135sec

inet 192.168.92.200/32 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe9e:e3c1/64 scope link noprefixroute

valid_lft forever preferred_lft forever

#Stop the HAProxy load balancer manually to simulate a failure

[root@LB01 ~]# systemctl stop haproxy.service

#Checking again shows that the VIP is gone and keepalived has been stopped automatically as well

[root@LB01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:9e:e3:c1 brd ff:ff:ff:ff:ff:ff

inet 192.168.92.130/24 brd 192.168.92.255 scope global dynamic noprefixroute ens32

valid_lft 1106sec preferred_lft 1106sec

inet6 fe80::20c:29ff:fe9e:e3c1/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@LB01 ~]# systemctl status keepalived.service

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: inactive (dead) since Mon 2022-10-10 01:33:40 CST; 1min 27s ago

#On LB02 the VIP is now present, so this host has become the master

[root@LB02 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:e4:f5:9d brd ff:ff:ff:ff:ff:ff

inet 192.168.92.128/24 brd 192.168.92.255 scope global dynamic noprefixroute ens32

valid_lft 1101sec preferred_lft 1101sec

inet 192.168.92.200/32 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fee4:f59d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

#Manually make LB01 the master again

[root@LB01 ~]# systemctl restart haproxy.service keepalived.service

#The VIP is back on LB01

[root@LB01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:9e:e3:c1 brd ff:ff:ff:ff:ff:ff

inet 192.168.92.130/24 brd 192.168.92.255 scope global dynamic noprefixroute ens32

valid_lft 1686sec preferred_lft 1686sec

inet 192.168.92.200/32 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe9e:e3c1/64 scope link noprefixroute

valid_lft forever preferred_lft forever

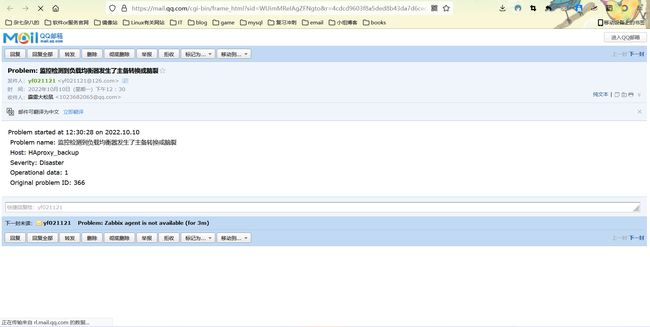

Monitoring split brain with Zabbix


[root@LB02 ~]# useradd -rMs /sbin/nologin zabbix

[root@LB02 ~]# cd /usr/local/src/

[root@LB02 src]# wget https://cdn.zabbix.com/zabbix/sources/stable/6.2/zabbix-6.2.2.tar.gz

[root@LB02 src]# tar -xf zabbix-6.2.2.tar.gz

[root@LB02 src]# cd zabbix-6.2.2/

[root@LB02 zabbix-6.2.2]# ./configure --enable-agent

............

***********************************************************

* Now run 'make install' *

* *

* Thank you for using Zabbix! *

* <http://www.zabbix.com> *

***********************************************************

[root@LB02 zabbix-6.2.2]# make && make install

[root@LB02 ~]# vim /usr/local/etc/zabbix_agentd.conf

.............

# set to the Zabbix server's IP

Server=192.168.92.139

.............

# set to the Zabbix server's IP

ServerActive=192.168.92.139

.............

# host name Zabbix uses for this agent; add the host under the same name in the web UI

Hostname=HAproxy_backup

.............

[root@LB02 ~]# zabbix_agentd

[root@LB02 ~]# ss -anlt

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 0.0.0.0:10050 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

Write the monitoring script and register it in the agent configuration file

[root@LB02 ~]# cd /scripts/

[root@LB02 scripts]# vim check_keepalived.sh

#!/bin/bash

if [ `ip a show ens32 |grep 192.168.92.200|wc -l` -ne 0 ];then

echo "1"

else

echo "0"

fi

[root@LB02 scripts]# chmod +x check_keepalived.sh

[root@LB02 scripts]# vim /usr/local/etc/zabbix_agentd.conf

...................

# add these two lines at the end of the file

UnsafeUserParameters=1

UserParameter=check_keepalived,/bin/bash /scripts/check_keepalived.sh

[root@LB02 scripts]# pkill zabbix_agentd

[root@LB02 scripts]# zabbix_agentd

[root@LB02 scripts]# ss -anlt

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 0.0.0.0:10050 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

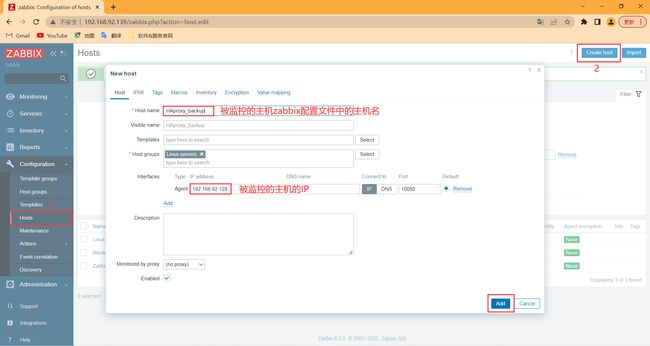

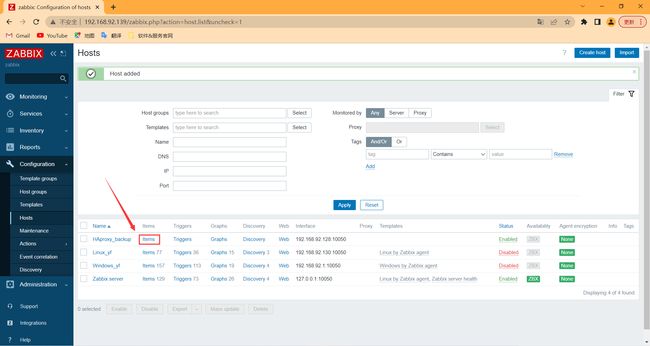

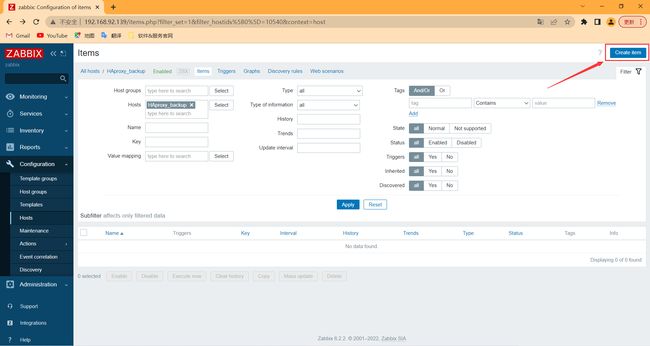

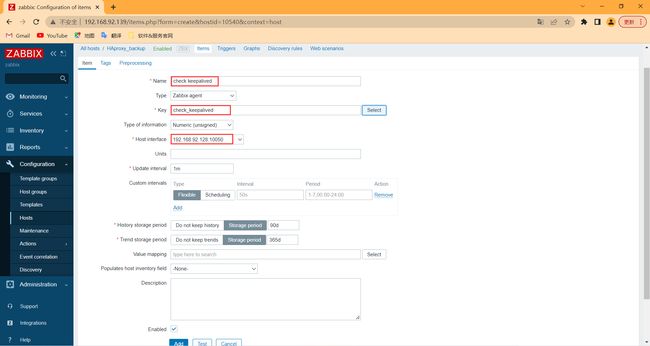

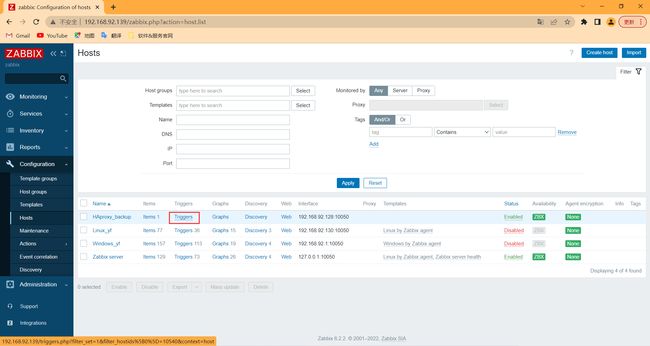

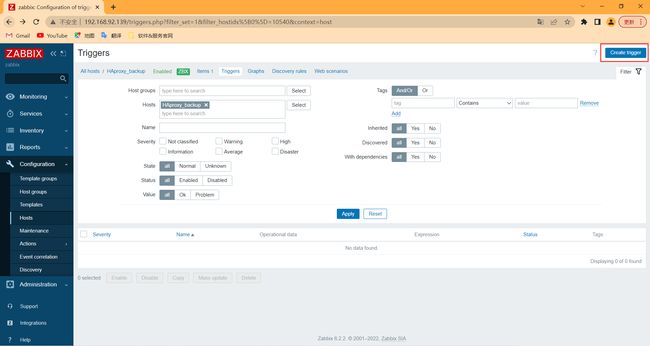

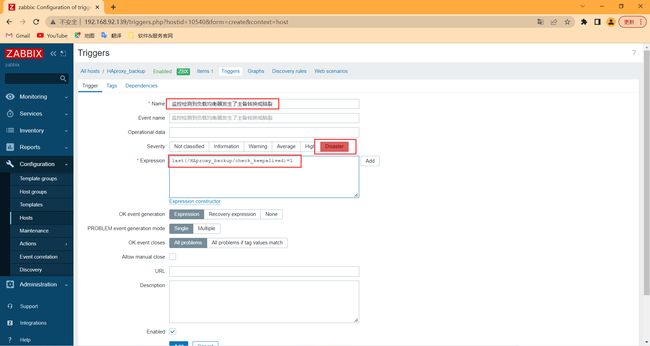

In the Zabbix server's web interface, add the host, the item, the trigger, and the email alert media type.

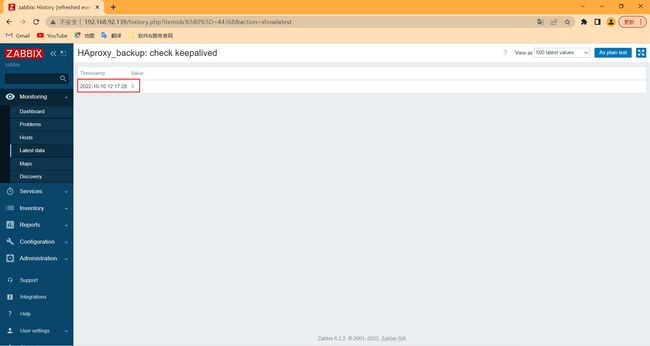

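You can see that one round of communication has completed (the item is active and the agent has reported the monitored value once).

Email alerting was already configured on this Zabbix server, so it is not demonstrated here. For how to set it up, see my post "Configuring email alerts".

Simulate a failure on LB01 (the master)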

[root@LB01 ~]# systemctl stop haproxy.service
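While running this failover test, it can help to log exactly when the VIP arrives on or leaves LB02. The sketch below is an illustration (the name `report_changes` is hypothetical): it reads a stream of 1/0 samples, such as the output of check_keepalived.sh polled once per second, and prints only the transitions.

```shell
#!/bin/bash
# report_changes: read 1/0 VIP samples from stdin (one per line) and print
# a message only when the value changes. The first sample sets the baseline.
report_changes() {
    local prev="" cur n=0
    while read -r cur; do
        n=$((n + 1))
        if [ -n "$prev" ] && [ "$cur" != "$prev" ]; then
            if [ "$cur" = "1" ]; then
                echo "sample $n: VIP acquired"
            else
                echo "sample $n: VIP released"
            fi
        fi
        prev="$cur"
    done
}
```

For example, on LB02: `while true; do /scripts/check_keepalived.sh; sleep 1; done | report_changes`. After HAProxy is stopped on LB01 you would expect a "VIP acquired" line shortly afterwards, and a "VIP released" line once LB01 takes the VIP back.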