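Hadoop Notes 04: Hadoop Yarn

Yarn Resource Scheduler

Yarn is a resource scheduling platform that provides server compute resources to computing programs. It acts like a distributed operating system, while computing programs such as MapReduce are like applications running on top of that operating system.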

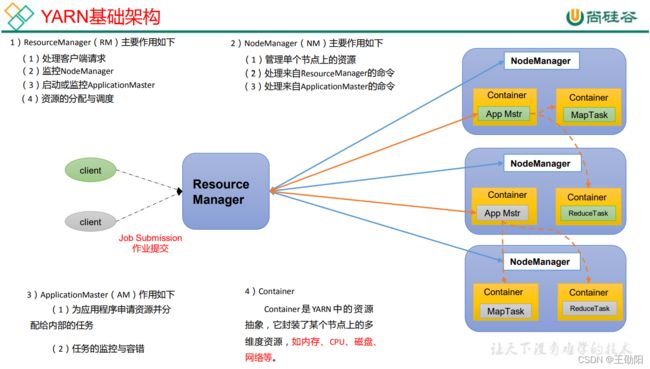

Yarn Architecture

Yarn consists mainly of the ResourceManager, NodeManager, ApplicationMaster, and Container components.

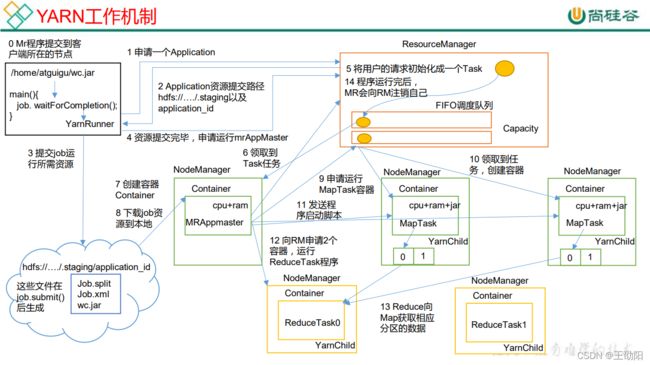

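How Yarn Works

- The MapReduce program is submitted on the node where the client runs

- YarnRunner asks the ResourceManager for an Application

- The ResourceManager returns the application's resource submission path to YarnRunner

- The program uploads the resources it needs to run to HDFS

- Once the resources are uploaded, the program requests to run MRAppMaster

- The ResourceManager initializes the user's request as a Task

- One of the NodeManagers picks up the Task

- That NodeManager creates a Container and launches MRAppMaster in it

- The Container copies the resources from HDFS to the local node

- MRAppMaster asks the ResourceManager for resources to run the MapTasks

- The ResourceManager assigns the MapTasks to two other NodeManagers, which create containers when they receive the tasks

- MRAppMaster sends the program startup script to the two NodeManagers that received tasks; they start the MapTasks, which partition and sort the data

- MRAppMaster waits for all MapTasks to finish, then asks the ResourceManager for containers to run the ReduceTasks

- The ReduceTasks pull the data of their partitions from the MapTasks

- When the program finishes, MRAppMaster asks the ResourceManager to unregister it and release its resources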

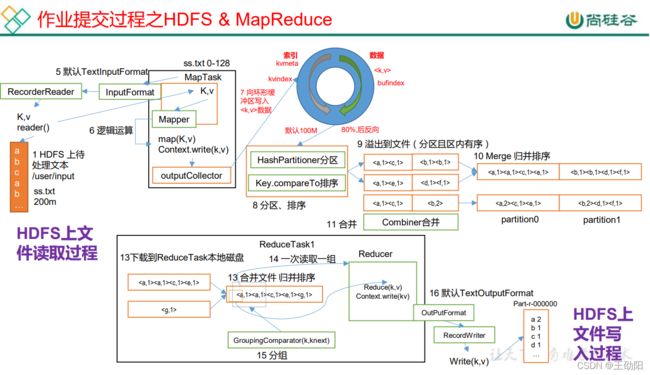

The Full Job Submission Process

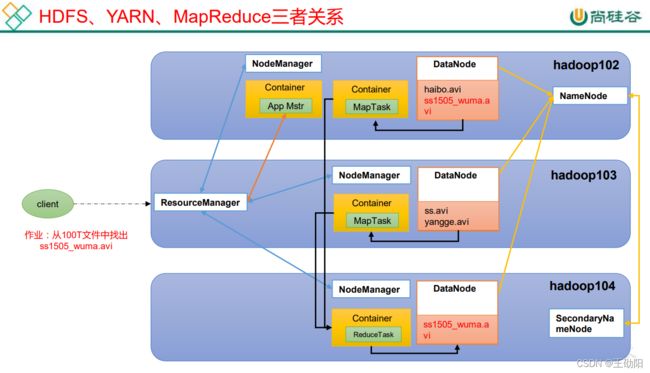

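From Yarn's point of view, the job submission process is the one shown in the diagram under How Yarn Works. From the point of view of HDFS and MapReduce, it resembles how MapReduce itself works, except that the input and output data live on HDFS.

The full job submission process:

- The Client calls job.waitForCompletion() to submit a MapReduce job to the cluster

- The Client asks the ResourceManager for a job id

- The ResourceManager returns the job resource submission path and the job id to the Client

- The Client uploads the jar, the split information, and the configuration files to the resource submission path

- After uploading the resources, the Client asks the ResourceManager to run MRAppMaster

- When the ResourceManager receives the Client's request, it adds the job to the Capacity Scheduler

- An idle NodeManager picks up the job

- That NodeManager creates a Container and launches MRAppMaster in it

- The Container downloads the resources submitted by the Client to the local node

- MRAppMaster asks the ResourceManager for resources to run multiple MapTasks

- The ResourceManager assigns the MapTasks to two other NodeManagers, each of which creates a container after picking up its task

- MRAppMaster sends the program startup script to the two NodeManagers that received tasks; each starts its MapTask, which partitions and sorts the data

- MRAppMaster waits for all MapTasks to finish, then asks the ResourceManager for containers to run the ReduceTasks

- The ReduceTasks pull the data of their partitions from the MapTasks

- When the program finishes, MRAppMaster asks the ResourceManager to unregister it

Tasks running in Yarn report their progress and status to the application master. The Client polls the application master for progress updates and displays them to the user, at an interval set by mapreduce.client.progressmonitor.pollinterval.

Besides requesting progress from the application master, waitForCompletion() on the Client checks every 5 seconds whether the job has finished; the interval is set by mapreduce.client.completion.pollinterval. After the job completes, the application master and the Containers clean up their working state, and the job history server stores the job's records so that the Client can inspect them later.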

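Yarn Schedulers and Scheduling Algorithms

Hadoop has three main job schedulers: FIFO, the Capacity Scheduler, and the Fair Scheduler. The default resource scheduler in Apache Hadoop 3.1.3 is the Capacity Scheduler.

The default scheduler in CDH is the Fair Scheduler.

FIFO Scheduler

The FIFO (First In, First Out) scheduler keeps a single queue and serves jobs in the order in which they were submitted.

Pros: simple and easy to understand

Cons: no support for multiple queues; rarely used in production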

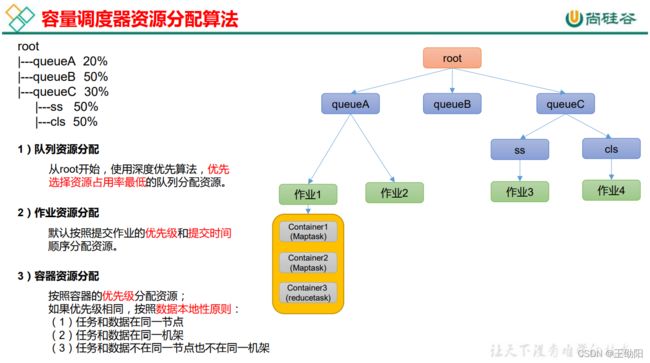

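Capacity Scheduler

The Capacity Scheduler is a multi-user scheduler developed by Yahoo.

Features:

- Multiple queues: each queue can be configured with a share of the resources, and each queue schedules with a FIFO policy

- Capacity guarantee: administrators can set a guaranteed minimum and a usage cap on the resources of each queue

- Flexibility: if a queue has spare resources, they can be lent temporarily to other queues that need them; as soon as a new application is submitted to the lending queue, the borrowed resources are returned to it

- Multi-tenancy: multiple users can share the cluster and multiple applications can run at the same time; to keep one user's jobs from monopolizing a queue, the scheduler limits the resources that jobs submitted by the same user may occupy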

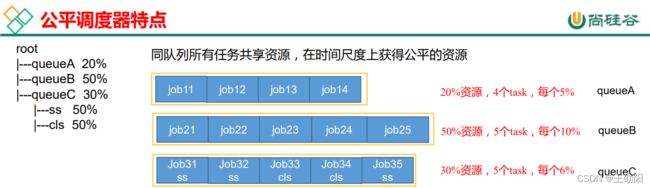

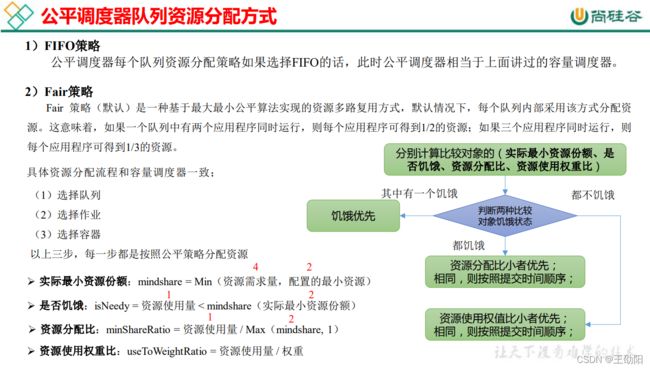

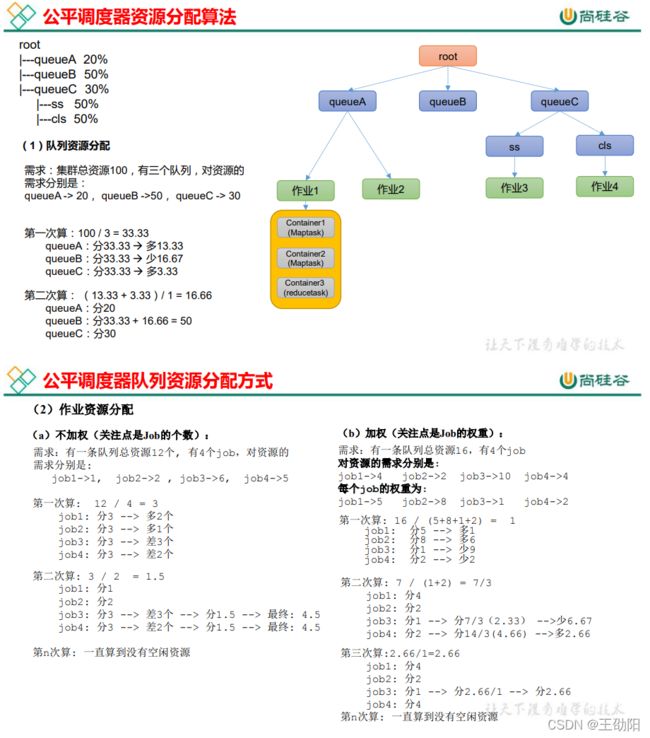

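Fair Scheduler

The Fair Scheduler is a multi-user scheduler developed by Facebook.

What it shares with the Capacity Scheduler:

- Multiple queues: supports multiple queues and multiple jobs

- Capacity guarantee: administrators can set a guaranteed minimum and a usage cap on the resources of each queue

- Flexibility: if a queue has spare resources, they can be lent temporarily to other queues that need them; as soon as a new application is submitted to the lending queue, the other queues return what they borrowed

- Multi-tenancy: multiple users can share the cluster and multiple applications can run at the same time; to keep one user from monopolizing resources, the scheduler limits the resources that jobs submitted by the same user may occupy

Where it differs from the Capacity Scheduler:

Core scheduling policy of the Capacity Scheduler: favor the queue with the lowest resource utilization

Core scheduling policy of the Fair Scheduler: favor the queue with the largest resource deficit ratio

Per-queue resource allocation policies in the Capacity Scheduler: FIFO, DRF

Per-queue resource allocation policies in the Fair Scheduler: FIFO, FAIR, DRF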

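The design goal of the Fair Scheduler is that, over time, all jobs receive a fair share of resources. At any given moment, the gap between the resources a job should receive and the resources it actually holds is called its deficit, and the scheduler gives priority to the jobs with the largest deficit.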
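The two queue-selection rules and the deficit described above can be illustrated with a small sketch. It is a simplified model, not the schedulers' actual code: the `Queue` class, its fields, and the sample numbers are all hypothetical, and a real scheduler weighs more factors (weights, minimum shares, several resource types).

```java
import java.util.Arrays;
import java.util.List;

final class QueuePick {
    /** Hypothetical queue snapshot; all amounts are in MB. */
    static final class Queue {
        final String name;
        final double usedMb;
        final double capacityMb;   // configured capacity (Capacity Scheduler view)
        final double fairShareMb;  // resources the queue should hold (Fair Scheduler view)

        Queue(String name, double usedMb, double capacityMb, double fairShareMb) {
            this.name = name;
            this.usedMb = usedMb;
            this.capacityMb = capacityMb;
            this.fairShareMb = fairShareMb;
        }

        /** Fraction of the configured capacity currently in use. */
        double utilization() {
            return usedMb / capacityMb;
        }

        /** Deficit as a fraction of the fair share; zero when the queue is at or above its share. */
        double deficitRatio() {
            return Math.max(0.0, fairShareMb - usedMb) / fairShareMb;
        }
    }

    /** Capacity-style rule: the queue with the lowest utilization goes first. */
    static Queue capacityPick(List<Queue> queues) {
        Queue best = queues.get(0);
        for (Queue q : queues) {
            if (q.utilization() < best.utilization()) {
                best = q;
            }
        }
        return best;
    }

    /** Fair-style rule: the queue with the largest deficit ratio goes first. */
    static Queue fairPick(List<Queue> queues) {
        Queue best = queues.get(0);
        for (Queue q : queues) {
            if (q.deficitRatio() > best.deficitRatio()) {
                best = q;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<Queue> queues = Arrays.asList(
                new Queue("a", 3000, 8000, 4000),   // utilization 0.375, deficit ratio 0.25
                new Queue("b", 1000, 2000, 4000));  // utilization 0.5,   deficit ratio 0.75
        System.out.println("capacity rule picks " + capacityPick(queues).name);
        System.out.println("fair rule picks " + fairPick(queues).name);
    }
}
```

With these sample numbers the two rules disagree: queue a has the lower utilization, while queue b is further below its fair share.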

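DRF Policy

DRF stands for Dominant Resource Fairness. The resources discussed so far were measured against a single criterion, for example memory only (which is also Yarn's default). In practice there are several kinds of resources, such as memory, CPU, and network bandwidth, which makes it hard to compare the share of resources that two applications should receive. DRF handles this by judging each application by its dominant resource, the one of which it uses the largest fraction of the cluster, so that different applications are limited by different resources.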
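As a rough illustration of the dominant-resource idea, the sketch below computes each application's dominant share over two resources. The cluster size (12288 MB, 12 vcores) and the two applications are made-up numbers, and the real DRF implementation in Yarn is more involved.

```java
final class Drf {
    /** Dominant share: the largest fraction of any single cluster resource that an application holds. */
    static double dominantShare(double memMb, double vcores, double clusterMemMb, double clusterVcores) {
        return Math.max(memMb / clusterMemMb, vcores / clusterVcores);
    }

    public static void main(String[] args) {
        double clusterMemMb = 12288;  // assumed cluster memory
        double clusterVcores = 12;    // assumed cluster vcores

        // Application A is memory-heavy, application B is CPU-heavy (hypothetical usage).
        double shareA = dominantShare(4096, 1, clusterMemMb, clusterVcores); // memory dominates: 1/3
        double shareB = dominantShare(1024, 6, clusterMemMb, clusterVcores); // CPU dominates: 1/2

        // Under DRF, the application with the smaller dominant share is served next.
        String next = shareA <= shareB ? "A" : "B";
        System.out.println("dominant shares: A=" + shareA + ", B=" + shareB + ", next=" + next);
    }
}
```

Comparing dominant shares puts a memory-heavy and a CPU-heavy application on the same scale, which a memory-only comparison cannot do.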

Common Yarn Commands

# List all Applications
yarn application -list

# Filter by Application state (valid states: ALL, NEW, NEW_SAVING, SUBMITTED, ACCEPTED, RUNNING, FINISHED, FAILED, KILLED)
yarn application -list -appStates FINISHED

# Kill an Application
yarn application -kill <ApplicationId>

# Show the logs of an Application
yarn logs -applicationId <ApplicationId>

# Show the logs of a Container
yarn logs -applicationId <ApplicationId> -containerId <ContainerId>

# List the attempts of an Application
yarn applicationattempt -list <ApplicationId>

# Show the status of an Application attempt
yarn applicationattempt -status <ApplicationAttemptId>

# List all Containers of an Application attempt
yarn container -list <ApplicationAttemptId>

# Show the status of a Container
yarn container -status <ContainerId>

# List all nodes
yarn node -list -all

# Reload the queue configuration
yarn rmadmin -refreshQueues

# Show the status of a queue
yarn queue -status <QueueName>

Core Yarn Parameters for Production

Yarn Hands-On Cases

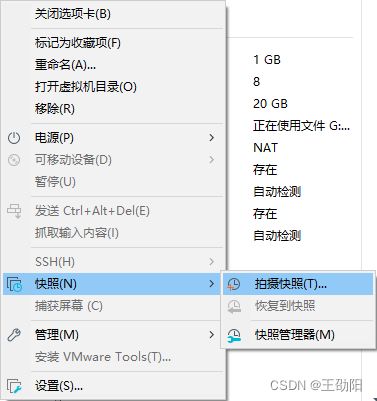

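Before starting, take a snapshot of each virtual machine in VMware. It serves as a backup that can be restored if a change breaks something.

Core Yarn Parameter Configuration Case for Production

The task is a wordcount over 1 GB of data on 3 servers, each with 4 GB of memory and a 4-core, 4-thread CPU.

With 128 MB blocks, each block maps to one MapTask, so the job produces 8 MapTasks, plus 1 ReduceTask by default and 1 MRAppMaster, 10 tasks in total. That averages 10 ÷ 3 ≈ 3 tasks per node, distributed as 4, 3, 3.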
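The arithmetic above can be checked with a short sketch. It assumes one MapTask per full or partial block and an even spread over the nodes; the real split calculation and the scheduler's placement follow more rules, so this only reproduces the estimate.

```java
import java.util.Arrays;

final class TaskPlan {
    /** Number of MapTasks for dataMb of input cut into blockMb-sized blocks (ceiling division). */
    static long mapTasks(long dataMb, long blockMb) {
        return (dataMb + blockMb - 1) / blockMb;
    }

    /** Spreads the given number of tasks over the nodes as evenly as possible. */
    static long[] spread(long tasks, int nodes) {
        long[] perNode = new long[nodes];
        for (int i = 0; i < nodes; i++) {
            // The first (tasks % nodes) nodes take one extra task.
            perNode[i] = tasks / nodes + (i < tasks % nodes ? 1 : 0);
        }
        return perNode;
    }

    public static void main(String[] args) {
        long maps = mapTasks(1024, 128);   // 1 GB of input, 128 MB blocks
        long total = maps + 1 + 1;         // plus one ReduceTask and one MRAppMaster
        // prints: 8 MapTasks, 10 tasks in total, per node [4, 3, 3]
        System.out.println(maps + " MapTasks, " + total + " tasks in total, per node "
                + Arrays.toString(spread(total, 3)));
    }
}
```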

Edit yarn-site.xml

<property>
  <description>The class to use as the resource scheduler.</description>
  <name>yarn.resourcemanager.scheduler.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>
</property>

<property>
  <description>Number of threads to handle scheduler interface.</description>
  <name>yarn.resourcemanager.scheduler.client.thread-count</name>
  <value>8</value>
</property>

<property>
  <description>Enable auto-detection of node capabilities such as memory and CPU.</description>
  <name>yarn.nodemanager.resource.detect-hardware-capabilities</name>
  <value>false</value>
</property>

<property>
  <description>Flag to determine if logical processors (such as hyperthreads) should be counted as cores. Only applicable on Linux when yarn.nodemanager.resource.cpu-vcores is set to -1 and yarn.nodemanager.resource.detect-hardware-capabilities is true.</description>
  <name>yarn.nodemanager.resource.count-logical-processors-as-cores</name>
  <value>false</value>
</property>

<property>
  <description>Multiplier to determine how to convert physical cores to vcores. This value is used if yarn.nodemanager.resource.cpu-vcores is set to -1 (which implies auto-calculate vcores) and yarn.nodemanager.resource.detect-hardware-capabilities is set to true. The number of vcores will be calculated as number of CPUs * multiplier.</description>
  <name>yarn.nodemanager.resource.pcores-vcores-multiplier</name>
  <value>1.0</value>
</property>

<property>
  <description>Amount of physical memory, in MB, that can be allocated for containers. If set to -1 and yarn.nodemanager.resource.detect-hardware-capabilities is true, it is automatically calculated (in case of Windows and Linux). In other cases, the default is 8192MB.</description>
  <name>yarn.nodemanager.resource.memory-mb</name>
  <value>4096</value>
</property>

<property>
  <description>Number of vcores that can be allocated for containers. This is used by the RM scheduler when allocating resources for containers. This is not used to limit the number of CPUs used by YARN containers. If it is set to -1 and yarn.nodemanager.resource.detect-hardware-capabilities is true, it is automatically determined from the hardware in case of Windows and Linux. In other cases, number of vcores is 8 by default.</description>
  <name>yarn.nodemanager.resource.cpu-vcores</name>
  <value>4</value>
</property>

<property>
  <description>The minimum allocation for every container request at the RM in MBs. Memory requests lower than this will be set to the value of this property. Additionally, a node manager that is configured to have less memory than this value will be shut down by the resource manager.</description>
  <name>yarn.scheduler.minimum-allocation-mb</name>
  <value>1024</value>
</property>

<property>
  <description>The maximum allocation for every container request at the RM in MBs. Memory requests higher than this will throw an InvalidResourceRequestException.</description>
  <name>yarn.scheduler.maximum-allocation-mb</name>
  <value>2048</value>
</property>

<property>
  <description>The minimum allocation for every container request at the RM in terms of virtual CPU cores. Requests lower than this will be set to the value of this property. Additionally, a node manager that is configured to have fewer virtual cores than this value will be shut down by the resource manager.</description>
  <name>yarn.scheduler.minimum-allocation-vcores</name>
  <value>1</value>
</property>

<property>
  <description>The maximum allocation for every container request at the RM in terms of virtual CPU cores. Requests higher than this will throw an InvalidResourceRequestException.</description>
  <name>yarn.scheduler.maximum-allocation-vcores</name>
  <value>2</value>
</property>

<property>
  <description>Whether virtual memory limits will be enforced for containers.</description>
  <name>yarn.nodemanager.vmem-check-enabled</name>
  <value>false</value>
</property>

<property>
  <description>Ratio between virtual memory to physical memory when setting memory limits for containers. Container allocations are expressed in terms of physical memory, and virtual memory usage is allowed to exceed this allocation by this ratio.</description>
  <name>yarn.nodemanager.vmem-pmem-ratio</name>
  <value>2.1</value>
</property>

If the NodeManagers in the cluster have different hardware, yarn-site.xml has to be configured separately on each of them.
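To make the effect of the memory limits above concrete, here is a minimal sketch of the behaviour the property descriptions state: requests below the minimum are raised to it, and requests above the maximum are rejected. It is an illustration only. `IllegalArgumentException` stands in for Yarn's InvalidResourceRequestException, the class and method names are hypothetical, and any further rounding a real scheduler applies is left out.

```java
final class ContainerLimits {
    static final int MIN_MB = 1024;        // yarn.scheduler.minimum-allocation-mb
    static final int MAX_MB = 2048;        // yarn.scheduler.maximum-allocation-mb
    static final double VMEM_RATIO = 2.1;  // yarn.nodemanager.vmem-pmem-ratio

    /** Raises small requests to the minimum and rejects requests above the maximum. */
    static int normalize(int requestedMb) {
        if (requestedMb > MAX_MB) {
            // Stand-in for the InvalidResourceRequestException named in the description.
            throw new IllegalArgumentException(
                    "request of " + requestedMb + " MB exceeds the maximum of " + MAX_MB + " MB");
        }
        return Math.max(requestedMb, MIN_MB);
    }

    /** Virtual memory ceiling for a container; only relevant when the vmem check is enabled. */
    static double vmemLimitMb(int physicalMb) {
        return physicalMb * VMEM_RATIO;
    }

    public static void main(String[] args) {
        System.out.println("512 MB request becomes " + normalize(512) + " MB");
        System.out.println("1536 MB request becomes " + normalize(1536) + " MB");
        System.out.println("vmem ceiling for 1024 MB is about " + vmemLimitMb(1024) + " MB");
    }
}
```

Because yarn.nodemanager.vmem-check-enabled is set to false in this case, the virtual memory ceiling is computed here only to show what the ratio means.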

# Restart Yarn
[root@hadoop102 hadoop-3.1.3]# sbin/stop-yarn.sh
[root@hadoop102 hadoop-3.1.3]# sbin/start-yarn.sh

# Run the wordcount program
[root@hadoop102 hadoop-3.1.3]# hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output

# Watch the job at http://hadoop103:8088/cluster/apps

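Capacity Scheduler Multi-Queue Submission Case

By default the scheduler has a single queue, which does not meet production requirements. A small company can split queues by framework, putting hive, spark, and flink jobs into their own queues; a large company can split by business line, with a dedicated queue each for login, ordering, registration, and logistics.

Multiple queues improve fault tolerance by keeping one stuck job from dragging down the whole cluster, and they make it possible to degrade less important jobs when resources are tight.

Requirement 1: the default queue gets 40% of total memory with a maximum capacity of 60% of total resources; the hive queue gets 60% of total memory with a maximum capacity of 80% of total resources.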
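To see what these percentages mean in absolute terms, the sketch below converts them to megabytes. It assumes the 3-node cluster from the previous case (3 × 4096 MB = 12288 MB); the class and method names are made up for the illustration.

```java
final class QueueCapacity {
    /** Converts a percentage of the cluster into megabytes. */
    static double shareMb(double clusterMb, double percent) {
        return clusterMb * percent / 100.0;
    }

    /** Sibling queue capacities are expected to add up to 100 percent. */
    static boolean sumsTo100(double... capacities) {
        double sum = 0;
        for (double c : capacities) {
            sum += c;
        }
        return Math.abs(sum - 100.0) < 1e-9;
    }

    public static void main(String[] args) {
        double clusterMb = 3 * 4096;  // assumed: three NodeManagers with 4096 MB each

        System.out.println("default: guaranteed " + shareMb(clusterMb, 40)
                + " MB, up to " + shareMb(clusterMb, 60) + " MB");
        System.out.println("hive:    guaranteed " + shareMb(clusterMb, 60)
                + " MB, up to " + shareMb(clusterMb, 80) + " MB");
        System.out.println("capacities sum to 100: " + sumsTo100(40, 60));
    }
}
```

The guaranteed shares add up to the whole cluster, while the maximum capacities overlap; that overlap is what lets one queue borrow idle resources from the other.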

Requirement 2: configure queue priorities.

Edit capacity-scheduler.xml

Change the following properties

<property>
  <name>yarn.scheduler.capacity.root.queues</name>
  <value>default,hive</value>
  <description>The queues at this level (root is the root queue).</description>
</property>

<property>
  <name>yarn.scheduler.capacity.root.default.capacity</name>
  <value>40</value>
</property>

<property>
  <name>yarn.scheduler.capacity.root.default.maximum-capacity</name>
  <value>60</value>
</property>

Add the required properties for the hive queue

<property>
  <name>yarn.scheduler.capacity.root.hive.capacity</name>
  <value>60</value>
</property>

<property>
  <name>yarn.scheduler.capacity.root.hive.user-limit-factor</name>
  <value>1</value>
</property>

<property>
  <name>yarn.scheduler.capacity.root.hive.maximum-capacity</name>
  <value>80</value>
</property>

<property>
  <name>yarn.scheduler.capacity.root.hive.state</name>
  <value>RUNNING</value>
</property>

<property>
  <name>yarn.scheduler.capacity.root.hive.acl_submit_applications</name>
  <value>*</value>
</property>

<property>
  <name>yarn.scheduler.capacity.root.hive.acl_administer_queue</name>
  <value>*</value>
</property>

<property>
  <name>yarn.scheduler.capacity.root.hive.acl_application_max_priority</name>
  <value>*</value>
</property>

<property>
  <name>yarn.scheduler.capacity.root.hive.maximum-application-lifetime</name>
  <value>-1</value>
</property>

<property>
  <name>yarn.scheduler.capacity.root.hive.default-application-lifetime</name>
  <value>-1</value>
</property>

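Distribute the configuration file, then either restart Yarn or run yarn rmadmin -refreshQueues to reload the queues. The default and hive queues now appear under Scheduler at http://hadoop103:8088/cluster.

With the hive queue in place, submit a job to it and watch how it runs. The -D mapreduce.job.queuename=hive option selects the queue.

[root@hadoop102 hadoop-3.1.3]# hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount -D mapreduce.job.queuename=hive /input /output

By default every job goes to the default queue. To target a specific queue from code, call configuration.set("mapreduce.job.queuename","hive");.

By default every job has priority 0. A job can be given a priority so that higher-priority jobs obtain resources first, but the feature has to be enabled before it can be used.

Edit yarn-site.xml, add the following property, distribute the configuration, and restart Yarn.

<property>
  <name>yarn.cluster.max-application-priority</name>
  <value>5</value>
</property>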
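The effect of job priority can be pictured with a toy model. The sketch below assumes that a requested priority above the cluster maximum is capped at that maximum and that waiting applications are served by priority first and submission order second. The `App` class and the sample jobs are hypothetical, and the real scheduler takes more into account.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class PriorityDemo {
    static final int CLUSTER_MAX_PRIORITY = 5;  // yarn.cluster.max-application-priority

    /** Hypothetical waiting application. */
    static final class App {
        final String name;
        final int priority;      // requested priority, 0 by default
        final long submitOrder;  // smaller means submitted earlier

        App(String name, int priority, long submitOrder) {
            this.name = name;
            this.priority = priority;
            this.submitOrder = submitOrder;
        }
    }

    /** Assumption: priorities above the cluster maximum are capped at the maximum. */
    static int effectivePriority(int requested) {
        return Math.min(requested, CLUSTER_MAX_PRIORITY);
    }

    /** Orders waiting applications: higher priority first, then earlier submission first. */
    static List<String> schedulingOrder(List<App> pending) {
        List<App> sorted = new ArrayList<>(pending);
        sorted.sort(Comparator
                .comparingInt((App a) -> -effectivePriority(a.priority))
                .thenComparingLong(a -> a.submitOrder));
        List<String> names = new ArrayList<>();
        for (App a : sorted) {
            names.add(a.name);
        }
        return names;
    }

    public static void main(String[] args) {
        List<App> pending = new ArrayList<>();
        pending.add(new App("pi-1", 0, 1));
        pending.add(new App("pi-2", 0, 2));
        pending.add(new App("pi-high", 5, 3));  // submitted last, with priority 5
        System.out.println(schedulingOrder(pending));
    }
}
```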

# Submit the following job repeatedly until a newly submitted job can no longer get resources
[root@hadoop102 hadoop-3.1.3]# hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar pi 5 2000000

# Then submit a high-priority job; it obtains resources ahead of the waiting jobs
[root@hadoop102 hadoop-3.1.3]# hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar pi -D mapreduce.job.priority=5 5 2000000

# The priority of a running application can be changed with the following command
[root@hadoop102 hadoop-3.1.3]# yarn application -appID <ApplicationId> -updatePriority 5

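Fair Scheduler Case

Requirement: create two queues, test and atguigu. If the user names a queue when submitting a job, the job goes to that queue. If no queue is named, jobs submitted by user test go to the root.group.test queue and jobs submitted by user atguigu go to the root.group.atguigu queue.

Two files need to be changed: yarn-site.xml and fair-scheduler.xml (the Fair Scheduler's queue allocation file; its name is configurable).

Configuration reference: https://hadoop.apache.org/docs/r3.1.3/hadoop-yarn/hadoop-yarn-site/FairScheduler.html

Queue placement rule reference: https://blog.cloudera.com/untangling-apache-hadoop-yarn-part-4-fair-scheduler-queuebasics/

Edit yarn-site.xml and add the following.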

<property>
  <name>yarn.resourcemanager.scheduler.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>
  <description>Use the Fair Scheduler</description>
</property>

<property>
  <name>yarn.scheduler.fair.allocation.file</name>
  <value>/opt/module/hadoop-3.1.3/etc/hadoop/fair-scheduler.xml</value>
  <description>Path of the Fair Scheduler queue allocation file</description>
</property>

<property>
  <name>yarn.scheduler.fair.preemption</name>
  <value>false</value>
  <description>Disable resource preemption between queues</description>
</property>

Configure fair-scheduler.xml.

<allocations>
  <queueMaxAMShareDefault>0.5</queueMaxAMShareDefault>
  <queueMaxResourcesDefault>4096mb,4vcores</queueMaxResourcesDefault>
  <queue name="test">
    <minResources>2048mb,2vcores</minResources>
    <maxResources>4096mb,4vcores</maxResources>
    <maxRunningApps>4</maxRunningApps>
    <maxAMShare>0.5</maxAMShare>
    <weight>1.0</weight>
    <schedulingPolicy>fair</schedulingPolicy>
  </queue>
  <queue name="atguigu" type="parent">
    <minResources>2048mb,2vcores</minResources>
    <maxResources>4096mb,4vcores</maxResources>
    <maxRunningApps>4</maxRunningApps>
    <maxAMShare>0.5</maxAMShare>
    <weight>1.0</weight>
    <schedulingPolicy>fair</schedulingPolicy>
  </queue>
  <queuePlacementPolicy>
    <rule name="specified" create="false"/>
    <rule name="nestedUserQueue" create="true">
      <rule name="primaryGroup" create="false"/>
    </rule>
    <rule name="reject" />
  </queuePlacementPolicy>
</allocations>

Distribute the files, restart Yarn, and test.

# Submit a job to the test queue explicitly
[root@hadoop102 hadoop-3.1.3]# hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar pi -Dmapreduce.job.queuename=root.test 1 1

# Without a queue name, the job goes to the current user's queue
[root@hadoop102 hadoop-3.1.3]# hadoop jar /opt/module/hadoop-3.1.3/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar pi 1 1

Yarn Tool Interface Case

Running wordcount from our own jar fails when extra options are passed.

# Without extra options the job runs normally
[root@hadoop102 hadoop-3.1.3]# yarn jar demo-1.0-SNAPSHOT-jar-with-dependencies.jar com.demo.mapreduce.wordcount.WordCountDriver /wcinput /wcoutput

# With an extra option the job fails, reporting that /wcinput already exists: the program treats -Dmapreduce.job.queuename=root.test as the input path and /wcinput as the output path, and ignores /wcoutput
[root@hadoop102 hadoop-3.1.3]# yarn jar demo-1.0-SNAPSHOT-jar-with-dependencies.jar com.demo.mapreduce.wordcount.WordCountDriver -Dmapreduce.job.queuename=root.test /wcinput /wcoutput

2022-01-26 22:43:28,885 INFO client.RMProxy: Connecting to ResourceManager at hadoop103/192.168.216.103:8032

Exception in thread "main" org.apache.hadoop.mapred.FileAlreadyExistsException: Output directory hdfs://hadoop102:8020/wcinput already exists

at org.apache.hadoop.mapreduce.lib.output.FileOutputFormat.checkOutputSpecs(FileOutputFormat.java:164)

at org.apache.hadoop.mapreduce.JobSubmitter.checkSpecs(JobSubmitter.java:277)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:143)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1570)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1567)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1729)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1567)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1588)

at com.demo.mapreduce.wordcount.WordCountDriver.main(WordCountDriver.java:24)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:318)

at org.apache.hadoop.util.RunJar.main(RunJar.java:232)

To solve this problem, we use the Tool interface.

WordCount.java

package com.demo.yarn;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.Tool;

import java.io.IOException;

public class WordCount implements Tool {

    private Configuration configuration;

    @Override
    public int run(String[] strings) throws Exception {
        // Build the job from the configuration handed over through setConf
        Job job = Job.getInstance(configuration);
        job.setJarByClass(WordCountDriver.class);
        job.setMapperClass(WordCountMapper.class);
        job.setReducerClass(WordCountReducer.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        // strings holds the arguments passed on by the driver: input path, then output path
        FileInputFormat.setInputPaths(job, new Path(strings[0]));
        FileOutputFormat.setOutputPath(job, new Path(strings[1]));
        return job.waitForCompletion(true) ? 0 : 1;
    }

    @Override
    public void setConf(Configuration configuration) {
        this.configuration = configuration;
    }

    @Override
    public Configuration getConf() {
        return configuration;
    }

    public static class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        private Text text = new Text();
        private IntWritable intWritable = new IntWritable(1);

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Emit (word, 1) for every space-separated word in the line
            String line = value.toString();
            String[] words = line.split(" ");
            for (String word : words) {
                text.set(word);
                context.write(text, intWritable);
            }
        }
    }

    public static class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        private IntWritable intWritable = new IntWritable();

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
            // Sum the counts emitted for this word
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get();
            }
            intWritable.set(sum);
            context.write(key, intWritable);
        }
    }
}

WordCountDriver.java

package com.demo.yarn;

import org.apache.hadoop.mapred.JobConf;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

import java.util.Arrays;

public class WordCountDriver {

    private static Tool tool;

    public static void main(String[] args) throws Exception {
        System.out.println(Arrays.toString(args)); // print all arguments
        int length = args.length;
        // 1. Create the configuration
        JobConf jobConf = new JobConf();
        // 2. Check whether extra options were passed
        if (args[0].startsWith("-D")) { // extra option present
            tool = new WordCount();
            // -Dmapreduce.job.queuename=root.test should be parsed here and queueName=root.test applied.
            // The parsing is skipped and the queue is hard-coded to "test". This still fails at run time
            // with the error below; a test queue apparently has to be created first, which is not solved yet.
            // Application application_1643207666393_0004 submitted by user root to unknown queue: root.test
            jobConf.setQueueName("test");
        } else {
            throw new RuntimeException(" No such tool: " + args[0]);
        }
        // 3. Run the program through Tool
        // Arrays.copyOfRange copies part of the old array into a new one; here only /wcinput and /wcoutput
        // are passed on as arguments, and every other setting goes through jobConf
        int run = ToolRunner.run(jobConf, tool, Arrays.copyOfRange(args, length - 2, length));
        System.exit(run);
    }
}

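Run maven install, copy the jar to the server, and test it.

One problem remains: after jobConf.setQueueName("test"); in the code, the job is rejected because the queue root.test is unknown. The error suggests that the active scheduler has no queue of that name, so the test queue probably has to be configured first; I have not worked out the fix yet. Note also that ToolRunner.run already parses generic options such as -D through GenericOptionsParser, so passing the full args array to it should apply the queue name without hard-coding it.

The point of Tool is to separate the options from the arguments: the input and output paths stay as positional arguments, and everything else is set through conf.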
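The separation described above can be sketched in plain Java. This is a simplified stand-in written for illustration, not Hadoop's GenericOptionsParser: the `GenericArgs` class is hypothetical and only understands -D options.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class GenericArgs {
    /** Settings collected from -D options, in the order given. */
    final Map<String, String> properties = new LinkedHashMap<>();
    /** Everything else, for example the input and output paths. */
    final List<String> remaining = new ArrayList<>();

    static GenericArgs parse(String[] args) {
        GenericArgs parsed = new GenericArgs();
        for (int i = 0; i < args.length; i++) {
            String arg = args[i];
            if (arg.startsWith("-D")) {
                // Accept both "-Dkey=value" and "-D key=value".
                String pair = arg.length() > 2
                        ? arg.substring(2)
                        : (i + 1 < args.length ? args[++i] : "");
                int eq = pair.indexOf('=');
                if (eq > 0) {
                    parsed.properties.put(pair.substring(0, eq), pair.substring(eq + 1));
                }
            } else {
                parsed.remaining.add(arg);
            }
        }
        return parsed;
    }

    public static void main(String[] args) {
        GenericArgs parsed = parse(new String[] {
                "-Dmapreduce.job.queuename=root.test", "/wcinput", "/wcoutput"});
        System.out.println("conf settings: " + parsed.properties);
        System.out.println("paths: " + parsed.remaining);
    }
}
```

With the options split off this way, the first remaining argument is the input path and the second is the output path again, which is what the run method of a Tool expects.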

[root@hadoop102 hadoop-3.1.3]# yarn jar demo-1.0-SNAPSHOT-jar-with-dependencies.jar com.demo.yarn.WordCountDriver -Dmapreduce.job.queuename=root.test /wcinput /wcoutput