Mahout:2->PFPGrowth | Distributed Frequent Pattern Mining

Based on the official wiki, with an analysis of the source code

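1. The wiki page https://cwiki.apache.org/confluence/display/MAHOUT/Parallel+Frequent+Pattern+Mining describes how to build an application with PFP-Growth. However, its code does not run if you simply copy it over, for the following reasons: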

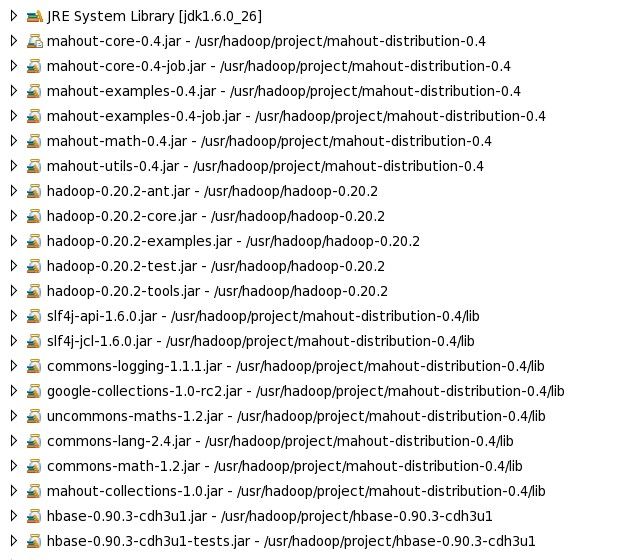

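1.1 The required JARs are not on the classpath. The essential ones come from Mahout, Hadoop and HBase. Whenever a class turns out to be missing at run time, find the JAR that contains it and add that JAR. This is tedious because you do not know in advance which JAR holds the class you need. A heuristic usually works, though. First decide whether to look under Mahout or under Hadoop, then open the candidate JARs and check whether they contain the class. In this project, for example, it took a long time to figure out that mahout-collections-*.jar was needed, and the HBase JARs as well.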
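The "open the candidate JARs and look" step can be automated. The following is a minimal sketch that assumes all candidate JARs sit somewhere under one local directory, for example a Mahout or Hadoop lib folder. It walks that directory and reports every JAR containing a given fully qualified class name. The class name FindClassInJars is hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.JarFile;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class FindClassInJars {

    // "org.apache.mahout.math.map.OpenLongObjectHashMap"
    //   -> "org/apache/mahout/math/map/OpenLongObjectHashMap.class"
    static String toEntryName(String className) {
        return className.replace('.', '/') + ".class";
    }

    // Returns every *.jar under dir (searched recursively) that contains className.
    static List<Path> jarsContaining(Path dir, String className) throws IOException {
        List<Path> jars;
        try (Stream<Path> paths = Files.walk(dir)) {
            jars = paths.filter(Files::isRegularFile)
                        .filter(p -> p.toString().endsWith(".jar"))
                        .collect(Collectors.toList());
        }
        String entry = toEntryName(className);
        List<Path> hits = new ArrayList<>();
        for (Path jar : jars) {
            try (JarFile jf = new JarFile(jar.toFile())) {
                if (jf.getJarEntry(entry) != null) {
                    hits.add(jar);
                }
            } catch (IOException e) {
                // Skip archives that cannot be opened as JARs.
            }
        }
        return hits;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java FindClassInJars <lib-dir> <fully.qualified.ClassName>
        for (Path jar : jarsContaining(Paths.get(args[0]), args[1])) {
            System.out.println(jar);
        }
    }
}
```

For example, running it with a Mahout lib directory and org.apache.mahout.math.map.OpenLongObjectHashMap should print the mahout-collections JAR, if that JAR is present there.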

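1.2 The copied code may have been written for a different Mahout version than the one you are using. In that case, look in the source code of your Mahout version to see how the methods are declared and how the bundled examples call the classes (methods) in the package.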
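You can also check how a method is declared in the Mahout version that is actually on your classpath by printing its signatures with reflection. The JDK's javap tool gives similar information from the command line. The sketch below is minimal, and the class name ListSignatures is hypothetical. The FPGrowth class and method named in the usage comment are the ones called in the example program later in this post.

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class ListSignatures {

    // Returns the generic signatures of all public methods (including inherited ones)
    // of cls that are named methodName, sorted for stable output.
    static List<String> signatures(Class<?> cls, String methodName) {
        List<String> out = new ArrayList<>();
        for (Method m : cls.getMethods()) {
            if (m.getName().equals(methodName)) {
                out.add(m.toGenericString());
            }
        }
        Collections.sort(out);
        return out;
    }

    public static void main(String[] args) throws ClassNotFoundException {
        // e.g. java -cp <mahout jars>:. ListSignatures \
        //   org.apache.mahout.fpm.pfpgrowth.fpgrowth.FPGrowth generateTopKFrequentPatterns
        Class<?> cls = Class.forName(args[0]);
        for (String s : signatures(cls, args[1])) {
            System.out.println(s);
        }
    }
}
```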

2. Read the source code carefully, especially the PFP-Growth example in org.apache.mahout.fpm.pfpgrowth.FPGrowthDriver.java, which shows how PFP-Growth is run.

PFPGrowth example implementation

JARs used:

Main code:

```java
package com.fora;

import java.io.File;
import java.io.IOException;
import java.nio.charset.Charset;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.SequenceFile;
import org.apache.hadoop.io.Text;
import org.apache.mahout.common.FileLineIterable;
import org.apache.mahout.common.Pair;
import org.apache.mahout.common.StringRecordIterator;
import org.apache.mahout.fpm.pfpgrowth.convertors.ContextStatusUpdater;
import org.apache.mahout.fpm.pfpgrowth.convertors.SequenceFileOutputCollector;
import org.apache.mahout.fpm.pfpgrowth.convertors.string.StringOutputConverter;
import org.apache.mahout.fpm.pfpgrowth.convertors.string.TopKStringPatterns;
import org.apache.mahout.fpm.pfpgrowth.fpgrowth.FPGrowth;

public class PFPGrowth {

    public static void main(String[] args) throws IOException {
        // Empty set: return the top-k patterns for every frequent item
        Set<String> features = new HashSet<String>();
        String input = "/usr/hadoop/testdata/pfp.txt"; // local file, one transaction per line
        int minSupport = 3;
        int maxHeapSize = 50; // top-k: number of patterns kept per item
        // FPGrowthDriver's default item separator (without the surrounding quotes
        // shown in its help text). It splits on ',', '|' or tab only, not on plain
        // spaces, so for space-separated data such as T10I4D100K each whole line
        // becomes one item; use a pattern such as "[ ]+" for that data.
        String pattern = "[ ,\\t]*[,|\\t][ ,\\t]*";
        Charset encoding = Charset.forName("UTF-8");

        FPGrowth<String> fp = new FPGrowth<String>();

        // Relative path, resolved against the default FileSystem's working
        // directory (/user/root on HDFS when run on Hadoop as root)
        String output = "output.txt";
        Path path = new Path(output);
        Configuration conf = new Configuration();
        FileSystem fs = FileSystem.get(conf);
        SequenceFile.Writer writer =
            new SequenceFile.Writer(fs, conf, path, Text.class, TopKStringPatterns.class);

        try {
            // The input is read twice; "false" means: do not skip the first line.
            fp.generateTopKFrequentPatterns(
                // consumed second: build the FP-tree and mine it
                new StringRecordIterator(new FileLineIterable(new File(input), encoding, false), pattern),
                // consumed first: count item supports (the f-list)
                fp.generateFList(
                    new StringRecordIterator(new FileLineIterable(new File(input), encoding, false), pattern),
                    minSupport),
                minSupport,
                maxHeapSize,
                features,
                new StringOutputConverter(new SequenceFileOutputCollector<Text, TopKStringPatterns>(writer)),
                new ContextStatusUpdater(null)); // no MapReduce context when run standalone
        } finally {
            writer.close();
        }

        List<Pair<String, TopKStringPatterns>> frequentPatterns = FPGrowth.readFrequentPattern(fs, conf, path);
        for (Pair<String, TopKStringPatterns> entry : frequentPatterns) {
            //System.out.print(entry.getFirst() + "-"); // the item these patterns were mined for
            System.out.println(entry.getSecond()); // its top-k patterns, each printed as (pattern,support)
        }
        System.out.print("\nthe end! ");
    }
}
```
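The program reads the input file twice. generateFList makes a first pass to count item supports, and generateTopKFrequentPatterns then builds and mines the FP-tree. The sketch below illustrates only the first pass, in plain Java: it computes an f-list, meaning the items whose support is at least minSupport, sorted by descending support. It is not Mahout's implementation. The class name FListSketch and the tie-breaking by item name are assumptions made for this sketch.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;

public class FListSketch {

    // Counts in how many transactions each item occurs, keeps items with
    // support >= minSupport, and sorts them by descending support
    // (ties broken by item name so the order is deterministic).
    static List<Map.Entry<String, Long>> fList(List<List<String>> transactions, long minSupport) {
        Map<String, Long> counts = new HashMap<>();
        for (List<String> t : transactions) {
            // An item is counted at most once per transaction.
            for (String item : new HashSet<>(t)) {
                counts.merge(item, 1L, Long::sum);
            }
        }
        List<Map.Entry<String, Long>> result = new ArrayList<>(counts.entrySet());
        result.removeIf(e -> e.getValue() < minSupport);
        result.sort((a, b) -> {
            int c = Long.compare(b.getValue(), a.getValue());
            return c != 0 ? c : a.getKey().compareTo(b.getKey());
        });
        return result;
    }

    public static void main(String[] args) {
        List<List<String>> transactions = List.of(
                List.of("a", "b", "c"),
                List.of("a", "b"),
                List.of("a", "d"));
        System.out.println(fList(transactions, 2)); // prints [a=3, b=2]
    }
}
```

Infrequent items (c and d here) are dropped before any tree is built. This is what keeps the FP-tree small.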

Input data

Download the T10I4D100K (.gz) dataset from http://fimi.ua.ac.be/data/.
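The FIMI datasets, including T10I4D100K, store one transaction per line as space-separated integer item IDs. The splitter pattern in the example program is based on FPGrowthDriver's default [ ,\t]*[,|\t][ ,\t]*, which only breaks on commas, pipes or tabs. The sketch below uses java.util.regex to show how each pattern splits such a line. The class name SplitCheck, the sample line, and the alternative pattern "[ ]+" are assumptions chosen for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

public class SplitCheck {

    // FPGrowthDriver's default item separator: commas, pipes or tabs.
    static final Pattern DEFAULT_SPLITTER = Pattern.compile("[ ,\\t]*[,|\\t][ ,\\t]*");
    // Hypothetical alternative for space-separated files.
    static final Pattern SPACE_SPLITTER = Pattern.compile("[ ]+");

    static List<String> items(Pattern splitter, String line) {
        // Pattern.split drops trailing empty strings, so a trailing space is harmless.
        return Arrays.asList(splitter.split(line));
    }

    public static void main(String[] args) {
        String line = "25 52 164 240 274 "; // space-separated item IDs
        System.out.println(items(DEFAULT_SPLITTER, line)); // prints [25 52 164 240 274 ]
        System.out.println(items(SPACE_SPLITTER, line));   // prints [25, 52, 164, 240, 274]
    }
}
```

With the default pattern the whole line comes back as a single item. This matches the shape of the patterns printed in the results below, for example ([97 707 755 918 938 ],3).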

Run results (run on Hadoop):

```
2011-8-6 9:39:22 org.slf4j.impl.JCLLoggerAdapter info
INFO: Mining FTree Tree for all patterns with 364
2011-8-6 9:39:22 org.slf4j.impl.JCLLoggerAdapter info
INFO: Found 1 Patterns with Least Support 8
2011-8-6 9:39:22 org.slf4j.impl.JCLLoggerAdapter info
INFO: Mining FTree Tree for all patterns with 363
2011-8-6 9:39:22 org.slf4j.impl.JCLLoggerAdapter info
INFO: Found 1 Patterns with
...
...
INFO: Found 1 Patterns with Least Support 59
2011-8-6 9:39:29 org.slf4j.impl.JCLLoggerAdapter info
INFO: Mining FTree Tree for all patterns with 0
2011-8-6 9:39:29 org.slf4j.impl.JCLLoggerAdapter info
INFO: Found 1 Patterns with Least Support 59
2011-8-6 9:39:29 org.slf4j.impl.JCLLoggerAdapter info
INFO: Mining FTree Tree for all patterns with 0
2011-8-6 9:39:29 org.slf4j.impl.JCLLoggerAdapter info
INFO: Found 1 Patterns with Least Support 65
2011-8-6 9:39:29 org.slf4j.impl.JCLLoggerAdapter info
INFO: Tree Cache: First Level: Cache hits=3962 Cache Misses=489559
([97 707 755 918 938 ],3)
([95 181 295 758 ],3)
([95 145 266 401 797 833 ],3)
([94 217 272 620 ],3)
([93 517 789 825 ],3)
...
([28 145 157 274 346 735 742 809 ],59)
([1 66 314 470 523 823 874 884 980 ],65)

the end!
```

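Exporting the results: the output file output.txt is stored in HDFS at /user/root/output.txt as a SequenceFile, i.e. in serialized binary form. Opening it directly therefore does not show readable content. You can dump it to the local file system with the following command:

Command: ./mahout seqdumper -s output.txt -o /usr/hadoop/output/pfp.txt

Note that the local file /usr/hadoop/output/pfp.txt must be created first.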

To do:

Analyze how PFP-Growth is implemented in the source code.