[Cloud Native | Kubernetes Series] Prometheus Service Discovery

1. Prometheus service discovery mechanism

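By default Prometheus uses a pull model: it scrapes each target at a fixed interval, and every target exposes an HTTP endpoint for that purpose. Prometheus therefore has to know which addresses to scrape, and targets are defined by the jobs under scrape_configs in prometheus.yml. With statically defined targets, Prometheus cannot notice targets that are added or changed later.

In a Kubernetes environment, a DaemonSet installs node-exporter and cAdvisor automatically whenever a new node joins the cluster.

With the traditional static approach, the new address has to be added by hand and Prometheus restarted.

Dynamic service discovery finds new endpoints in the cluster automatically and adds them to the set of targets. Through service discovery, Prometheus obtains the list of targets to monitor and then polls each target for its metrics.

There are many ways to add targets; the most common are static configuration and service discovery.

Prometheus supports many service discovery mechanisms. Common ones are: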

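- kubernetes_sd_configs: Kubernetes service discovery; lets Prometheus dynamically discover targets inside Kubernetes
- static_configs: static configuration; targets are listed directly in the Prometheus configuration file
- dns_sd_configs: discovers targets through DNS
- consul_sd_configs: Consul service discovery; targets are discovered dynamically from services registered in Consul
- file_sd_configs: file-based service discovery; Prometheus re-reads the given files periodically and loads the new targets when they change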

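2. Prometheus relabeling

Prometheus relabeling can dynamically modify, add, or overwrite a target's metadata labels before the target is scraped.

Once Prometheus has loaded a target, the target instance carries a set of metadata labels. The default labels are: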

| Label | Meaning |
|---|---|
| `__address__` | Address of the target, in `<host>:<port>` form |
| `__scheme__` | Scheme used to reach the target, HTTP or HTTPS |
| `__metrics_path__` | Path on the target from which metrics are scraped |

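When labels are rewritten:

relabel_configs: applied before the scrape. Use relabel_configs to add labels, to scrape only specific targets, or to filter targets out.

metric_relabel_configs: applied after the scrape. Use metric_relabel_configs for a final round of relabeling and filtering on the scraped samples.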

    - job_name: 'kubernetes-apiserver'  # job name
      kubernetes_sd_configs:            # discover targets through kubernetes_sd_configs
      - role: endpoints                 # discover endpoints
      scheme: https                     # protocol used to scrape the targets of this job
      tls_config:                       # certificate settings
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt  # CA certificate path inside the container
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token  # token path inside the container
      relabel_configs:                  # relabeling applied before the scrape
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]  # source labels
        action: keep                    # the relabel action to perform; several actions are supported
        regex: default;kubernetes;https # regular expression the source labels must match
      # Keep only targets in the default namespace whose service name is kubernetes
      # and whose port name is https; in practice this is the api-server.
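The `keep` rule above can be read as: join the values of the source labels with `;` (the default separator), then keep the target only if the regex matches the whole joined string. The following is a minimal Python sketch of that reading, not Prometheus code. The function name `keep_target` and the two sample label sets are made up for illustration, and Prometheus itself evaluates the regex with Go's RE2 engine rather than Python's `re`.

```python
import re

def keep_target(labels, source_labels, regex, separator=";"):
    """Return True if a `keep` rule would retain this target.

    Missing labels count as empty strings, and the regex has to match the
    whole joined value because relabel regexes are anchored at both ends.
    """
    joined = separator.join(labels.get(name, "") for name in source_labels)
    return re.fullmatch(regex, joined) is not None

SOURCE_LABELS = [
    "__meta_kubernetes_namespace",
    "__meta_kubernetes_service_name",
    "__meta_kubernetes_endpoint_port_name",
]

# Hypothetical discovered targets, for illustration only.
apiserver = {
    "__meta_kubernetes_namespace": "default",
    "__meta_kubernetes_service_name": "kubernetes",
    "__meta_kubernetes_endpoint_port_name": "https",
}
coredns = {
    "__meta_kubernetes_namespace": "kube-system",
    "__meta_kubernetes_service_name": "kube-dns",
    "__meta_kubernetes_endpoint_port_name": "metrics",
}

for target in (apiserver, coredns):
    kept = keep_target(target, SOURCE_LABELS, "default;kubernetes;https")
    print(target["__meta_kubernetes_service_name"], "kept" if kept else "dropped")
```

Only the first label set satisfies all three conditions at once, which is why a single regex over the joined value is enough to single out the api-server.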

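2.1 Label fields

source_labels: the source labels, that is, the label names as they exist before relabeling is applied

target_label: the label that receives the result of the action

regex: regular expression matched against the values of the source labels

replacement: the value written to target_label; it can reference the capture groups of regex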

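2.2 Actions in detail

| Action | Meaning |
|---|---|
| replace | Matches regex against the values of the source labels and writes replacement, which can reference the capture groups, to target_label |
| keep | Scrapes only the targets whose source_labels match regex; targets that do not match are dropped |
| drop | Drops the targets whose source_labels match regex; only targets that do not match are scraped |
| hashmod | Hashes the values of source_labels and takes the result modulo a user-defined modulus, which makes it possible to group or shard targets |
| labelmap | Matches regex against all label names and copies the value of each matching label to a new label whose name is given by replacement, using capture-group references |
| labelkeep | Matches regex against all label names; labels that do not match are removed from the label set |
| labeldrop | Matches regex against all label names; labels that match are removed from the label set |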
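The label-name actions and hashmod in the table are easier to follow on a concrete label set. The sketch below is an illustrative Python approximation, not Prometheus code: the function names and the sample node labels are assumptions, group references use Python's `\1` where Prometheus uses `$1`, and the hashmod helper follows my understanding that Prometheus takes the last 8 bytes of an MD5 digest as a big-endian integer.

```python
import hashlib
import re

def labelmap(labels, regex, replacement=r"\1"):
    """Copy each label whose name fully matches `regex` to a renamed label."""
    pattern = re.compile(regex)
    result = dict(labels)  # the original labels stay in place
    for name, value in labels.items():
        match = pattern.fullmatch(name)
        if match:
            result[match.expand(replacement)] = value
    return result

def labelkeep(labels, regex):
    """Keep only the labels whose names fully match `regex`."""
    return {name: value for name, value in labels.items() if re.fullmatch(regex, name)}

def labeldrop(labels, regex):
    """Remove the labels whose names fully match `regex`."""
    return {name: value for name, value in labels.items() if not re.fullmatch(regex, name)}

def hashmod(labels, source_labels, modulus, separator=";"):
    """Hash the joined source label values and reduce the hash modulo `modulus`."""
    joined = separator.join(labels.get(name, "") for name in source_labels)
    digest = hashlib.md5(joined.encode()).digest()
    return int.from_bytes(digest[8:], "big") % modulus

# Hypothetical labels of one discovered node.
node = {
    "__address__": "192.168.31.111:10250",
    "__meta_kubernetes_node_label_kubernetes_io_os": "linux",
}

mapped = labelmap(node, r"__meta_kubernetes_node_label_(.+)")
print(mapped["kubernetes_io_os"])
print(sorted(labeldrop(mapped, r"__meta_.+")))
print(sorted(labelkeep(mapped, r"__address__")))
print(hashmod(node, ["__address__"], 3))  # shard number in the range 0..2
```

A common use of hashmod is to follow it with a `keep` rule on the computed shard number, so that each of several Prometheus servers scrapes only its own share of the targets.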

2.3 Discovery roles

- node
- service
- pod
- endpoints
- endpointslice (endpoints split into slices)
- ingress

2.4 Monitoring the api-server

    root@k8s-master-01:~# kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.200.0.1   <none>        443/TCP   126d
    root@k8s-master-01:~# kubectl get ep
    NAME         ENDPOINTS                                                     AGE
    kubernetes   192.168.31.101:6443,192.168.31.102:6443,192.168.31.103:6443   126d

Monitoring the api-server gives an indication of the health of the cluster.

    - job_name: 'kubernetes-apiserver'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https

2.5 Monitoring kube-dns

When creating the kube-dns Service, add the following annotations to its metadata:

    annotations:
      prometheus.io/port: "9153"
      prometheus.io/scrape: "true"

The annotations prometheus.io/port: 9153 and prometheus.io/scrape: true then show up on the kube-dns Service, and only then can Prometheus discover kube-dns.

    root@k8s-master-01:/opt/k8s-data/yaml/prometheus-files/case1/prometheus-files/case# kubectl describe svc kube-dns -n kube-system
    Name:              kube-dns
    Namespace:         kube-system
    Labels:            addonmanager.kubernetes.io/mode=Reconcile
                       k8s-app=kube-dns
                       kubernetes.io/cluster-service=true
                       kubernetes.io/name=CoreDNS
    Annotations:       prometheus.io/port: 9153
                       prometheus.io/scrape: true

The matching itself is configured in the Prometheus ConfigMap. The following job matches endpoints in any namespace:

    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints  # the role is endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]  # value of the prometheus.io/scrape annotation
        action: keep
        regex: true  # keep the target if the value is true and continue with the next rules; otherwise drop it
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)  # matches http and https
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)  # at least one character, any length
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2  # combine the address and the annotated port into an ip:port value for __address__
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name
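The `__address__` rule in this job is the least obvious one: it joins the discovered address and the prometheus.io/port annotation with `;`, strips any existing port, and appends the annotated port. The sketch below replays that rule in Python as an illustration under assumptions. The helper name `relabel_replace` and the sample address 10.100.1.5:53 are hypothetical, and the replacement is written as `\1:\2` because Python's `re` does not use the `$1` syntax.

```python
import re

def relabel_replace(labels, source_labels, regex, replacement, target_label, separator=";"):
    """Apply a `replace` rule and return the resulting label set.

    When the regex does not match the whole joined value, the labels are
    returned unchanged, as a non-matching replace rule does nothing.
    """
    joined = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, joined)
    updated = dict(labels)
    if match is not None:
        updated[target_label] = match.expand(replacement)
    return updated

PORT_ANNOTATION = "__meta_kubernetes_service_annotation_prometheus_io_port"
ADDRESS_RULE = dict(
    source_labels=["__address__", PORT_ANNOTATION],
    regex=r"([^:]+)(?::\d+)?;(\d+)",
    replacement=r"\1:\2",
    target_label="__address__",
)

# Hypothetical endpoint: discovered on the DNS port, annotated with the metrics port.
annotated = {"__address__": "10.100.1.5:53", PORT_ANNOTATION: "9153"}
unannotated = {"__address__": "10.100.1.5:53"}

print(relabel_replace(annotated, **ADDRESS_RULE)["__address__"])
print(relabel_replace(unannotated, **ADDRESS_RULE)["__address__"])
```

The second call shows why the rule is safe for services without the port annotation: the joined value ends in a bare `;`, the regex fails to match, and the discovered address is left as it was.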

2.6 cAdvisor discovery

- Make sure cAdvisor runs on every node (managed by a DaemonSet)
- Service port: 8080

    - job_name: 'kubernetes-node-cadvisor'  # job name
      kubernetes_sd_configs:                # discovery through Kubernetes
      - role: node                          # role
      scheme: https                         # protocol
      tls_config:                           # certificate settings
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:                      # relabeling rules
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)  # matches the node labels
      - target_label: __address__           # rewrite the scrape address
        replacement: kubernetes.default.svc:443   # send the request to the api-server Service
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor  # $1 is the node name
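After these rules run, the scrape no longer goes to the node directly: the scheme, address and path labels together describe a request to the api-server, which proxies it to the node. The short Python sketch below assembles that URL from the three special labels to make the effect visible. It is an illustration only; the helper names and the node name k8s-node-1 are assumptions.

```python
def scrape_url(labels):
    """Build the scrape URL from the special target labels."""
    scheme = labels.get("__scheme__", "http")
    path = labels.get("__metrics_path__", "/metrics")
    return f"{scheme}://{labels['__address__']}{path}"

def cadvisor_labels(node_name):
    """Mimic the address and path rewrites of the job for one node."""
    return {
        "__scheme__": "https",
        "__address__": "kubernetes.default.svc:443",
        "__metrics_path__": f"/api/v1/nodes/{node_name}/proxy/metrics/cadvisor",
    }

# Hypothetical node name, for illustration only.
print(scrape_url(cadvisor_labels("k8s-node-1")))
```

Because every node shares the same `__address__`, the node name in the path is the only part of the URL that distinguishes one target from another.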

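3. Discovering Kubernetes services from a Prometheus outside the cluster

3.1 Create authorization for external access

Create a ServiceAccount and the RBAC rules that allow it to query the Kubernetes API: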

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: monitoring
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus
    rules:
    - apiGroups:
      - ""
      resources:
      - nodes
      - services
      - endpoints
      - pods
      - nodes/proxy
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - "extensions"
      resources:
      - ingresses
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - configmaps
      - nodes/metrics
      verbs:
      - get
    - nonResourceURLs:
      - /metrics
      verbs:
      - get
    ---
    #apiVersion: rbac.authorization.k8s.io/v1beta1
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: monitoring

Apply the manifest:

    kubectl apply -f case4-prom-rbac.yaml
    serviceaccount/prometheus created
    clusterrole.rbac.authorization.k8s.io/prometheus created
    clusterrolebinding.rbac.authorization.k8s.io/prometheus created

3.2 Get the token

List the secrets:

    # kubectl get secrets -n monitoring
    NAME                     TYPE                                  DATA   AGE
    default-token-cpcf8      kubernetes.io/service-account-token   3      25h
    monitor-token-q8rcq      kubernetes.io/service-account-token   3      24h
    prometheus-token-5stl8   kubernetes.io/service-account-token   3      114s

Show the details of the secret and save the token:

    # kubectl describe secrets prometheus-token-5stl8 -n monitoring
    Name:         prometheus-token-5stl8
    Namespace:    monitoring
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: prometheus
                  kubernetes.io/service-account.uid: c4bd516b-bf0a-4427-8f40-447b5e4c2f6c
    Type:         kubernetes.io/service-account-token
    Data
    ====
    ca.crt:     1350 bytes
    namespace:  10 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjUxUzlHTUdYcUN2YnA2UU9ndEFSbEZJaUtySVFxdWJvVGc0TEFIR2NhbGcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJtb25pdG9yaW5nIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InByb21ldGhldXMtdG9rZW4tNXN0bDgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoicHJvbWV0aGV1cyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImM0YmQ1MTZiLWJmMGEtNDQyNy04ZjQwLTQ0N2I1ZTRjMmY2YyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDptb25pdG9yaW5nOnByb21ldGhldXMifQ.D19ZpwmkdnEu5_jzN1oGqBRC2uu4euEoJT3U4WYRIjKIOGDqnB_cZrUUXkLBRPGDkR7VrYV8QZFyeeqswFZFa9nm-TEmJKAPGozL75GSZ5RSe8aMBm44P3mci5fx06jcio_qFUUkhgkWghkI84PYzjIrVhnN-LREVtn50lfpmzJLM26rCuQ2jaz7m3M-G8yrc_BI_nQ3N1MrgNAzupWOhh3n_bACXrY1SCcT2tVMLL8YLTtZVlCpaAUxTpaNMbCQVrXZQNyo0ZCLaqTAPbGe5fnDvfcago9U5Czfrihs3h5jJTjRF81yDE9-hVi8qtZPeIsGfzxIh75H5xH2WM924g

Save the token in the directory of the binary-installed Prometheus server:

root@prometheus-2:/apps/prometheus# cat /apps/prometheus/k8s.token

eyJhbGciOiJSUzI1NiIsImtpZCI6IjUxUzlHTUdYcUN2YnA2UU9ndEFSbEZJaUtySVFxdWJvVGc0TEFIR2NhbGcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJtb25pdG9yaW5nIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InByb21ldGhldXMtdG9rZW4tNXN0bDgiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoicHJvbWV0aGV1cyIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImM0YmQ1MTZiLWJmMGEtNDQyNy04ZjQwLTQ0N2I1ZTRjMmY2YyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDptb25pdG9yaW5nOnByb21ldGhldXMifQ.D19ZpwmkdnEu5_jzN1oGqBRC2uu4euEoJT3U4WYRIjKIOGDqnB_cZrUUXkLBRPGDkR7VrYV8QZFyeeqswFZFa9nm-TEmJKAPGozL75GSZ5RSe8aMBm44P3mci5fx06jcio_qFUUkhgkWghkI84PYzjIrVhnN-LREVtn50lfpmzJLM26rCuQ2jaz7m3M-G8yrc_BI_nQ3N1MrgNAzupWOhh3n_bACXrY1SCcT2tVMLL8YLTtZVlCpaAUxTpaNMbCQVrXZQNyo0ZCLaqTAPbGe5fnDvfcago9U5Czfrihs3h5jJTjRF81yDE9-hVi8qtZPeIsGfzxIh75H5xH2WM924g
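A service account token is a JWT, so its middle segment can be decoded to confirm that the saved file really belongs to the prometheus ServiceAccount before it is wired into the configuration. The Python sketch below shows one way to do that without verifying the signature. The helper names are assumptions, and the demo token is a synthetic, unsigned stand-in built in the code rather than the real token.

```python
import base64
import json

def decode_jwt_payload(token):
    """Return the claims of a JWT without verifying its signature."""
    parts = token.strip().split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    payload = parts[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))

def _encode_segment(obj):
    """Encode a dict as an unpadded base64url JSON segment (demo helper)."""
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()

# Synthetic, unsigned token used only for this demonstration.
demo_token = ".".join([
    _encode_segment({"alg": "none"}),
    _encode_segment({"sub": "system:serviceaccount:monitoring:prometheus"}),
    "signature",
])

claims = decode_jwt_payload(demo_token)
print(claims["sub"])
```

To inspect the real file, the same function can be called on the contents of /apps/prometheus/k8s.token; the `sub` claim should name the monitoring namespace and the prometheus ServiceAccount.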

3.3 Configure api-server monitoring

Append the following to prometheus.yml:

    - job_name: 'kubernetes-apiservers-monitor'
      kubernetes_sd_configs:
      - role: endpoints
        api_server: https://192.168.31.188:6443
        tls_config:
          insecure_skip_verify: true  # skip certificate verification
        bearer_token_file: /apps/prometheus/k8s.token  # path to the token file
      scheme: https
      tls_config:
        insecure_skip_verify: true
      bearer_token_file: /apps/prometheus/k8s.token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
      - target_label: __address__
        replacement: 192.168.31.188:6443  # address of the api-server

Restart the Prometheus service:

    root@prometheus-2:/apps/prometheus# systemctl restart prometheus.service

The api-server metrics are now collected and visible in Prometheus.

3.4 Configure node monitoring

    - job_name: 'kubernetes-nodes-monitor'
      scheme: http
      tls_config:
        insecure_skip_verify: true
      bearer_token_file: /apps/prometheus/k8s.token
      kubernetes_sd_configs:
      - role: node
        api_server: https://192.168.31.188:6443
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /apps/prometheus/k8s.token
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)

After restarting the Prometheus service, the nodes show up as targets:

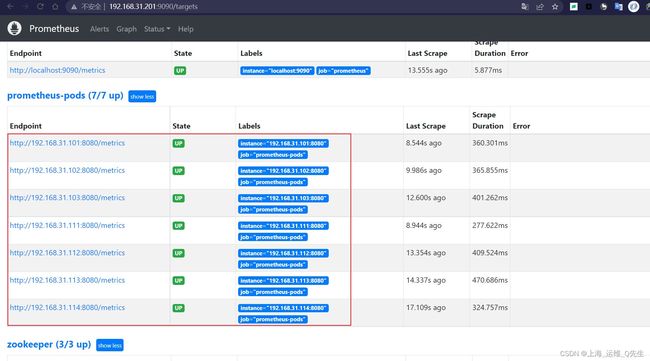

3.5 Configure Pod monitoring

    - job_name: 'kubernetes-pods-monitor'
      kubernetes_sd_configs:
      - role: pod
        api_server: https://192.168.31.101:6443
        tls_config:
          insecure_skip_verify: true
        bearer_token_file: /apps/prometheus/k8s.token
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name

The Pod template of the workload needs the following annotations:

    template:
      metadata:
        annotations:
          prometheus.io/port: "9180"
          prometheus.io/scrape: "true"

The Pods then become visible in Prometheus.

4. Automatic discovery with Consul

Consul is a distributed key/value store that is commonly used for service registration and service discovery.

4.1 Registering services in Consul

Register zookeeper-1 and zookeeper-2 in Consul:

    curl -X PUT -d '{"id": "zookeeper-1","name": "zookeeper-1","address": "192.168.31.121","port": 9100,"tags": ["zookeeper","node-exporter"],"checks": [{"http": "http://192.168.31.121:9100/","interval": "5s"}]}' http://192.168.31.121:8500/v1/agent/service/register
    curl -X PUT -d '{"id": "zookeeper-2","name": "zookeeper-2","address": "192.168.31.122","port": 9100,"tags": ["zookeeper","node-exporter"],"checks": [{"http": "http://192.168.31.122:9100/","interval": "5s"}]}' http://192.168.31.121:8500/v1/agent/service/register

Now add a third service:

    curl -X PUT -d '{"id": "zookeeper-3","name": "zookeeper-3","address": "192.168.31.123","port": 9100,"tags": ["zookeeper","node-exporter"],"checks": [{"http": "http://192.168.31.123:9100/","interval": "5s"}]}' http://192.168.31.121:8500/v1/agent/service/register

The new target is picked up without editing the configuration file and restarting Prometheus each time.
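The three curl commands differ only in the service ID and address, so the registration payload lends itself to being generated. The Python sketch below builds the same JSON body and the PUT request for /v1/agent/service/register using only the standard library. It is an illustration under assumptions: the function names are made up, and the request is built but deliberately not sent.

```python
import json
import urllib.request

def service_definition(service_id, address, port=9100, tags=("zookeeper", "node-exporter")):
    """Build a Consul service definition with an HTTP health check."""
    return {
        "id": service_id,
        "name": service_id,
        "address": address,
        "port": port,
        "tags": list(tags),
        "checks": [{"http": f"http://{address}:{port}/", "interval": "5s"}],
    }

def register_request(consul_url, definition):
    """Build (but do not send) the PUT request that registers the service."""
    return urllib.request.Request(
        f"{consul_url}/v1/agent/service/register",
        data=json.dumps(definition).encode(),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )

request = register_request(
    "http://192.168.31.121:8500",
    service_definition("zookeeper-3", "192.168.31.123"),
)
print(request.get_method(), request.full_url)
print(request.data.decode())
# Sending is left to the caller, e.g. urllib.request.urlopen(request, timeout=5)
```

Keeping the payload builder separate from the network call makes it possible to check the generated JSON before anything is registered.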

4.2 Deregistering services from Consul

A service is removed by its ID, which is the last segment of the URL:

    curl --request PUT http://192.168.31.121:8500/v1/agent/service/deregister/cadvisor-1
    curl --request PUT http://192.168.31.121:8500/v1/agent/service/deregister/zookeeper

5. File-based service discovery

5.1 Write the sd_configs file

Prometheus loads targets from a local file; when the file changes, the target list in Prometheus changes with it.

    mkdir -p /apps/prometheus/file_sd
    cd /apps/prometheus/file_sd
    vim sd_my_server.json

The file contains:

    [
      {
        "targets": ["192.168.31.121:9100","192.168.31.122:9100","192.168.31.123:9100"]
      }
    ]

5.2 Reference the file from Prometheus

    - job_name: "file_sd_my_server"
      file_sd_configs:
      - files:
        - /apps/prometheus/file_sd/sd_my_server.json
        refresh_interval: 10s

Restart Prometheus:

    systemctl restart prometheus

Edit the file and append two more servers:

    [
      {
        "targets": ["192.168.31.121:9100","192.168.31.122:9100","192.168.31.123:9100","192.168.31.111:9100","192.168.31.112:9100"]
      }
    ]

This time no restart is needed; the new targets appear after the next refresh.
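Since Prometheus re-reads the file on its own, whatever generates the file should avoid leaving it half-written. One common approach, sketched below in Python as an assumption rather than something the setup above requires, is to write to a temporary file in the same directory and rename it into place. The function name `write_targets` is hypothetical, and the demo writes to a scratch directory instead of /apps/prometheus/file_sd.

```python
import json
import os
import tempfile

def write_targets(path, targets, labels=None):
    """Atomically write a single file_sd target group to `path`."""
    group = {"targets": list(targets)}
    if labels:
        group["labels"] = dict(labels)
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as handle:
            json.dump([group], handle, indent=2)
        os.chmod(tmp_path, 0o644)   # mkstemp creates the file readable by the owner only
        os.replace(tmp_path, path)  # rename within one directory replaces the file in one step
    except BaseException:
        os.unlink(tmp_path)
        raise

# Demo in a scratch directory; the real path would be the one in file_sd_configs.
demo_dir = tempfile.mkdtemp()
demo_file = os.path.join(demo_dir, "sd_my_server.json")
nodes = ["192.168.31.121", "192.168.31.122", "192.168.31.123"]
write_targets(demo_file, [f"{ip}:9100" for ip in nodes])
write_targets(demo_file, [f"{ip}:9100" for ip in nodes + ["192.168.31.111", "192.168.31.112"]])

with open(demo_file) as handle:
    print(json.load(handle)[0]["targets"])
```

The temporary file carries a `.tmp` suffix, so it does not match the path listed under `files` while it is being written.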

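6. DNS service discovery

6.1 Configure DNS

A hosts file stands in for DNS records here. Prometheus's DNS discovery queries the name servers configured in /etc/resolv.conf, so these entries generally need to be served by a resolver that reads the hosts file (dnsmasq, for example).

    192.168.31.111 node-1 k8s-node-1
    192.168.31.112 node-2 k8s-node-2
    192.168.31.113 node-3 k8s-node-3
    192.168.31.114 node-4 k8s-node-4

6.2 Edit prometheus.yml

    - job_name: "file_sd_my_server"
      file_sd_configs:
      - files:
        - /apps/prometheus/file_sd/sd_my_server.json
        refresh_interval: 10s
    - job_name: "dns-server-monitor"
      metrics_path: /metrics
      dns_sd_configs:
      - names: ["k8s-node-1","k8s-node-2","k8s-node-3"]
        type: A
        port: 9100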

6.3 Reload Prometheus

    curl -X POST http://192.168.31.201:9090/-/reload
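The reload endpoint only responds when Prometheus was started with the `--web.enable-lifecycle` flag. For scripts that already use Python, the same call can be made with the standard library; the sketch below builds the request and leaves sending it to the caller. The function name `reload_request` is an assumption made for this example.

```python
import urllib.request

def reload_request(base_url):
    """Build the POST request for the /-/reload endpoint of Prometheus."""
    url = f"{base_url.rstrip('/')}/-/reload"
    return urllib.request.Request(url, data=b"", method="POST")

request = reload_request("http://192.168.31.201:9090")
print(request.get_method(), request.full_url)
# To trigger the reload: urllib.request.urlopen(request, timeout=5)
```

Unlike a restart, a reload keeps the process running and only re-reads the configuration.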

When a record in the hosts file changes, for example:

    192.168.31.114 node-3 k8s-node-3

no restart is needed; the target information is updated automatically.