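Spring Boot: integrating Quartz with persistence

Preface

Our project has a scheduled-task service that runs all of the scheduled jobs. This post records a demo extracted from it.

The project uses Spring Boot 2.3.1.RELEASE.

Official site

Quartz Enterprise Job Scheduler

Reference

https://blog.csdn.net/sqlgao22/category_9339817.html

Reference

Spring Boot + Quartz: a distributed scheduled-task cluster (sqlgao22's blog, CSDN)

Field descriptions

Meaning of the Quartz tables and their columns (sqlgao22's blog, CSDN)

The table scripts can be downloaded from the official site. Unpack the download and look in \docs\dbTables, which contains scripts for Oracle, MySQL and other databases.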
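You can check whether the script from `\docs\dbTables` has been applied by comparing the database's tables with the list the Quartz 2.x scripts create. The sketch below uses a hypothetical helper (`QuartzTableCheck` is not part of Quartz). It takes table names you have already read, for example through JDBC `DatabaseMetaData.getTables`, and returns the expected tables that are missing. The table list assumes Quartz 2.x; the 1.x scripts differ.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

/** Hypothetical helper: reports which Quartz 2.x job-store tables are missing. */
final class QuartzTableCheck {

    // Table names (without prefix) created by the Quartz 2.x tables_*.sql scripts
    static final List<String> QUARTZ_TABLES = Collections.unmodifiableList(Arrays.asList(
            "JOB_DETAILS", "TRIGGERS", "SIMPLE_TRIGGERS", "CRON_TRIGGERS",
            "SIMPROP_TRIGGERS", "BLOB_TRIGGERS", "CALENDARS",
            "PAUSED_TRIGGER_GRPS", "FIRED_TRIGGERS", "SCHEDULER_STATE", "LOCKS"));

    private QuartzTableCheck() {
    }

    /** Returns the prefixed table names absent from existingTables (compared case-insensitively). */
    static List<String> missingTables(Set<String> existingTables, String prefix) {
        Set<String> existing = new HashSet<>();
        for (String name : existingTables) {
            existing.add(name.toUpperCase(Locale.ROOT));
        }
        List<String> missing = new ArrayList<>();
        for (String table : QUARTZ_TABLES) {
            String fullName = (prefix + table).toUpperCase(Locale.ROOT);
            if (!existing.contains(fullName)) {
                missing.add(fullName);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // In a real check these names would come from DatabaseMetaData.getTables(...)
        Set<String> found = new HashSet<>(Arrays.asList("qrtz_triggers", "QRTZ_LOCKS"));
        System.out.println(missingTables(found, "QRTZ_"));
    }
}
```

The prefix argument matches `tablePrefix: QRTZ_` in the configuration used later in this post.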

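Workflow-related tables

All tables used by Activiti start with ACT_. There are 28 of them in total.

ACT_RE_*:

'RE' stands for repository. These are the tables operated on by the RepositoryService interface. Tables with this prefix contain static information such as process definitions and process resources (images, rules, etc.).

ACT_RU_*:

'RU' stands for runtime (RuntimeService). These runtime tables store process variables, user tasks, variables, jobs and other runtime data. Activiti keeps runtime data only while a process instance is executing and deletes these records when the instance ends, which keeps the runtime tables small and fast.

ACT_ID_*:

'ID' stands for identity (organization). These are the tables operated on by the IdentityService interface. They hold user records and the users and groups used in processes, that is, identity information such as users and groups.

ACT_HI_*:

'HI' stands for history. These are the history tables used by HistoryService. They contain data about past process executions, such as finished process instances, variables and tasks.

ACT_GE_*:

Global general-purpose data and settings (general), used in all kinds of situations.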
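The prefix convention can be expressed as a small lookup. This is an illustrative sketch only. `ActivitiTableCategory` is a made-up name, and any table name that does not follow the `ACT_xx_` pattern with one of the five prefixes above is rejected.

```java
import java.util.Locale;

/** Illustrative sketch: classify an Activiti table by its ACT_xx_ prefix. */
enum ActivitiTableCategory {
    RE("repository: static data such as process definitions (RepositoryService)"),
    RU("runtime: data kept only while a process instance runs (RuntimeService)"),
    ID("identity: users and groups (IdentityService)"),
    HI("history: finished instances, variables, tasks (HistoryService)"),
    GE("general: global data and settings");

    final String description;

    ActivitiTableCategory(String description) {
        this.description = description;
    }

    /** Maps names such as "ACT_RU_TASK" to their category; rejects anything else. */
    static ActivitiTableCategory of(String tableName) {
        String upper = tableName.toUpperCase(Locale.ROOT);
        // Expected shape: "ACT_" + two-letter code + "_" + at least one more character
        if (upper.length() < 8 || !upper.startsWith("ACT_") || upper.charAt(6) != '_') {
            throw new IllegalArgumentException("Not an ACT_xx_ table name: " + tableName);
        }
        // valueOf throws IllegalArgumentException for codes other than the five above
        return valueOf(upper.substring(4, 6));
    }

    public static void main(String[] args) {
        ActivitiTableCategory category = of("ACT_RU_TASK");
        System.out.println(category + " -> " + category.description);
    }
}
```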

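The more important tables are listed below.

1. General data table

ACT_GE_BYTEARRAY

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version |
| NAME_ | NVARCHAR2 | 255 | Resource name |
| DEPLOYMENT_ID_ | NVARCHAR2 | 255 | A single deployment can add several resources; this column references the primary key of the deployment table ACT_RE_DEPLOYMENT |
| BYTES_ | NVARCHAR2 | 255 | Resource content |
| GENERATED_ | NVARCHAR2 | 255 | Whether the row was generated automatically by Activiti: 0 means false, 1 means true |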

Property table

ACT_GE_PROPERTY

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| NAME_ | NVARCHAR2 | 64 | Property name |
| VALUE_ | NVARCHAR2 | 300 | Property value |
| REV_ | NVARCHAR2 | 255 | Data version number |

Deployment table

ACT_RE_DEPLOYMENT

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| NAME_ | NVARCHAR2 | 300 | Deployment name |
| DEPLOYMENT_TIME_ | timestamp | 255 | Deployment time |

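Process definition table

ACT_RE_PROCDEF

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version |
| CATEGORY_ | NVARCHAR2 | 255 | Process definition category |
| NAME_ | NVARCHAR2 | 255 | Process definition name |
| KEY_ | NVARCHAR2 | 255 | Process definition key |
| VERSION_ | NVARCHAR2 | 255 | Process definition version |
| DEPLOYMENT_ID_ | NVARCHAR2 | 255 | ID of the deployment (ACT_RE_DEPLOYMENT) that this process definition belongs to |
| RESOURCE_NAME_ | NVARCHAR2 | 255 | Resource name of the process definition, usually the relative path of the process file |
| DGRM_RESOURCE_NAME_ | NVARCHAR2 | 255 | Resource name of the process diagram for this process definition |
| HAS_START_FORM_KEY_ | NVARCHAR2 | 255 | Whether the process file defines a start form, which is configured with the activiti:formKey attribute on the start event |
| SUSPENSION_STATE_ | NVARCHAR2 | 255 | Whether the process definition is active or suspended; a suspended definition cannot start processes |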

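Identity (user) table

ACT_ID_USER

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version |
| FIRST_ | NVARCHAR2 | 255 | User's first (given) name |
| LAST_ | NVARCHAR2 | 255 | User's last name (surname) |
| EMAIL_ | NVARCHAR2 | 255 | User email |
| PWD_ | NVARCHAR2 | 255 | User password |
| PICTURE_ID_ |  |  | User picture: ID of the corresponding row in the resource table |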

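User account (info) table

ACT_ID_INFO

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 300 | Data version |
| USER_ID_ | NVARCHAR2 | 255 | ID of the corresponding user row |
| TYPE_ | NVARCHAR2 | 255 | Info type; currently one of account, userinfo or NULL |
| KEY_ | NVARCHAR2 | 255 | Key of the entry |
| VALUE_ | NVARCHAR2 | 255 | Value of the entry |
| PASSWORD_ | NVARCHAR2 | 255 | Password field of the user account |
| PARENT_ID_ | NVARCHAR2 | 255 | ID of the parent info row. A row with a parent ID is a detail entry of that user account (info) row; for example, if an account entry has details, those details are stored as its child rows. |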

User group table

ACT_ID_GROUP

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version |
| NAME_ | NVARCHAR2 | 255 | Group name |
| TYPE_ | NVARCHAR2 | 255 | Group type |

Membership (relationship) table

ACT_ID_MEMBERSHIP

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| USER_ID_ | NVARCHAR2 | 255 | User ID; must not be null |
| GROUP_ID_ | NVARCHAR2 | 255 | Group ID; must not be null |
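ACT_ID_MEMBERSHIP is a plain many-to-many link between users and groups, with each row pairing one USER_ID_ with one GROUP_ID_. Below is a minimal sketch of reading it, assuming the rows have already been loaded. The `Memberships` class and the sample IDs are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical sketch: turn ACT_ID_MEMBERSHIP rows into a group -> users view. */
final class Memberships {

    /** One row of ACT_ID_MEMBERSHIP: (USER_ID_, GROUP_ID_). */
    static final class Row {
        final String userId;
        final String groupId;

        Row(String userId, String groupId) {
            this.userId = userId;
            this.groupId = groupId;
        }
    }

    private Memberships() {
    }

    /** Groups user IDs by group ID, keeping row order within each group. */
    static Map<String, List<String>> usersByGroup(List<Row> rows) {
        Map<String, List<String>> result = new TreeMap<>();
        for (Row row : rows) {
            result.computeIfAbsent(row.groupId, group -> new ArrayList<>()).add(row.userId);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Row> rows = Arrays.asList(
                new Row("alice", "admin"), new Row("bob", "user"), new Row("alice", "user"));
        System.out.println(usersByGroup(rows));
    }
}
```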

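Process instance (execution) table

ACT_RU_EXECUTION

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version |
| PROC_INST_ID_ | NVARCHAR2 | 255 | Process instance ID |
| BUSINESS_KEY_ | NVARCHAR2 | 255 | Business key specified when the process was started |
| PARENT_ID_ | NVARCHAR2 | 255 | ID of the parent process instance (execution). A process instance may spawn executions; a new execution uses this column to identify the instance it belongs to. |
| PROC_DEF_ID_ | NVARCHAR2 | 255 | ID of the process definition |
| SUPER_EXEC_ | NVARCHAR2 | 255 | ID of the parent (super) execution. An execution can start a new process instance; that instance uses this column to identify the execution it belongs to. |
| ACT_ID_ | NVARCHAR2 | 255 | ID of the activity the execution is currently at, as defined in the process file |
| IS_ACTIVE_ | NVARCHAR2 | 255 | Whether the execution is active |
| IS_CONCURRENT_ | NVARCHAR2 | 255 | Whether the execution is running concurrently |
| IS_SCOPE_ | NVARCHAR2 | 255 | Whether this is a scope execution |
| IS_EVENT_SCOPE_ | NVARCHAR2 | 255 | Whether this is an event scope |
| SUSPENSION_STATE_ | NVARCHAR2 | 255 | Suspension state of the process |
| CACHED_ENT_STATE_ | NVARCHAR2 | 255 | Cached entity state, a value from 0 to 7 |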

Task table

ACT_RU_TASK

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version |
| EXECUTION_ID_ | NVARCHAR2 | 255 | ID of the execution the task belongs to |
| PROC_INST_ID_ | NVARCHAR2 | 255 | ID of the corresponding process instance |
| PROC_DEF_ID_ | NVARCHAR2 | 255 | ID of the corresponding process definition |
| NAME_ | NVARCHAR2 | 255 | Task name, defined in the process file |
| PARENT_TASK_ID_ | NVARCHAR2 | 255 | Parent task ID; set only for subtasks |
| DESCRIPTION_ | NVARCHAR2 | 255 | Task description, configured in the process file |
| TASK_DEF_KEY_ | NVARCHAR2 | 255 | ID of the task definition, defined in the process file |
| OWNER_ | NVARCHAR2 | 255 | Task owner (no foreign key) |
| ASSIGNEE_ | NVARCHAR2 | 255 | Person assigned to carry out the task (no foreign key) |
| DELEGATION_ | NVARCHAR2 | 255 | Delegation state: pending or resolved |
| PRIORITY_ | int | 50 | Task priority |
| CREATE_TIME_ | timestamp | 255 | Task creation time |
| DUE_DATE_ | datetime | 255 | Task due date |

Process variable table

ACT_RU_VARIABLE

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version |
| TYPE_ | NVARCHAR2 | 255 | Variable type: boolean, bytes, serializable, date, double, integer, jpa-entity, long, null, short or string. These types are provided by Activiti, and custom types can be added to extend the list. |
| NAME_ | NVARCHAR2 | 255 | Variable name |
| EXECUTION_ID_ | NVARCHAR2 | 255 | ID of the execution this variable belongs to; may be null |
| PROC_INST_ID_ | NVARCHAR2 | 255 | ID of the process instance this variable belongs to; may be null |
| TASK_ID_ | NVARCHAR2 | 255 | Task ID; set when the variable is a task variable |
| BYTEARRAY_ID_ | NVARCHAR2 | 255 | If the value is a serialized object, it can be stored as a resource in the resource table, and this column holds the ID of that row |
| DOUBLE_ | DOUBLE | 255 | Holds the value when the type is double |
| LONG_ | LONG | 255 | Holds the value when the type is long |
| TEXT_ | NVARCHAR2 | 4000 | Holds text values |
| TEXT2_ | NVARCHAR2 | 255 | Like TEXT_, also holds text values |
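Based on the column descriptions above, the column that holds a variable's value depends on TYPE_. The sketch below covers only the four cases the table spells out. How Activiti stores the other types (boolean, date, integer and so on) is not described here, so they are deliberately left out. `VariableColumns` is a hypothetical name.

```java
/** Hypothetical helper: value column for the variable types described in the ACT_RU_VARIABLE table. */
final class VariableColumns {

    private VariableColumns() {
    }

    static String valueColumn(String type) {
        switch (type) {
            case "double":
                return "DOUBLE_";
            case "long":
                return "LONG_";
            case "string":
                return "TEXT_";
            case "serializable":
                return "BYTEARRAY_ID_"; // ID of the serialized object's row in the resource table
            default:
                throw new IllegalArgumentException("Type not covered by this sketch: " + type);
        }
    }

    public static void main(String[] args) {
        System.out.println(valueColumn("double"));
    }
}
```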

Identity link table (links between processes and identities)

ACT_RU_IDENTITYLINK

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version |
| GROUP_ID_ | NVARCHAR2 | 255 | Group ID in this link |
| TYPE_ | NVARCHAR2 | 255 | Type of the link |
| USER_ID_ | NVARCHAR2 | 255 | User ID in this link |
| TASK_ID_ | NVARCHAR2 | 255 | Task ID in this link |
| PROC_DEF_ID_ | NVARCHAR2 | 255 | Process definition ID in this link |

Job table

ACT_RU_JOB

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version |
| TYPE_ | NVARCHAR2 | 255 | Either message or timer |
| LOCK_EXP_TIME_ | NVARCHAR2 | 255 | Time at which the job lock expires |
| LOCK_OWNER_ | NVARCHAR2 | 255 | Identifier of the lock holder; a UUID by default |
| EXCLUSIVE_ | NVARCHAR2 | 255 | Whether the job must run exclusively |
| EXECUTION_ID_ | NVARCHAR2 | 255 | ID of the execution that created the job |
| PROCESS_INSTANCE_ID_ | NVARCHAR2 | 255 | Process instance ID |
| RETRIES_ | NVARCHAR2 | 255 | Remaining number of attempts; defaults to 3 |
| EXCEPTION_STACK_ID_ | NVARCHAR2 | 255 | ID of the stored exception stack trace |
| EXCEPTION_MSG_ | NVARCHAR2 | 4000 | Exception message |
| DUEDATE_ | DATETIME | 255 | Time the job is due to run |
| REPEAT_ | INTEGER | 255 | Number of times the job repeats |
| HANDLER_TYPE_ | NVARCHAR2 | 255 | Identifies the handler class of the job |
| HANDLER_CFG_ | NVARCHAR2 | 255 | Job-related configuration data |

Event subscription table

ACT_RU_EVENT_SUBSCR

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version |
| EVENT_TYPE_ | NVARCHAR2 | 255 | Event type |
| EVENT_NAME_ | NVARCHAR2 | 255 | Event name |
| EXECUTION_ID_ | NVARCHAR2 | 255 | ID of the execution the event belongs to |
| PROC_INST_ID_ | NVARCHAR2 | 255 | ID of the process instance the event belongs to |
| ACTIVITY_ID_ | NVARCHAR2 | 255 | ID of the specific event |
| CONFIGURATION_ | NVARCHAR2 | 255 | Event configuration |
| CREATED_ | DATETIME | 255 | Time the event was created |

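Historic process instance table

ACT_HI_PROCINST

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| START_ACT_ID_ | NVARCHAR2 | 64 | ID of the start activity |
| END_ACT_ID_ | NVARCHAR2 | 255 | ID of the last activity of the process |
| DELETE_REASON_ | NVARCHAR2 | 255 | Reason the process instance was deleted |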

Historic activity instance (process detail) table

ACT_HI_ACTINST

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| PROC_DEF_ID_ | NVARCHAR2 | 255 | Process definition ID |
| PROC_INST_ID_ | NVARCHAR2 | 255 | Process instance ID |
| EXECUTION_ID_ | NVARCHAR2 | 255 | Execution ID |
| ACT_ID_ | NVARCHAR2 | 255 | Activity ID, defined in the process file |
| ACT_NAME_ | NVARCHAR2 | 255 | Activity name |
| ACT_TYPE_ | NVARCHAR2 | 255 | Activity type; for a start event, for example, the value is startEvent |
| ASSIGNEE_ | NVARCHAR2 | 255 | Activity assignee |
| START_TIME_ | DATETIME | 255 | Activity start time |
| END_TIME_ | DATETIME | 255 | Activity end time |
| DURATION_ | DATETIME | 255 | Activity duration |

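Attachment and comment tables

ACT_HI_ATTACHMENT

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| REV_ | NVARCHAR2 | 255 | Data version number |
| USER_ID_ | NVARCHAR2 | 255 | ID of the user the attachment belongs to |
| DESCRIPTION_ | NVARCHAR2 | 255 | Attachment description |
| TASK_ID_ | NVARCHAR2 | 255 | ID of the task the attachment belongs to |
| PROC_INST_ID_ | NVARCHAR2 | 255 | ID of the corresponding process instance |
| CONTENT_ID_ | NVARCHAR2 | 255 | ID of the attachment content |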

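The ACT_HI_COMMENT table stores more than comments: it also stores certain event data. Because its table name is COMMENT, however, it is usually called the comment table. It has the following columns.

ACT_HI_COMMENT

| Field | Type | Length | Description |
| --- | --- | --- | --- |
| ID_ | NVARCHAR2 | 64 | Primary key |
| TYPE_ | NVARCHAR2 | 255 | Comment type |
| TIME_ | DATETIME | 255 | Time the row was created |
| USER_ID_ | NVARCHAR2 | 255 | ID of the user who created the comment |
| TASK_ID_ | NVARCHAR2 | 255 | ID of the task the comment belongs to |
| PROC_INST_ID_ | NVARCHAR2 | 255 | ID of the corresponding process instance |
| ACTION_ | NVARCHAR2 | 255 | Action identifier of the comment |
| MESSAGE_ | NVARCHAR2 | 255 | Comment message |
| FULL_MSG_ | NVARCHAR2 | 255 | Also holds the comment message |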

Business table (used to test transactions)

```sql
SET NAMES utf8mb4;
SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------
-- Table structure for goods
-- ----------------------------
DROP TABLE IF EXISTS `goods`;
CREATE TABLE `goods` (
  `id` int(11) NOT NULL,
  `name` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  `color_ids` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL,
  PRIMARY KEY (`id`) USING BTREE
) ENGINE = InnoDB CHARACTER SET = utf8 COLLATE = utf8_general_ci ROW_FORMAT = Dynamic;

-- ----------------------------
-- Records of goods
-- ----------------------------
INSERT INTO `goods` VALUES (1, '铅笔', '1,2,3'); -- pencil
INSERT INTO `goods` VALUES (2, '钢笔', '1,2');   -- fountain pen

SET FOREIGN_KEY_CHECKS = 1;
```
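The `color_ids` column packs several IDs into one comma-separated string (`'1,2,3'`), so code that reads `goods` has to split it. Below is a minimal sketch. It assumes the IDs are integers; the post does not show the table they refer to. `ColorIds` is a hypothetical helper name.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: splits the comma-separated goods.color_ids column into IDs. */
final class ColorIds {

    private ColorIds() {
    }

    /** "1,2,3" -> [1, 2, 3]; null or blank (the column is nullable) -> empty list. */
    static List<Integer> parse(String colorIds) {
        List<Integer> ids = new ArrayList<>();
        if (colorIds == null || colorIds.trim().isEmpty()) {
            return ids;
        }
        for (String part : colorIds.split(",")) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                ids.add(Integer.valueOf(trimmed));
            }
        }
        return ids;
    }

    public static void main(String[] args) {
        System.out.println(parse("1,2,3"));
    }
}
```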

2.maven依赖

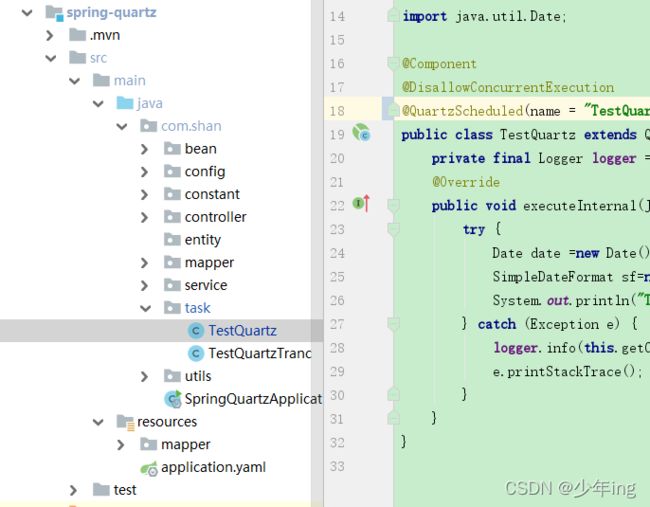

項目结构图

org.springframework.boot spring-boot-starter org.springframework.boot spring-boot-starter-web org.projectlombok lombok true org.springframework.boot spring-boot-starter-test test mysql mysql-connector-java 8.0.11 org.mybatis.spring.boot mybatis-spring-boot-starter 2.1.0 com.alibaba druid 1.1.19 com.alibaba druid-spring-boot-starter 1.1.9 org.springframework.boot spring-boot-starter-quartz com.google.guava guava 16.0.1 cglib cglib 3.3.0 org.apache.commons commons-collections4 4.4

3. Code

Configuration file application.yml

```yaml
server:
  servlet:
    # Spring Boot 2.x key; server.contextPath was the Spring Boot 1.x name
    context-path: /
  port: 9090

spring:
  profiles:
    active: dev
  application:
    name: spring-quartz

---

spring:
  profiles: dev
  datasource:
    url: jdbc:mysql://:3306/yxsd?serverTimezone=GMT%2B8&characterEncoding=utf8&useUnicode=true&useSSL=false&nullCatalogMeansCurrent=true
    username: root
    password:
    driverClassName: com.mysql.cj.jdbc.Driver
    type: com.alibaba.druid.pool.DruidDataSource
    druid:
      web-stat-monitor:
        enabled: true
      initialSize: 5
      minIdle: 5
      maxActive: 100
      maxWait: 30000
      timeBetweenEvictionRunsMillis: 60000
      minEvictableIdleTimeMillis: 300000
      maxEvictableIdleTimeMillis: 2000000
      #validationQuery: SELECT 1
      validationQuery: SELECT 1 FROM DUAL
      validationQueryTimeout: 10000
      testWhileIdle: true
      testOnBorrow: false
      testOnReturn: false
      poolPreparedStatements: true
      maxPoolPreparedStatementPerConnectionSize: 20
      #filters: stat,wall
      connectionProperties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000
    # Connection settings for the Quartz job store, bound from spring.datasource.quartz by QuartzConfiguration
    quartz:
      url: ${spring.datasource.url}
      username: ${spring.datasource.username}
      password: ${spring.datasource.password}
      driverClassName: ${spring.datasource.driverClassName}
      type: ${spring.datasource.type}
  quartz:
    schedulerName: mySchedule
    autoStartup: true
    startupDelay: 0
    waitForJobsToCompleteOnShutdown: true
    overwriteExistingJobs: false
    jobStoreType: jdbc
    jdbc:
      initializeSchema: always
    properties:
      org:
        quartz:
          scheduler:
            instanceName: quartzScheduler
            instanceId: AUTO
            makeSchedulerThreadDaemon: true
            skipUpdateCheck: true
          threadPool:
            class: org.quartz.simpl.SimpleThreadPool
            makeThreadsDaemons: true
            threadCount: 50
            threadPriority: 5
            threadsInheritContextClassLoaderOfInitializingThread: true
          jobStore:
            class: org.quartz.impl.jdbcjobstore.JobStoreTX
            driverDelegateClass: org.quartz.impl.jdbcjobstore.StdJDBCDelegate
            tablePrefix: QRTZ_
            isClustered: false
            misfireThreshold: 2500

mybatis:
  mapper-locations: classpath:/mapper/*.xml
  type-aliases-package: com.shan.entity
```
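The setting `misfireThreshold: 2500` tells the JDBC job store how late a trigger may be before it counts as misfired. The sketch models that rule in isolation: a trigger is treated as misfired when its next fire time is at least the threshold in the past. It is a simplified illustration, not Quartz code, and `MisfireCheck` is a hypothetical name. What happens to a misfired trigger afterwards depends on the trigger's misfire instruction.

```java
/** Simplified model of the job store's misfire rule (not Quartz code). */
final class MisfireCheck {

    private MisfireCheck() {
    }

    /**
     * A trigger counts as misfired when its next fire time is at least
     * misfireThresholdMillis in the past relative to nowMillis.
     */
    static boolean isMisfired(long nextFireTimeMillis, long nowMillis, long misfireThresholdMillis) {
        long latestOnTimeFire = nowMillis - Math.max(0L, misfireThresholdMillis);
        return nextFireTimeMillis <= latestOnTimeFire;
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        // With the 2500 ms threshold from application.yml:
        System.out.println(isMisfired(now - 2000, now, 2500)); // 2 s late: false (still on time)
        System.out.println(isMisfired(now - 3000, now, 2500)); // 3 s late: true (misfired)
    }
}
```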

Startup class

Enables the annotations and loads the configuration at startup.

```java
package com.shan;

import com.shan.config.scheduler.SchedulerManager;
import com.shan.utils.SpringContextUtils;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.ApplicationContext;
import org.springframework.context.ConfigurableApplicationContext;
import org.springframework.scheduling.annotation.EnableScheduling;

import java.util.Arrays;

@SpringBootApplication
@EnableScheduling
public class SpringQuartzApplication implements CommandLineRunner {

    private final ApplicationContext appContext;
    private final SchedulerManager myScheduler;

    public SpringQuartzApplication(ApplicationContext appContext, SchedulerManager myScheduler) {
        this.appContext = appContext;
        this.myScheduler = myScheduler;
    }

    public static void main(String[] args) {
        ConfigurableApplicationContext run = SpringApplication.run(SpringQuartzApplication.class, args);
        SpringContextUtils.setApplicationContext(run);
    }

    @Override
    public void run(String... args) {
        // Debug output: print every bean name in alphabetical order
        String[] beans = appContext.getBeanDefinitionNames();
        Arrays.sort(beans);
        for (String bean : beans) {
            System.out.println(bean);
        }
        // Load all job classes (if new job classes are added, clear all QRTZ_ tables before deploying)
        myScheduler.initAllJob(appContext);
    }
}
```

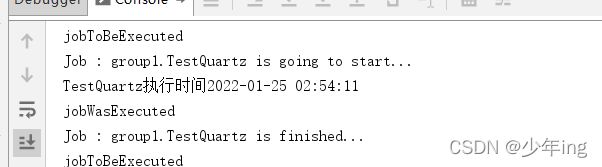

Test

After the project starts, the scheduled jobs run.

Related entities and utility classes

bean package

```java
package com.shan.bean;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;
import lombok.experimental.Accessors;

/**
 * Getters, chained setters (each returns this), equals/hashCode/toString
 * and the no-arg and all-args constructors are generated by Lombok.
 */
@Data
@Accessors(chain = true)
@NoArgsConstructor
@AllArgsConstructor
public class QuartzJob {

    public static final Integer STATUS_RUNNING = 1;
    public static final Integer STATUS_NOT_RUNNING = 0;
    public static final Integer CONCURRENT_IS = 1;
    public static final Integer CONCURRENT_NOT = 0;

    private String jobId;
    private String cronExpression;
    private String methodName;
    private Integer isConcurrent;
    private String description;
    private String beanName;
    private String triggerName;
    private Integer jobStatus;
    private String springBean;
    private String jobName;
    private String jobGroup;
}
```
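`cronExpression` (like `cron` on `@QuartzScheduled`) has to be a Quartz cron string, which differs from Unix cron in three ways:

- It starts with a seconds field.
- It has six or seven fields; the seventh is an optional year.
- Exactly one of day-of-month and day-of-week must be `?`.

Below is a rough pre-check under those rules. `CronShape` is a hypothetical helper; in real code, `org.quartz.CronExpression.isValidExpression` performs the full validation.

```java
/** Rough shape check for Quartz cron strings; not a full parser. */
final class CronShape {

    private CronShape() {
    }

    static boolean looksLikeQuartzCron(String expression) {
        if (expression == null) {
            return false;
        }
        // seconds minutes hours day-of-month month day-of-week [year]
        String[] fields = expression.trim().split("\\s+");
        if (fields.length < 6 || fields.length > 7) {
            return false;
        }
        boolean dayOfMonthUnset = "?".equals(fields[3]);
        boolean dayOfWeekUnset = "?".equals(fields[5]);
        // Quartz requires '?' in exactly one of the two day fields
        return dayOfMonthUnset != dayOfWeekUnset;
    }

    public static void main(String[] args) {
        System.out.println(looksLikeQuartzCron("0 0/5 * * * ?"));
    }
}
```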

config.dataSource.context package

```java
package com.shan.config.dataSource.context;

import com.alibaba.druid.pool.xa.DruidXADataSource;
import com.alibaba.druid.spring.boot.autoconfigure.DruidDataSourceBuilder;
import com.google.common.base.Optional;
import com.shan.utils.BeanTransferUtils;
import com.zaxxer.hikari.HikariConfig;
import com.zaxxer.hikari.HikariDataSource;
import org.springframework.boot.jdbc.DataSourceBuilder;

import javax.sql.DataSource;
import java.util.Map;

public class ConnectionPoolFactory {

    /** Creates a pool of the type named by className and applies config to it. */
    public static Optional<DataSource> create(String className, Map<String, Object> config) {
        Optional<DataSource> result = Optional.absent();
        if (className.indexOf("DruidDataSource") > 0) {
            DataSource druid = DruidDataSourceBuilder.create().build();
            DruidDataSourceManager.initDataSource(druid, config);
            result = Optional.of(druid);
        } else if (className.indexOf("HikariDataSource") > 0) {
            HikariConfig hikariConfig = new HikariConfig();
            HikariDataSourceProperties properties = new HikariDataSourceProperties().initFromConfig(config);
            BeanTransferUtils.bean2bean(properties, hikariConfig);
            result = Optional.<DataSource>of(new HikariDataSource(hikariConfig));
        } else if (className.indexOf("DruidXADataSource") > 0) {
            DruidXADataSource xaDataSource = new DruidXADataSource();
            try {
                DruidDataSourceManager.initDataSource(xaDataSource, config);
            } catch (RuntimeException e) {
                // Close the pool only if configuration fails; closing it unconditionally
                // would hand callers an already-closed data source.
                xaDataSource.close();
                throw e;
            }
            result = Optional.<DataSource>of(xaDataSource);
        } else {
            try {
                Class<? extends DataSource> type = Class.forName(className).asSubclass(DataSource.class);
                result = Optional.<DataSource>of(DataSourceBuilder.create().type(type).build());
            } catch (ClassNotFoundException e) {
                throw new IllegalStateException(e);
            }
        }
        return result;
    }
}
```
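`ConnectionPoolFactory` picks the pool with a substring search on the configured `type`. `indexOf(...) > 0` works for fully qualified names, but it has two weaknesses:

- A bare class name matches at index 0, so the test returns false for it.
- An unrelated class whose name merely contains `DruidDataSource` would also match.

One alternative is to compare the simple class name exactly, which also makes the branch order irrelevant. It is sketched here with a hypothetical `PoolKind` enum.

```java
/** Hypothetical sketch: choose the pool kind by exact simple class name. */
enum PoolKind {
    DRUID, DRUID_XA, HIKARI, OTHER;

    static PoolKind fromClassName(String className) {
        // lastIndexOf returns -1 for a bare name, so substring(0) keeps the whole string
        String simpleName = className.substring(className.lastIndexOf('.') + 1);
        switch (simpleName) {
            case "DruidDataSource":
                return DRUID;
            case "DruidXADataSource":
                return DRUID_XA;
            case "HikariDataSource":
                return HIKARI;
            default:
                return OTHER;
        }
    }

    public static void main(String[] args) {
        System.out.println(fromClassName("com.alibaba.druid.pool.DruidDataSource"));
    }
}
```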

```java
package com.shan.config.dataSource.context;

import com.alibaba.druid.pool.DruidDataSource;

import javax.sql.DataSource;
import java.sql.SQLException;
import java.util.Map;
import java.util.function.Consumer;

public class DruidDataSourceManager {

    /** Applies the entries of map if dataSource is a Druid pool; other pools are left untouched. */
    public static void initDataSource(DataSource dataSource, Map<String, Object> map) {
        if (dataSource instanceof DruidDataSource) {
            applyConfig((DruidDataSource) dataSource, map);
        }
    }

    /** Calls setter with the string form of map.get(key); absent keys are skipped. */
    private static void set(Map<String, Object> map, String key, Consumer<String> setter) {
        Object value = map.get(key);
        if (value != null) {
            setter.accept(value.toString());
        }
    }

    private static void applyConfig(DruidDataSource ds, Map<String, Object> map) {
        set(map, "url", ds::setUrl);
        set(map, "username", ds::setUsername);
        set(map, "password", ds::setPassword);
        set(map, "testWhileIdle", v -> ds.setTestWhileIdle(Boolean.parseBoolean(v)));
        set(map, "testOnBorrow", v -> ds.setTestOnBorrow(Boolean.parseBoolean(v)));
        set(map, "validationQuery", ds::setValidationQuery);
        set(map, "useGlobalDataSourceStat", v -> ds.setUseGlobalDataSourceStat(Boolean.parseBoolean(v)));
        set(map, "filters", v -> {
            try {
                ds.setFilters(v);
            } catch (SQLException e) {
                // Fail fast instead of starting with a silently misconfigured pool
                throw new IllegalStateException("Invalid Druid filters: " + v, e);
            }
        });
        set(map, "timeBetweenLogStatsMillis", v -> ds.setTimeBetweenLogStatsMillis(Long.parseLong(v)));
        set(map, "clearFiltersEnable", v -> ds.setClearFiltersEnable(Boolean.parseBoolean(v)));
        set(map, "resetStatEnable", v -> ds.setResetStatEnable(Boolean.parseBoolean(v)));
        set(map, "notFullTimeoutRetryCount", v -> ds.setNotFullTimeoutRetryCount(Integer.parseInt(v)));
        set(map, "maxWaitThreadCount", v -> ds.setMaxWaitThreadCount(Integer.parseInt(v)));
        set(map, "failFast", v -> ds.setFailFast(Boolean.parseBoolean(v)));
        set(map, "phyTimeoutMillis", v -> ds.setPhyTimeoutMillis(Long.parseLong(v)));
        set(map, "minEvictableIdleTimeMillis", v -> ds.setMinEvictableIdleTimeMillis(Long.parseLong(v)));
        set(map, "maxEvictableIdleTimeMillis", v -> ds.setMaxEvictableIdleTimeMillis(Long.parseLong(v)));
        set(map, "initialSize", v -> ds.setInitialSize(Integer.parseInt(v)));
        set(map, "minIdle", v -> ds.setMinIdle(Integer.parseInt(v)));
        set(map, "maxActive", v -> ds.setMaxActive(Integer.parseInt(v)));
        set(map, "maxWait", v -> ds.setMaxWait(Long.parseLong(v)));
        set(map, "timeBetweenEvictionRunsMillis", v -> ds.setTimeBetweenEvictionRunsMillis(Long.parseLong(v)));
        set(map, "poolPreparedStatements", v -> ds.setPoolPreparedStatements(Boolean.parseBoolean(v)));
        set(map, "maxPoolPreparedStatementPerConnectionSize",
                v -> ds.setMaxPoolPreparedStatementPerConnectionSize(Integer.parseInt(v)));
        set(map, "connectionProperties", ds::setConnectionProperties);
    }
}
```

```java
package com.shan.config.dataSource.context;

import com.google.common.base.Optional;
import org.apache.commons.collections4.MapUtils;

import java.util.Iterator;
import java.util.Map;
import java.util.Map.Entry;
import java.util.Properties;

public class HikariDataSourceProperties {

    private String username;
    private String password;
    private String jdbcUrl;
    private String driverClassName;
    private Integer maximumPoolSize;
    private String connectionTestQuery;
    private String poolName;
    private Properties dataSourceProperties = new Properties();

    public HikariDataSourceProperties initFromConfig(Map map) {
        if (MapUtils.isEmpty(map)) {
            return this;
        } else {
            if (map.get("url") != null) {
                this.setJdbcUrl(map.get("url").toString());
            }
            if (map.get("username") != null) {
                this.setUsername(map.get("username").toString());
            }
            if (map.get("password") != null) {
                this.setPassword(map.get("password").toString());
            }
            if (map.get("driverClassName") != null) {
                this.setDriverClassName(map.get("driverClassName").toString());
            }
            if (map.get("maximumPoolSize") != null) {
                this.setMaximumPoolSize(Integer.parseInt(map.get("maximumPoolSize").toString()));
            }
            if (map.get("connectionTestQuery") != null) {
                this.setConnectionTestQuery(map.get("connectionTestQuery").toString());
            }
            if (map.get("poolName") != null) {
                this.setPoolName(map.get("poolName").toString());
            }
            // … (the original post is cut off here, at a line beginning with "Optional";
            // the rest of initFromConfig, the getters/setters and the closing braces are missing)
```

config.scheduler package

package com.shan.config.scheduler;

import com.google.common.base.Optional;

import com.google.common.base.Preconditions;

import com.google.common.collect.Maps;

import java.util.Map;

import java.util.Properties;

import javax.sql.DataSource;

import com.shan.config.dataSource.context.ConnectionPoolFactory;

import org.quartz.Scheduler;

import org.quartz.spi.TriggerFiredBundle;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.config.AutowireCapableBeanFactory;

import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;

import org.springframework.boot.autoconfigure.quartz.QuartzDataSource;

import org.springframework.boot.autoconfigure.quartz.QuartzProperties;

import org.springframework.boot.context.properties.bind.Bindable;

import org.springframework.boot.context.properties.bind.Binder;

import org.springframework.context.EnvironmentAware;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.core.env.Environment;

import org.springframework.scheduling.quartz.SchedulerFactoryBean;

import org.springframework.scheduling.quartz.SpringBeanJobFactory;

import org.springframework.stereotype.Component;

@ConditionalOnProperty(

name = {"spring.quartz.jobStoreType"}

)

@Configuration

public class QuartzConfiguration implements EnvironmentAware {

@Autowired

private QuartzProperties properties;

private Map dataSourceConfig = Maps.newHashMap();

public QuartzConfiguration() {

}

private Properties asProperties(Map source) {

Properties properties = new Properties();

properties.putAll(source);

return properties;

}

@Bean(

name = {"schedulerManager"}

)

public SchedulerManager getSchedulerManager(Scheduler scheduler) {

return new SchedulerManager(scheduler);

}

@Bean

@QuartzDataSource

public DataSource quartzDataSource() {

Optional result = ConnectionPoolFactory.create(this.dataSourceConfig.get("type").toString(), this.dataSourceConfig);

Preconditions.checkState(result.isPresent(), "An exception has occurred to create Quartz datasource.");

return (DataSource)result.get();

}

@Bean(

name = {"scheduler"}

)

public Scheduler scheduler(SchedulerFactoryBean schedulerFactoryBean) throws Exception {

Scheduler scheduler = schedulerFactoryBean.getScheduler();

scheduler.start();

return scheduler;

}

@Bean

public SchedulerFactoryBean schedulerFactoryBean(QuartzConfiguration.QuartzJobFactory quartzJobFactory) throws Exception {

SchedulerFactoryBean schedulerFactoryBean = new SchedulerFactoryBean();

schedulerFactoryBean.setDataSource(this.quartzDataSource());

schedulerFactoryBean.setOverwriteExistingJobs(true);

schedulerFactoryBean.setJobFactory(quartzJobFactory);

schedulerFactoryBean.setQuartzProperties(this.asProperties(this.properties.getProperties()));

schedulerFactoryBean.setStartupDelay(15);

return schedulerFactoryBean;

}

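@Override
public void setEnvironment(Environment environment) {
    // Bind every spring.datasource.quartz.* property into a map, including the "type" entry passed to ConnectionPoolFactory
    this.dataSourceConfig = Binder.get(environment).bind("spring.datasource.quartz", Bindable.mapOf(String.class, Object.class)).get();
}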

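// Job factory that lets Spring inject dependencies into job instances that Quartz creates.
// It is a static nested class, so it does not need an enclosing QuartzConfiguration instance.
@Component("quartzJobFactory")
public static class QuartzJobFactory extends SpringBeanJobFactory {

    @Autowired
    private AutowireCapableBeanFactory capableBeanFactory;

    @Override
    protected Object createJobInstance(TriggerFiredBundle bundle) throws Exception {
        // Create the job instance first...
        Object jobInstance = super.createJobInstance(bundle);
        // ...then autowire its @Autowired fields (e.g. TestService in TestQuartzTranc)
        this.capableBeanFactory.autowireBean(jobInstance);
        return jobInstance;
    }
}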

}
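QuartzJobFactory exists because Quartz instantiates the job classes, not Spring. Without it, `@Autowired` fields in a job would stay null. The JDK-only model below shows the same "create first, then inject" order using plain reflection. It is a simplified sketch under stated assumptions: the `Wired` annotation stands in for `@Autowired`, the `registry` map stands in for the Spring container, and `MiniJobFactory`, `GreetingService` and `DemoJob` are all hypothetical. None of this is Spring's real `autowireBean` implementation.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.util.HashMap;
import java.util.Map;

// Hypothetical marker playing the role of @Autowired in this sketch
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface Wired {
}

final class MiniJobFactory {

    // Hypothetical "container": beans looked up by their type
    private final Map<Class<?>, Object> registry = new HashMap<>();

    <T> void register(Class<T> type, T bean) {
        registry.put(type, bean);
    }

    // Step 1: instantiate the job through its no-arg constructor, as Quartz would
    // Step 2: fill every @Wired field from the registry, like autowireBean() does for @Autowired
    <T> T createJobInstance(Class<T> jobClass) {
        try {
            T job = jobClass.getDeclaredConstructor().newInstance();
            for (Field field : jobClass.getDeclaredFields()) {
                if (field.isAnnotationPresent(Wired.class)) {
                    Object dependency = registry.get(field.getType());
                    if (dependency == null) {
                        throw new IllegalStateException("No bean of type " + field.getType().getName());
                    }
                    field.setAccessible(true);
                    field.set(job, dependency);
                }
            }
            return job;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot create job " + jobClass.getName(), e);
        }
    }
}

// Hypothetical dependency, mirroring TestService
final class GreetingService {
    String greet() {
        return "hello from service";
    }
}

// Hypothetical job with one injected field, mirroring TestQuartzTranc
final class DemoJob {
    @Wired
    private GreetingService service;

    String run() {
        return service == null ? "service not injected" : service.greet();
    }
}
```

In the real configuration, the same two steps are `super.createJobInstance(bundle)` followed by `capableBeanFactory.autowireBean(jobInstance)`.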


package com.shan.config.scheduler;

import java.lang.annotation.Documented;

import java.lang.annotation.ElementType;

import java.lang.annotation.Retention;

import java.lang.annotation.RetentionPolicy;

import java.lang.annotation.Target;

@Documented

@Retention(RetentionPolicy.RUNTIME)

@Target({ElementType.METHOD, ElementType.TYPE})

public @interface QuartzScheduled {

int count() default -1;

String cron() default "";

int fixedRate() default -1;

String group() default "";

long initialDelay() default -1L;

String name();

String param() default "";

boolean status() default true;

}
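The surviving code does not show how `@QuartzScheduled` is consumed. The following is a hypothetical sketch only: a registrar could read the annotation reflectively and derive the job key and cron expression from it. So that the example runs without Quartz, the annotation is re-declared as `QuartzScheduledDemo`, which copies only the attributes used here. `ScheduledScanner.describe` is an invented helper. An empty group falls back to `"DEFAULT"`, which is Quartz's default group name, and the `group.name` format matches how a Quartz `JobKey` prints.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Trimmed copy of QuartzScheduled (only the attributes used in this sketch)
@Retention(RetentionPolicy.RUNTIME)
@Target({ElementType.METHOD, ElementType.TYPE})
@interface QuartzScheduledDemo {
    String name();

    String group() default "";

    String cron() default "";

    boolean status() default true;
}

final class ScheduledScanner {

    private ScheduledScanner() {
    }

    // Hypothetical helper: returns "group.name -> cron", or null when the class
    // is not annotated or the job is disabled (status = false)
    static String describe(Class<?> jobClass) {
        QuartzScheduledDemo meta = jobClass.getAnnotation(QuartzScheduledDemo.class);
        if (meta == null || !meta.status()) {
            return null;
        }
        String group = meta.group().isEmpty() ? "DEFAULT" : meta.group();
        return group + "." + meta.name() + " -> " + meta.cron();
    }
}

@QuartzScheduledDemo(name = "TestQuartz", group = "group1", cron = "*/10 * * * * ?")
final class AnnotatedJob {
}

@QuartzScheduledDemo(name = "Disabled", status = false)
final class DisabledJob {
}
```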


package com.shan.config.scheduler;

import org.quartz.JobExecutionContext;

import org.quartz.JobExecutionException;

import org.quartz.JobListener;

public class SchedulerListener implements JobListener {

    public static final String LISTENER_NAME = "QuartSchedulerListener";

    @Override
    public String getName() {
        return LISTENER_NAME;
    }

    // Called when a TriggerListener vetoes the execution of the job
    @Override
    public void jobExecutionVetoed(JobExecutionContext context) {
        System.out.println("jobExecutionVetoed");
    }

    // Called just before the job runs
    @Override
    public void jobToBeExecuted(JobExecutionContext context) {
        String jobName = context.getJobDetail().getKey().toString();
        System.out.println("jobToBeExecuted");
        System.out.println("Job : " + jobName + " is going to start...");
    }

    // Called after the job has run; jobException is null when the job completed normally
    @Override
    public void jobWasExecuted(JobExecutionContext context, JobExecutionException jobException) {
        System.out.println("jobWasExecuted");
        String jobName = context.getJobDetail().getKey().toString();
        System.out.println("Job : " + jobName + " is finished...");
        // getMessage() may return null, so check it before inspecting the text
        if (jobException != null && jobException.getMessage() != null && !jobException.getMessage().isEmpty()) {
            System.out.println("Exception thrown by: " + jobName + " Exception: " + jobException.getMessage());
        }
    }
}
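Quartz calls a `JobListener` in a fixed order around each run. It calls `jobToBeExecuted` first, then runs the job, then calls `jobWasExecuted`. That last call receives an exception that is `null` when the job succeeded. If a `TriggerListener` vetoes the run, `jobExecutionVetoed` is called instead. The JDK-only model below mimics the normal order and the null-safe message check. `MiniListener`, `MiniRunner` and `RecordingListener` are hypothetical stand-ins written for this illustration, not Quartz types.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified counterpart of org.quartz.JobListener
interface MiniListener {
    void jobToBeExecuted(String jobName);

    // jobException is null when the job succeeded
    void jobWasExecuted(String jobName, Exception jobException);
}

final class MiniRunner {

    private MiniRunner() {
    }

    // Runs one job and notifies the listener before and after it
    static void run(String jobName, Runnable job, MiniListener listener) {
        listener.jobToBeExecuted(jobName);
        Exception failure = null;
        try {
            job.run();
        } catch (RuntimeException e) {
            // Quartz likewise hands an unhandled job exception (wrapped) to jobWasExecuted
            failure = e;
        }
        listener.jobWasExecuted(jobName, failure);
    }
}

// Records events, using the same null-safe message check as SchedulerListener
final class RecordingListener implements MiniListener {
    final List<String> events = new ArrayList<>();

    @Override
    public void jobToBeExecuted(String jobName) {
        events.add("toBeExecuted:" + jobName);
    }

    @Override
    public void jobWasExecuted(String jobName, Exception jobException) {
        String message = jobException == null ? null : jobException.getMessage();
        events.add(message == null || message.isEmpty()
                ? "wasExecuted:" + jobName
                : "failed:" + jobName + ":" + message);
    }
}
```

The third run in the usage below throws an exception with a `null` message. A plain `getMessage().equals("")` check would have thrown a `NullPointerException` there.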


package com.shan.config.scheduler;

import com.google.common.collect.Lists;

import java.util.ArrayList;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import com.shan.bean.QuartzJob;

import org.quartz.CronScheduleBuilder;

import org.quartz.CronTrigger;

import org.quartz.Job;

import org.quartz.JobBuilder;

import org.quartz.JobDetail;

import org.quartz.JobKey;

import org.quartz.JobListener;

import org.quartz.Scheduler;

import org.quartz.SchedulerException;

import org.quartz.Trigger;

import org.quartz.TriggerBuilder;

import org.quartz.TriggerKey;

import org.springframework.context.ApplicationContext;

public class SchedulerManager {

private Scheduler scheduler;

private JobListener scheduleListener;

public SchedulerManager(Scheduler scheduler) {

this.scheduler = scheduler;

}

public void addJob(QuartzJob job) {
    try {
        // Load the job class by name; there is no need to instantiate it here,
        // Quartz creates job instances itself through the configured JobFactory
        Class<? extends Job> jobClass = Class.forName(job.getBeanName()).asSubclass(Job.class);
        // Register the job under (name, group) so that deleteJob() finds it with the same JobKey
        JobDetail jobDetail = JobBuilder.newJob(jobClass)
                .withIdentity(job.getJobName(), job.getJobGroup())
                .build();
        Trigger cronTrigger = TriggerBuilder.newTrigger()
                .withIdentity(job.getTriggerName())
                .startNow()
                .withSchedule(CronScheduleBuilder.cronSchedule(job.getCronExpression()))
                .build();
        this.scheduler.scheduleJob(jobDetail, cronTrigger);
    } catch (SchedulerException | ClassNotFoundException e) {
        e.printStackTrace();
    }
}

public void deleteJob(QuartzJob quartzJob) {

try {

JobKey jobKey = JobKey.jobKey(quartzJob.getJobName(), quartzJob.getJobGroup());

this.scheduler.deleteJob(jobKey);

} catch (SchedulerException var3) {

var3.printStackTrace();

}

}

private List…

constant package

package com.shan.constant;

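/**
 * Quartz cron expressions.
 * Field order: second minute hour day-of-month month day-of-week [year]
 */
public final class QuartzCronUtils {

    private QuartzCronUtils() {
    }

    /**
     * Every 10 seconds
     */
    public static final String QUARTZ_CRON_TEN_SECOND = "*/10 * * * * ?";

}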
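`*/10 * * * * ?` uses Quartz's six-field layout: second, minute, hour, day-of-month, month, day-of-week. `*/10` in the seconds field means "every 10 seconds starting at second 0". `?` means "no specific value" and is used here for day-of-week. The sketch below expands a single step field such as `*/10` or `5/15` to show which seconds fire. It is a simplified illustration, not Quartz's `CronExpression` parser. `CronStepDemo` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

final class CronStepDemo {

    private CronStepDemo() {
    }

    // Expands a step expression ("*/n" or "start/n") for a field whose values run from min to max.
    // Only this one syntax is handled; lists, ranges, L, W and # are out of scope.
    static List<Integer> expandStep(String field, int min, int max) {
        String[] parts = field.split("/");
        if (parts.length != 2) {
            throw new IllegalArgumentException("Not a step expression: " + field);
        }
        int start = parts[0].equals("*") ? min : Integer.parseInt(parts[0]);
        int step = Integer.parseInt(parts[1]);
        if (step <= 0 || start < min || start > max) {
            throw new IllegalArgumentException("Invalid step expression: " + field);
        }
        List<Integer> values = new ArrayList<>();
        for (int v = start; v <= max; v += step) {
            values.add(v);
        }
        return values;
    }

    // Splits a Quartz cron string and expands its first (seconds) field, range 0-59
    static List<Integer> firingSeconds(String cron) {
        String[] fields = cron.trim().split("\\s+");
        if (fields.length < 6) {
            throw new IllegalArgumentException("Quartz cron needs at least 6 fields: " + cron);
        }
        return expandStep(fields[0], 0, 59);
    }
}
```

For `QUARTZ_CRON_TEN_SECOND` this yields seconds 0, 10, 20, 30, 40 and 50 of every minute.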

mapper package

package com.shan.mapper;

import org.apache.ibatis.annotations.Mapper;

import java.util.HashMap;

@Mapper

public interface TestMapper {

int updateById(HashMap param);

}

service package

package com.shan.service;

public interface TestService {

void testTransactional();

}

service.impl package

package com.shan.service.impl;

import com.shan.mapper.TestMapper;

import com.shan.service.TestService;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Service;

import org.springframework.transaction.annotation.Transactional;

import java.util.HashMap;

@Service

public class TestServiceImpl implements TestService {

@Autowired

private TestMapper testMapper;

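// Verify that the transaction rolls back: the first update must be undone
// because the division by zero below throws an ArithmeticException
@Override
@Transactional(rollbackFor = Exception.class)
public void testTransactional() {
    HashMap<String, Object> param = new HashMap<>();
    param.put("id", "1");
    param.put("name", "哈哈");
    testMapper.updateById(param);

    // Deliberately throw an ArithmeticException to trigger the rollback
    int i = 5 / 0;

    param.put("id", "1");
    param.put("name", "哈哈11111");
    testMapper.updateById(param);
}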

}
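`testTransactional` relies on Spring's rollback rules. By default, a transaction is rolled back for unchecked exceptions (`RuntimeException` and `Error`), and `rollbackFor = Exception.class` extends that to checked exceptions. `5/0` throws `ArithmeticException`, which is unchecked, so the first `updateById` should be undone in either case. The helper below is a simplified model of that decision. It ignores `noRollbackFor` and rule-depth matching, and it is not Spring's `RuleBasedTransactionAttribute`. `RollbackRuleDemo` is a hypothetical name.

```java
final class RollbackRuleDemo {

    private RollbackRuleDemo() {
    }

    // Simplified model: unchecked exceptions always roll back (Spring's default);
    // checked exceptions roll back only if they match one of the rollbackFor types
    static boolean shouldRollback(Throwable ex, Class<?>... rollbackFor) {
        if (ex instanceof RuntimeException || ex instanceof Error) {
            return true;
        }
        for (Class<?> type : rollbackFor) {
            if (type.isInstance(ex)) {
                return true;
            }
        }
        return false;
    }

    // Same failure as testTransactional(): integer division by zero throws ArithmeticException
    static Throwable divideByZero() {
        int zero = 0;
        try {
            int ignored = 5 / zero;
            return null;
        } catch (ArithmeticException e) {
            return e;
        }
    }
}
```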

task package

package com.shan.task;

import com.shan.config.scheduler.QuartzScheduled;

import com.shan.constant.QuartzCronUtils;

import org.quartz.DisallowConcurrentExecution;

import org.quartz.JobExecutionContext;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.scheduling.quartz.QuartzJobBean;

import org.springframework.stereotype.Component;

import java.text.SimpleDateFormat;

import java.util.Date;

@Component

@DisallowConcurrentExecution

@QuartzScheduled(name = "TestQuartz", group = "group1", cron = QuartzCronUtils.QUARTZ_CRON_TEN_SECOND)

public class TestQuartz extends QuartzJobBean {

private final Logger logger = LoggerFactory.getLogger(this.getClass());

@Override

public void executeInternal(JobExecutionContext context) {
    try {
        // HH = hour of day (0-23); hh would print a 12-hour clock without an AM/PM marker
        SimpleDateFormat sf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        System.out.println("TestQuartz execution time: " + sf.format(new Date()));
    } catch (Exception e) {
        logger.error(this.getClass() + " execution failed", e);
    }
}

}
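The original pattern used `hh`, which is the 12-hour clock hour (01-12). Without an `a` (AM/PM) field, a job that fires at 15:30 logs `03:30`. `HH` is the 0-23 hour. The snippet below shows the difference using `java.time.format.DateTimeFormatter`, which uses the same pattern letters for these fields as `SimpleDateFormat`. Unlike `SimpleDateFormat`, it is also thread-safe. Switching to it is only a suggestion; the original project does not do this. `HourPatternDemo` is a hypothetical name.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

final class HourPatternDemo {

    // 12-hour clock without an AM/PM marker: ambiguous in logs
    static final DateTimeFormatter TWELVE_HOUR = DateTimeFormatter.ofPattern("yyyy-MM-dd hh:mm:ss");
    // 24-hour clock: what a log timestamp normally wants
    static final DateTimeFormatter TWENTY_FOUR_HOUR = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    private HourPatternDemo() {
    }

    static String format12(LocalDateTime time) {
        return time.format(TWELVE_HOUR);
    }

    static String format24(LocalDateTime time) {
        return time.format(TWENTY_FOUR_HOUR);
    }
}
```

For 2020-07-01 15:30:00, `format12` returns `2020-07-01 03:30:00` and `format24` returns `2020-07-01 15:30:00`.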

package com.shan.task;

import com.shan.config.scheduler.QuartzScheduled;

import com.shan.constant.QuartzCronUtils;

import com.shan.service.TestService;

import org.quartz.DisallowConcurrentExecution;

import org.quartz.JobExecutionContext;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.scheduling.quartz.QuartzJobBean;

import org.springframework.stereotype.Component;

import org.springframework.transaction.annotation.Transactional;

import java.text.SimpleDateFormat;

import java.util.Date;

// Verifies transaction handling inside a scheduled job

@Component

@DisallowConcurrentExecution

@QuartzScheduled(name = "TestQuartzTranc", group = "group1", cron = QuartzCronUtils.QUARTZ_CRON_TEN_SECOND)

public class TestQuartzTranc extends QuartzJobBean {

private final Logger logger = LoggerFactory.getLogger(this.getClass());

@Autowired

private TestService testService;

@Override

public void executeInternal(JobExecutionContext context) {
    try {
        SimpleDateFormat sf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        System.out.println("TestQuartzTranc start time: " + sf.format(new Date()));
        // Uncomment to check that the transaction rolls back when called from a Quartz job
        //testService.testTransactional();
        // Format a fresh Date so the end time is not just a copy of the start time
        System.out.println("TestQuartzTranc end time: " + sf.format(new Date()));
    } catch (Exception e) {
        logger.error(this.getClass() + " execution failed", e);
    }
}

}

utils package


package com.shan.utils;

import com.google.common.collect.Lists;

import java.util.ArrayList;

import java.util.Collections;

import java.util.HashMap;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import net.sf.cglib.beans.BeanMap;

import org.apache.commons.collections4.CollectionUtils;

import org.apache.commons.collections4.MapUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.cglib.beans.BeanCopier;

import org.springframework.cglib.core.Converter;

public class BeanTransferUtils {

private static final Logger log = LoggerFactory.getLogger(BeanTransferUtils.class);

private BeanTransferUtils() {

}

    // Copies same-named, same-typed properties of source into a new instance of targetClazz
    public static <T, K> K transferColumn(T source, Class<T> sourceClazz, Class<K> targetClazz) throws Exception {
        K targetBean = getInstance(targetClazz);
        if (source != null) {
            BeanCopier.create(sourceClazz, targetClazz, false).copy(source, targetBean, (Converter) null);
        }
        return targetBean;
    }

    public static <T, K> List<K> transferColumn(List<T> sourceList, Class<T> sourceClazz, Class<K> targetClazz) throws Exception {
        if (CollectionUtils.isEmpty(sourceList)) {
            return Collections.emptyList();
        }
        List<K> result = Lists.newArrayList();
        // Create the BeanCopier once and reuse it for every element
        BeanCopier beanCopier = BeanCopier.create(sourceClazz, targetClazz, false);
        for (T source : sourceList) {
            K targetBean = getInstance(targetClazz);
            beanCopier.copy(source, targetBean, (Converter) null);
            result.add(targetBean);
        }
        return result;
    }

    public static <T, K> K bean2bean(T from, K to) {
        BeanCopier beanCopier = BeanCopier.create(from.getClass(), to.getClass(), false);
        beanCopier.copy(from, to, (Converter) null);
        return to;
    }

    @SuppressWarnings("unchecked")
    public static <T> Map<String, Object> bean2Map(T bean) {
        if (bean == null) {
            return Collections.emptyMap();
        }
        BeanMap beanMap = BeanMap.create(bean);
        Map<String, Object> result = new HashMap<>(beanMap.size());
        result.putAll(beanMap);
        return result;
    }

    @SuppressWarnings("unchecked")
    public static <T> T map2Bean(Map<String, ?> sourceMap, Class<T> targetClazz) {
        T bean = null;
        try {
            bean = targetClazz.newInstance();
            if (MapUtils.isNotEmpty(sourceMap)) {
                BeanMap beanMap = BeanMap.create(bean);
                beanMap.putAll(sourceMap);
            }
        } catch (Exception e) {
            log.error("Failed to convert map to " + targetClazz.getName(), e);
        }
        return bean;
    }

    public static <T> List<Map<String, Object>> beanList2Map(List<T> beanList) {
        if (CollectionUtils.isEmpty(beanList)) {
            return Collections.emptyList();
        }
        List<Map<String, Object>> list = new ArrayList<>(beanList.size());
        for (T bean : beanList) {
            // Null elements are skipped
            if (bean != null) {
                list.add(bean2Map(bean));
            }
        }
        return list;
    }

    public static <T> T getInstance(Class<T> clazz) throws Exception {
        try {
            return clazz.newInstance();
        } catch (IllegalAccessException | InstantiationException e) {
            log.error("Failed to instantiate " + clazz.getName(), e);
            throw new Exception(e);
        }
    }

}
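`bean2Map` and `map2Bean` depend on cglib's `BeanMap`. To avoid that dependency, the JDK's JavaBeans `Introspector` can do a comparable round trip. This swaps in a different technique; it is not what `BeanTransferUtils` does. The `IntrospectorBeanMaps` class and the `Goods` bean are hypothetical examples. Only public getters and setters are seen, and `getClass()` is excluded by passing `Object.class` as the stop class.

```java
import java.beans.BeanInfo;
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.lang.reflect.Method;
import java.util.LinkedHashMap;
import java.util.Map;

final class IntrospectorBeanMaps {

    private IntrospectorBeanMaps() {
    }

    // Reads every readable JavaBean property into a map
    static Map<String, Object> bean2Map(Object bean) {
        Map<String, Object> result = new LinkedHashMap<>();
        if (bean == null) {
            return result;
        }
        try {
            BeanInfo info = Introspector.getBeanInfo(bean.getClass(), Object.class);
            for (PropertyDescriptor pd : info.getPropertyDescriptors()) {
                Method getter = pd.getReadMethod();
                if (getter != null) {
                    result.put(pd.getName(), getter.invoke(bean));
                }
            }
        } catch (IntrospectionException | ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot read bean " + bean.getClass().getName(), e);
        }
        return result;
    }

    // Creates a bean with its no-arg constructor and calls the setter for each matching map key
    static <T> T map2Bean(Map<String, ?> source, Class<T> type) {
        try {
            T bean = type.getDeclaredConstructor().newInstance();
            BeanInfo info = Introspector.getBeanInfo(type, Object.class);
            for (PropertyDescriptor pd : info.getPropertyDescriptors()) {
                Method setter = pd.getWriteMethod();
                if (setter != null && source.containsKey(pd.getName())) {
                    setter.invoke(bean, source.get(pd.getName()));
                }
            }
            return bean;
        } catch (IntrospectionException | ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot create " + type.getName(), e);
        }
    }
}

final class GoodsExample {

    // Hypothetical bean used only for this example
    public static class Goods {
        private Integer id;
        private String name;

        public Integer getId() { return id; }

        public void setId(Integer id) { this.id = id; }

        public String getName() { return name; }

        public void setName(String name) { this.name = name; }
    }
}
```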


package com.shan.utils;

import org.springframework.beans.factory.NoSuchBeanDefinitionException;

import org.springframework.context.ApplicationContext;

public class SpringContextUtils {

private static ApplicationContext context;

public SpringContextUtils() {

}

public static boolean containsBean(String name) {

return context.containsBean(name);

}

public static String[] getAliases(String name) throws NoSuchBeanDefinitionException {

return context.getAliases(name);

}

public static ApplicationContext getApplicationContext() {

return context;

}

public static <T> T getBean(Class<T> clazz) {
    return context.getBean(clazz);
}

public static Object getBean(String name) {
    return context.getBean(name);
}

public static <T> T getBean(String name, Class<T> clazz) {
    return context.getBean(name, clazz);
}

public static Class<?> getType(String name) throws NoSuchBeanDefinitionException {
    return context.getType(name);
}

public static boolean isSingleton(String name) throws NoSuchBeanDefinitionException {

return context.isSingleton(name);

}

public static void setApplicationContext(ApplicationContext ctx) {

if (context == null) {

context = ctx;

}

}

}

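xml

<?xml version="1.0" encoding="UTF-8" ?>
<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
<mapper namespace="com.shan.mapper.TestMapper">
    <update id="updateById" parameterType="java.util.HashMap">
        update goods SET name = #{name} where id = #{id}
    </update>
</mapper>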
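In the mapper statement, each `#{name}` or `#{id}` becomes a JDBC `?` placeholder, and the value is bound from the parameter map. In contrast, `${}` is spliced directly into the SQL text. The sketch below only models that substitution, to show the resulting SQL and the binding order. It is a simplified illustration, not MyBatis's parser, and it ignores options such as `#{id,jdbcType=...}`. `HashPlaceholderDemo` and `Bound` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class HashPlaceholderDemo {

    private static final Pattern PLACEHOLDER = Pattern.compile("#\\{([^}]+)\\}");

    private HashPlaceholderDemo() {
    }

    // Result of the rewrite: SQL with '?' markers plus the values in binding order
    static final class Bound {
        final String sql;
        final List<Object> params;

        Bound(String sql, List<Object> params) {
            this.sql = sql;
            this.params = Collections.unmodifiableList(params);
        }
    }

    // Replaces every #{key} with '?' and collects param.get(key) in order of appearance
    static Bound bind(String statement, Map<String, ?> param) {
        Matcher m = PLACEHOLDER.matcher(statement);
        StringBuffer sql = new StringBuffer();
        List<Object> values = new ArrayList<>();
        while (m.find()) {
            String key = m.group(1).trim();
            if (!param.containsKey(key)) {
                throw new IllegalArgumentException("No value for #{" + key + "}");
            }
            values.add(param.get(key));
            m.appendReplacement(sql, "?");
        }
        m.appendTail(sql);
        return new Bound(sql.toString(), values);
    }
}
```

With `{id=1, name=apple}`, the update statement becomes `update goods SET name = ? where id = ?`, bound with `apple` and then `1`.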