Setting up a TensorRT-accelerated CenterPoint environment

GitHub repository:

The environment used in this post: RTX 3090 + CUDA 11.1 + cuDNN 8.0.5 + PyTorch 1.8.0 + Python 3.7.0

1. Basic installation

# basic python libraries

conda create --name centerpoint python=3.7

conda activate centerpoint

conda install pytorch==1.8.0 torchvision==0.9.0 torchaudio==0.8.0 cudatoolkit=11.1 -c pytorch -c conda-forge

git clone https://hub.fastgit.org/CarkusL/CenterPoint.git

cd CenterPoint

pip install -r requirements.txt

# add CenterPoint to PYTHONPATH by adding the following line to ~/.bashrc (change the path accordingly)

export PYTHONPATH="${PYTHONPATH}:PATH_TO_CENTERPOINT"

2. Install the nuScenes devkit

git clone https://github.com/tianweiy/nuscenes-devkit

# add the following line to ~/.bashrc and reactivate bash (remember to change the PATH_TO_NUSCENES_DEVKIT value)

export PYTHONPATH="${PYTHONPATH}:PATH_TO_NUSCENES_DEVKIT/python-sdk"
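Before building, a quick way to confirm that both PYTHONPATH exports took effect in the current shell is a check like the one below. It is a minimal sketch that assumes the two repositories expose the top-level packages `det3d` (CenterPoint) and `nuscenes` (nuscenes-devkit/python-sdk); the helper names are hypothetical.

```python
import importlib.util
import os


def split_pythonpath(value):
    """Split a PYTHONPATH string into non-empty, normalized entries."""
    return [os.path.normpath(p) for p in value.split(os.pathsep) if p]


def missing_entries(pythonpath, required):
    """Return the required directories that are not on PYTHONPATH."""
    entries = set(split_pythonpath(pythonpath))
    return [r for r in required if os.path.normpath(r) not in entries]


def check_packages(names):
    """Map each top-level package name to whether Python can locate it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    current = os.environ.get("PYTHONPATH", "")
    print("PYTHONPATH entries:", split_pythonpath(current))
    # det3d lives in CenterPoint, nuscenes in nuscenes-devkit/python-sdk
    for name, found in check_packages(["det3d", "nuscenes"]).items():
        print(f"{name}: {'found' if found else 'NOT found'}")
```

Usage: `missing_entries(os.environ["PYTHONPATH"], ["/path/to/CenterPoint"])` lists the folders you still need to add.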

3. Set up the CUDA environment

# add the following lines to ~/.bashrc

export PATH=/usr/local/cuda-11.1/bin:$PATH

export CUDA_PATH=/usr/local/cuda-11.1

export CUDA_HOME=/usr/local/cuda-11.1

export LD_LIBRARY_PATH=/usr/local/cuda-11.1/lib64:$LD_LIBRARY_PATH
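After `source ~/.bashrc`, it is worth checking that the `nvcc` on PATH really is the 11.1 toolkit before running setup.sh. The sketch below parses the `release X.Y` field that `nvcc --version` prints; the expected version `11.1` comes from this post's environment, and the function names are hypothetical.

```python
import os
import re
import shutil
import subprocess


def parse_nvcc_release(text):
    """Extract 'X.Y' from `nvcc --version` output, or None if absent."""
    match = re.search(r"release (\d+\.\d+)", text)
    return match.group(1) if match else None


def check_cuda(expected="11.1"):
    """Print CUDA_HOME and report whether the nvcc on PATH matches `expected`."""
    print("CUDA_HOME =", os.environ.get("CUDA_HOME"))
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        print("nvcc not found on PATH")
        return False
    out = subprocess.run([nvcc, "--version"], stdout=subprocess.PIPE,
                         universal_newlines=True).stdout
    release = parse_nvcc_release(out)
    print(f"{nvcc}: release {release}")
    return release == expected


if __name__ == "__main__":
    check_cuda()
```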

4. Build

source ~/.bashrc

bash setup.sh

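Compiling deform_conv_cuda fails partway through the build.

Solution:

In CenterPoint/det3d/ops/dcn/src/deform_conv_cuda.cpp, replace AT_CHECK with TORCH_CHECK (the AT_CHECK macro is no longer available in PyTorch 1.8).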
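The replacement can also be scripted so it is easy to repeat on a fresh clone. This sketch (the script name patch_at_check.py is hypothetical) swaps whole-word `AT_CHECK` tokens only, so identifiers that merely contain the string are left alone; run it from the CenterPoint root.

```python
import re
import sys
from pathlib import Path

AT_CHECK = re.compile(r"\bAT_CHECK\b")


def replace_at_check(source):
    """Replace every standalone AT_CHECK token with TORCH_CHECK."""
    return AT_CHECK.sub("TORCH_CHECK", source)


def patch_file(path):
    """Rewrite `path` in place; return the number of replacements made."""
    p = Path(path)
    text = p.read_text()
    count = len(AT_CHECK.findall(text))
    if count:
        p.write_text(replace_at_check(text))
    return count


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python patch_at_check.py det3d/ops/dcn/src/deform_conv_cuda.cpp")
    else:
        print("replaced", patch_file(sys.argv[1]), "occurrence(s)")
```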

5. Install apex

git clone https://github.com/NVIDIA/apex

cd apex

git checkout 5633f6 # recent commit doesn't build in our system

pip install -v --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" ./

6. Install spconv

sudo apt-get install libboost-all-dev

git clone https://github.com/traveller59/spconv.git --recursive

cd spconv && git checkout 7342772

python setup.py bdist_wheel

cd ./dist && pip install *

Running python setup.py bdist_wheel fails with:

error: no matching function for call to ‘torch::jit::RegisterOperators::RegisterOperators(const char [28], <unresolved overloaded function type>)’

Solution:

Replace torch::jit::RegisterOperators() with torch::RegisterOperators().
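The post does not say which spconv source file contains the call, so rather than guess, this hypothetical helper walks the spconv checkout and rewrites the qualified name wherever it appears in C++/CUDA sources. It operates on raw bytes so line endings and file encodings are preserved.

```python
import re
import sys
from pathlib import Path

PATTERN = re.compile(rb"\btorch::jit::RegisterOperators\b")
SUFFIXES = {".cc", ".cpp", ".cu", ".h", ".hpp"}


def fix_register_operators(source):
    """Turn torch::jit::RegisterOperators into torch::RegisterOperators."""
    return PATTERN.sub(b"torch::RegisterOperators", source)


def patch_tree(root):
    """Patch every C++/CUDA source under `root`; return the changed files."""
    changed = []
    for path in sorted(Path(root).rglob("*")):
        if path.suffix in SUFFIXES and path.is_file():
            data = path.read_bytes()
            fixed = fix_register_operators(data)
            if fixed != data:
                path.write_bytes(fixed)
                changed.append(str(path))
    return changed


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python fix_register_ops.py /path/to/spconv")
    else:
        for name in patch_tree(sys.argv[1]):
            print("patched", name)
```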

7. Generate the dataset info files

nuScenes dataset directory structure:

# For nuScenes Dataset
└── NUSCENES_DATASET_ROOT
    ├── samples    <-- key frames
    ├── sweeps     <-- frames without annotation
    ├── maps       <-- unused
    └── v1.0-mini  <-- metadata
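To catch a misplaced folder before running create_data.py, the layout can be checked with a few lines of Python. This is a sketch: the folder names come from the tree above, `v1.0-mini` matches the `--version` used in this step, and the default root `data/nuScenes` assumes you run it from the CenterPoint root.

```python
import sys
from pathlib import Path

# Sub-directories from the tree above; v1.0-mini matches --version="v1.0-mini".
EXPECTED = ["samples", "sweeps", "maps", "v1.0-mini"]


def missing_subdirs(root, expected=EXPECTED):
    """Return the expected sub-directories that do not exist under root.

    Path.is_dir() follows symlinks, so linked folders count as present.
    """
    base = Path(root)
    return [name for name in expected if not (base / name).is_dir()]


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "data/nuScenes"
    missing = missing_subdirs(root)
    print("layout OK" if not missing else f"missing under {root}: {missing}")
```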

mkdir data

cd data

mkdir nuScenes

cd nuScenes

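# symlink or copy the directories listed above into this folder (data/nuScenes)

# then run the following command from the CenterPoint root directory, where NUSCENES_TRAINVAL_DATASET_ROOT is the path to the nuScenes folder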

python tools/create_data.py nuscenes_data_prep --root_path=NUSCENES_TRAINVAL_DATASET_ROOT --version="v1.0-mini" --nsweeps=10

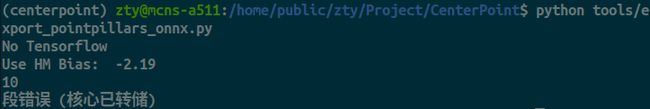
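The symlink option mentioned in the comments of this step can be scripted as follows. This is a minimal sketch (link_nuscenes.py is a hypothetical name): it links each sub-directory of the dataset root into data/nuScenes, skips anything that is already there, and should be run from the CenterPoint root.

```python
import os
import sys
from pathlib import Path

SUBDIRS = ["samples", "sweeps", "maps", "v1.0-mini"]


def link_dataset(src_root, dst_root="data/nuScenes", subdirs=SUBDIRS):
    """Symlink each sub-directory of src_root into dst_root.

    Entries that already exist in dst_root (copied or linked) are left
    untouched. Returns the list of links that were created.
    """
    src, dst = Path(src_root).resolve(), Path(dst_root)
    dst.mkdir(parents=True, exist_ok=True)
    created = []
    for name in subdirs:
        target, link = src / name, dst / name
        if link.exists() or link.is_symlink():
            continue  # already present
        if not target.is_dir():
            print(f"skip {name}: {target} does not exist")
            continue
        os.symlink(str(target), str(link))
        created.append(str(link))
    return created


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("usage: python link_nuscenes.py /path/to/NUSCENES_DATASET_ROOT")
    else:
        print("created:", link_dataset(sys.argv[1]))
```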

8. Export the model to ONNX

python tools/export_pointpillars_onnx.py

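This step failed with an error in the environment above.

Solution:

My preliminary conclusion is that it is a PyTorch version issue: after switching to CUDA 10.2 + cuDNN 8.2.2 + PyTorch 1.7.0 + Python 3.7.0, the export ran without problems.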
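Since the export only worked after moving to the second environment, it helps to see at a glance which of the two combinations is active. The two version pairs below are copied from this post; the classification helper itself is hypothetical and not part of CenterPoint.

```python
# (PyTorch, CUDA) combinations mentioned in this post
FAILED_EXPORT = ("1.8.0", "11.1")
WORKING_EXPORT = ("1.7.0", "10.2")


def major_minor(version):
    """Reduce '1.8.0+cu111' or '11.1' to '1.8' / '11.1'."""
    core = version.split("+")[0]
    return ".".join(core.split(".")[:2])


def classify(torch_version, cuda_version):
    """Say which of the two known environments a version pair matches."""
    current = (major_minor(torch_version), major_minor(cuda_version))
    for label, combo in (("working", WORKING_EXPORT), ("failing", FAILED_EXPORT)):
        if current == tuple(major_minor(v) for v in combo):
            return label
    return "unknown"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this environment")
    else:
        cuda = torch.version.cuda or "cpu"  # None on CPU-only builds
        print("cuDNN:", torch.backends.cudnn.version())
        print(torch.__version__, cuda, "->", classify(torch.__version__, cuda))
```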

9. Simplify the model

python tools/simplify_model.py

python tools/merge_pfe_rpn_model.py

10. Download TensorRT

Reference:

Copy the files in CenterPoint/tensorrt/samples into the samples folder of the TensorRT root directory, and copy the files in CenterPoint/tensorrt/data into the data folder of the TensorRT root directory.
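Copying the two folders by hand is easy to get wrong (for example, ending up with samples/samples). The sketch below merges CenterPoint/tensorrt/samples and CenterPoint/tensorrt/data into the matching TensorRT folders. It walks the tree manually instead of calling `shutil.copytree(..., dirs_exist_ok=True)`, because that option only exists from Python 3.8 and this environment uses 3.7. The script name is hypothetical.

```python
import os
import shutil
import sys


def merge_copy(src, dst):
    """Copy the contents of src into dst, creating folders as needed.

    Files with the same name in dst are overwritten. Returns the number of
    files copied (0 if src does not exist).
    """
    copied = 0
    for dirpath, _dirnames, filenames in os.walk(src):
        rel = os.path.relpath(dirpath, src)
        target_dir = os.path.normpath(os.path.join(dst, rel))
        os.makedirs(target_dir, exist_ok=True)
        for name in filenames:
            shutil.copy2(os.path.join(dirpath, name), os.path.join(target_dir, name))
            copied += 1
    return copied


if __name__ == "__main__":
    if len(sys.argv) != 3:
        print("usage: python copy_trt_files.py /path/to/CenterPoint /path/to/TensorRT")
    else:
        centerpoint, trt_root = sys.argv[1], sys.argv[2]
        for sub in ("samples", "data"):
            n = merge_copy(os.path.join(centerpoint, "tensorrt", sub),
                           os.path.join(trt_root, sub))
            print(f"{sub}: copied {n} file(s)")
```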

11. Build

Go to samples/centerpoint under the TensorRT root directory and run:

make

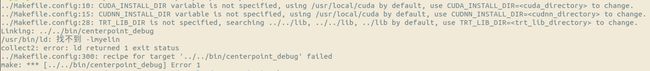

Error:

The compiler reports that NvInferPlugin.h cannot be found.

Solution:

sudo cp TENSORRT_ROOT/include/* /usr/include

where TENSORRT_ROOT is the TensorRT root directory.

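Another error follows.

Solution:

In my case, the cause was a version mismatch: the author probably used an older TensorRT, while mine is newer, so the plugin interfaces no longer match. The header and the .cu file therefore need to be modified; after the changes they look like this: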

ScatterNDPlugin.h:

#ifndef BATCHTILEPLUGIN_H
#define BATCHTILEPLUGIN_H
#include "NvInferPlugin.h"
#include

ScatterNDPlugin.cu:

/**
 * For the usage of those member functions, please refer to the
 * official API doc.
 * https://docs.nvidia.com/deeplearning/tensorrt/api/c_api/classnvinfer1_1_1_i_plugin_v2_ext.html
 */
#include "ScatterNDPlugin.h"
#include

Running make again produces another error.

Solution:

1. Download TensorRT 7 and copy libmyelin.so, libmyelin.so.1, and libmyelin.so.1.1.116 from its lib folder into the lib folder of the current TensorRT 8 root directory.

2. In Makefile.config, replace

COMMON_LIBS += $(MYELIN_LIB) $(NVRTC_LIB)

with

COMMON_LIBS += $(NVRTC_LIB)
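Both fixes can be applied with one small script. This is a sketch under two assumptions not stated in the post: the Makefile.config to edit is the one under the TensorRT 8 `samples` folder, and the COMMON_LIBS line appears exactly as quoted above (if the spacing differs, nothing is replaced and the script says so). fix_myelin.py is a hypothetical name.

```python
import shutil
import sys
from pathlib import Path

MYELIN_LIBS = ["libmyelin.so", "libmyelin.so.1", "libmyelin.so.1.1.116"]
OLD_LINE = "COMMON_LIBS += $(MYELIN_LIB) $(NVRTC_LIB)"
NEW_LINE = "COMMON_LIBS += $(NVRTC_LIB)"


def drop_myelin(makefile_text):
    """Step 2: replace the COMMON_LIBS line exactly as quoted in the post."""
    return makefile_text.replace(OLD_LINE, NEW_LINE)


def copy_myelin(trt7_root, trt8_root):
    """Step 1: copy the myelin libraries from TensorRT 7 into TensorRT 8."""
    for name in MYELIN_LIBS:
        src = Path(trt7_root) / "lib" / name
        # copy2 follows symlinks, so each name ends up as a regular file
        shutil.copy2(str(src), str(Path(trt8_root) / "lib" / name))


if __name__ == "__main__":
    if len(sys.argv) != 3:
        print("usage: python fix_myelin.py /path/to/TensorRT-7 /path/to/TensorRT-8")
    else:
        copy_myelin(sys.argv[1], sys.argv[2])
        config = Path(sys.argv[2]) / "samples" / "Makefile.config"
        text = config.read_text()
        fixed = drop_myelin(text)
        if fixed == text:
            print("COMMON_LIBS line not found; edit", config, "by hand")
        else:
            config.write_text(fixed)
            print("patched", config)
```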

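12. Test

Running TensorRT_Visualize.ipynb in the CenterPoint repository generates the data; copy the files under tensorrt/data into the data folder of the TensorRT 8 root directory.

Then run ./centerpoint from the bin folder of the TensorRT 8 root directory.

No timing information is printed.

Solution:

In samples/centerpoint/samplecenterpoint.cpp under the TensorRT 8 root directory, change the path on line 253 to an absolute path.

Line 253 originally reads: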

std::vector<std::string> filePath = glob("../"+mParams.dataDirs[0]+"/points/*.bin");
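The post does not show which absolute path it switched to. The hypothetical helper below replaces the relative argument of that glob call with an absolute `*.bin` pattern for a points folder you pass in, and first counts how many .bin files that pattern matches, so a wrong folder shows up before you rebuild. The example points path is an assumption.

```python
import glob
import os
import sys

OLD = 'glob("../"+mParams.dataDirs[0]+"/points/*.bin")'


def absolute_glob_line(source, points_dir):
    """Replace the relative glob argument with an absolute *.bin pattern."""
    pattern = os.path.join(os.path.abspath(points_dir), "*.bin")
    return source.replace(OLD, 'glob("' + pattern + '")')


def count_bins(points_dir):
    """Number of .bin point-cloud files the new pattern would match."""
    return len(glob.glob(os.path.join(os.path.abspath(points_dir), "*.bin")))


if __name__ == "__main__":
    if len(sys.argv) != 3:
        print("usage: python abs_glob.py samplecenterpoint.cpp /abs/path/to/points")
    else:
        cpp, points = sys.argv[1], sys.argv[2]
        print(count_bins(points), ".bin file(s) found in", points)
        with open(cpp) as f:
            text = f.read()
        fixed = absolute_glob_line(text, points)
        if fixed == text:
            print("original glob line not found in", cpp)
        else:
            with open(cpp, "w") as f:
                f.write(fixed)
```

After patching, rebuild with `make` in samples/centerpoint so the new path is compiled in.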

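Then another error appears.

Solution:

Switching TensorRT to version 7.1.3.4 solved everything. After switching, remember to delete the NvInfer*.h headers under /usr/include and replace them with the ones from the new version.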
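Stale headers left over from the earlier `sudo cp ... /usr/include` step are easy to miss after switching versions. One quick check is to compare the version macros in NvInferVersion.h in /usr/include with those in the new TensorRT root. This sketch assumes that header defines NV_TENSORRT_MAJOR/MINOR/PATCH/BUILD, as the TensorRT 7 and 8 packages do; check_headers.py is a hypothetical name.

```python
import re
import sys
from pathlib import Path

VERSION_RE = re.compile(r"#define\s+NV_TENSORRT_(MAJOR|MINOR|PATCH|BUILD)\s+(\d+)")
FIELDS = ("MAJOR", "MINOR", "PATCH", "BUILD")


def parse_trt_version(header_text):
    """Read MAJOR.MINOR.PATCH.BUILD from the text of NvInferVersion.h."""
    found = dict(VERSION_RE.findall(header_text))
    if not all(k in found for k in FIELDS):
        return None
    return ".".join(found[k] for k in FIELDS)


def header_version(include_dir):
    """Version of NvInferVersion.h in include_dir, or None if unavailable."""
    path = Path(include_dir) / "NvInferVersion.h"
    return parse_trt_version(path.read_text()) if path.is_file() else None


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python check_headers.py /path/to/TensorRT-7.1.3.4")
    else:
        system = header_version("/usr/include")
        bundled = header_version(Path(sys.argv[1]) / "include")
        print(f"/usr/include: {system}, TensorRT root: {bundled}")
        if system != bundled:
            print("mismatch: replace the NvInfer*.h files in /usr/include")
```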

**PS:** Getting a newer TensorRT to work with code written for an older one is just too hard; in the end I gave in. = =