Using Spark 3.0 SQL to Work with Hive from IntelliJ IDEA

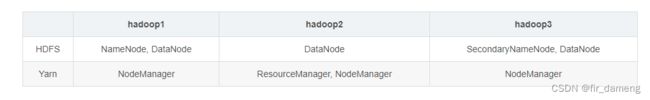

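Prerequisite: HDFS and Hadoop YARN are already installed according to the cluster plan.

1. Install Hive

Choose one node for the Hive components; this guide uses hadoop3.

1.1 Install MySQL

Official download link: https://dev.mysql.com/get/Downloads/MySQL-5.7/mysql-5.7.26-1.el7.x86_64.rpm-bundle.tar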

# CentOS 7.6 ships with MariaDB (a fork of MySQL), which conflicts with the MySQL packages installed here and must be removed

# Check whether MariaDB is installed
rpm -aq | grep mariadb

# Remove MariaDB. -e erases the given package; --nodeps skips the dependency check
rpm -e --nodeps mariadb-libs

# Install dependencies
yum install perl -y
yum install net-tools -y

# Unpack the bundle
tar xvf mysql-5.7.26-1.el7.x86_64.rpm-bundle.tar

# Install the packages in this order
rpm -ivh mysql-community-common-5.7.26-1.el7.x86_64.rpm
rpm -ivh mysql-community-libs-5.7.26-1.el7.x86_64.rpm
rpm -ivh mysql-community-client-5.7.26-1.el7.x86_64.rpm
rpm -ivh mysql-community-server-5.7.26-1.el7.x86_64.rpm

# Start the database
systemctl start mysqld

# Look up the temporary root password
grep password /var/log/mysqld.log

# Log in to MySQL with the password found above
mysql -u root -p

# Relax the password policy, set the root password to 12345678, then flush privileges
set global validate_password_policy=0;
set password for 'root'@'localhost' =password('12345678');
flush privileges;

# Create the hive user: set its password, grant privileges, then flush privileges
CREATE USER 'hive'@'%' IDENTIFIED BY '12345678';
GRANT ALL ON *.* TO 'hive'@'%';
FLUSH PRIVILEGES;
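The lookup `grep password /var/log/mysqld.log` can return several lines. As a minimal sketch, the helper below prints only the password itself. It assumes the MySQL 5.7 log message "A temporary password is generated for root@localhost: <password>"; the function name `temp_root_password` is hypothetical and not part of MySQL.

```shell
# Hypothetical helper: print the most recent temporary root password in a mysqld log.
# Assumes the log line ends with "... root@localhost: <password>".
temp_root_password() {
  log_file="${1:-/var/log/mysqld.log}"   # default path taken from the step above
  # Keep the last matching line and print its final whitespace-separated field.
  grep 'temporary password' "$log_file" | tail -n 1 | awk '{print $NF}'
}

# Example (run on the MySQL node):
# temp_root_password /var/log/mysqld.log
```

The printed value is what `mysql -u root -p` asks for on the first login.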

1.2 Install Hive

(1) Download the Hive binary package and configure it

Download apache-hive-2.3.9-bin.tar.gz from http://archive.apache.org/dist/hive/hive-2.3.9/

If the download is slow, use a mirror in China instead.

cd /opt/cwc/software
tar zxvf apache-hive-2.3.9-bin.tar.gz -C ../servers/
cd ../servers
mv apache-hive-2.3.9-bin hive-2.3.9

# Set the environment variables
# Add the following lines to /etc/profile
export HIVE_HOME=/opt/cwc/servers/hive-2.3.9
export PATH=$PATH:$HIVE_HOME/bin

# Apply the changes
source /etc/profile
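If this step is repeated, editing /etc/profile by hand can leave duplicate export lines. As a rough sketch, the two lines can be appended only when they are missing. The function name `add_hive_env` is hypothetical, and the target file is passed explicitly so the helper can be tried on a scratch file first.

```shell
# Hypothetical helper: append the Hive environment variables to a profile file,
# skipping any line that is already present verbatim.
add_hive_env() {
  profile="$1"
  for line in \
    'export HIVE_HOME=/opt/cwc/servers/hive-2.3.9' \
    'export PATH=$PATH:$HIVE_HOME/bin'
  do
    # -x: match the whole line, -F: treat the pattern as a fixed string
    grep -qxF -- "$line" "$profile" 2>/dev/null || printf '%s\n' "$line" >> "$profile"
  done
}

# Example (as root on the Hive node):
# add_hive_env /etc/profile && source /etc/profile
```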

(2) Create hive-site.xml

cd $HIVE_HOME/conf
vim hive-site.xml

Add the following content:

<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://hadoop3:3306/hivemetadata?createDatabaseIfNotExist=true&amp;useSSL=false</value>
    <description>JDBC connect string for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
    <description>username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>12345678</value>
    <description>password to use against metastore database</description>
  </property>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
    <description>location of default database for the warehouse</description>
  </property>
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
    <description>Whether to include the current database in the Hive prompt.</description>
  </property>
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
  </property>
</configuration>

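(3) Copy the MySQL JDBC driver and initialize the Hive metadata

Copy mysql-connector-java-5.1.46.jar to $HIVE_HOME/lib

# Initialize the metastore database
schematool -dbType mysql -initSchema

# Start the HDFS and YARN services before starting Hive
[root@hadoop3 ~]$ hive
hive> show functions;

File transfer tip: install lrzsz to move files between your local machine and the server.

yum install lrzsz

sz: sends the selected file to the local machine
rz: opens a file-selection window for uploading a local file to the Linux server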

2. Connect to Hive from IDEA

2.1 Sample code

import org.apache.spark.sql.SparkSession

object hive_read {
  def main(args: Array[String]): Unit = {
    // Build a local SparkSession with Hive support enabled
    val ss = SparkSession.builder()
      .master("local[2]")
      .appName("the test of SparkSession")
      .enableHiveSupport()
      .getOrCreate()

    // Check that the connection to Hive works
    val df = ss.sql("show databases")

    // Check that Spark SQL can read a Hive table
    //val df = ss.sql("select count(1) from biods.ods_start_log where dt='20210721'")
    df.show()

    // Keep the application alive for 1,000 seconds before stopping the session
    Thread.sleep(1000000)
    ss.stop()
  }
}

2.2 pom dependencies

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>org.example</groupId>
  <artifactId>lagou-spark</artifactId>
  <version>1.0-SNAPSHOT</version>

  <properties>
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
    <scala.version>2.12.10</scala.version>
    <spark.version>3.0.1</spark.version>
    <hadoop.version>2.9.2</hadoop.version>
    <encoding>UTF-8</encoding>
  </properties>

  <dependencies>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.12</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-sql_2.12</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-hive_2.12</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>mysql</groupId>
      <artifactId>mysql-connector-java</artifactId>
      <version>5.1.46</version>
    </dependency>
  </dependencies>

  <build>
    <pluginManagement>
      <plugins>
        <plugin>
          <groupId>net.alchim31.maven</groupId>
          <artifactId>scala-maven-plugin</artifactId>
          <version>3.2.2</version>
        </plugin>
        <plugin>
          <groupId>org.apache.maven.plugins</groupId>
          <artifactId>maven-compiler-plugin</artifactId>
          <version>3.5.1</version>
        </plugin>
      </plugins>
    </pluginManagement>
    <plugins>
      <plugin>
        <groupId>net.alchim31.maven</groupId>
        <artifactId>scala-maven-plugin</artifactId>
        <executions>
          <execution>
            <id>scala-compile-first</id>
            <phase>process-resources</phase>
            <goals>
              <goal>add-source</goal>
              <goal>compile</goal>
            </goals>
          </execution>
          <execution>
            <id>scala-test-compile</id>
            <phase>process-test-resources</phase>
            <goals>
              <goal>testCompile</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-compiler-plugin</artifactId>
        <executions>
          <execution>
            <phase>compile</phase>
            <goals>
              <goal>compile</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-shade-plugin</artifactId>
        <version>2.4.3</version>
        <executions>
          <execution>
            <phase>package</phase>
            <goals>
              <goal>shade</goal>
            </goals>
            <configuration>
              <filters>
                <filter>
                  <artifact>*:*</artifact>
                  <excludes>
                    <exclude>META-INF/*.SF</exclude>
                    <exclude>META-INF/*.DSA</exclude>
                    <exclude>META-INF/*.RSA</exclude>
                  </excludes>
                </filter>
              </filters>
            </configuration>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </build>
</project>

2.3 Put hive-site.xml in the project's resources directory
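Spark reads hive-site.xml from the classpath, so for a project with the standard Maven layout (an assumption here) the file belongs in src/main/resources. A minimal sketch of the copy step follows; the function name `install_hive_site` and the example paths are hypothetical.

```shell
# Hypothetical helper: copy hive-site.xml into a Maven project's resources directory.
install_hive_site() {
  src="$1"           # hive-site.xml fetched from $HIVE_HOME/conf on the Hive node
  project_dir="$2"   # root of the Maven project (the directory containing pom.xml)
  mkdir -p "$project_dir/src/main/resources" &&
    cp "$src" "$project_dir/src/main/resources/hive-site.xml"
}

# Example (placeholder paths):
# install_hive_site ./hive-site.xml ~/IdeaProjects/lagou-spark
```

The JDBC URL in that file points at hadoop3, so the development machine also needs to resolve that host name and reach port 3306.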

2.4 Problems encountered

2.4.1 Permission denied on a directory

Error log:

org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message:Got exception: org.apache.hadoop.security.AccessControlException Permission denied: user=CaiWencheng, access=WRITE, inode="/user/hive/warehouse/temp.db":root:supergroup:drwxr-xr-x

Fix: grant write permission on the directory

hadoop fs -chmod -R 777 /user/hive/warehouse/temp.db
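The error arises because the job runs under the local OS account (CaiWencheng in the log) while the directory belongs to root. An alternative to opening the directory to everyone is to have the client identify itself as the owning user. This is a sketch that assumes the cluster uses Hadoop's default "simple" authentication, under which the client takes its user name from HADOOP_USER_NAME when that variable is set.

```shell
# Assumption: simple (non-Kerberos) authentication on the cluster.
# Make Hadoop clients started from this shell act as the HDFS user "root".
export HADOOP_USER_NAME=root
echo "HDFS client user: $HADOOP_USER_NAME"
```

When the program is started from IDEA rather than a shell, the same variable can be set in the environment variables of the run configuration.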

2.4.2 MySQL driver not found

Error log:

org.datanucleus.store.rdbms.connectionpool.DatastoreDriverNotFoundException: The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

Fix: add the driver dependency to the pom

<dependency>
  <groupId>mysql</groupId>
  <artifactId>mysql-connector-java</artifactId>
  <version>5.1.46</version>
</dependency>

2.5 Create Hive tables

-- Create the database
create database biods;

-- Create an ORC external table
create external table biods.ods_start_log
(
    `str` string
)
comment 'User start-up log information'
partitioned by (`dt` string)
stored as orc
location '/bi/ods/ods_start_log';

-- Create a text-file external table
create external table biods.txt_ods_start_log
(
    `str` string
)
comment 'User start-up log information'
partitioned by (`dt` string)
stored as textfile
location '/bi/ods/txt_ods_start_log';
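Both tables are partitioned by dt, so files under the table location are not visible to queries until the matching partition is registered in the metastore. As a minimal sketch, the helper below prints the HiveQL for one partition; the function name `add_partition_sql` is hypothetical, and the date reuses the value from the commented-out query in section 2.1.

```shell
# Hypothetical helper: print the HiveQL that registers one dt partition of a table.
add_partition_sql() {
  table="$1"
  dt="$2"
  printf "ALTER TABLE %s ADD IF NOT EXISTS PARTITION (dt='%s');\n" "$table" "$dt"
}

# Example (on the Hive node):
# hive -e "$(add_partition_sql biods.ods_start_log 20210721)"
```

With no explicit location, the partition maps to the dt=20210721 subdirectory of the table location.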

2.6 Start the environment

(1) Start HDFS

On the hadoop1 node, run start-dfs.sh

(2) Start YARN

On the hadoop2 node, run start-yarn.sh

(3) Start Hive

On the hadoop3 node, run hive