Embedded Linux Device Driver Development Guide 14 (Using DMA in Linux Device Drivers): Reading Notes

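Using DMA in Linux Device Drivers

- 14. Using DMA in Linux Device Drivers
  - 14.1 Introduction
  - 14.2 Cache Coherency
  - 14.3 DMA Controller Interface
  - 14.4 Streaming DMA Module
    - 14.4.1 sdma_sam_m2m.c Source Code
    - 14.4.2 DMA Device Tree
    - 14.4.3 Debugging
  - 14.5 DMA Scatter/Gather Mapping
    - 14.5.1 sdma_imx_sg_m2m.c Source Code
  - 14.6 User-Space DMA
    - 14.6.1 User DMA Module Analysis
    - 14.6.2 User DMA Module Code
    - 14.6.3 Application
    - 14.6.4 Debugging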

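14. Using DMA in Linux Device Drivers

14.1 Introduction

DMA (direct memory access) relies on a dedicated controller that is common on embedded Linux platforms. It moves data directly between a peripheral and main memory without involving the CPU. When a transfer finishes, the DMA controller raises an interrupt to the CPU.

DMA is worth using when data blocks are very large or transfers are frequent, because moving the data by CPU would otherwise consume a large share of processing time.

14.2 Cache Coherency

The biggest problem with DMA on a system with caches is that the cache contents and the memory contents can disagree. Architectures fall into two classes:

Coherent architectures (arm_coherent_dma_ops): some processors include a mechanism called bus snooping or cache snooping, which keeps the caches consistent with DMA traffic in hardware.

Non-coherent architectures (arm_dma_ops): these provide no extra hardware support for coherency management, so software has to handle it explicitly.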

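14.3 DMA Controller Interface

The DMA controller interface (the kernel's DMA engine API) defines the interface to the capabilities of the actual DMA hardware. A DMA client uses it in the following steps:

1. Allocate a DMA client channel: dma_request_chan()

2. Set the parameters specific to the client device and the controller: dmaengine_slave_config()

3. Get a transaction descriptor: dmaengine_prep_slave_sg()

4. Submit the transaction: dmaengine_submit()

5. Issue the pending requests and wait for the callback notification: dma_async_issue_pending()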
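The five client steps can be sketched as a single kernel-side helper. This is a minimal sketch under stated assumptions, not the book's listing: the channel name "tx", the FIFO address parameter, the bus width, the burst size, and the one-second timeout are all hypothetical, and it uses dmaengine_prep_slave_single() (the single-buffer sibling of dmaengine_prep_slave_sg()) to keep the example short. It is kernel code and has to be built as part of a module against kernel headers.

```c
#include <linux/completion.h>
#include <linux/dmaengine.h>
#include <linux/err.h>
#include <linux/jiffies.h>

/* Completion callback: runs when the controller reports the transfer done. */
static void demo_dma_done(void *param)
{
	complete(param);
}

/*
 * Send one already DMA-mapped buffer to a peripheral FIFO.
 * "tx" and fifo_addr are hypothetical; a real driver takes them from its
 * device tree node and register map.
 */
static int demo_dma_tx(struct device *dev, dma_addr_t buf, size_t len,
		       phys_addr_t fifo_addr)
{
	struct dma_slave_config cfg = {
		.direction = DMA_MEM_TO_DEV,
		.dst_addr = fifo_addr,
		.dst_addr_width = DMA_SLAVE_BUSWIDTH_4_BYTES,
		.dst_maxburst = 4,
	};
	struct dma_async_tx_descriptor *desc;
	DECLARE_COMPLETION_ONSTACK(done);
	struct dma_chan *chan;
	int ret;

	/* Step 1: allocate a client channel. */
	chan = dma_request_chan(dev, "tx");
	if (IS_ERR(chan))
		return PTR_ERR(chan);

	/* Step 2: set device- and controller-specific parameters. */
	ret = dmaengine_slave_config(chan, &cfg);
	if (ret)
		goto out;

	/* Step 3: get a transaction descriptor. */
	desc = dmaengine_prep_slave_single(chan, buf, len, DMA_MEM_TO_DEV,
					   DMA_PREP_INTERRUPT | DMA_CTRL_ACK);
	if (!desc) {
		ret = -EINVAL;
		goto out;
	}
	desc->callback = demo_dma_done;
	desc->callback_param = &done;

	/* Step 4: submit the transaction to the channel's pending queue. */
	ret = dma_submit_error(dmaengine_submit(desc));
	if (ret)
		goto out;

	/* Step 5: start the queue and wait for the callback. */
	dma_async_issue_pending(chan);
	if (!wait_for_completion_timeout(&done, msecs_to_jiffies(1000))) {
		dmaengine_terminate_sync(chan);
		ret = -ETIMEDOUT;
	}
out:
	dma_release_channel(chan);
	return ret;
}
```

Requesting and releasing the channel on every call keeps the sketch self-contained; a real driver would more likely request the channel once in probe and release it on removal.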

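Drivers work with virtual addresses and use ioremap() to map physical addresses into the virtual address space. Memory accessed by DMA must be physically contiguous, so it is allocated with kmalloc() (up to 128 KB according to these notes) or __get_free_pages() (up to 8 MB according to these notes), and not with vmalloc(). The actual limits depend on the kernel version and configuration.

A related concept is the Contiguous Memory Allocator (CMA), which was developed to allocate large, physically contiguous blocks of memory.

DMA mapping: the combination of allocating a DMA buffer and generating an address for that buffer that the device can access. Buffers are usually mapped when the driver initializes and unmapped when it exits.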

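Types of DMA mapping:

dma_alloc_coherent() // coherent DMA mapping: allocates and maps the buffer

dma_map_single() // streaming DMA mapping: maps an already allocated buffer

dma_free_coherent() // unmaps and frees a region obtained from dma_alloc_coherent()

dma_unmap_single() // tears down a streaming mapping; call it once DMA activity has finished, for example when an interrupt reports that the transfer is complete

On ARM, dma_map_single() calls dma_map_single_attrs(), which in turn calls arm_dma_map_page() to make sure that any data in the cache is invalidated or written back as appropriate.

Rules for streaming DMA mappings:

The buffer may only be used in the direction specified when it was mapped;

A mapped buffer belongs to the device, not to the CPU;

A buffer used to send data to the device must already contain that data before it is mapped;

Unmapping a DMA buffer while DMA is still active causes severe system instability.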
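The two mapping types and the streaming rules can be illustrated with a short kernel-side sketch. The helper names (demo_alloc_coherent and so on) are hypothetical wrappers added here for illustration; only the dma_* calls are real kernel API. The code assumes `dev` is the device that performs the DMA and that `buf` comes from kmalloc() or a similar physically contiguous allocation.

```c
#include <linux/dma-mapping.h>
#include <linux/errno.h>
#include <linux/gfp.h>

/* Coherent mapping: allocate and map in one call, typically at init time. */
static void *demo_alloc_coherent(struct device *dev, size_t len,
				 dma_addr_t *handle)
{
	return dma_alloc_coherent(dev, len, handle, GFP_KERNEL);
}

/* Coherent mapping: unmap and free, typically at exit time. */
static void demo_free_coherent(struct device *dev, size_t len,
			       void *cpu_addr, dma_addr_t handle)
{
	dma_free_coherent(dev, len, cpu_addr, handle);
}

/*
 * Streaming mapping for a transmit buffer. The buffer must already hold
 * the data to send; after this call it belongs to the device and the CPU
 * must not touch it until it is unmapped.
 */
static int demo_map_tx(struct device *dev, void *buf, size_t len,
		       dma_addr_t *handle)
{
	*handle = dma_map_single(dev, buf, len, DMA_TO_DEVICE);
	if (dma_mapping_error(dev, *handle))
		return -ENOMEM;
	return 0;
}

/*
 * Tear down the streaming mapping. Call this only after the transfer has
 * completed, with the same length and direction used for mapping.
 */
static void demo_unmap_tx(struct device *dev, dma_addr_t handle, size_t len)
{
	dma_unmap_single(dev, handle, len, DMA_TO_DEVICE);
}
```

Checking dma_mapping_error() before using the handle matters because a failed mapping does not return a NULL-like value that could be tested directly.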

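14.4 Streaming DMA Module

This kernel DMA module allocates two buffers in the driver: wbuf and rbuf.

The driver receives characters from user space and stores them in wbuf. It then sets up a DMA transaction (memory to memory) that copies the values from wbuf to rbuf.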

14.4.1 sdma_sam_m2m.c Source Code

#include (the rest of the listing is missing from the source)

14.4.2 DMA Device Tree

sdma_m2m {

compatible = "arrow,sdma_m2m";

};

14.4.3 Debugging

insmod sdma_imx_m2m.ko

echo abcdefg > /dev/sdma_test # write a value into wbuf

rmmod sdma_imx_m2m.ko

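14.5 DMA Scatter/Gather Mapping

There are different ways to send the contents of several buffers by DMA. They can be sent one mapping at a time, or all at once using scatter/gather.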
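As a rough illustration of the scatter/gather approach, the sketch below describes two buffers with one scatterlist and maps them in a single call. It is not the sdma_imx_sg_m2m.c listing: the helper names and the choice of two equally sized buffers are assumptions made for the example, and the buffers are assumed to come from kmalloc(). It is kernel code and builds only as part of a module.

```c
#include <linux/dma-mapping.h>
#include <linux/errno.h>
#include <linux/scatterlist.h>

/*
 * Describe two buffers with a two-entry scatterlist and map both at once.
 * sgl must point to an array of at least two entries owned by the caller.
 * Returns the number of mapped entries (which may be fewer than two if the
 * DMA layer merges them) or a negative error code.
 */
static int demo_map_two_buffers(struct device *dev, struct scatterlist *sgl,
				void *a, void *b, size_t len)
{
	int nents;

	sg_init_table(sgl, 2);
	sg_set_buf(&sgl[0], a, len);
	sg_set_buf(&sgl[1], b, len);

	nents = dma_map_sg(dev, sgl, 2, DMA_TO_DEVICE);
	if (!nents)
		return -ENOMEM;

	return nents;
}

/* Unmap after the transfer completes; pass the original entry count (2). */
static void demo_unmap_two_buffers(struct device *dev,
				   struct scatterlist *sgl)
{
	dma_unmap_sg(dev, sgl, 2, DMA_TO_DEVICE);
}
```

The mapped list and the returned entry count are what a client would then hand to dmaengine_prep_slave_sg() to build one transaction covering both buffers.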

14.5.1 sdma_imx_sg_m2m.c Source Code

#include (the rest of the listing is missing from the source)

14.6 User-Space DMA

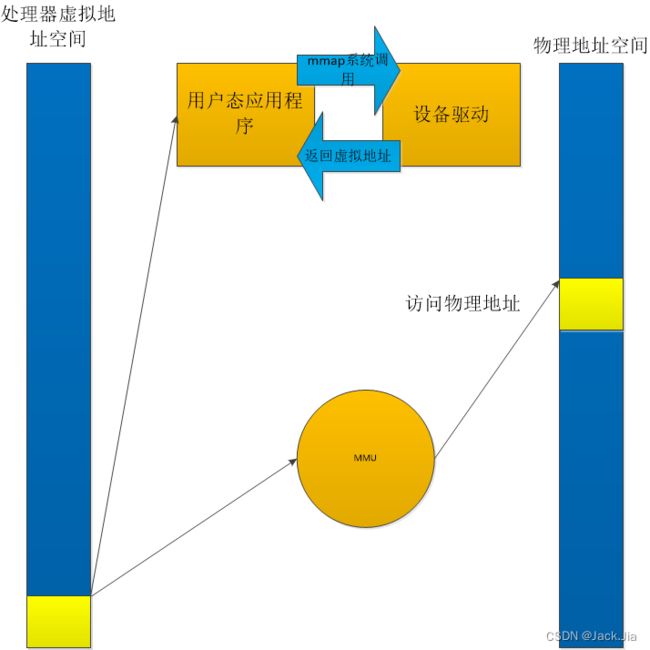

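For large buffers, copying data with copy_to_user() and copy_from_user() is inefficient and throws away the advantage of letting DMA move the data. Linux provides frameworks for interfacing kernel space and user space. User-space DMA means that a user-space application can access the buffers used for DMA transfers and control those transfers.

A user process can map memory explicitly with mmap(). A driver's mmap() file operation maps the driver's memory into the process's address space, so that the process's virtual addresses refer to the physical memory used by the driver.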
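From the application side, the explicit mapping can look like the sketch below. It is not the application from these notes: map_and_write() is a hypothetical helper, and it only covers the mmap() part, not any ioctl() the driver may need to start a transfer. The function works on any file descriptor that supports a shared mapping, so it can be tried on a regular file before pointing it at a device node.

```c
#include <fcntl.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Map len bytes of the file or device at path into this process and copy
 * msg (truncated to fit, always NUL-terminated) to the start of the mapping.
 * Returns 0 on success and -1 on failure.
 */
int map_and_write(const char *path, size_t len, const char *msg)
{
	size_t n;
	void *buf;
	int fd;

	if (len == 0)
		return -1;

	fd = open(path, O_RDWR);
	if (fd < 0)
		return -1;

	/* MAP_SHARED makes the writes visible to the driver or file. */
	buf = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
	if (buf == MAP_FAILED) {
		close(fd);
		return -1;
	}

	n = strlen(msg);
	if (n >= len)
		n = len - 1;
	memcpy(buf, msg, n);
	((char *)buf)[n] = '\0';

	munmap(buf, len);
	close(fd);
	return 0;
}
```

With the mmap-capable module loaded, a call such as map_and_write("/dev/sdma_test", 4096, "abcdefg") would write straight into the driver's buffer without copy_from_user(); the device node name and the 4096-byte size are assumptions here, since the notes do not preserve them for this module.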

14.6.1 User DMA Module Analysis

sdma_ioctl() callback function

sdma_open() function

sdma_ioctl() function

Device tree:

sdma_m2m {

compatible = "arrow,sdma_m2m";

};

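14.6.2 User DMA Module Code

#include (the rest of the listing is missing from the source)

14.6.3 Application

#include (the rest of the listing is missing from the source)

14.6.4 Debugging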

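insmod sdma_imx_mmap.ko // load the module

./sdma // map the DMA buffer's physical memory into user virtual address space

rmmod sdma_imx_mmap.ko // unload the module

Thanks for reading, and good luck!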

-by aiziyou