Deploying Prometheus with Docker

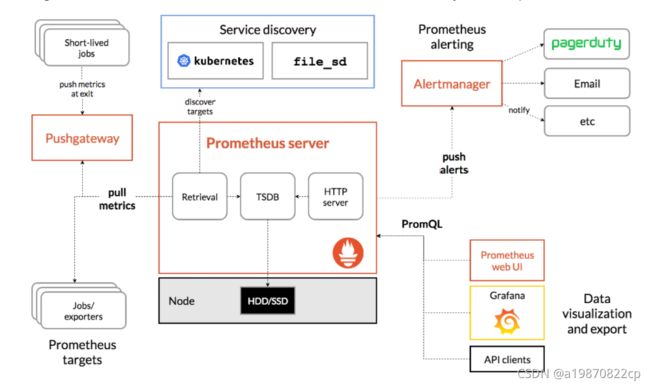

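1 Introduction to Prometheus

Prometheus is an open-source monitoring and alerting solution originally developed at SoundCloud. It is one of the most widely used monitoring systems of the cloud-native era.

See the official documentation for the full feature set.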

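2 Deploying Prometheus

This article focuses on deploying Prometheus. In a cloud-native setting Kubernetes is the natural platform, so building from source and installing binaries are not covered here. We start with a Docker installation and leave Kubernetes for a later article.

2.1 Components

Prometheus is not a single program but a family of components. This article uses docker-compose to deploy Prometheus, node_exporter, Grafana, Alertmanager, Promtail and Loki as a complete Prometheus monitoring stack. The compose file also includes a Pushgateway and optional MySQL and Redis exporters.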

2.2 docker-compose.yml

```yaml
version: '2'

networks:
  monitor:
    driver: bridge

services:
  prometheus:
    image: prom/prometheus
    container_name: prometheus
    hostname: prometheus
    restart: always
    volumes:
      - ./prometheus/prometheus.yml:/etc/prometheus/prometheus.yml
      - ./prometheus/node_down.yml:/etc/prometheus/node_down.yml
    ports:
      - "9090:9090"
    networks:
      - monitor

  alertmanager:
    image: prom/alertmanager
    container_name: alertmanager
    hostname: alertmanager
    restart: always
    volumes:
      - ./alertmanager/alertmanager.yml:/etc/alertmanager/alertmanager.yml
    ports:
      - "9093:9093"
    networks:
      - monitor

  node-exporter:
    image: quay.io/prometheus/node-exporter
    container_name: node-exporter
    hostname: node-exporter
    restart: always
    ports:
      - "9100:9100"
    networks:
      - monitor

  pushgateway:
    image: prom/pushgateway
    container_name: pushgateway
    restart: always
    ports:
      - "9091:9091"
    networks:
      - monitor

  loki:
    image: grafana/loki
    container_name: loki
    restart: always
    ports:
      - "3100:3100"
    volumes:
      - ./loki:/etc/loki
    command: -config.file=/etc/loki/local-config.yaml
    networks:
      - monitor

  promtail:
    image: grafana/promtail
    container_name: promtail
    restart: always
    volumes:
      - ./promtail:/etc/promtail
      - /home/blockchain-test/e-invoice/logs:/opt
    command: -config.file=/etc/promtail/docker-config.yaml
    networks:
      - monitor

  grafana:
    image: grafana/grafana
    container_name: grafana
    hostname: grafana
    restart: always
    ports:
      - "3000:3000"
    depends_on:
      - loki
      - promtail
    volumes:
      - ./grafana:/var/lib/grafana
    networks:
      - monitor

  # MySQL exporter (optional)
  mysql-exporter:
    image: prom/mysqld-exporter
    container_name: mysql-exporter
    hostname: mysql-exporter
    restart: always
    ports:
      - "9104:9104"
    networks:
      - monitor
    environment:
      DATA_SOURCE_NAME: "root:123456@(192.168.0.1:3306)/"

  # Redis exporter (optional)
  redis-exporter:
    image: oliver006/redis_exporter
    container_name: redis_exporter
    hostname: redis_exporter
    restart: always
    ports:
      - "9121:9121"
    networks:
      - monitor
    command:
      - '--redis.addr=redis://192.168.0.1:6379'
      - '--redis.password=123456'
```

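The compose file deploys each component, exposes its port, and mounts the corresponding host directories. These directories mainly hold the configuration files.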
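The compose file also starts a Pushgateway on port 9091. Short-lived batch jobs may finish before Prometheus ever scrapes them, so they push their metrics to the Pushgateway, and Prometheus scrapes the Pushgateway instead (the `client-pushgateway` target in prometheus.yml). Here is a minimal stdlib-only Python sketch of such a push. The host address, the job name `nightly_backup` and the metric names are hypothetical.

```python
import urllib.parse
import urllib.request

# Assumption: the Pushgateway from docker-compose.yml is reachable here.
PUSHGATEWAY_URL = "http://192.168.0.1:9091"


def build_push_request(job: str, metrics: dict,
                       base_url: str = PUSHGATEWAY_URL) -> urllib.request.Request:
    """Build a POST to /metrics/job/<job> carrying metrics in the text format."""
    # One "<metric_name> <value>" line per sample; the body must end with "\n".
    body = "".join(f"{name} {value}\n" for name, value in metrics.items())
    job_path = urllib.parse.quote(job, safe="")
    return urllib.request.Request(
        url=f"{base_url}/metrics/job/{job_path}",
        data=body.encode("utf-8"),
        method="POST",
        headers={"Content-Type": "text/plain; version=0.0.4"},
    )


def push(job: str, metrics: dict, base_url: str = PUSHGATEWAY_URL) -> int:
    """Send the metrics and return the HTTP status code."""
    request = build_push_request(job, metrics, base_url)
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```

For example, `push("nightly_backup", {"backup_duration_seconds": 12.5})` should make the value visible on the Pushgateway page and, after the next scrape, in Prometheus. With POST, only metrics of the same name in that group are replaced. A PUT to the same URL would replace the whole group.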

2.3 Configuration files

Prometheus configuration file, prometheus.yml:

```yaml
# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets: ['192.168.0.1:9093'] # Alertmanager address
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  - "node_down.yml" # mounted next to prometheus.yml by docker-compose.yml
  # - "first_rules.yml"
  # - "second_rules.yml"

# Scrape configurations: Prometheus itself plus the exporters.
scrape_configs:
  # The job name is added as a `job` label to any timeseries scraped from this config.
  # Targets scraped by Prometheus
  - job_name: 'prometheus'
    scrape_interval: 8s
    static_configs:
      - targets: ['192.168.0.1:9090']
      - targets: ['192.168.0.1:9100','192.168.0.2:9100']
        labels:
          group: 'client-node-exporter'
      - targets: ['192.168.0.1:9091']
        labels:
          group: 'client-pushgateway'
      - targets: ['192.168.0.1:9104']
        labels:
          group: 'client-mysql'

  # Redis metrics
  - job_name: 'redis_exporter'
    static_configs:
      - targets:
          - 192.168.0.1:9121

  # GPU metrics
  - job_name: 'dcgm'
    static_configs:
      - targets: ['192.168.0.3:9400']

  - job_name: 'nvidia_gpu_exporter'
    static_configs:
      - targets: ['192.168.0.4:9835']
```

prometheus.yml mainly defines what Prometheus scrapes. Prometheus collects the metrics of any exporter listed under `scrape_configs`.
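To check from a script whether every configured exporter is actually being scraped, you can read the same information shown on the Status → Targets page from Prometheus's HTTP API (`/api/v1/targets`). Here is a minimal Python sketch, assuming Prometheus is reachable at 192.168.0.1:9090.

```python
import json
import urllib.request

# Assumption: Prometheus from docker-compose.yml listens on this address.
PROMETHEUS_URL = "http://192.168.0.1:9090"


def fetch_targets(base_url: str = PROMETHEUS_URL) -> dict:
    """Call the targets endpoint of the Prometheus HTTP API."""
    url = f"{base_url}/api/v1/targets?state=active"
    with urllib.request.urlopen(url, timeout=5) as response:
        return json.load(response)


def unhealthy_targets(payload: dict) -> list:
    """Return (job, instance, last_error) for every active target that is not up."""
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error')}")
    result = []
    for target in payload["data"]["activeTargets"]:
        # health is "up", "down" or "unknown" (not scraped yet).
        if target.get("health") != "up":
            labels = target.get("labels", {})
            result.append((labels.get("job"), labels.get("instance"),
                           target.get("lastError", "")))
    return result
```

`unhealthy_targets(fetch_targets())` should return an empty list once every exporter in prometheus.yml is being scraped successfully.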

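node_down.yml is a custom alerting rule file. It raises an alert when a host goes down or when a particular service is down. Adjust it as needed. Prometheus only loads it if it is listed under `rule_files` in prometheus.yml.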

```yaml
groups:
  - name: node_down
    rules:
      - alert: InstanceDown
        expr: up == 0
        for: 1m
        labels:
          user: test
        annotations:
          summary: "Instance {{ $labels.instance }} down"
          description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."

  - name: node-up
    rules:
      - alert: node-up
        expr: up{job="xxx"} == 0
        for: 15s
        labels:
          severity: 1
          team: node
        annotations:
          summary: "{{ $labels.instance }} has been down for more than 15s!"
```
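The `for` clause separates a brief blip from a real outage. When `up == 0` first returns a series, the alert becomes *pending*. It only becomes *firing*, and is sent to Alertmanager, once the condition has held at every evaluation for the `for` duration. The simplified Python model below replays that logic for a single series. It assumes evaluations happen exactly every `evaluation_interval` (15s in prometheus.yml) and ignores details such as `keep_firing_for`.

```python
def alert_states(up_samples: list, hold_seconds: int,
                 interval_seconds: int = 15) -> list:
    """Replay `expr: up == 0` with `for: <hold_seconds>` over successive evaluations.

    up_samples[i] is the value of `up` at evaluation i, taken every
    interval_seconds. Returns the alert state after each evaluation.
    """
    states = []
    active_since = None  # time at which the condition first became true
    for i, up in enumerate(up_samples):
        now = i * interval_seconds
        if up == 0:
            if active_since is None:
                active_since = now
            held = now - active_since
            states.append("firing" if held >= hold_seconds else "pending")
        else:
            active_since = None  # condition cleared: the alert goes away
            states.append("inactive")
    return states
```

With a 15s interval, `alert_states([1, 0, 0, 0, 0, 0, 1], hold_seconds=60)` gives one `inactive`, four `pending`, one `firing` and a final `inactive`. So InstanceDown fires on the fifth consecutive failing evaluation. The `node-up` rule, with `for: 15s`, would fire on the second.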

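Alertmanager configuration file:

alertmanager.yml mainly sets how often alerts are sent and which email addresses receive them. Besides email, notifications can also go to SMS, WeChat, DingTalk and other channels (some through webhook integrations).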

```yaml
global:
  resolve_timeout: 5m
  smtp_from: '[email protected]'
  smtp_smarthost: 'smtp.126.com:25'
  smtp_auth_username: '[email protected]'
  smtp_auth_password: 'SFSFEVCDFDGD'
  smtp_require_tls: false
  smtp_hello: 'xxx monitoring alerts'

route:
  group_by: ['alertname']
  group_wait: 5s
  group_interval: 5s
  repeat_interval: 5m
  receiver: 'email'

receivers:
  - name: 'email'
    email_configs:
      - to: '[email protected]'
        send_resolved: true
      - to: '[email protected]'
        send_resolved: true
      - to: '[email protected]'
        send_resolved: true
```
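To test the SMTP settings without waiting for a real outage, you can post a hand-made alert to Alertmanager's v2 API (`POST /api/v2/alerts`). Here is a minimal Python sketch, assuming Alertmanager is reachable at 192.168.0.1:9093. The labels are hypothetical and only mirror the InstanceDown rule.

```python
import json
import urllib.request
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumption: Alertmanager from docker-compose.yml listens on this address.
ALERTMANAGER_URL = "http://192.168.0.1:9093"


def build_test_alerts(instance: str, now: Optional[datetime] = None,
                      duration: timedelta = timedelta(minutes=5)) -> list:
    """Return a one-element alert list in the shape /api/v2/alerts expects."""
    now = now or datetime.now(timezone.utc)
    return [{
        "labels": {"alertname": "InstanceDown", "instance": instance,
                   "user": "test"},
        "annotations": {"summary": f"Instance {instance} down (test alert)"},
        # RFC 3339 timestamps; the alert resolves by itself at endsAt.
        "startsAt": now.isoformat(),
        "endsAt": (now + duration).isoformat(),
    }]


def send_alerts(alerts: list, base_url: str = ALERTMANAGER_URL) -> int:
    """POST the alerts as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        url=f"{base_url}/api/v2/alerts",
        data=json.dumps(alerts).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```

The config uses `group_by: ['alertname']` and `group_wait: 5s`, so `send_alerts(build_test_alerts("test-instance"))` should trigger an email within a few seconds. Because `send_resolved: true` is set, a resolution email should follow some time after `endsAt`.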

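Loki configuration:

Loki is a lightweight log aggregation system whose design is very similar to Prometheus. Its main advantage is that it is lightweight. If all you need is to view logs, it is a good fit and a full ELK stack is unnecessary.

local-config.yaml is essentially the official example configuration; modify it as needed. It comes from an older Loki release, and newer releases have removed some of its options (for example `enforce_metric_name`). If the latest `grafana/loki` image fails to start with this file, pin the image to an older 2.x tag.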

```yaml
auth_enabled: false

server:
  http_listen_port: 3100

ingester:
  lifecycler:
    address: 127.0.0.1
    ring:
      kvstore:
        store: inmemory
      replication_factor: 1
    final_sleep: 0s
  chunk_idle_period: 5m
  chunk_retain_period: 30s
  max_transfer_retries: 0

schema_config:
  configs:
    - from: 2018-04-15
      store: boltdb
      object_store: filesystem
      schema: v11
      index:
        prefix: index_
        period: 168h

storage_config:
  boltdb:
    directory: /loki/index
  filesystem:
    directory: /loki/chunks

limits_config:
  enforce_metric_name: false
  reject_old_samples: true
  reject_old_samples_max_age: 168h

chunk_store_config:
  max_look_back_period: 0s

table_manager:
  retention_deletes_enabled: false
  retention_period: 0s
```
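Loki's write path is a plain HTTP endpoint, `/loki/api/v1/push`. This is the same URL Promtail's `clients` setting points at. Pushing a log line by hand is a quick way to check that Loki accepts writes. Here is a minimal Python sketch; the address and the `job` label value are assumptions.

```python
import json
import time
import urllib.request
from typing import Optional

# Assumption: Loki from docker-compose.yml listens on this address.
LOKI_URL = "http://192.168.0.1:3100"


def build_push_body(labels: dict, lines: list,
                    start_ns: Optional[int] = None) -> dict:
    """Build a push payload: one stream identified by labels, one entry per line."""
    start_ns = time.time_ns() if start_ns is None else start_ns
    # Timestamps are Unix epoch nanoseconds encoded as strings; adding i keeps
    # the lines in order even though they share the same starting timestamp.
    values = [[str(start_ns + i), line] for i, line in enumerate(lines)]
    return {"streams": [{"stream": labels, "values": values}]}


def push_logs(labels: dict, lines: list, base_url: str = LOKI_URL) -> int:
    """POST the payload as JSON; Loki answers 204 No Content on success."""
    request = urllib.request.Request(
        url=f"{base_url}/loki/api/v1/push",
        data=json.dumps(build_push_body(labels, lines)).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```

The config sets `reject_old_samples_max_age: 168h`, so entries with timestamps older than seven days are rejected. Use the current time, as `push_logs({"job": "manual_test"}, ["hello"])` does.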

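Promtail configuration

Promtail works much like an exporter. It is deployed on every host whose logs you want to collect, tails the log files, and ships the lines to Loki.

The configuration mainly specifies which services' logs to collect and where those log files are.

docker-config.yaml, the log scraping configuration: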

```yaml
server:
  http_listen_port: 9080
  grpc_listen_port: 0

positions:
  filename: /tmp/positions.yaml

clients:
  - url: http://loki:3100/loki/api/v1/push

scrape_configs:
  - job_name: system
    static_configs:
      - targets:
          - localhost
        labels:
          job: varlogs
          __path__: /var/log/*log # system logs

  - job_name: xxx
    static_configs:
      - targets:
          - localhost
        labels:
          job: xxx
          __path__: /opt/console/*log # console logs of an application

  - job_name: xxx1
    static_configs:
      - targets:
          - localhost
        labels:
          job: xxxInfo
          __path__: /opt/info/*log # info logs of an application

  - job_name: xxx2
    static_configs:
      - targets:
          - localhost
        labels:
          job: xxxError
          __path__: /opt/error/*log # error logs of an application
```
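The idea behind Promtail's `positions` file is simple. For each file it remembers how many bytes have already been shipped, and on the next pass it reads only what was appended. The Python sketch below illustrates that idea for paths matching a glob such as the `__path__` patterns. It is a simplified illustration, not how Promtail itself is implemented: it keeps positions in a plain dict instead of positions.yaml, and it detects truncation only by file size.

```python
import glob
import os


def read_new_lines(path: str, positions: dict) -> list:
    """Return complete lines appended to path since the offset stored in positions."""
    offset = positions.get(path, 0)
    if os.path.getsize(path) < offset:
        offset = 0  # file shrank (truncated or rotated): start from the beginning
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Consume only up to the last newline; a partial line waits for the next pass.
    end = data.rfind(b"\n") + 1
    positions[path] = offset + end
    return data[:end].decode("utf-8", errors="replace").splitlines()


def poll(pattern: str, positions: dict) -> dict:
    """Read new lines from every file matching a glob such as '/opt/info/*log'."""
    return {path: read_new_lines(path, positions)
            for path in sorted(glob.glob(pattern))}
```

Calling `poll("/opt/info/*log", positions)` in a loop with the same `positions` dict yields each complete line exactly once. A real shipper would also persist the offsets, as Promtail does with `/tmp/positions.yaml`.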

local-config.yaml, the default configuration (the compose file starts Promtail with docker-config.yaml instead):

```yaml
server:
  http_listen_port: 9080
  grpc_listen_port: 0

positions:
  filename: /tmp/positions.yaml

clients:
  - url: http://localhost:3100/loki/api/v1/push

scrape_configs:
  - job_name: system
    static_configs:
      - targets:
          - localhost
        labels:
          job: varlogs1
          __path__: /var/log/*log
```

2.4 Starting the stack

Once the compose file and the configuration files are in place, start everything:

```shell
docker-compose up -d
```
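When `docker-compose up -d` returns, the containers are created, but the services inside may still be starting. A small readiness check can probe each component's health endpoint. Prometheus, Alertmanager and Pushgateway expose `/-/ready`, Loki exposes `/ready`, Grafana exposes `/api/health`, and node-exporter is simply checked through `/metrics`. Here is a minimal Python sketch, assuming all services run on 192.168.0.1 with the ports from docker-compose.yml.

```python
import http.client
import urllib.request

# Assumption: all services run on this host with the ports from docker-compose.yml.
HOST = "192.168.0.1"
CHECKS = {
    "prometheus": f"http://{HOST}:9090/-/ready",
    "alertmanager": f"http://{HOST}:9093/-/ready",
    "pushgateway": f"http://{HOST}:9091/-/ready",
    "node-exporter": f"http://{HOST}:9100/metrics",
    "loki": f"http://{HOST}:3100/ready",
    "grafana": f"http://{HOST}:3000/api/health",
}


def http_ok(url: str, timeout: float = 3.0) -> bool:
    """True if the URL answers with a 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException):
        # Covers refused connections, timeouts and non-2xx replies (HTTPError).
        return False


def check_all(checks: dict = CHECKS, probe=http_ok) -> dict:
    """Probe every endpoint and map service name -> ready?"""
    return {name: probe(url) for name, url in checks.items()}
```

`docker-compose ps` shows whether the containers are running, and `check_all()` shows whether the services inside them answer. Loki can report not ready for a short while after start-up, so rerun the check before investigating.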

3 Using Prometheus

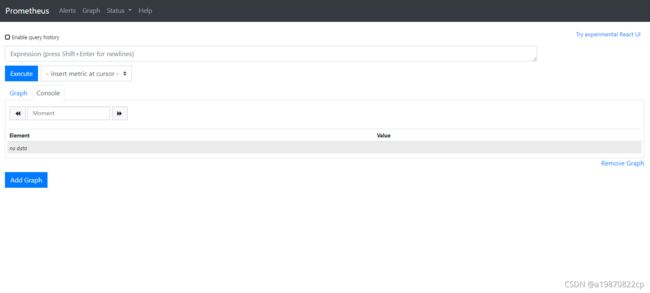

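Open each application in a browser at the host IP and its port.

Prometheus: http://192.168.0.1:9090/. Here you can query the collected data and graph it,

and view the status of each scrape target under Targets, among other things.

Alertmanager: http://192.168.0.1:9093/#/alerts

Here you can view alerts and manage silences.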

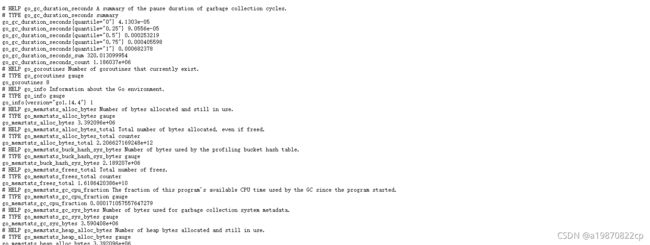

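xxx-exporter: each exporter's metrics can be viewed on its own port (for node-exporter, http://192.168.0.1:9100/metrics).

The page contains raw metrics, which are hard to read directly, so Grafana is used to visualize them.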
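The exporter page is plain text in the Prometheus exposition format: `# HELP` and `# TYPE` comment lines followed by samples of the form `name{label="value",...} value`. The simplified Python parser below shows that structure. It assumes label values contain no `}` and leaves escape sequences untouched. For real parsing, a client library such as `prometheus_client.parser.text_string_to_metric_families` is the safer choice.

```python
import re
import urllib.request

# metric name, optional {labels}, whitespace, value (an optional timestamp is ignored)
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(?P<labels>[^}]*)\})?\s+(?P<value>\S+)')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_metrics(text: str) -> list:
    """Return (name, labels, value) for every sample line in exposition text."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks, HELP and TYPE lines
            continue
        match = SAMPLE_RE.match(line)
        if match is None:
            continue
        labels = dict(LABEL_RE.findall(match.group("labels") or ""))
        # float() also accepts the NaN, +Inf and -Inf values exporters may emit.
        samples.append((match.group("name"), labels, float(match.group("value"))))
    return samples


def fetch_metrics(url: str = "http://192.168.0.1:9100/metrics") -> list:
    """Download an exporter's metrics page and parse it (address is an assumption)."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return parse_metrics(response.read().decode("utf-8"))
```

For example, `[s for s in fetch_metrics() if s[0] == "node_load1"]` would pick out the one-minute load average reported by node-exporter.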

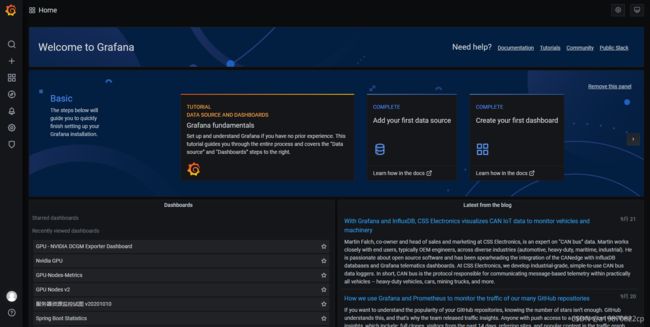

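Grafana: http://192.168.0.1:3000/login

After logging in, add Prometheus as a data source. You can then build your own dashboards and panels, or import ready-made dashboards from Grafana's dashboard library. See the Grafana documentation for details.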
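Adding the data sources can also be scripted through Grafana's HTTP API (`POST /api/datasources`). Grafana, Prometheus and Loki share the `monitor` network in docker-compose.yml, so Grafana can reach them by service name. This sketch assumes Grafana's default `admin`/`admin` login has not been changed yet; the data source names are arbitrary.

```python
import base64
import json
import urllib.request

# Assumptions: Grafana address and default credentials; change them to match yours.
GRAFANA_URL = "http://192.168.0.1:3000"
GRAFANA_USER = "admin"
GRAFANA_PASSWORD = "admin"

# Service names from docker-compose.yml resolve inside the `monitor` network.
DATASOURCES = [
    {"name": "Prometheus", "type": "prometheus", "url": "http://prometheus:9090",
     "access": "proxy", "isDefault": True},
    {"name": "Loki", "type": "loki", "url": "http://loki:3100", "access": "proxy"},
]


def build_datasource_request(datasource: dict, base_url: str = GRAFANA_URL,
                             user: str = GRAFANA_USER,
                             password: str = GRAFANA_PASSWORD) -> urllib.request.Request:
    """Build an authenticated POST /api/datasources request for one data source."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{base_url}/api/datasources",
        data=json.dumps(datasource).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
    )


def create_datasources(datasources: list = DATASOURCES) -> list:
    """Create each data source and return the HTTP status codes."""
    statuses = []
    for ds in datasources:
        with urllib.request.urlopen(build_datasource_request(ds), timeout=5) as resp:
            statuses.append(resp.status)
    return statuses
```

If a data source with the same name already exists, Grafana rejects the request, and `urlopen` raises `HTTPError`. The script is therefore meant to be run once, on a fresh instance.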

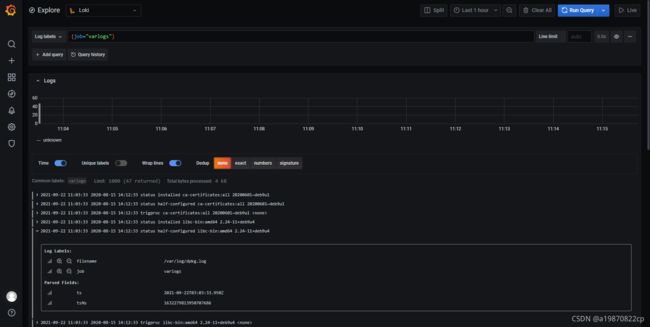

Loki: Loki is also integrated into Grafana. Just switch to the Loki data source.

Select the corresponding job to view its logs.
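Selecting a job in Grafana amounts to a LogQL stream selector such as `{job="xxxError"}`, optionally followed by a line filter like `|= "Exception"`. The same query can be sent directly to Loki's `GET /loki/api/v1/query_range` endpoint. The sketch below only builds that URL; the job name and filter text are placeholders.

```python
import json
import time
from typing import Optional
from urllib.parse import urlencode

# Assumption: Loki from docker-compose.yml listens on this address.
LOKI_URL = "http://192.168.0.1:3100"


def build_query_range_url(job: str, start_ns: int, end_ns: int,
                          contains: Optional[str] = None, limit: int = 100,
                          base_url: str = LOKI_URL) -> str:
    """Build a query_range URL for one job, newest lines first."""
    # json.dumps yields a double-quoted, escaped string literal usable in LogQL.
    query = "{job=%s}" % json.dumps(job)
    if contains:
        query += " |= %s" % json.dumps(contains)
    params = {"query": query, "start": start_ns, "end": end_ns,
              "limit": limit, "direction": "backward"}
    return f"{base_url}/loki/api/v1/query_range?{urlencode(params)}"


def last_hour_url(job: str, contains: Optional[str] = None) -> str:
    """Convenience wrapper: the past hour, using nanosecond epoch timestamps."""
    end = time.time_ns()
    return build_query_range_url(job, end - 3600 * 10**9, end, contains)
```

Opening `last_hour_url("xxxError", "Exception")` in a browser or with `curl` should return the matching lines from the past hour as JSON.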

This completes the Prometheus deployment.