Machine Learning Notes 16: Random Forest (RF) Feature Importance Evaluation with the Boruta Algorithm

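Table of Contents

- 1. Boruta
  - 1.1 Features
  - 1.2 Parameters
  - 1.3 Example
- 2. Feature Importance in Tree-Based Models
  - 2.1 Gini Importance
  - 2.2 Algorithm
  - 2.3 Examples
    - 2.3.1 Combining Three Normally Distributed Variables into One Outcome (Gini Importance)
    - 2.3.2 Permutation Importance
    - 2.3.3 Boruta
      - 2.3.3.1 Algorithm Steps
- 3. Wine Dataset Experiment
  - 3.1 Exploratory Data Analysis
    - 3.1.1 Feature Correlation Analysis (Heatmap)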

1. Boruta

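Boruta is an all-relevant feature selection method, whereas most other methods are minimal-optimal. Boruta tries to find all features that carry information usable for prediction. It does not look for a possibly compact subset of features on which some classifier reaches minimal error.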

Installing the boruta_py package:

```shell
pip install Boruta
```

Dependencies: numpy, scipy, scikit-learn

1.1 Features

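- Faster run time

- Scikit-learn-like interface

- Compatible with any ensemble method from scikit-learn

- Automatic n_estimator selection

- Feature ranking

- Feature importances are derived from Gini impurity (MDI) instead of the MDA used by the RandomForest R package.

Pruned trees with a depth between 3 and 7 are recommended.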

1.2 Parameters

estimator : object

A supervised learning estimator, with a 'fit' method that returns the feature_importances_ attribute.

Important features must correspond to high absolute values in the feature_importances_.

n_estimators : int or string, default = 1000

If int, sets the number of estimators in the chosen ensemble method. If 'auto', this is determined automatically based on the size of the dataset.

The other parameters of the used estimators need to be set with initialisation.

perc : int, default = 100

Instead of the max we use the percentile defined by the user, to pick our threshold for comparison between shadow and real features. The max tends to be too stringent.

This provides finer control over the threshold. The lower perc is, the more false positives will be picked as relevant, but also the fewer relevant features will be left out.

The usual trade-off. The default is essentially the vanilla Boruta corresponding to the max.

alpha : float, default = 0.05 (significance level)

Level at which the corrected p-values will get rejected in both correction steps.

two_step : Boolean, default = True

If you want to use the original implementation of Boruta with Bonferroni correction only, set this to False.

max_iter : int, default = 100 (maximum number of iterations)

The number of maximum iterations to perform.

verbose : int, default=0

Controls verbosity of output.
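The effect of `perc` can be illustrated with a small numpy sketch. This is not BorutaPy's internal code; the helper `shadow_threshold_hits` and the importance values are hypothetical. The sketch compares real-feature importances against a percentile of the shadow-feature importances. Lowering `perc` lowers the threshold, so more features count as hits, which trades fewer missed relevant features for more false positives.

```python
import numpy as np


def shadow_threshold_hits(real_imp, shadow_imp, perc=100):
    """Mark a 'hit' for every real feature whose importance exceeds the
    perc-th percentile of the shadow-feature importances.
    With perc=100 the percentile is the maximum (vanilla Boruta)."""
    threshold = np.percentile(shadow_imp, perc)
    return np.asarray(real_imp) > threshold


# Made-up importances for 4 real and 4 shadow features
real_imp = np.array([0.30, 0.12, 0.08, 0.02])
shadow_imp = np.array([0.10, 0.05, 0.04, 0.01])

strict = shadow_threshold_hits(real_imp, shadow_imp, perc=100)  # threshold = max = 0.10
relaxed = shadow_threshold_hits(real_imp, shadow_imp, perc=50)  # threshold = median = 0.045
print(strict, relaxed)
```

With the default `perc=100`, only the first two features beat the strongest shadow feature. With `perc=50`, the third feature also passes.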

1.3 Example

```python
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from boruta import BorutaPy

# Load the feature matrix and labels as numpy arrays (BorutaPy expects arrays)
X = pd.read_csv('C:/Users/Administrator/Desktop/test_X.csv', index_col=0).values
y = pd.read_csv('C:/Users/Administrator/Desktop/test_y.csv', header=None, index_col=0).values
y = y.ravel()  # flatten the (n, 1) label array to shape (n,)

rf = RandomForestClassifier(n_jobs=-1, class_weight='balanced', max_depth=5)

# Define the Boruta feature selection method
feat_selector = BorutaPy(rf, n_estimators='auto', verbose=2, random_state=1)

# Find all relevant features
feat_selector.fit(X, y)

# Check the selected features
feat_selector.support_

# Check the feature ranking
feat_selector.ranking_

# Call transform() on X to filter it down to the selected features
X_filtered = feat_selector.transform(X)
```

2. Feature Importance in Tree-Based Models

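Random forests are among the most popular machine learning methods, thanks to their relatively good accuracy, robustness and ease of use. They also provide two straightforward feature selection methods: 1. Gini importance, or mean decrease in impurity (MDI); 2. permutation importance, or mean decrease in accuracy (MDA).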

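A newer all-relevant feature selection method is Boruta. It was conceived by Witold R. Rudnicki and developed at the Interdisciplinary Centre for Mathematical and Computational Modelling of the University of Warsaw (ICM UW).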

2.1 Gini Importance

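A random forest consists of many decision trees. Each node in a decision tree is a condition on a single feature. The condition splits the dataset in two so that similar response values end up in the same subset.

The measure used to choose the (locally) optimal condition is called impurity:
1. For classification trees, it is usually Gini impurity or information gain/entropy.
2. For regression trees, it is variance.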
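The three impurity measures named above each take a few lines of numpy. This is a minimal sketch for illustration; the function names are hypothetical, and scikit-learn computes these criteria internally in compiled code.

```python
import numpy as np


def gini_impurity(labels):
    """Gini impurity 1 - sum_k p_k^2 of a node's class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def entropy(labels):
    """Shannon entropy -sum_k p_k * log2(p_k) of a node's class labels, in bits."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()          # only classes present in the node, so p > 0
    return -np.sum(p * np.log2(p))


def variance_impurity(y):
    """Impurity used by regression trees: variance of the node's targets."""
    return np.var(y)


balanced = np.array([0, 0, 1, 1])
print(gini_impurity(balanced))                       # 0.5 for a 50/50 node
print(entropy(balanced))                             # 1.0 bit for a 50/50 node
print(variance_impurity(np.array([1.0, 2.0, 3.0])))  # 2/3
```

A pure node (a single class) has zero Gini impurity and zero entropy, which is why splits that separate the classes reduce impurity.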

In scikit-learn, this impurity-based (Gini) importance is exposed as RandomForestClassifier.feature_importances_ for classification problems and as RandomForestRegressor.feature_importances_ for regression problems.

2.2 Algorithm

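When training a tree, we can compute how much each feature decreases the weighted impurity in the tree:

- Every time a node is split on a variable, the weighted Gini impurity (or entropy) of the two child nodes is lower than that of the parent node.

- Summing the Gini decrease of each individual variable over all trees in the forest gives a fast variable importance. This importance is often very consistent with the permutation importance measure.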
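The sketch below works through the weighted impurity decrease for a single split. It assumes the node-weighting formula N_t/N * (I(t) - N_L/N_t * I(L) - N_R/N_t * I(R)), which scikit-learn documents for `min_impurity_decrease`. The toy feature values, labels and split list are made up. The hypothetical helper `mdi_from_splits` follows the description above: it sums the decreases per feature, then normalizes so the scores add up to 1. scikit-learn's own averaging across trees may differ in detail.

```python
import numpy as np


def gini(labels):
    """Gini impurity of a node's class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def weighted_impurity_decrease(parent, left, right, n_total):
    """N_t/N * (I(parent) - N_L/N_t * I(left) - N_R/N_t * I(right))."""
    n_t = len(parent)
    return (n_t / n_total) * (
        gini(parent)
        - len(left) / n_t * gini(left)
        - len(right) / n_t * gini(right)
    )


def mdi_from_splits(splits, n_features):
    """splits: (feature_index, decrease) pairs collected from every node of every tree."""
    imp = np.zeros(n_features)
    for j, decrease in splits:
        imp[j] += decrease
    return imp / imp.sum()


# Toy root node split on x <= 2.5
x = np.array([1, 2, 3, 4, 5, 6])
y = np.array([0, 0, 1, 1, 1, 0])
mask = x <= 2.5
dec = weighted_impurity_decrease(y, y[mask], y[~mask], n_total=len(y))
print(dec)  # 0.5 - (2/6 * 0.0 + 4/6 * 0.375) = 0.25

# Hypothetical decreases gathered from a small forest with 3 features
imp = mdi_from_splits([(0, 0.25), (1, 0.05), (0, 0.10)], n_features=3)
print(imp)
```

Feature 2 never appears in a split, so its importance is exactly zero. This is also why a feature that is redundant with an already-used one tends to score low.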

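Note:

There are a few things to keep in mind when using impurity-based rankings:

- Feature selection based on impurity reduction is biased toward variables with more categories (see "Bias in random forest variable importance measures").

- Suppose the dataset has two (or more) correlated features. From the model's point of view, any of them can serve as the predictor, with no concrete preference for one over the others. But once one of them is used, the importance of the others drops significantly, because the impurity they could have removed has already been removed by the first feature. As a result, their reported importance is lower. This is not a problem when the goal of feature selection is to reduce overfitting, since it makes sense to remove features that are largely duplicated by other features. When interpreting the data, however, it can lead to a wrong conclusion. One variable may look like a strong predictor and the others in the same group like unimportant ones, when in fact they are all closely related to the response variable.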

2.3 Examples

2.3.1 Combining Three Normally Distributed Variables into One Outcome (Gini Importance)

import sys

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.ensemble import RandomForestClassifier

from sklearn.ensemble import RandomForestRegressor

from sklearn.svm import SVC

from sklearn.linear_model import SGDClassifier

from sklearn.metrics import confusion_matrix, classification_report

from sklearn.preprocessing import StandardScaler, LabelEncoder

from sklearn.model_selection import train_test_split, GridSearchCV, cross_val_score

from sklearn.model_selection import RandomizedSearchCV

from sklearn.metrics import accuracy_score

from collections import defaultdict

from sklearn.metrics import r2_score

sys.path.insert(0, 'boruta_py-master/boruta')

from boruta import BorutaPy

sys.path.insert(0, 'random-forest-importances-master/src')

from rfpimp import *

%matplotlib inline

wine = pd.read_csv("C:/Users/Administrator/Desktop/winequality-red.csv")

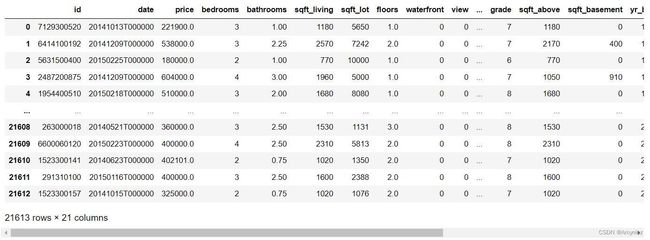

house = pd.read_csv("C:/Users/Administrator/Desktop/kc_house_data.csv")

size = 10000

np.random.seed(seed=10)

# normal正态分布:

# 1.param1:正态分布的均值,对应着这个分布的中心

# 2.param2:正态分布的标准差,对应分布的宽度,值越大,正态分布的曲线越矮胖,值越小,曲线越高瘦。

# 3.param3:输出的值赋在shape里,默认为None。

X_seed = np.random.normal(0, 1, size) # (10000,)

X0 = X_seed + np.random.normal(0, .1, size)

X1 = X_seed + np.random.normal(0, .1, size)

X2 = X_seed + np.random.normal(0, .1, size)

X = np.array([X0, X1, X2]).T

Y = X0 + X1 + X2

rf = RandomForestRegressor(n_estimators=20, max_features=2)

rf.fit(X, Y);

print("Scores for X0, X1, X2: {}".format(rf.feature_importances_))

Scores for X0, X1, X2: [0.29792321 0.52388941 0.17818738]

2.3.2 Permutation Importance

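For each column, the permutation is repeated 10 times to get a better estimate of the mean decrease in accuracy (MDA), and the standard deviation of that estimate is also computed.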

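Note that `metric` has two possible values:

- oob_classifier_accuracy: computes the out-of-bag (OOB) accuracy of a scikit-learn random forest classifier.

- oob_regression_r2_score: computes the out-of-bag (OOB) R² score of a scikit-learn random forest regressor.

Both functions are implemented in rfpimp.py.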

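Using OOB samples to compute permutation importance has a strong negative impact on performance. With OOB samples, predictions require a Python loop over the trees instead of the highly vectorized scikit-learn/numpy prediction code.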
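Each tree is trained on a bootstrap sample drawn with replacement, and the rows never drawn for that tree are its out-of-bag rows. Because every tree has a different OOB set, OOB scoring has to visit the trees one by one. The minimal numpy sketch below shows this; it is not scikit-learn's internal code, and the helper name `bootstrap_oob_indices` is hypothetical. On average roughly 1/e (about 36.8%) of the rows are out of bag for each tree.

```python
import numpy as np


def bootstrap_oob_indices(n_samples, rng):
    """Draw one bootstrap sample (rows drawn with replacement) for a single
    tree and return (in_bag_indices, oob_indices)."""
    in_bag = rng.integers(0, n_samples, size=n_samples)
    oob_mask = np.ones(n_samples, dtype=bool)
    oob_mask[in_bag] = False          # rows drawn at least once are in-bag
    return in_bag, np.flatnonzero(oob_mask)


rng = np.random.default_rng(0)
n = 10_000
oob_fractions = []
for _ in range(20):                   # 20 hypothetical trees, each with its own OOB rows
    _, oob = bootstrap_oob_indices(n, rng)
    oob_fractions.append(len(oob) / n)

print(np.mean(oob_fractions))         # close to 1/e, about 0.368
```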

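```python
def permutation_importances(rf, X_train, y_train, metric):
    # Baseline score of the fitted model on the unpermuted data
    baseline = metric(rf, X_train, y_train)
    imp = []
    std = []
    for col in X_train.columns:
        tmp = []
        for i in range(10):
            save = X_train[col].copy()
            # np.random.permutation() returns a randomly shuffled copy of the column
            X_train[col] = np.random.permutation(X_train[col])
            m = metric(rf, X_train, y_train)
            X_train[col] = save  # restore the original column
            tmp.append(m)
        # Importance = mean drop of the metric; std measures its spread over the 10 repeats
        imp.append(baseline - np.mean(tmp))
        std.append(np.std(tmp))
    return np.array(imp), np.array(std)
```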
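To check the idea without a forest or rfpimp, here is a self-contained numpy variant of the function above. It makes several assumptions. A fixed linear model (`FixedLinearModel`, hypothetical) stands in for the random forest. An in-sample R² (`r2`) stands in for `oob_regression_r2_score`, so no OOB rows are involved. Unlike the original, it shuffles a copy instead of mutating the input in place.

```python
import numpy as np


class FixedLinearModel:
    """Stand-in 'model' with known coefficients (hypothetical, for illustration)."""

    def __init__(self, coef):
        self.coef = np.asarray(coef, dtype=float)

    def predict(self, X):
        return X @ self.coef


def r2(model, X, y):
    """Coefficient of determination of model.predict(X) against y."""
    resid = y - model.predict(X)
    return 1.0 - np.sum(resid ** 2) / np.sum((y - y.mean()) ** 2)


def permutation_importance_np(model, X, y, metric, n_repeats=10, seed=0):
    """Mean and std of the metric drop when each column is shuffled n_repeats times."""
    rng = np.random.default_rng(seed)
    baseline = metric(model, X, y)
    imp, std = [], []
    for j in range(X.shape[1]):
        scores = []
        for _ in range(n_repeats):
            X_perm = X.copy()                         # leave the caller's X untouched
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            scores.append(metric(model, X_perm, y))
        imp.append(baseline - np.mean(scores))
        std.append(np.std(scores))
    return np.array(imp), np.array(std)


rng = np.random.default_rng(42)
X = rng.normal(size=(2000, 3))
y = 3.0 * X[:, 0] + 1.0 * X[:, 1]                     # column 2 is pure noise
model = FixedLinearModel([3.0, 1.0, 0.0])
imp, std = permutation_importance_np(model, X, y, r2)
print(imp, std)
```

Shuffling column 0 destroys most of the explained variance, column 1 costs much less, and column 2 costs nothing because the model ignores it.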

2.3.3 Boruta

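Boruta is the name of an R package that implements a novel feature selection algorithm. Like permutation importance, it randomly permutes variables. Unlike it, Boruta permutes all variables at once and concatenates the shuffled features with the original ones. It then iteratively removes the features that a statistical test shows to be less relevant than these random probes.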
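The shadow-feature construction can be sketched in numpy. Each column is shuffled independently, which keeps its distribution but breaks any link to the target, and the shuffled copy is appended to the original matrix. The helper name `add_shadow_features` is hypothetical; this is not BorutaPy's code.

```python
import numpy as np


def add_shadow_features(X, rng):
    """Return [X | X_shadow]: every shadow column is an independently
    shuffled copy of the matching real column."""
    X_shadow = X.copy()
    for j in range(X_shadow.shape[1]):
        X_shadow[:, j] = rng.permutation(X_shadow[:, j])
    return np.hstack([X, X_shadow])


rng = np.random.default_rng(0)
X = np.arange(12, dtype=float).reshape(4, 3)   # toy data: 4 rows, 3 real features
X_ext = add_shadow_features(X, rng)
print(X_ext.shape)  # (4, 6): 3 real + 3 shadow columns
```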

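2.3.3.1 Algorithm Steps

- First, we duplicate the dataset and shuffle the values in each column. These shuffled columns are called shadow features.

- Then we train a classifier on the combined dataset (original plus shadow features) to obtain an importance for every feature.

- Next, we check whether each real feature's importance is higher than that of the best shadow feature.

- If it is, we record this in a vector (these records are called hits) and continue with the next iteration.

- In every iteration, we run a statistical test to check whether a given feature does better than expected by random chance. We simply compare the number of times a feature beat the best shadow feature against a binomial distribution.

- Features that confidently outperform the shadow features are marked "confirmed". Features that confidently underperform them are marked "rejected" and removed from the original data matrix. The remaining features stay "tentative".

- We stop after a set number of iterations, or earlier if every feature has been either confirmed or rejected.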
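Under the null hypothesis, a feature is no better than the best shadow feature. It then scores a hit with probability 0.5 in each iteration, so its hit count after n iterations follows Binomial(n, 0.5). The pure-Python sketch below applies that test with `math.comb`. It deliberately applies no multiple-testing correction, and the hit counts and `alpha` are arbitrary. The helper names are hypothetical and are not BorutaPy's API.

```python
from math import comb


def binom_sf(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))


def binom_cdf(k, n, p=0.5):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(0, k + 1))


def boruta_decision(hits, n_iter, alpha=0.05):
    """Classify one feature from its hit count after n_iter iterations (uncorrected)."""
    if binom_sf(hits, n_iter) < alpha:     # too many hits to be chance
        return "confirmed"
    if binom_cdf(hits, n_iter) < alpha:    # too few hits to be chance
        return "rejected"
    return "tentative"


for hits in (18, 15, 14, 10, 2):
    print(hits, boruta_decision(hits, n_iter=20))
```

After 20 iterations, 15 hits is already significant (P ≈ 0.021), while 14 hits is not (P ≈ 0.058). The feature with 14 hits stays tentative until more iterations accumulate evidence.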

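Because we may be testing thousands of features, the statistical test needs a correction for multiple testing. The original method uses the rather conservative Bonferroni correction for this. The Python implementation relaxes the correction into a two-step process instead of the harsh one-step Bonferroni correction.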
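A pure-Python comparison shows why a two-step scheme is less harsh. It contrasts one-step Bonferroni with the Benjamini-Hochberg false discovery rate (FDR) procedure. BorutaPy's README describes its first step as a Benjamini-Hochberg FDR correction across features and its second step as a Bonferroni correction across iterations. This sketch is not the library's code, and the p-values are made up.

```python
def bonferroni_reject(pvals, alpha=0.05):
    """One-step Bonferroni: reject H0_i when p_i < alpha / m."""
    m = len(pvals)
    return [p < alpha / m for p in pvals]


def benjamini_hochberg_reject(pvals, alpha=0.05):
    """Benjamini-Hochberg: find the largest rank k with p_(k) <= k/m * alpha
    and reject the k smallest p-values."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= rank / m * alpha:
            k_max = rank
    reject = [False] * m
    for i in order[:k_max]:
        reject[i] = True
    return reject


pvals = [0.001, 0.008, 0.012, 0.030, 0.200]
print(bonferroni_reject(pvals))           # threshold 0.05 / 5 = 0.01
print(benjamini_hochberg_reject(pvals))
```

With the same five p-values, Bonferroni keeps two discoveries while Benjamini-Hochberg keeps four. This is the extra power the relaxed correction buys when many features are tested at once.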

Although Boruta is a feature selection algorithm, the order in which features are confirmed or rejected can be used to rank feature importance.
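The following is one possible scheme for turning that order into a ranking, as a hypothetical sketch. Confirmed features come first (earlier confirmation ranks higher), then tentative ones, then rejected ones (later rejection ranks higher, since the feature survived longer). BorutaPy's `ranking_` attribute, used in section 1.3, is computed by the library itself, and this sketch does not reproduce it. The feature names and iteration numbers are made up.

```python
def rank_by_decision_order(events):
    """events: dict mapping feature -> (status, iteration), with status in
    {'confirmed', 'tentative', 'rejected'}; returns features, most important first."""
    def key(item):
        _, (status, iteration) = item
        if status == "confirmed":
            return (0, iteration)        # confirmed earlier = stronger evidence
        if status == "tentative":
            return (1, 0)
        return (2, -iteration)           # rejected later = survived longer
    return [name for name, _ in sorted(events.items(), key=key)]


events = {
    "f0": ("confirmed", 3),
    "f1": ("confirmed", 5),
    "f2": ("tentative", None),
    "f3": ("rejected", 4),
    "f4": ("rejected", 12),
}
print(rank_by_decision_order(events))  # ['f0', 'f1', 'f2', 'f4', 'f3']
```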

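3. Wine Dataset Experiment

Data description: this is the wine quality dataset; the first of its datasets concerns the red variant of the Portuguese "Vinho Verde" wine. Only physicochemical (input) and sensory (output) variables are available. There is no data about grape types, wine brand, selling price, etc.

The classes are ordered but imbalanced: there are many more normal wines than excellent or poor ones.

Input continuous variables (based on physicochemical tests): fixed acidity, volatile acidity, citric acid, residual sugar, chlorides, free sulfur dioxide, total sulfur dioxide, density, pH, sulphates, alcohol

Output categorical variable (based on sensory data): quality (score between 0 and 10)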

3.1 Exploratory Data Analysis

Split the problem into a binary classification task:

```python
# Count of each target value
sns.countplot(x='quality', data=wine)

# Turn the response variable into a binary classification target
# by splitting wines into good and bad at a quality cut-off:
# bad: quality <= 6
# good: quality >= 7
bins = (2, 6.5, 8)
group_names = ['bad', 'good']
wine['quality'] = pd.cut(wine['quality'], bins=bins, labels=group_names)

# Encode the quality labels as integers
label_quality = LabelEncoder()
# bad becomes 0, good becomes 1
wine['quality'] = label_quality.fit_transform(wine['quality'])

# Count of the new response variable
sns.countplot(x='quality', data=wine)
```
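The binning above reduces to a single cut-off. This minimal pure-Python sketch mirrors `pd.cut` with bins `(2, 6.5, 8]` followed by `LabelEncoder`. The encoder orders 'bad' before 'good' alphabetically, hence 0 and 1. The helper name `to_binary_quality` is hypothetical. Unlike `pd.cut`, it does not return NaN for scores outside (2, 8].

```python
def to_binary_quality(score, cutoff=6.5):
    """Map a quality score to 0 ('bad', score <= 6.5) or 1 ('good', score > 6.5)."""
    return int(score > cutoff)


scores = [3, 4, 5, 6, 7, 8]
print([to_binary_quality(s) for s in scores])  # [0, 0, 0, 0, 1, 1]
```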

```python
# One KDE panel per physicochemical feature, comparing bad (0) and good (1) wines.
# sns.distplot is deprecated; sns.kdeplot(fill=True) draws an equivalent shaded density curve.
features = ['fixed acidity', 'volatile acidity', 'sulphates', 'citric acid',
            'residual sugar', 'chlorides', 'free sulfur dioxide',
            'total sulfur dioxide', 'density', 'pH', 'alcohol']

fig, ax = plt.subplots(nrows=3, ncols=5, figsize=(15, 10))
for axis, feature in zip(ax.flat, features):
    sns.kdeplot(wine.loc[wine['quality'] == 0, feature], fill=True, linewidth=3,
                label='Bad Quality', ax=axis)
    sns.kdeplot(wine.loc[wine['quality'] == 1, feature], fill=True, linewidth=3,
                label='Good Quality', ax=axis)
    # Attach the legend to this subplot (plt.legend would only target the current axes)
    axis.legend(loc=0, prop={'size': 8})

fig.tight_layout()
plt.subplots_adjust(wspace=.3, hspace=.3)

# Remove the four unused subplots in the last row
for axis in ax.flat[len(features):]:
    fig.delaxes(axis)
```

The density plots show:

The features that best explain wine quality appear to be alcohol, sulphates, volatile acidity and citric acid.

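3.1.1 Feature Correlation Analysis (Heatmap)

```python
corr = wine.drop('quality', axis=1).corr()

# Generate a mask for the upper triangle
# (np.bool was removed in NumPy 1.24; use the builtin bool instead)
mask = np.zeros_like(corr, dtype=bool)
mask[np.triu_indices_from(mask)] = True

f, ax = plt.subplots(figsize=(10, 10))

# Generate a custom diverging colormap
cmap = sns.diverging_palette(220, 10, as_cmap=True)

# Draw the heatmap with the mask and the correct aspect ratio
sns.heatmap(corr, mask=mask, cmap=cmap, center=0,
            square=True, linewidths=.5, cbar_kws={"shrink": .5})
```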

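The heatmap shows:

- No pair of features is highly correlated, so there is little risk of multicollinearity.

- Positively correlated features: "total sulfur dioxide" and "free sulfur dioxide", "fixed acidity" and "citric acid", "fixed acidity" and "density".

- Negatively correlated features: "pH" and "fixed acidity", "pH" and "citric acid", "citric acid" and "volatile acidity".

These correlations are probably caused by physical relationships between the features.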
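The heatmap is read by eye. A quick numeric check is to list the feature pairs whose absolute Pearson correlation exceeds a cut-off, scanning only the off-diagonal upper triangle. The 0.7 cut-off and the helper `strong_pairs` are assumptions for illustration, and the data below is synthetic. On the wine data, one would pass `wine.drop('quality', axis=1).values` and its column names instead.

```python
import numpy as np


def strong_pairs(data, names, threshold=0.7):
    """Return (name_i, name_j, r) for column pairs with |Pearson r| > threshold,
    scanning the upper triangle above the diagonal."""
    corr = np.corrcoef(data, rowvar=False)          # columns are variables
    rows, cols = np.triu_indices_from(corr, k=1)
    return [(names[i], names[j], round(float(corr[i, j]), 3))
            for i, j in zip(rows, cols) if abs(corr[i, j]) > threshold]


rng = np.random.default_rng(0)
a = rng.normal(size=500)
data = np.column_stack([a, a + 0.1 * rng.normal(size=500), rng.normal(size=500)])
pairs = strong_pairs(data, ["a", "a_noisy", "noise"])
print(pairs)  # only the ("a", "a_noisy") pair, with r close to 0.995
```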