AN OPEN-SOURCE SPEAKER GENDER DETECTION FRAMEWORK FOR MONITORING GENDER EQUALITY



David Doukhan, Jean Carrive

French National Institute of Audiovisual (INA), Paris, France

Félicien Vallet†, CNIL, Paris, France

Anthony Larcher, Sylvain Meignier

LIUM - Université du Maine, Le Mans, France

This paper presents an approach based on acoustic analysis to describe gender equality in French audiovisual streams through the estimation of male and female speaking time. Gender detection systems based on Gaussian Mixture Models (GMM), i-vectors and Convolutional Neural Networks (CNN) were trained on an internal database of 2,284 French speakers and evaluated on the REPERE challenge corpus. The CNN system obtained the best performance, with a frame-level gender detection F-measure of 96.52 and an hourly women speaking time percentage error below 0.6%. It was considered reliable enough to produce large-scale descriptions of gender equality. The proposed gender detection system has been packaged as an open-source framework.



1. INTRODUCTION


Gender equality in media is a concern that has been analyzed through various descriptors, such as the proportion of male and female employees in media (journalists, top decision-making posts) [1], speaker roles (expert, interviewer), topics covered [2], or the correlation between the proportion of male and female speakers and audience size [3]. Among these descriptors, male and female speaking time has been used in the world-scale Global Media Monitoring Project study [4]. Due to the cost of manually annotating speech, this monitoring is restricted to the analysis of a single day every five years and is limited to news-related content. Such a limitation induces biases: the particular context in which the measures are made may influence the topics covered in the news, as well as the number of male and female speakers. Moreover, it describes a limited set of programs that does not fully reflect audiovisual diversity.


In the present work, we design an automatic speaker gender detection system for large-scale monitoring of raw audiovisual streams, in order to reduce the bias associated with a particular measurement context. Quantitative estimates of speaking time by gender may help describe the evolution of gender equality over time and could be used to guide qualitative studies. They could also enable TV channels and radio stations to monitor gender equality in their programs. The long-term goal of this research rests on the assumption that a quantitative description of gender equality in media may raise awareness of these issues and result in societal change.


Because it is considered easier than other automatic speech-related tasks, the difficulty of gender detection is often underestimated. Female speech is generally associated with higher pitch and with vowel formants located at higher frequencies, and is considered more breathy. Language-dependent acoustic variations between male and female speakers have also been reported. The distinction between male and female speech is therefore due not only to anatomical differences, but also to a given socio-cultural context [5]. Consequently, the robustness of speech gender detection systems is still challenged by speakers with marked accents (regional, foreign), extreme pitch ranges, or non-standard intonation.


This study addresses three major issues: Which methods result in the most accurate speech-time estimates? Is the robustness of automatic systems sufficient to produce reliable gender equality descriptions based on the percentage of speech time of men and women? What knowledge can be gathered from audiovisual streams using this information?


2. RELATION TO PRIOR WORK

Speech gender detection systems have long been used as a preprocessing step in Automatic Speech Recognition (ASR) engines, allowing the selection of gender-dependent acoustic models [6, 7]. Gender detection was not necessarily considered an end in itself, but rather a way of selecting the models whose acoustic properties are closest to the utterance being analyzed. More recent studies have used speaker gender detection as a preprocessing step for selecting gender-specific emotion recognition engines [8], and as a way of enhancing human-machine interactions by defining gender-specific interaction strategies [9].


Gaussian Mixture Models (GMM) trained with MFCCs have been the de facto standard for speech gender recognition since the 1990s and are still used in most modern systems [6]. Approaches based on i-vectors, compact representations of a speaker's utterances, have been successfully used over the last few years for speaker recognition [10]. They have recently outperformed GMMs for speaker gender detection in a setting where already-segmented utterances were assumed to belong to a single speaker [9]. Other approaches based on Convolutional Neural Networks (CNN) were proposed and evaluated on utterances assumed to belong to a single speaker [11]. However, those approaches were trained on limited amounts of data, which does not allow them to take advantage of deep architectures.


The study presented in this paper aims at designing a speaker gender detection system for large-scale analysis of raw audio streams. For this purpose, we compare the performance of GMM, i-vector and CNN-based systems. Audiovisual streams contain speech recorded in various conditions (telephone of variable quality, studio, spontaneous and prepared speech, speech over music, speech over noise), which should be handled robustly. While GMM and CNN approaches may be applied directly to speech streams, i-vector-based systems require a preliminary speaker segmentation step, in charge of splitting speech excerpts into homogeneous segments assumed to belong to a single speaker. The reliability of this pre-segmentation stage may affect the discriminative power of the resulting i-vectors.


3. TRAINING DATASET: INA’S SPEAKER DICTIONARY

Gender detection models were trained using INA’s Speaker Dictionary [12, 13], which is, to our knowledge, the largest manually annotated speaker database built from broadcast material reported in the literature. It provides a definite technological advantage regardless of the algorithmic strategy used (see [14] for a recent review of speaker databases). This audiovisual corpus, constituted using a semi-automatic annotation protocol, contains about 32,000 speech excerpts, corresponding to 1,780 male speakers (94 hours) and 494 female speakers (27 hours).


A first set of excerpts was collected from TV news broadcast from 2007 to 2014. Speech streams were first segmented into speaker turns using LIUM’s open-source software SpkDiarization, based on HAC-BIC clustering and Viterbi decoding [15]. An Optical Character Recognition system was used to match personality names appearing on screen to referenced people [16]. The corresponding excerpts were then presented to annotators in charge of validating them. A second set of archives was obtained from INA’s collections, covering the period from 1957 to 2012. Queries based on famous personalities' names were performed on the Ina.fr website. Speaker diarization was applied to the resulting archives, and segments found in the largest speaker clusters were presented to annotators. The last set of excerpts was obtained from 3 radio stations recorded from 2012 to 2014. Extracted speech turns were represented by i-vectors and matched to previously known speakers before being manually validated.


4. GENDER DETECTION SYSTEMS


The gender detection processing pipeline is composed of several modules. A CNN-based speech/music segmenter is used to discard music and empty segments [17]. Features corresponding to speech segments are then computed using a common extraction framework. A simple energy threshold is used to discard low-energy frames. GMM, i-vector and CNN systems are then used to classify the remaining speech segments into male and female excerpts.
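The frame selection performed before gender classification can be sketched as follows. This is a minimal illustration, not the framework's code: the segment labels, the dB energy scale and the rule "keep frames within 30 dB of the segment's peak energy" are assumptions, since the exact energy threshold is not reported here.

```python
from typing import List, Sequence, Tuple

# (label, start_frame, end_frame), end exclusive; label strings are hypothetical.
Segment = Tuple[str, int, int]


def drop_low_energy(
    energy_db: Sequence[float], start: int, end: int, margin_db: float = 30.0
) -> List[int]:
    """Keep frames of [start, end) within `margin_db` of the segment's peak energy.

    The relative threshold is an assumed rule, not the paper's exact criterion.
    """
    if start >= end:
        return []
    peak = max(energy_db[start:end])
    return [i for i in range(start, end) if energy_db[i] >= peak - margin_db]


def frames_to_classify(
    segments: Sequence[Segment], energy_db: Sequence[float], margin_db: float = 30.0
) -> List[int]:
    """Indices of frames passed on to the GMM, i-vector or CNN gender classifier."""
    kept: List[int] = []
    for label, start, end in segments:
        if label != "speech":  # music and empty segments are discarded
            continue
        kept.extend(drop_low_energy(energy_db, start, end, margin_db))
    return kept


segments = [("music", 0, 3), ("speech", 3, 8)]
energy = [60.0, 60.0, 60.0, 55.0, 10.0, 50.0, 52.0, 20.0]
print(frames_to_classify(segments, energy))  # [3, 5, 6]
```

Frames 4 and 7 fall more than 30 dB below the speech segment's 55 dB peak and are dropped; the music frames never reach the energy test.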


4.1. Feature Extraction


Feature extraction was performed with SIDEKIT [18] on audio excerpts sampled at 16 kHz. 24 Mel-scaled filter-bank coefficients were computed on 25 ms sliding windows with a 10 ms shift. Those coefficients were fed directly to the CNN models, while a second set of acoustic features was obtained by applying a Discrete Cosine Transform (DCT) to the filter-bank coefficients to extract 19 Mel-Frequency Cepstral Coefficients (MFCC), augmented with log energy. A feature warping method was used to gaussianize MFCC distributions over 3-second windows [19]. Time derivative (∆) and acceleration (∆∆) coefficients were concatenated to the MFCC frames, resulting in the 60-dimensional input features used by the GMM and i-vector models.
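The MFCC branch described above can be sketched in plain Python to make the 60-dimensional layout concrete: 19 cepstral coefficients plus log energy (20 values), feature-warped, then extended with ∆ and ∆∆ coefficients (3 × 20 = 60). At 16 kHz, each 25 ms window spans 400 samples and the 10 ms shift is 160 samples, so a 3-second warping window covers 300 frames. This is not SIDEKIT's implementation: dropping the 0th cepstral coefficient, the orthonormal DCT-II, the rank-based warping details and the ±2-frame regression window for derivatives are assumptions made for illustration.

```python
import math
import statistics
from typing import List, Sequence


def dct_ii(x: Sequence[float], n_out: int) -> List[float]:
    """Orthonormal DCT-II of x, keeping the first n_out coefficients."""
    n = len(x)
    coeffs = []
    for k in range(n_out):
        scale = math.sqrt((1.0 if k == 0 else 2.0) / n)
        coeffs.append(
            scale * sum(v * math.cos(math.pi * k * (2 * i + 1) / (2 * n)) for i, v in enumerate(x))
        )
    return coeffs


def static_features(log_fbank: Sequence[float], log_energy: float, n_ceps: int = 19) -> List[float]:
    """c1..c19 from 24 log filter-bank values (c0 dropped, an assumption) plus log energy."""
    return dct_ii(log_fbank, n_ceps + 1)[1:] + [log_energy]


def feature_warp(values: List[float], win: int = 300) -> List[float]:
    """Map each value's rank inside a ~3 s window (300 frames) to a N(0, 1) quantile."""
    normal = statistics.NormalDist()
    n = len(values)
    warped = []
    for t, v in enumerate(values):
        hi = min(n, max(t - win // 2, 0) + win)  # window roughly centred on frame t
        lo = max(0, hi - win)
        window = values[lo:hi]
        rank = sum(1 for w in window if w < v)
        warped.append(normal.inv_cdf((rank + 0.5) / len(window)))
    return warped


def deltas(frames: List[List[float]], width: int = 2) -> List[List[float]]:
    """Regression-based time derivatives over +/- width frames, clamping at the edges."""
    n, dims = len(frames), len(frames[0])
    denom = 2 * sum(k * k for k in range(1, width + 1))
    return [
        [
            sum(
                k * (frames[min(n - 1, t + k)][d] - frames[max(0, t - k)][d])
                for k in range(1, width + 1)
            )
            / denom
            for d in range(dims)
        ]
        for t in range(n)
    ]


def mfcc_60(log_fbank_frames: List[List[float]], log_energies: List[float]) -> List[List[float]]:
    """Static (20) + delta (20) + delta-delta (20) = 60 values per frame."""
    static = [static_features(f, e) for f, e in zip(log_fbank_frames, log_energies)]
    # Warp each of the 20 dimensions independently along time.
    columns = [feature_warp([frame[d] for frame in static]) for d in range(len(static[0]))]
    warped = [list(row) for row in zip(*columns)]
    d1 = deltas(warped)
    d2 = deltas(d1)
    return [s + a + b for s, a, b in zip(warped, d1, d2)]
```

The raw 24-dimensional filter-bank frames are what the CNN consumes; only the GMM and i-vector systems use the `mfcc_60` output.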


4.2. Gaussian Mixture Models


GMMs are the most common approach for modeling speaker gender, and were used as a baseline for evaluating more complex models. Female and male GMMs were trained with 1,024 Gaussians and diagonal covariance matrices. Each GMM was trained using 2,000 randomly selected frames per speaker of the corresponding gender found in the training set. The resulting models were used in a 2-state Hidden Markov Model (HMM) to segment speech into male and female excerpts.
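Segmenting with a 2-state HMM amounts to a Viterbi search over per-frame male and female log-likelihoods. The sketch below assumes uniform initial probabilities and a single hypothetical `switch_penalty` (a log-domain cost for changing state between frames), since the HMM transition probabilities are not reported; the same decoder can be applied to per-frame CNN log-posteriors.

```python
from typing import List, Sequence


def viterbi_two_states(
    ll_male: Sequence[float], ll_female: Sequence[float], switch_penalty: float = 10.0
) -> List[str]:
    """Most likely male/female state sequence given per-frame log-likelihoods.

    Uniform initial probabilities; `switch_penalty` is an assumed log-domain
    cost for changing state between consecutive frames.
    """
    labels = ("male", "female")
    lls = (ll_male, ll_female)
    n = len(ll_male)
    if n == 0:
        return []
    score = [lls[0][0], lls[1][0]]
    backptr: List[List[int]] = []
    for t in range(1, n):
        new_score, ptr = [], []
        for s in (0, 1):
            stay, switch = score[s], score[1 - s] - switch_penalty
            ptr.append(s if stay >= switch else 1 - s)  # best predecessor of state s
            new_score.append(max(stay, switch) + lls[s][t])
        score = new_score
        backptr.append(ptr)
    state = 0 if score[0] >= score[1] else 1
    path = [state]
    for ptr in reversed(backptr):  # backtrack from the last frame
        state = ptr[state]
        path.append(state)
    return [labels[s] for s in reversed(path)]


male = [0.0, 0.0, -1.0, 0.0, 0.0]
female = [-1.0, -1.0, 0.0, -1.0, -1.0]
print(viterbi_two_states(male, female))  # ['male', 'male', 'male', 'male', 'male']
print(viterbi_two_states(male, female, switch_penalty=0.0))  # ['male', 'male', 'female', 'male', 'male']
```

The penalty smooths the output: a single frame that slightly favours the female model is not worth two state changes, whereas without a penalty the decoder reduces to a per-frame argmax.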


4.3. I-vector gender segmentation system


Our i-vector-based gender segmentation system is composed of three main modules. A speaker diarization module is first used to segment speech into homogeneous speaker turns. An i-vector extractor is then used to model each speaker turn as a compact vector, assumed to contain discriminative speaker information. Lastly, a classifier trained on i-vectors predicts the gender associated with a given speaker turn.


The diarization procedure was implemented using S4D [20]. Speech was pre-segmented using the Gaussian Divergence criterion with a 2.5-second window. Segments assumed to belong to the same speaker were merged through two clustering procedures based on the Bayesian Information Criterion: a first Linear clustering procedure merging adjacent candidates, followed by a global Hierarchical Agglomerative Clustering procedure. Viterbi re-segmentation was then applied to the resulting clusters. The clustering algorithms and Viterbi re-segmentation were used with default thresholds (2, 3, -250). I-vector extraction was performed using SIDEKIT [18]. A 1,024-Gaussian Universal Background Model (UBM) with diagonal covariance matrices was trained using 2,500 randomly selected frames per female speaker and 700 frames per male speaker, in order to compensate for the gender imbalance of the training dataset. The total variability matrix was defined with rank 200 and trained using 10 randomly selected excerpts per speaker. The training excerpts used for estimating the total variability matrix were also used to extract i-vectors, which were fed into a linear SVM in charge of predicting speaker gender. Training examples were weighted according to the gender distribution of the training set.
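The per-gender frame counts used for the UBM compensate almost exactly for the speaker imbalance of INA's Speaker Dictionary: 494 × 2,500 = 1,235,000 female frames versus 1,780 × 700 = 1,246,000 male frames. The sketch below checks this arithmetic and shows one plausible weighting of the SVM training examples, inverse-frequency weights as in scikit-learn's `class_weight="balanced"`. The exact weighting scheme is not specified, so this choice is an assumption, as is the simplification of exactly 10 i-vectors per speaker.

```python
from typing import Dict

# Speaker counts reported for INA's Speaker Dictionary.
SPEAKERS = {"female": 494, "male": 1780}
# Frames drawn per speaker for UBM training.
UBM_FRAMES_PER_SPEAKER = {"female": 2500, "male": 700}


def ubm_frame_totals() -> Dict[str, int]:
    """Total number of UBM training frames contributed by each gender."""
    return {g: SPEAKERS[g] * UBM_FRAMES_PER_SPEAKER[g] for g in SPEAKERS}


def balanced_class_weights(counts: Dict[str, int]) -> Dict[str, float]:
    """Inverse-frequency weights n_total / (n_classes * n_class); assumed scheme,
    matching the formula behind scikit-learn's class_weight="balanced"."""
    total = sum(counts.values())
    return {label: total / (len(counts) * n) for label, n in counts.items()}


# Simplification: exactly 10 i-vectors per speaker.
ivector_counts = {g: 10 * n for g, n in SPEAKERS.items()}
weights = balanced_class_weights(ivector_counts)
print(ubm_frame_totals())  # {'female': 1235000, 'male': 1246000}
print({g: round(w, 3) for g, w in weights.items()})  # {'female': 2.302, 'male': 0.639}
```

With such weights, each gender contributes the same total weight (11,370 here) to the SVM loss. scikit-learn's `LinearSVC` accepts either an explicit dictionary or the string `"balanced"` through its `class_weight` parameter.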


4.4. Convolutional Neural Network system (CNN)


Figure 1 describes the deep CNN architecture, implemented with Keras [21], used to discriminate between male and female speech. It is inspired by speech recognition models [22], and differs from them by using filter-bank frames of lower dimensionality, associated with a larger time context of 68 frames (695 ms, 24 dimensions)¹. Two 3x3 convolutional layers of 64 filters are first used, followed by a 1x2 max-pooling layer aimed at providing invariance in the frequency domain. Two additional 3x3 convolutional layers of 128 filters are then followed by a 2x2 max-pooling layer and a last 3x3 convolutional layer of 256 filters. A temporal max-pooling layer, in charge of selecting the most discriminative pattern found in this relatively large time interval, is then used, followed by 4 dense layers of 512 neurons with increasing dropout rates, before the final softmax layer outputting one probability per gender. Each hidden layer of the network is followed by batch normalization and a ReLU activation.
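The layer sequence above can be traced to check tensor shapes without building the Keras model. This sketch assumes "valid" (unpadded) 3x3 convolutions and a (time, frequency) ordering in which the 1x2 pooling acts on frequency only; under these assumptions the frequency axis shrinks to 1 just before temporal max-pooling, which then yields a 256-dimensional vector. It also checks the stated context: 68 frames with a 10 ms shift and a 25 ms window cover (68 − 1) × 10 + 25 = 695 ms.

```python
from typing import List, Tuple

Shape = Tuple[int, ...]


def context_ms(n_frames: int, shift_ms: int = 10, win_ms: int = 25) -> int:
    """Duration covered by n_frames overlapping analysis windows."""
    return (n_frames - 1) * shift_ms + win_ms


def conv3x3(shape: Shape, filters: int) -> Shape:
    time, freq, _ = shape
    return (time - 2, freq - 2, filters)  # 'valid' padding (assumption)


def max_pool(shape: Shape, pool_time: int, pool_freq: int) -> Shape:
    time, freq, channels = shape
    return (time // pool_time, freq // pool_freq, channels)


def trace_shapes(input_shape: Shape = (68, 24, 1)) -> List[Tuple[str, Shape]]:
    """Output shape after each layer, in (time, frequency, channels) order."""
    steps: List[Tuple[str, Shape]] = [("input", input_shape)]
    shape = input_shape
    for filters in (64, 64):
        shape = conv3x3(shape, filters)
        steps.append((f"conv3x3-{filters}", shape))
    shape = max_pool(shape, 1, 2)  # pool over frequency only
    steps.append(("maxpool-1x2", shape))
    for filters in (128, 128):
        shape = conv3x3(shape, filters)
        steps.append((f"conv3x3-{filters}", shape))
    shape = max_pool(shape, 2, 2)
    steps.append(("maxpool-2x2", shape))
    shape = conv3x3(shape, 256)
    steps.append(("conv3x3-256", shape))
    # Temporal max-pooling keeps, per (frequency, channel), the maximum over time.
    shape = (shape[1] * shape[2],)
    steps.append(("temporal-maxpool", shape))
    for i in range(4):
        steps.append((f"dense-512-{i + 1}", (512,)))
    steps.append(("softmax", (2,)))
    return steps


for name, shape in trace_shapes():
    print(f"{name:18s} {shape}")
```

Under these assumptions the trace is (68, 24, 1) → (66, 22, 64) → (64, 20, 64) → (64, 10, 64) → (62, 8, 128) → (60, 6, 128) → (30, 3, 128) → (28, 1, 256) → 256 → 4 × 512 → 2.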


A data selection strategy aimed at using all available training data while limiting over-fitting to a particular gender or speaker was used. It consists of generating batches of 1,000 examples, composed of 500 randomly selected examples from distinct speakers of each gender. An epoch was defined as 1,000 batches.
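The batch construction can be sketched as a sampler that draws one example per speaker, 500 speakers per gender. The pool layout (gender → speaker → list of examples, e.g. 68-frame windows) is an assumption. Since INA's Speaker Dictionary lists only 494 female speakers, a training subset cannot supply 500 distinct female speakers; the sketch therefore falls back to drawing speakers with replacement when a gender has too few, which is our assumption rather than a documented detail.

```python
import random
from typing import Dict, Hashable, Iterator, List, Optional, Sequence, Tuple

Pool = Dict[str, Dict[Hashable, Sequence[object]]]  # gender -> speaker -> examples
Batch = List[Tuple[object, str]]  # (example, gender) pairs


def make_batch(pool: Pool, per_gender: int = 500, rng: Optional[random.Random] = None) -> Batch:
    """Return a shuffled batch with one random example per drawn speaker."""
    if rng is None:
        rng = random.Random()
    batch: Batch = []
    for gender, by_speaker in pool.items():
        speakers = list(by_speaker)
        if len(speakers) >= per_gender:
            drawn = rng.sample(speakers, per_gender)  # distinct speakers
        else:
            drawn = rng.choices(speakers, k=per_gender)  # assumption: reuse speakers
        batch.extend((rng.choice(by_speaker[spk]), gender) for spk in drawn)
    rng.shuffle(batch)
    return batch


def epoch(pool: Pool, n_batches: int = 1000, rng: Optional[random.Random] = None) -> Iterator[Batch]:
    """One epoch = n_batches independently drawn batches (1,000 in the paper)."""
    if rng is None:
        rng = random.Random()
    for _ in range(n_batches):
        yield make_batch(pool, rng=rng)
```

Drawing speakers first and examples second gives every speaker the same chance of appearing, regardless of how much speech they have, which is what limits over-fitting to frequently recorded personalities.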


Model selection consisted of splitting INA’s Speaker Dictionary into training and validation subsets containing 80% and 20% of disjoint speakers, respectively. Model performance was assessed on the validation set after each epoch, using speaker sampling strategies. The F-measure was used as the performance criterion, and training was stopped after 5 consecutive epochs without performance improvement. The best models were obtained in fewer than 10 epochs. The CNN output was then fed to a 2-state HMM.
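A minimal sketch of this model selection protocol: a speaker-disjoint 80/20 split and early stopping with a patience of 5 epochs on validation F-measure. The callback-style `run_epoch` and `validate` arguments and the `max_epochs` cap are hypothetical. In Keras, comparable behaviour is available through the `EarlyStopping` callback (`monitor`, `mode="max"`, `patience=5`), provided the F-measure is logged as a metric.

```python
import random
from typing import Callable, List, Sequence, Tuple


def speaker_split(
    speakers: Sequence[str], train_ratio: float = 0.8, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Split speaker IDs so that no speaker appears in both subsets."""
    shuffled = list(speakers)
    random.Random(seed).shuffle(shuffled)
    cut = round(train_ratio * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


def train_with_early_stopping(
    run_epoch: Callable[[], None],
    validate: Callable[[], float],
    patience: int = 5,
    max_epochs: int = 100,
) -> Tuple[int, float]:
    """Stop after `patience` epochs without F-measure improvement; return (best_epoch, best_f)."""
    best_epoch, best_f, stale = -1, float("-inf"), 0
    for epoch in range(max_epochs):
        run_epoch()
        f_measure = validate()
        if f_measure > best_f:
            best_epoch, best_f, stale = epoch, f_measure, 0  # a real run would checkpoint here
        else:
            stale += 1
            if stale >= patience:
                break
    return best_epoch, best_f
```

Splitting by speaker rather than by excerpt keeps validation voices unseen during training. Applied to the 2,284 speakers of INA's Speaker Dictionary, this sketch yields 1,827 training and 457 validation speakers.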


5. EVALUATION

5.1. Evaluation Material

The REPERE challenge corpus contains annotated TV streams gathered from two French channels from 2011 to 2013 [23]. It includes street interviews, debates and news, corresponding to 46 hours of speech attributed to 1,129 male speakers and 12 hours attributed to 557 female speakers². 457 speakers found in the REPERE corpus also appear in INA’s Speaker Dictionary used for training, and 606 speakers were associated with a gender label but no identity annotation.


5.2. Frame-Level Evaluation

Table 1 presents the frame-level speaker gender recognition performance obtained on the REPERE corpus. I-vector speech-turn estimates were mapped to frame level, using the same sampling frequency as the GMM and CNN outputs. Since short pauses are not systematically annotated in the ground truth, this evaluation was carried out on the subset of frames annotated as speech in REPERE and predicted as speech by the speech/music segmenter, resulting in a binary classification problem. The best results were obtained with the CNN model, with an F-measure of 96.52 and an accuracy of 97.42%. All models had higher recall for male speakers, which may lead systems to underestimate female speaking time. This may be explained by the greater diversity of male speakers in the training database.
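The frame-level scoring protocol can be sketched as follows: speech turns are first mapped to frame labels at a 10 ms frame rate, then only frames labeled as speech in both the reference and the prediction are scored. Whether the reported F-measure is per class or averaged is not stated, so the sketch reports per-gender values and their unweighted mean; the label strings and segment format are assumptions.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Label = Optional[str]  # "male", "female", or None for non-speech


def segments_to_frames(
    segments: Sequence[Tuple[str, float, float]], n_frames: int, shift_s: float = 0.01
) -> List[Label]:
    """Map (gender, start_s, end_s) speech turns to per-frame labels."""
    labels: List[Label] = [None] * n_frames
    for gender, start, end in segments:
        for i in range(round(start / shift_s), min(n_frames, round(end / shift_s))):
            labels[i] = gender
    return labels


def frame_metrics(ref: Sequence[Label], hyp: Sequence[Label]) -> Dict[str, float]:
    """Accuracy, per-gender recall and F-measure (in %) on frames that are speech in both."""
    pairs = [(r, h) for r, h in zip(ref, hyp) if r is not None and h is not None]
    if not pairs:
        raise ValueError("no frame is labeled as speech in both sequences")
    scores = {"accuracy": 100.0 * sum(r == h for r, h in pairs) / len(pairs)}
    for g in ("male", "female"):
        tp = sum(r == g and h == g for r, h in pairs)
        n_hyp = sum(h == g for _, h in pairs)
        n_ref = sum(r == g for r, _ in pairs)
        precision = tp / n_hyp if n_hyp else 0.0
        recall = tp / n_ref if n_ref else 0.0
        scores[f"{g}_recall"] = 100.0 * recall
        scores[f"{g}_f"] = (
            100.0 * 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        )
    scores["mean_f"] = (scores["male_f"] + scores["female_f"]) / 2
    return scores
```

Comparing `male_recall` with `female_recall` is how the recall asymmetry mentioned above would show up: lower female recall means female frames are labeled male, which biases speaking-time estimates downward for women.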


5.3. End-to-End Evaluation

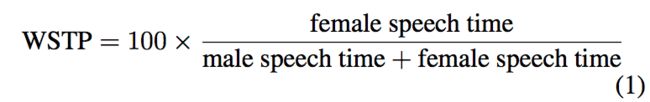

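This evaluation describes the ability of models to accurately estimate the Women Speaking-Time Percentage (WSTP) on raw audiovisual excerpts of variable length. WSTP is the main descriptor retained in our ongoing gender equality studies, and was computed for each annotated stream of the REPERE challenge dataset using the following formula:

$$\mathrm{WSTP} = 100 \times \frac{T_{\text{female}}}{T_{\text{female}} + T_{\text{male}}}$$

where $T_{\text{female}}$ and $T_{\text{male}}$ are the total durations of female and male speech in the stream.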


Frame-level male and female gender detection errors may counterbalance each other and provide robust WSTP estimates over reasonably long time intervals. Based on this assumption, REPERE shows were split into four categories according to their duration: 133 excerpts shorter than 2 minutes, 82 excerpts of 2 to 10 minutes, 104 excerpts of 10 to 30 minutes and 25 excerpts of 30 to 60 minutes. Figure 2 shows the Root Mean Square Error (RMSE) between estimated and reference WSTP for recordings of variable length. As expected, longer excerpts were associated with lower WSTP estimation errors. The best results were obtained with the CNN model, with an RMSE of 0.68 for excerpts longer than 30 minutes, together with mean and worst-case WSTP estimation errors as low as 0.59% and 1.8%.
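WSTP and the duration-binned RMSE reported in Figure 2 can be sketched as below. Each stream's WSTP is computed from gender-labeled speech segments, then the RMSE between estimated and reference WSTP is computed separately for each duration category. The segment format and the handling of bin boundaries (for instance, whether a show of exactly 10 minutes falls in the 2-10 or the 10-30 minute category) are assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def wstp(segments: Sequence[Tuple[str, float, float]]) -> float:
    """Women Speaking-Time Percentage from (gender, start_s, end_s) segments."""
    duration = {"female": 0.0, "male": 0.0}
    for gender, start, end in segments:
        duration[gender] += end - start
    total = duration["female"] + duration["male"]
    return 100.0 * duration["female"] / total if total > 0 else float("nan")


def duration_category(seconds: float) -> str:
    """Four duration categories used for REPERE shows; boundary handling is assumed."""
    minutes = seconds / 60.0
    if minutes < 2:
        return "<2 min"
    if minutes < 10:
        return "2-10 min"
    if minutes < 30:
        return "10-30 min"
    return "30-60 min"


def rmse_by_category(shows: Sequence[Tuple[float, float, float]]) -> Dict[str, float]:
    """shows: (duration_s, estimated_wstp, reference_wstp) for each annotated stream."""
    squared: Dict[str, List[float]] = {}
    for duration_s, estimated, reference in shows:
        squared.setdefault(duration_category(duration_s), []).append((estimated - reference) ** 2)
    return {cat: math.sqrt(sum(errs) / len(errs)) for cat, errs in squared.items()}


print(wstp([("male", 0.0, 30.0), ("female", 30.0, 40.0), ("male", 40.0, 50.0)]))  # 20.0
```

Because WSTP is a ratio of summed durations, a male frame mislabeled as female and a female frame mislabeled as male cancel out, which is why errors shrink as excerpts get longer.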


Maximal error rates were always lower for the CNN than for the i-vector model. This may be explained by the architecture of the i-vector model, which relies on a baseline diarization step that may miss short speaker turns, resulting in i-vectors that correspond to several speakers.



6. CONCLUSION AND FUTURE WORK


A deep CNN model has been designed to segment speech streams into male and female excerpts and compared to GMM and i-vector models on the REPERE corpus, showing superior performance. The proposed CNN model is available from INA’s GitHub repository: http://github.com/ina-foss.


This positive outcome suggests that the gender detection system is reliable enough to perform large-scale gender equality descriptions, offering concrete perspectives for digital humanities. Detailed analyses carried out with this framework go beyond the scope of this paper and are addressed in other studies [24]. Results obtained on 580,000 hours of French TV and radio streams broadcast from 2001 to 2017 highlighted several interesting tendencies: speaking time was mainly attributed to male speakers, especially in radio material; women's speaking time was lower during peak viewing time; the percentage of women's speaking time increased by about 0.5% per year between 2001 and 2017; and the proportion of male speakers was higher in sports, cultural and educational TV channels. Longer-term studies may take advantage of INA’s available metadata (detailed audience, type of program, speaker identities) to propose deeper analyses.


The scope of our evaluation is limited by the properties of the REPERE corpus, which contains only broadcast news archives. It includes neither child speakers nor very specific speech utterances such as those found in movies, comedy sketches or speaker imitations, which should be addressed in future studies. Improvements to the proposed i-vector system could be investigated using more sophisticated diarization methods [25]. The use of recurrent neural network units in the last layers of the CNN, replacing the final temporal max-pooling layer, will also be investigated, together with training example balancing strategies based on pitch estimation and data augmentation.


Since speaking time alone is not sufficient to fully describe gender equality, longer-term research may include using ASR to describe gender roles and interactions.


REFERENCES

[1] Gender equality commission, “Handbook on the implementation of recommendation cm/rec(2013)1 of the committee of ministers of the council of europe on gender equality and media,” Council of Europe, 2015.

[2] Michele Reiser and Brigitte Gresy, “L’image des femmes dans les médias,” 2008.

[3] Conseil supérieur de l’audiovisuel (CSA), “La représentation des femmes à la télévision et à la radio - rapport sur l’exercice 2016,” 2017.

[4] Sarah Macharia et al., Who Makes the News?: Global Media Monitoring Project 2015, World Association for Christian Communication, 2015.

[5] Erwan Pépiot, “Voice, speech and gender: male-female acoustic differences and cross-language variation in English and French speakers,” Corela. Cognition, représentation, langage, no. HS-16, 2015.

[6] Lori F Lamel and Jean-Luc Gauvain, “A phone-based approach to non-linguistic speech feature identification,” Computer Speech & Language, vol. 9, no. 1, pp. 87–103, 1995.

[7] Tobias Bocklet, Andreas Maier, Josef G Bauer, Felix Burkhardt, and Elmar Noth, “Age and gender recognition for telephone applications based on gmm supervectors and support vector machines,” in Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2008, pp. 1605–1608.

[8] Rui Xia, Jun Deng, Bjorn Schuller, and Yang Liu, “Modeling gender information for emotion recognition using denoising autoencoder,” in Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2014, pp. 990–994.

[9] Laurent El Shafey, Elie Khoury, and Sébastien Marcel, “Audio-visual gender recognition in uncontrolled environment using variability modeling techniques,” in International Joint Conference on Biometrics (IJCB). IEEE, 2014, pp. 1–8.

[10] Najim Dehak, Patrick J Kenny, Réda Dehak, Pierre Dumouchel, and Pierre Ouellet, “Front-end factor analysis for speaker verification,” IEEE Transactions on Audio, Speech, and Language Processing, vol. 19, no. 4, pp. 788–798, 2011.

[11] Jimena Royo-Letelier, Romain Hennequin, and Manuel Moussallam, “Detection and characterization of singing voice using deep neural networks,” UPMC-Paris, 2015.

[12] François Salmon and Félicien Vallet, “An effortless way to create large-scale datasets for famous speakers,” in LREC, 2014, pp. 348–352.

[13] Félicien Vallet, Jim Uro, Jérémy Andriamakaoly, Hakim Nabi, Mathieu Derval, and Jean Carrive, “Speech trax: A bottom to the top approach for speaker tracking and indexing in an archiving context,” in LREC, 2016.

[14] Arsha Nagrani, Joon Son Chung, and Andrew Zisserman, “Voxceleb: A large-scale speaker identification dataset,” Proc. Interspeech 2017, pp. 2616–2620, 2017.

[15] Sylvain Meignier and Teva Merlin, “Lium spkdiarization: an open source toolkit for diarization,” in CMU SPUD Workshop, 2010, vol. 2010.

[16] Johann Poignant, Laurent Besacier, Georges Quénot, and Franck Thollard, “From text detection in videos to person identification,” in Multimedia and Expo (ICME). IEEE, 2012, pp. 854–859.

[17] David Doukhan and Jean Carrive, “Investigating the Use of Semi-Supervised Convolutional Neural Network Models for Speech/Music Classification and Segmentation,” in The Ninth International Conferences on Advances in Multimedia (MMEDIA 2017), Apr. 2017.

[18] Anthony Larcher, Kong Aik Lee, and Sylvain Meignier, “An extensible speaker identification sidekit in python,” in Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2016, pp. 5095–5099.

[19] Jason Pelecanos and Sridha Sridharan, “Feature warping for robust speaker verification,” 2001.


[20] Sylvain Meignier and Anthony Larcher, “Sidekit for speaker diarization,” http://lium.univ-lemans.fr/sidekit/s4d/, 2015.

[21] François Chollet et al., “Keras,” https://github.com/fchollet/keras, 2015.

[22] Yanmin Qian, Mengxiao Bi, Tian Tan, and Kai Yu, “Very deep convolutional neural networks for noise robust speech recognition,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 24, no. 12, pp. 2263–2276, 2016.

[23] Aude Giraudel, Matthieu Carré, Valérie Mapelli, Juliette Kahn, Olivier Galibert, and Ludovic Quintard, “The repere corpus: a multimodal corpus for person recognition,” in LREC, 2012, pp. 1102–1107.

[24] David Doukhan, Géraldine Poels, and Jean Carrive, “Describing gender equality in french audiovisual streams with a deep learning approach (accepted),” Journal of European Television History and Culture (VIEW), 2018.

[25] Gaël Le Lan, Delphine Charlet, Anthony Larcher, and Sylvain Meignier, “A triplet ranking-based neural network for speaker diarization and linking,” Proc. Interspeech 2017, pp. 3572–3576, 2017.