【MMDetection3D】Notes on MVXNet Pitfalls

Contents

- 1. Setting Up the Environment
- 2. Preparing the Dataset
- 3. Modifying the Config
- 4. Training
- 5. Inference

Paper

Code

MVXNet (ICRA 2019)

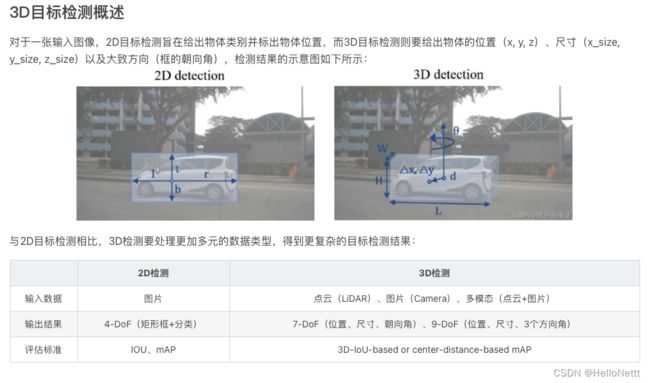

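Much recent work on 3D object detection has focused on designing neural network architectures that can consume point cloud data. While these approaches show encouraging performance, they are typically based on a single modality and cannot leverage information from other modalities (such as cameras and LiDAR). Although a few approaches fuse data from different modalities, they either use a complicated pipeline that processes the modalities sequentially or perform late fusion, and therefore cannot learn the interactions between modalities at an early stage. In this work, we present PointFusion and VoxelFusion: two simple yet effective early-fusion approaches that combine the RGB and point cloud modalities by leveraging the recently introduced VoxelNet architecture. Evaluation on the KITTI dataset shows significant performance improvements over approaches that use only point cloud data. Furthermore, the proposed methods give results competitive with state-of-the-art multimodal algorithms, ranking in the top 2 in five of the six bird's-eye-view and 3D detection categories of the KITTI benchmark while using a simple single-stage network.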

This reproduction drew on an article by @嗜睡的篠龙, and most of the quoted passages below also come from it. Many thanks to the author!

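1. Setting Up the Environment

To set up the environment, simply follow the official INSTALL.md step by step. The commands below match the late-2022 instructions for the mmcv-full based mmdet3d releases (v1.0.x); mmdet3d v1.1 and later are built on mmcv 2.x and MMEngine, so if you clone the current code, follow the installation guide of that version instead.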

```shell
conda create --name openmmlab python=3.8 -y
conda activate openmmlab

# GPU environment
conda install pytorch torchvision -c pytorch
# or CPU-only environment
conda install pytorch torchvision cpuonly -c pytorch

pip install openmim
mim install mmcv-full
mim install mmdet
mim install mmsegmentation

git clone https://github.com/open-mmlab/mmdetection3d.git
cd mmdetection3d
pip install -e .
```
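After installing, a quick way to confirm which versions actually ended up in the environment is the sketch below. It only reads installed package metadata through `importlib.metadata` (available from Python 3.8); the distribution names in `PACKAGES` are my assumption based on the commands above (`mmdet3d` is registered by `pip install -e .`).

```python
from importlib import metadata

# Distribution names assumed from the install commands above; adjust if you
# installed e.g. "mmcv" instead of "mmcv-full".
PACKAGES = ["torch", "torchvision", "mmcv-full", "mmdet", "mmsegmentation", "mmdet3d"]


def installed_versions(packages=PACKAGES):
    """Return {distribution name: version string, or None if not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:15s} {version or 'NOT INSTALLED'}")
```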

2. Preparing the Dataset

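We use the KITTI dataset. Many articles already analyze KITTI in detail, so we won't repeat that here and only list the basics.

The dataset is used to evaluate computer vision techniques such as stereo, optical flow, visual odometry, 3D object detection, and 3D tracking in an automotive setting.

Scenes: Road, City, Residential, Campus, Person

Classes: Car, Van, Truck, Pedestrian, Person_sitting, Cyclist, Tram, Misc, DontCare, where the DontCare label marks regions in which objects were not annotated.

The 3D object detection benchmark consists of 7481 training images and 7518 test images with the corresponding point clouds, containing 80256 labeled objects in total. The parts we need to download are: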

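- Left color images (12 GB)
- Velodyne point clouds (29 GB)
- Camera calibration matrices (16 MB)
- Training labels (5 MB)

The color images, point clouds, and calibration files each contain a training part (7481 samples) and a testing part (7518 samples); labels exist only for the training part.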
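Each training sample's `label_2/XXXXXX.txt` file holds one object per line in KITTI's 15-field label format. A minimal parser sketch, following the field layout described in the KITTI object devkit readme (the dataclass and function names are my own, hypothetical helpers):

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class KittiObject:
    type: str                                # Car, Van, ..., DontCare
    truncated: float                         # 0 (not truncated) .. 1 (truncated)
    occluded: int                            # 0 visible, 1 partly, 2 largely occluded, 3 unknown
    alpha: float                             # observation angle, [-pi, pi]
    bbox: Tuple[float, ...]                  # 2D box in image_2: left, top, right, bottom (pixels)
    dimensions: Tuple[float, ...]            # 3D size: height, width, length (meters)
    location: Tuple[float, ...]              # x, y, z in camera coordinates (meters)
    rotation_y: float                        # yaw around the camera Y axis, [-pi, pi]


def parse_label_line(line: str) -> KittiObject:
    f = line.split()
    if len(f) < 15:
        raise ValueError(f"expected at least 15 fields, got {len(f)}")
    return KittiObject(
        type=f[0],
        truncated=float(f[1]),
        occluded=int(float(f[2])),
        alpha=float(f[3]),
        bbox=tuple(map(float, f[4:8])),
        dimensions=tuple(map(float, f[8:11])),
        location=tuple(map(float, f[11:14])),
        rotation_y=float(f[14]),
    )


def parse_label_file(path: str) -> List[KittiObject]:
    with open(path) as fh:
        return [parse_label_line(line) for line in fh if line.strip()]
```

Usage: `parse_label_file("data/kitti/training/label_2/000000.txt")` returns one `KittiObject` per annotated object, including the DontCare regions.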

Following the official documentation, organize the dataset under ./mmdetection3d/data/:

```
mmdetection3d
├── mmdet3d
├── tools
├── configs
├── data
│   ├── kitti
│   │   ├── ImageSets
│   │   ├── testing
│   │   │   ├── calib
│   │   │   ├── image_2
│   │   │   ├── velodyne
│   │   ├── training
│   │   │   ├── calib
│   │   │   ├── image_2
│   │   │   ├── label_2
│   │   │   ├── velodyne
│   │   │   ├── planes (optional)
```

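P.S. A trick I learned recently: the disk holding /home is usually small, so keep the dataset on the largest disk (here /data) and create a symbolic link to it with the command below.

```shell
# Adjust both paths to your own setup
ln -s /data/kitti /home/mmdetection3d/data/kitti
```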
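To double-check that the (possibly symlinked) folder matches the layout above, a small sketch: `REQUIRED` simply mirrors the tree above (the optional `planes` folder is skipped), and the default root assumes you run it from the mmdetection3d directory.

```python
from pathlib import Path
from typing import List

# Sub-directories from the layout above; planes/ is optional and not checked.
REQUIRED = [
    "ImageSets",
    "training/calib", "training/image_2", "training/label_2", "training/velodyne",
    "testing/calib", "testing/image_2", "testing/velodyne",
]


def missing_kitti_dirs(root: str = "data/kitti") -> List[str]:
    """Return the required sub-directories that do not exist under root.

    Path.is_dir() follows symbolic links, so this also works when data/kitti
    is a link created with `ln -s`.
    """
    base = Path(root)
    return [rel for rel in REQUIRED if not (base / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_kitti_dirs()
    print("layout OK" if not missing else f"missing: {missing}")
```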

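Next, create the KITTI point cloud data. This step loads the raw point clouds and generates annotation files containing the object labels and bounding boxes. It also extracts the points of every individual training object and stores them as .bin files in data/kitti/kitti_gt_database (used by the ground-truth sampling augmentation), and generates .pkl files with the data information for the training and validation sets.

Run the following commands to create the final KITTI data: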

```shell
# Enter the mmdetection3d root directory
cd mmdetection3d

# Create the folders (-p: no error if data/kitti already exists, e.g. as a symlink)
mkdir -p ./data/kitti/ImageSets

# Download data split
wget -c https://raw.githubusercontent.com/traveller59/second.pytorch/master/second/data/ImageSets/test.txt --no-check-certificate --content-disposition -O ./data/kitti/ImageSets/test.txt
wget -c https://raw.githubusercontent.com/traveller59/second.pytorch/master/second/data/ImageSets/train.txt --no-check-certificate --content-disposition -O ./data/kitti/ImageSets/train.txt
wget -c https://raw.githubusercontent.com/traveller59/second.pytorch/master/second/data/ImageSets/val.txt --no-check-certificate --content-disposition -O ./data/kitti/ImageSets/val.txt
wget -c https://raw.githubusercontent.com/traveller59/second.pytorch/master/second/data/ImageSets/trainval.txt --no-check-certificate --content-disposition -O ./data/kitti/ImageSets/trainval.txt

# --with-plane reads the optional training/planes files; omit it if you did not download them
python tools/create_data.py kitti --root-path ./data/kitti --out-dir ./data/kitti --extra-tag kitti --with-plane
```
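The downloaded split files can be sanity-checked as well. With the standard split used here, train/val/trainval/test should contain 3712/3769/7481/7518 indices (3769 is also the number of samples evaluated in the training log). A sketch that checks that train and val are disjoint and together form trainval:

```python
from pathlib import Path
from typing import Dict, List


def read_split(path: Path) -> List[str]:
    """One zero-padded sample index (e.g. 000123) per line; blank lines are ignored."""
    return [line.strip() for line in path.read_text().splitlines() if line.strip()]


def check_splits(imagesets_dir: str = "data/kitti/ImageSets") -> Dict[str, int]:
    """Validate the split files and return the number of samples in each one."""
    root = Path(imagesets_dir)
    splits = {name: read_split(root / f"{name}.txt")
              for name in ("train", "val", "trainval", "test")}
    train, val, trainval = (set(splits[k]) for k in ("train", "val", "trainval"))
    if train & val:
        raise ValueError(f"{len(train & val)} samples appear in both train and val")
    if train | val != trainval:
        raise ValueError("train + val does not equal trainval")
    return {name: len(ids) for name, ids in splits.items()}


if __name__ == "__main__":
    print(check_splits())
```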

The directory after processing:

```
kitti
├── ImageSets
│   ├── test.txt
│   ├── train.txt
│   ├── trainval.txt
│   ├── val.txt
├── testing
│   ├── calib
│   ├── image_2
│   ├── velodyne
│   ├── velodyne_reduced
├── training
│   ├── calib
│   ├── image_2
│   ├── label_2
│   ├── velodyne
│   ├── velodyne_reduced
│   ├── planes (optional)
├── kitti_gt_database
│   ├── xxxxx.bin
├── kitti_infos_train.pkl
├── kitti_infos_val.pkl
├── kitti_dbinfos_train.pkl
├── kitti_infos_test.pkl
├── kitti_infos_trainval.pkl
├── kitti_infos_train_mono3d.coco.json
├── kitti_infos_trainval_mono3d.coco.json
├── kitti_infos_test_mono3d.coco.json
├── kitti_infos_val_mono3d.coco.json
```
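The generated kitti_infos_*.pkl files are plain pickles, so they can be inspected directly. The sketch assumes the v1.0.x layout (a list with one dict per sample) and, as a fallback, the newer layout with a `data_list` key; loading the real files requires numpy, since the annotations are stored as arrays.

```python
import pickle
from typing import Any, List


def load_info_list(pkl_path: str) -> List[Any]:
    """Load a kitti_infos_*.pkl file and return its per-sample entries.

    Assumption: mmdet3d v1.0.x stores a plain list of per-sample dicts, while
    newer releases store a dict whose 'data_list' key holds that list.
    """
    with open(pkl_path, "rb") as fh:
        data = pickle.load(fh)
    if isinstance(data, dict) and "data_list" in data:
        return data["data_list"]
    if isinstance(data, list):
        return data
    raise TypeError(f"unexpected info file layout: {type(data).__name__}")


if __name__ == "__main__":
    infos = load_info_list("data/kitti/kitti_infos_val.pkl")
    print(len(infos), "samples; keys of the first one:", sorted(infos[0]))
```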

3. Modifying the Config

To train MVXNet successfully, we also need to edit configs/_base_/schedules/cosine.py and lower the learning rate to below 0.0003 (I used 0.0001):

```python
# lr = 0.003  # max learning rate
lr = 0.0001
```

Otherwise training crashes with the following error:

```
Traceback (most recent call last):
  File "tools/train.py", line 265, in <module>
    main()
  File "tools/train.py", line 254, in main
    train_model(
  File "/data/run01/scz3687/openmmlab/mmdetection3d/mmdet3d/apis/train.py", line 344, in train_model
    train_detector(
  File "/data/run01/scz3687/openmmlab/mmdetection3d/mmdet3d/apis/train.py", line 319, in train_detector
    runner.run(data_loaders, cfg.workflow)
  File "/HOME/scz3687/.conda/envs/openmmlab/lib/python3.8/site-packages/mmcv/runner/epoch_based_runner.py", line 127, in run
    epoch_runner(data_loaders[i], **kwargs)
  File "/HOME/scz3687/.conda/envs/openmmlab/lib/python3.8/site-packages/mmcv/runner/epoch_based_runner.py", line 51, in train
    self.call_hook('after_train_iter')
  File "/HOME/scz3687/.conda/envs/openmmlab/lib/python3.8/site-packages/mmcv/runner/base_runner.py", line 309, in call_hook
    getattr(hook, fn_name)(self)
  File "/HOME/scz3687/.conda/envs/openmmlab/lib/python3.8/site-packages/mmcv/runner/hooks/optimizer.py", line 56, in after_train_iter
    runner.outputs['loss'].backward()
  File "/HOME/scz3687/.conda/envs/openmmlab/lib/python3.8/site-packages/torch/_tensor.py", line 363, in backward
    torch.autograd.backward(self, gradient, retain_graph, create_graph, inputs=inputs)
  File "/HOME/scz3687/.conda/envs/openmmlab/lib/python3.8/site-packages/torch/autograd/__init__.py", line 173, in backward
    Variable._execution_engine.run_backward(  # Calls into the C++ engine to run the backward pass
  File "/HOME/scz3687/.conda/envs/openmmlab/lib/python3.8/site-packages/torch/autograd/function.py", line 253, in apply
    return user_fn(self, *args)
  File "/HOME/scz3687/.conda/envs/openmmlab/lib/python3.8/site-packages/mmcv/ops/scatter_points.py", line 51, in backward
    ext_module.dynamic_point_to_voxel_backward(
RuntimeError: CUDA error: an illegal memory access was encountered
```

4. Training

First, list the training options with python tools/train.py -h:

```
usage: train.py [-h] [--work-dir WORK_DIR] [--resume-from RESUME_FROM]
                [--auto-resume] [--no-validate]
                [--gpus GPUS | --gpu-ids GPU_IDS [GPU_IDS ...] | --gpu-id
                GPU_ID] [--seed SEED] [--diff-seed] [--deterministic]
                [--options OPTIONS [OPTIONS ...]]
                [--cfg-options CFG_OPTIONS [CFG_OPTIONS ...]]
                [--launcher {none,pytorch,slurm,mpi}]
                [--local_rank LOCAL_RANK] [--autoscale-lr]
                config

Train a detector

positional arguments:
  config                train config file path

optional arguments:
  -h, --help            show this help message and exit
  --work-dir WORK_DIR   the dir to save logs and models
  --resume-from RESUME_FROM
                        the checkpoint file to resume from
  --auto-resume         resume from the latest checkpoint automatically
  --no-validate         whether not to evaluate the checkpoint during training
  --gpus GPUS           (Deprecated, please use --gpu-id) number of gpus to
                        use (only applicable to non-distributed training)
  --gpu-ids GPU_IDS [GPU_IDS ...]
                        (Deprecated, please use --gpu-id) ids of gpus to use
                        (only applicable to non-distributed training)
  --gpu-id GPU_ID       number of gpus to use (only applicable to non-
                        distributed training)
  --seed SEED           random seed
  --diff-seed           Whether or not set different seeds for different ranks
  --deterministic       whether to set deterministic options for CUDNN
                        backend.
  --options OPTIONS [OPTIONS ...]
                        override some settings in the used config, the key-
                        value pair in xxx=yyy format will be merged into
                        config file (deprecate), change to --cfg-options
                        instead.
  --cfg-options CFG_OPTIONS [CFG_OPTIONS ...]
                        override some settings in the used config, the key-
                        value pair in xxx=yyy format will be merged into
                        config file. If the value to be overwritten is a list,
                        it should be like key="[a,b]" or key=a,b It also
                        allows nested list/tuple values, e.g.
                        key="[(a,b),(c,d)]" Note that the quotation marks are
                        necessary and that no white space is allowed.
  --launcher {none,pytorch,slurm,mpi}
                        job launcher
  --local_rank LOCAL_RANK
  --autoscale-lr        automatically scale lr with the number of gpus
```

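- config: required, the config file of the model to train
- --work-dir: optional, the folder where training logs and checkpoints are saved; by default a work_dirs folder is created and the results go into a subfolder named after the config file
- --gpu-id: the ID of the GPU to use for non-distributed training (the help text says "number of gpus", but the value is a GPU index)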

Single-GPU training:

```shell
python tools/train.py configs/mvxnet/dv_mvx-fpn_second_secfpn_adamw_2x8_80e_kitti-3d-3class.py
```

Multi-GPU training:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 tools/dist_train.sh configs/mvxnet/dv_mvx-fpn_second_secfpn_adamw_2x8_80e_kitti-3d-3class.py 4
```

```
2022-11-08 10:51:31,649 - mmdet - INFO - Epoch [1][50/928] lr: 1.441e-05, eta: 4:37:44, time: 0.450, data_time: 0.054, memory: 6653, loss_cls: 1.4217, loss_bbox: 3.9761, loss_dir: 0.1595, loss: 5.5573, grad_norm: 1373.2281
2022-11-08 10:51:48,514 - mmdet - INFO - Epoch [1][100/928] lr: 1.891e-05, eta: 4:02:44, time: 0.337, data_time: 0.007, memory: 6736, loss_cls: 1.1844, loss_bbox: 2.9370, loss_dir: 0.1458, loss: 4.2673, grad_norm: 743.8485
2022-11-08 10:52:04,932 - mmdet - INFO - Epoch [1][150/928] lr: 2.341e-05, eta: 3:49:02, time: 0.328, data_time: 0.007, memory: 6736, loss_cls: 1.1656, loss_bbox: 2.0739, loss_dir: 0.1407, loss: 3.3801, grad_norm: 380.4805
...
```
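The lr column above is still far below 0.0001 because of the learning-rate warmup. As I read configs/_base_/schedules/cosine.py, it uses linear warmup with warmup_iters=1000 and warmup_ratio=1/10 (treat these values as assumptions and check your copy). The sketch re-implements mmcv's linear warmup formula and prints 1.441e-05, 1.891e-05 and 2.341e-05, matching the log:

```python
def linear_warmup_lr(cur_iter: int, base_lr: float = 1e-4,
                     warmup_iters: int = 1000, warmup_ratio: float = 0.1) -> float:
    """Learning rate during mmcv-style linear warmup.

    Assumes the regular (post-warmup) lr is still about base_lr, which holds
    for a cosine schedule this early in training. warmup_iters and
    warmup_ratio are assumed values, see the text above.
    """
    if cur_iter >= warmup_iters:
        return base_lr
    k = (1 - cur_iter / warmup_iters) * (1 - warmup_ratio)
    return base_lr * (1 - k)


# A log line "[50/928]" is written after the 50th iteration, i.e. iteration index 49.
for logged in (50, 100, 150):
    print(logged, f"{linear_warmup_lr(logged - 1):.3e}")
```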

```
2022-11-08 10:56:18,483 - mmdet - INFO - Saving checkpoint at 1 epochs
[ ] 0/3769, elapsed: 0s, ETA:
[ ] 1/3769, 0.4 task/s, elapsed: 2s, ETA: 8539s
[ ] 2/3769, 0.9 task/s, elapsed: 2s, ETA: 4269s
...
[>>>>>>>>>>>>>>>>>>>>>>>>> ] 3768/3769, 260.8 task/s, elapsed: 14s, ETA: 0s
[>>>>>>>>>>>>>>>>>>>>>>>>>>] 3769/3769, 260.8 task/s, elapsed: 14s, ETA: 0s
Result is saved to /tmp/tmpvzpmdfvd/resultspts_bbox.pkl.
2022-11-08 11:04:55,700 - mmdet - INFO - Results of pts_bbox:

----------- AP11 Results ------------

Pedestrian AP11@0.50, 0.50, 0.50:
bbox AP11:22.9431, 19.6280, 18.6389
bev AP11:26.2247, 21.6366, 19.8992
3d AP11:14.9756, 12.1538, 11.6735
aos AP11:10.18, 8.75, 8.27
Pedestrian AP11@0.50, 0.25, 0.25:
bbox AP11:22.9431, 19.6280, 18.6389
bev AP11:39.5001, 35.3343, 32.7441
3d AP11:39.1213, 34.2670, 31.7966
aos AP11:10.18, 8.75, 8.27
Cyclist AP11@0.50, 0.50, 0.50:
bbox AP11:9.9306, 7.9863, 8.3425
bev AP11:4.0278, 3.4255, 3.4084
3d AP11:1.1849, 1.3387, 1.3626
aos AP11:3.31, 2.91, 3.02
Cyclist AP11@0.50, 0.25, 0.25:
bbox AP11:9.9306, 7.9863, 8.3425
bev AP11:10.6039, 8.3722, 8.3490
3d AP11:9.9899, 7.9064, 7.9410
aos AP11:3.31, 2.91, 3.02
Car AP11@0.70, 0.70, 0.70:
bbox AP11:75.9377, 67.8962, 66.4752
bev AP11:70.0417, 64.4884, 61.9631
3d AP11:28.9216, 27.3963, 24.5591
aos AP11:72.03, 63.01, 61.01
Car AP11@0.70, 0.50, 0.50:
bbox AP11:75.9377, 67.8962, 66.4752
bev AP11:88.4258, 86.7206, 79.9050
3d AP11:85.1207, 79.3290, 77.9177
aos AP11:72.03, 63.01, 61.01

Overall AP11@easy, moderate, hard:
bbox AP11:36.2705, 31.8368, 31.1522
bev AP11:33.4314, 29.8502, 28.4236
3d AP11:15.0274, 13.6296, 12.5317
aos AP11:28.51, 24.89, 24.10

----------- AP40 Results ------------

Pedestrian AP40@0.50, 0.50, 0.50:
bbox AP40:22.5011, 18.9615, 18.0096
bev AP40:25.9403, 21.0708, 19.7923
3d AP40:14.2027, 11.5243, 10.5772
aos AP40:9.94, 8.44, 7.97
Pedestrian AP40@0.50, 0.25, 0.25:
bbox AP40:22.5011, 18.9615, 18.0096
bev AP40:40.4494, 35.8287, 33.2829
3d AP40:39.9180, 34.3863, 31.8323
aos AP40:9.94, 8.44, 7.97
Cyclist AP40@0.50, 0.50, 0.50:
bbox AP40:9.6635, 7.9641, 7.9617
bev AP40:4.1145, 3.2324, 3.0399
3d AP40:1.0719, 1.0088, 1.0162
aos AP40:3.21, 2.90, 2.88
Cyclist AP40@0.50, 0.25, 0.25:
bbox AP40:9.6635, 7.9641, 7.9617
bev AP40:10.8334, 8.5479, 8.2732
3d AP40:9.7111, 7.8380, 7.6383
aos AP40:3.21, 2.90, 2.88
Car AP40@0.70, 0.70, 0.70:
bbox AP40:76.0322, 70.1343, 65.6697
bev AP40:70.1388, 63.4255, 60.4669
3d AP40:24.2739, 22.6836, 20.7413
aos AP40:71.62, 64.28, 59.54
Car AP40@0.70, 0.50, 0.50:
bbox AP40:76.0322, 70.1343, 65.6697
bev AP40:91.1145, 88.5165, 84.1039
3d AP40:86.1016, 82.8727, 78.3102
aos AP40:71.62, 64.28, 59.54

Overall AP40@easy, moderate, hard:
bbox AP40:36.0656, 32.3533, 30.5470
bev AP40:33.3979, 29.2429, 27.7664
3d AP40:13.1828, 11.7389, 10.7782
aos AP40:28.26, 25.21, 23.46
```
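How to read these tables: in a header such as `Car AP11@0.70, 0.50, 0.50`, the three numbers are the IoU thresholds for the bbox, bev and 3d rows, and the three columns of every row are the easy, moderate and hard difficulty levels (aos follows the 2D bbox threshold, which is why it is identical under both threshold sets). AP11 samples the precision-recall curve at 11 recall positions including recall 0, while AP40 uses 40 positions and excludes 0. The sketch below is a simplified illustration of that sampling only; the real KITTI evaluation derives its recall thresholds from the detection scores.

```python
from typing import Sequence


def interpolated_ap(recalls: Sequence[float], precisions: Sequence[float],
                    num_points: int) -> float:
    """Simplified KITTI-style interpolated AP (as a fraction, not a percentage).

    num_points=11 samples recall at 0, 0.1, ..., 1.0 (AP11);
    num_points=40 samples recall at 1/40, 2/40, ..., 1.0 (AP40, recall 0 excluded).
    The interpolated precision at r is the max precision over points with recall >= r.
    """
    if num_points == 11:
        sample_points = [i / 10 for i in range(11)]
    elif num_points == 40:
        sample_points = [i / 40 for i in range(1, 41)]
    else:
        raise ValueError("num_points must be 11 or 40")
    pairs = list(zip(recalls, precisions))
    total = 0.0
    for r in sample_points:
        total += max((p for rec, p in pairs if rec >= r), default=0.0)
    return total / len(sample_points)


# Toy PR curve: perfect precision, but recall never exceeds 0.5.
recalls = [0.1, 0.2, 0.3, 0.4, 0.5]
precisions = [1.0] * 5
print(interpolated_ap(recalls, precisions, 11))  # 6/11, about 0.545: the recall-0 point adds credit
print(interpolated_ap(recalls, precisions, 40))  # 0.5
```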

When training finishes, the results are in the work_dirs/dv_mvx-fpn_second_secfpn_adamw_2x8_80e_kitti-3d-3class folder, including the log file (.log), the checkpoints (.pth), and a copy of the model config (.py).
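mmcv saves checkpoints as epoch_N.pth (plus a latest.pth link). If you need to pick the newest one programmatically, note that a plain string sort would put epoch_9.pth after epoch_10.pth; this sketch compares the epoch numbers instead (`latest_checkpoint` is a hypothetical helper name):

```python
import re
from pathlib import Path
from typing import Optional


def latest_checkpoint(work_dir: str) -> Optional[Path]:
    """Return the epoch_N.pth with the largest N in work_dir, or None if there is none.

    Assumes mmcv's default checkpoint naming ("epoch_{}.pth").
    """
    best_epoch, best_path = -1, None
    for path in Path(work_dir).glob("epoch_*.pth"):
        match = re.fullmatch(r"epoch_(\d+)\.pth", path.name)
        if match and int(match.group(1)) > best_epoch:
            best_epoch, best_path = int(match.group(1)), path
    return best_path


if __name__ == "__main__":
    print(latest_checkpoint("work_dirs/dv_mvx-fpn_second_secfpn_adamw_2x8_80e_kitti-3d-3class"))
```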

5. Inference

First, list the options accepted by the test script with python tools/test.py -h:

```
usage: test.py [-h] [--out OUT] [--fuse-conv-bn]
               [--gpu-ids GPU_IDS [GPU_IDS ...]] [--gpu-id GPU_ID]
               [--format-only] [--eval EVAL [EVAL ...]] [--show]
               [--show-dir SHOW_DIR] [--gpu-collect] [--tmpdir TMPDIR]
               [--seed SEED] [--deterministic]
               [--cfg-options CFG_OPTIONS [CFG_OPTIONS ...]]
               [--options OPTIONS [OPTIONS ...]]
               [--eval-options EVAL_OPTIONS [EVAL_OPTIONS ...]]
               [--launcher {none,pytorch,slurm,mpi}] [--local_rank LOCAL_RANK]
               config checkpoint

MMDet test (and eval) a model

positional arguments:
  config                test config file path
  checkpoint            checkpoint file

optional arguments:
  -h, --help            show this help message and exit
  --out OUT             output result file in pickle format
  --fuse-conv-bn        Whether to fuse conv and bn, this will slightly
                        increasethe inference speed
  --gpu-ids GPU_IDS [GPU_IDS ...]
                        (Deprecated, please use --gpu-id) ids of gpus to use
                        (only applicable to non-distributed training)
  --gpu-id GPU_ID       id of gpu to use (only applicable to non-distributed
                        testing)
  --format-only         Format the output results without perform evaluation.
                        It isuseful when you want to format the result to a
                        specific format and submit it to the test server
  --eval EVAL [EVAL ...]
                        evaluation metrics, which depends on the dataset,
                        e.g., "bbox", "segm", "proposal" for COCO, and "mAP",
                        "recall" for PASCAL VOC
  --show                show results
  --show-dir SHOW_DIR   directory where results will be saved
  --gpu-collect         whether to use gpu to collect results.
  --tmpdir TMPDIR       tmp directory used for collecting results from
                        multiple workers, available when gpu-collect is not
                        specified
  --seed SEED           random seed
  --deterministic       whether to set deterministic options for CUDNN
                        backend.
  --cfg-options CFG_OPTIONS [CFG_OPTIONS ...]
                        override some settings in the used config, the key-
                        value pair in xxx=yyy format will be merged into
                        config file. If the value to be overwritten is a list,
                        it should be like key="[a,b]" or key=a,b It also
                        allows nested list/tuple values, e.g.
                        key="[(a,b),(c,d)]" Note that the quotation marks are
                        necessary and that no white space is allowed.
  --options OPTIONS [OPTIONS ...]
                        custom options for evaluation, the key-value pair in
                        xxx=yyy format will be kwargs for dataset.evaluate()
                        function (deprecate), change to --eval-options
                        instead.
  --eval-options EVAL_OPTIONS [EVAL_OPTIONS ...]
                        custom options for evaluation, the key-value pair in
                        xxx=yyy format will be kwargs for dataset.evaluate()
                        function
  --launcher {none,pytorch,slurm,mpi}
                        job launcher
  --local_rank LOCAL_RANK
```

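There are two required positional arguments, config and checkpoint: the model config file and the checkpoint produced by training. Other useful options:

- --eval: the evaluation metrics, which depend on the dataset. The examples in the help text ("bbox", "segm", "proposal" for COCO, "mAP", "recall" for PASCAL VOC) are carried over from 2D detection and do not describe KITTI.
- --show: whether to visualize the test results; this requires the open3d library (if it is missing, install it with pip install open3d).
- --show-dir: the directory where the visualized results are saved (--out, by contrast, saves the raw results as a pickle file).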
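Putting the positional arguments and the options above together, a test command can be assembled as in this sketch; it only uses flags that appear in the help text. The default `--eval mAP` is an assumption (it is the metric name the mmdet3d v1.0 docs use for KITTI), and the latest.pth path is hypothetical.

```python
import shlex
from typing import List, Optional, Sequence


def build_test_cmd(config: str, checkpoint: str,
                   eval_metrics: Sequence[str] = ("mAP",),
                   show_dir: Optional[str] = None,
                   out_pkl: Optional[str] = None) -> List[str]:
    """Assemble a tools/test.py argument list from options listed in its help text."""
    cmd = ["python", "tools/test.py", config, checkpoint]
    if eval_metrics:
        cmd += ["--eval", *eval_metrics]   # metric name(s) passed to dataset.evaluate()
    if out_pkl:
        cmd += ["--out", out_pkl]          # raw results as a pickle file
    if show_dir:
        cmd += ["--show-dir", show_dir]    # where visualization results are saved
    return cmd


if __name__ == "__main__":
    work_dir = "work_dirs/dv_mvx-fpn_second_secfpn_adamw_2x8_80e_kitti-3d-3class"
    cmd = build_test_cmd(
        "configs/mvxnet/dv_mvx-fpn_second_secfpn_adamw_2x8_80e_kitti-3d-3class.py",
        f"{work_dir}/latest.pth",  # hypothetical: use whichever checkpoint you want to test
    )
    print(shlex.join(cmd))
```

Run the printed command from the mmdetection3d root, or pass the list directly to `subprocess.run(cmd, check=True)`.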


Others: computing the size of the intermediate point-cloud feature map:

```python
# model settings
voxel_size = [0.05, 0.05, 0.1]
point_cloud_range = [0, -40, -3, 70.4, 40, 1]
```
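As a sketch, the voxel grid implied by these settings is round((max - min) / voxel_size) per axis, which as far as I know is how mmcv's Voxelization layer derives its grid size. The `[41, 1600, 1408]` sparse shape and the 8x BEV downsampling at the end are assumptions based on the SECOND-style sparse middle encoder used in MVXNet's KITTI config; compare them with `pts_middle_encoder` in your config.

```python
from typing import Sequence, Tuple


def voxel_grid_size(point_cloud_range: Sequence[float],
                    voxel_size: Sequence[float]) -> Tuple[int, ...]:
    """Number of voxels along x, y, z: round((max - min) / voxel_size) per axis."""
    mins, maxs = point_cloud_range[:3], point_cloud_range[3:]
    return tuple(round((hi - lo) / v) for lo, hi, v in zip(mins, maxs, voxel_size))


voxel_size = [0.05, 0.05, 0.1]
point_cloud_range = [0, -40, -3, 70.4, 40, 1]

nx, ny, nz = voxel_grid_size(point_cloud_range, voxel_size)
print(nx, ny, nz)          # 1408 1600 40
# Assumption: the sparse encoder gets sparse_shape = [nz + 1, ny, nx] (z, y, x order)
# and downsamples x/y by 8, giving a BEV feature map of (ny // 8) x (nx // 8).
print([nz + 1, ny, nx])    # [41, 1600, 1408]
print(ny // 8, nx // 8)    # 200 176
```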