Meta Learning: Learn to learn

- These are notes on Hung-yi Lee's 2021 ML course

Contents

- Introduction of Meta Learning
  - What is Meta Learning?
  - Meta Learning – Step 1
  - Meta Learning – Step 2
  - Meta Learning – Step 3
  - Meta Learning v.s ML
- Applications
  - Few-shot Image Classification
  - More...
- What is learnable in a learning algorithm?
  - Learning to initialize
    - Model-Agnostic Meta-Learning (MAML)
    - Reptile
    - MAML++
  - Optimizer
  - Network Architecture Search (NAS)
  - Data Processing
    - Data Augmentation
    - Sample Reweighting
- Learning to compare: Metric-based approach
  - Siamese Network
  - $N$-way Few/One-shot Learning
    - Prototypical Network
    - Relation Network
  - Few-shot learning for imaginary data
  - Train + Test as RNN

Introduction of Meta Learning

What is Meta Learning?

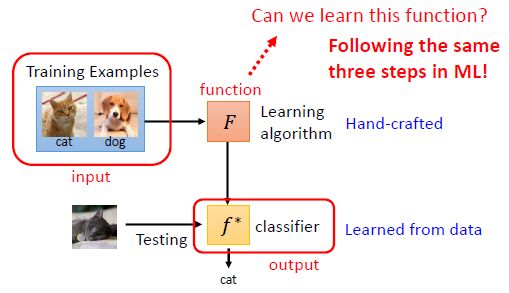

3 steps: (1) Function with unknown; (2) Define loss function; (3) Optimization

Meta Learning – Step 1

- What is learnable in a learning algorithm?

  - e.g. in deep learning: network architecture, initial parameters, learning rate, ... (In meta learning we try to learn some of these, and meta learning methods can be categorized by which component is learnable.)

Meta Learning – Step 2

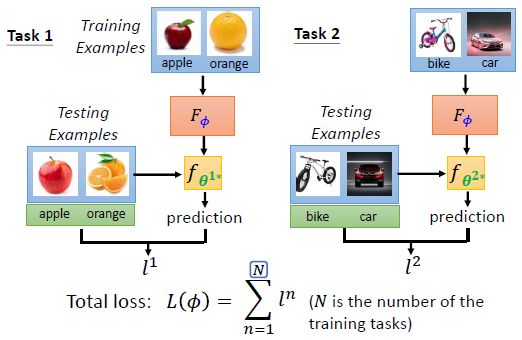

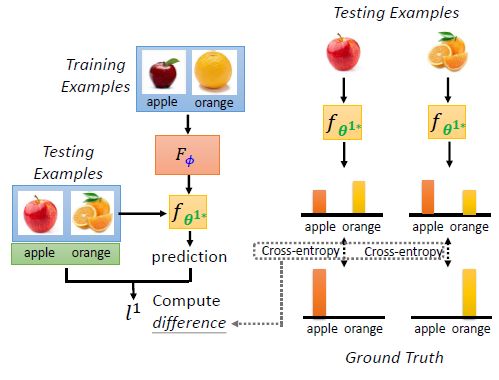

- Define loss function $L(\phi)$ for learning algorithm $F_\phi$

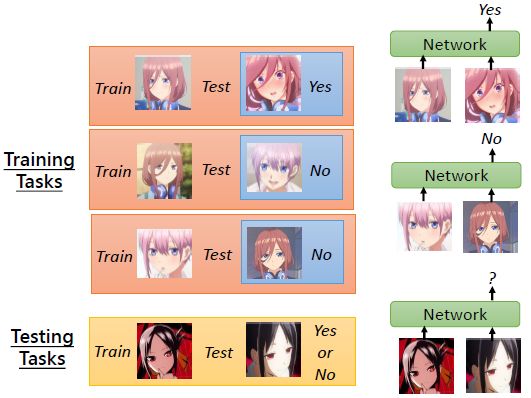

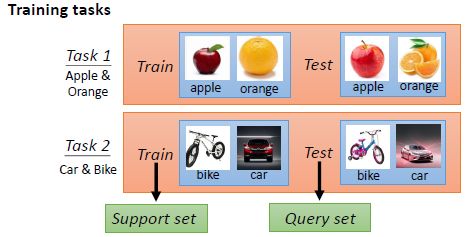

- First, we have a collection of training tasks (the counterpart of training data in ML), which are used to train $F_\phi$

- Across-task training (includes within-task training and testing): for each training task, we apply $F_\phi$ to the task's training examples (support set) to learn a model $f_{\theta^*}$, then compute the loss $l$ on the task's testing examples (query set)

$\theta^{1*}$: parameters of the classifier learned by $F_\phi$ using the training examples of task 1
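The across-task loss can be sketched in a few lines of Python. This is only an illustration under assumptions that are not in the lecture: each task is a 1-D regression $y=ax$, the model is $f_\theta(x)=\theta x$, and the learning algorithm $F_\phi$ is a few steps of gradient descent starting from the initialization $\phi$. The names `learn`, `query_loss`, `meta_loss` and the values of `lr` and `steps` are hypothetical.

```python
def learn(phi, support, lr=0.1, steps=5):
    """Within-task training: F_phi runs gradient descent from the initialization phi."""
    theta = phi
    for _ in range(steps):
        # Gradient of the mean squared error of f_theta(x) = theta * x on the support set.
        grad = sum(2 * (theta * x - y) * x for x, y in support) / len(support)
        theta -= lr * grad
    return theta


def query_loss(theta, query):
    """Within-task testing: mean squared error l on the query set."""
    return sum((theta * x - y) ** 2 for x, y in query) / len(query)


def meta_loss(phi, tasks):
    """Across-task loss L(phi): sum of the query losses l^n over all training tasks."""
    return sum(query_loss(learn(phi, support), query) for support, query in tasks)


# Two toy training tasks (y = 2x and y = 3x), each with a support set and a query set.
tasks = [
    ([(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]),
    ([(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)]),
]
print(meta_loss(0.0, tasks), meta_loss(2.5, tasks))
```

In this toy setting an initialization between the two task optima gives a lower $L(\phi)$ than starting from zero, which is exactly the signal Step 3 optimizes.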

Meta Learning – Step 3

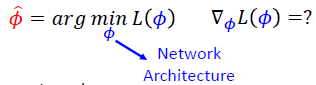

- Find $\phi$ that can minimize $L(\phi)$

$$\phi^*=\arg\min_\phi L(\phi)$$

- If you know how to compute $\frac{\partial L(\phi)}{\partial \phi}$, gradient descent is your friend.

- What if $L(\phi)$ is not differentiable? Use reinforcement learning or an evolutionary algorithm.

Framework

Meta Learning v.s ML

- What you know about ML can usually apply to meta learning

- Overfitting on training tasks $\Rightarrow$ (1) Get more training tasks to improve performance; (2) Task augmentation

- There are also hyperparameters when learning a learning algorithm $\Rightarrow$ We also need development tasks (analogous to the validation set in ML, used to select hyperparameters)

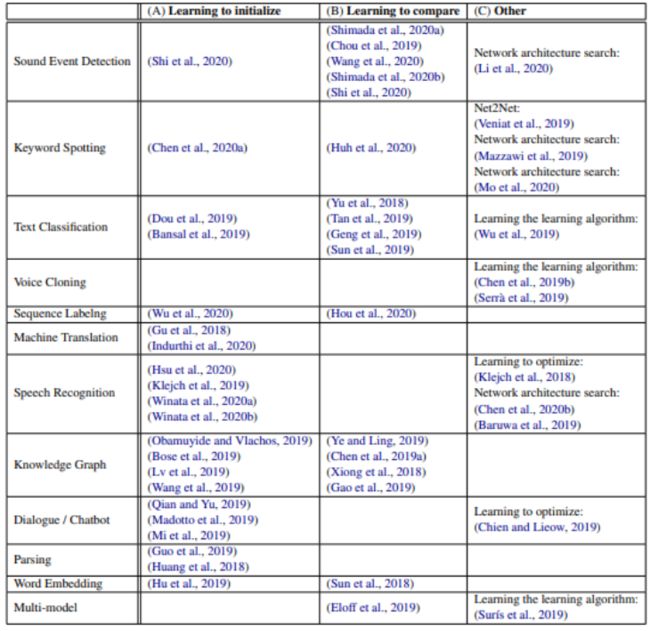

Applications

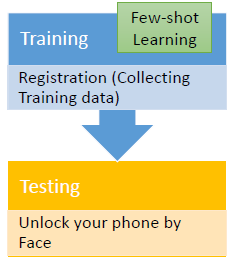

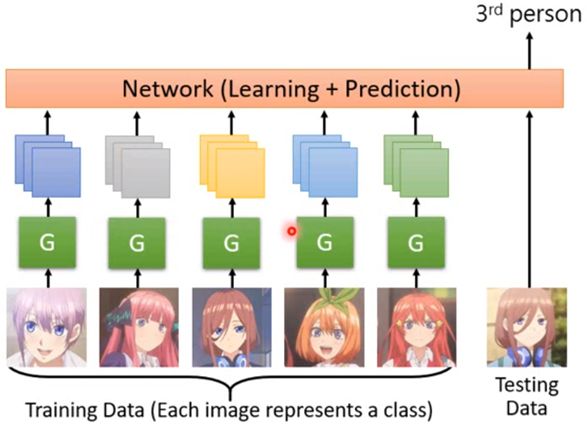

Few-shot Image Classification

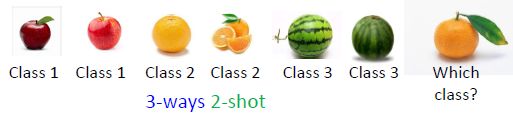

- Few-shot Image Classification: Each class only has a few images.

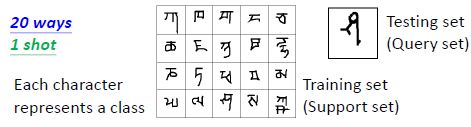

- $N$-way $K$-shot classification: in each task there are $N$ classes, each with $K$ examples.

Omniglot

- In meta learning, you need to prepare many $N$-way $K$-shot tasks as training and testing tasks. The most common choice is the Omniglot dataset: 1623 characters, each with 20 examples

- Split your characters into training and testing characters
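Building $N$-way $K$-shot tasks from such a dataset might look like the sketch below. It uses strings as stand-ins for the character images, and the 1200/423 split, the number of query examples per class, and the function name `sample_task` are assumptions made for illustration.

```python
import random


def sample_task(data, n_way, k_shot, n_query, rng):
    """Sample one N-way K-shot task from `data` (dict: class name -> list of examples)."""
    classes = rng.sample(sorted(data), n_way)
    support, query = [], []
    for label, cls in enumerate(classes):
        # Draw disjoint support and query examples for this class.
        examples = rng.sample(data[cls], k_shot + n_query)
        support += [(x, label) for x in examples[:k_shot]]
        query += [(x, label) for x in examples[k_shot:]]
    return support, query


# Stand-in for Omniglot: 1623 characters with 20 examples each.
data = {f"char{c}": [f"char{c}_img{i}" for i in range(20)] for c in range(1623)}

rng = random.Random(0)
all_chars = sorted(data)
rng.shuffle(all_chars)
train_chars, test_chars = all_chars[:1200], all_chars[1200:]  # hypothetical split sizes

train_data = {c: data[c] for c in train_chars}
support, query = sample_task(train_data, n_way=5, k_shot=1, n_query=5, rng=rng)
print(len(support), len(query))
```

Testing tasks are sampled the same way from `test_chars`, so no character seen during meta-training appears at meta-test time.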

More…

What is learnable in a learning algorithm?

Learning to initialize

Learn how to initialize the network parameters

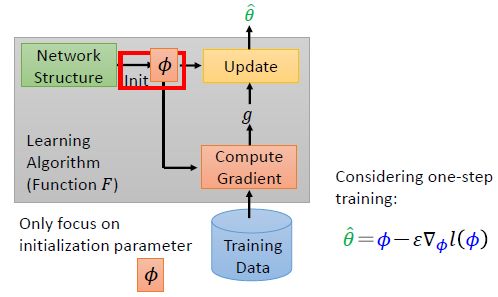

Model-Agnostic Meta-Learning (MAML)

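MAML is pronounced like "mammal"

- When training on a training task, the parameters are updated only once: $\hat\theta=\phi-\varepsilon\nabla_\phi l(\phi)$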

Gradient descent

- MAML updates $\phi$ by gradient descent, so we need the gradient $\nabla_\phi L(\phi)$:

$$\nabla_\phi L(\phi)=\nabla_\phi \sum_{n=1}^N l^n(\hat \theta^n)=\sum_{n=1}^N\nabla_\phi l^n(\hat \theta^n)$$

By the chain rule,

$$\frac{\partial l(\hat \theta)}{\partial\phi_i}=\sum_j \frac{\partial l(\hat \theta)}{\partial\hat \theta_j}\frac{\partial \hat \theta_j}{\partial\phi_i}$$

so all that remains is to compute $\frac{\partial \hat \theta_j}{\partial\phi_i}$ (using a first-order approximation, which drops the second-derivative term):

$$\begin{aligned}\frac{\partial\hat \theta_j}{\partial\phi_i}&=\frac{\partial\left(\phi_j-\varepsilon\frac{\partial l(\phi)}{\partial\phi_j}\right)}{\partial\phi_i}=\frac{\partial\phi_j}{\partial\phi_i}-\varepsilon\frac{\partial^2 l(\phi)}{\partial\phi_j\partial\phi_i} \\&\approx\begin{cases}0&i\neq j\\1&i=j\end{cases}\end{aligned}$$

Therefore

$$\frac{\partial l(\hat \theta)}{\partial\phi_i}=\sum_j \frac{\partial l(\hat \theta)}{\partial\hat \theta_j}\frac{\partial \hat \theta_j}{\partial\phi_i}\approx\frac{\partial l(\hat \theta)}{\partial\hat \theta_i}$$

$$\nabla_\phi L(\phi)\approx\sum_{n=1}^N\nabla_{\hat \theta} l^n(\hat \theta^n)$$
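To see what the first-order approximation discards, consider a worked one-dimensional example. The quadratic loss and the constant $a$ are assumptions chosen purely for illustration:

$$l(\phi)=\tfrac{1}{2}a\phi^2 \;\Rightarrow\; \hat\theta=\phi-\varepsilon a\phi=(1-\varepsilon a)\phi,\qquad \frac{\partial\hat\theta}{\partial\phi}=1-\varepsilon a\approx 1$$

The exact derivative differs from the approximation by $\varepsilon a$, i.e. the inner step size times the curvature of the loss, so the approximation is accurate whenever that product is small.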

MAML – Real Implementation

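- Under this approximation, the gradient used to update $\phi$ points in the same direction as the gradient at $\hat\theta$. We can therefore compute two gradients on each training task: the first moves $\phi$ to $\hat\theta$, and the second moves $\phi^i$ to $\phi^{i+1}$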

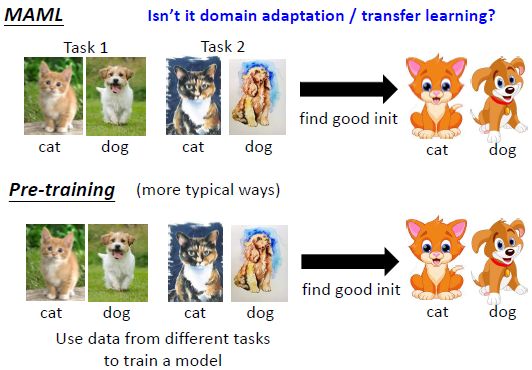

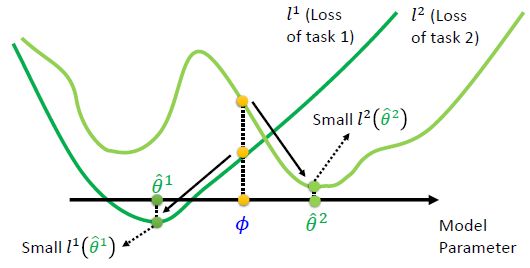
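The two-gradient procedure can be sketched as follows. This is a minimal illustration, not the reference implementation: it assumes a toy 1-D regression model $f_\theta(x)=\theta x$, takes the first gradient on the support set and the second on the query set, and uses hypothetical step sizes `inner_lr` and `outer_lr`.

```python
def grad_mse(theta, examples):
    """Gradient of the mean squared error of f_theta(x) = theta * x with respect to theta."""
    return sum(2 * (theta * x - y) * x for x, y in examples) / len(examples)


def fomaml_step(phi, task, inner_lr=0.05, outer_lr=0.05):
    """One first-order MAML update of the initialization phi on a single training task."""
    support, query = task
    # First gradient: a single inner update moves phi to the task parameters theta_hat.
    theta_hat = phi - inner_lr * grad_mse(phi, support)
    # Second gradient: evaluated at theta_hat but applied to phi (first-order approximation).
    return phi - outer_lr * grad_mse(theta_hat, query)


tasks = [
    ([(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]),  # y = 2x
    ([(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)]),  # y = 3x
]

phi = 0.0
for i in range(200):
    phi = fomaml_step(phi, tasks[i % len(tasks)])
print(phi)
```

In this toy setting the learned initialization settles between the two task optima, from which a single inner step moves most of the way toward either one.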

MAML v.s. Pre-training

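- MAML: we do not care how $\phi$ itself performs on the training tasks; we care how the $\hat\theta^n$ trained from $\phi$ performs (in the lecture's illustration, the initialization $\phi$ does not perform particularly well on either of two tasks, yet after training it reaches the optimum of both)

- Model pre-training: looks for the $\phi$ that performs best across all tasks, which does not guarantee that training from $\phi$ yields a good $\hat\theta^n$ (in the lecture's illustration, the initialization $\phi$ performs well on both tasks, but cannot reach the optimum after training)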

Pre-training: Also known as multi-task learning (baseline of meta)

MAML is good because ……

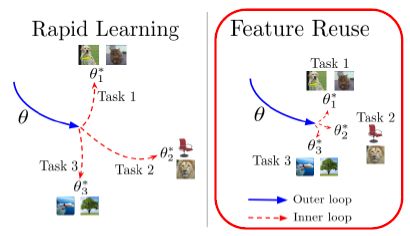

- paper: Rapid Learning or Feature Reuse? Towards Understanding the Effectiveness of MAML

- The paper investigates the following question:

- Is the effectiveness of MAML due to the meta-initialization being primed for rapid learning (large, efficient changes in the representations), i.e. the learned initialization makes it easy for each task to train its way to the optimum, or due to feature reuse, with the meta initialization already containing high quality features, i.e. the learned initialization is already close to the optimum of each task?

- We find that feature reuse is the dominant factor. This leads to the ANIL (Almost No Inner Loop) algorithm, a simplification of MAML where we remove the inner loop for all but the (task-specific) head of a MAML-trained network.

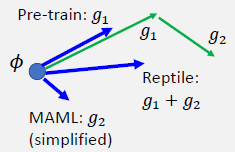

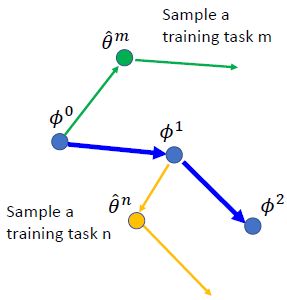

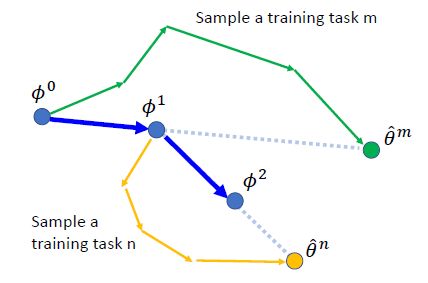

Reptile

- The idea behind Reptile is even simpler than MAML: Reptile allows several parameter updates on training task $m$ to obtain $\hat\theta^m$, and then moves $\phi$ in the direction of $\hat\theta^m$.
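A minimal sketch of this update, again on a toy 1-D regression model $f_\theta(x)=\theta x$. The model, the step sizes, and the number of inner steps are assumptions for illustration, and `reptile_step` is a hypothetical name.

```python
def grad_mse(theta, examples):
    """Gradient of the mean squared error of f_theta(x) = theta * x with respect to theta."""
    return sum(2 * (theta * x - y) * x for x, y in examples) / len(examples)


def reptile_step(phi, examples, inner_lr=0.05, inner_steps=5, outer_lr=0.5):
    """One Reptile update: train on the task for several steps, then move phi toward the result."""
    theta = phi
    for _ in range(inner_steps):
        theta -= inner_lr * grad_mse(theta, examples)
    # Move phi part of the way along the direction (theta_hat - phi).
    return phi + outer_lr * (theta - phi)


tasks = [
    [(1.0, 2.0), (2.0, 4.0)],  # y = 2x
    [(1.0, 3.0), (2.0, 6.0)],  # y = 3x
]

phi = 0.0
for i in range(200):
    phi = reptile_step(phi, tasks[i % len(tasks)])
print(phi)
```

Unlike MAML, no gradient is taken through or at the adapted parameters; only the difference between $\hat\theta^m$ and $\phi$ is used.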

MAML v.s. Reptile v.s. Pre-training

MAML++

- paper: How to train your MAML (MAML++)

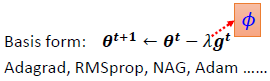

Optimizer

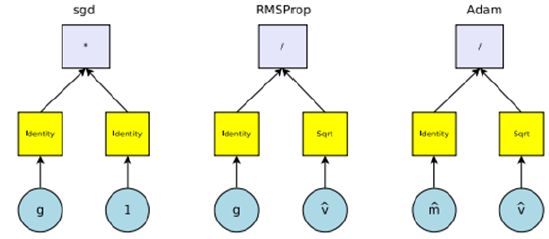

- paper: Learning to learn by gradient descent by gradient descent

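- The three common optimizers SGD, RMSProp, and Adam can all be viewed as instances of the structure shown in the figure, where $g$ is the gradient, $l$ is the learning rate, $\hat v$ is the accumulated squared gradient, and $\hat m$ is the momentum

- We can therefore use the following structure to let the machine learn an optimizer by itself: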

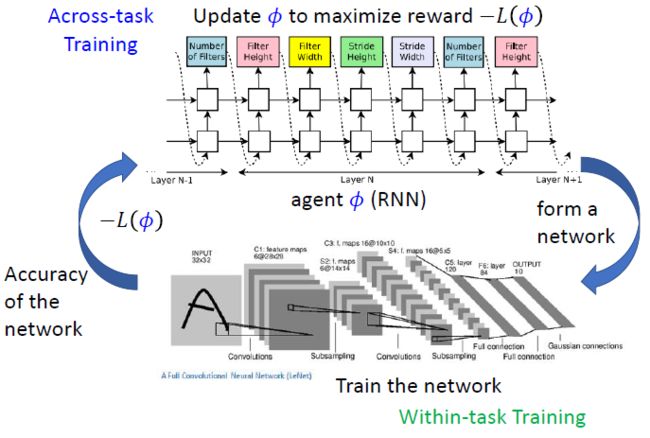
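The shared structure of the three hand-designed optimizers can be written as one update rule, $\theta \leftarrow \theta - l\,\hat m/\sqrt{\hat v}$, with different choices of $\hat m$ and $\hat v$. The sketch below follows the standard definitions of RMSProp and Adam (exponential moving averages, with bias correction for Adam). The scalar parameter, the default hyperparameters, and the name `make_optimizer` are assumptions for illustration. A learned optimizer would replace the hand-written branches with a trainable function of $g$.

```python
import math


def make_optimizer(kind, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """Return an update function (theta, g) -> new theta for SGD, RMSProp, or Adam."""
    state = {"m": 0.0, "v": 0.0, "t": 0}

    def update(theta, g):
        state["t"] += 1
        if kind == "sgd":
            m_hat, v_hat = g, 1.0
        elif kind == "rmsprop":
            state["v"] = beta2 * state["v"] + (1 - beta2) * g * g
            m_hat, v_hat = g, state["v"]
        elif kind == "adam":
            state["m"] = beta1 * state["m"] + (1 - beta1) * g
            state["v"] = beta2 * state["v"] + (1 - beta2) * g * g
            # Bias correction for the zero-initialized moving averages.
            m_hat = state["m"] / (1 - beta1 ** state["t"])
            v_hat = state["v"] / (1 - beta2 ** state["t"])
        else:
            raise ValueError(f"unknown optimizer: {kind}")
        # Shared structure: step along m_hat, scaled by lr / sqrt(v_hat).
        return theta - lr * m_hat / (math.sqrt(v_hat) + eps)

    return update


# First step of each optimizer from theta = 0 with gradient g = -6.
for kind in ("sgd", "rmsprop", "adam"):
    step = make_optimizer(kind, lr=0.05)
    print(kind, step(0.0, -6.0))
```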

Network Architecture Search (NAS)

- The network architecture parameters are not differentiable, so gradient descent cannot be applied directly

- Reinforcement Learning

- Evolution Algorithm

- Esteban Real, et al., Large-Scale Evolution of Image Classifiers, ICML 2017

- Esteban Real, et al., Regularized Evolution for Image Classifier Architecture Search, AAAI, 2019

- Hanxiao Liu, et al., Hierarchical Representations for Efficient Architecture Search, ICLR, 2018

- DARTS: Differentiable Architecture Search (finds a way to make the loss differentiable with respect to the architecture, then optimizes it with gradient descent)

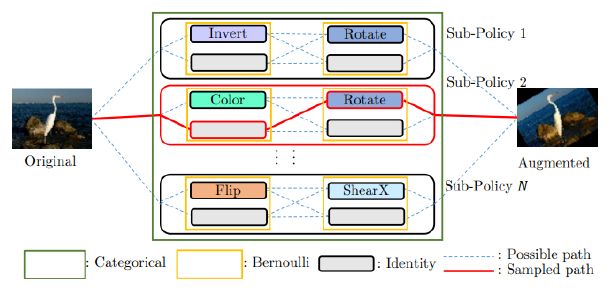
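The core trick in DARTS is a continuous relaxation: instead of picking one operation per edge, the edge outputs a softmax-weighted mixture of all candidate operations, so the architecture weights can be trained by gradient descent. The sketch below shows only this mixing and the final discretization on scalars. The candidate operations and the names `mixed_op` and `discretize` are assumptions for illustration, not the paper's search space.

```python
import math


def softmax(alphas):
    """Numerically stable softmax over a list of architecture parameters."""
    top = max(alphas)
    exps = [math.exp(a - top) for a in alphas]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical candidate operations on one edge of the network.
candidate_ops = [
    lambda x: x,             # identity / skip connection
    lambda x: max(0.0, x),   # ReLU
    lambda x: math.tanh(x),  # tanh
]


def mixed_op(x, alphas):
    """Continuous relaxation: softmax-weighted sum of all candidate operations."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, candidate_ops))


def discretize(alphas):
    """After the search, keep only the operation with the largest architecture weight."""
    return max(range(len(alphas)), key=lambda k: alphas[k])


print(mixed_op(2.0, [0.0, 0.0, 0.0]), discretize([0.1, 2.0, -1.0]))
```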

Data Processing

Data Augmentation

- paper:

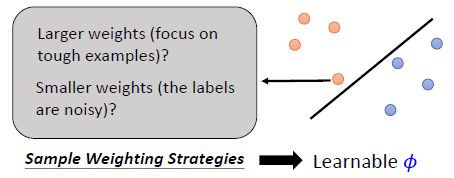

Sample Reweighting

- paper:

- Meta-Weight-Net: Learning an Explicit Mapping For Sample Weighting

- Learning to Reweight Examples for Robust Deep Learning

Learning to compare: Metric-based approach

- paper: Meta-Learning with Latent Embedding Optimization

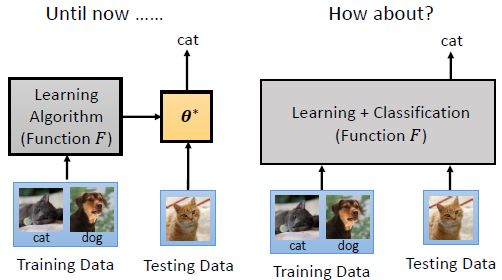

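- Instead of learning one component of gradient descent, this approach abandons the gradient descent framework altogether: the learning algorithm reads in the training data and the testing data and directly outputs the results for the testing data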

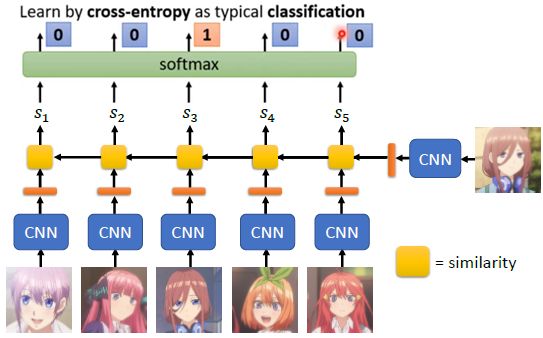

Siamese Network

Also known as a "twin network"

Face Verification

Siamese Network

What kind of distance should we use?

- SphereFace: Deep Hypersphere Embedding for Face Recognition

- Additive Margin Softmax for Face Verification

- ArcFace: Additive Angular Margin Loss for Deep Face Recognition

Triplet loss (each training task contains 1 training image and 2 testing images (positive + negative))

- Deep Metric Learning using Triplet Network

- FaceNet: A Unified Embedding for Face Recognition and Clustering
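A triplet loss can be sketched as a hinge on the difference between two distances: the anchor (the training image) should be closer to the positive than to the negative by at least a margin. The Euclidean distance, the margin value, and the 2-D embeddings below are assumptions for illustration. The cited papers differ in details such as using squared distances.

```python
import math


def distance(u, v):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Zero once the negative is at least `margin` farther from the anchor than the positive."""
    return max(0.0, distance(anchor, positive) - distance(anchor, negative) + margin)


anchor, positive = [0.0, 0.0], [0.0, 1.0]
print(triplet_loss(anchor, positive, [3.0, 4.0]))  # negative already far away
print(triplet_loss(anchor, positive, [0.0, 1.5]))  # negative too close to the anchor
```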

$N$-way Few/One-shot Learning

Prototypical Network

- paper: Prototypical Networks for Few-shot Learning

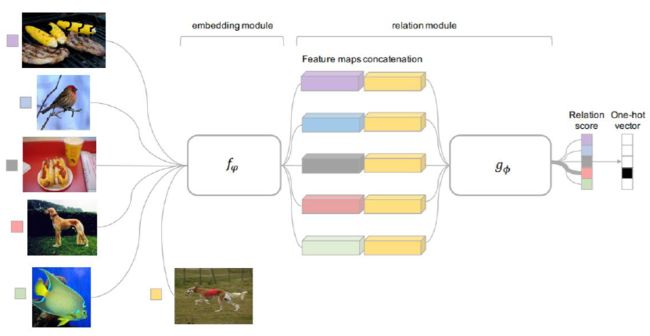

Relation Network

- paper: Learning to Compare: Relation Network for Few-Shot Learning

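- A Relation Network first extracts embeddings of the training samples and the testing sample, then concatenates the testing sample's embedding with the embedding of each training sample, and feeds the result to a subsequent network to obtain a relation score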
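The data flow described above can be sketched as follows. Both modules are untrained stand-ins and entirely hypothetical: in the paper the embedding and relation modules are learned networks, whereas here `embed` is a fixed linear map and the relation module is a single linear layer with a sigmoid and made-up weights.

```python
import math


def embed(x):
    """Stand-in embedding module (a real one would be a learned network)."""
    return [x[0] + x[1], x[0] - x[1]]


def relation_score(pair, weights, bias):
    """Stand-in relation module: one linear layer plus a sigmoid, giving a score in (0, 1)."""
    z = sum(w * p for w, p in zip(weights, pair)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def relation_scores(support, query, weights, bias):
    """Score the query against every support sample."""
    q = embed(query)
    # Concatenate each support embedding with the query embedding, then score the pair.
    return [relation_score(embed(s) + q, weights, bias) for s in support]


support = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # one sample per class (3-way 1-shot)
weights, bias = [0.5, -0.2, 0.1, 0.3], 0.0      # hypothetical, untrained parameters
scores = relation_scores(support, [0.9, 0.1], weights, bias)
predicted_class = max(range(len(scores)), key=lambda k: scores[k])
print(scores, predicted_class)
```

The query is assigned to the class whose support sample receives the highest relation score.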

Few-shot learning for imaginary data

- paper: Low-Shot Learning from Imaginary Data

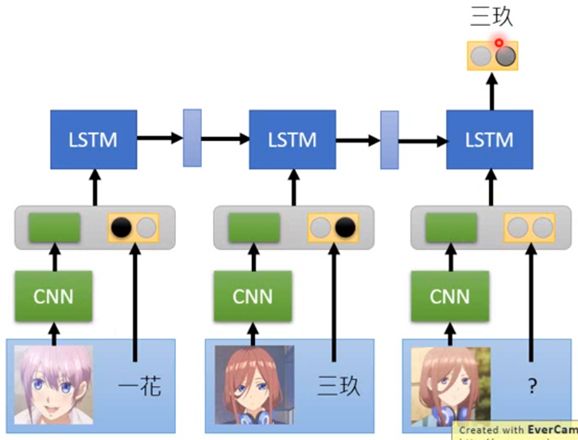

Train + Test as RNN

General LSTM does not work …

- A Simple Neural Attentive Meta-Learner (SNAIL)

- One-shot Learning with Memory-Augmented Neural Networks (MANN)