【DropBlock】《DropBlock: A regularization method for convolutional networks》

NIPS-2018

Table of Contents

- 1 Background and Motivation

- 2 Related Work

- 3 Advantages / Contributions

- 4 DropBlock

- 5 Experiments

  - 5.1 ImageNet Classification

    - 5.1.1 DropBlock in ResNet-50

    - 5.1.2 DropBlock in AmoebaNet

  - 5.2 Experimental Analysis

  - 5.3 Object Detection in COCO

  - 5.4 Semantic Segmentation in PASCAL VOC

- 6 Conclusion (own)

1 Background and Motivation

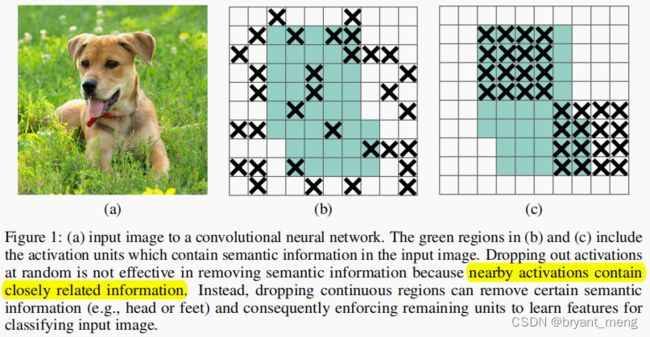

The drawback of dropout: although it is widely used as a regularization technique for fully connected layers, it is often less effective for convolutional layers.

The reason is that activation units in convolutional layers are spatially correlated, so information can still flow through convolutional networks despite dropout.

Thus a structured form of dropout is needed to regularize convolutional networks.

The authors propose DropBlock, a form of structured dropout in which units in a contiguous region of a feature map are dropped together.

Because whole regions are removed, the network must look elsewhere for evidence to fit the data.

2 Related Work

- DropConnect

- Maxout

- Stochastic Depth

- DropPath

- Scheduled-DropPath

- Shake-Shake regularization

- ShakeDrop regularization

The basic principle behind these methods is to inject noise into neural networks so that they do not overfit the training data.

Our method is inspired by Cutout (for background on Cutout, see 【Cutout】《Improved Regularization of Convolutional Neural Networks with Cutout》).

DropBlock generalizes Cutout by applying Cutout at every feature map in a convolutional network.
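To make the relationship concrete, here is a minimal Cutout sketch on a single 2-D map, written with plain Python lists so it has no dependencies. The function name `cutout`, the uniform choice of the square's center, and the clipping at the borders are assumptions of this sketch, not details taken from either paper. DropBlock differs in that the masking is applied to every feature map inside the network, and the number of blocks is random (controlled by $\gamma$) instead of exactly one.

```python
import random


def cutout(image, size, rng=random):
    """Return a copy of a 2-D map with one size x size square zeroed.

    The square's center is sampled uniformly over the whole map, so near
    the borders the square is clipped and covers fewer than size*size cells.
    """
    h, w = len(image), len(image[0])
    cy, cx = rng.randrange(h), rng.randrange(w)
    top, left = cy - size // 2, cx - size // 2
    out = [row[:] for row in image]  # leave the input untouched
    for y in range(max(0, top), min(h, top + size)):
        for x in range(max(0, left), min(w, left + size)):
            out[y][x] = 0.0
    return out


if __name__ == "__main__":
    img = [[1.0] * 8 for _ in range(8)]
    masked = cutout(img, 4, random.Random(0))
    for row in masked:
        print(" ".join(f"{v:.0f}" for v in row))
```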

3 Advantages / Contributions

Proposes DropBlock, a structured regularization method that works better than dropout in regularizing convolutional networks.

4 DropBlock

The paper also finds that linearly increasing the number of dropped units over time during training works better (see Scheduled DropBlock below).

Its main difference from dropout is that it drops contiguous regions from a feature map of a layer instead of dropping out independent random units.
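One way to see why independent drops leak information while contiguous drops do not is to count how many dropped units still have at least one kept 4-neighbor, a crude proxy for "a correlated neighbor can still carry the same information". This is an illustration under that assumption, not an experiment from the paper; `dropout_mask`, `block_mask` and `fraction_dropped_with_kept_neighbor` are hypothetical helper names.

```python
import random


def dropout_mask(n, drop_prob, rng):
    """n x n mask with independent drops (1 = kept, 0 = dropped)."""
    return [[0 if rng.random() < drop_prob else 1 for _ in range(n)] for _ in range(n)]


def block_mask(n, block_size, top, left):
    """n x n mask with one contiguous block_size x block_size hole."""
    mask = [[1] * n for _ in range(n)]
    for y in range(top, top + block_size):
        for x in range(left, left + block_size):
            mask[y][x] = 0
    return mask


def fraction_dropped_with_kept_neighbor(mask):
    """Share of dropped units that still have a kept 4-neighbor."""
    n = len(mask)
    dropped = with_neighbor = 0
    for y in range(n):
        for x in range(n):
            if mask[y][x]:
                continue  # unit is kept, skip it
            dropped += 1
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                yy, xx = y + dy, x + dx
                if 0 <= yy < n and 0 <= xx < n and mask[yy][xx]:
                    with_neighbor += 1
                    break
    return with_neighbor / dropped if dropped else 0.0


if __name__ == "__main__":
    rng = random.Random(0)
    print("dropout  :", fraction_dropped_with_kept_neighbor(dropout_mask(14, 0.25, rng)))
    print("dropblock:", fraction_dropped_with_kept_neighbor(block_mask(14, 7, 3, 3)))
```

On a 14 × 14 map, nearly every unit removed by independent dropout keeps a live neighbor, whereas for a single 7 × 7 block only the 24 border units do (24 of 49), so the 25 interior units are cut off entirely.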

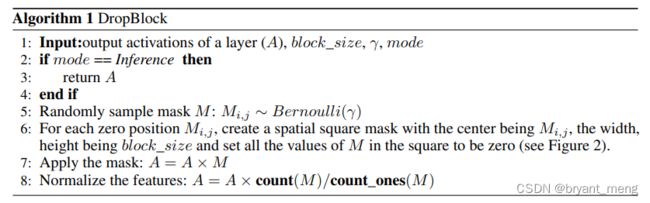

Algorithm

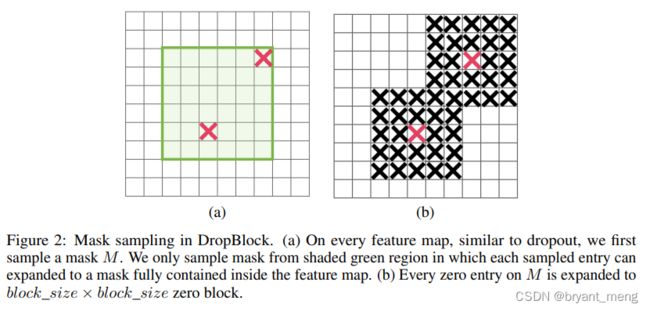

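Illustration (Figure 2)

First, sample the mask $M$ over the green region in Figure 2a. The block centers $M_{i,j}$ (red X; the paper calls them the zero entries of $M$) are chosen independently with probability $\gamma$, i.e. $M_{i,j} \sim \mathrm{Bernoulli}(\gamma)$. Each center is then expanded into a square block of side length block_size (black X), and every position covered by a block is set to 0.

To guarantee that every sampled $M_{i,j}$ can be expanded into a complete square inside the feature map, the paper samples centers only within the green region, whose side length is feat_size - block_size + 1.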
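The sampling and masking steps above can be sketched for a single 2-D feature map as follows. This is a minimal pure-Python sketch, not the authors' implementation: it assumes a square feat_size × feat_size map, takes $\gamma$ as given, and indexes each block by its top-left corner inside the green region (for odd block_size this is equivalent to sampling the center). The final rescaling by count(M) / count_ones(M) follows the normalization step of Algorithm 1 in the paper; `dropblock_2d` is a hypothetical name.

```python
import random


def dropblock_2d(feature, block_size, gamma, training=True, rng=random):
    """DropBlock on one square 2-D feature map given as a list of lists."""
    n = len(feature)  # assumed square: feat_size x feat_size
    if not training or gamma == 0.0:
        return [row[:] for row in feature]  # identity at inference
    valid = n - block_size + 1  # side of the green center region
    mask = [[1] * n for _ in range(n)]
    for i in range(valid):
        for j in range(valid):
            # With probability gamma, (i, j) becomes the top-left corner
            # of a dropped block (equivalent to its center for odd block_size).
            if rng.random() < gamma:
                for y in range(i, i + block_size):
                    for x in range(j, j + block_size):
                        mask[y][x] = 0
    ones = sum(map(sum, mask))
    if ones == 0:  # everything dropped: nothing left to rescale
        return [[0.0] * n for _ in range(n)]
    scale = (n * n) / ones  # count(M) / count_ones(M)
    return [[feature[y][x] * mask[y][x] * scale for x in range(n)] for y in range(n)]


if __name__ == "__main__":
    feat = [[1.0] * 8 for _ in range(8)]
    out = dropblock_2d(feat, block_size=3, gamma=0.1, rng=random.Random(0))
    for row in out:
        print(" ".join(f"{v:.2f}" for v in row))
```

With block_size = 1 every position is an independent Bernoulli($\gamma$) drop, which is plain dropout; with block_size = feat_size there is a single candidate position, so with probability $\gamma$ the whole map is zeroed, the SpatialDropout-like case.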

两个要配置的参数

-

block_size,所有特征图上 block_size 大小固定

DropBlock resembles dropout when block_size = 1 and resembles SpatialDropout when block_size covers the full feature map.(通道被 mask 了) -

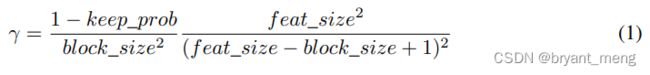

γ \gamma γ,

其中 keep_prob 的含义, keep every activation unit with the probability of keep_prob,实验中被设置为了 between 0.75 and 0.95下面看看 γ \gamma γ 的推导过程,来自DropBlock

- Total number of units to drop: $(1-keep\_prob) \times feat\_size^2$

- Area of the center region (green box): $(feat\_size - block\_size + 1)^2$

- Expected number of centers (red X): $(feat\_size - block\_size + 1)^2 \times \gamma$

- Total masked area (black X): $(feat\_size - block\_size + 1)^2 \times \gamma \times block\_size^2$

Setting $(1-keep\_prob) \times feat\_size^2 = (feat\_size - block\_size + 1)^2 \times \gamma \times block\_size^2$ and solving for $\gamma$ gives

$$\gamma = \frac{1-keep\_prob}{block\_size^2} \times \frac{feat\_size^2}{(feat\_size - block\_size + 1)^2}$$

Of course, the black-X blocks can overlap, so this is only a rough estimate ("The main nuance of DropBlock is that there will be some overlapped in the dropped blocks, so the above equation is only an approximation.").
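The formula can be checked numerically. The sketch below (plain Python; `compute_gamma` and `empirical_drop_rate` are hypothetical names, and blocks are indexed by their top-left corner as an assumption) computes $\gamma$ and then estimates by Monte Carlo the fraction of units that actually get dropped. For keep_prob = 0.75, block_size = 7 and feat_size = 14, $\gamma = \frac{0.25}{49} \times \frac{196}{64} = 0.015625$. Because blocks overlap, the measured drop rate should come out noticeably below the 0.25 target, which is the approximation noted above. For block_size = 1 the formula reduces to $\gamma = 1 - keep\_prob$, the ordinary dropout rate.

```python
import random


def compute_gamma(keep_prob, block_size, feat_size):
    """gamma from the approximation above (ignores block overlap)."""
    valid = feat_size - block_size + 1  # side of the green center region
    return (1.0 - keep_prob) / block_size ** 2 * feat_size ** 2 / valid ** 2


def empirical_drop_rate(keep_prob, block_size, feat_size, trials=5000, seed=0):
    """Monte Carlo estimate of the fraction of units actually dropped."""
    rng = random.Random(seed)
    gamma = compute_gamma(keep_prob, block_size, feat_size)
    valid = feat_size - block_size + 1
    dropped = 0
    for _ in range(trials):
        mask = [[1] * feat_size for _ in range(feat_size)]
        for i in range(valid):
            for j in range(valid):
                if rng.random() < gamma:  # (i, j): top-left corner of a block
                    for y in range(i, i + block_size):
                        for x in range(j, j + block_size):
                            mask[y][x] = 0
        dropped += feat_size * feat_size - sum(map(sum, mask))
    return dropped / (trials * feat_size * feat_size)


if __name__ == "__main__":
    print("gamma    :", compute_gamma(0.75, 7, 14))
    print("drop rate:", empirical_drop_rate(0.75, 7, 14))
```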

Scheduled DropBlock

Using a fixed keep_prob throughout training does not work well; gradually decreasing keep_prob over time from 1 to the target value is more robust.

The experiments use a linear schedule ("use a linear scheme of decreasing the value of keep_prob").
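A linear schedule could look like the sketch below. The paper only states that keep_prob is decreased linearly from 1 to the target value; the parameter `total_steps` (the step at which the target is reached), the choice to hold the target afterwards, and the name `scheduled_keep_prob` are assumptions of this sketch.

```python
def scheduled_keep_prob(step, total_steps, target_keep_prob):
    """Linearly decrease keep_prob from 1.0 (step 0) to target_keep_prob.

    total_steps is the step at which the target is reached; after that the
    target is held constant.
    """
    if total_steps <= 0:
        return target_keep_prob
    progress = min(max(step / total_steps, 0.0), 1.0)
    return 1.0 - progress * (1.0 - target_keep_prob)


if __name__ == "__main__":
    for step in (0, 25, 50, 75, 100, 150):
        print(step, round(scheduled_keep_prob(step, 100, 0.9), 4))
```

In a training loop, $\gamma$ would then be recomputed from the current keep_prob at every step using the formula for $\gamma$.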

5 Experiments

Datasets

- ILSVRC 2012 classification dataset

- COCO

- PASCAL VOC

5.1 ImageNet Classification

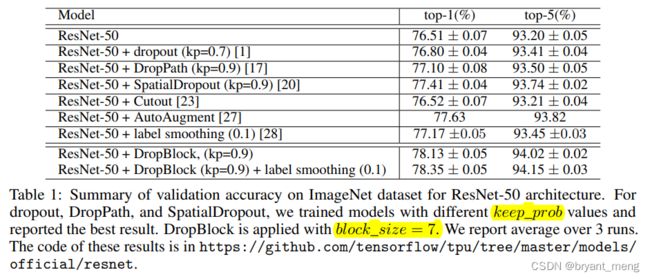

5.1.1 DropBlock in ResNet-50

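DropBlock is applied either only after convolution layers, or after both convolution layers and skip connections.

It is applied either to Group 4 alone or to both Groups 3 and 4 (these presumably correspond to stage 4 and stage 5 of ResNet).

block_size defaults to 7.

Figure 3a shows that DropBlock performs better than dropout.

Figure 3b shows that with scheduled keep_prob the accuracy is higher and more robust to the choice of keep_prob (the peak is maintained over a wider range of keep_prob values).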

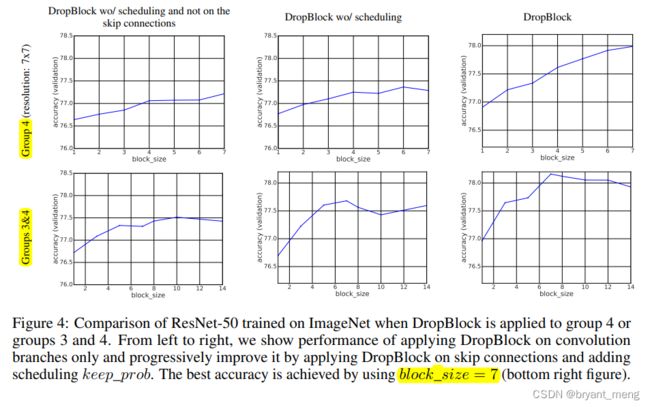

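Figure 4 shows that applying DropBlock to Groups 3 & 4 works better than applying it to Group 4 alone; adding scheduled keep_prob improves the results, and also applying DropBlock to the skip connection branch improves them further. block_size = 7 works best.

The authors criticize SpatialDropout, which "can be too harsh when applying to high resolution feature map on group 3"; this makes sense, since it drops entire feature maps.

As for Cutout, "it does not improve the accuracy on the ImageNet dataset in our experiments."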

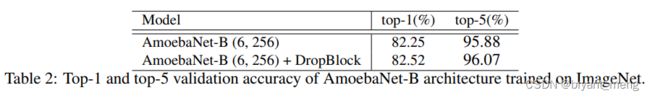

5.1.2 DropBlock in AmoebaNet

5.2 Experimental Analysis

1) DropBlock drops more semantic information

When a model trained without regularization is evaluated with DropBlock applied at inference time, validation accuracy drops quickly as keep_prob decreases.

This suggests that DropBlock removes semantic information and makes classification more difficult.

2) Model trained with DropBlock is more robust

Figure 5 shows that the model trained with block_size = 7 is more robust: it has the benefit of block_size = 1, but not vice versa.

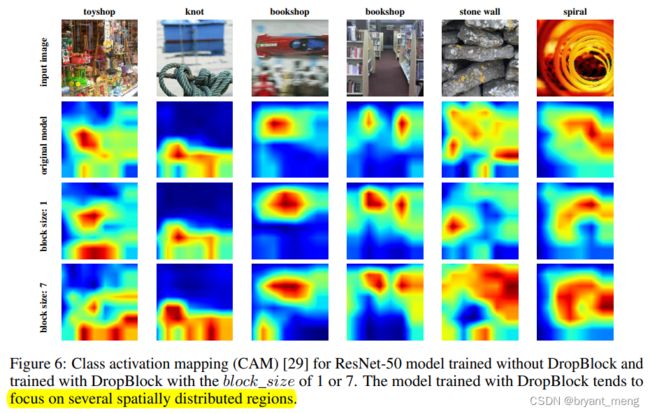

3) DropBlock learns spatially distributed representations

To be honest, I couldn't really tell that the third column shows a bookstore.

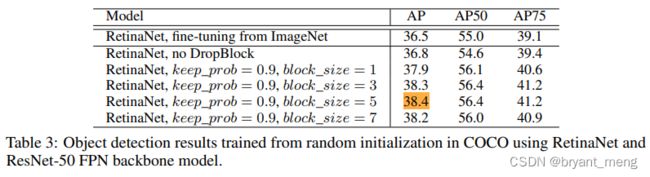

5.3 Object Detection in COCO

5.4 Semantic Segmentation in PASCAL VOC

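6 Conclusion (own)

- DropBlock and dropout are compatible

- Applying DropBlock to the skip connection further improves performance

For code, see DropBlock的原理和实现 ("The Principle and Implementation of DropBlock").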