视觉SLAM十四讲CH7代码解析及课后习题详解

orb_cv.cpp

#include

#include

#include //特征点头文件,处理特征点信息

#include //opencv gui头文件

#include //用于计时的头文件

using namespace std;

using namespace cv;

int main(int argc, char **argv) {

if (argc != 3) {

cout << "usage: feature_extraction img1 img2" << endl;//读取图片文件的用法

return 1;

}

//-- 读取图像

Mat img_1 = imread(argv[1], CV_LOAD_IMAGE_COLOR);//读取彩色图片1 CV_LOAD_IMAGE_COLOR表示返回的是一张彩色图

Mat img_2 = imread(argv[2], CV_LOAD_IMAGE_COLOR);//读取彩色图片2 CV_LOAD_IMAGE_COLOR表示返回的是一张彩色图

assert(img_1.data != nullptr && img_2.data != nullptr); //assert()为断言函数,如果它的条件返回错误,则终止程序执行

//-- 初始化

std::vector keypoints_1, keypoints_2;//图片1 -> 关键点1 图片2 -> 关键点2

Mat descriptors_1, descriptors_2;//描述子

Ptr detector = ORB::create(2000);//可以修改特征点的个数来增加匹配点数量 特征点检测

Ptr descriptor = ORB::create();//描述子

Ptr matcher = DescriptorMatcher::create("BruteForce-Hamming");//特征匹配

//-- 第一步:检测 Oriented FAST 角点位置

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();//检测 Oriented FAST 角点前计时

detector->detect(img_1, keypoints_1);//检测图片1的Oriented FAST 角点

detector->detect(img_2, keypoints_2);//检测图片2的Oriented FAST 角点

//-- 第二步:根据角点位置计算 BRIEF 描述子

descriptor->compute(img_1, keypoints_1, descriptors_1);//计算图片1的描述子

descriptor->compute(img_2, keypoints_2, descriptors_2);//计算图片2的描述子

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();//计算耗时

chrono::duration time_used = chrono::duration_cast>(t2 - t1);//计算检测角点和计算描述子所用的时间

cout << "extract ORB cost = " << time_used.count() << " seconds. " << endl;//输出extract ORB cost =

Mat outimg1;//定义ORB特征显示结果的变量

drawKeypoints(img_1, keypoints_1, outimg1, Scalar::all(-1), DrawMatchesFlags::DEFAULT);//画出图像1的ORB特征点提取结果

imshow("ORB features", outimg1);//显示图像1的ORB特征点提取结果

//-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离

vector matches;//匹配matches

t1 = chrono::steady_clock::now();//计时

matcher->match(descriptors_1, descriptors_2, matches);//描述子1和描述子2进行匹配

t2 = chrono::steady_clock::now();//计时

time_used = chrono::duration_cast>(t2 - t1);//计算耗时

cout << "match ORB cost = " << time_used.count() << " seconds. " << endl;//输出match ORB cost

//-- 第四步:匹配点对筛选

// 计算最小距离和最大距离

auto min_max = minmax_element(matches.begin(), matches.end(),

[](const DMatch &m1, const DMatch &m2) { return m1.distance < m2.distance; });

//minmax_element()为c++中定义的寻找最小值和最大值的函数。

//第3个参数表示比较函数,默认从小到大,可以省略

double min_dist = min_max.first->distance;//将两幅图像的ORB特征点之间的最小距离赋值给min_dist

double max_dist = min_max.second->distance;//将两幅图像的ORB特征点之间的最大距离赋值给max_dist

printf("-- Max dist : %f \n", max_dist);// 输出两幅图像的ORB特征点匹配的最大距离

printf("-- Min dist : %f \n", min_dist);// 输出两幅图像的ORB特征点匹配的最小距离

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限.

std::vector good_matches;//

for (int i = 0; i < descriptors_1.rows; i++)//遍历描述子

{

if (matches[i].distance <= max(2 * min_dist, 30.0)) //不同的结果可以在这里设置

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误 30.0为经验值

{

good_matches.push_back(matches[i]);

}

}

//-- 第五步:绘制匹配结果

Mat img_match;//绘制匹配结果变量

Mat img_goodmatch;//绘制剔除误匹配的匹配结果变量

drawMatches(img_1, keypoints_1, img_2, keypoints_2, matches, img_match);//画出粗匹配匹配结果

drawMatches(img_1, keypoints_1, img_2, keypoints_2, good_matches, img_goodmatch);//画出剔除误匹配的匹配结果

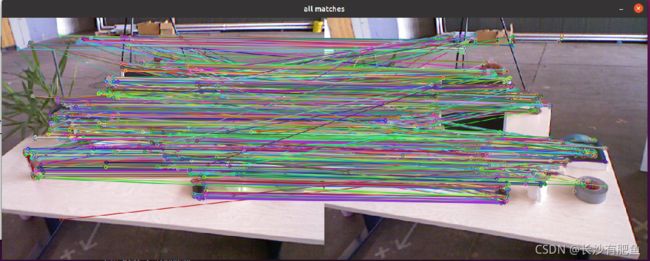

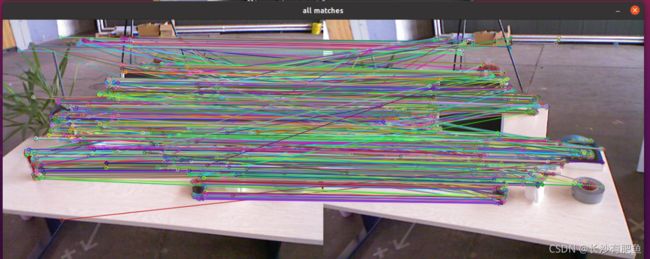

imshow("all matches", img_match);//界面展示粗匹配匹配结果

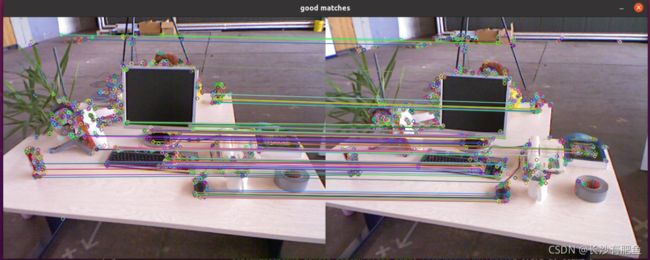

imshow("good matches", img_goodmatch);//界面展示剔除误匹配的匹配结果

waitKey(0);

return 0;

}

CMakeLists.txt如下:

cmake_minimum_required(VERSION 2.8)

project(vo1)

set(CMAKE_BUILD_TYPE "Release")

add_definitions("-DENABLE_SSE")

#set(CMAKE_CXX_FLAGS "-std=c++11 -O2 ${SSE_FLAGS} -msse4")

set(CMAKE_CXX_FLAGS "-std=c++14 -O2 ${SSE_FLAGS} -msse4")

list(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake)

find_package(OpenCV 3 REQUIRED)

find_package(G2O REQUIRED)

find_package(Sophus REQUIRED)

include_directories(

${OpenCV_INCLUDE_DIRS}

${G2O_INCLUDE_DIRS}

${Sophus_INCLUDE_DIRS}

"/usr/local/include/eigen3/"

)

add_executable(orb_cv orb_cv.cpp)

target_link_libraries(orb_cv ${OpenCV_LIBS} fmt)

add_executable(orb_self orb_self.cpp)

target_link_libraries(orb_self ${OpenCV_LIBS} fmt)

# add_executable( pose_estimation_2d2d pose_estimation_2d2d.cpp extra.cpp ) # use this if in OpenCV2

add_executable(pose_estimation_2d2d pose_estimation_2d2d.cpp)

target_link_libraries(pose_estimation_2d2d ${OpenCV_LIBS} fmt)

# # add_executable( triangulation triangulation.cpp extra.cpp) # use this if in opencv2

add_executable(triangulation triangulation.cpp)

target_link_libraries(triangulation ${OpenCV_LIBS} fmt)

add_executable(pose_estimation_3d2d pose_estimation_3d2d.cpp)

target_link_libraries(pose_estimation_3d2d

g2o_core g2o_stuff

${OpenCV_LIBS} fmt)

add_executable(pose_estimation_3d3d pose_estimation_3d3d.cpp)

target_link_libraries(pose_estimation_3d3d

g2o_core g2o_stuff

${OpenCV_LIBS} fmt)执行结果:

执行命令:

./orb_cv 1.png 2.pngextract ORB cost = 0.0350498 seconds.

match ORB cost = 0.00511809 seconds.

-- Max dist : 92.000000

-- Min dist : 4.000000

All matches of points: 2000

Good matches of points: 370!

修改:

if (matches[i].distance <= max(5 * min_dist, 10.0)) //不同的结果可以在这里设置得到的结果如下:

extract ORB cost = 0.0349625 seconds.

match ORB cost = 0.00639175 seconds.

-- Max dist : 92.000000

-- Min dist : 4.000000

All matches of points: 2000

Good matches of points: 134!

orb_self.cpp

#include

#include

#include

#include

using namespace std;

// global variables

string first_file = "./1.png";//图片1的路径

string second_file = "./2.png";//图片2的路径

// 32 bit unsigned int, will have 8, 8x32=256

typedef vector DescType; // Descriptor type 描述子类型

/**

* compute descriptor of orb keypoints

* @param img input image

* @param keypoints detected fast keypoints

* @param descriptors descriptors

*

* NOTE: if a keypoint goes outside the image boundary (8 pixels), descriptors will not be computed and will be left as

* empty

*/

void ComputeORB(const cv::Mat &img, vector &keypoints, vector &descriptors);//函数ComputeORB 输入图像和Fast角点,输出描述子

/**

* brute-force match two sets of descriptors

* @param desc1 the first descriptor

* @param desc2 the second descriptor

* @param matches matches of two images

*/

void BfMatch(const vector &desc1, const vector &desc2, vector &matches);//函数BfMatch 输入描述子desc1和描述子desc2,输出匹配对matches

int main(int argc, char **argv) {

// load image

cv::Mat first_image = cv::imread(first_file, 0);//0表示返回一张灰度图 返回第一张图像的灰度图

cv::Mat second_image = cv::imread(second_file, 0);//0表示返回一张灰度图 返回第二张图像的灰度图

assert(first_image.data != nullptr && second_image.data != nullptr);//assert()表示断言函数,条件为假则程序停止执行

// detect FAST keypoints1 using threshold=40

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();//计时开始

vector keypoints1;//关键点 第一张图像

cv::FAST(first_image, keypoints1, 40);//40表示设定的一个灰度阈值

vector descriptor1;//描述子1

ComputeORB(first_image, keypoints1, descriptor1);//调用ComputeORB函数

// same for the second

vector keypoints2;//关键点 第二张图像

vector descriptor2;//描述子2

cv::FAST(second_image, keypoints2, 40);//40表示设定的一个灰度阈值

ComputeORB(second_image, keypoints2, descriptor2);//调用ComputeORB函数

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();//计时结束

chrono::duration time_used = chrono::duration_cast>(t2 - t1);//计算ORB提取所花费的时间

cout << "extract ORB cost = " << time_used.count() << " seconds. " << endl;//输出ORB提取所花费的时间

// find matches

vector matches;//匹配matches

t1 = chrono::steady_clock::now();//计时开始

BfMatch(descriptor1, descriptor2, matches);//调用BfMatch函数

t2 = chrono::steady_clock::now();//计时结束

time_used = chrono::duration_cast>(t2 - t1);//计算耗时

cout << "match ORB cost = " << time_used.count() << " seconds. " << endl;//输出ORB匹配的用时

cout << "matches: " << matches.size() << endl;//输出匹配点的数目

// plot the matches

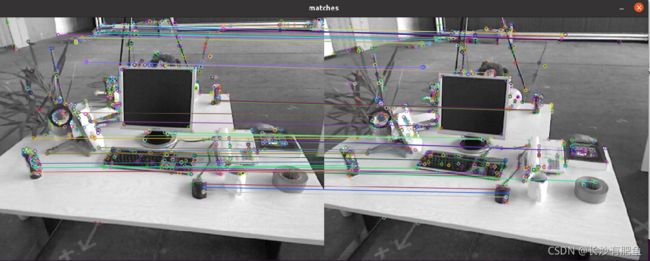

cv::Mat image_show;

cv::drawMatches(first_image, keypoints1, second_image, keypoints2, matches, image_show);//画出两幅图像间的匹配图

cv::imshow("matches", image_show);//界面展示匹配结果

cv::imwrite("matches.png", image_show);//在build文件中写入匹配结果图

cv::waitKey(0);

cout << "done." << endl;

return 0;

}

// -------------------------------------------------------------------------------------------------- //

// ORB pattern

//确定二进制描述子Rotated BRIEF中每一位上是0还是1时,两个像素点的选择;32*32图像块

int ORB_pattern[256 * 4] = {

8, -3, 9, 5/*mean (0), correlation (0)*/,

4, 2, 7, -12/*mean (1.12461e-05), correlation (0.0437584)*/,

-11, 9, -8, 2/*mean (3.37382e-05), correlation (0.0617409)*/,

7, -12, 12, -13/*mean (5.62303e-05), correlation (0.0636977)*/,

2, -13, 2, 12/*mean (0.000134953), correlation (0.085099)*/,

1, -7, 1, 6/*mean (0.000528565), correlation (0.0857175)*/,

-2, -10, -2, -4/*mean (0.0188821), correlation (0.0985774)*/,

-13, -13, -11, -8/*mean (0.0363135), correlation (0.0899616)*/,

-13, -3, -12, -9/*mean (0.121806), correlation (0.099849)*/,

10, 4, 11, 9/*mean (0.122065), correlation (0.093285)*/,

-13, -8, -8, -9/*mean (0.162787), correlation (0.0942748)*/,

-11, 7, -9, 12/*mean (0.21561), correlation (0.0974438)*/,

7, 7, 12, 6/*mean (0.160583), correlation (0.130064)*/,

-4, -5, -3, 0/*mean (0.228171), correlation (0.132998)*/,

-13, 2, -12, -3/*mean (0.00997526), correlation (0.145926)*/,

-9, 0, -7, 5/*mean (0.198234), correlation (0.143636)*/,

12, -6, 12, -1/*mean (0.0676226), correlation (0.16689)*/,

-3, 6, -2, 12/*mean (0.166847), correlation (0.171682)*/,

-6, -13, -4, -8/*mean (0.101215), correlation (0.179716)*/,

11, -13, 12, -8/*mean (0.200641), correlation (0.192279)*/,

4, 7, 5, 1/*mean (0.205106), correlation (0.186848)*/,

5, -3, 10, -3/*mean (0.234908), correlation (0.192319)*/,

3, -7, 6, 12/*mean (0.0709964), correlation (0.210872)*/,

-8, -7, -6, -2/*mean (0.0939834), correlation (0.212589)*/,

-2, 11, -1, -10/*mean (0.127778), correlation (0.20866)*/,

-13, 12, -8, 10/*mean (0.14783), correlation (0.206356)*/,

-7, 3, -5, -3/*mean (0.182141), correlation (0.198942)*/,

-4, 2, -3, 7/*mean (0.188237), correlation (0.21384)*/,

-10, -12, -6, 11/*mean (0.14865), correlation (0.23571)*/,

5, -12, 6, -7/*mean (0.222312), correlation (0.23324)*/,

5, -6, 7, -1/*mean (0.229082), correlation (0.23389)*/,

1, 0, 4, -5/*mean (0.241577), correlation (0.215286)*/,

9, 11, 11, -13/*mean (0.00338507), correlation (0.251373)*/,

4, 7, 4, 12/*mean (0.131005), correlation (0.257622)*/,

2, -1, 4, 4/*mean (0.152755), correlation (0.255205)*/,

-4, -12, -2, 7/*mean (0.182771), correlation (0.244867)*/,

-8, -5, -7, -10/*mean (0.186898), correlation (0.23901)*/,

4, 11, 9, 12/*mean (0.226226), correlation (0.258255)*/,

0, -8, 1, -13/*mean (0.0897886), correlation (0.274827)*/,

-13, -2, -8, 2/*mean (0.148774), correlation (0.28065)*/,

-3, -2, -2, 3/*mean (0.153048), correlation (0.283063)*/,

-6, 9, -4, -9/*mean (0.169523), correlation (0.278248)*/,

8, 12, 10, 7/*mean (0.225337), correlation (0.282851)*/,

0, 9, 1, 3/*mean (0.226687), correlation (0.278734)*/,

7, -5, 11, -10/*mean (0.00693882), correlation (0.305161)*/,

-13, -6, -11, 0/*mean (0.0227283), correlation (0.300181)*/,

10, 7, 12, 1/*mean (0.125517), correlation (0.31089)*/,

-6, -3, -6, 12/*mean (0.131748), correlation (0.312779)*/,

10, -9, 12, -4/*mean (0.144827), correlation (0.292797)*/,

-13, 8, -8, -12/*mean (0.149202), correlation (0.308918)*/,

-13, 0, -8, -4/*mean (0.160909), correlation (0.310013)*/,

3, 3, 7, 8/*mean (0.177755), correlation (0.309394)*/,

5, 7, 10, -7/*mean (0.212337), correlation (0.310315)*/,

-1, 7, 1, -12/*mean (0.214429), correlation (0.311933)*/,

3, -10, 5, 6/*mean (0.235807), correlation (0.313104)*/,

2, -4, 3, -10/*mean (0.00494827), correlation (0.344948)*/,

-13, 0, -13, 5/*mean (0.0549145), correlation (0.344675)*/,

-13, -7, -12, 12/*mean (0.103385), correlation (0.342715)*/,

-13, 3, -11, 8/*mean (0.134222), correlation (0.322922)*/,

-7, 12, -4, 7/*mean (0.153284), correlation (0.337061)*/,

6, -10, 12, 8/*mean (0.154881), correlation (0.329257)*/,

-9, -1, -7, -6/*mean (0.200967), correlation (0.33312)*/,

-2, -5, 0, 12/*mean (0.201518), correlation (0.340635)*/,

-12, 5, -7, 5/*mean (0.207805), correlation (0.335631)*/,

3, -10, 8, -13/*mean (0.224438), correlation (0.34504)*/,

-7, -7, -4, 5/*mean (0.239361), correlation (0.338053)*/,

-3, -2, -1, -7/*mean (0.240744), correlation (0.344322)*/,

2, 9, 5, -11/*mean (0.242949), correlation (0.34145)*/,

-11, -13, -5, -13/*mean (0.244028), correlation (0.336861)*/,

-1, 6, 0, -1/*mean (0.247571), correlation (0.343684)*/,

5, -3, 5, 2/*mean (0.000697256), correlation (0.357265)*/,

-4, -13, -4, 12/*mean (0.00213675), correlation (0.373827)*/,

-9, -6, -9, 6/*mean (0.0126856), correlation (0.373938)*/,

-12, -10, -8, -4/*mean (0.0152497), correlation (0.364237)*/,

10, 2, 12, -3/*mean (0.0299933), correlation (0.345292)*/,

7, 12, 12, 12/*mean (0.0307242), correlation (0.366299)*/,

-7, -13, -6, 5/*mean (0.0534975), correlation (0.368357)*/,

-4, 9, -3, 4/*mean (0.099865), correlation (0.372276)*/,

7, -1, 12, 2/*mean (0.117083), correlation (0.364529)*/,

-7, 6, -5, 1/*mean (0.126125), correlation (0.369606)*/,

-13, 11, -12, 5/*mean (0.130364), correlation (0.358502)*/,

-3, 7, -2, -6/*mean (0.131691), correlation (0.375531)*/,

7, -8, 12, -7/*mean (0.160166), correlation (0.379508)*/,

-13, -7, -11, -12/*mean (0.167848), correlation (0.353343)*/,

1, -3, 12, 12/*mean (0.183378), correlation (0.371916)*/,

2, -6, 3, 0/*mean (0.228711), correlation (0.371761)*/,

-4, 3, -2, -13/*mean (0.247211), correlation (0.364063)*/,

-1, -13, 1, 9/*mean (0.249325), correlation (0.378139)*/,

7, 1, 8, -6/*mean (0.000652272), correlation (0.411682)*/,

1, -1, 3, 12/*mean (0.00248538), correlation (0.392988)*/,

9, 1, 12, 6/*mean (0.0206815), correlation (0.386106)*/,

-1, -9, -1, 3/*mean (0.0364485), correlation (0.410752)*/,

-13, -13, -10, 5/*mean (0.0376068), correlation (0.398374)*/,

7, 7, 10, 12/*mean (0.0424202), correlation (0.405663)*/,

12, -5, 12, 9/*mean (0.0942645), correlation (0.410422)*/,

6, 3, 7, 11/*mean (0.1074), correlation (0.413224)*/,

5, -13, 6, 10/*mean (0.109256), correlation (0.408646)*/,

2, -12, 2, 3/*mean (0.131691), correlation (0.416076)*/,

3, 8, 4, -6/*mean (0.165081), correlation (0.417569)*/,

2, 6, 12, -13/*mean (0.171874), correlation (0.408471)*/,

9, -12, 10, 3/*mean (0.175146), correlation (0.41296)*/,

-8, 4, -7, 9/*mean (0.183682), correlation (0.402956)*/,

-11, 12, -4, -6/*mean (0.184672), correlation (0.416125)*/,

1, 12, 2, -8/*mean (0.191487), correlation (0.386696)*/,

6, -9, 7, -4/*mean (0.192668), correlation (0.394771)*/,

2, 3, 3, -2/*mean (0.200157), correlation (0.408303)*/,

6, 3, 11, 0/*mean (0.204588), correlation (0.411762)*/,

3, -3, 8, -8/*mean (0.205904), correlation (0.416294)*/,

7, 8, 9, 3/*mean (0.213237), correlation (0.409306)*/,

-11, -5, -6, -4/*mean (0.243444), correlation (0.395069)*/,

-10, 11, -5, 10/*mean (0.247672), correlation (0.413392)*/,

-5, -8, -3, 12/*mean (0.24774), correlation (0.411416)*/,

-10, 5, -9, 0/*mean (0.00213675), correlation (0.454003)*/,

8, -1, 12, -6/*mean (0.0293635), correlation (0.455368)*/,

4, -6, 6, -11/*mean (0.0404971), correlation (0.457393)*/,

-10, 12, -8, 7/*mean (0.0481107), correlation (0.448364)*/,

4, -2, 6, 7/*mean (0.050641), correlation (0.455019)*/,

-2, 0, -2, 12/*mean (0.0525978), correlation (0.44338)*/,

-5, -8, -5, 2/*mean (0.0629667), correlation (0.457096)*/,

7, -6, 10, 12/*mean (0.0653846), correlation (0.445623)*/,

-9, -13, -8, -8/*mean (0.0858749), correlation (0.449789)*/,

-5, -13, -5, -2/*mean (0.122402), correlation (0.450201)*/,

8, -8, 9, -13/*mean (0.125416), correlation (0.453224)*/,

-9, -11, -9, 0/*mean (0.130128), correlation (0.458724)*/,

1, -8, 1, -2/*mean (0.132467), correlation (0.440133)*/,

7, -4, 9, 1/*mean (0.132692), correlation (0.454)*/,

-2, 1, -1, -4/*mean (0.135695), correlation (0.455739)*/,

11, -6, 12, -11/*mean (0.142904), correlation (0.446114)*/,

-12, -9, -6, 4/*mean (0.146165), correlation (0.451473)*/,

3, 7, 7, 12/*mean (0.147627), correlation (0.456643)*/,

5, 5, 10, 8/*mean (0.152901), correlation (0.455036)*/,

0, -4, 2, 8/*mean (0.167083), correlation (0.459315)*/,

-9, 12, -5, -13/*mean (0.173234), correlation (0.454706)*/,

0, 7, 2, 12/*mean (0.18312), correlation (0.433855)*/,

-1, 2, 1, 7/*mean (0.185504), correlation (0.443838)*/,

5, 11, 7, -9/*mean (0.185706), correlation (0.451123)*/,

3, 5, 6, -8/*mean (0.188968), correlation (0.455808)*/,

-13, -4, -8, 9/*mean (0.191667), correlation (0.459128)*/,

-5, 9, -3, -3/*mean (0.193196), correlation (0.458364)*/,

-4, -7, -3, -12/*mean (0.196536), correlation (0.455782)*/,

6, 5, 8, 0/*mean (0.1972), correlation (0.450481)*/,

-7, 6, -6, 12/*mean (0.199438), correlation (0.458156)*/,

-13, 6, -5, -2/*mean (0.211224), correlation (0.449548)*/,

1, -10, 3, 10/*mean (0.211718), correlation (0.440606)*/,

4, 1, 8, -4/*mean (0.213034), correlation (0.443177)*/,

-2, -2, 2, -13/*mean (0.234334), correlation (0.455304)*/,

2, -12, 12, 12/*mean (0.235684), correlation (0.443436)*/,

-2, -13, 0, -6/*mean (0.237674), correlation (0.452525)*/,

4, 1, 9, 3/*mean (0.23962), correlation (0.444824)*/,

-6, -10, -3, -5/*mean (0.248459), correlation (0.439621)*/,

-3, -13, -1, 1/*mean (0.249505), correlation (0.456666)*/,

7, 5, 12, -11/*mean (0.00119208), correlation (0.495466)*/,

4, -2, 5, -7/*mean (0.00372245), correlation (0.484214)*/,

-13, 9, -9, -5/*mean (0.00741116), correlation (0.499854)*/,

7, 1, 8, 6/*mean (0.0208952), correlation (0.499773)*/,

7, -8, 7, 6/*mean (0.0220085), correlation (0.501609)*/,

-7, -4, -7, 1/*mean (0.0233806), correlation (0.496568)*/,

-8, 11, -7, -8/*mean (0.0236505), correlation (0.489719)*/,

-13, 6, -12, -8/*mean (0.0268781), correlation (0.503487)*/,

2, 4, 3, 9/*mean (0.0323324), correlation (0.501938)*/,

10, -5, 12, 3/*mean (0.0399235), correlation (0.494029)*/,

-6, -5, -6, 7/*mean (0.0420153), correlation (0.486579)*/,

8, -3, 9, -8/*mean (0.0548021), correlation (0.484237)*/,

2, -12, 2, 8/*mean (0.0616622), correlation (0.496642)*/,

-11, -2, -10, 3/*mean (0.0627755), correlation (0.498563)*/,

-12, -13, -7, -9/*mean (0.0829622), correlation (0.495491)*/,

-11, 0, -10, -5/*mean (0.0843342), correlation (0.487146)*/,

5, -3, 11, 8/*mean (0.0929937), correlation (0.502315)*/,

-2, -13, -1, 12/*mean (0.113327), correlation (0.48941)*/,

-1, -8, 0, 9/*mean (0.132119), correlation (0.467268)*/,

-13, -11, -12, -5/*mean (0.136269), correlation (0.498771)*/,

-10, -2, -10, 11/*mean (0.142173), correlation (0.498714)*/,

-3, 9, -2, -13/*mean (0.144141), correlation (0.491973)*/,

2, -3, 3, 2/*mean (0.14892), correlation (0.500782)*/,

-9, -13, -4, 0/*mean (0.150371), correlation (0.498211)*/,

-4, 6, -3, -10/*mean (0.152159), correlation (0.495547)*/,

-4, 12, -2, -7/*mean (0.156152), correlation (0.496925)*/,

-6, -11, -4, 9/*mean (0.15749), correlation (0.499222)*/,

6, -3, 6, 11/*mean (0.159211), correlation (0.503821)*/,

-13, 11, -5, 5/*mean (0.162427), correlation (0.501907)*/,

11, 11, 12, 6/*mean (0.16652), correlation (0.497632)*/,

7, -5, 12, -2/*mean (0.169141), correlation (0.484474)*/,

-1, 12, 0, 7/*mean (0.169456), correlation (0.495339)*/,

-4, -8, -3, -2/*mean (0.171457), correlation (0.487251)*/,

-7, 1, -6, 7/*mean (0.175), correlation (0.500024)*/,

-13, -12, -8, -13/*mean (0.175866), correlation (0.497523)*/,

-7, -2, -6, -8/*mean (0.178273), correlation (0.501854)*/,

-8, 5, -6, -9/*mean (0.181107), correlation (0.494888)*/,

-5, -1, -4, 5/*mean (0.190227), correlation (0.482557)*/,

-13, 7, -8, 10/*mean (0.196739), correlation (0.496503)*/,

1, 5, 5, -13/*mean (0.19973), correlation (0.499759)*/,

1, 0, 10, -13/*mean (0.204465), correlation (0.49873)*/,

9, 12, 10, -1/*mean (0.209334), correlation (0.49063)*/,

5, -8, 10, -9/*mean (0.211134), correlation (0.503011)*/,

-1, 11, 1, -13/*mean (0.212), correlation (0.499414)*/,

-9, -3, -6, 2/*mean (0.212168), correlation (0.480739)*/,

-1, -10, 1, 12/*mean (0.212731), correlation (0.502523)*/,

-13, 1, -8, -10/*mean (0.21327), correlation (0.489786)*/,

8, -11, 10, -6/*mean (0.214159), correlation (0.488246)*/,

2, -13, 3, -6/*mean (0.216993), correlation (0.50287)*/,

7, -13, 12, -9/*mean (0.223639), correlation (0.470502)*/,

-10, -10, -5, -7/*mean (0.224089), correlation (0.500852)*/,

-10, -8, -8, -13/*mean (0.228666), correlation (0.502629)*/,

4, -6, 8, 5/*mean (0.22906), correlation (0.498305)*/,

3, 12, 8, -13/*mean (0.233378), correlation (0.503825)*/,

-4, 2, -3, -3/*mean (0.234323), correlation (0.476692)*/,

5, -13, 10, -12/*mean (0.236392), correlation (0.475462)*/,

4, -13, 5, -1/*mean (0.236842), correlation (0.504132)*/,

-9, 9, -4, 3/*mean (0.236977), correlation (0.497739)*/,

0, 3, 3, -9/*mean (0.24314), correlation (0.499398)*/,

-12, 1, -6, 1/*mean (0.243297), correlation (0.489447)*/,

3, 2, 4, -8/*mean (0.00155196), correlation (0.553496)*/,

-10, -10, -10, 9/*mean (0.00239541), correlation (0.54297)*/,

8, -13, 12, 12/*mean (0.0034413), correlation (0.544361)*/,

-8, -12, -6, -5/*mean (0.003565), correlation (0.551225)*/,

2, 2, 3, 7/*mean (0.00835583), correlation (0.55285)*/,

10, 6, 11, -8/*mean (0.00885065), correlation (0.540913)*/,

6, 8, 8, -12/*mean (0.0101552), correlation (0.551085)*/,

-7, 10, -6, 5/*mean (0.0102227), correlation (0.533635)*/,

-3, -9, -3, 9/*mean (0.0110211), correlation (0.543121)*/,

-1, -13, -1, 5/*mean (0.0113473), correlation (0.550173)*/,

-3, -7, -3, 4/*mean (0.0140913), correlation (0.554774)*/,

-8, -2, -8, 3/*mean (0.017049), correlation (0.55461)*/,

4, 2, 12, 12/*mean (0.01778), correlation (0.546921)*/,

2, -5, 3, 11/*mean (0.0224022), correlation (0.549667)*/,

6, -9, 11, -13/*mean (0.029161), correlation (0.546295)*/,

3, -1, 7, 12/*mean (0.0303081), correlation (0.548599)*/,

11, -1, 12, 4/*mean (0.0355151), correlation (0.523943)*/,

-3, 0, -3, 6/*mean (0.0417904), correlation (0.543395)*/,

4, -11, 4, 12/*mean (0.0487292), correlation (0.542818)*/,

2, -4, 2, 1/*mean (0.0575124), correlation (0.554888)*/,

-10, -6, -8, 1/*mean (0.0594242), correlation (0.544026)*/,

-13, 7, -11, 1/*mean (0.0597391), correlation (0.550524)*/,

-13, 12, -11, -13/*mean (0.0608974), correlation (0.55383)*/,

6, 0, 11, -13/*mean (0.065126), correlation (0.552006)*/,

0, -1, 1, 4/*mean (0.074224), correlation (0.546372)*/,

-13, 3, -9, -2/*mean (0.0808592), correlation (0.554875)*/,

-9, 8, -6, -3/*mean (0.0883378), correlation (0.551178)*/,

-13, -6, -8, -2/*mean (0.0901035), correlation (0.548446)*/,

5, -9, 8, 10/*mean (0.0949843), correlation (0.554694)*/,

2, 7, 3, -9/*mean (0.0994152), correlation (0.550979)*/,

-1, -6, -1, -1/*mean (0.10045), correlation (0.552714)*/,

9, 5, 11, -2/*mean (0.100686), correlation (0.552594)*/,

11, -3, 12, -8/*mean (0.101091), correlation (0.532394)*/,

3, 0, 3, 5/*mean (0.101147), correlation (0.525576)*/,

-1, 4, 0, 10/*mean (0.105263), correlation (0.531498)*/,

3, -6, 4, 5/*mean (0.110785), correlation (0.540491)*/,

-13, 0, -10, 5/*mean (0.112798), correlation (0.536582)*/,

5, 8, 12, 11/*mean (0.114181), correlation (0.555793)*/,

8, 9, 9, -6/*mean (0.117431), correlation (0.553763)*/,

7, -4, 8, -12/*mean (0.118522), correlation (0.553452)*/,

-10, 4, -10, 9/*mean (0.12094), correlation (0.554785)*/,

7, 3, 12, 4/*mean (0.122582), correlation (0.555825)*/,

9, -7, 10, -2/*mean (0.124978), correlation (0.549846)*/,

7, 0, 12, -2/*mean (0.127002), correlation (0.537452)*/,

-1, -6, 0, -11/*mean (0.127148), correlation (0.547401)*/

};

// compute the descriptor

//(1)计算角点的方向;(2)计算描述子。

void ComputeORB(const cv::Mat &img, vector &keypoints, vector &descriptors) {

const int half_patch_size = 8; //计算特征点方向时,选取的图像块,16*16

const int half_boundary = 16;//计算描述子时在32*32的图像块中选点

int bad_points = 0; //计算描述子时,在32*32的区域块选择两个点比较,所选择的点超出图像范围的。出现这种情况下的FAST角点的数目。

//遍历所有FAST角点

for (auto &kp: keypoints)

{

//超出图像边界的角点的描述子设为空

if (kp.pt.x < half_boundary || kp.pt.y < half_boundary ||

kp.pt.x >= img.cols - half_boundary || kp.pt.y >= img.rows - half_boundary) {

// outside

bad_points++; //bad_points的描述子设为空

descriptors.push_back({});

continue;

}

//计算16*16图像块的灰度质心

//可参照下面的图片帮助理解

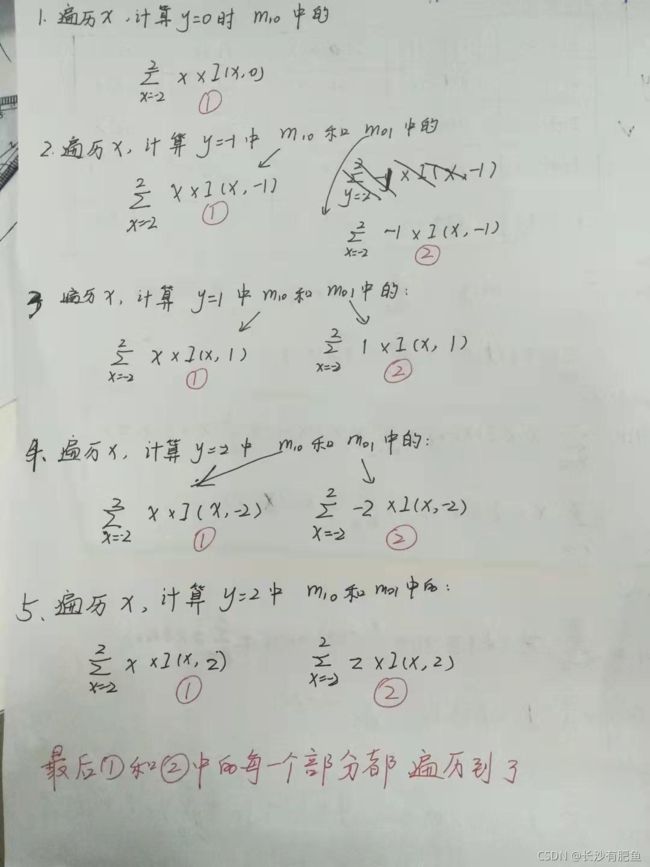

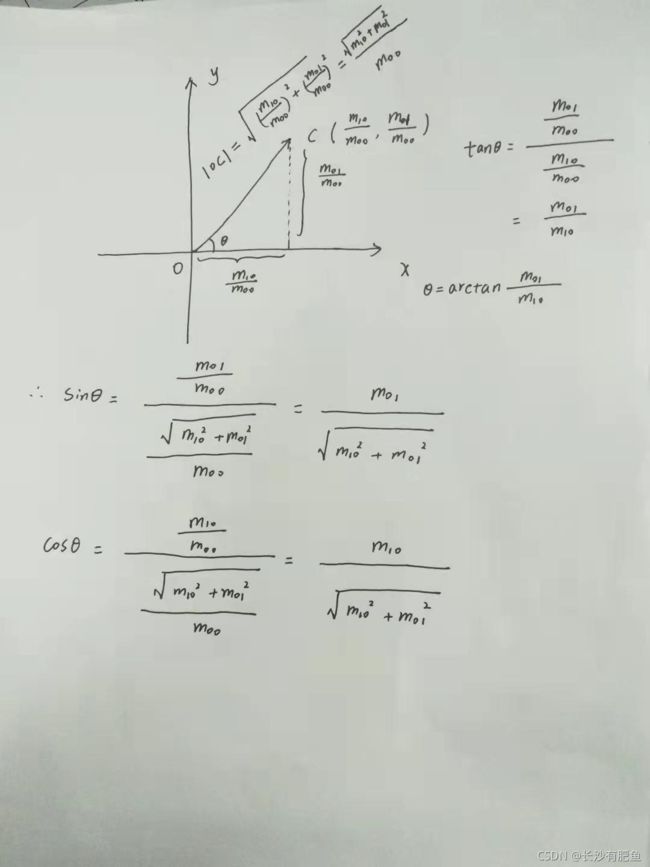

float m01 = 0, m10 = 0;//图像块的矩 视觉slam十四讲中p157

for (int dx = -half_patch_size; dx < half_patch_size; ++dx)

{

for (int dy = -half_patch_size; dy < half_patch_size; ++dy)

{

uchar pixel = img.at(kp.pt.y + dy, kp.pt.x + dx);

m10 += dx * pixel; //pixel表示灰度值 视觉slam十四讲中p157最上面的公式

m01 += dy * pixel;//pixel表示灰度值 视觉slam十四讲中p157最上面的公式

}

}

// angle should be arc tan(m01/m10);参照下面第三章图片帮助理解

float m_sqrt = sqrt(m01 * m01 + m10 * m10) + 1e-18; // avoid divide by zero 1e-18避免了m_sqrt的值为0(图像块全黑)将m01 * m01 + m10 * m10进行开根

float sin_theta = m01 / m_sqrt;//sin_theta = m01 / 根号下(m01 * m01 + m10 * m10))

float cos_theta = m10 / m_sqrt;//cos_theta = m10 / 根号下(m01 * m01 + m10 * m10))

//因为tan_theta = m01/m10 即为tan_theta = sin_theta / cos_theta = [m01 / 根号下(m01 * m01 + m10 * m10] / [m10 / 根号下(m01 * m01 + m10 * m10]

//目的是求出特征点的方向 视觉slam十四讲中p157第三个公式

// compute the angle of this point

DescType desc(8, 0); //8个元素,它们的值初始化为0

for (int i = 0; i < 8; i++) {

uint32_t d = 0;

for (int k = 0; k < 32; k++)

{

int idx_pq = i * 32 + k;//idx_pq表示二进制描述子中的第几位

cv::Point2f p(ORB_pattern[idx_pq * 4], ORB_pattern[idx_pq * 4 + 1]);

cv::Point2f q(ORB_pattern[idx_pq * 4 + 2], ORB_pattern[idx_pq * 4 + 3]);

// rotate with theta

//p,q绕原点旋转theta得到pp,qq

cv::Point2f pp = cv::Point2f(cos_theta * p.x - sin_theta * p.y, sin_theta * p.x + cos_theta * p.y)

+ kp.pt;

cv::Point2f qq = cv::Point2f(cos_theta * q.x - sin_theta * q.y, sin_theta * q.x + cos_theta * q.y)

+ kp.pt;

if (img.at(pp.y, pp.x) < img.at(qq.y, qq.x)) {

d |= 1 << k;

}

}

desc[i] = d;

}

descriptors.push_back(desc);//desc表示该Oriented_FAST角点的描述子

}

cout << "bad/total: " << bad_points << "/" << keypoints.size() << endl;

}

// brute-force matching

void BfMatch(const vector &desc1, const vector &desc2, vector &matches) {

const int d_max = 40;//描述子之间的距离小于这个值,才被认为是正确匹配

for (size_t i1 = 0; i1 < desc1.size(); ++i1) //size_t相当于int,便于代码移植

{

if (desc1[i1].empty()) continue;

cv::DMatch m{i1, 0, 256}; //定义了一个匹配对m

for (size_t i2 = 0; i2 < desc2.size(); ++i2)//计算描述子desc1[i1]和描述子desc2[i2]的距离,即不同位数的数目

{

if (desc2[i2].empty()) continue;

int distance = 0;

for (int k = 0; k < 8; k++) {

distance += _mm_popcnt_u32(desc1[i1][k] ^ desc2[i2][k]);

}

if (distance < d_max && distance < m.distance) {

m.distance = distance;

m.trainIdx = i2;

}

}

if (m.distance < d_max) {

matches.push_back(m);

}

}

}

其实换算到更大的遍历数时,也是一样的道理:

参考链接:

ORBSLAM源码理论分析0—灰度质心法_yys2324826380的博客-CSDN博客_灰度质心法orbslam源码理论分析0—灰度质心法1.灰度质心法原理2.灰度质心法程序实现思路1.灰度质心法原理1.选择某个图像块B,然后将图像块B的矩 mpqm_{pq}mpq 定义为: x,yx,yx,y 表示像素坐标,I(x,y)I(x,y)I(x,y)表示此像素坐标的灰度值。 2.图像块B的质心 CCC 可以通过步骤1中的矩找到: 3.方向向量 O...https://blog.csdn.net/yys2324826380/article/details/105181945/

CMakeLists.txt和上面是一样的

执行命令:

./orb_self 1.png 2.png

程序执行结果:

bad/total: 43/638

bad/total: 8/595

extract ORB cost = 0.00733918 seconds.

match ORB cost = 0.00282784 seconds.

matches: 41

done.

pose_estimation_2d2d.cpp

#include

#include //opencv核心模块

#include //opencv特征点

#include //opencv gui模块

#include

#include

// #include "extra.h" // use this if in OpenCV2

using namespace std;

using namespace cv;

/****************************************************

* 本程序演示了如何使用2D-2D的特征匹配估计相机运动

* **************************************************/

void find_feature_matches(

const Mat &img_1, const Mat &img_2,

std::vector &keypoints_1,

std::vector &keypoints_2,

std::vector &matches);//定义find_feature_matches函数 输入图像1和图像2,输出特征点集合1、特征点集合2和匹配点对

void pose_estimation_2d2d(

std::vector keypoints_1,

std::vector keypoints_2,

std::vector matches,

Mat &R, Mat &t);//定义pose_estimation_2d2d 输入特征点集合1、特征点集合2和匹配点对,输出估计的旋转矩阵、估计的平移向量和本质矩阵,平移向量差了一个尺度因子(可详细阅读书上p175-p176)

//R,t表示旋转矩阵和平移

// 像素坐标转相机归一化坐标

Point2d pixel2cam(const Point2d &p, const Mat &K);//输出一个像素点和相机内参矩阵,输出该像素点在归一化平面上的坐标

int main(int argc, char **argv) {

if (argc != 3) {

cout << "usage: pose_estimation_2d2d img1 img2" << endl;//输出命令行用法

return 1;

}

chrono::steady_clock::time_point t1 = chrono::steady_clock::now(); //计时开始

//-- 读取图像

Mat img_1 = imread(argv[1], CV_LOAD_IMAGE_COLOR);//读取图像1 CV_LOAD_IMAGE_COLOR表示返回一张彩色图像

Mat img_2 = imread(argv[2], CV_LOAD_IMAGE_COLOR);//读取图像2 CV_LOAD_IMAGE_COLOR表示返回一张彩色图像

assert(img_1.data && img_2.data && "Can not load images!");//assert()为断言函数,条件为假则停止执行

//特征提取和特征匹配

vector keypoints_1, keypoints_2;//特征点1 -> 图像1 特征点2 -> 图像2

vector matches;//匹配 matches

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);//调用find_feature_matches函数

cout << "一共找到了" << matches.size() << "组匹配点" << endl;//输出匹配点数

//-- 估计两张图像间运动

Mat R, t;//R,t表示旋转矩阵和平移

pose_estimation_2d2d(keypoints_1, keypoints_2, matches, R, t);//调用函数

//-- 验证E=t^R*scale

Mat t_x =

(Mat_(3, 3) << 0, -t.at(2, 0), t.at(1, 0),

t.at(2, 0), 0, -t.at(0, 0),

-t.at(1, 0), t.at(0, 0), 0);//Mat_(3, 3) <<()表示opencv中的初始化方法 即对 t_x进行初始化

cout << "t^R=" << endl << t_x * R << endl;//输出t^R

//-- 验证对极约束

Mat K = (Mat_(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);//相机内参矩阵

for(int i = 0; i < matches.size(); i++)

{

DMatch m = matches[i];

Point2d pt1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);//像素坐标转相机归一化坐标

Mat y1 = (Mat_(3, 1) << pt1.x, pt1.y, 1);

Point2d pt2 = pixel2cam(keypoints_2[m.trainIdx].pt, K);

Mat y2 = (Mat_(3, 1) << pt2.x, pt2.y, 1);

Mat d = y2.t() * t_x * R * y1;//对极几何的残差,结果应该为标量0 d = y2(T) * t(^)* R * y1 就是视觉slan十四讲p167的式7.8

cout << "The " << i << " epipolar constraint(匹配点对的对极几何残差): " << d << " !" << endl;

}

// for (DMatch m: matches) //opencv DMatch(int queryIdx, int trainIdx, float distance)

// {

// Point2d pt1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);//像素坐标转相机归一化坐标

// Mat y1 = (Mat_(3, 1) << pt1.x, pt1.y, 1);

// Point2d pt2 = pixel2cam(keypoints_2[m.trainIdx].pt, K);

// Mat y2 = (Mat_(3, 1) << pt2.x, pt2.y, 1);

// Mat d = y2.t() * t_x * R * y1;//对极几何的残差,结果应该为标量0 d = y2(T) * t(^)* R * y1 就是视觉slan十四讲p167的式7.8

// cout << "The" << i << "epipolar constraint(匹配点对的对极几何残差): " << d << " !" << endl;

// //cout << "epipolar constraint = " << d << endl;

// }

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration time_used = chrono::duration_cast> (t2- t1);

cout << "执行程序所花的时间为" << time_used.count() << "秒!" << endl;

return 0;

}

void find_feature_matches(const Mat &img_1, const Mat &img_2,

std::vector &keypoints_1,

std::vector &keypoints_2,

std::vector &matches) {

//-- 初始化

Mat descriptors_1, descriptors_2;//描述子1he描述子2

// used in OpenCV3

Ptr detector = ORB::create();

Ptr descriptor = ORB::create();

// use this if you are in OpenCV2

// Ptr detector = FeatureDetector::create ( "ORB" );

// Ptr descriptor = DescriptorExtractor::create ( "ORB" );

Ptr matcher = DescriptorMatcher::create("BruteForce-Hamming");//使用汉明距离进行特征匹配

//-- 第一步:检测 Oriented FAST 角点位置

detector->detect(img_1, keypoints_1);//检测图像1的 Oriented FAST 角点

detector->detect(img_2, keypoints_2);//检测图像2的 Oriented FAST 角点

//-- 第二步:根据角点位置计算 BRIEF 描述子

descriptor->compute(img_1, keypoints_1, descriptors_1);

descriptor->compute(img_2, keypoints_2, descriptors_2);

//-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离

vector match;

//BFMatcher matcher ( NORM_HAMMING );

matcher->match(descriptors_1, descriptors_2, match);

//-- 第四步:匹配点对筛选

double min_dist = 150, max_dist = 0; //求最小距离

//找出所有匹配之间的最小距离和最大距离, 即是最相似的和最不相似的两组点之间的距离

for (int i = 0; i < descriptors_1.rows; i++)

{

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist);

printf("-- Min dist : %f \n", min_dist);

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限.、

//遍历所有匹配,排除误匹配

for (int i = 0; i < descriptors_1.rows; i++)

{

if (match[i].distance <= max(2 * min_dist, 30.0))//不同的结果可以在这里设置

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误 30.0为经验值

{

matches.push_back(match[i]);

}

}

//显示匹配图

Mat img_match;

drawMatches(img_1, keypoints_1, img_2, keypoints_2, matches, img_match, Scalar::all(-1));

imshow("good matches", img_match);

waitKey(0); //程序暂停执行,等待一个按键输入

}

Point2d pixel2cam(const Point2d &p, const Mat &K) {

return Point2d

(

(p.x - K.at(0, 2)) / K.at(0, 0),

(p.y - K.at(1, 2)) / K.at(1, 1)

);

}

void pose_estimation_2d2d(std::vector keypoints_1,

std::vector keypoints_2,

std::vector matches,

Mat &R, Mat &t) {

// 相机内参,TUM Freiburg2

Mat K = (Mat_(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

//-- 把匹配点转换为vector的形式

vector points1;

vector points2;

for (int i = 0; i < (int) matches.size(); i++) //编译所有匹配点

{

points1.push_back(keypoints_1[matches[i].queryIdx].pt);

points2.push_back(keypoints_2[matches[i].trainIdx].pt);

}

//-- 计算基础矩阵

Mat fundamental_matrix;

fundamental_matrix = findFundamentalMat(points1, points2, CV_FM_8POINT);//采用8点法求解F = K(-T) * E * K(-1)

cout << "fundamental_matrix is " << endl << fundamental_matrix << endl;//输出基础矩阵

//-- 计算本质矩阵

Point2d principal_point(325.1, 249.7); //相机光心, TUM dataset标定值

double focal_length = 521; //相机焦距, TUM dataset标定值

Mat essential_matrix;

essential_matrix = findEssentialMat(points1, points2, focal_length, principal_point);//E = t(^) * R

cout << "essential_matrix is " << endl << essential_matrix << endl;//输出本质矩阵

//-- 计算单应矩阵

//-- 但是本例中场景不是平面,单应矩阵意义不大

Mat homography_matrix;

homography_matrix = findHomography(points1, points2, RANSAC, 3);//H = K * (R - tn(T) / d) * K(-1)

cout << "homography_matrix is " << endl << homography_matrix << endl;

//-- 从本质矩阵中恢复旋转和平移信息.

// 此函数仅在Opencv3中提供

recoverPose(essential_matrix, points1, points2, R, t, focal_length, principal_point);

cout << "R is " << endl << R << endl;//输出旋转矩阵

cout << "t is " << endl << t << endl;//输出平移向量

}

CMakeLists.txt和上面是一样的

执行结果:

./pose_estimation_2d2d 1.png 2.png -- Max dist : 94.000000

-- Min dist : 4.000000

一共找到了79组匹配点

fundamental_matrix is

[4.544437503937326e-06, 0.0001333855576988952, -0.01798499246457619;

-0.0001275657012959839, 2.266794804637672e-05, -0.01416678429258694;

0.01814994639952877, 0.004146055871509035, 1]

essential_matrix is

[0.01097677480088526, 0.2483720528258777, 0.03167429207291153;

-0.2088833206039177, 0.02908423961781584, -0.6744658838357441;

0.008286777636447118, 0.6614041624098427, 0.01676523772725936]

homography_matrix is

[0.9261214281395974, -0.1445322024422794, 33.26921085290527;

0.04535424466077623, 0.9386696693994374, 8.57097996306166;

-1.006197557592423e-05, -3.008140277952937e-05, 0.9999999999999999]

R is

[0.9969387384754708, -0.05155574188737422, 0.05878058527591362;

0.05000441581290405, 0.998368531736214, 0.02756507279306544;

-0.06010582439453527, -0.02454140006844052, 0.9978902793175882]

t is

[-0.9350802885437915;

-0.03514646275858852;

0.3526890700495534]

t^R=

[-0.01552350379276751, -0.3512511256212342, -0.04479421342842761;

0.2954056249512841, -0.04113132611923881, 0.9538388002772655;

-0.01171927332237169, -0.9353667366911764, -0.02370962656974232]

The 0 epipolar constraint(匹配点对的对极几何残差): [-0.0005415187881110395] !

The 1 epipolar constraint(匹配点对的对极几何残差): [-0.002158321995511289] !

The 2 epipolar constraint(匹配点对的对极几何残差): [0.0003344241044139079] !

The 3 epipolar constraint(匹配点对的对极几何残差): [-8.762782289159499e-06] !

The 4 epipolar constraint(匹配点对的对极几何残差): [0.0002121321913991919] !

The 5 epipolar constraint(匹配点对的对极几何残差): [0.0001088766997727475] !

The 6 epipolar constraint(匹配点对的对极几何残差): [0.0004246621771667042] !

The 7 epipolar constraint(匹配点对的对极几何残差): [-0.003173128212208817] !

The 8 epipolar constraint(匹配点对的对极几何残差): [-3.716645801080803e-05] !

The 9 epipolar constraint(匹配点对的对极几何残差): [-0.00145185184224198] !

The 10 epipolar constraint(匹配点对的对极几何残差): [-0.0009607717979376248] !

The 11 epipolar constraint(匹配点对的对极几何残差): [-0.000805199349842689] !

The 12 epipolar constraint(匹配点对的对极几何残差): [-0.001424813546545535] !

The 13 epipolar constraint(匹配点对的对极几何残差): [-0.0004339424745821285] !

The 14 epipolar constraint(匹配点对的对极几何残差): [-0.000478610965853582] !

The 15 epipolar constraint(匹配点对的对极几何残差): [-0.0001965692833058308] !

The 16 epipolar constraint(匹配点对的对极几何残差): [0.001542309777196826] !

The 17 epipolar constraint(匹配点对的对极几何残差): [0.003107154828888181] !

The 18 epipolar constraint(匹配点对的对极几何残差): [0.0006774648890596965] !

The 19 epipolar constraint(匹配点对的对极几何残差): [-0.001176167495933307] !

The 20 epipolar constraint(匹配点对的对极几何残差): [-0.003987986032032251] !

The 21 epipolar constraint(匹配点对的对极几何残差): [-0.001255263863164484] !

The 22 epipolar constraint(匹配点对的对极几何残差): [-0.001553941958847014] !

The 23 epipolar constraint(匹配点对的对极几何残差): [0.002009914869739976] !

The 24 epipolar constraint(匹配点对的对极几何残差): [-0.0006068096307231818] !

The 25 epipolar constraint(匹配点对的对极几何残差): [-2.769881004420494e-05] !

The 26 epipolar constraint(匹配点对的对极几何残差): [0.001274573011402158] !

The 27 epipolar constraint(匹配点对的对极几何残差): [-0.004169986054360231] !

The 28 epipolar constraint(匹配点对的对极几何残差): [-0.001108104733689524] !

The 29 epipolar constraint(匹配点对的对极几何残差): [-0.0005154295520383712] !

The 30 epipolar constraint(匹配点对的对极几何残差): [-0.001776993208794382] !

The 31 epipolar constraint(匹配点对的对极几何残差): [6.677362293025513e-07] !

The 32 epipolar constraint(匹配点对的对极几何残差): [-0.001853977732928253] !

The 33 epipolar constraint(匹配点对的对极几何残差): [-0.0004823070333038748] !

The 34 epipolar constraint(匹配点对的对极几何残差): [0.0004740904480800417] !

The 35 epipolar constraint(匹配点对的对极几何残差): [-0.002961041748859252] !

The 36 epipolar constraint(匹配点对的对极几何残差): [-0.00334765524829733] !

The 37 epipolar constraint(匹配点对的对极几何残差): [-0.0001975680967504223] !

The 38 epipolar constraint(匹配点对的对极几何残差): [-0.002824643387150695] !

The 39 epipolar constraint(匹配点对的对极几何残差): [-0.0004311798170555103] !

The 40 epipolar constraint(匹配点对的对极几何残差): [0.001181203684013504] !

The 41 epipolar constraint(匹配点对的对极几何残差): [1.756266610475343e-07] !

The 42 epipolar constraint(匹配点对的对极几何残差): [-0.002137829859245668] !

The 43 epipolar constraint(匹配点对的对极几何残差): [0.001153415527396465] !

The 44 epipolar constraint(匹配点对的对极几何残差): [-0.002120357728713398] !

The 45 epipolar constraint(匹配点对的对极几何残差): [2.741529055938496e-06] !

The 46 epipolar constraint(匹配点对的对极几何残差): [0.0009044582018204149] !

The 47 epipolar constraint(匹配点对的对极几何残差): [-0.002100484435898768] !

The 48 epipolar constraint(匹配点对的对极几何残差): [-0.0003517789219357331] !

The 49 epipolar constraint(匹配点对的对极几何残差): [-2.720464845591686e-05] !

The 50 epipolar constraint(匹配点对的对极几何残差): [-0.003784823353263345] !

The 51 epipolar constraint(匹配点对的对极几何残差): [-0.001588562608841583] !

The 52 epipolar constraint(匹配点对的对极几何残差): [-0.002928516011805465] !

The 53 epipolar constraint(匹配点对的对极几何残差): [-0.001016592266471106] !

The 54 epipolar constraint(匹配点对的对极几何残差): [0.0001241874577868896] !

The 55 epipolar constraint(匹配点对的对极几何残差): [-0.0009797104629406146] !

The 56 epipolar constraint(匹配点对的对极几何残差): [0.001457875224685545] !

The 57 epipolar constraint(匹配点对的对极几何残差): [-1.153738078286948e-05] !

The 58 epipolar constraint(匹配点对的对极几何残差): [-0.002733247427587723] !

The 59 epipolar constraint(匹配点对的对极几何残差): [0.00141572125781092] !

The 60 epipolar constraint(匹配点对的对极几何残差): [0.001790255871328722] !

The 61 epipolar constraint(匹配点对的对极几何残差): [-0.002547929671675511] !

The 62 epipolar constraint(匹配点对的对极几何残差): [-0.006257078861794559] !

The 63 epipolar constraint(匹配点对的对极几何残差): [-0.001874877415315751] !

The 64 epipolar constraint(匹配点对的对极几何残差): [-0.002104542912833518] !

The 65 epipolar constraint(匹配点对的对极几何残差): [1.39253152928176e-06] !

The 66 epipolar constraint(匹配点对的对极几何残差): [-0.004013502582090461] !

The 67 epipolar constraint(匹配点对的对极几何残差): [-0.004726241071896793] !

The 68 epipolar constraint(匹配点对的对极几何残差): [-0.001328682673648302] !

The 69 epipolar constraint(匹配点对的对极几何残差): [3.995925173527759e-07] !

The 70 epipolar constraint(匹配点对的对极几何残差): [-0.005080511723986325] !

The 71 epipolar constraint(匹配点对的对极几何残差): [-0.001977141850700595] !

The 72 epipolar constraint(匹配点对的对极几何残差): [-0.001661334264687377] !

The 73 epipolar constraint(匹配点对的对极几何残差): [0.004285762870626431] !

The 74 epipolar constraint(匹配点对的对极几何残差): [0.004087325194305862] !

The 75 epipolar constraint(匹配点对的对极几何残差): [0.0001482534285787568] !

The 76 epipolar constraint(匹配点对的对极几何残差): [-0.000962530113462215] !

The 77 epipolar constraint(匹配点对的对极几何残差): [-0.002341076011427135] !

The 78 epipolar constraint(匹配点对的对极几何残差): [-0.006138005035457868] !

执行程序所花的时间为1.04672秒!

triangulation.cpp

#include

#include

#include

// #include "extra.h" // used in opencv2

using namespace std;

using namespace cv;

void find_feature_matches(

const Mat &img_1, const Mat &img_2,

std::vector &keypoints_1,

std::vector &keypoints_2,

std::vector &matches);//定义find_feature_matches函数 输入图像1和图像2,输出特征点集合1、特征点集合2和匹配点对

void pose_estimation_2d2d(

const std::vector &keypoints_1,

const std::vector &keypoints_2,

const std::vector &matches,

Mat &R, Mat &t);定义pose_estimation_2d2d函数 输入特征点集合1、特征点集合2和匹配点对,输出估计的旋转矩阵、估计的平移向量和本质矩阵,平移向量差了一个尺度因子,通常将t进行归一化

void triangulation(

const vector &keypoint_1,

const vector &keypoint_2,

const std::vector &matches,

const Mat &R, const Mat &t,

vector &points

);//定义triangulation函数

/// 作图用

//inline表示内联函数

inline cv::Scalar get_color(float depth) //depth表示输入深度,get_color表示返回颜色信息

{

float up_th = 50, low_th = 10, th_range = up_th - low_th;//这里相当于定义阈值

if (depth > up_th) depth = up_th;//depth > 50 depth = 50

if (depth < low_th) depth = low_th;//depth < 10 depth = 10

return cv::Scalar(255 * depth / th_range, 0, 255 * (1 - depth / th_range)); //Scalar()中的颜色顺序为BGR

//B = 255 * depth / th_range = 255 * depth / 40,G = 0,R = 255 * (1 - depth / th_range) = 255 * (1 - depth /40 )

//反映出的深度信息就是图像里的像素点越远,对应的特征点颜色越红

}

// 像素坐标转相机归一化坐标

Point2f pixel2cam(const Point2d &p, const Mat &K);

int main(int argc, char **argv) {

if (argc != 3) {

cout << "usage: trianguendl imag1 imag2:"< keypoints_1, keypoints_2;//keypoints_1->图像1 keypoints_2->图像2

vector matches;//匹配matches

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);//调用find_feature_matches函数

cout << "一共找到了" << matches.size() << "组匹配点" << endl;//输出匹配点数

//-- 估计两张图像间运动

Mat R, t;//R,t表示旋转矩阵和平移

pose_estimation_2d2d(keypoints_1, keypoints_2, matches, R, t);//调用函数 pose_estimation_2d2d

//-- 三角化得到特征点在相机1的相机坐标系下的坐标

vector points;//相机1中的特征点在其相机坐标系中的坐标

triangulation(keypoints_1, keypoints_2, matches, R, t, points);

//-- 验证三角化点与特征点的重投影关系

Mat K = (Mat_(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);//相机内参矩阵

Mat img1_plot = img_1.clone();

Mat img2_plot = img_2.clone();

for (int i = 0; i < matches.size(); i++) {

// 第一个图

float depth1 = points[i].z; //图像1深度信息

cout << "depth: " << depth1 << endl;//输出深度信息

Mat pt1_cam = K * (Mat_(3, 1) << points[i].x / points[i].z, points[i].y / points[i].z, 1); //pt1_cam表示的是归一化坐标(u, v, 1) 具体形式可参考视觉slam十四讲p168的式7.11

//Point2d pt1_cam = pixel2cam(keypoints_1[matches[i].queryIdx].pt, K);//像素坐标转换为相机坐标

Point2f pixel1 = keypoints_1[matches[i].queryIdx].pt;

cv::circle(img1_plot, keypoints_1[matches[i].queryIdx].pt, 2, get_color(depth1), 2);//画圆

Point2f residual1;//图像1残差

residual1.x = pt1_cam.at(0, 0) - pixel1.x;

residual1.y = pt1_cam.at(1, 0) - pixel1.y;

cout << "图像1像素点的深度为" << depth1 << "平移单位 "<< endl;

cout<<"图像1的残差为: " << residual1.x << ", " << residual1.y << ")像素单位 " << endl;

// 第二个图

Mat pt2_trans = R * (Mat_(3, 1) << points[i].x, points[i].y, points[i].z) + t;//相当于x2 = R * x1 + t的表达式 //pt2_trans表示的是归一化坐标(u, v, 1) 具体形式可参考视觉slam十四讲p168的式7.11

float depth2 = pt2_trans.at(2, 0); //图像2的深度信息

Point2f pixel2 = keypoints_2[matches[i].trainIdx].pt;

cv::circle(img2_plot, keypoints_2[matches[i].trainIdx].pt, 2, get_color(depth2), 2);//画圆

Point2f residual2;//图像2残差

residual2.x = pt2_trans.at(0, 0) - pixel2.x;

residual2.y = pt2_trans.at(1, 0) - pixel2.y;

cout << "图像2像素点的深度为" << depth2 << "平移单位 "<< endl;

cout<<"图像2的残差为: " << residual2.x << ", " << residual2.y << ")像素单位 " << endl;

}

cv::imshow("img 1", img1_plot);//界面展示图像1的深度信息结果 红圈

cv::imshow("img 2", img2_plot);//界面展示图像2的深度信息结果 红圈

cv::waitKey();

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();//计时结束

chrono::duration time_used = chrono::duration_cast> (t2 - t1);//计算耗时

cout << "执行程序所花费的时间为:" << time_used.count() << "秒!" << endl;

return 0;

}

void find_feature_matches(const Mat &img_1, const Mat &img_2,

std::vector &keypoints_1,

std::vector &keypoints_2,

std::vector &matches) {

//-- 初始化

Mat descriptors_1, descriptors_2;//描述子和描述子2

// used in OpenCV3

Ptr detector = ORB::create();

Ptr descriptor = ORB::create();

// use this if you are in OpenCV2

// Ptr detector = FeatureDetector::create ( "ORB" );

// Ptr descriptor = DescriptorExtractor::create ( "ORB" );

Ptr matcher = DescriptorMatcher::create("BruteForce-Hamming");//使用汉明距离进行特征匹配

//-- 第一步:检测 Oriented FAST 角点位置

detector->detect(img_1, keypoints_1);//检测图像1的 Oriented FAST 角点

detector->detect(img_2, keypoints_2);//检测图像2的 Oriented FAST 角点

//-- 第二步:根据角点位置计算 BRIEF 描述子

descriptor->compute(img_1, keypoints_1, descriptors_1);

descriptor->compute(img_2, keypoints_2, descriptors_2);

//-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离

vector match;

// BFMatcher matcher ( NORM_HAMMING );

matcher->match(descriptors_1, descriptors_2, match);

//-- 第四步:匹配点对筛选

double min_dist = 10000, max_dist = 0;//求最小距离和最大距离 这里已经初始化

//找出所有匹配之间的最小距离和最大距离, 即是最相似的和最不相似的两组点之间的距离

for (int i = 0; i < descriptors_1.rows; i++) {

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist);

printf("-- Min dist : %f \n", min_dist);

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限.

//遍历所有匹配,排除误匹配

for (int i = 0; i < descriptors_1.rows; i++)//不同的结果可以在这里设置

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误 30.0为经验值

{

if (match[i].distance <= max(2 * min_dist, 30.0)) {

matches.push_back(match[i]);

}

}

Mat img_match;

drawMatches(img_1, keypoints_1, img_2, keypoints_2, matches, img_match, Scalar::all(-1));

imshow("good matches", img_match);

waitKey(0); //程序暂停执行,等待一个按键输入

}

void pose_estimation_2d2d(

const std::vector &keypoints_1,

const std::vector &keypoints_2,

const std::vector &matches,

Mat &R, Mat &t) {

// 相机内参,TUM Freiburg2

Mat K = (Mat_(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

//-- 把匹配点转换为vector的形式

vector points1;

vector points2;

for (int i = 0; i < (int) matches.size(); i++) {

points1.push_back(keypoints_1[matches[i].queryIdx].pt);// 将像素坐标转换至相机坐标

points2.push_back(keypoints_2[matches[i].trainIdx].pt);// 将像素坐标转换至相机坐标

}

//-- 计算本质矩阵

Point2d principal_point(325.1, 249.7); //相机主点, TUM dataset标定值

int focal_length = 521; //相机焦距, TUM dataset标定值

Mat essential_matrix;

essential_matrix = findEssentialMat(points1, points2, focal_length, principal_point);

//-- 从本质矩阵中恢复旋转和平移信息.

recoverPose(essential_matrix, points1, points2, R, t, focal_length, principal_point);

}

void triangulation(

const vector &keypoint_1,

const vector &keypoint_2,

const std::vector &matches,

const Mat &R, const Mat &t,

vector &points) {

Mat T1 = (Mat_(3, 4) <<

1, 0, 0, 0,

0, 1, 0, 0,

0, 0, 1, 0);

Mat T2 = (Mat_(3, 4) <<

R.at(0, 0), R.at(0, 1), R.at(0, 2), t.at(0, 0),

R.at(1, 0), R.at(1, 1), R.at(1, 2), t.at(1, 0),

R.at(2, 0), R.at(2, 1), R.at(2, 2), t.at(2, 0)

);

Mat K = (Mat_(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);//相机内参矩阵

vector pts_1, pts_2;//归一化平面上的点,triangulatePoints()函数的输入参数

for (DMatch m:matches) {

// 将像素坐标转换至相机坐标

pts_1.push_back(pixel2cam(keypoint_1[m.queryIdx].pt, K));

pts_2.push_back(pixel2cam(keypoint_2[m.trainIdx].pt, K));

}

Mat pts_4d;

cv::triangulatePoints(T1, T2, pts_1, pts_2, pts_4d);

// 转换成非齐次坐标

for (int i = 0; i < pts_4d.cols; i++) {

Mat x = pts_4d.col(i);//取第i列

x /= x.at(3, 0); // 归一化

Point3d p(

x.at(0, 0),

x.at(1, 0),

x.at(2, 0)

);

points.push_back(p);

}

}

Point2f pixel2cam(const Point2d &p, const Mat &K) {

return Point2f

(

(p.x - K.at(0, 2)) / K.at(0, 0),

(p.y - K.at(1, 2)) / K.at(1, 1)

);

}

CMakeLists.txt和上面是一样的

执行命令:

./triangulation 1.png 2.png 执行结果:

-- Max dist : 94.000000

-- Min dist : 4.000000

一共找到了79组匹配点

depth: 14.4036

图像1像素点的深度为14.4036平移单位

图像1的残差为: -0.0148561, -0.150038)像素单位

图像2像素点的深度为14.8448平移单位

图像2的残差为: -323.06, -113.005)像素单位

depth: 9.63635

图像1像素点的深度为9.63635平移单位

图像1的残差为: -0.019322, -0.644624)像素单位

图像2像素点的深度为10.0757平移单位

图像2的残差为: -233.409, -220.17)像素单位

depth: 7.88393

图像1像素点的深度为7.88393平移单位

图像1的残差为: -0.00437226, 0.114155)像素单位

图像2像素点的深度为8.41888平移单位

图像2的残差为: -69.2036, -307.06)像素单位

depth: 8.35771

图像1像素点的深度为8.35771平移单位

图像1的残差为: 5.11942e-05, -0.0027093)像素单位

图像2像素点的深度为8.79158平移单位

图像2的残差为: -187.365, -291.286)像素单位

depth: 7.75093

图像1像素点的深度为7.75093平移单位

图像1的残差为: -0.00163884, 0.0728191)像素单位

图像2像素点的深度为8.29532平移单位

图像2的残差为: -65.2057, -286.407)像素单位

depth: 12.5495

图像1像素点的深度为12.5495平移单位

图像1的残差为: 0.00141988, 0.0284572)像素单位

图像2像素点的深度为12.786平移单位

图像2的残差为: -411.818, -189.515)像素单位

depth: 7.71705

图像1像素点的深度为7.71705平移单位

图像1的残差为: -0.00137752, 0.149456)像素单位

图像2像素点的深度为8.29455平移单位

图像2的残差为: -39.6193, -266.727)像素单位

depth: 7.93482

图像1像素点的深度为7.93482平移单位

图像1的残差为: 0.0346043, -1.0847)像素单位

图像2像素点的深度为8.47505平移单位

图像2的残差为: -68.2496, -300.149)像素单位

depth: 7.57168

图像1像素点的深度为7.57168平移单位

图像1的残差为: 7.85694e-05, -0.0130595)像素单位

图像2像素点的深度为8.14345平移单位

图像2的残差为: -41.504, -263.776)像素单位

depth: 8.46969

图像1像素点的深度为8.46969平移单位

图像1的残差为: -0.00246999, -0.356362)像素单位

图像2像素点的深度为8.59691平移单位

图像2的残差为: -506.949, -265.725)像素单位

depth: 9.78479

图像1像素点的深度为9.78479平移单位

图像1的残差为: -0.00553395, -0.27674)像素单位

图像2像素点的深度为10.1623平移单位

图像2的残差为: -279.9, -236.262)像素单位

depth: 8.88268

图像1像素点的深度为8.88268平移单位

图像1的残差为: -0.0144425, -0.259716)像素单位

图像2像素点的深度为9.43465平移单位

图像2的残差为: -138.443, -183.222)像素单位

depth: 9.02949

图像1像素点的深度为9.02949平移单位

图像1的残差为: -0.00919512, -0.389588)像素单位

图像2像素点的深度为9.32731平移单位

图像2的残差为: -349.947, -231.917)像素单位

depth: 8.87554

图像1像素点的深度为8.87554平移单位

图像1的残差为: -0.000924774, -0.107203)像素单位

图像2像素点的深度为9.00642平移单位

图像2的残差为: -496.976, -262.768)像素单位

depth: 9.66984

图像1像素点的深度为9.66984平移单位

图像1的残差为: -0.00427046, -0.142931)像素单位

图像2像素点的深度为10.1099平移单位

图像2的残差为: -233.415, -220.181)像素单位

depth: 12.2194

图像1像素点的深度为12.2194平移单位

图像1的残差为: -0.00253309, -0.0511311)像素单位

图像2像素点的深度为12.447平移单位

图像2的残差为: -418.904, -189.863)像素单位

depth: 14.8655

图像1像素点的深度为14.8655平移单位

图像1的残差为: 0.0416501, 0.427027)像素单位

图像2像素点的深度为15.3079平移单位

图像2的残差为: -324.033, -115.67)像素单位

depth: 15.0627

图像1像素点的深度为15.0627平移单位

图像1的残差为: 0.0816033, 0.852222)像素单位

图像2像素点的深度为15.4822平移单位

图像2的残差为: -336.842, -118.057)像素单位

depth: 7.7117

图像1像素点的深度为7.7117平移单位

图像1的残差为: -0.00318172, 0.235026)像素单位

图像2像素点的深度为8.27117平移单位

图像2的残差为: -54.7616, -273.224)像素单位

depth: 8.78825

图像1像素点的深度为8.78825平移单位

图像1的残差为: -0.00246132, -0.290719)像素单位

图像2像素点的深度为8.9215平移单位

图像2的残差为: -496.218, -262.571)像素单位

depth: 9.10896

图像1像素点的深度为9.10896平移单位

图像1的残差为: -0.0259298, -1.09092)像素单位

图像2像素点的深度为9.40757平移单位

图像2的残差为: -349.472, -230.729)像素单位

depth: 9.62574

图像1像素点的深度为9.62574平移单位

图像1的残差为: -0.00712829, -0.361729)像素单位

图像2像素点的深度为10.0024平移单位

图像2的残差为: -279.297, -236.649)像素单位

depth: 8.78611

图像1像素点的深度为8.78611平移单位

图像1的残差为: -0.00323575, -0.38321)像素单位

图像2像素点的深度为8.9158平移单位

图像2的残差为: -499.759, -262.569)像素单位

depth: 12.5336

图像1像素点的深度为12.5336平移单位

图像1的残差为: 0.0264397, 0.525318)像素单位

图像2像素点的深度为12.7705平移单位

图像2的残差为: -411.821, -189.525)像素单位

depth: 7.88369

图像1像素点的深度为7.88369平移单位

图像1的残差为: 0.00499926, -0.207999)像素单位

图像2像素点的深度为8.42969平移单位

图像2的残差为: -66.6515, -288.558)像素单位

depth: 8.45255

图像1像素点的深度为8.45255平移单位

图像1的残差为: 0.000167288, -0.00856084)像素单位

图像2像素点的深度为8.88793平移单位

图像2的残差为: -187.39, -291.278)像素单位

depth: 9.65039

图像1像素点的深度为9.65039平移单位

图像1的残差为: 0.0113906, 0.380628)像素单位

图像2像素点的深度为10.0905平移单位

图像2的残差为: -233.364, -220.049)像素单位

depth: 8.64867

图像1像素点的深度为8.64867平移单位

图像1的残差为: -0.00476583, -1.02933)像素单位

图像2像素点的深度为8.77996平移单位

图像2的残差为: -498.154, -267.517)像素单位

depth: 12.2082

图像1像素点的深度为12.2082平移单位

图像1的残差为: -0.0144103, -0.288414)像素单位

图像2像素点的深度为12.4375平移单位

图像2的残差为: -418.203, -188.685)像素单位

depth: 12.1836

图像1像素点的深度为12.1836平移单位

图像1的残差为: -0.0067673, -0.1348)像素单位

图像2像素点的深度为12.4232平移单位

图像2的残差为: -411.177, -188.687)像素单位

depth: 9.18492

图像1像素点的深度为9.18492平移单位

图像1的残差为: -0.0117828, -0.486061)像素单位

图像2像素点的深度为9.48384平移单位

图像2的残差为: -349.468, -230.743)像素单位

depth: 9.52295

图像1像素点的深度为9.52295平移单位

图像1的残差为: 4.27868e-06, 0.000192284)像素单位

图像2像素点的深度为9.89965平移单位

图像2的残差为: -278.817, -236.417)像素单位

depth: 8.39902

图像1像素点的深度为8.39902平移单位

图像1的残差为: 0.0106431, -0.57336)像素单位

图像2像素点的深度为8.83456平移单位

图像2的残差为: -186.708, -290.173)像素单位

depth: 8.78013

图像1像素点的深度为8.78013平移单位

图像1的残差为: -0.00851937, -0.155527)像素单位

图像2像素点的深度为9.32845平移单位

图像2的残差为: -138.758, -184.074)像素单位

depth: 7.80552

图像1像素点的深度为7.80552平移单位

图像1的残差为: -0.00571924, 0.163689)像素单位

图像2像素点的深度为8.35257平移单位

图像2的残差为: -57.1661, -301.558)像素单位

depth: 8.57948

图像1像素点的深度为8.57948平移单位

图像1的残差为: -0.00305312, -0.73087)像素单位

图像2像素点的深度为8.71138平移单位

图像2的残差为: -498.177, -269.224)像素单位

depth: 8.53905

图像1像素点的深度为8.53905平移单位

图像1的残差为: -0.00644693, -0.82554)像素单位

图像2像素点的深度为8.67311平移单位

图像2的残差为: -499.889, -262.427)像素单位

depth: 14.3688

图像1像素点的深度为14.3688平移单位

图像1的残差为: -0.00555154, -0.0547732)像素单位

图像2像素点的深度为14.8153平移单位

图像2的残差为: -321.513, -109.51)像素单位

depth: 9.03321

图像1像素点的深度为9.03321平移单位

图像1的残差为: -0.0188211, -0.77313)像素单位

图像2像素点的深度为9.33315平移单位

图像2的残差为: -348.627, -230.167)像素单位

depth: 12.3013

图像1像素点的深度为12.3013平移单位

图像1的残差为: -0.00555085, -0.112271)像素单位

图像2像素点的深度为12.5303平移单位

图像2的残差为: -417.624, -189.825)像素单位

depth: 12.3386

图像1像素点的深度为12.3386平移单位

图像1的残差为: 0.0156202, 0.308888)像素单位

图像2像素点的深度为12.5778平移单位

图像2的残差为: -411.151, -188.716)像素单位

depth: 14.5313

图像1像素点的深度为14.5313平移单位

图像1的残差为: 4.78995e-06, 6.16349e-05)像素单位

图像2像素点的深度为14.9694平移单位

图像2的残差为: -324.871, -114.589)像素单位

depth: 7.71837

图像1像素点的深度为7.71837平移单位

图像1的残差为: 0.00950829, -0.744489)像素单位

图像2像素点的深度为8.28189平移单位

图像2的残差为: -50.517, -273.209)像素单位

depth: 7.95137

图像1像素点的深度为7.95137平移单位

图像1的残差为: -0.00520178, 0.398407)像素单位

图像2像素点的深度为8.5142平移单位

图像2的残差为: -59.7056, -272.649)像素单位

depth: 9.54909

图像1像素点的深度为9.54909平移单位

图像1的残差为: -0.019034, -0.633304)像素单位

图像2像素点的深度为9.98727平移单位

图像2的残差为: -233.346, -220.024)像素单位

depth: 8.44807

图像1像素点的深度为8.44807平移单位

图像1的残差为: -1.71908e-05, 0.000849519)像素单位

图像2像素点的深度为8.88461平移单位

图像2的残差为: -186.721, -290.178)像素单位

depth: 9.13752

图像1像素点的深度为9.13752平移单位

图像1的残差为: -0.000702037, 0.275408)像素单位

图像2像素点的深度为9.57372平移单位

图像2的残差为: -207.827, -267.512)像素单位

depth: 9.51844

图像1像素点的深度为9.51844平移单位

图像1的残差为: -0.0124249, -0.605402)像素单位

图像2像素点的深度为9.89509平移单位

图像2的残差为: -279.099, -235.276)像素单位

depth: 9.60796

图像1像素点的深度为9.60796平移单位

图像1的残差为: -0.00195225, -0.112312)像素单位

图像2像素点的深度为10.1347平移单位

图像2的残差为: -151.76, -236.421)像素单位

depth: 9.20528

图像1像素点的深度为9.20528平移单位

图像1的残差为: -0.000143149, -0.00869456)像素单位

图像2像素点的深度为9.72495平移单位

图像2的残差为: -150.207, -235.282)像素单位

depth: 8.86045

图像1像素点的深度为8.86045平移单位

图像1的残差为: 0.00071856, -1.15286)像素单位

图像2像素点的深度为9.29351平移单位

图像2的残差为: -207.425, -265.121)像素单位

depth: 9.33464

图像1像素点的深度为9.33464平移单位

图像1的残差为: -0.0106116, -0.434357)像素单位

图像2像素点的深度为9.63318平移单位

图像2的残差为: -349.97, -230.523)像素单位

depth: 9.40554

图像1像素点的深度为9.40554平移单位

图像1的残差为: -0.0272679, -0.874236)像素单位

图像2像素点的深度为9.84166平移单位

图像2的残差为: -233.998, -218.316)像素单位

depth: 12.4849

图像1像素点的深度为12.4849平移单位

图像1的残差为: -0.0133288, -0.264876)像素单位

图像2像素点的深度为12.7155平移单位

图像2的残差为: -416.578, -188.157)像素单位

depth: 8.26873

图像1像素点的深度为8.26873平移单位

图像1的残差为: -0.000708732, 0.038394)像素单位

图像2像素点的深度为8.7021平移单位

图像2的残差为: -187.238, -289.626)像素单位

depth: 9.41741

图像1像素点的深度为9.41741平移单位

图像1的残差为: -0.00454888, -0.311985)像素单位

图像2像素点的深度为9.93379平移单位

图像2的残差为: -154.686, -240.707)像素单位

depth: 9.40039

图像1像素点的深度为9.40039平移单位

图像1的残差为: 0.00999438, 0.398571)像素单位

图像2像素点的深度为9.69934平移单位

图像2的残差为: -349.966, -230.54)像素单位

depth: 9.31937

图像1像素点的深度为9.31937平移单位

图像1的残差为: -0.000107027, -0.00345081)像素单位

图像2像素点的深度为9.75495平移单位

图像2的残差为: -233.168, -219.547)像素单位

depth: 9.22272

图像1像素点的深度为9.22272平移单位

图像1的残差为: -0.0154454, -0.881064)像素单位

图像2像素点的深度为9.75295平移单位

图像2的残差为: -140.383, -236.624)像素单位

depth: 10.0239

图像1像素点的深度为10.0239平移单位

图像1的残差为: 0.00811372, 0.407089)像素单位

图像2像素点的深度为10.4009平移单位

图像2的残差为: -282.057, -236.664)像素单位

depth: 7.69828

图像1像素点的深度为7.69828平移单位

图像1的残差为: -0.0266667, 0.48813)像素单位

图像2像素点的深度为7.94662平移单位

图像2的残差为: -343.108, -362.549)像素单位

depth: 9.09606

图像1像素点的深度为9.09606平移单位

图像1的残差为: -0.011282, -0.812761)像素单位

图像2像素点的深度为9.60653平移单位

图像2的残差为: -152.542, -241.995)像素单位

depth: 8.77566

图像1像素点的深度为8.77566平移单位

图像1的残差为: -0.0434209, -1.71614)像素单位

图像2像素点的深度为9.07921平移单位

图像2的残差为: -346.004, -227.303)像素单位

depth: 9.33225

图像1像素点的深度为9.33225平移单位

图像1的残差为: -0.0173464, -0.559388)像素单位

图像2像素点的深度为9.76659平移单位

图像2的残差为: -234.636, -218.562)像素单位

depth: 10.8048

图像1像素点的深度为10.8048平移单位

图像1的残差为: -0.0297315, -0.634992)像素单位

图像2像素点的深度为11.294平移单位

图像2的残差为: -220.299, -195.281)像素单位

depth: 11.7904

图像1像素点的深度为11.7904平移单位

图像1的残差为: 2.89686e-05, 0.000424129)像素单位

图像2像素点的深度为12.3007平移单位

图像2的残差为: -221.478, -188.113)像素单位

depth: 7.37231

图像1像素点的深度为7.37231平移单位

图像1的残差为: 0.0625925, -1.08867)像素单位

图像2像素点的深度为7.61585平移单位

图像2的残差为: -349.007, -365.542)像素单位

depth: 9.43498

图像1像素点的深度为9.43498平移单位

图像1的残差为: -0.0269321, -1.35977)像素单位

图像2像素点的深度为9.80737平移单位

图像2的残差为: -281.52, -236.128)像素单位

depth: 7.26135

图像1像素点的深度为7.26135平移单位

图像1的残差为: 0.019889, -0.359244)像素单位

图像2像素点的深度为7.50204平移单位

图像2的残差为: -354.309, -363.812)像素单位

depth: 7.231

图像1像素点的深度为7.231平移单位

图像1的残差为: -1.00576e-05, 0.0001053)像素单位

图像2像素点的深度为7.48103平移单位

图像2的残差为: -343.714, -363.822)像素单位

depth: 9.42683

图像1像素点的深度为9.42683平移单位

图像1的残差为: -0.0501229, -1.51601)像素单位

图像2像素点的深度为9.86365平移单位

图像2的残差为: -234.653, -215.621)像素单位

depth: 9.14959

图像1像素点的深度为9.14959平移单位

图像1的残差为: -0.0146069, -0.541718)像素单位

图像2像素点的深度为9.45331平移单位

图像2的残差为: -347.161, -226.166)像素单位

depth: 10.1792

图像1像素点的深度为10.1792平移单位

图像1的残差为: -0.00940457, -0.47708)像素单位

图像2像素点的深度为10.5547平移单位

图像2的残差为: -283.923, -236.748)像素单位

depth: 9.09463

图像1像素点的深度为9.09463平移单位

图像1的残差为: 0.0219701, 1.378)像素单位

图像2像素点的深度为9.61948平移单位

图像2的残差为: -143.166, -236.758)像素单位

depth: 9.35573

图像1像素点的深度为9.35573平移单位

图像1的残差为: 0.0147491, 1.30236)像素单位

图像2像素点的深度为9.8708平移单位

图像2的残差为: -153.801, -243.794)像素单位

depth: 9.51033

图像1像素点的深度为9.51033平移单位

图像1的残差为: 0.000456967, 0.0429056)像素单位

图像2像素点的深度为9.8855平移单位

图像2的残差为: -273.323, -250.802)像素单位

depth: 8.4322

图像1像素点的深度为8.4322平移单位

图像1的残差为: 0.00538225, -0.297219)像素单位

图像2像素点的深度为8.86664平移单位

图像2的残差为: -188.688, -289.543)像素单位

depth: 7.89044

图像1像素点的深度为7.89044平移单位

图像1的残差为: 0.0250301, -0.638023)像素单位

图像2像素点的深度为8.14523平移单位

图像2的残差为: -347.218, -335.447)像素单位

depth: 9.86828

图像1像素点的深度为9.86828平移单位

图像1的残差为: -0.0297473, -1.69913)像素单位

图像2像素点的深度为10.1808平移单位

图像2的残差为: -333.078, -240.229)像素单位

执行程序所花费的时间为:44.147秒!

pose_estimation_3d2d.cpp

#include

#include //opencv核心模块

#include //opencv特征点

#include //opencv gui

#include //求解器头文件

#include //eigen核心模块

#include //g2o顶点(Vertex)头文件 视觉slam十四讲p141用顶点表示优化变量,用边表示误差项

#include //g2o边(edge)头文件

#include //稠密矩阵求解

#include //求解器头文件

#include

#include //高斯牛顿算法头文件

#include //线性求解

#include //李群李代数se3

#include

using namespace std;

using namespace cv;

void find_feature_matches(

const Mat &img_1, const Mat &img_2,

std::vector &keypoints_1,

std::vector &keypoints_2,

std::vector &matches);//定义find_feature_matches函数 输入图像1和图像2,输出特征点集合1、特征点集合2和匹配点对

// 像素坐标转相机归一化坐标

Point2d pixel2cam(const Point2d &p, const Mat &K);

// BA by g2o

typedef vector> VecVector2d;//2d点 见视觉slam十四讲 p180-p187 需要输入2d点

typedef vector> VecVector3d;//3d点 见视觉slam十四讲 p180-p187 需要输入3d点

void bundleAdjustmentG2O(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose

); //定义bundleAdjustmentG2O函数 输入3d点和2d点和相机内参矩阵,输出pose为优化变量 使用BA方法

// BA by gauss-newton

void bundleAdjustmentGaussNewton(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose

);//定义bundleAdjustmentGaussNewton函数 输入3d点和2d点和相机内参矩阵,输出pose为优化变量 使用高斯牛顿法

int main(int argc, char **argv) {

if (argc != 5) {

cout << "usage: pose_estimation_3d2d img1 img2 depth1 depth2" << endl;//输出命令行用法

return 1;

}

//-- 读取图像

Mat img_1 = imread(argv[1], CV_LOAD_IMAGE_COLOR);//读取图像1 CV_LOAD_IMAGE_COLOR表示返回一张彩色图像

Mat img_2 = imread(argv[2], CV_LOAD_IMAGE_COLOR);//读取图像2 CV_LOAD_IMAGE_COLOR表示返回一张彩色图像

assert(img_1.data && img_2.data && "Can not load images!");//assert()为断言函数,条件为假则停止执行

vector keypoints_1, keypoints_2;//特征点1 -> 图像1 特征点2 -> 图像2

vector matches;//匹配 matches

find_feature_matches(img_1, img_2, keypoints_1, keypoints_2, matches);//调用find_feature_matches函数

cout << "一共找到了" << matches.size() << "组匹配点" << endl;//输出匹配点数

//************************调用opencv函数求解pnp******************************

// 建立3D点

Mat d1 = imread(argv[3], CV_LOAD_IMAGE_UNCHANGED); // 深度图为16位无符号数,单通道图像

Mat K = (Mat_(3, 3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);//初始化相机内参矩阵

vector pts_3d;//3d路标点

vector pts_2d;//2d像素点

for (DMatch m:matches) {

ushort d = d1.ptr(int(keypoints_1[m.queryIdx].pt.y))[int(keypoints_1[m.queryIdx].pt.x)];

if (d == 0) // bad depth

continue;

float dd = d / 5000.0;

Point2d p1 = pixel2cam(keypoints_1[m.queryIdx].pt, K);//像素坐标转相机归一化坐标

pts_3d.push_back(Point3f(p1.x * dd, p1.y * dd, dd));

pts_2d.push_back(keypoints_2[m.trainIdx].pt);

}

cout << "3d-2d pairs: " << pts_3d.size() << endl;//输出3d-2d pairs

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();//开始计时

Mat r, t;//r表示旋转向量 t表示平移向量

solvePnP(pts_3d, pts_2d, K, Mat(), r, t, false); // 调用OpenCV 的 PnP 求解,可选择EPNP,DLS等方法

Mat R;//旋转矩阵R

cv::Rodrigues(r, R); // r为旋转向量形式,用Rodrigues公式转换为矩阵 视觉slam十四讲p53式3.15

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();//计时结束

chrono::duration time_used = chrono::duration_cast>(t2 - t1);//计算耗时

cout << "solve pnp in opencv cost time: " << time_used.count() << " seconds." << endl;//输出使用opencv中使用pnp所花费的时间

cout << "R=" << endl << R << endl;//输出旋转矩阵

cout << "t=" << endl << t << endl;//输出平移向量

//*************************使用BA方法*****************************************

VecVector3d pts_3d_eigen;

VecVector2d pts_2d_eigen;

for (size_t i = 0; i < pts_3d.size(); ++i) //遍历3d点

{

pts_3d_eigen.push_back(Eigen::Vector3d(pts_3d[i].x, pts_3d[i].y, pts_3d[i].z));

pts_2d_eigen.push_back(Eigen::Vector2d(pts_2d[i].x, pts_2d[i].y));

}

cout << "calling bundle adjustment by gauss newton" << endl;//输出calling bundle adjustment by gauss newton

Sophus::SE3d pose_gn;

t1 = chrono::steady_clock::now();//计时开始

bundleAdjustmentGaussNewton(pts_3d_eigen, pts_2d_eigen, K, pose_gn);//调用bundleAdjustmentGaussNewton函数

t2 = chrono::steady_clock::now();//计时结束

time_used = chrono::duration_cast>(t2 - t1);//计时结束

cout << "solve pnp by gauss newton cost time: " << time_used.count() << " seconds." << endl;//输出

cout << "calling bundle adjustment by g2o" << endl;

Sophus::SE3d pose_g2o;

t1 = chrono::steady_clock::now();

bundleAdjustmentG2O(pts_3d_eigen, pts_2d_eigen, K, pose_g2o);

t2 = chrono::steady_clock::now();

time_used = chrono::duration_cast>(t2 - t1);

cout << "solve pnp by g2o cost time: " << time_used.count() << " seconds." << endl;//输出在G2O中求解pnp耗时

return 0;

}

void find_feature_matches(const Mat &img_1, const Mat &img_2,

std::vector &keypoints_1,

std::vector &keypoints_2,

std::vector &matches) {

//-- 初始化

Mat descriptors_1, descriptors_2;//描述子和描述子2

// used in OpenCV3

Ptr detector = ORB::create();

Ptr descriptor = ORB::create();

// use this if you are in OpenCV2

// Ptr detector = FeatureDetector::create ( "ORB" );

// Ptr descriptor = DescriptorExtractor::create ( "ORB" );

Ptr matcher = DescriptorMatcher::create("BruteForce-Hamming");//使用汉明距离进行特征匹配

//-- 第一步:检测 Oriented FAST 角点位置

detector->detect(img_1, keypoints_1);

detector->detect(img_2, keypoints_2);

//-- 第二步:根据角点位置计算 BRIEF 描述子

descriptor->compute(img_1, keypoints_1, descriptors_1);//检测图像1的 Oriented FAST 角点

descriptor->compute(img_2, keypoints_2, descriptors_2);//检测图像2的 Oriented FAST 角点

//-- 第三步:对两幅图像中的BRIEF描述子进行匹配,使用 Hamming 距离

vector match;

// BFMatcher matcher ( NORM_HAMMING );

matcher->match(descriptors_1, descriptors_2, match);

//-- 第四步:匹配点对筛选

double min_dist = 10000, max_dist = 0;//求最小距离和最大距离

//找出所有匹配之间的最小距离和最大距离, 即是最相似的和最不相似的两组点之间的距离

for (int i = 0; i < descriptors_1.rows; i++) {

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist);

printf("-- Min dist : %f \n", min_dist);

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误.但有时候最小距离会非常小,设置一个经验值30作为下限.

//遍历所有匹配,排除误匹配

for (int i = 0; i < descriptors_1.rows; i++) {

if (match[i].distance <= max(2 * min_dist, 30.0))//不同的结果可以在这里设置

//当描述子之间的距离大于两倍的最小距离时,即认为匹配有误 30.0为经验值

{

matches.push_back(match[i]);

}

}

}

Point2d pixel2cam(const Point2d &p, const Mat &K)

{

return Point2d

(

(p.x - K.at(0, 2)) / K.at(0, 0),

(p.y - K.at(1, 2)) / K.at(1, 1)

);

}

void bundleAdjustmentGaussNewton(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose) {

typedef Eigen::Matrix Vector6d;//6*1矩阵

const int iterations = 10;//迭代次数设为10

double cost = 0, lastCost = 0;

double fx = K.at(0, 0);//相机内参 具体含义见视觉slam十四讲p99

double fy = K.at(1, 1);//相机内参 具体含义见视觉slam十四讲p99

double cx = K.at(0, 2);//相机内参 具体含义见视觉slam十四讲p99

double cy = K.at(1, 2);//相机内参 具体含义见视觉slam十四讲p99

for (int iter = 0; iter < iterations; iter++)//迭代

{

Eigen::Matrix H = Eigen::Matrix::Zero();//将6*6矩阵初始化为0

Vector6d b = Vector6d::Zero();

cost = 0;//代价函数值置为0

// compute cost

for (int i = 0; i < points_3d.size(); i++)//遍历3d点

//计算增量方程中的H和b以及代价函数值

{

Eigen::Vector3d pc = pose * points_3d[i];//P' = TP =[X',Y',Z'] 视觉slam十四讲p186式7.38

double inv_z = 1.0 / pc[2];//相当于1 / Z'

double inv_z2 = inv_z * inv_z;//相当于( 1 / Z' ) * ( 1 / Z' )

Eigen::Vector2d proj(fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy);//u = fx(X' / z') +cx,v = fy(Y' / z') +cy 视觉slam十四讲p186式7.41

Eigen::Vector2d e = points_2d[i] - proj;

cost += e.squaredNorm();

Eigen::Matrix J;//2*6雅克比矩阵 视觉slam十四讲p186式7.46

//雅克比矩阵表达式见 视觉slam十四讲p186式7.46

J << -fx * inv_z,

0,

fx * pc[0] * inv_z2,

fx * pc[0] * pc[1] * inv_z2,

-fx - fx * pc[0] * pc[0] * inv_z2,

fx * pc[1] * inv_z,

0,

-fy * inv_z,

fy * pc[1] * inv_z2,

fy + fy * pc[1] * pc[1] * inv_z2,

-fy * pc[0] * pc[1] * inv_z2,

-fy * pc[0] * inv_z;

H += J.transpose() * J;

b += -J.transpose() * e;

}

// -fx * inv_z 相当于-fx / Z'

//0

//fx * pc[0] * inv_z2相当于fx * X' / ( Z' * Z' )

//-fx - fx * pc[0] * pc[0] * inv_z2相当于fx * X' * Y' / ( Z' * Z')

//fx * pc[1] * inv_z相当于fx * Y' / Z'

//0

//-fy * inv_z相当于-fy / Z'

//fy * pc[1] * inv_z2相当于fy * Y' / (Z' * Z')

//fy + fy * pc[1] * pc[1] * inv_z2相当于fy + fy * Y'*Y' / (Z' * Z')

//-fy * pc[0] * pc[1] * inv_z2相当于fy * X' * Y' / ( Z' * Z')

//-fy * pc[0] * inv_z相当于-fy * X' / Z'

Vector6d dx;

dx = H.ldlt().solve(b);//LDT求解

if (isnan(dx[0])) {

cout << "result is nan!" << endl;

break;

}

if (iter > 0 && cost >= lastCost) {

// cost increase, update is not good

cout << "cost: " << cost << ", last cost: " << lastCost << endl;//输出代价

break;

}

// update your estimation

pose = Sophus::SE3d::exp(dx) * pose;

lastCost = cost;

cout << "iteration " << iter << " cost=" << std::setprecision(12) << cost << endl;//输出迭代次数和代价

if (dx.norm() < 1e-6) {

// converge

break;

}

}

cout << "pose by g-n: \n" << pose.matrix() << endl;//输出pose by g-n

}

/// vertex and edges used in g2o ba

// 曲线模型的顶点,模板参数:优化变量维度和数据类型

class VertexPose : public g2o::BaseVertex<6, Sophus::SE3d>//:表示继承,public表示公有继承;CurveFittingVertex是派生类,:BaseVertex<6, Sophus::SE3d>是基类

{

public://以下定义的成员变量和成员函数都是公有的

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;//解决Eigen库数据结构内存对齐问题

// 重置

virtual void setToOriginImpl() override //virtual表示该函数为虚函数,override保留字表示当前函数重写了基类的虚函数

{

_estimate = Sophus::SE3d();

}

// left multiplication on SE3

// 更新

virtual void oplusImpl(const double *update) override {

Eigen::Matrix update_eigen;

update_eigen << update[0], update[1], update[2], update[3], update[4], update[5];

_estimate = Sophus::SE3d::exp(update_eigen) * _estimate;

}

// 存盘和读盘:留空

virtual bool read(istream &in) override {}//istream类是c++标准输入流的一个基类

//可参照C++ Primer Plus第六版的6.8节

virtual bool write(ostream &out) const override {}//ostream类是c++标准输出流的一个基类

//可参照C++ Primer Plus第六版的6.8节

};

// 误差模型 模板参数:观测值维度,类型,连接顶点类型

class EdgeProjection : public g2o::BaseUnaryEdge<2, Eigen::Vector2d, VertexPose> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;//解决Eigen库数据结构内存对齐问题

EdgeProjection(const Eigen::Vector3d &pos, const Eigen::Matrix3d &K) : _pos3d(pos), _K(K) {}//使用列表赋初值

virtual void computeError() override//virtual表示虚函数,保留字override表示当前函数重写了基类的虚函数

{

const VertexPose *v = static_cast (_vertices[0]);//创建指针v

Sophus::SE3d T = v->estimate();//将estimate()值赋给V

Eigen::Vector3d pos_pixel = _K * (T * _pos3d);

pos_pixel /= pos_pixel[2];

_error = _measurement - pos_pixel.head<2>();

}

virtual void linearizeOplus() override {

const VertexPose *v = static_cast (_vertices[0]);

Sophus::SE3d T = v->estimate();

Eigen::Vector3d pos_cam = T * _pos3d;

double fx = _K(0, 0);

double fy = _K(1, 1);

double cx = _K(0, 2);

double cy = _K(1, 2);

double X = pos_cam[0];

double Y = pos_cam[1];

double Z = pos_cam[2];

double Z2 = Z * Z;

_jacobianOplusXi

<< -fx / Z, 0, fx * X / Z2, fx * X * Y / Z2, -fx - fx * X * X / Z2, fx * Y / Z,

0, -fy / Z, fy * Y / (Z * Z), fy + fy * Y * Y / Z2, -fy * X * Y / Z2, -fy * X / Z;

} //雅克比矩阵表达式见 视觉slam十四讲p186式7.46

virtual bool read(istream &in) override {}

virtual bool write(ostream &out) const override {}

private:

Eigen::Vector3d _pos3d;

Eigen::Matrix3d _K;

};

void bundleAdjustmentG2O(

const VecVector3d &points_3d,

const VecVector2d &points_2d,

const Mat &K,

Sophus::SE3d &pose) {

// 构建图优化,先设定g2o

typedef g2o::BlockSolver> BlockSolverType; // pose is 6, landmark is 3

typedef g2o::LinearSolverDense LinearSolverType; // 线性求解器类型

// 梯度下降方法,可以从GN, LM, DogLeg 中选

auto solver = new g2o::OptimizationAlgorithmGaussNewton(

g2o::make_unique(g2o::make_unique()));//c++中的make_unique表示智能指针类型

g2o::SparseOptimizer optimizer; // 图模型

optimizer.setAlgorithm(solver); // 设置求解器

optimizer.setVerbose(true); // 打开调试输出

// vertex

// 往图中增加顶点

VertexPose *vertex_pose = new VertexPose(); // camera vertex_pose

vertex_pose->setId(0);//对顶点进行编号,里面的0你可以写成任意的正整数,但是后面设置edge连接顶点时,必须要和这个一致

vertex_pose->setEstimate(Sophus::SE3d());

optimizer.addVertex(vertex_pose);//添加顶点

// K 相机内参矩阵

Eigen::Matrix3d K_eigen;

K_eigen <<

K.at(0, 0), K.at(0, 1), K.at(0, 2),

K.at(1, 0), K.at(1, 1), K.at(1, 2),

K.at(2, 0), K.at(2, 1), K.at(2, 2);

// edges

// 往图中增加边

int index = 1;

for (size_t i = 0; i < points_2d.size(); ++i)//遍历2d点

{

auto p2d = points_2d[i];

auto p3d = points_3d[i];

EdgeProjection *edge = new EdgeProjection(p3d, K_eigen);

edge->setId(index);//对顶点进行编号,里面的0你可以写成任意的正整数,但是后面设置edge连接顶点时,必须要和这个一致

edge->setVertex(0, vertex_pose); // 设置连接的顶点

edge->setMeasurement(p2d);// 观测数值

edge->setInformation(Eigen::Matrix2d::Identity());// 信息矩阵:协方差矩阵之逆

optimizer.addEdge(edge);//添加边

index++;

}