YOLOv5 Notes

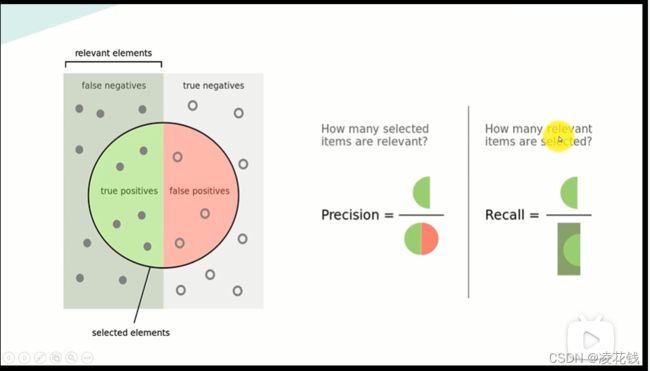

This figure gives a vivid picture of the difference between Precision and Recall, which makes the two easy to tell apart.
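To make the two definitions concrete, here is a minimal sketch that computes both from detection counts. The function name `precision_recall` and the counts are made up for illustration.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from true-positive, false-positive and false-negative counts."""
    # Precision: of everything the detector reported, how much was correct?
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: of everything that was really there, how much did the detector find?
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical counts: 8 correct detections, 2 false alarms, 4 missed objects.
p, r = precision_recall(tp=8, fp=2, fn=4)
print(f"precision={p:.2f}, recall={r:.2f}")  # precision=0.80, recall=0.67
```

Raising the confidence threshold usually trades recall for precision, which is why the two are reported together.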

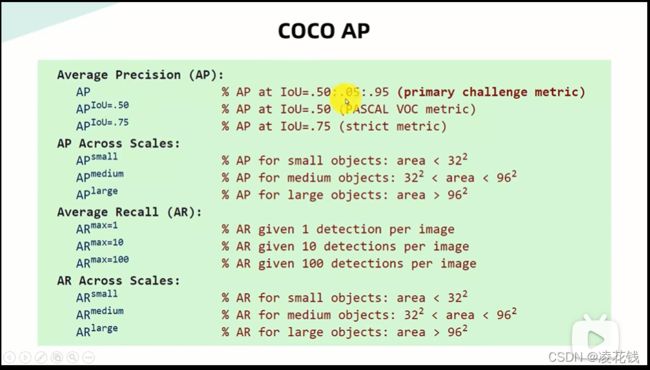

COCO's more fine-grained definitions of AP and mAP.
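As a rough sketch of how a COCO-style number is assembled: AP for one class at one IoU threshold is read off the precision-recall curve at 101 recall points, and the headline figure averages that over the IoU thresholds 0.50, 0.55, ..., 0.95 and over classes. The helper names and the toy inputs below are assumptions for illustration, not the pycocotools implementation.

```python
def ap_101_point(recalls, precisions):
    """AP for one class at one IoU threshold using 101-point interpolation.

    recalls must be sorted ascending; precisions[i] is the precision at recalls[i].
    """
    # Precision envelope: make precision non-increasing as recall grows.
    envelope = list(precisions)
    for i in range(len(envelope) - 2, -1, -1):
        envelope[i] = max(envelope[i], envelope[i + 1])
    total = 0.0
    for k in range(101):
        r = k / 100
        # Precision at the first point whose recall reaches r, or 0 if r is never reached.
        total += next((envelope[i] for i, rec in enumerate(recalls) if rec >= r), 0.0)
    return total / 101


# The ten IoU thresholds averaged by the headline COCO metric.
iou_thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def coco_style_map(ap_table):
    """ap_table maps class name -> list of APs, one per IoU threshold."""
    per_class = [sum(aps) / len(aps) for aps in ap_table.values()]
    return sum(per_class) / len(per_class)


ap = ap_101_point([0.5, 1.0], [1.0, 0.5])  # toy curve with two operating points
toy_map = coco_style_map({"cat": [1.0] * 10, "dog": [0.5] * 10})  # hypothetical APs
```

AP50 and AP75 are the same computation restricted to a single IoU threshold (0.50 or 0.75).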

Recommended articles, all very clearly explained:

"YOLO Series Made Simple: YOLOv5" (original title: 深入浅出yolo系列之yolov5)

Related improvement strategies and directions for further study

How to get started, and improvement strategies

This article explains in great detail how the YOLOv5 6.1 network differs from the older YOLOv5 versions.

Highly recommended.

Experiment notes on some improvement methods

1. Attention mechanisms

(1) Used Conv_CBAM to replace every Conv module.

See here and here.

This had almost no effect!

Recommended blog post: how to add an attention mechanism.

(2) Combine the attention mechanism with Bottleneck and replace all C3 modules in the backbone.

(3) Add a standalone attention module at the end of the backbone.

(4) In YOLOv5 6.0, add the attention mechanism immediately above the SPPF layer.
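For reference, the attention block used in the variants above can be sketched roughly as follows. This is a generic CBAM (channel attention followed by spatial attention) written from the published description, not the Conv_CBAM code from the linked posts; the class names, `reduction=16` and `kernel_size=7` are assumptions.

```python
import torch
import torch.nn as nn


class ChannelAttention(nn.Module):
    """Reweights channels using average- and max-pooled descriptors fed through a shared MLP."""

    def __init__(self, channels, reduction=16):
        super().__init__()
        hidden = max(channels // reduction, 1)
        self.mlp = nn.Sequential(
            nn.Conv2d(channels, hidden, 1, bias=False),
            nn.ReLU(),
            nn.Conv2d(hidden, channels, 1, bias=False),
        )

    def forward(self, x):
        avg = self.mlp(torch.mean(x, dim=(2, 3), keepdim=True))
        mx = self.mlp(torch.amax(x, dim=(2, 3), keepdim=True))
        return x * torch.sigmoid(avg + mx)


class SpatialAttention(nn.Module):
    """Reweights spatial positions using channel-wise mean and max maps."""

    def __init__(self, kernel_size=7):
        super().__init__()
        self.conv = nn.Conv2d(2, 1, kernel_size, padding=kernel_size // 2, bias=False)

    def forward(self, x):
        avg = torch.mean(x, dim=1, keepdim=True)
        mx = torch.amax(x, dim=1, keepdim=True)
        return x * torch.sigmoid(self.conv(torch.cat([avg, mx], dim=1)))


class CBAM(nn.Module):
    """Channel attention followed by spatial attention; output shape equals input shape."""

    def __init__(self, channels, reduction=16, kernel_size=7):
        super().__init__()
        self.channel = ChannelAttention(channels, reduction)
        self.spatial = SpatialAttention(kernel_size)

    def forward(self, x):
        return self.spatial(self.channel(x))


x = torch.randn(2, 32, 8, 8)
y = CBAM(32)(x)  # same shape as x
```

Because the block preserves the tensor shape, it can be dropped in after a convolution, inside a Bottleneck, or as a standalone layer without changing the channel arguments of the layers around it.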

2. Replace the Conv module's activation function with ACON (the Conv default in YOLOv5 v4.0 and later is SiLU rather than ReLU)

Recommended blog post: very detailed, highly recommended.
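As a quick illustration of what is being swapped in, the ACON-C form can be written on scalars as below. In the real module `p1`, `p2` and `beta` are learnable (typically per channel); here they are plain arguments, and the function names are chosen for this sketch only.

```python
import math


def acon_c(x, p1=1.0, p2=0.0, beta=1.0):
    """ACON-C: (p1 - p2) * x * sigmoid(beta * (p1 - p2) * x) + p2 * x."""
    d = (p1 - p2) * x
    return d / (1.0 + math.exp(-beta * d)) + p2 * x


def silu(x):
    """SiLU / Swish-1, for comparison."""
    return x / (1.0 + math.exp(-x))


same_as_silu = acon_c(1.5)             # p1=1, p2=0, beta=1 reduces to SiLU
almost_relu = acon_c(-2.0, beta=50.0)  # a large beta approaches max(p1*x, p2*x)
linear_case = acon_c(3.0, p1=1.0, p2=0.25, beta=0.0)  # beta=0 gives (p1 + p2) * x / 2
```

The `beta` parameter therefore controls how far the activation sits between a linear function and a ReLU-like switch.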

3. BiFPN feature fusion

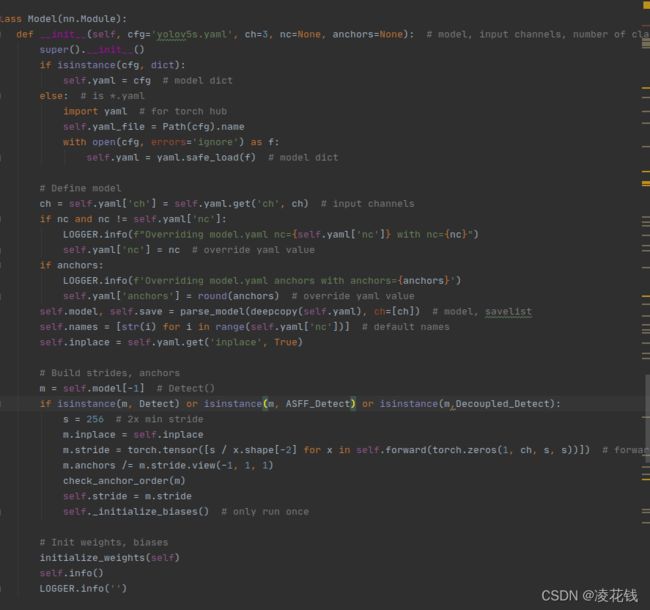
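The core of BiFPN's fusion step is a learned, normalized weighting of the input feature maps. The sketch below shows the "fast normalized fusion" rule on plain Python lists standing in for feature maps that are already resized to the same shape; the function name and the sample numbers are assumptions.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse equally sized feature vectors as sum_i(w_i * f_i) / (eps + sum_j w_j), with w_i >= 0."""
    # ReLU on the weights keeps every contribution non-negative.
    w = [max(wi, 0.0) for wi in weights]
    norm = eps + sum(w)
    n = len(features[0])
    return [sum(wi * f[k] for wi, f in zip(w, features)) / norm for k in range(n)]


# Two toy "feature maps" flattened to length 2, with hypothetical learned weights.
fused = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], weights=[1.0, 3.0])
# A negative weight is clipped to zero, so only the second input contributes.
clipped = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], weights=[-1.0, 2.0])
```

In a network the weights would be trainable parameters, one per incoming edge of each fusion node.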

4. ASFF_Detect and Decoupled_Detect

Modifying the code by following this blogger's post (see the blogger's page) may produce a "no stride" error.

When that happens, open yolo.py, find the "Build strides, anchors" section under the Model class, and add the new detection-head type there.
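The reason this fixes the error, as far as I can tell from YOLOv5 6.x, is that the stride setup is guarded by `isinstance(m, Detect)`, so a head of another class skips it and never gets a `stride`. The sketch below reproduces only that type check with empty stand-in classes; the helper `builds_stride` is hypothetical and is not part of yolo.py.

```python
# Empty stand-ins for the real head classes, just to demonstrate the type check.
class Detect: ...
class ASFF_Detect: ...
class Decoupled_Detect: ...


HEAD_TYPES = (Detect, ASFF_Detect, Decoupled_Detect)


def builds_stride(head, head_types=(Detect,)):
    """True if the stride/anchor setup would run for this head."""
    return isinstance(head, head_types)


before = builds_stride(ASFF_Detect())             # original check: setup is skipped
after = builds_stride(ASFF_Detect(), HEAD_TYPES)  # widened check: setup runs
```

In yolo.py the equivalent change is to widen the existing `isinstance` check to a tuple that includes the new head classes.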

5. Modify the loss function

Take alpha-IoU as the first example.

1. First, implement alpha-IoU in YOLOv5's utils/metrics.py:

# -------------------------------------- alpha_iouloss
# Needs `math` and `torch`; utils/metrics.py already imports both.
def bbox_alpha_iou(box1, box2, x1y1x2y2=False, GIoU=False, DIoU=False, CIoU=False, alpha=2, eps=1e-9):
    # Returns the IoU of box1 to box2, raised to the power alpha. box1 is 4, box2 is nx4
    box2 = box2.T

    # Get the coordinates of bounding boxes
    if x1y1x2y2:  # x1, y1, x2, y2 = box1
        b1_x1, b1_y1, b1_x2, b1_y2 = box1[0], box1[1], box1[2], box1[3]
        b2_x1, b2_y1, b2_x2, b2_y2 = box2[0], box2[1], box2[2], box2[3]
    else:  # transform from xywh to xyxy
        b1_x1, b1_x2 = box1[0] - box1[2] / 2, box1[0] + box1[2] / 2
        b1_y1, b1_y2 = box1[1] - box1[3] / 2, box1[1] + box1[3] / 2
        b2_x1, b2_x2 = box2[0] - box2[2] / 2, box2[0] + box2[2] / 2
        b2_y1, b2_y2 = box2[1] - box2[3] / 2, box2[1] + box2[3] / 2

    # Intersection area
    inter = (torch.min(b1_x2, b2_x2) - torch.max(b1_x1, b2_x1)).clamp(0) * \
            (torch.min(b1_y2, b2_y2) - torch.max(b1_y1, b2_y1)).clamp(0)

    # Union area
    w1, h1 = b1_x2 - b1_x1, b1_y2 - b1_y1 + eps
    w2, h2 = b2_x2 - b2_x1, b2_y2 - b2_y1 + eps
    union = w1 * h1 + w2 * h2 - inter + eps

    # Change iou into pow(iou + eps, alpha)
    # iou = inter / union
    iou = torch.pow(inter / union + eps, alpha)
    beta = 2 * alpha
    if GIoU or DIoU or CIoU:
        cw = torch.max(b1_x2, b2_x2) - torch.min(b1_x1, b2_x1)  # convex (smallest enclosing box) width
        ch = torch.max(b1_y2, b2_y2) - torch.min(b1_y1, b2_y1)  # convex height
        if CIoU or DIoU:  # Distance or Complete IoU https://arxiv.org/abs/1911.08287v1
            c2 = cw ** beta + ch ** beta + eps  # convex diagonal
            rho_x = torch.abs(b2_x1 + b2_x2 - b1_x1 - b1_x2)
            rho_y = torch.abs(b2_y1 + b2_y2 - b1_y1 - b1_y2)
            rho2 = (rho_x ** beta + rho_y ** beta) / (2 ** beta)  # center distance
            if DIoU:
                return iou - rho2 / c2  # DIoU
            elif CIoU:  # https://github.com/Zzh-tju/DIoU-SSD-pytorch/blob/master/utils/box/box_utils.py#L47
                v = (4 / math.pi ** 2) * torch.pow(torch.atan(w2 / h2) - torch.atan(w1 / h1), 2)
                with torch.no_grad():
                    alpha_ciou = v / ((1 + eps) - inter / union + v)
                # return iou - (rho2 / c2 + v * alpha_ciou)  # CIoU
                return iou - (rho2 / c2 + torch.pow(v * alpha_ciou + eps, alpha))  # CIoU
        else:  # GIoU https://arxiv.org/pdf/1902.09630.pdf
            # c_area = cw * ch + eps  # convex area
            # return iou - (c_area - union) / c_area  # GIoU
            c_area = torch.max(cw * ch + eps, union)  # convex area
            return iou - torch.pow((c_area - union) / c_area + eps, alpha)  # GIoU
    else:
        return iou  # torch.log(iou+eps) or iou

2. Then, in utils/loss.py, replace the call to bbox_iou with bbox_alpha_iou:

#iou = bbox_iou(pbox.T, tbox[i], x1y1x2y2=False, CIoU=True) # iou(prediction, target)

iou = bbox_alpha_iou(pbox.T, tbox[i], x1y1x2y2=False, alpha=3, CIoU=True)

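3. To switch to EIoU, only the arguments passed to bbox_iou need to change (this assumes bbox_iou has been extended with an EIoU flag; the stock function only offers GIoU, DIoU and CIoU):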

iou = bbox_iou(pbox.T, tbox[i], x1y1x2y2=False, EIoU=True) # iou(prediction, target)
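To see what the two variants compute, here is a scalar sketch on a single pair of boxes in (x1, y1, x2, y2) form. It follows the commonly cited definitions: plain alpha-IoU raises IoU to the power alpha, and EIoU subtracts a center-distance term plus separate width and height terms. The helper names and sample boxes are assumptions, and the box loss is taken as 1 minus the returned value, as in YOLOv5's loss.py.

```python
def iou_terms(b1, b2, eps=1e-9):
    """Return IoU, both (w, h) pairs, the enclosing box (cw, ch) and squared center distance."""
    iw = max(0.0, min(b1[2], b2[2]) - max(b1[0], b2[0]))
    ih = max(0.0, min(b1[3], b2[3]) - max(b1[1], b2[1]))
    inter = iw * ih
    w1, h1 = b1[2] - b1[0], b1[3] - b1[1]
    w2, h2 = b2[2] - b2[0], b2[3] - b2[1]
    union = w1 * h1 + w2 * h2 - inter + eps
    cw = max(b1[2], b2[2]) - min(b1[0], b2[0])  # enclosing box width
    ch = max(b1[3], b2[3]) - min(b1[1], b2[1])  # enclosing box height
    rho2 = ((b2[0] + b2[2] - b1[0] - b1[2]) ** 2 + (b2[1] + b2[3] - b1[1] - b1[3]) ** 2) / 4
    return inter / union, (w1, h1), (w2, h2), (cw, ch), rho2


def alpha_iou_loss(b1, b2, alpha=3):
    """Plain alpha-IoU loss: 1 - IoU ** alpha (no GIoU/DIoU/CIoU penalty)."""
    return 1.0 - iou_terms(b1, b2)[0] ** alpha


def eiou(b1, b2, eps=1e-9):
    """EIoU value: IoU minus center-distance, width and height penalties."""
    iou, (w1, h1), (w2, h2), (cw, ch), rho2 = iou_terms(b1, b2)
    return (iou
            - rho2 / (cw ** 2 + ch ** 2 + eps)
            - (w1 - w2) ** 2 / (cw ** 2 + eps)
            - (h1 - h2) ** 2 / (ch ** 2 + eps))


pred, target = (0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0)
loss_alpha = alpha_iou_loss(pred, target, alpha=3)  # 1 - (1/7) ** 3
loss_eiou = 1.0 - eiou(pred, target)                # 1 - (1/7 - 2/18)
```

For these two equally sized boxes the width and height penalties vanish, so EIoU reduces to the DIoU value.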

Some data-processing recommendations

Here: code for converting dataset labels and for splitting the dataset.
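The linked code is not reproduced here, but the two operations can be sketched roughly like this, assuming the source labels are pixel-coordinate boxes (xmin, ymin, xmax, ymax) and the target is YOLO's normalized (x_center, y_center, width, height). The function names, split ratios and file names are made up for illustration.

```python
import random


def xyxy_to_yolo(box, img_w, img_h):
    """Convert a pixel (xmin, ymin, xmax, ymax) box to normalized (xc, yc, w, h)."""
    xmin, ymin, xmax, ymax = box
    return (
        (xmin + xmax) / 2 / img_w,
        (ymin + ymax) / 2 / img_h,
        (xmax - xmin) / img_w,
        (ymax - ymin) / img_h,
    )


def split_dataset(items, train_ratio=0.8, val_ratio=0.1, seed=0):
    """Shuffle with a fixed seed and return (train, val, test) lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train_ratio)
    n_val = int(len(items) * val_ratio)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


yolo_box = xyxy_to_yolo((100, 200, 300, 400), img_w=640, img_h=480)
names = [f"img_{i}.jpg" for i in range(10)]  # hypothetical file names
train, val, test = split_dataset(names)
```

Fixing the seed keeps the split reproducible, so experiments with different model changes are compared on the same validation images.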

Toolset