1. BERT

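Table of Contents

- The BERT model
- I. BERT in practice
  - 1. BERT hands-on, part 1
  - 2. Onward! BERT sentence embeddings
  - 3. [BERT multi-label text classification in practice] Part 1: project overview
  - 4. [PyTorch] BERT + LSTM + multi-head self-attention (text classification) (worth consulting)
  - 5. BERT + Bi-LSTM + CRF for Chinese medical named-entity recognition
  - 6. Natural language processing (NLP): combining BERT with LSTM
  - 7. Building training data for BERT + LSTM + CRF entity recognition
  - 8. BERT followed by an LSTM
- II. BERT output format
- III. Tokenizer quick start
  - 2.1 Common Tokenizer inputs and outputs, explained with examples
  - 2.2 Tokenizer quick start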

The BERT model

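- BERT stacks multiple Transformer encoder layers whose self-attention is bidirectional: every token attends to context on both its left and its right. In the architecture figure, each blue circle in the middle represents one Transformer block.

- The overall structure of BERT is shown in the figure below. It is a language representation model built on the Transformer: input text is preprocessed with WordPiece tokenization, and the model is pretrained on two tasks, masked language modeling (MLM) and next sentence prediction (NSP).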
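WordPiece, mentioned above, splits each word into the longest vocabulary pieces it can find, working left to right; pieces that continue a word carry a `##` prefix. The plain-Python sketch below illustrates that greedy longest-match-first step. The toy vocabulary is hypothetical, and the function only handles one already-separated word: in the real BERT tokenizer a basic pre-tokenization pass first splits on whitespace and punctuation (and, for bert-base-chinese, isolates each Chinese character, so Chinese text mostly ends up as one token per character).

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]", max_chars=100):
    """Greedy longest-match-first WordPiece split of a single word.

    Non-initial pieces carry the "##" continuation prefix.
    """
    if len(word) > max_chars:
        return [unk_token]
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it one character at a time.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            # No piece matches at this position: the whole word becomes [UNK].
            return [unk_token]
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabulary, for illustration only (not BERT's real vocab).
toy_vocab = {"un", "##aff", "##able", "play", "##ing", "[UNK]"}

print(wordpiece_tokenize("unaffable", toy_vocab))  # ['un', '##aff', '##able']
print(wordpiece_tokenize("playing", toy_vocab))    # ['play', '##ing']
print(wordpiece_tokenize("xyz", toy_vocab))        # ['[UNK]']
```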

I. BERT in practice

1. BERT hands-on, part 1

https://blog.csdn.net/weixin_43734080/article/details/123680472

2. Onward! BERT sentence embeddings

https://jmxgodlz.xyz/2022/11/05/2022-11-05-%E8%BF%9B%E5%87%BB%EF%BC%81BERT%E5%8F%A5%E5%90%91%E9%87%8F%E8%A1%A8%E5%BE%81/#more

https://zhuanlan.zhihu.com/p/580831742

3. [BERT multi-label text classification in practice] Part 1: project overview

https://blog.csdn.net/qq_43592352/article/details/125949887

4. [PyTorch] BERT + LSTM + multi-head self-attention (text classification) (worth consulting)

https://blog.csdn.net/weixin_42419611/article/details/123123340?

5. BERT + Bi-LSTM + CRF for Chinese medical named-entity recognition

https://zhuanlan.zhihu.com/p/453350271

6. Natural language processing (NLP): combining BERT with LSTM

https://blog.csdn.net/zhangtingduo/article/details/108474401

7. Building training data for BERT + LSTM + CRF entity recognition

https://www.cnblogs.com/little-horse/p/11731552.html

8. BERT followed by an LSTM

https://www.zhihu.com/search?type=content&q=Bert%EF%BC%8C%E5%90%8E%E9%9D%A2%E6%8E%A5lstm%E3%80%82

II. BERT output format

BERT outputs with examples: https://blog.csdn.net/weixin_44317740/article/details/113248250?

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("bert-base-chinese")

model = AutoModel.from_pretrained("bert-base-chinese")

inputs = tokenizer("我想快点发论文", return_tensors="pt")  # "I want to publish a paper soon"; "pt" returns PyTorch tensors

outputs = model(**inputs)  # forward pass; unpacks input_ids, token_type_ids and attention_mask as keyword arguments

print(inputs)

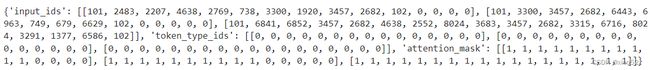

{'input_ids': tensor([[ 101, 2769, 2682, 2571, 4157, 1355, 6389, 3152, 102]]), 'token_type_ids': tensor([[0, 0, 0, 0, 0, 0, 0, 0, 0]]), 'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1]])}

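# Older transformers versions return a plain tuple (printed below). From v4 on,
# outputs is a ModelOutput; outputs[0] and outputs[1] still work and equal
# outputs.last_hidden_state and outputs.pooler_output.
print(outputs)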

(tensor([[[-0.2791, 0.3020, 0.4071, ..., 0.2707, 0.5302, -0.7799],

[ 0.5064, -0.5631, 0.6345, ..., -1.0366, 0.2625, -0.1994],

[ 0.2194, -1.4004, -0.6083, ..., -0.0954, 1.3527, 0.1086],

...,

[-1.3459, 0.2393, -0.1635, ..., 0.2543, 0.3820, -0.6676],

[-0.1877, 0.2440, -0.7461, ..., 0.7493, 1.2351, -0.6387],

[-0.6366, 0.0020, 0.1719, ..., 0.5995, 1.0313, -0.5601]]],

grad_fn=<NativeLayerNormBackward>), tensor([[ 0.9998, 1.0000,

...

-0.9925, 1.0000, 0.9798, -0.9822, -0.9991, -0.9775, -0.9966, 0.9923]],

grad_fn=<TanhBackward>))

print(outputs[0].shape)  # last_hidden_state: [batch_size, sequence_length, hidden_size]

torch.Size([1, 9, 768])

print(outputs[1].shape)  # pooler_output: [batch_size, hidden_size]

torch.Size([1, 768])


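outputs[0] (last_hidden_state) is the per-token representation: one 768-dimensional vector for each of the 9 tokens, i.e. the 7 characters plus [CLS] (ID 101) and [SEP] (ID 102). outputs[1] (pooler_output) is a single vector for the whole input rather than a per-token one: BERT computes it from the final hidden state of the [CLS] token, passed through a dense layer and a tanh activation (hence grad_fn=<TanhBackward>).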
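To make the difference between the two outputs concrete, here is a plain-Python sketch on toy numbers. `bert_style_pooler` mirrors the form of the computation behind `outputs[1]` (take the [CLS] vector, apply a dense layer, then tanh), which is why the printed values of `outputs[1]` all fall within [-1, 1]. `masked_mean_pool` shows mean pooling, a different and commonly used way to get a sentence vector from `outputs[0]`; it is not what `outputs[1]` computes. All weights and vectors below are made up for illustration.

```python
import math


def bert_style_pooler(cls_vector, weight, bias):
    """Compute tanh(W @ h_cls + b), the form of BERT's pooler; weight is a list of rows."""
    return [
        math.tanh(sum(w * h for w, h in zip(row, cls_vector)) + b)
        for row, b in zip(weight, bias)
    ]


def masked_mean_pool(token_vectors, attention_mask):
    """Average the token vectors whose attention_mask entry is 1 (padding is skipped)."""
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    return [sum(values) / len(kept) for values in zip(*kept)]


# Toy last_hidden_state for one sequence: 4 positions, hidden size 2 (BERT-base uses 768).
# Position 0 stands in for [CLS]; position 3 is padding.
hidden = [[0.5, -1.0], [1.0, 2.0], [3.0, 0.0], [9.0, 9.0]]
mask = [1, 1, 1, 0]

# Identity weights and zero bias keep the toy pooler easy to check by hand.
pooled = bert_style_pooler(hidden[0], weight=[[1.0, 0.0], [0.0, 1.0]], bias=[0.0, 0.0])
mean_vec = masked_mean_pool(hidden, mask)
print(pooled)    # tanh(0.5), tanh(-1.0)
print(mean_vec)  # mean of the first three rows; the padded row is ignored
```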

III. Tokenizer quick start

Using Hugging Face (1): AutoTokenizer (generic) and BertTokenizer (BERT-specific): https://blog.csdn.net/u013250861/article/details/124535020

from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("bert-base-chinese")

model = AutoModel.from_pretrained("bert-base-chinese")

inputs = tokenizer("我想快点发论文", return_tensors="pt")

outputs = model(**inputs)

print(inputs)

{'input_ids': tensor([[ 101, 2769, 2682, 2571, 4157, 1355, 6389, 3152, 102]]),

'token_type_ids': tensor([[0, 0, 0, 0, 0, 0, 0, 0, 0]]),

'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1]])}

> token_type_ids: This tensor maps every token to its corresponding segment (see below).

> attention_mask: This tensor is used to "mask" padded values in a batch of sequences with different lengths (see below).
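How does the model actually ignore padded positions? BERT implementations such as Hugging Face transformers turn `attention_mask` into an additive bias on the attention scores: positions with mask 0 get a large negative number added before the softmax, so their attention weights come out as effectively zero. The plain-Python sketch below shows this for a single query. The `-10000.0` constant is illustrative: older BERT code used that value, while newer transformers versions use the most negative value the dtype allows.

```python
import math


def masked_softmax(scores, attention_mask, mask_value=-10000.0):
    """Softmax over one query's attention scores, suppressing positions where mask == 0."""
    # Additive mask: 0 for real tokens, a large negative value for padding.
    biased = [s + (0.0 if keep else mask_value) for s, keep in zip(scores, attention_mask)]
    peak = max(biased)  # subtract the max for numerical stability
    exps = [math.exp(b - peak) for b in biased]
    total = sum(exps)
    return [e / total for e in exps]


# Raw scores of one query against 5 key positions; the last two keys are [PAD].
scores = [2.0, 1.0, 0.5, 3.0, 3.0]
mask = [1, 1, 1, 0, 0]
weights = masked_softmax(scores, mask)
print([round(w, 4) for w in weights])  # padded positions get weight 0.0
```

Even though the padded positions have the highest raw scores here, they receive no attention, and the remaining weights match a plain softmax over the three real positions.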

2.1 Common Tokenizer inputs and outputs, explained with examples

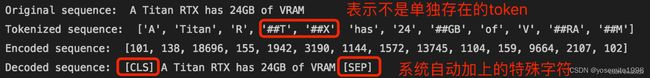

PyTorch Transformer Tokenizer, common inputs and outputs explained with examples: https://blog.csdn.net/yosemite1998/article/details/122306758

from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained("bert-base-cased")

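'input_ids': the index of each token in the tokenizer's vocabulary, including the special tokens the tokenizer adds.

'attention_mask': tells the model which positions in the returned ID sequence are real data to attend to (1) and which are padding it can ignore (0).

'token_type_ids': some NLP tasks feed two sentences concatenated together; this field marks which IDs belong to the first sentence (0) and which to the second (1).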
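For a sentence pair, BERT's input layout is `[CLS] A [SEP] B [SEP]`, and `token_type_ids` is 0 up to and including the first `[SEP]` and 1 afterwards. Below is a minimal plain-Python sketch that assembles this by hand. It assumes the bert-base-chinese special-token IDs seen in the printed output earlier (101 for `[CLS]`, 102 for `[SEP]`) and reuses that output's character IDs for 我想 and 发论文. With the real tokenizer you would simply pass both texts, e.g. `tokenizer(text_a, text_b)`.

```python
def build_pair_inputs(ids_a, ids_b, cls_id=101, sep_id=102):
    """Assemble [CLS] A [SEP] B [SEP] with matching token_type_ids and attention_mask."""
    input_ids = [cls_id] + ids_a + [sep_id] + ids_b + [sep_id]
    # Segment 0: [CLS], sentence A and the first [SEP]; segment 1: sentence B and the last [SEP].
    token_type_ids = [0] * (len(ids_a) + 2) + [1] * (len(ids_b) + 1)
    # No padding yet, so every position is a real token.
    attention_mask = [1] * len(input_ids)
    return {"input_ids": input_ids, "token_type_ids": token_type_ids, "attention_mask": attention_mask}


# Character IDs taken from the bert-base-chinese output above: 我想 and 发论文.
pair = build_pair_inputs([2769, 2682], [1355, 6389, 3152])
print(pair["input_ids"])       # [101, 2769, 2682, 102, 1355, 6389, 3152, 102]
print(pair["token_type_ids"])  # [0, 0, 0, 0, 1, 1, 1, 1]
```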

2.2 Tokenizer quick start

Tokenizer quick start: https://zhuanlan.zhihu.com/p/548347360

BERT's text-encoding tokenizer and the encoding of special tokens ([MASK]/[CLS]/[SEP]): https://blog.csdn.net/pearl8899/article/details/119328276

Using Hugging Face (1): AutoTokenizer (generic, with its parameters explained) and BertTokenizer (BERT-specific): https://blog.csdn.net/u013250861/article/details/124535020
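The [MASK] token covered in the second link is what BERT's masked-language-modeling pretraining relies on. In the recipe from the original BERT paper, about 15% of the non-special tokens are selected; of those, 80% become [MASK], 10% become a random token, and 10% are left unchanged, and the model must predict the original token at every selected position. The plain-Python sketch below implements that rule. The IDs in the usage lines are assumptions about the bert-base-chinese vocabulary ([PAD]=0, [CLS]=101, [SEP]=102, [MASK]=103, 21128 entries); a label of -100 marks positions the loss should ignore, following the PyTorch/Hugging Face convention.

```python
import random


def mlm_mask(input_ids, special_ids, mask_id, vocab_size, mask_prob=0.15, seed=None):
    """Apply BERT-style MLM corruption to one sequence of token IDs.

    Returns (corrupted_ids, labels). labels holds the original ID at every
    selected position and -100 everywhere else (ignored by the loss).
    """
    rng = random.Random(seed)
    corrupted = list(input_ids)
    labels = [-100] * len(input_ids)
    for i, token_id in enumerate(input_ids):
        # Never select special tokens; select each other token with probability mask_prob.
        if token_id in special_ids or rng.random() >= mask_prob:
            continue
        labels[i] = token_id
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = mask_id  # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.randrange(vocab_size)  # 10%: random vocabulary ID
        # Remaining 10%: keep the original token, but still predict it.
    return corrupted, labels


# Assumed bert-base-chinese IDs: [PAD]=0, [CLS]=101, [SEP]=102, [MASK]=103.
ids = [101, 2769, 2682, 2571, 4157, 1355, 6389, 3152, 102]
corrupted, labels = mlm_mask(ids, special_ids={0, 101, 102}, mask_id=103, vocab_size=21128, seed=0)
print(corrupted)
print(labels)
```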

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-chinese")

sens = ["弱小的我也有大梦想",

"有梦想谁都了不起",

"追逐梦想的心,比梦想本身,更可贵"]

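# max_length: maximum sequence length in tokens, counting [CLS] and [SEP]
# truncation=True: without it, the third sentence (16 characters and punctuation marks
# plus [CLS] and [SEP] = 18 tokens) would come back longer than max_length
res = tokenizer(sens, padding="max_length", truncation=True, max_length=15)

print(res)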
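What `padding="max_length"` combined with truncation does to a single sentence can be sketched in plain Python: wrap the IDs in [CLS]/[SEP], drop tokens from the end so the total fits `max_length`, then append [PAD] IDs and mark them with 0 in `attention_mask`. This sketch assumes bert-base-chinese's special IDs ([CLS]=101, [SEP]=102, [PAD]=0) and works on already-tokenized IDs; the real tokenizer also handles batches, sentence pairs and other truncation strategies.

```python
def pad_and_truncate(token_ids, max_length, cls_id=101, sep_id=102, pad_id=0):
    """Encode one tokenized sentence to exactly max_length positions.

    Truncates from the right (keeping room for [CLS] and [SEP]), then pads.
    """
    body = token_ids[: max_length - 2]  # reserve two slots for the special tokens
    input_ids = [cls_id] + body + [sep_id]
    n_pad = max_length - len(input_ids)
    return {
        "input_ids": input_ids + [pad_id] * n_pad,
        "token_type_ids": [0] * max_length,  # single sentence: all segment 0
        "attention_mask": [1] * len(input_ids) + [0] * n_pad,  # 0 marks [PAD]
    }


# Character IDs for 我想快点发论文, copied from the printed input_ids earlier in this post.
ids = [2769, 2682, 2571, 4157, 1355, 6389, 3152]
encoded = pad_and_truncate(ids, max_length=15)
print(encoded["input_ids"])       # [101, 2769, 2682, 2571, 4157, 1355, 6389, 3152, 102, 0, 0, 0, 0, 0, 0]
print(encoded["attention_mask"])  # [1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

# 16 hypothetical IDs (as long as the third example sentence): truncated to 13 + [CLS] + [SEP].
long_encoded = pad_and_truncate(list(range(1000, 1016)), max_length=15)
print(len(long_encoded["input_ids"]), long_encoded["input_ids"][-1])  # 15 102
```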