PyTorch custom networks (network structure determined by parameters): building layers

Method 1: build a layers list with make_layer

First build a layers list, then pass it to nn.Sequential(*layers) to create the network.

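make_layer defines the network layer by layer. It is somewhat similar to nn.Sequential(), except that the modules and activation functions each layer needs are first collected in one list. The main advantage is that a single for loop can define the whole network, so you do not have to write the same code for every layer; changing the network only means changing its parameters.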
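As a minimal sketch of the same idea outside a CNN, the hypothetical helper make_mlp below builds a fully connected network from a list of layer widths: the loop appends one nn.Linear plus an activation per pair of adjacent widths, and nn.Sequential(*layers) unpacks the list into a module. The function name and the choice of ReLU are assumptions for illustration, and widths must contain at least two entries.

```python
import torch
import torch.nn as nn


def make_mlp(widths, activation=nn.ReLU):
    """Hypothetical helper: build an MLP whose shape is fully determined by `widths`."""
    layers = []
    # Pair each width with the next one: [4, 16, 8, 2] -> (4, 16), (16, 8), (8, 2)
    for in_features, out_features in zip(widths[:-1], widths[1:]):
        layers.append(nn.Linear(in_features, out_features))
        layers.append(activation())
    layers.pop()  # drop the activation after the final Linear layer
    return nn.Sequential(*layers)


net = make_mlp([4, 16, 8, 2])
out = net(torch.randn(5, 4))
print(out.shape)  # torch.Size([5, 2])
```

Changing the network depth or width only means passing a different list, which is exactly the benefit described above.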

Example 1 (VGG-style convolutional layers)

import torch.nn as nn

class VGG(nn.Module):  # minimal wrapper class so the snippet is self-contained
    def __init__(self, batch_norm=False):
        super(VGG, self).__init__()
        # Numbers are conv output channels; 'M' means add a max-pooling layer
        self.cfg = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M',
                    512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M']
        self.features = self.make_layers(self.cfg, batch_norm)

    def make_layers(self, cfg, batch_norm=False):
        layers = []
        in_channels = 3  # RGB input
        for v in cfg:
            if v == 'M':
                layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
            else:
                conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)
                if batch_norm:
                    layers += [conv2d, nn.BatchNorm2d(v), nn.ReLU(inplace=True)]
                else:
                    layers += [conv2d, nn.ReLU(inplace=True)]
                in_channels = v  # the next conv takes this layer's output
        return nn.Sequential(*layers)
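To see what the configuration list produces, here is a standalone function version of the same builder (the name make_vgg_features is ours). This cfg is the VGG-19 layout: 16 convolutions and 5 max-pools, and since every pool halves the spatial size, a 32x32 input comes out as 1x1 with 512 channels.

```python
import torch
import torch.nn as nn

# VGG-19 layer layout: ints are conv output channels, 'M' is a 2x2 max-pool
CFG = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M',
       512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M']


def make_vgg_features(cfg, batch_norm=False, in_channels=3):
    layers = []
    for v in cfg:
        if v == 'M':
            layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
        else:
            layers.append(nn.Conv2d(in_channels, v, kernel_size=3, padding=1))
            if batch_norm:
                layers.append(nn.BatchNorm2d(v))
            layers.append(nn.ReLU(inplace=True))
            in_channels = v  # the next conv consumes this layer's output
    return nn.Sequential(*layers)


features = make_vgg_features(CFG, batch_norm=True)
out = features(torch.randn(1, 3, 32, 32))
print(out.shape)  # torch.Size([1, 512, 1, 1])
```

Swapping CFG for a shorter list gives a shallower variant without touching the builder.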

Example 2 (ResNet-style stages built with _make_layer)

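from functools import partial
import torch.nn as nn

# Method of a 3D ResNet class; block, conv1x1x1 and self._downsample_basic_block
# are defined elsewhere in that class/module (not shown here).
def _make_layer(self, block, planes, blocks, shortcut_type, stride=1):
    downsample = None
    # The shortcut needs a transform when the block changes the spatial size
    # (stride != 1) or the number of channels
    if stride != 1 or self.in_planes != planes * block.expansion:
        if shortcut_type == 'A':
            # Type 'A': parameter-free shortcut built by _downsample_basic_block
            downsample = partial(self._downsample_basic_block,
                                 planes=planes * block.expansion,
                                 stride=stride)
        else:
            # Type 'B': 1x1x1 convolution followed by BatchNorm
            downsample = nn.Sequential(
                conv1x1x1(self.in_planes, planes * block.expansion, stride),
                nn.BatchNorm3d(planes * block.expansion))

    layers = []
    # Only the first block of a stage changes stride/channels
    layers.append(
        block(in_planes=self.in_planes,
              planes=planes,
              stride=stride,
              downsample=downsample))
    self.in_planes = planes * block.expansion
    # The remaining blocks keep resolution and channel count unchanged
    for i in range(1, blocks):
        layers.append(block(self.in_planes, planes))

    return nn.Sequential(*layers)

# In __init__, one call per stage:
self.layer1 = self._make_layer(block, block_inplanes[0], layers[0], shortcut_type)
self.layer2 = self._make_layer(block, block_inplanes[1], layers[1], shortcut_type, stride=2)
self.layer3 = self._make_layer(block, block_inplanes[2], layers[2], shortcut_type, stride=2)
self.layer4 = self._make_layer(block, block_inplanes[3], layers[3], shortcut_type, stride=2)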
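Example 2 depends on pieces it does not show (block, conv1x1x1, _downsample_basic_block). The sketch below fills them in with small hypothetical versions so the _make_layer pattern can actually run: a minimal 3D BasicBlock with expansion = 1, two helper convolutions, and only the type 'B' (1x1x1 conv + BatchNorm) shortcut. The names TinyResNet3d and BasicBlock and the tiny channel counts are assumptions, not the original model.

```python
import torch
import torch.nn as nn


def conv3x3x3(in_planes, out_planes, stride=1):
    return nn.Conv3d(in_planes, out_planes, kernel_size=3, stride=stride, padding=1, bias=False)


def conv1x1x1(in_planes, out_planes, stride=1):
    return nn.Conv3d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)


class BasicBlock(nn.Module):
    expansion = 1  # output channels = planes * expansion

    def __init__(self, in_planes, planes, stride=1, downsample=None):
        super().__init__()
        self.conv1 = conv3x3x3(in_planes, planes, stride)
        self.bn1 = nn.BatchNorm3d(planes)
        self.conv2 = conv3x3x3(planes, planes)
        self.bn2 = nn.BatchNorm3d(planes)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        identity = x if self.downsample is None else self.downsample(x)
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        return self.relu(out + identity)


class TinyResNet3d(nn.Module):
    def __init__(self, block=BasicBlock, layers=(2, 2), block_inplanes=(8, 16)):
        super().__init__()
        self.in_planes = block_inplanes[0]
        self.stem = conv3x3x3(1, self.in_planes)  # single-channel volumetric input
        self.layer1 = self._make_layer(block, block_inplanes[0], layers[0])
        self.layer2 = self._make_layer(block, block_inplanes[1], layers[1], stride=2)

    def _make_layer(self, block, planes, blocks, stride=1):
        downsample = None
        if stride != 1 or self.in_planes != planes * block.expansion:
            # Type 'B' shortcut only in this sketch
            downsample = nn.Sequential(
                conv1x1x1(self.in_planes, planes * block.expansion, stride),
                nn.BatchNorm3d(planes * block.expansion))
        layers = [block(self.in_planes, planes, stride=stride, downsample=downsample)]
        self.in_planes = planes * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.in_planes, planes))
        return nn.Sequential(*layers)

    def forward(self, x):
        return self.layer2(self.layer1(self.stem(x)))


model = TinyResNet3d()
y = model(torch.randn(2, 1, 4, 8, 8))  # (batch, channels, depth, height, width)
print(y.shape)  # torch.Size([2, 16, 2, 4, 4])
```

Because self.in_planes is updated inside _make_layer, each call automatically picks up the channel count left by the previous stage, which is why the stages can be created with one line each.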

Method 2: add_module

Use PyTorch's add_module function to register custom network modules manually.
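add_module(name, module) registers a submodule under a string name, with the same registering effect as assigning a module to an attribute (self.fc = nn.Linear(...)). Because the name is an ordinary string, it can be generated in a loop from parameters and retrieved later with getattr. A minimal sketch; the class name Stacked and the fc_ prefix are hypothetical:

```python
import torch
import torch.nn as nn


class Stacked(nn.Module):
    def __init__(self, dim, depth):
        super().__init__()
        self.depth = depth
        for i in range(depth):
            # Registers the layer as self.fc_0, self.fc_1, ...
            self.add_module('fc_' + str(i), nn.Linear(dim, dim))

    def forward(self, x):
        for i in range(self.depth):
            x = torch.relu(getattr(self, 'fc_' + str(i))(x))
        return x


net = Stacked(dim=8, depth=3)
print([name for name, _ in net.named_children()])  # ['fc_0', 'fc_1', 'fc_2']
```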

Content adapted from:

Wrong (submodules kept in plain Python lists):

import torch
import torch.nn as nn

class testNet(nn.Module):
    def __init__(self, input_dim, hidden_dim, step=1):
        super(testNet, self).__init__()
        self.linear = nn.Linear(100, 100)  # dummy module
        # Plain Python lists: nn.Module does not register the modules inside them
        self.linear_combines1 = []
        self.linear_combines2 = []
        for i in range(step):
            self.linear_combines1.append(nn.Linear(input_dim, hidden_dim))
            self.linear_combines2.append(nn.Linear(hidden_dim, hidden_dim))

net = testNet(128, 256, 3)
print(net)  # Won't print what is in the list
# testNet(
#   (linear): Linear(in_features=100, out_features=100, bias=True)
# )
net.cuda()  # Won't send the modules in the list to the GPU
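The underlying problem is that nn.Module only tracks submodules assigned directly as attributes, added with add_module, or held in container modules; a plain Python list is invisible to it. So net.parameters() (and therefore any optimizer built from it), state_dict() and .cuda() all skip those layers. One quick check, sketched with a cut-down copy of the class above (the name Broken is ours, and only one list is kept), is to count what PyTorch reports:

```python
import torch.nn as nn


class Broken(nn.Module):
    def __init__(self, input_dim, hidden_dim, step=1):
        super().__init__()
        self.linear = nn.Linear(100, 100)  # registered: direct attribute
        # Not registered: nn.Module does not look inside plain Python lists
        self.linear_combines1 = [nn.Linear(input_dim, hidden_dim) for _ in range(step)]


net = Broken(128, 256, 3)
print(sum(p.numel() for p in net.parameters()))  # 10100, i.e. only self.linear
print(list(net.state_dict().keys()))  # ['linear.weight', 'linear.bias']
```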

Fixed (each submodule registered with add_module):

import torch

import torch.nn as nn

import torch.nn.functional as F

# 将所有模块保存在一个容器中

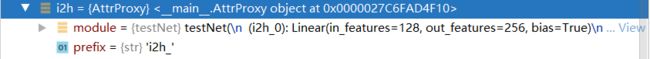

class AttrProxy(object):

"""Translates index lookups into attribute lookups."""

def __init__(self, module, prefix):

self.module = module

self.prefix = prefix

def __getitem__(self, i):

return getattr(self.module, self.prefix + str(i))

class testNet(nn.Module):

def __init__(self, input_dim, hidden_dim, steps=1):

super(testNet, self).__init__()

self.steps = steps

for i in range(steps):

self.add_module('i2h_' + str(i), nn.Linear(input_dim, hidden_dim))

self.add_module('h2h_' + str(i), nn.Linear(hidden_dim, hidden_dim))

self.i2h = AttrProxy(self, 'i2h_')

self.h2h = AttrProxy(self, 'h2h_')

def forward(self, input, hidden):

# here, use self.i2h[t] and self.h2h[t] to index

# input2hidden and hidden2hidden modules for each step,

# or loop over them, like in the example below

# (assuming first dim of input is sequence length)

for inp, i2h, h2h in zip(input, self.i2h, self.h2h):

hidden = F.tanh(i2h(input) + h2h(hidden))

return hidden

net = testNet(128, 256, 3)

print(net) #Won't print what is in the list

# testNet(

# (i2h_0): Linear(in_features=128, out_features=256, bias=True)

# (h2h_0): Linear(in_features=256, out_features=256, bias=True)

# (i2h_1): Linear(in_features=128, out_features=256, bias=True)

# (h2h_1): Linear(in_features=256, out_features=256, bias=True)

# (i2h_2): Linear(in_features=128, out_features=256, bias=True)

# (h2h_2): Linear(in_features=256, out_features=256, bias=True)

# )

net.cuda() #Won't send the module in the list to gpu
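PyTorch also provides a built-in container for this case: nn.ModuleList holds submodules in a list-like object that registers them properly, supports indexing and iteration, and is covered by print(net), parameters() and .cuda(). A minimal sketch of the same network rewritten with it (the class name TestNet is ours), assuming input is shaped (seq_len, batch, input_dim) with seq_len no larger than steps:

```python
import torch
import torch.nn as nn


class TestNet(nn.Module):
    def __init__(self, input_dim, hidden_dim, steps=1):
        super().__init__()
        # ModuleList registers every Linear, unlike a plain Python list
        self.i2h = nn.ModuleList([nn.Linear(input_dim, hidden_dim) for _ in range(steps)])
        self.h2h = nn.ModuleList([nn.Linear(hidden_dim, hidden_dim) for _ in range(steps)])

    def forward(self, input, hidden):
        # One i2h/h2h pair per time step; zip stops at the shorter sequence
        for inp, i2h, h2h in zip(input, self.i2h, self.h2h):
            hidden = torch.tanh(i2h(inp) + h2h(hidden))
        return hidden


net = TestNet(128, 256, 3)
print(net)  # shows i2h and h2h as ModuleLists containing their Linear layers
hidden = net(torch.randn(3, 4, 128), torch.zeros(4, 256))
print(hidden.shape)  # torch.Size([4, 256])
```

Compared with AttrProxy, ModuleList needs no helper class, and iterating past the last step simply ends the loop instead of raising an AttributeError.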