pytorch

https://pytorch-cn.readthedocs.io/zh/latest/

根据model计算参数数量

def count_param(model):

param_count = 0

layer = 0

for param in model.parameters():

layer += 1

param_layer = param.view(-1).size()[0]

param_count += param_layer

print(f'layer - {layer} shape:{param.size()} layer amount : {param_layer} sum : {param_count}')

print(f'The amount of parameters is {param_count} ')

输出相关

进度条tqdm

直接套在range外面就行

for epoch in tqdm(range(1,self.num_epochs+1-self.last_epoch))

日志输出

def setlogger(path):

logger = logging.getLogger()

logger.setLevel(logging.INFO)

logFormatter = logging.Formatter("%(asctime)s %(message)s", "%m-%d %H:%M:%S")

fileHandler = logging.FileHandler(path)

fileHandler.setFormatter(logFormatter)

logger.addHandler(fileHandler)

consoleHandler = logging.StreamHandler()

consoleHandler.setFormatter(logFormatter)

logger.addHandler(consoleHandler)

参数微调

with torch.no_grad():

所有计算得出的tensor的requires_grad都自动设置为False。

就是在计算时不会更新,相当于冻结

音频输入输出:

https://librosa.org/doc/latest/ioformats.html?highlight=output

keras文档

模型保存和测试

保存

运行

测试

model.save("./model.h5")

new_model = load_model('./model.h5')

y = new_model.predict(feature)

y输出结构看fit时结构,和pytorch的区别是没有forword。

model.fit(feature, label, batch_size=128, epochs=200, validation_split=0.1)

gpu

gpu相关设置

gpu安装

dataloader

0.dataloader的迭代次数

for i, data in enumerate(self.dataloader['train_loader']):

迭代次数和就是数据集数据量/batchsize取整

epoch 与 batch 关系

一个epoch完整过一次数据集

batch:一次forward,backward用多少数据

iteration :一次传播

iteration和batch是对应的

for epoch_i in range(start_epoch+1, end_epoch+1):

for i,data in enumerate(rand_loader):

1.dataloader的基础使用

必要

test_data = torchvision.datasets.CIFAR10("./CIFAR10", train=False, transform=torchvision.transforms.ToTensor())

test_loader = DataLoader(dataset=test_data, batch_size=4, shuffle=True, num_workers=0, drop_last=False)

Training_data_Name = 'Training_Data.mat'

Training_data = sio.loadmat('./%s/%s' % (args.data_dir, Training_data_Name))

Training_labels = Training_data['labels']

rand_loader = DataLoader(dataset=RandomDataset(Training_labels, nrtrain), batch_size=batch_size, num_workers=0, shuffle=True)

2.dataloader中的data

继承dataset类

实现三个方法,或者迭代器方法

以下如何实现的,迭代中的data就是咋样的

def __getitem__(self, index):

return torch.Tensor(self.data[index, :]).float()

def __iter__(self):

return self.generator

item和iter中返回的是单个数据

但是迭代中data返回batch*单个,这一处理由dataloader实现

1.简单实现三个函数

init的参数可操作

其他两个是固定的不能变

getitem:接收一个索引, 返回一个样本

for i,data in enumerate(rand_loader):

batch_x = data

class RandomDataset(Dataset):

def __init__(self, data, length):

self.data = data

self.len = length

def __getitem__(self, index):

return torch.Tensor(self.data[index, :]).float()

def __len__(self):

return self.len

2.用生成器自定义迭代方法

def get_random_batch_generator(self, Set):#'train'

#其他处理过程

batch = {'data_input': batch_inputs, 'condition_input': condition_inputs}, {

'data_output_1': batch_outputs_1, 'data_output_2': batch_outputs_2}

yield batch

class denoising_dataset(torch.utils.data.IterableDataset):

def __init__(self, generator):

self.generator = generator

def __iter__(self):

return self.generator

train_set_generator = dataset.get_random_batch_generator('train')

train_set_iterator = datasets.denoising_dataset(train_set_generator)

train_loader = DataLoader(train_set_iterator, batch_size=None)

dataloader = {'train_loader':train_loader, 'valid_loader':valid_loader}

for i, data in enumerate(self.dataloader['train_loader']):

x, y = data

yield用法

- yield就是 return 返回一个值,并且记住这个返回的位置,下次迭代就从这个位置后开始

- 如果用getitem和列表,就是加载整个列表,再取值,用生成器函数和yield就是用一个加载一个

- 使用场景:需要逐个去获取容器内的某些数据,容器内的元素数量非常多,或者容器内的元素体积非常大

- 如果一个函数定义中包含 yield 表达式,那么该函数是一个生成器函数

- 底层实现和next函数有关

data = next(self.dataset_iter)

使用yield相当于返回了一个可供迭代的列表

在函数开始处,加入 result = list();

将每个 yield 表达式 yield expr 替换为 result.append(expr);

在函数末尾处,加入 return result。

def get_random_batch_generator(self, Set):#'train'

if Set not in ['train', 'test']:

raise ValueError("Argument SET must be either 'train' or 'test'")

while True:

sample_indices = np.random.randint(0, len(self.sequences[Set]['clean']), self.batch_size) #btach.size:10

condition_inputs = []

batch_inputs = []

batch_outputs_1 = []

batch_outputs_2 = []

for i, sample_i in enumerate(sample_indices):#[ 9941 5657 5610 6613 6152 2843 10611 2560 5729 10366]

while True:

speech = self.retrieve_sequence(Set, 'clean', sample_i) #52480

noisy = self.retrieve_sequence(Set, 'noisy', sample_i) #52480

noise = noisy - speech

if self.extract_voice:

speech = speech[self.voice_indices[Set][sample_i][0]:self.voice_indices[Set][sample_i][1]] #shape:39200

speech_regained = speech * self.regain_factors[Set][sample_i] #39200 20800(5657)

noise_regained = noise * self.regain_factors[Set][sample_i]

if len(speech_regained) < self.model.input_length:

sample_i = np.random.randint(0, len(self.sequences[Set]['clean']))

else:

break

offset = np.squeeze(np.random.randint(0, len(speech_regained) - self.model.input_length, 1)) #29494 7516(5610)

speech_fragment = speech_regained[offset:offset + self.model.input_length] #shape:7739

noise_fragment = noise_regained[offset:offset + self.model.input_length]

Input = noise_fragment + speech_fragment #7739

output_speech = speech_fragment #7739

output_noise = noise_fragment

if self.noise_only_percent > 0:

if np.random.uniform(0, 1) <= self.noise_only_percent:

Input = output_noise #Noise only

output_speech = np.array([0] * self.model.input_length) #Silence

batch_inputs.append(Input) #list

batch_outputs_1.append(output_speech)

batch_outputs_2.append(output_noise)

if np.random.uniform(0, 1) <= 1.0 / self.get_num_condition_classes():

condition_input = 0

else:

condition_input = self.speaker_mapping[self.speakers[Set][sample_i]]

if condition_input > 28: #If speaker is in test set, use wildcard condition class 0

condition_input = 0

condition_inputs.append(condition_input)

batch_inputs = np.array(batch_inputs, dtype='float32')#array

batch_outputs_1 = np.array(batch_outputs_1, dtype='float32')

batch_outputs_2 = np.array(batch_outputs_2, dtype='float32')

batch_outputs_1 = batch_outputs_1[:, self.model.get_padded_target_field_indices()]

batch_outputs_2 = batch_outputs_2[:, self.model.get_padded_target_field_indices()]

condition_inputs = self.condition_encode_function(np.array(condition_inputs, dtype='uint8'), self.model.num_condition_classes)

batch = {'data_input': batch_inputs, 'condition_input': condition_inputs}, {

'data_output_1': batch_outputs_1, 'data_output_2': batch_outputs_2}

yield batch

复数处理

view_as_real

在最后增加一个维度,大小为2

1->1,2

1+2j->[1,2]

取实数部分

x = torch.view_as_real(x)[:,:,:,0].squeeze(-1)

x_input = x.view(-1, 1, 39, 39)

运算,转化为numpy

https://blog.csdn.net/Stephanie2014/article/details/105984274

维度处理

size不变

squeeze()只能去值为1的维度,否则不变

返回张量与输入张量共享内存,所以改变其中一个的内容会改变另一个

a.unsqueeze(x)在x维(从0开始计数,从前往后数)上增加1维

a.unsqueeze(0)

6 ->1,6

a.squeeze(x) 去掉x维,

-x dim为负,则将会被转化dim+input.dim()+1

-1就是size从前往后最后一维

6->6,1

a=squeeze(a)去掉所有值为1的维度

size变化,值复制

repeat

在对应维度上repeat几倍

a.repeat(2,1).size() # 原始值:torch.Size([33, 55])

torch.Size([66, 55])

condition_out = layers.expand_dims(condition_out, -1)#torch.Size([50, 128, 1])

condition_out = condition_out.repeat(1,1,self.input_length)#torch.Size([50, 128, 7739])最后维度每个值重复7739

创建同维度的0矩阵

x=torch.zeros([2,3])

y=torch.zeros(x.shape)

创建指定维度的矩阵

要用列表,不能(2,3)

x=torch.zeros([2,3])

保持数据量不变的情况下变化形状

#xw:torch.Size([128, 1089])

x_input = xw.view(-1, 1, 33, 33) #torch.Size([128, 1, 33, 33])

- 参数中的-1就代表这个位置由其他位置的数字来推断

- 正整数参数就是指定维度

某一维度的长度

x.shape[n]

传播过程

def expand_dims(x, axes):

if axes == 1:

x = x.unsqueeze_(1)

if axes == -1:

x = x.unsqueeze_(-1)

return x

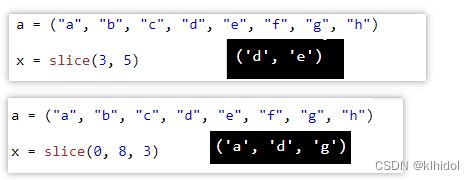

切片

对多维的单维切片

layers.slicing(data_expanded,n, m+ 1, 1),2)

def slicing(x, slice_idx, axes):

if axes == 1:

return x[:,slice_idx,:]

if axes == 2:

return x[:,:,slice_idx]

data_out_2 = layers.slicing(data_out, slice(self.config['model']['filters']['depths']['res'],

2*self.config['model']['filters']['depths']['res'],1), 1)

拼接与求和?

data_out = torch.stack( skip_connections, dim = 0).sum(dim = 0)

stack:https://cxymm.net/article/m0_61899108/122666906

detach()?

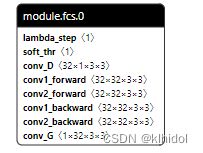

model 323

batch_metric.append(self.metric_fn(y['data_output_1'].detach(), y_hat[0].detach()))

批量数据处理

items() 返回字典所有键值对

变量:操作,实际值

map(lambda x: x ** 2, [1, 2, 3, 4, 5]) # 使用 lambda 匿名函数

[1, 4, 9, 16, 25]

x = dict(map(lambda i: (i[0], i[1].to(self.device, dtype=torch.float32)), x.items()))

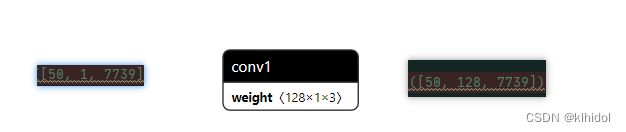

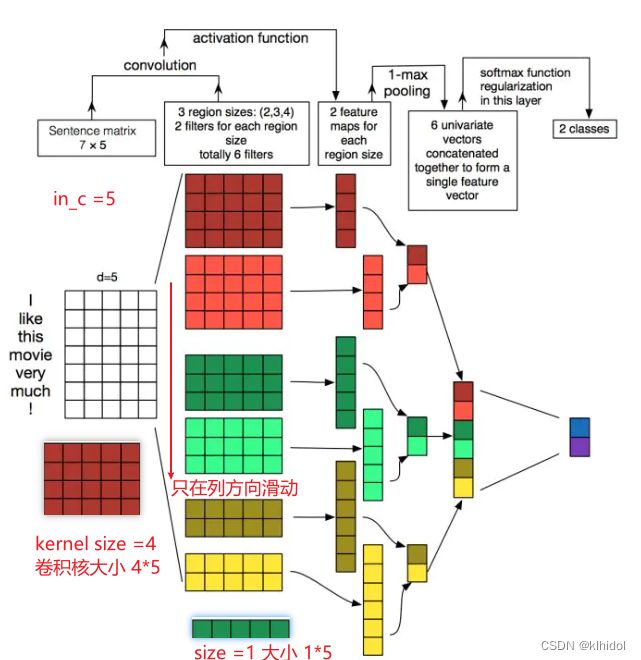

卷积层定义

nn.conv1d

对于输入的要求[batch_size,输入通道数,信号大小]

nn.Conv1d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True)

通道可以用RGB帮助理解,就是某个特定方面的信息

- inchannel:输入信号的维度,不包括batchsize

输入通道数的物理含义 对图片取决于图片类型,RGB为3,对声音就是1,对文本自定义

- out_channels:输出通道数,会影响通过该层后的输出

物理含义是过滤器(卷积核)数量(深度)

- kernel_size(int or tuple) - 卷积核的尺寸,卷积核的大小为(in_channels,size),

横向维度是由in_channels来决定的,所以实际上卷积大小为kernel_size*in_channels

会对output产生影响的只有out_channels

- stride: 卷积步长

- bias: 如果bias=True,添加偏置

nn.conv1d只在列方向滑动,所以卷积核大小中的高维必然与inchannel保持一致

通过之后的效果

n.Conv1d(1, 128, 3,stride=1, bias = False, padding=1)

Conv1d顺序不同于参数结构顺序

in要对应输入

Conv1d :in out kernel

卷积核:out in kernel

- input 结构

batch_size*input_channel*信号size - 该层参数结构:

output_channel * (input_channel*kernel_size) - 输出结构:

batch_size*output_channel*信号size处理后 - 在stride和pad都为1的情况下,只有output_channel会变化

nn.conv2d

在横向和竖向都移动卷积核

对输入维度的改变与conv1d没有区别

主要是 in - out

如果有pad 和 stride 则后两维也会改变

![]()

layer = nn.Conv2d(1, 3, kernel_size=3, stride=1, padding=0)

- in

- out

- kernel_size:

- 一个数 则大小为n*n

- 一个元组(m*n)

主体训练结构

for epoch_i in range(start_epoch+1, end_epoch+1):

for i,data in enumerate(rand_loader):

batch_x = data

batch_x = batch_x.to(device)

y_hat = self.model(x)

optimizer.zero_grad()

loss_all.backward(retain_graph=True)

optimizer.step()

loss

是一个tensor即可

loss_discrepancy = torch.mean(torch.pow(x_output - batch_x, 2))

loss_constraint = torch.mean(torch.pow(loss_layers_sym[0], 2))

for k in range(layer_num-1):

loss_constraint += torch.mean(torch.pow(loss_layers_sym[k+1], 2))

gamma2 = torch.Tensor([0.01]).to(device)

# loss_all = loss_discrepancy

loss_all = loss_discrepancy + torch.mul(gamma2, loss_constraint)

def get_out_1_loss(self):

if self.config['training']['loss']['out_1']['weight'] == 0:

return lambda y_true, y_pred: y_true * 0

return lambda y_true, y_pred: self.config['training']['loss']['out_1']['weight'] * util.l1_l2_loss(

y_true, y_pred, self.config['training']['loss']['out_1']['l1'],

self.config['training']['loss']['out_1']['l2'])

def get_loss_fn(self, y_hat, y):

target_speech = y['data_output_1']

target_noise = y['data_output_2']

output_speech = y_hat[0]

output_noise = y_hat[1]

loss1 = self.out_1_loss(target_speech, output_speech)

loss2 = self.out_2_loss(target_noise, output_noise)

loss = loss1 + loss2

return loss

optimizer

optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

optimizer = optim.Adam(self.model.parameters(), lr=self.config['optimizer']['lr'], weight_decay=self.config['optimizer']['decay'])

retain_graph

retain_graph=True,目的为是为保留该过程中计算的梯度,后续G网络更新时使用

model

model = ISTANet(layer_num)

model = nn.DataParallel(model)

model = model.to(device)

self.device = self.cuda_device()

self.model = model.to(self.device)

model = models.DenoisingWavenet(config)

class DenoisingWavenet(nn.Module):

def __init__(self, config, input_length=None, target_field_length=None):

super().__init__()

def forward(self, x):

卷积操作与卷积层

使用时卷积层只需传入输入

data_out = self.conv1(data_x)

self.conv1 = nn.Conv1d(in, out,kernel_size, stride=1, bias = False, dilation = self.dilation, padding=int(self.dilation))

卷积操作需要传入输入和卷积核

卷积核是 out in size 卷积操作是in out size

torch.nn.functional.conv2d(input, weight, bias=None, stride=1, padding=0, dilation=1, groups=1)

- input

- weight : out in kernel

self.conv1_forward = nn.Parameter(init.xavier_normal_(torch.Tensor(32, 1, 3, 3)))

x = F.conv2d(x_input, self.conv1_forward, padding=1)

数据集划分

https://zhuanlan.zhihu.com/p/385229355

格式转化

torch.tensor(,dtype=)

维度转置

y1 = x.transpose()