EfficientNetV1 V2网络理解+pytorch源码

目录

-

- 一、EfficientNet V1

- 二、EfficientNet V2

-

- Fused-MBConv模块

- 三、源码

一、EfficientNet V1

1)Google2019发表的文章

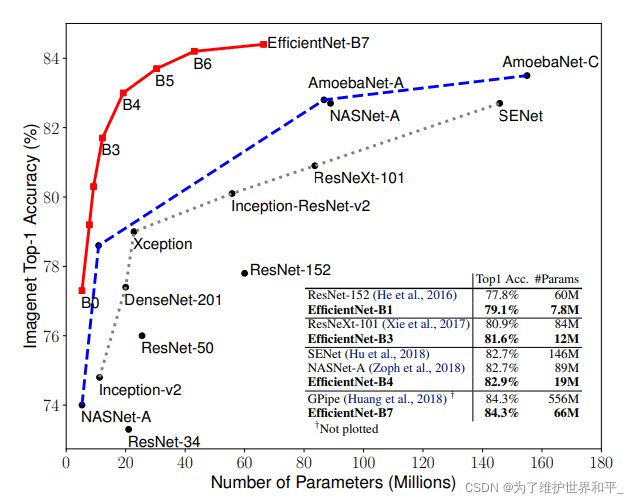

2)论文中提出,EfficientNet-B7在Imagenet top-1上达到了当年最高准确率84.3%

3)与之前准确率最高的GPip相比,参数量仅仅为其1/8.4,推理速度提升了6.1倍

模型的大小与准确率对比如下(Top1)

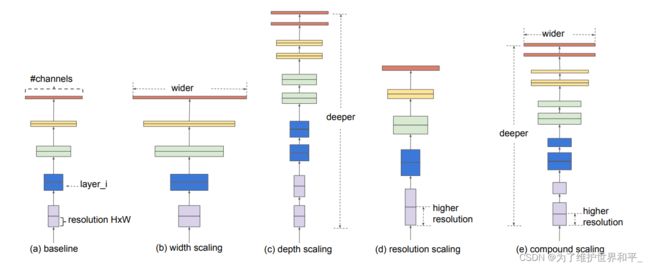

同时探索输入分辨率,网络的深度,宽度的影响(图E)

宽度:特征矩阵的channel

增加网络的深度depth能够得到更加丰富、复杂的特征。但面临梯度消失,训练困难的问题。

增加网络的width能够获得更高细粒度的特征并且也更容易训练,但对于witdh很大而深度较浅的网络很难学习更深层次的特征

增加输入图片的分辨率能够潜在获得更高细粒度的特征模板,但对于非常高的输入分辨率,准确率的增益也会减小,并且分辨率图像会增加计算量

单独的宽度、深度、分辨率,在准确率达到80%基本饱和了,但同时改变则可以超过82%。

网络结构

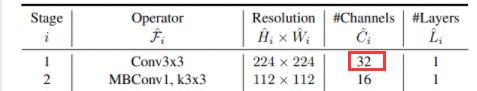

EfficientNet-B0

Channel 输出特征矩阵个数

layers:重复MBConv多少次

- 第一个升维的1x1卷积层,它的卷积核个数是输入特征矩阵channel的n倍(表格中的1 6)

- 当n=1时,不要第一个升维的1x1卷积层,即Stage2中的MBConv1结构都没有第一个升维1x1卷积层

- shortcut连接,仅当输入MBConv结构的特征矩阵与输入的特征矩阵shape相同时才存在。

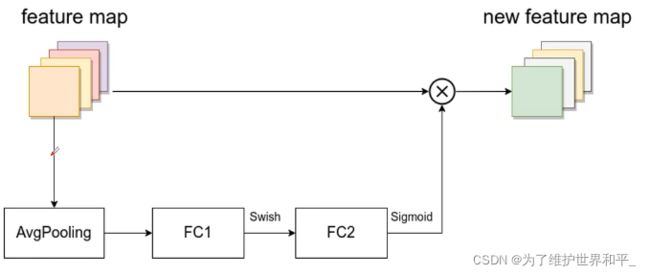

SE模块

一个全局平均池化,两个全连接层

第一个全连接层

- 节点个数是输入该MBConv特征矩阵的1/4

- 激活函数:Swish。

第二个全连接层的

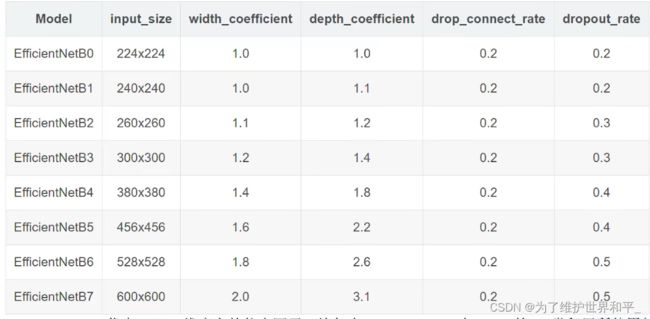

width_coefficient代表channel维度的倍率因子,如下

32*1.8=57.6 取到离它最近的8的证书倍即56

depth_coefficient代表depth维度上的倍率因子,从Stage2-Stage8

![]()

4*2.6=10.4 向上取证即11

性能对比

1)准确率高

2)参数个数少

3)占用GPU的内存,推理速度与FLOPS不是直接相关的

二、EfficientNet V2

1)2021.4 CVPR 上发表

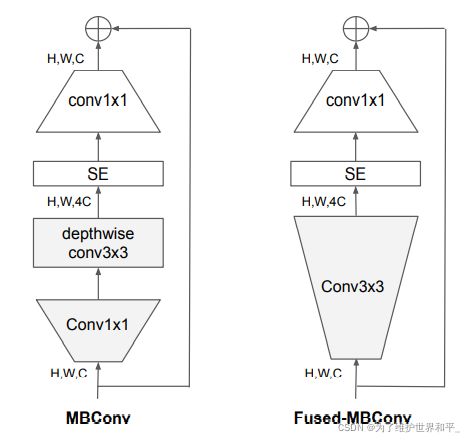

2) 引入Fused-MBConv模块

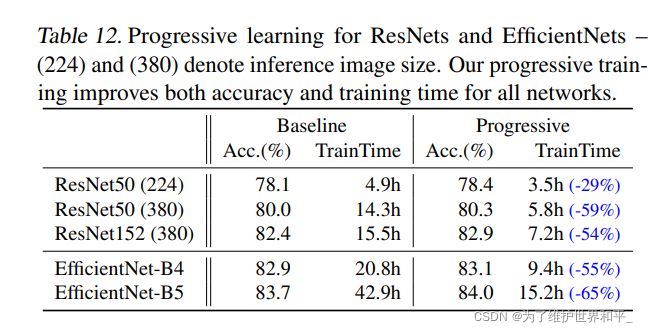

3)引入渐进式学习策略(训练更快)

4)Top-1达到87.3%

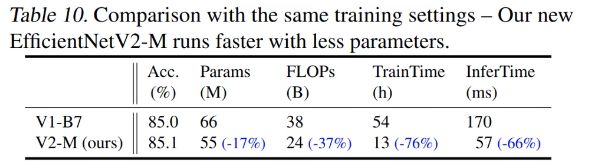

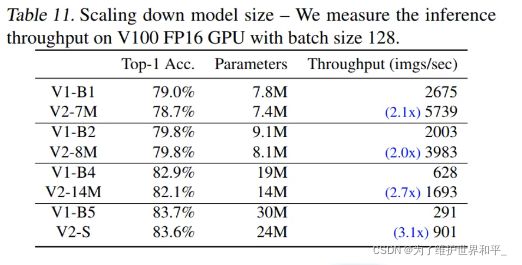

训练速度提升11倍,参数量减少1/6.8

由上图得知,V2准确率高,速度快

EfficientNetV1中,关注的是准确率,参数数量以及FLOPs,在EfficientV2中作者关注模型的训练速度。

Efficientv1中存在的问题以及V2的解决方法

-

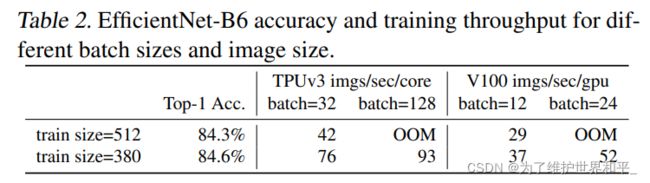

训练图像的尺寸很大时,训练速度慢

1)小尺寸的准确率反而高一些

2)batch=24的时候,出现内存溢出,而batch在训练时大一些好,所以降低训练图片尺寸 -

在网络浅层中使用Depthwise convolution 速度很慢

无法重复使用加速器,将MBConv 替换为FusedMBConv

Fused stage1-3浅层 最优

-

同等放大每个stage是次优的

深度和宽度是同等放大的,但每个stage对网络的训练速度以及参数数量的贡献并不相同,直接使用同等缩放策略不合理。作者使用了非均匀的缩放策略来缩放模型。

EfficientV2网络框架

Layers:重复的次数

Stride:步距 2(对第一层而言)

- 除了使用MBConv 模块,还使用Fused-MBConv模块

- 使用较小的expansion ratio

- 偏向使用更小的kernel_size(3x3)

- 移除了EfficientNetV1中最后一个步距为1的stage

Fused-MBConv模块

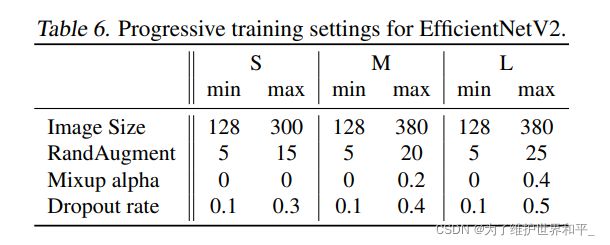

Progressive Learning 渐进式学习策略

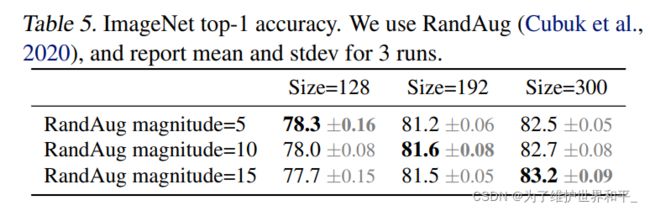

在训练不同的图片尺寸时,使用不同的正则化方法的强度。

训练早期使用较小的训练尺寸以及较弱的正则化方法weak regularization。接着逐渐提升图像尺寸,同时增强正则化方法。regularization包括Dropout,RandAugment以及Mixup

三、源码

EfficientNetV2

from collections import OrderedDict

from functools import partial

from typing import Callable, Optional

import torch.nn as nn

import torch

from torch import Tensor

def drop_path(x, drop_prob: float = 0., training: bool = False):

"""

Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).

"Deep Networks with Stochastic Depth", https://arxiv.org/pdf/1603.09382.pdf

This function is taken from the rwightman.

It can be seen here:

https://github.com/rwightman/pytorch-image-models/blob/master/timm/models/layers/drop.py#L140

"""

if drop_prob == 0. or not training:

return x

keep_prob = 1 - drop_prob

shape = (x.shape[0],) + (1,) * (x.ndim - 1) # work with diff dim tensors, not just 2D ConvNets

random_tensor = keep_prob + torch.rand(shape, dtype=x.dtype, device=x.device)

random_tensor.floor_() # binarize

output = x.div(keep_prob) * random_tensor

return output

class DropPath(nn.Module):

"""

Drop paths (Stochastic Depth) per sample (when applied in main path of residual blocks).

"Deep Networks with Stochastic Depth", https://arxiv.org/pdf/1603.09382.pdf

"""

def __init__(self, drop_prob=None):

super(DropPath, self).__init__()

self.drop_prob = drop_prob

def forward(self, x):

return drop_path(x, self.drop_prob, self.training)

class ConvBNAct(nn.Module):

def __init__(self,

in_planes: int,

out_planes: int,

kernel_size: int = 3,

stride: int = 1,

groups: int = 1,

norm_layer: Optional[Callable[..., nn.Module]] = None,

activation_layer: Optional[Callable[..., nn.Module]] = None):

super(ConvBNAct, self).__init__()

padding = (kernel_size - 1) // 2

if norm_layer is None:

norm_layer = nn.BatchNorm2d

if activation_layer is None:

activation_layer = nn.SiLU # alias Swish (torch>=1.7)

self.conv = nn.Conv2d(in_channels=in_planes,

out_channels=out_planes,

kernel_size=kernel_size,

stride=stride,

padding=padding,

groups=groups,

bias=False)

self.bn = norm_layer(out_planes)

self.act = activation_layer()

def forward(self, x):

result = self.conv(x)

result = self.bn(result)

result = self.act(result)

return result

class SqueezeExcite(nn.Module):

def __init__(self,

input_c: int, # block input channel

expand_c: int, # block expand channel

se_ratio: float = 0.25):

super(SqueezeExcite, self).__init__()

squeeze_c = int(input_c * se_ratio)

self.conv_reduce = nn.Conv2d(expand_c, squeeze_c, 1)

self.act1 = nn.SiLU() # alias Swish

self.conv_expand = nn.Conv2d(squeeze_c, expand_c, 1)

self.act2 = nn.Sigmoid()

def forward(self, x: Tensor) -> Tensor:

scale = x.mean((2, 3), keepdim=True)

scale = self.conv_reduce(scale)

scale = self.act1(scale)

scale = self.conv_expand(scale)

scale = self.act2(scale)

return scale * x

class MBConv(nn.Module):

def __init__(self,

kernel_size: int,

input_c: int,

out_c: int,

expand_ratio: int,

stride: int,

se_ratio: float,

drop_rate: float,

norm_layer: Callable[..., nn.Module]):

super(MBConv, self).__init__()

if stride not in [1, 2]:

raise ValueError("illegal stride value.")

self.has_shortcut = (stride == 1 and input_c == out_c)

activation_layer = nn.SiLU # alias Swish

expanded_c = input_c * expand_ratio

# 在EfficientNetV2中,MBConv中不存在expansion=1的情况所以conv_pw肯定存在

assert expand_ratio != 1

# Point-wise expansion

self.expand_conv = ConvBNAct(input_c,

expanded_c,

kernel_size=1,

norm_layer=norm_layer,

activation_layer=activation_layer)

# Depth-wise convolution

self.dwconv = ConvBNAct(expanded_c,

expanded_c,

kernel_size=kernel_size,

stride=stride,

groups=expanded_c,

norm_layer=norm_layer,

activation_layer=activation_layer)

self.se = SqueezeExcite(input_c, expanded_c, se_ratio) if se_ratio > 0 else nn.Identity()

# Point-wise linear projection

self.project_conv = ConvBNAct(expanded_c,

out_planes=out_c,

kernel_size=1,

norm_layer=norm_layer,

activation_layer=nn.Identity) # 注意这里没有激活函数,所有传入Identity

self.out_channels = out_c

# 只有在使用shortcut连接时才使用dropout层

self.drop_rate = drop_rate

if self.has_shortcut and drop_rate > 0:

self.dropout = DropPath(drop_rate)

def forward(self, x: Tensor) -> Tensor:

result = self.expand_conv(x)

result = self.dwconv(result)

result = self.se(result)

result = self.project_conv(result)

if self.has_shortcut:

if self.drop_rate > 0:

result = self.dropout(result)

result += x

return result

class FusedMBConv(nn.Module):

def __init__(self,

kernel_size: int,

input_c: int,

out_c: int,

expand_ratio: int,

stride: int,

se_ratio: float,

drop_rate: float,

norm_layer: Callable[..., nn.Module]):

super(FusedMBConv, self).__init__()

assert stride in [1, 2]

assert se_ratio == 0

self.has_shortcut = stride == 1 and input_c == out_c

self.drop_rate = drop_rate

self.has_expansion = expand_ratio != 1

activation_layer = nn.SiLU # alias Swish

expanded_c = input_c * expand_ratio

# 只有当expand ratio不等于1时才有expand conv

if self.has_expansion:

# Expansion convolution

self.expand_conv = ConvBNAct(input_c,

expanded_c,

kernel_size=kernel_size,

stride=stride,

norm_layer=norm_layer,

activation_layer=activation_layer)

self.project_conv = ConvBNAct(expanded_c,

out_c,

kernel_size=1,

norm_layer=norm_layer,

activation_layer=nn.Identity) # 注意没有激活函数

else:

# 当只有project_conv时的情况

self.project_conv = ConvBNAct(input_c,

out_c,

kernel_size=kernel_size,

stride=stride,

norm_layer=norm_layer,

activation_layer=activation_layer) # 注意有激活函数

self.out_channels = out_c

# 只有在使用shortcut连接时才使用dropout层

self.drop_rate = drop_rate

if self.has_shortcut and drop_rate > 0:

self.dropout = DropPath(drop_rate)

def forward(self, x: Tensor) -> Tensor:

if self.has_expansion:

result = self.expand_conv(x)

result = self.project_conv(result)

else:

result = self.project_conv(x)

if self.has_shortcut:

if self.drop_rate > 0:

result = self.dropout(result)

result += x

return result

class EfficientNetV2(nn.Module):

def __init__(self,

model_cnf: list,

num_classes: int = 1000,

num_features: int = 1280,

dropout_rate: float = 0.2,

drop_connect_rate: float = 0.2):

super(EfficientNetV2, self).__init__()

for cnf in model_cnf:

assert len(cnf) == 8

norm_layer = partial(nn.BatchNorm2d, eps=1e-3, momentum=0.1)

stem_filter_num = model_cnf[0][4]

self.stem = ConvBNAct(3,

stem_filter_num,

kernel_size=3,

stride=2,

norm_layer=norm_layer) # 激活函数默认是SiLU

total_blocks = sum([i[0] for i in model_cnf])

block_id = 0

blocks = []

for cnf in model_cnf:

repeats = cnf[0]

op = FusedMBConv if cnf[-2] == 0 else MBConv

for i in range(repeats):

blocks.append(op(kernel_size=cnf[1],

input_c=cnf[4] if i == 0 else cnf[5],

out_c=cnf[5],

expand_ratio=cnf[3],

stride=cnf[2] if i == 0 else 1,

se_ratio=cnf[-1],

drop_rate=drop_connect_rate * block_id / total_blocks,

norm_layer=norm_layer))

block_id += 1

self.blocks = nn.Sequential(*blocks)

head_input_c = model_cnf[-1][-3]

head = OrderedDict()

head.update({"project_conv": ConvBNAct(head_input_c,

num_features,

kernel_size=1,

norm_layer=norm_layer)}) # 激活函数默认是SiLU

head.update({"avgpool": nn.AdaptiveAvgPool2d(1)})

head.update({"flatten": nn.Flatten()})

if dropout_rate > 0:

head.update({"dropout": nn.Dropout(p=dropout_rate, inplace=True)})

head.update({"classifier": nn.Linear(num_features, num_classes)})

self.head = nn.Sequential(head)

# initial weights

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode="fan_out")

if m.bias is not None:

nn.init.zeros_(m.bias)

elif isinstance(m, nn.BatchNorm2d):

nn.init.ones_(m.weight)

nn.init.zeros_(m.bias)

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight, 0, 0.01)

nn.init.zeros_(m.bias)

def forward(self, x: Tensor) -> Tensor:

x = self.stem(x)

x = self.blocks(x)

x = self.head(x)

return x

def efficientnetv2_s(num_classes: int = 1000):

"""

EfficientNetV2

https://arxiv.org/abs/2104.00298

"""

# train_size: 300, eval_size: 384

# repeat, kernel, stride, expansion, in_c, out_c, operator, se_ratio

model_config = [[2, 3, 1, 1, 24, 24, 0, 0],

[4, 3, 2, 4, 24, 48, 0, 0],

[4, 3, 2, 4, 48, 64, 0, 0],

[6, 3, 2, 4, 64, 128, 1, 0.25],

[9, 3, 1, 6, 128, 160, 1, 0.25],

[15, 3, 2, 6, 160, 256, 1, 0.25]]

model = EfficientNetV2(model_cnf=model_config,

num_classes=num_classes,

dropout_rate=0.2)

return model

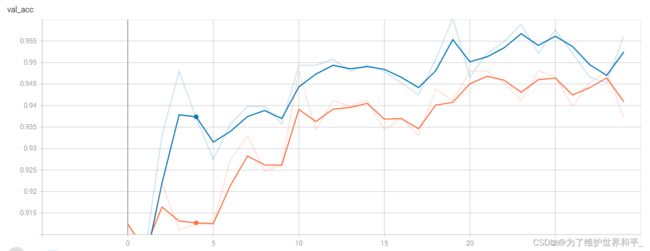

训练

[valid epoch 29] loss: 0.163, acc: 0.956: 100%|