YOLO-V3-SPP Training Loss Source Code Walkthrough: compute_loss

Preface

Theory in detail: YOLO-V3-SPP Detailed Analysis

To follow this function you need to know which gts are selected on the dataloader side, i.e., by the build_targets function.

compute_loss

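This post explains how the loss is computed from the model's predictions (pred) and the selected gts. It covers how positive and negative samples are distinguished, the code that switches between binary cross-entropy loss and focal loss, and how IoU is computed.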

The explanation pairs figures with text so that it stays concrete.

Source code

```python
import torch
from torch import nn

# build_targets, bbox_iou, smooth_BCE and FocalLoss come from the same project (see below)


def compute_loss(p, targets, model):  # predictions, targets, model
    device = p[0].device
    lcls = torch.zeros(1, device=device)  # Tensor(0)
    lbox = torch.zeros(1, device=device)  # Tensor(0)
    lobj = torch.zeros(1, device=device)  # Tensor(0)
    tcls, tbox, indices, anchors = build_targets(p, targets, model)  # targets
    h = model.hyp  # hyperparameters
    red = 'mean'  # Loss reduction (sum or mean)
    """
    tcls: class indices of the selected gts, shape (YoloLayer_num, targets_num)
    tbox: box info of the selected gts, tx, ty, w, h; tx, ty are offsets, w, h are width and height,
          shape (YoloLayer_num, targets_num, txtywh)
    indices: (YoloLayer_num, img_index + anchor_index + grid_y + grid_x)
    anchors: anchor size used by each target, shape (YoloLayer_num, targets_num, wh)
    """

    # Define criteria
    BCEcls = nn.BCEWithLogitsLoss(pos_weight=torch.tensor([h['cls_pw']], device=device), reduction=red)
    BCEobj = nn.BCEWithLogitsLoss(pos_weight=torch.tensor([h['obj_pw']], device=device), reduction=red)

    # class label smoothing https://arxiv.org/pdf/1902.04103.pdf eqn 3
    cp, cn = smooth_BCE(eps=0.1)

    # focal loss
    g = h['fl_gamma']  # focal loss gamma
    if g > 0:
        BCEcls, BCEobj = FocalLoss(BCEcls, g), FocalLoss(BCEobj, g)

    # per output
    nt = 0  # targets
    for i, pi in enumerate(p):  # layer index, layer predictions
        b, a, gj, gi = indices[i]  # image, anchor, gridy, gridx
        tobj = torch.zeros_like(pi[..., 0], device=device)  # target obj

        nb = b.shape[0]  # number of targets
        if nb:
            # predictions of the anchors matched as positive samples
            ps = pi[b, a, gj, gi]  # prediction subset corresponding to targets

            # GIoU
            pxy = ps[:, :2].sigmoid()
            pwh = ps[:, 2:4].exp().clamp(max=1E3) * anchors[i]
            pbox = torch.cat((pxy, pwh), 1)  # predicted box
            giou = bbox_iou(pbox.t(), tbox[i], x1y1x2y2=False, GIoU=True)  # giou(prediction, target)
            lbox += (1.0 - giou).mean()  # giou loss

            # Obj
            tobj[b, a, gj, gi] = (1.0 - model.gr) + model.gr * giou.detach().clamp(0).type(tobj.dtype)  # giou ratio

            # Class
            if model.nc > 1:  # cls loss (only if multiple classes)
                t = torch.full_like(ps[:, 5:], cn, device=device)  # targets
                t[range(nb), tcls[i]] = cp
                lcls += BCEcls(ps[:, 5:], t)  # BCE

            # Append targets to text file
            # with open('targets.txt', 'a') as file:
            #     [file.write('%11.5g ' * 4 % tuple(x) + '\n') for x in torch.cat((txy[i], twh[i]), 1)]

        lobj += BCEobj(pi[..., 4], tobj)  # obj loss

    # multiply each loss by its weight
    lbox *= h['giou']
    lobj *= h['obj']
    lcls *= h['cls']
    # loss = lbox + lobj + lcls

    return {"box_loss": lbox,
            "obj_loss": lobj,
            "class_loss": lcls}
```

Walkthrough

```python
def compute_loss(p, targets, model):  # predictions, targets, model
    device = p[0].device
    lcls = torch.zeros(1, device=device)  # Tensor(0)
    lbox = torch.zeros(1, device=device)  # Tensor(0)
    lobj = torch.zeros(1, device=device)  # Tensor(0)
    tcls, tbox, indices, anchors = build_targets(p, targets, model)  # targets
    h = model.hyp  # hyperparameters
```

To follow this you need to know which gts are selected on the dataloader side, i.e., by the build_targets function. Each of the following has one entry per YOLO layer, and targets_num can differ between layers:

tcls: class indices of the selected gts, shape (YoloLayer_num, targets_num)

tbox: box info of the selected gts, $(t_x, t_y, w, h)$, where $t_x, t_y$ are offsets and $w, h$ are width and height, shape (YoloLayer_num, targets_num, txtywh)

indices: (YoloLayer_num, img_index + anchor_index + grid_y + grid_x)

anchors: the anchor size used by each target, shape (YoloLayer_num, targets_num, wh)

```python
red = 'mean'  # Loss reduction (sum or mean)

# Define criteria
BCEcls = nn.BCEWithLogitsLoss(pos_weight=torch.tensor([h['cls_pw']], device=device), reduction=red)
BCEobj = nn.BCEWithLogitsLoss(pos_weight=torch.tensor([h['obj_pw']], device=device), reduction=red)
```

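When BCE loss (binary cross-entropy) is used, it is averaged: the loss is the mean binary cross-entropy. The pos_weight arguments (hyperparameters cls_pw and obj_pw) scale the loss contribution of positive targets.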

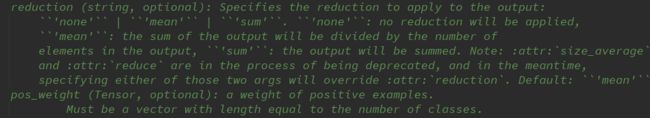

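BCEWithLogitsLoss also takes a reduction argument, which controls how the per-element losses are aggregated: 'none' returns them unreduced, 'sum' adds them up, and 'mean' averages them.

Here reduction='mean', i.e., the mean binary cross-entropy.

For the BCE loss formula as used in YOLOv3, see the article on the BCE loss formula.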
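To make pos_weight and reduction concrete, here is a plain-Python sketch of the per-element formula documented for nn.BCEWithLogitsLoss, $\ell=-[w_p\,y\log\sigma(x)+(1-y)\log(1-\sigma(x))]$ with $w_p$ = pos_weight, followed by the three reduction modes. It avoids PyTorch so the arithmetic stays visible. The function name bce_with_logits is hypothetical and is not part of the PyTorch API.

```python
import math


def bce_with_logits(logits, targets, pos_weight=1.0, reduction="mean"):
    """Hypothetical plain-Python mirror of nn.BCEWithLogitsLoss for 1-D inputs."""
    losses = []
    for x, y in zip(logits, targets):
        # log(sigmoid(x)), computed in a numerically stable way
        if x >= 0:
            log_p = -math.log1p(math.exp(-x))
        else:
            log_p = x - math.log1p(math.exp(x))
        log_1mp = log_p - x  # log(1 - sigmoid(x)) = log(sigmoid(x)) - x
        losses.append(-(pos_weight * y * log_p + (1 - y) * log_1mp))
    if reduction == "mean":
        return sum(losses) / len(losses)
    if reduction == "sum":
        return sum(losses)
    return losses  # 'none': one loss value per element


logits, targets = [0.0, 2.0, -1.0], [1.0, 1.0, 0.0]
print(bce_with_logits(logits, targets, reduction="none"))
print(bce_with_logits(logits, targets, reduction="sum"))
print(bce_with_logits(logits, targets))  # 'mean', as compute_loss uses
```

With pos_weight > 1 only the $y\log\sigma(x)$ term grows, so errors on positive targets cost more. With the default of 1.0 it reduces to plain BCE.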

```python
# class label smoothing https://arxiv.org/pdf/1902.04103.pdf eqn 3
cp, cn = smooth_BCE(eps=0.1)

# focal loss
g = h['fl_gamma']  # focal loss gamma
if g > 0:
    BCEcls, BCEobj = FocalLoss(BCEcls, g), FocalLoss(BCEobj, g)
```

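cp and cn are the label-smoothing values for positive and negative targets. They are used later, in the class loss. Here is the label-smoothing source: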

```python
def smooth_BCE(eps=0.1):  # https://github.com/ultralytics/yolov3/issues/238#issuecomment-598028441
    # return positive, negative label smoothing BCE targets
    return 1.0 - 0.5 * eps, 0.5 * eps
```
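Here is a quick check of the values smooth_BCE produces, using the function copied from above. With eps=0.1 the positive target drops from 1 to 0.95 and the negative target rises from 0 to 0.05. eps=0 gives back hard 0/1 labels. The one-hot vector at the end mirrors how compute_loss later fills the class target t. The 4 classes and gt class index 2 are made-up illustration values.

```python
def smooth_BCE(eps=0.1):
    # return positive, negative label smoothing BCE targets (same as the source above)
    return 1.0 - 0.5 * eps, 0.5 * eps


for eps in (0.0, 0.1, 0.2):
    cp, cn = smooth_BCE(eps)
    print(f"eps={eps}: cp={cp:.3f}, cn={cn:.3f}")

# smoothed one-hot target for a hypothetical 4-class problem whose gt class is 2
cp, cn = smooth_BCE(eps=0.1)
target = [cn] * 4
target[2] = cp
print(target)
```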

If the hyperparameter fl_gamma > 0, the BCE loss modules defined above are wrapped in the FocalLoss module, turning them into focal loss.

Here is the FocalLoss source:

```python
import torch
from torch import nn


class FocalLoss(nn.Module):
    # Wraps focal loss around existing loss_fcn(), i.e. criteria = FocalLoss(nn.BCEWithLogitsLoss(), gamma=1.5)
    def __init__(self, loss_fcn, gamma=1.5, alpha=0.25):
        super(FocalLoss, self).__init__()
        self.loss_fcn = loss_fcn  # must be nn.BCEWithLogitsLoss()
        self.gamma = gamma
        self.alpha = alpha
        self.reduction = loss_fcn.reduction
        self.loss_fcn.reduction = 'none'  # required to apply FL to each element

    def forward(self, pred, true):
        loss = self.loss_fcn(pred, true)
        # p_t = torch.exp(-loss)
        # loss *= self.alpha * (1.000001 - p_t) ** self.gamma  # non-zero power for gradient stability

        # TF implementation https://github.com/tensorflow/addons/blob/v0.7.1/tensorflow_addons/losses/focal_loss.py
        pred_prob = torch.sigmoid(pred)  # prob from logits
        p_t = true * pred_prob + (1 - true) * (1 - pred_prob)
        alpha_factor = true * self.alpha + (1 - true) * (1 - self.alpha)
        modulating_factor = (1.0 - p_t) ** self.gamma
        loss *= alpha_factor * modulating_factor

        if self.reduction == 'mean':
            return loss.mean()
        elif self.reduction == 'sum':
            return loss.sum()
        else:  # 'none'
            return loss
```

Processing each output layer

```python
# per output
nt = 0  # targets
for i, pi in enumerate(p):  # layer index, layer predictions
    b, a, gj, gi = indices[i]  # image, anchor, gridy, gridx
    tobj = torch.zeros_like(pi[..., 0], device=device)  # target obj
    nb = b.shape[0]  # number of targets
```

p is a list with one entry per YOLO layer. Each entry pi has shape (batch_size, anchor_num, grid_y, grid_x, xywh + obj_confidence + classes_num).

The loss is computed on the training-mode output, so p holds the raw network outputs. No sigmoid or exp decoding has been applied yet.

b, a, gj, gi are image_index, anchor_index, grid_y and grid_x, respectively.

tobj is an all-zero tensor with the same shape as pi[..., 0], i.e., (batch_size, anchor_num, grid_y, grid_x). It holds one objectness target per anchor per grid cell.

nb is the number of gts selected for this layer in the batch, i.e., the number of targets.

```python
if nb:
    # predictions of the anchors matched as positive samples
    ps = pi[b, a, gj, gi]  # prediction subset corresponding to targets

    # GIoU
    pxy = ps[:, :2].sigmoid()
    pwh = ps[:, 2:4].exp().clamp(max=1E3) * anchors[i]
    pbox = torch.cat((pxy, pwh), 1)  # predicted box
    giou = bbox_iou(pbox.t(), tbox[i], x1y1x2y2=False, GIoU=True)  # giou(prediction, target)
    lbox += (1.0 - giou).mean()  # giou loss
```

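For the current layer, pi has shape (batch_size, anchor_num, grid_y, grid_x, xywh + obj_confidence + classes_num).

b: the image index of every target in the batch

a: the anchor index of every target

gj, gi: the grid-cell coordinates (row, column) of every target

ps = pi[b, a, gj, gi] picks, for each target, the prediction vector [x, y, w, h, obj, cls...] at position [image_index, anchor_index, gj, gi] of the first four dimensions.

ps has shape (targets_num, xywh + obj + cls).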

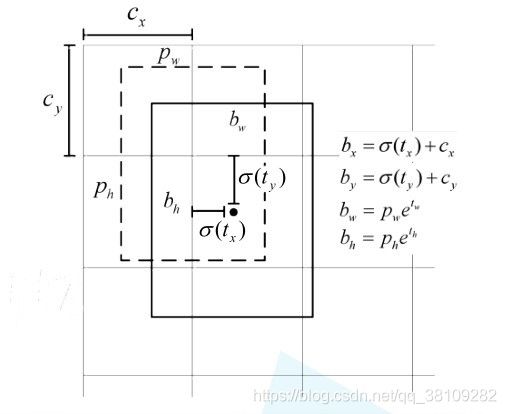
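The advanced indexing pi[b, a, gj, gi] can be confusing at first. Below is a pure-Python sketch of the same gather, using nested lists instead of a tensor. For every target k it picks the output vector at pi[b[k]][a[k]][gj[k]][gi[k]]; gather_predictions is a hypothetical helper name. Each toy output vector starts with its own (image, anchor, row, column), so you can see which cell was picked. The sizes and indices are invented for illustration.

```python
def gather_predictions(pi, b, a, gj, gi):
    """Pure-Python equivalent of ps = pi[b, a, gj, gi] for a nested list pi
    of shape (batch, anchors, grid_y, grid_x, outputs): one output vector per target."""
    # gj indexes rows (y) and gi indexes columns (x), matching the (grid_y, grid_x) order
    return [pi[bi][ai][yi][xi] for bi, ai, yi, xi in zip(b, a, gj, gi)]


# toy layer output: batch=2, anchors=3, 4x4 grid, 7 outputs (x, y, w, h, obj, 2 classes)
batch, na, ny, nx, no = 2, 3, 4, 4, 7
pi = [[[[[bi, ai, yi, xi] + [0.0] * (no - 4) for xi in range(nx)]
        for yi in range(ny)]
       for ai in range(na)]
      for bi in range(batch)]

# three hypothetical targets
b, a, gj, gi = [0, 1, 1], [2, 0, 1], [3, 1, 0], [0, 2, 3]
ps = gather_predictions(pi, b, a, gj, gi)
print(len(ps), len(ps[0]))  # number of targets, values per target
print([v[:4] for v in ps])  # which (image, anchor, row, column) each target picked
```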

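pxy applies a sigmoid to the xy outputs, squashing them into (0, 1) so they can be read as offsets inside the grid cell. Its shape is (targets_num, 2).

pwh maps the raw width/height outputs, via the anchors, to width and height on the feature-map scale. Its shape is (targets_num, 2).

The same width/height mapping is also defined in the forward pass of YOLOLayer in models.py. The two are essentially the same; see models.py if you're interested.

Note: the pwh mapping also calls clamp with 1E3, i.e. $1\times10^3$, which caps the exp() factor at 1000 before it is multiplied by the anchor. I'm not sure it is strictly necessary, and the decoding in models.py does not use it. It presumably guards against exp() of an extreme logit overflowing to inf. For comparison, the line in models.py: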

```python
io[..., 2:4] = torch.exp(io[..., 2:4]) * self.anchor_wh
```

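In the paper, the predicted outputs are mapped as follows. $\sigma$ is the sigmoid function, $(c_x, c_y)$ is the top-left corner of the grid cell, and $(p_w, p_h)$ is the anchor size:

$$b_x=\sigma(t_x)+c_x,\quad b_y=\sigma(t_y)+c_y,\quad b_w=p_we^{t_w},\quad b_h=p_he^{t_h}$$

In compute_loss the cell corner $(c_x, c_y)$ is not added, so pxy stays as the offset $(\sigma(t_x), \sigma(t_y))$ inside the cell.

pbox concatenates pxy and pwh along the second dimension, giving shape (targets_num, xywh).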
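Here is a pure-Python sketch of that mapping as compute_loss applies it. xy goes through a sigmoid and is kept as an offset inside the cell. wh is the anchor size times exp(), capped at 1000 like .clamp(max=1E3). The helper names are hypothetical, and anchor sizes are assumed to be in feature-map units, as anchors[i] is here.

```python
import math


def sigmoid(v):
    # numerically stable logistic function
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def capped_exp(v, cap=1e3):
    # min(exp(v), cap) without overflowing math.exp, like .exp().clamp(max=1E3)
    return cap if v > math.log(cap) else math.exp(v)


def decode_box(t, anchor_wh):
    """Decode a raw (tx, ty, tw, th) the way compute_loss builds pbox:
    xy -> sigmoid offset inside the grid cell, wh -> anchor size * capped exp."""
    tx, ty, tw, th = t
    pxy = (sigmoid(tx), sigmoid(ty))
    pwh = (anchor_wh[0] * capped_exp(tw), anchor_wh[1] * capped_exp(th))
    return pxy + pwh


print(decode_box((0.0, 0.0, 0.0, math.log(2.0)), anchor_wh=(3.0, 4.0)))
print(decode_box((0.0, 0.0, 1000.0, 0.0), anchor_wh=(3.0, 4.0)))  # exp(1000) is capped at 1000
```

A zero logit puts the center in the middle of the cell (0.5, 0.5) and keeps the anchor width unchanged. A logit of log 2 doubles the anchor height.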

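Why the IoU is computed with offsets

pbox: the xy of the prediction is the center offset within the matched grid cell, and everything is on the feature-map scale. Shape (targets_num, xywh).

tbox: box info of the selected gts, tx, ty, w, h, where tx, ty are offsets and w, h are width and height, all on the feature-map scale. Shape (YoloLayer_num, targets_num, txtywh).

So pbox and tbox are both on the feature-map scale, and both express the center as an offset relative to the same grid cell. IoU does not change when both boxes are shifted by the same amount, so leaving out the shared cell corner does not change the result.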

pbox is the network output. Recall the earlier code:

```python
for i, pi in enumerate(p):  # layer index, layer predictions
    b, a, gj, gi = indices[i]  # image, anchor, gridy, gridx
    tobj = torch.zeros_like(pi[..., 0], device=device)  # target obj
    nb = b.shape[0]  # number of targets
    if nb:
        # predictions of the anchors matched as positive samples
        ps = pi[b, a, gj, gi]  # prediction subset corresponding to targets

        # GIoU
        pxy = ps[:, :2].sigmoid()
        pwh = ps[:, 2:4].exp().clamp(max=1E3) * anchors[i]
        pbox = torch.cat((pxy, pwh), 1)  # predicted box
```

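pi has shape (batch_size, anchor_num, grid_y, grid_x, xywh + obj_confidence + classes_num). Its xy is the offset relative to the current grid cell (grid_x, grid_y), and after the sigmoid, pxy is that offset on the feature-map scale. (Note: ps selects the predicted xywh at the image, anchor and grid cell assigned to each gt, so it corresponds one-to-one with tbox.)

In build_targets, tbox is on the feature-map scale. For details see "YOLO-V3-SPP utils.py build_targets function: detailed walkthrough (ultralytics version)".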

Computing GIoU in the loss

```python
giou = bbox_iou(pbox.t(), tbox[i], x1y1x2y2=False, GIoU=True)  # giou(prediction, target)
```

pbox is transposed before being passed to bbox_iou, which simplifies the IoU computation inside bbox_iou.

pbox: the predicted xywh restored to the feature-map scale, where xy is also an offset. Shape (targets_num, xywh).

tbox: box info of the selected gts, tx, ty, w, h, where tx, ty are offsets and w, h are width and height. Shape (YoloLayer_num, targets_num, txtywh).

bbox_iou source code

```python
import math

import torch


def bbox_iou(box1, box2, x1y1x2y2=True, GIoU=False, DIoU=False, CIoU=False):
    # Returns the IoU of box1 to box2. box1 is 4, box2 is nx4
    box2 = box2.t()

    # Get the coordinates of bounding boxes
    if x1y1x2y2:  # x1, y1, x2, y2 = box1
        b1_x1, b1_y1, b1_x2, b1_y2 = box1[0], box1[1], box1[2], box1[3]
        b2_x1, b2_y1, b2_x2, b2_y2 = box2[0], box2[1], box2[2], box2[3]
    else:  # transform from xywh to xyxy
        b1_x1, b1_x2 = box1[0] - box1[2] / 2, box1[0] + box1[2] / 2
        b1_y1, b1_y2 = box1[1] - box1[3] / 2, box1[1] + box1[3] / 2
        b2_x1, b2_x2 = box2[0] - box2[2] / 2, box2[0] + box2[2] / 2
        b2_y1, b2_y2 = box2[1] - box2[3] / 2, box2[1] + box2[3] / 2

    # Intersection area
    inter = (torch.min(b1_x2, b2_x2) - torch.max(b1_x1, b2_x1)).clamp(0) * \
            (torch.min(b1_y2, b2_y2) - torch.max(b1_y1, b2_y1)).clamp(0)

    # Union Area
    w1, h1 = b1_x2 - b1_x1, b1_y2 - b1_y1
    w2, h2 = b2_x2 - b2_x1, b2_y2 - b2_y1
    union = (w1 * h1 + 1e-16) + w2 * h2 - inter

    iou = inter / union  # iou
    if GIoU or DIoU or CIoU:
        cw = torch.max(b1_x2, b2_x2) - torch.min(b1_x1, b2_x1)  # convex (smallest enclosing box) width
        ch = torch.max(b1_y2, b2_y2) - torch.min(b1_y1, b2_y1)  # convex height
        if GIoU:  # Generalized IoU https://arxiv.org/pdf/1902.09630.pdf
            c_area = cw * ch + 1e-16  # convex area
            return iou - (c_area - union) / c_area  # GIoU
        if DIoU or CIoU:  # Distance or Complete IoU https://arxiv.org/abs/1911.08287v1
            # convex diagonal squared
            c2 = cw ** 2 + ch ** 2 + 1e-16
            # centerpoint distance squared
            rho2 = ((b2_x1 + b2_x2) - (b1_x1 + b1_x2)) ** 2 / 4 + ((b2_y1 + b2_y2) - (b1_y1 + b1_y2)) ** 2 / 4
            if DIoU:
                return iou - rho2 / c2  # DIoU
            elif CIoU:  # https://github.com/Zzh-tju/DIoU-SSD-pytorch/blob/master/utils/box/box_utils.py#L47
                v = (4 / math.pi ** 2) * torch.pow(torch.atan(w2 / h2) - torch.atan(w1 / h1), 2)
                with torch.no_grad():
                    alpha = v / (1 - iou + v)
                return iou - (rho2 / c2 + v * alpha)  # CIoU

    return iou
```

bbox_iou walkthrough

```python
# giou = bbox_iou(pbox.t(), tbox[i], x1y1x2y2=False, GIoU=True)
def bbox_iou(box1, box2, x1y1x2y2=True, GIoU=False, DIoU=False, CIoU=False):
    # Returns the IoU of box1 to box2. box1 is 4, box2 is nx4
    box2 = box2.t()
```

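The first argument, pbox, is transposed before the call. The second argument, tbox, is transposed inside the function. Both end up as (4, n), with one row per coordinate, so transposing before or after the call is equivalent.

The third parameter, x1y1x2y2, is a boolean giving the box format: True for corner coordinates (x1, y1, x2, y2), False for center format (x, y, w, h).

The remaining flags select which IoU variant to compute (GIoU, DIoU or CIoU).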

```python
# Get the coordinates of bounding boxes
if x1y1x2y2:  # x1, y1, x2, y2 = box1
    b1_x1, b1_y1, b1_x2, b1_y2 = box1[0], box1[1], box1[2], box1[3]
    b2_x1, b2_y1, b2_x2, b2_y2 = box2[0], box2[1], box2[2], box2[3]
else:  # transform from xywh to xyxy
    b1_x1, b1_x2 = box1[0] - box1[2] / 2, box1[0] + box1[2] / 2
    b1_y1, b1_y2 = box1[1] - box1[3] / 2, box1[1] + box1[3] / 2
    b2_x1, b2_x2 = box2[0] - box2[2] / 2, box2[0] + box2[2] / 2
    b2_y1, b2_y2 = box2[1] - box2[3] / 2, box2[1] + box2[3] / 2
```

This block converts both boxes to corner format (x1, y1, x2, y2).

```python
# Intersection area (\ is the line-continuation character)
inter = (torch.min(b1_x2, b2_x2) - torch.max(b1_x1, b2_x1)).clamp(0) * \
        (torch.min(b1_y2, b2_y2) - torch.max(b1_y1, b2_y1)).clamp(0)
```

This computes the intersection area of the two boxes, $|A\cap B|$. clamp(0) makes the intersection, and hence the IoU, 0 when the boxes do not overlap.

```python
# Union Area
w1, h1 = b1_x2 - b1_x1, b1_y2 - b1_y1
w2, h2 = b2_x2 - b2_x1, b2_y2 - b2_y1
union = (w1 * h1 + 1e-16) + w2 * h2 - inter

iou = inter / union  # iou
```

This computes the union area of the two boxes, $|A\cup B|$. 1e-16 is a tiny positive number that prevents a division by zero in the IoU.

```python
if GIoU or DIoU or CIoU:
    cw = torch.max(b1_x2, b2_x2) - torch.min(b1_x1, b2_x1)  # convex (smallest enclosing box) width
    ch = torch.max(b1_y2, b2_y2) - torch.min(b1_y1, b2_y1)  # convex height
    if GIoU:  # Generalized IoU https://arxiv.org/pdf/1902.09630.pdf
        c_area = cw * ch + 1e-16  # convex area
        return iou - (c_area - union) / c_area  # GIoU
    if DIoU or CIoU:  # Distance or Complete IoU https://arxiv.org/abs/1911.08287v1
        # convex diagonal squared
        c2 = cw ** 2 + ch ** 2 + 1e-16
        # centerpoint distance squared
        rho2 = ((b2_x1 + b2_x2) - (b1_x1 + b1_x2)) ** 2 / 4 + ((b2_y1 + b2_y2) - (b1_y1 + b1_y2)) ** 2 / 4
        if DIoU:
            return iou - rho2 / c2  # DIoU
        elif CIoU:  # https://github.com/Zzh-tju/DIoU-SSD-pytorch/blob/master/utils/box/box_utils.py#L47
            v = (4 / math.pi ** 2) * torch.pow(torch.atan(w2 / h2) - torch.atan(w1 / h1), 2)
            with torch.no_grad():
                alpha = v / (1 - iou + v)
            return iou - (rho2 / c2 + v * alpha)  # CIoU
```

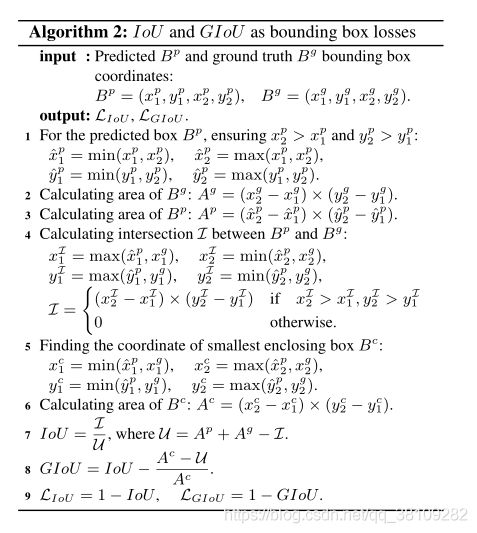

Only the GIoU branch is covered here. Recall the GIoU formula:

$$GIoU=IoU-\frac{|C-(A\cup B)|}{|C|}$$

cw and ch are the width and height of C, the smallest box enclosing A and B.

bbox_iou finally returns a tensor of shape (targets_num,) holding the GIoU between each prediction and its gt.
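To check the formula numerically, here is a scalar, pure-Python sketch of the GIoU branch of bbox_iou for one pair of center-format boxes. The name giou_xywh is hypothetical, and the real function works on whole tensors. The three calls cover identical, partially overlapping and disjoint boxes. The disjoint pair has IoU 0, but its GIoU is negative and still reflects how far apart the boxes are.

```python
def giou_xywh(box1, box2, eps=1e-16):
    """GIoU of two (x, y, w, h) boxes, following bbox_iou(..., x1y1x2y2=False, GIoU=True)."""
    # xywh -> xyxy
    b1_x1, b1_x2 = box1[0] - box1[2] / 2, box1[0] + box1[2] / 2
    b1_y1, b1_y2 = box1[1] - box1[3] / 2, box1[1] + box1[3] / 2
    b2_x1, b2_x2 = box2[0] - box2[2] / 2, box2[0] + box2[2] / 2
    b2_y1, b2_y2 = box2[1] - box2[3] / 2, box2[1] + box2[3] / 2
    # intersection |A ∩ B|, 0 when the boxes do not overlap
    inter = max(0.0, min(b1_x2, b2_x2) - max(b1_x1, b2_x1)) * \
        max(0.0, min(b1_y2, b2_y2) - max(b1_y1, b2_y1))
    # union |A ∪ B|
    union = box1[2] * box1[3] + eps + box2[2] * box2[3] - inter
    iou = inter / union
    # smallest enclosing box C
    cw = max(b1_x2, b2_x2) - min(b1_x1, b2_x1)
    ch = max(b1_y2, b2_y2) - min(b1_y1, b2_y1)
    c_area = cw * ch + eps
    return iou - (c_area - union) / c_area


print(giou_xywh((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes
print(giou_xywh((0, 0, 2, 2), (1, 0, 2, 2)))  # half overlap: IoU 1/3, C equals the union
print(giou_xywh((0, 0, 2, 2), (4, 0, 2, 2)))  # disjoint: IoU 0, GIoU negative
```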

Box (localization) loss in compute_loss

```python
giou = bbox_iou(pbox.t(), tbox[i], x1y1x2y2=False, GIoU=True)  # giou(prediction, target)
lbox += (1.0 - giou).mean()  # giou loss
```

Here giou is a tensor of shape (targets_num,) holding the GIoU between each prediction and its gt.

Recall the GIoU loss: $L_{GIoU}=1-GIoU$

lbox is the localization loss. mean() averages $1-GIoU$ over all targets of the layer, and lbox += then sums these per-layer means over the YOLO layers:

$$lbox=\frac{\sum_{j=1}^{targets\_num}(1-giou_j)}{targets\_num}$$
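Here is a small pure-Python sketch of how lbox accumulates. Within each YOLO layer the loss is the mean of $1-GIoU$ over that layer's targets. The layer means are summed by lbox +=, and a layer with no targets is skipped, as if nb: does. The function name and GIoU values are made up, and the final multiplication by h['giou'] is left out.

```python
def box_loss(giou_per_layer):
    """lbox as in compute_loss, before weighting: mean(1 - GIoU) per YOLO layer,
    summed over layers; layers without targets contribute nothing."""
    lbox = 0.0
    for giou in giou_per_layer:
        if giou:  # nb > 0
            lbox += sum(1.0 - g for g in giou) / len(giou)
    return lbox


# three layers: two targets, no targets, one target with negative GIoU
print(box_loss([[1.0, 0.5], [], [-0.5]]))  # 0.25 + 0 + 1.5
```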

Objectness (confidence) loss in compute_loss

Note: every "BCE loss" here means BCEWithLogitsLoss. Unlike BCELoss, BCEWithLogitsLoss applies the sigmoid to its input internally.

Reference: "PyTorch BCELoss and BCEWithLogitsLoss explained"

```python
# Obj
tobj[b, a, gj, gi] = (1.0 - model.gr) + model.gr * giou.detach().clamp(0).type(tobj.dtype)  # giou ratio
lobj += BCEobj(pi[..., 4], tobj)  # obj loss
```

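Why detach

detach() returns a tensor that shares data with giou but is cut off from the computation graph (requires_grad=False). The objectness target therefore acts as a constant label, and no gradient flows from the objectness loss back into the box predictions through giou.

For more detail, see the blog post "PyTorch: requires_grad".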

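Why clamp(0)

giou is passed through clamp(0), which sets its lower bound to 0. GIoU ranges over $[-1, 1]$. In the box loss, $L_{GIoU}=1-GIoU$ turns a negative GIoU (for example when pred and gt do not overlap) into a loss greater than 1, so such predictions still get a training signal. For the objectness target, negative GIoU values are set to 0, meaning such a prediction gets an objectness target of 0.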

What model.gr does

As the code shows, the clamped GIoU is weighted by model.gr. YOLOv3 uses model.gr = 1.0 by default, defined in train.py:

```python
model.gr = 1.0  # giou loss ratio (obj_loss = 1.0 or giou)
```

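For easy samples, where pred fits gt well, GIoU is close to 1, so the objectness target is high.

For hard samples, where pred fits gt poorly, the clamped GIoU is close to 0, so the objectness target is low.

gr interpolates between the two extremes, since the target is $(1-gr)+gr\cdot\max(GIoU,0)$. With gr = 0 every positive sample gets a target of 1. With gr = 1 (the yolov3-spp default) the clamped GIoU itself is the target. If there are many hard samples, lowering gr raises their objectness targets.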
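Here is a pure-Python sketch of the objectness target for one positive sample, mirroring the tobj[b, a, gj, gi] assignment. The function name is hypothetical and the GIoU values are made up. The table shows how gr moves the target between 1 and the clamped GIoU.

```python
def objectness_target(giou, gr=1.0):
    """Objectness target of one positive sample, as in
    tobj[b, a, gj, gi] = (1.0 - model.gr) + model.gr * giou.detach().clamp(0)."""
    return (1.0 - gr) + gr * max(giou, 0.0)


for gr in (0.0, 0.5, 1.0):
    row = [round(objectness_target(g, gr), 3) for g in (0.9, 0.2, -0.4)]
    print(f"gr={gr}: targets for GIoU 0.9, 0.2, -0.4 -> {row}")
```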

Objectness and classification loss

Recall how tobj is initialized:

```python
tobj = torch.zeros_like(pi[..., 0], device=device)  # target obj
```

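tobj[b, a, gj, gi] selects the entries that correspond to the targets and fills in their objectness targets. All other entries stay 0, i.e., they are negative samples: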

```python
tobj[b, a, gj, gi] = (1.0 - model.gr) + model.gr * giou.detach().clamp(0).type(tobj.dtype)
lobj += BCEobj(pi[..., 4], tobj)  # obj loss
```

Once every target's objectness target is known, tobj and the predicted objectness pi[..., 4] are passed to BCEobj. With gr = 1, tobj is the clamped GIoU.

The BCE loss is:

$$L_{conf}(o,c)=-\frac{\sum_i\left(o_i\ln(\hat{c}_i)+(1-o_i)\ln(1-\hat{c}_i)\right)}{N}$$

$$\hat{c}_i=\mathrm{Sigmoid}(c_i)$$

Here $o_i\in[0,1]$ is the IoU between the predicted box and the ground-truth box. $c$ is the raw prediction, and $\hat{c}_i$ is the predicted confidence (the predicted IoU), obtained by passing $c$ through the sigmoid.

$N$ is the number of samples, positive and negative.

Note: $o_i$ and $\hat{c}_i$ both refer to IoU. $o_i$ is the IoU between pred and gt, used as the label to guide training, while $\hat{c}_i$ is the IoU predicted by the network.

Note: if you're not familiar with cross-entropy, see the post "Understanding cross-entropy".

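BCE loss is designed for binary problems. At each anchor position, objectness is a binary prediction (object vs. background): the network predicts $\hat{c}_i$ and is trained against the soft label $o_i$. That is why BCE loss is used. With reduction='mean' it is averaged over all positions in pi[..., 4], positives and negatives alike.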
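Here is a pure-Python sketch of the objectness loss for one toy layer flattened to six anchor positions, with one positive (GIoU 0.8) and five negatives. The names and logit values are hypothetical. It shows that the mean runs over every position, so the negatives with target 0 also drive the loss. A model that confidently predicts background scores far better than one that outputs 0.5 everywhere.

```python
import math


def bce_logits(x, y):
    # per-element BCEWithLogitsLoss (pos_weight = 1), numerically stable form
    return max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))


def objectness_loss(obj_logits, tobj):
    """Mean BCE over *every* anchor position, like BCEobj(pi[..., 4], tobj):
    positives have tobj = clamped GIoU, negatives keep tobj = 0."""
    losses = [bce_logits(x, y) for x, y in zip(obj_logits, tobj)]
    return sum(losses) / len(losses)


tobj = [0.0, 0.0, 0.8, 0.0, 0.0, 0.0]
confident = [-6.0, -6.0, 2.0, -6.0, -6.0, -6.0]  # background predicted as background
sloppy = [0.0] * 6                               # sigmoid(0) = 0.5 everywhere
print(objectness_loss(confident, tobj), objectness_loss(sloppy, tobj))
```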

If focal loss is enabled, the BCE losses are wrapped in the FocalLoss class.

Initialization code:

```python
# focal loss
g = h['fl_gamma']  # focal loss gamma
if g > 0:
    BCEcls, BCEobj = FocalLoss(BCEcls, g), FocalLoss(BCEobj, g)
```

FocalLoss class definition

Note: both the BCE and FocalLoss objects are nn.Module instances, so their computations are part of the autograd graph.

```python
class FocalLoss(nn.Module):
    # Wraps focal loss around existing loss_fcn(), i.e. criteria = FocalLoss(nn.BCEWithLogitsLoss(), gamma=1.5)
    def __init__(self, loss_fcn, gamma=1.5, alpha=0.25):
        super(FocalLoss, self).__init__()
        self.loss_fcn = loss_fcn  # must be nn.BCEWithLogitsLoss()
        self.gamma = gamma
        self.alpha = alpha
        self.reduction = loss_fcn.reduction
        self.loss_fcn.reduction = 'none'  # required to apply FL to each element

    def forward(self, pred, true):
        loss = self.loss_fcn(pred, true)
        # p_t = torch.exp(-loss)
        # loss *= self.alpha * (1.000001 - p_t) ** self.gamma  # non-zero power for gradient stability

        # TF implementation https://github.com/tensorflow/addons/blob/v0.7.1/tensorflow_addons/losses/focal_loss.py
        pred_prob = torch.sigmoid(pred)  # prob from logits
        p_t = true * pred_prob + (1 - true) * (1 - pred_prob)
        alpha_factor = true * self.alpha + (1 - true) * (1 - self.alpha)
        modulating_factor = (1.0 - p_t) ** self.gamma
        loss *= alpha_factor * modulating_factor

        if self.reduction == 'mean':
            return loss.mean()
        elif self.reduction == 'sum':
            return loss.sum()
        else:  # 'none'
            return loss
```

FocalLoss source walkthrough

```python
# Wraps focal loss around existing loss_fcn(), i.e. criteria = FocalLoss(nn.BCEWithLogitsLoss(), gamma=1.5)
def __init__(self, loss_fcn, gamma=1.5, alpha=0.25):
    super(FocalLoss, self).__init__()
    self.loss_fcn = loss_fcn  # must be nn.BCEWithLogitsLoss()
    self.gamma = gamma
    self.alpha = alpha
    self.reduction = loss_fcn.reduction
    self.loss_fcn.reduction = 'none'  # required to apply FL to each element
```

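__init__ takes a loss_fcn argument, which must be an nn.BCEWithLogitsLoss() object passed in when FocalLoss is created. gamma and alpha default to 1.5 and 0.25. compute_loss passes fl_gamma as gamma and keeps the default alpha.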

```python
self.reduction = loss_fcn.reduction
self.loss_fcn.reduction = 'none'  # required to apply FL to each element
```

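Note: reduction controls how the loss results are aggregated. It has three modes:

reduction='mean': average the loss over all elements

reduction='sum': sum the loss over all elements

reduction='none': no aggregation; return a tensor with one loss value per element

The first line stores the wrapped loss_fcn's reduction as FocalLoss's own reduction. loss_fcn's reduction is 'mean' here, so FocalLoss averages its output.

The second line changes the BCE's reduction from 'mean' to 'none'. The wrapped BCE then returns a per-element loss tensor, which FocalLoss can reweight element by element.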

```python
def forward(self, pred, true):
    loss = self.loss_fcn(pred, true)
```

These are the forward-pass arguments: pred is the prediction and true is the "label" used to guide training.

It is called like this:

```python
lobj += BCEobj(pi[..., 4], tobj)  # obj loss
```

Forward pass in detail

```python
pred_prob = torch.sigmoid(pred)  # prob from logits
p_t = true * pred_prob + (1 - true) * (1 - pred_prob)
alpha_factor = true * self.alpha + (1 - true) * (1 - self.alpha)
modulating_factor = (1.0 - p_t) ** self.gamma
loss *= alpha_factor * modulating_factor
```

pred_prob applies a sigmoid to the predicted logits (e.g., the objectness in pi), mapping them into (0, 1) as probabilities.

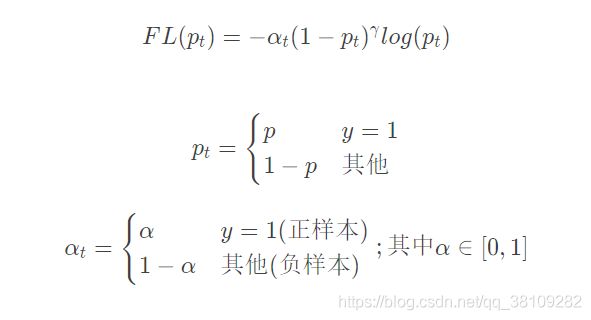

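The focal loss formula (shown in the figure above) is

$$FL(p_t)=-\alpha_t(1-p_t)^\gamma\log(p_t),\qquad p_t=\begin{cases}p & y=1\\ 1-p & y=0\end{cases}$$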

```python
p_t = true * pred_prob + (1 - true) * (1 - pred_prob)
```

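p_t is the probability the model assigns to the correct outcome: p for positive targets and 1 − p for negative ones. It corresponds to $p_t$ in the formula.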

```python
alpha_factor = true * self.alpha + (1 - true) * (1 - self.alpha)
```

alpha_factor balances the weight of positive and negative samples in the loss. It corresponds to $\alpha_t$ in the formula.

```python
modulating_factor = (1.0 - p_t) ** self.gamma
```

This corresponds to $(1-p_t)^\gamma$ in the formula. It down-weights easy samples, i.e., those whose $p_t$ is close to 1.

```python
loss *= alpha_factor * modulating_factor
```

alpha_factor * modulating_factor corresponds to $\alpha_t(1-p_t)^\gamma$ in the formula.

```python
loss = self.loss_fcn(pred, true)
```

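The wrapped BCE's reduction is 'none', so the returned loss has the same shape as pred. For objectness, pred is pi[..., 4] with shape (batch_size, anchor_num, grid_y, grid_x). For classification, pred is ps[:, 5:] with shape (targets_num, classes_num).

Each element is the binary cross-entropy of one prediction: one per anchor position for objectness, and one per class per target for classification.

The loss values correspond to $-\log(p_t)$ in the formula.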

```python
loss *= alpha_factor * modulating_factor
```

Multiplying the $\alpha_t(1-p_t)^\gamma$ computed above by $-\log(p_t)$ gives $FL(p_t)=-\alpha_t(1-p_t)^\gamma\log(p_t)$.
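Here is a scalar, pure-Python sketch of one element of FocalLoss.forward, using the same default gamma=1.5 and alpha=0.25. The function names are hypothetical and the logits are made up. It compares an easy negative with a hard negative. Focal loss keeps a much larger fraction of the BCE for the hard one, which is exactly the down-weighting of easy samples described above.

```python
import math


def bce_logits(x, y):
    # per-element BCEWithLogitsLoss: -[y*log σ(x) + (1-y)*log(1-σ(x))], stable form
    return max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))


def focal_loss_element(x, y, gamma=1.5, alpha=0.25):
    """One element of FocalLoss.forward: BCE scaled by alpha_t * (1 - p_t) ** gamma."""
    p = 0.5 * (1.0 + math.tanh(0.5 * x))  # sigmoid(x), overflow-free
    p_t = y * p + (1 - y) * (1 - p)
    alpha_factor = y * alpha + (1 - y) * (1 - alpha)
    modulating_factor = (1.0 - p_t) ** gamma
    return bce_logits(x, y) * alpha_factor * modulating_factor


easy = focal_loss_element(-4.0, 0.0) / bce_logits(-4.0, 0.0)  # background, predicted as background
hard = focal_loss_element(2.0, 0.0) / bce_logits(2.0, 0.0)    # background, predicted as object
print(f"fraction of BCE kept: easy={easy:.4f}, hard={hard:.4f}")
```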

```python
if self.reduction == 'mean':
    return loss.mean()
elif self.reduction == 'sum':
    return loss.sum()
else:  # 'none'
    return loss
```

With reduction='mean', the loss is averaged over all of its elements, giving a single scalar.

Back to the objectness and classification losses

```python
# Class
if model.nc > 1:  # cls loss (only if multiple classes)
    t = torch.full_like(ps[:, 5:], cn, device=device)  # targets
    t[range(nb), tcls[i]] = cp
    lcls += BCEcls(ps[:, 5:], t)  # BCE
```

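The classification loss is computed much like the objectness loss, with a few differences. It uses only the positive samples ps, and only when the model has more than one class (model.nc > 1). Its target t is a label-smoothed one-hot vector: cn for every class except the gt class, which gets cp.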
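Here is a pure-Python sketch of that class branch for two hypothetical targets and three classes. It builds the smoothed one-hot targets the same way as torch.full_like(..., cn) followed by t[range(nb), tcls[i]] = cp, then takes the mean BCE over all target-class pairs. The function name and values are illustrative only, and pos_weight is assumed to be 1.

```python
import math


def bce_logits(x, y):
    # per-element BCEWithLogitsLoss (pos_weight = 1), numerically stable form
    return max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))


def class_loss(cls_logits, tcls, nc, eps=0.1):
    """Smoothed one-hot targets plus mean BCE, mirroring the `if model.nc > 1` branch."""
    cp, cn = 1.0 - 0.5 * eps, 0.5 * eps  # smooth_BCE(eps)
    targets, losses = [], []
    for logits, c in zip(cls_logits, tcls):
        t = [cn] * nc  # torch.full_like(ps[:, 5:], cn)
        t[c] = cp      # t[range(nb), tcls[i]] = cp
        targets.append(t)
        losses.extend(bce_logits(x, y) for x, y in zip(logits, t))
    return targets, sum(losses) / len(losses)  # reduction='mean'


targets, loss = class_loss([[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]], tcls=[2, 0], nc=3)
print(targets)
print(loss)  # all-zero logits: every element costs log(2)
```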

Return value of compute_loss

```python
# multiply each loss by its weight
lbox *= h['giou']
lobj *= h['obj']
lcls *= h['cls']
# loss = lbox + lobj + lcls

return {"box_loss": lbox,
        "obj_loss": lobj,
        "class_loss": lcls}
```

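lbox (box loss), lobj (objectness loss) and lcls (classification loss) are each multiplied by a weight. These weights are hyperparameters tuned by the author and are usually left unchanged.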
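Here is a minimal sketch of that final step: weight each term, return them as a dict, and (as the commented-out line suggests) sum them into one scalar for backpropagation. The function name and the weights in hyp are placeholders for illustration, not the repository's tuned values.

```python
def weighted_losses(lbox, lobj, lcls, hyp):
    """Apply the per-term weights as at the end of compute_loss and also
    return their sum (the commented-out `loss = lbox + lobj + lcls`)."""
    out = {"box_loss": lbox * hyp["giou"],
           "obj_loss": lobj * hyp["obj"],
           "class_loss": lcls * hyp["cls"]}
    return out, sum(out.values())


hyp = {"giou": 2.0, "obj": 10.0, "cls": 5.0}  # placeholder weights, NOT the repository's values
out, total = weighted_losses(0.5, 0.1, 0.2, hyp)
print(out, total)
```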

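Points to note

In compute_loss's loop over the three YOLO layers, only the objectness loss lobj considers both positive and negative samples. The other losses are computed on positive samples only.

Because lobj must include the negatives, lobj += BCEobj(...) sits outside the if nb: block but still inside the loop. It therefore accumulates over all positions, positive and negative, even for layers with no targets.

One more point: build_targets filters the gts by width/height IoU (wh-IoU), so the resulting set of targets is incomplete. gts that were filtered out are never assigned to an anchor, so their tobj entries stay 0 and they are treated as negatives. This can inflate lobj slightly.

Some training tricks can partly offset this.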

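Next steps

The loss computation and the assignment of positive and negative samples are now basically covered.

Next, I'll look at how the prediction module processes the data.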