CNN Model Reproduction 12: Inception v1/v2/v3/v4

- 1.InceptionV1

- 1.1 Features of InceptionV1
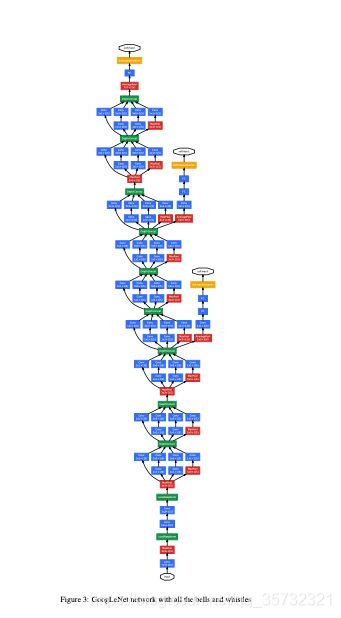

- 1.2 GoogLeNet structure

- 1.3 Experimental results

- 1.4 InceptionV1 code

- 2.InceptionV2

- 2.1 Structure

- 2.2 Code

- 3.InceptionV3

- 3.1 Structure

- 3.2 Code

- 4.InceptionV4

- 4.1 Code

1.InceptionV1

Paper: https://arxiv.org/pdf/1409.4842.pdf

1.1 Features of InceptionV1

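- Increases the number of channels in each stage without increasing the computational cost.

- Reduces the dimensionality of the input passed from one stage to the next (using 1×1 convolutions).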

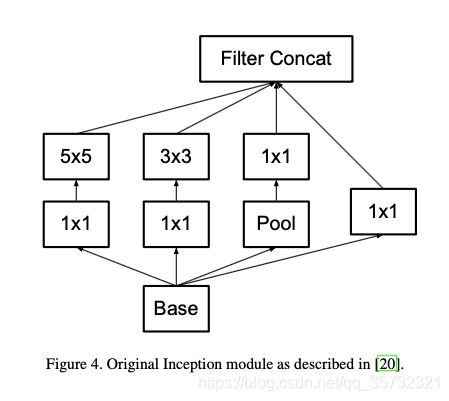

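- Within each stage, kernels of different sizes (1×1, 3×3, 5×5) extract features at different scales in parallel, which strengthens feature extraction.

- Increases both the width and the depth of the network while making better use of the available computation.

- According to the paper, networks built from Inception modules are 2 to 3 times faster than similarly performing non-Inception architectures.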
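A rough worked example of the dimension-reduction point, assuming the inception (3a) values from the GoogLeNet table (28×28×192 input, 16 filters in "#5×5 reduce", 32 filters in "#5×5") and counting only convolution multiply-accumulates (no biases, activations or pooling). `conv_macs` is a hypothetical helper written for this illustration, not part of any library:

```python
def conv_macs(h, w, c_in, c_out, k):
    """Multiply-accumulates of a stride-1 'same' k x k convolution on an h x w x c_in input."""
    return h * w * c_out * k * k * c_in


# 5x5 branch of inception (3a) without a 1x1 reduction: 192 -> 32 channels directly
direct = conv_macs(28, 28, 192, 32, 5)

# Same branch with the 1x1 "reduce" layer: 192 -> 16 (1x1), then 16 -> 32 (5x5)
reduced = conv_macs(28, 28, 192, 16, 1) + conv_macs(28, 28, 16, 32, 5)

print(f"direct : {direct:,} MACs")
print(f"reduced: {reduced:,} MACs")
print(f"saving : {direct / reduced:.1f}x")
```

With these numbers the 5×5 branch drops from about 120.4M to about 12.4M multiply-accumulates, roughly 9.7 times fewer. This kind of saving is what allows each stage to become wider.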

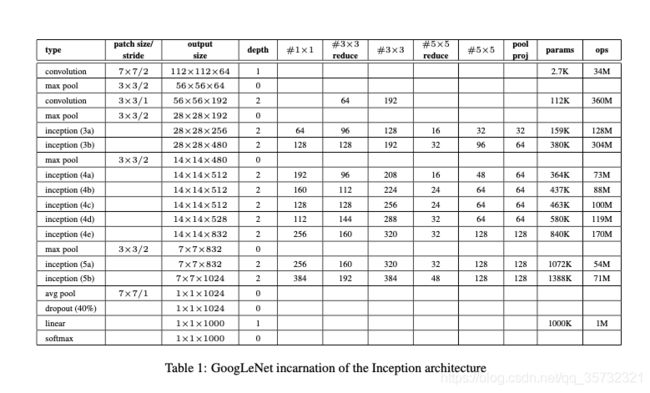

1.2 GoogLeNet structure

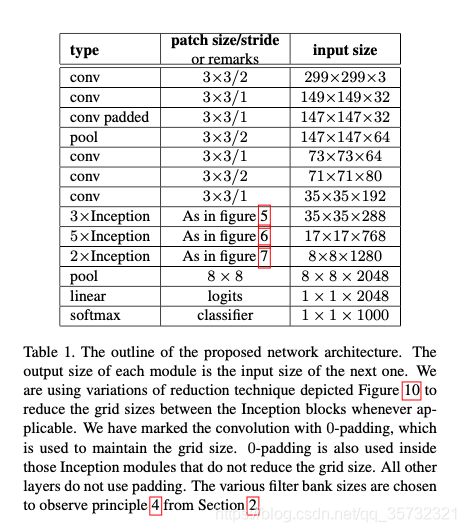

- GoogLeNet incarnation of the Inception architecture

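a. Input: 224×224×3 (RGB image)

b. "#3×3 reduce" and "#5×5 reduce" give the number of 1×1 filters in the reduction layers placed before the 3×3 and 5×5 convolutions.

c. The "pool proj" column gives the number of 1×1 filters in the projection layer that follows the max pooling inside each module.

d. All convolutions, including those inside the Inception modules, use rectified linear activation (ReLU).

- GoogLeNet auxiliary classifiers and training setup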

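a. Average pooling with pool_size=5 and strides=3; the output is 4×4×512 on stage (4a) and 4×4×528 on stage (4d).

b. Conv(1×1, filters=128) + ReLU for dimension reduction.

c. Dense(units=1024) + ReLU.

d. Dropout(rate=0.7).

e. Linear layer with softmax over the 1000 classes.

f. Optimizer: SGD with momentum=0.9.

g. The learning rate is decreased by 4% every 8 epochs.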
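A minimal Keras sketch of the auxiliary classifier (items a to e) and the training settings (items f and g). Assumptions: the head is attached to the 14×14×512 output of stage (4a); `auxiliary_head` and `step_decay` are hypothetical names; the base learning rate of 0.01 is a placeholder, since the list above does not give a starting value; and the 4% decay is implemented as a step schedule applied through `keras.callbacks.LearningRateScheduler`.

```python
import keras
from keras import layers


def auxiliary_head(feature_map, num_classes=1000):
    # a. 5x5 average pooling with stride 3 ('valid'): 14x14 -> 4x4
    x = layers.AveragePooling2D(pool_size=5, strides=3)(feature_map)
    # b. 1x1 conv with 128 filters + ReLU for dimension reduction
    x = layers.Conv2D(128, 1, activation="relu")(x)
    # c. fully connected layer with 1024 units + ReLU
    x = layers.Flatten()(x)
    x = layers.Dense(1024, activation="relu")(x)
    # d. dropout that drops 70% of the activations
    x = layers.Dropout(0.7)(x)
    # e. linear layer + softmax over the classes
    return layers.Dense(num_classes, activation="softmax")(x)


def step_decay(epoch, lr=None, base_lr=0.01):
    # g. lower the learning rate by 4% every 8 epochs (base_lr is an assumed value)
    return base_lr * 0.96 ** (epoch // 8)


# Output of GoogLeNet stage (4a): 14x14x512
inputs = keras.Input(shape=(14, 14, 512))
aux_model = keras.Model(inputs, auxiliary_head(inputs), name="aux_classifier")

# f. SGD with momentum 0.9; the schedule is applied per epoch by the callback
optimizer = keras.optimizers.SGD(learning_rate=step_decay(0), momentum=0.9)
lr_callback = keras.callbacks.LearningRateScheduler(step_decay)
aux_model.compile(optimizer=optimizer, loss="categorical_crossentropy")
```

Passing `lr_callback` to `fit(..., callbacks=[lr_callback])` would apply the schedule at the start of every epoch.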

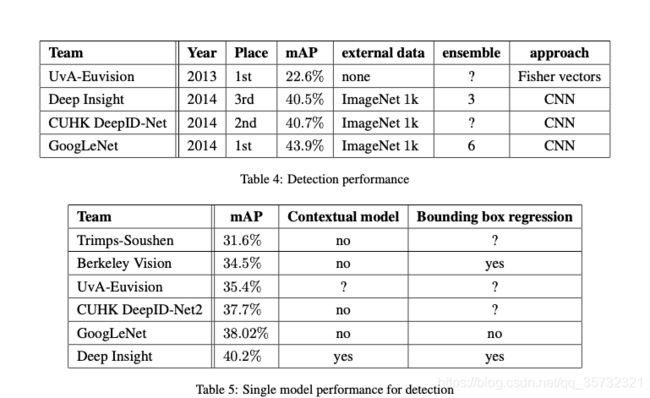

1.3 Experimental results

1.4 InceptionV1 code

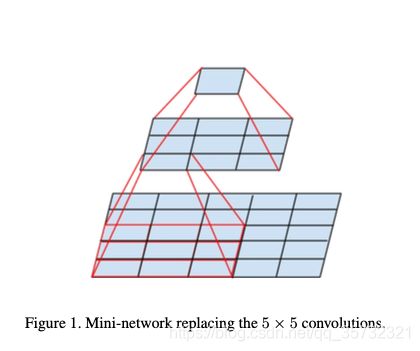
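The code for this section is only available as an image. As a rough stand-in, here is a minimal Keras sketch of a single Inception module whose arguments mirror the table columns (#1×1, #3×3 reduce, #3×3, #5×5 reduce, #5×5, pool proj). It assumes 'same' padding everywhere so that the four branches keep the same height and width and can be concatenated, and it omits batch normalization, which GoogLeNet did not use. The function name `inception_module` is hypothetical.

```python
import keras
from keras import layers


def inception_module(x, f1, f3_reduce, f3, f5_reduce, f5, pool_proj):
    """One GoogLeNet-style Inception module; arguments follow the table columns."""
    # Branch 1: 1x1 convolution
    b1 = layers.Conv2D(f1, 1, padding="same", activation="relu")(x)
    # Branch 2: 1x1 reduction, then 3x3 convolution
    b2 = layers.Conv2D(f3_reduce, 1, padding="same", activation="relu")(x)
    b2 = layers.Conv2D(f3, 3, padding="same", activation="relu")(b2)
    # Branch 3: 1x1 reduction, then 5x5 convolution
    b3 = layers.Conv2D(f5_reduce, 1, padding="same", activation="relu")(x)
    b3 = layers.Conv2D(f5, 5, padding="same", activation="relu")(b3)
    # Branch 4: 3x3 max pooling (stride 1), then 1x1 projection ("pool proj")
    b4 = layers.MaxPooling2D(3, strides=1, padding="same")(x)
    b4 = layers.Conv2D(pool_proj, 1, padding="same", activation="relu")(b4)
    # Stack the four branches along the channel axis
    return layers.Concatenate(axis=-1)([b1, b2, b3, b4])


# inception (3a): 28x28x192 input, output channels = 64 + 128 + 32 + 32 = 256
inputs = keras.Input(shape=(28, 28, 192))
outputs = inception_module(inputs, 64, 96, 128, 16, 32, 32)
model = keras.Model(inputs, outputs, name="inception_3a")
print(outputs.shape)  # (None, 28, 28, 256)
```

The output channel count of a module is simply the sum of the #1×1, #3×3, #5×5 and pool proj columns, because the branches are concatenated rather than added.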

2.InceptionV2

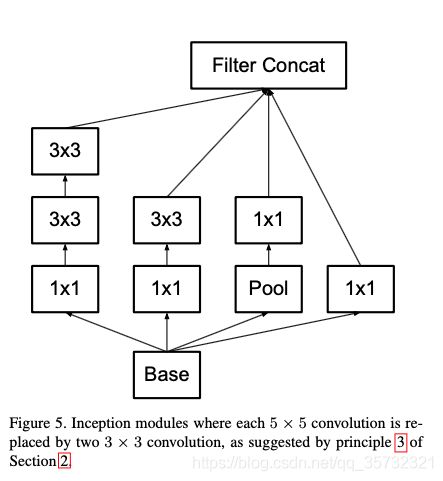

2.1 Structure

2.2 Code

3.InceptionV3

3.1 Structure

3.2 Code

4.InceptionV4

4.1 Code

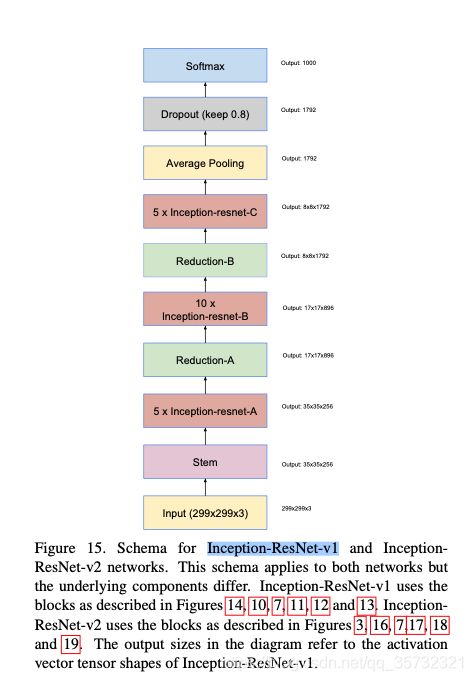

- Pipeline

input

↓

Stem

↓

Inception-resnet-A * 5

↓

Reduction-A

↓

Inception-resnet-B * 10

↓

Reduction-B

↓

Inception-resnet-C * 5

↓

AvgPooling

↓

Dropout (keep probability 0.8, i.e. dropout rate 0.2)

↓

softmax

- Stem

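(1) Stem structure (notation: Conv(filters, kernel, stride, v), where the stride defaults to 1, v means 'valid' padding, and the output shape is given in parentheses)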

input (160*160*3)

↓

Conv(32,3*3,2,v) (79*79*32)

↓

Conv(32,3*3,v) (77*77*32)

↓

Conv(64,3*3) (77*77*64)

↓

MaxPool(s=2,v) (38*38*64)

↓

Conv(80,1*1) (38*38*80)

↓

Conv(192,3*3,v) (36*36*192)

↓

Conv(256,3*3,2,v) (17*17*256)

(2) Stem code

```python
inputs = Input(shape=input_shape)
# 160,160,3 -> 77,77,64
x = conv2d_bn(inputs, 32, 3, strides=2, padding='valid')  # ceil((160-3+1)/2) = 79
x = conv2d_bn(x, 32, 3, padding='valid')                  # 79-3+1 = 77
x = conv2d_bn(x, 64, 3)                                   # 'same' padding, stride 1: stays 77
# 77,77,64 -> 38,38,64
x = MaxPooling2D(3, strides=2)(x)                         # (77-3)//2+1 = 38
# 38,38,64 -> 17,17,256
x = conv2d_bn(x, 80, 1, padding='valid')                  # 38-1+1 = 38
x = conv2d_bn(x, 192, 3, padding='valid')                 # 38-3+1 = 36
x = conv2d_bn(x, 256, 3, strides=2, padding='valid')      # ceil((36-3+1)/2) = 17
```
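The shape comments in the stem code follow the TensorFlow/Keras padding rules: 'valid' gives out = ceil((n - k + 1) / s), and 'same' gives out = ceil(n / s). A small pure-Python sketch that replays the stem on a 160×160 input; the helper `conv_out` and the layer table are my own illustration:

```python
import math


def conv_out(n, k, s=1, padding="valid"):
    """Output length of a conv/pool layer under TensorFlow/Keras padding rules."""
    if padding == "valid":
        return math.ceil((n - k + 1) / s)
    return math.ceil(n / s)  # 'same'


# (description, kernel, stride, padding) for each stem layer
stem = [
    ("Conv 32, 3x3, s2, valid", 3, 2, "valid"),
    ("Conv 32, 3x3, valid", 3, 1, "valid"),
    ("Conv 64, 3x3, same", 3, 1, "same"),
    ("MaxPool 3x3, s2, valid", 3, 2, "valid"),
    ("Conv 80, 1x1, valid", 1, 1, "valid"),
    ("Conv 192, 3x3, valid", 3, 1, "valid"),
    ("Conv 256, 3x3, s2, valid", 3, 2, "valid"),
]

size = 160
sizes = []
for name, k, s, pad in stem:
    size = conv_out(size, k, s, pad)
    sizes.append(size)
    print(f"{name:26s} -> {size}x{size}")
```

The same helper also reproduces the later reductions: a 3×3 stride-2 'valid' layer maps 17 to 8 (Reduction-A) and 8 to 3 (Reduction-B).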

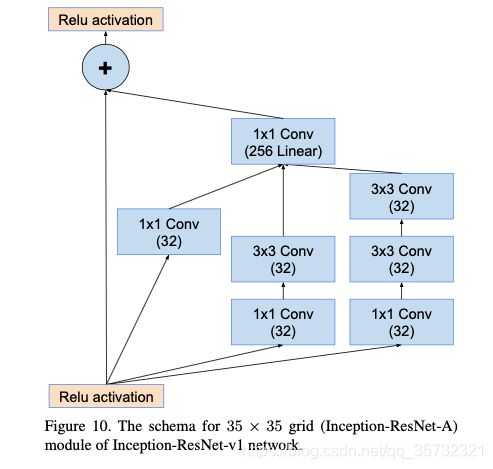

input

↓

input->conv(32,1)->p1

input->conv(32,1)->conv(32,3)->p2

input->conv(32,1)->conv(32,3)->conv(32,3)->p3

↓

concatenate([p1,p2,p3])->conv(256,1) + input->Relu

(2) Inception-resnet-A code

```python
# Three parallel branches: 1x1 | 1x1 -> 3x3 | 1x1 -> 3x3 -> 3x3 (32 filters each)
branch_0 = conv2d_bn(x, 32, 1)
branch_1 = conv2d_bn(x, 32, 1)
branch_1 = conv2d_bn(branch_1, 32, 3)
branch_2 = conv2d_bn(x, 32, 1)
branch_2 = conv2d_bn(branch_2, 32, 3)
branch_2 = conv2d_bn(branch_2, 32, 3)
branches = [branch_0, branch_1, branch_2]
mixed = Concatenate(axis=channel_axis)(branches)
# Linear 1x1 conv back to the input channel count (256), then scale the residual
up = conv2d_bn(mixed, K.int_shape(x)[channel_axis], 1, activation=None, use_bias=True)
up = Lambda(scaling,
            output_shape=K.int_shape(up)[1:],
            arguments={'scale': scale})(up)
# Residual connection followed by the activation (ReLU)
x = add([x, up])
if activation is not None:
    x = Activation(activation)(x)
```
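The `Lambda(scaling, ...)` plus `add` step computes `x + scale * up` and then applies the activation. The Inception-v4 paper reports that scaling the residuals down before adding them (the code uses 0.17 for block A, 0.1 for block B and 0.2 for block C) helped stabilize training of very wide residual Inception networks. A NumPy sketch of just this merge step, with random arrays standing in for real feature maps; `scaled_residual` is a hypothetical name:

```python
import numpy as np


def scaled_residual(x, up, scale, activation="relu"):
    """Merge step of an Inception-ResNet block: x + scale * up, then optional ReLU."""
    if x.shape != up.shape:
        raise ValueError("the 1x1 'up' conv must restore the input channel count")
    out = x + scale * up
    if activation == "relu":
        out = np.maximum(out, 0.0)
    return out


rng = np.random.default_rng(0)
x = rng.standard_normal((17, 17, 256))   # Block35 input: 17x17x256
up = rng.standard_normal((17, 17, 256))  # stand-in for the linear 1x1 conv output
y = scaled_residual(x, up, scale=0.17)
print(y.shape, float(y.min()) >= 0.0)  # (17, 17, 256) True
```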

- Reduction-A

(1) Reduction-A structure

input

↓

input->conv(384,3,s=2,v)->p1

input->conv(192,1)->conv(192,3)->conv(256,3,2,v)->p2

input->MaxPool(3,s=2,v)->p3

↓

concatenate([p1,p2,p3])

(2) Reduction-A code

```python
# Reduction-A block: 17,17,256 -> 8,8,896
name_fmt = partial(_generate_layer_name, prefix='Mixed_6a')
branch_0 = conv2d_bn(x, 384, 3, strides=2, padding='valid', name=name_fmt('Conv2d_1a_3x3', 0))
branch_1 = conv2d_bn(x, 192, 1, name=name_fmt('Conv2d_0a_1x1', 1))
branch_1 = conv2d_bn(branch_1, 192, 3, name=name_fmt('Conv2d_0b_3x3', 1))
branch_1 = conv2d_bn(branch_1, 256, 3, strides=2, padding='valid', name=name_fmt('Conv2d_1a_3x3', 1))
branch_pool = MaxPooling2D(3, strides=2, padding='valid', name=name_fmt('MaxPool_1a_3x3', 2))(x)
branches = [branch_0, branch_1, branch_pool]
x = Concatenate(axis=channel_axis, name='Mixed_6a')(branches)
```
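Why Reduction-A outputs 8×8×896: every branch ends in a 3×3 stride-2 'valid' layer, so all three shrink 17×17 to 8×8 and can be concatenated, and the channels add up as 384 + 256 + 256 (the max-pool branch keeps the 256 input channels). A pure-Python check using the Inception-v4 paper's filter naming for Inception-ResNet-v1 (m=256, n=384); `reduction_a_shape` is a hypothetical helper:

```python
import math


def valid_out(n, k=3, s=2):
    # TensorFlow/Keras 'valid' padding: ceil((n - k + 1) / s)
    return math.ceil((n - k + 1) / s)


def reduction_a_shape(h, c_in, m=256, n=384):
    """Output (height, width, channels) of Reduction-A for an h x h x c_in input."""
    branch_0 = (valid_out(h), n)        # 3x3 conv, n filters, stride 2
    branch_1 = (valid_out(h), m)        # 1x1 -> 3x3 ('same') -> 3x3 stride 2 with m filters
    branch_pool = (valid_out(h), c_in)  # 3x3 max pool, stride 2, keeps the input channels
    sizes = {b[0] for b in (branch_0, branch_1, branch_pool)}
    if len(sizes) != 1:
        raise ValueError("branches must agree spatially to be concatenated")
    size = sizes.pop()
    return size, size, branch_0[1] + branch_1[1] + branch_pool[1]


print(reduction_a_shape(17, 256))  # (8, 8, 896)
```

The widths of the two intermediate layers in branch 1 (192 each) do not affect the output shape, so they are left out of the helper.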

- Inception-resnet-B

(1) Inception-resnet-B structure

input

↓

input->conv(128,1)->p1

input->conv(128,1)->conv(128,1*7)->conv(128,7*1)->p2

↓

concatenate([p1,p2])->conv(896,1) + input->Relu

(2) Inception-resnet-B code

```python
# Two branches: 1x1 | 1x1 -> 1x7 -> 7x1 (128 filters each)
branch_0 = conv2d_bn(x, 128, 1, name=name_fmt('Conv2d_1x1', 0))
branch_1 = conv2d_bn(x, 128, 1, name=name_fmt('Conv2d_0a_1x1', 1))
branch_1 = conv2d_bn(branch_1, 128, [1, 7], name=name_fmt('Conv2d_0b_1x7', 1))
branch_1 = conv2d_bn(branch_1, 128, [7, 1], name=name_fmt('Conv2d_0c_7x1', 1))
branches = [branch_0, branch_1]
mixed = Concatenate(axis=channel_axis, name=name_fmt('Concatenate'))(branches)
# Linear 1x1 conv back to the input channel count (896), then scale the residual
up = conv2d_bn(mixed, K.int_shape(x)[channel_axis], 1, activation=None, use_bias=True,
               name=name_fmt('Conv2d_1x1'))
up = Lambda(scaling,
            output_shape=K.int_shape(up)[1:],
            arguments={'scale': scale})(up)
# Residual connection followed by the activation (ReLU)
x = add([x, up])
if activation is not None:
    x = Activation(activation, name=name_fmt('Activation'))(x)
```
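Block B replaces a 7×7 convolution with a 1×7 convolution followed by a 7×1 convolution. A rough weight count for the 128-to-128-channel case used here, ignoring biases and BatchNorm parameters; `conv_weights` is a hypothetical helper:

```python
def conv_weights(kh, kw, c_in, c_out):
    """Number of kernel weights in a kh x kw convolution (bias and BN ignored)."""
    return kh * kw * c_in * c_out


full_7x7 = conv_weights(7, 7, 128, 128)
factorized = conv_weights(1, 7, 128, 128) + conv_weights(7, 1, 128, 128)

print(full_7x7, factorized, full_7x7 / factorized)  # 802816 229376 3.5
```

Both versions cover a 7×7 receptive field. Because each factorized step goes through `conv2d_bn`, the pair also inserts an extra BatchNorm and ReLU between the two convolutions.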

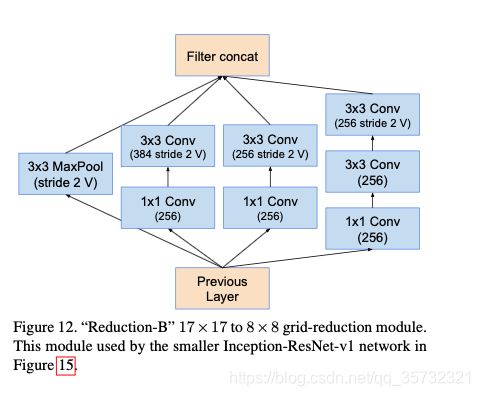

input

↓

input->conv(256,1)->conv(384,3,s=2,v)->p1

input->conv(256,1)->conv(256,3,2,v)->p2

input->conv(256,1)->conv(256,3)->conv(256,3,2,v)->p3

input->MaxPool(3,2,v)->p4

↓

concatenate([p1,p2,p3,p4])

(2) Reduction-B code

```python
# Reduction-B block: 8,8,896 -> 3,3,1792
name_fmt = partial(_generate_layer_name, prefix='Mixed_7a')
branch_0 = conv2d_bn(x, 256, 1, name=name_fmt('Conv2d_0a_1x1', 0))
branch_0 = conv2d_bn(branch_0, 384, 3, strides=2, padding='valid', name=name_fmt('Conv2d_1a_3x3', 0))
branch_1 = conv2d_bn(x, 256, 1, name=name_fmt('Conv2d_0a_1x1', 1))
branch_1 = conv2d_bn(branch_1, 256, 3, strides=2, padding='valid', name=name_fmt('Conv2d_1a_3x3', 1))
branch_2 = conv2d_bn(x, 256, 1, name=name_fmt('Conv2d_0a_1x1', 2))
branch_2 = conv2d_bn(branch_2, 256, 3, name=name_fmt('Conv2d_0b_3x3', 2))
branch_2 = conv2d_bn(branch_2, 256, 3, strides=2, padding='valid', name=name_fmt('Conv2d_1a_3x3', 2))
branch_pool = MaxPooling2D(3, strides=2, padding='valid', name=name_fmt('MaxPool_1a_3x3', 3))(x)
branches = [branch_0, branch_1, branch_2, branch_pool]
x = Concatenate(axis=channel_axis, name='Mixed_7a')(branches)
```
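A self-contained Keras sketch of Reduction-B that can be run on its own to confirm the 8×8×896 to 3×3×1792 shape change. To stay short it swaps `conv2d_bn` for a hypothetical `conv` helper (Conv2D + ReLU, no batch normalization) and drops the layer names, so it is not weight-compatible with the full `InceptionResNetV1` code.

```python
import keras
from keras import layers


def conv(x, filters, kernel, strides=1, padding="same"):
    # Simplified stand-in for conv2d_bn: Conv2D + ReLU, no batch normalization
    return layers.Conv2D(filters, kernel, strides=strides, padding=padding,
                         activation="relu")(x)


def reduction_b(x):
    # Every branch ends with a 3x3 stride-2 'valid' layer, so all reach 3x3
    b0 = conv(conv(x, 256, 1), 384, 3, strides=2, padding="valid")
    b1 = conv(conv(x, 256, 1), 256, 3, strides=2, padding="valid")
    b2 = conv(conv(conv(x, 256, 1), 256, 3), 256, 3, strides=2, padding="valid")
    bp = layers.MaxPooling2D(3, strides=2, padding="valid")(x)
    # Channels: 384 + 256 + 256 + 896 (pooled input) = 1792
    return layers.Concatenate(axis=-1)([b0, b1, b2, bp])


inputs = keras.Input(shape=(8, 8, 896))
outputs = reduction_b(inputs)
model = keras.Model(inputs, outputs, name="reduction_b_sketch")
print(outputs.shape)
```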

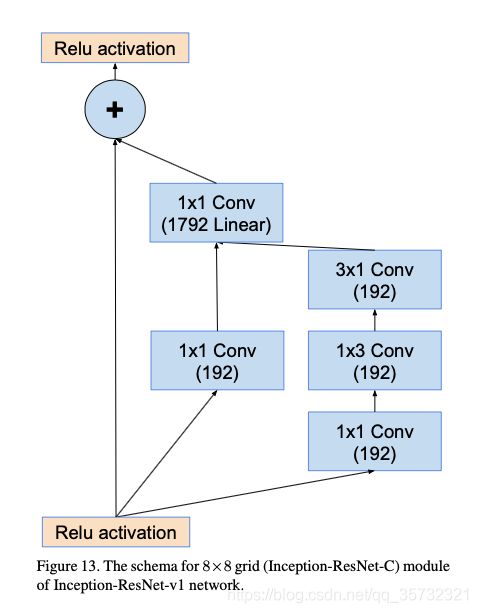

input

↓

input->conv(192,1)->p1

input->conv(192,1)->conv(192,1*3)->conv(192,3*1)->p2

↓

concatenate([p1,p2])->conv(1792,1) + input->Relu

(2) Inception-resnet-C code

```python
# Two branches: 1x1 | 1x1 -> 1x3 -> 3x1 (192 filters each)
branch_0 = conv2d_bn(x, 192, 1, name=name_fmt('Conv2d_1x1', 0))
branch_1 = conv2d_bn(x, 192, 1, name=name_fmt('Conv2d_0a_1x1', 1))
branch_1 = conv2d_bn(branch_1, 192, [1, 3], name=name_fmt('Conv2d_0b_1x3', 1))
branch_1 = conv2d_bn(branch_1, 192, [3, 1], name=name_fmt('Conv2d_0c_3x1', 1))
branches = [branch_0, branch_1]
mixed = Concatenate(axis=channel_axis, name=name_fmt('Concatenate'))(branches)
# Linear 1x1 conv back to the input channel count (1792), then scale the residual
up = conv2d_bn(mixed, K.int_shape(x)[channel_axis], 1, activation=None, use_bias=True,
               name=name_fmt('Conv2d_1x1'))
up = Lambda(scaling,
            output_shape=K.int_shape(up)[1:],
            arguments={'scale': scale})(up)
# Residual connection followed by the activation (ReLU)
x = add([x, up])
if activation is not None:
    x = Activation(activation, name=name_fmt('Activation'))(x)
```
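In every residual block the `up` layer is a 1×1 convolution with `K.int_shape(x)[channel_axis]` filters: it maps the concatenated branches back to the input width so that `add([x, up])` is shape-compatible. For Block8 that means 192 + 192 = 384 channels projected to 1792. A 1×1 convolution applies the same matrix multiply at every pixel, which this NumPy sketch checks against an explicit per-pixel loop (random weights, no bias; array names are illustrative):

```python
import numpy as np

rng = np.random.default_rng(0)
mixed = rng.standard_normal((3, 3, 384))         # concatenation of the two 192-channel branches
kernel = rng.standard_normal((1, 1, 384, 1792))  # 1x1 kernel in Keras (kh, kw, c_in, c_out) layout

# 1x1 convolution written as a contraction over the channel axis
up = np.einsum("hwc,cd->hwd", mixed, kernel[0, 0])

# Reference: apply the same 384x1792 matrix to each pixel separately
up_loop = np.empty((3, 3, 1792))
for i in range(3):
    for j in range(3):
        up_loop[i, j] = mixed[i, j] @ kernel[0, 0]

print(up.shape, np.allclose(up, up_loop))  # (3, 3, 1792) True
```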

- Inception-ResNetV1 network

(1) Inception-ResNetV1 network code

```python
from functools import partial

from keras.models import Model
from keras.layers import Activation
from keras.layers import BatchNormalization
from keras.layers import Concatenate
from keras.layers import Conv2D
from keras.layers import Dense
from keras.layers import Dropout
from keras.layers import GlobalAveragePooling2D
from keras.layers import Input
from keras.layers import Lambda
from keras.layers import MaxPooling2D
from keras.layers import add
from keras import backend as K


def scaling(x, scale):
    # Scale the residual branch before it is added to the block input
    return x * scale


def _generate_layer_name(name, branch_idx=None, prefix=None):
    # e.g. 'Block35_1_Branch_0_Conv2d_1x1'; returning None lets Keras choose a name
    if prefix is None:
        return None
    if branch_idx is None:
        return '_'.join((prefix, name))
    return '_'.join((prefix, 'Branch', str(branch_idx), name))


def conv2d_bn(x, filters, kernel_size, strides=1, padding='same',
              activation='relu', use_bias=False, name=None):
    # Conv2D -> BatchNorm (only for bias-free convs) -> activation
    x = Conv2D(filters,
               kernel_size,
               strides=strides,
               padding=padding,
               use_bias=use_bias,
               name=name)(x)
    if not use_bias:
        x = BatchNormalization(axis=3, momentum=0.995, epsilon=0.001, scale=False,
                               name=_generate_layer_name('BatchNorm', prefix=name))(x)
    if activation is not None:
        x = Activation(activation,
                       name=_generate_layer_name('Activation', prefix=name))(x)
    return x


def _inception_resnet_block(x, scale, block_type, block_idx, activation='relu'):
    channel_axis = 3
    if block_idx is None:
        prefix = None
    else:
        prefix = '_'.join((block_type, str(block_idx)))
    name_fmt = partial(_generate_layer_name, prefix=prefix)

    if block_type == 'Block35':  # Inception-ResNet-A
        branch_0 = conv2d_bn(x, 32, 1, name=name_fmt('Conv2d_1x1', 0))
        branch_1 = conv2d_bn(x, 32, 1, name=name_fmt('Conv2d_0a_1x1', 1))
        branch_1 = conv2d_bn(branch_1, 32, 3, name=name_fmt('Conv2d_0b_3x3', 1))
        branch_2 = conv2d_bn(x, 32, 1, name=name_fmt('Conv2d_0a_1x1', 2))
        branch_2 = conv2d_bn(branch_2, 32, 3, name=name_fmt('Conv2d_0b_3x3', 2))
        branch_2 = conv2d_bn(branch_2, 32, 3, name=name_fmt('Conv2d_0c_3x3', 2))
        branches = [branch_0, branch_1, branch_2]
    elif block_type == 'Block17':  # Inception-ResNet-B
        branch_0 = conv2d_bn(x, 128, 1, name=name_fmt('Conv2d_1x1', 0))
        branch_1 = conv2d_bn(x, 128, 1, name=name_fmt('Conv2d_0a_1x1', 1))
        branch_1 = conv2d_bn(branch_1, 128, [1, 7], name=name_fmt('Conv2d_0b_1x7', 1))
        branch_1 = conv2d_bn(branch_1, 128, [7, 1], name=name_fmt('Conv2d_0c_7x1', 1))
        branches = [branch_0, branch_1]
    elif block_type == 'Block8':  # Inception-ResNet-C
        branch_0 = conv2d_bn(x, 192, 1, name=name_fmt('Conv2d_1x1', 0))
        branch_1 = conv2d_bn(x, 192, 1, name=name_fmt('Conv2d_0a_1x1', 1))
        branch_1 = conv2d_bn(branch_1, 192, [1, 3], name=name_fmt('Conv2d_0b_1x3', 1))
        branch_1 = conv2d_bn(branch_1, 192, [3, 1], name=name_fmt('Conv2d_0c_3x1', 1))
        branches = [branch_0, branch_1]
    else:
        raise ValueError('Unknown block_type: ' + str(block_type))

    mixed = Concatenate(axis=channel_axis, name=name_fmt('Concatenate'))(branches)
    # Linear 1x1 conv that restores the input channel count so the residual add works
    up = conv2d_bn(mixed, K.int_shape(x)[channel_axis], 1, activation=None,
                   use_bias=True, name=name_fmt('Conv2d_1x1'))
    up = Lambda(scaling,
                output_shape=K.int_shape(up)[1:],
                arguments={'scale': scale})(up)
    x = add([x, up])
    if activation is not None:
        x = Activation(activation, name=name_fmt('Activation'))(x)
    return x


def InceptionResNetV1(input_shape=(160, 160, 3),
                      classes=128,
                      dropout_keep_prob=0.8):
    channel_axis = 3
    inputs = Input(shape=input_shape)

    # Stem: 160,160,3 -> 77,77,64
    x = conv2d_bn(inputs, 32, 3, strides=2, padding='valid', name='Conv2d_1a_3x3')
    x = conv2d_bn(x, 32, 3, padding='valid', name='Conv2d_2a_3x3')
    x = conv2d_bn(x, 64, 3, name='Conv2d_2b_3x3')
    # 77,77,64 -> 38,38,64
    x = MaxPooling2D(3, strides=2, name='MaxPool_3a_3x3')(x)
    # 38,38,64 -> 17,17,256
    x = conv2d_bn(x, 80, 1, padding='valid', name='Conv2d_3b_1x1')
    x = conv2d_bn(x, 192, 3, padding='valid', name='Conv2d_4a_3x3')
    x = conv2d_bn(x, 256, 3, strides=2, padding='valid', name='Conv2d_4b_3x3')

    # 5x Block35 (Inception-ResNet-A block)
    for block_idx in range(1, 6):
        x = _inception_resnet_block(x, scale=0.17, block_type='Block35', block_idx=block_idx)

    # Reduction-A block: 17,17,256 -> 8,8,896
    name_fmt = partial(_generate_layer_name, prefix='Mixed_6a')
    branch_0 = conv2d_bn(x, 384, 3, strides=2, padding='valid', name=name_fmt('Conv2d_1a_3x3', 0))
    branch_1 = conv2d_bn(x, 192, 1, name=name_fmt('Conv2d_0a_1x1', 1))
    branch_1 = conv2d_bn(branch_1, 192, 3, name=name_fmt('Conv2d_0b_3x3', 1))
    branch_1 = conv2d_bn(branch_1, 256, 3, strides=2, padding='valid', name=name_fmt('Conv2d_1a_3x3', 1))
    branch_pool = MaxPooling2D(3, strides=2, padding='valid', name=name_fmt('MaxPool_1a_3x3', 2))(x)
    branches = [branch_0, branch_1, branch_pool]
    x = Concatenate(axis=channel_axis, name='Mixed_6a')(branches)

    # 10x Block17 (Inception-ResNet-B block)
    for block_idx in range(1, 11):
        x = _inception_resnet_block(x, scale=0.1, block_type='Block17', block_idx=block_idx)

    # Reduction-B block: 8,8,896 -> 3,3,1792
    name_fmt = partial(_generate_layer_name, prefix='Mixed_7a')
    branch_0 = conv2d_bn(x, 256, 1, name=name_fmt('Conv2d_0a_1x1', 0))
    branch_0 = conv2d_bn(branch_0, 384, 3, strides=2, padding='valid', name=name_fmt('Conv2d_1a_3x3', 0))
    branch_1 = conv2d_bn(x, 256, 1, name=name_fmt('Conv2d_0a_1x1', 1))
    branch_1 = conv2d_bn(branch_1, 256, 3, strides=2, padding='valid', name=name_fmt('Conv2d_1a_3x3', 1))
    branch_2 = conv2d_bn(x, 256, 1, name=name_fmt('Conv2d_0a_1x1', 2))
    branch_2 = conv2d_bn(branch_2, 256, 3, name=name_fmt('Conv2d_0b_3x3', 2))
    branch_2 = conv2d_bn(branch_2, 256, 3, strides=2, padding='valid', name=name_fmt('Conv2d_1a_3x3', 2))
    branch_pool = MaxPooling2D(3, strides=2, padding='valid', name=name_fmt('MaxPool_1a_3x3', 3))(x)
    branches = [branch_0, branch_1, branch_2, branch_pool]
    x = Concatenate(axis=channel_axis, name='Mixed_7a')(branches)

    # 5x Block8 (Inception-ResNet-C block), then one final linear Block8 without activation
    for block_idx in range(1, 6):
        x = _inception_resnet_block(x, scale=0.2, block_type='Block8', block_idx=block_idx)
    x = _inception_resnet_block(x, scale=1., activation=None, block_type='Block8', block_idx=6)

    # Global average pooling: 3,3,1792 -> 1792
    x = GlobalAveragePooling2D(name='AvgPool')(x)
    # Dropout rate = 1 - keep probability
    x = Dropout(1.0 - dropout_keep_prob, name='Dropout')(x)
    # Fully connected bottleneck down to `classes` (128) dimensions
    x = Dense(classes, use_bias=False, name='Bottleneck')(x)
    bn_name = _generate_layer_name('BatchNorm', prefix='Bottleneck')
    x = BatchNormalization(momentum=0.995, epsilon=0.001, scale=False, name=bn_name)(x)

    # Build the model
    model = Model(inputs, x, name='inception_resnet_v1')
    return model
```