TensorFlow 1.14 (1. Getting Started)

Default graph and custom graphs

import tensorflow as tf

a = tf.constant(1)
b = tf.constant(3)
c = tf.constant(5, name="name1")
d = tf.constant(7, name="name1")  # name: the operation's name; can be set by the caller
e = a + b + c + d

print(a, a.graph)
print(b, b.graph)
print(c, c.graph)
print(d, d.graph)
print(e, e.graph)

with tf.Session() as sess:
    e_value = sess.run(e)
    print(e_value)
    print(sess.graph)

# Custom graph ------------------------------------------------------------
my_g = tf.Graph()

with my_g.as_default():
    my_a = tf.constant(10)
    my_b = tf.constant(30)
    my_c = tf.constant(50, name="name1")
    my_d = tf.constant(70, name="name1")  # name: the operation's name; can be set by the caller
    my_e = my_a + my_b + my_c + my_d

print(my_a, my_a.graph)
print(my_b, my_b.graph)
print(my_c, my_c.graph)
print(my_d, my_d.graph)
print(my_e, my_e.graph)

with tf.Session(graph=my_g) as my_sess:
    my_e_value = my_sess.run(my_e)
    print(my_e_value)
    print(my_sess.graph)

Tensor("Const:0", shape=(), dtype=int32)

Tensor("Const_1:0", shape=(), dtype=int32)

Tensor("name1:0", shape=(), dtype=int32)

Tensor("name1_1:0", shape=(), dtype=int32)

Tensor("add_2:0", shape=(), dtype=int32)

16

Tensor("Const:0", shape=(), dtype=int32)

Tensor("Const_1:0", shape=(), dtype=int32)

Tensor("name1:0", shape=(), dtype=int32)

Tensor("name1_1:0", shape=(), dtype=int32)

Tensor("add_2:0", shape=(), dtype=int32)

160

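Conclusions:

1. Both sess.graph and a.graph return the graph object; printing it shows the graph's memory address.

2. Each graph has its own namespace: the same operation name can be used in different graphs, but not twice within one graph. A repeated name in the same graph is made unique with a numeric suffix, as with name1 and name1_1 above.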
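The naming behaviour in conclusion 2 can be imitated without TensorFlow. The sketch below is a toy model only: `ToyGraph` and `unique_name` are hypothetical names made up for this illustration, and the counter scheme is a simplification that ignores edge cases such as a caller explicitly asking for `name1_1`.

```python
class ToyGraph:
    """Toy stand-in for a graph's name registry (hypothetical, not TensorFlow code)."""

    def __init__(self):
        # base name -> number of times that name has been requested in this graph
        self._names_in_use = {}

    def unique_name(self, name):
        count = self._names_in_use.get(name, 0)
        self._names_in_use[name] = count + 1
        # First use keeps the name; later uses get a numeric suffix.
        return name if count == 0 else "%s_%d" % (name, count)


requested = ["Const", "Const", "name1", "name1"]

default_graph = ToyGraph()
custom_graph = ToyGraph()

# Each graph keeps its own registry, so the same names come out of both.
default_names = [default_graph.unique_name(n) for n in requested]
custom_names = [custom_graph.unique_name(n) for n in requested]

print(default_names)
print(custom_names)
```

Both lists come out identical because the two registries never see each other, which mirrors the identical tensor names printed for the default graph and for my_g.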

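Operation functions and operation objects

Operation function: tf.constant(value) takes a constant value, adds a Const operation to the graph, and returns that operation's output Tensor

Operation object: Const

Calling an operation function creates an operation object and returns its output tensor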

a = tf.constant(5,name="name1")

print(a)

……

e = a+b+c+d

print(e)

Tensor("name1:0", shape=(), dtype=int32)

This created a tf.Operation named name1 and returned a tf.Tensor named "name1:0".

Tensor("add_2:0", shape=(), dtype=int32)

add_2: operation name; 0: output index

This created a tf.Operation named add_2 and returned a tf.Tensor named "add_2:0".
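Since a tensor name is just the operation name and the output index joined by a colon, it can be taken apart with plain string handling. The helper below, `split_tensor_name`, is a hypothetical name introduced for this sketch and is not part of TensorFlow.

```python
def split_tensor_name(tensor_name):
    """Split "<op_name>:<output_index>" into (op_name, output_index)."""
    # rpartition splits on the last colon, so the index is always the final field.
    op_name, _, index = tensor_name.rpartition(":")
    return op_name, int(index)


for name in ["name1:0", "add_2:0"]:
    op_name, output_index = split_tensor_name(name)
    print(name, "-> operation:", op_name, "| output index:", output_index)
```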

TensorBoard basics

# Write the graph to a local event file
tf.summary.FileWriter("../summary", graph=sess.graph)  # Does this have to be written while a session is running? (TF1)

G:\Pyfor4\Tensorflor114_prj>tensorboard --logdir="./summary"

In the browser, open: 127.0.0.1:6006
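On the question in the FileWriter comment: as far as I can tell, the writer only needs a graph object, so no running session should be required. The sketch below tries that out. It assumes a TensorFlow build where `tensorflow.compat.v1` can be imported (used here so the same code keeps TF1 graph semantics on newer versions), and it writes to a temporary directory instead of "../summary".

```python
import glob
import os
import tempfile

import tensorflow.compat.v1 as tf  # assumption: compat.v1 is importable in your build

tf.disable_v2_behavior()  # keep graph-mode (TF1) semantics on TensorFlow 2.x as well

graph = tf.Graph()
with graph.as_default():
    a = tf.constant(1)
    b = tf.constant(3)
    total = tf.add(a, b, name="total")

logdir = tempfile.mkdtemp()  # stand-in log directory for this sketch

# No tf.Session is created anywhere in this block.
writer = tf.summary.FileWriter(logdir, graph=graph)
writer.close()  # flush the event file to disk

event_files = glob.glob(os.path.join(logdir, "events.out.tfevents.*"))
print(event_files)
```

If an event file shows up, pointing `tensorboard --logdir` at that directory should display the graph in the same way.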

Two kinds of session

tf.InteractiveSession() together with a.eval(): for using TensorFlow in an interactive context, such as a shell

>>>tf.InteractiveSession()

>>>a = tf.constant(3)

>>>a

Out[5]:

>>>a.eval()

Out[6]: 3

tf.Session() is used in complete programs. sess.run() is equivalent to eval(): both run the graph and fetch the tensor's value.

# Attempting to run the custom graph
with tf.Session(graph=my_g) as my_sess:  # error seen: "not an element of this graph."
    # my_C = my_sess.run(c_new)
    # print(my_C)
    print(c_new.eval())  # equivalent to my_sess.run(c_new)

* Traditional session (must be closed manually)

# The traditional way to use a session; a context manager is usually used instead so that resources are released reliably
my_sess = tf.Session(graph=my_g)
my_e_value = my_sess.run(my_e)
print(my_e_value)
my_sess.close()

* Two parameters of tf.Session: about remote debugging

tf.Session(graph=my_g, config=tf.ConfigProto(allow_soft_placement=True,
                                             log_device_placement=True))

2022-11-18 07:09:01.925172: I tensorflow/core/common_runtime/direct_session.cc:296] Device mapping:

2022-11-18 07:09:01.925773: I tensorflow/core/common_runtime/placer.cc:54] Add: (Add)/job:localhost/replica:0/task:0/device:CPU:0

2022-11-18 07:09:01.925896: I tensorflow/core/common_runtime/placer.cc:54] Const: (Const)/job:localhost/replica:0/task:0/device:CPU:0

2022-11-18 07:09:01.926035: I tensorflow/core/common_runtime/placer.cc:54] Const_1: (Const)/job:localhost/replica:0/task:0/device:CPU:0

2022-11-18 07:09:01.926153: I tensorflow/core/common_runtime/placer.cc:54] name1: (Const)/job:localhost/replica:0/task:0/device:CPU:0

2022-11-18 07:09:01.926272: I tensorflow/core/common_runtime/placer.cc:54] name1_1: (Const)/job:localhost/replica:0/task:0/device:CPU:0
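Each placement line above ends with an operation name, the operation type in parentheses, and a device string made of `key:value` fields. The sketch below pulls those pieces apart with the standard library. The regular expression is written against the log lines shown here only, and `parse_placement` is a hypothetical helper, not a TensorFlow function.

```python
import re

# Matches the tail of a placer log line: "] <op name>: (<op type>)<device string>"
PLACEMENT = re.compile(r"\] (?P<op_name>\S+): \((?P<op_type>\w+)\)(?P<device>/\S+)$")


def parse_placement(line):
    """Return the op name, op type and device fields of a placement line, or None."""
    match = PLACEMENT.search(line)
    if match is None:
        return None
    fields = match.groupdict()
    # "/job:localhost/replica:0/task:0/device:CPU:0" -> {"job": "localhost", ...}
    parts = fields["device"].strip("/").split("/")
    fields["device_fields"] = dict(part.split(":", 1) for part in parts)
    return fields


line = ("2022-11-18 07:09:01.925773: I tensorflow/core/common_runtime/placer.cc:54] "
        "Add: (Add)/job:localhost/replica:0/task:0/device:CPU:0")
parsed = parse_placement(line)
print(parsed["op_name"], parsed["op_type"], parsed["device_fields"])
```

Lines without a placement, such as the "Device mapping:" header, simply return None.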

The session's run()

sess = tf.Session()
# Compute the value of c
print(sess.run(c))
print(c.eval(session=sess))

Fetching several operations at once: pass them in as a list

my_eabc_value = my_sess.run([my_e,my_a,my_b,my_c])

[90, 10, 30, 50]

Handling placeholders in a session:

Placeholder: tf.placeholder()

sess.run(sum_ab,feed_dict={a:3.0,b:5.0}): the feed_dict argument is required

a = tf.placeholder(tf.float32)
b = tf.placeholder(tf.float32)
sum_ab = tf.add(a, b)

with tf.Session() as sess:
    print(sess.run(sum_ab, feed_dict={a: 3.0, b: 5.0}))  # when a leaf node is a placeholder, feed_dict must be passed
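To see why feed_dict is required, it helps to run the same fetch once with it and once without. The sketch below assumes a TensorFlow build where `tensorflow.compat.v1` is importable, and it assumes that an unfed placeholder surfaces as `tf.errors.InvalidArgumentError`, which is the error class I would expect from `sess.run` in that case.

```python
import tensorflow.compat.v1 as tf  # assumption: compat.v1 is importable in your build

tf.disable_v2_behavior()  # keep graph-mode (TF1) semantics on TensorFlow 2.x as well

graph = tf.Graph()
with graph.as_default():
    a = tf.placeholder(tf.float32)
    b = tf.placeholder(tf.float32)
    sum_ab = tf.add(a, b)

with tf.Session(graph=graph) as sess:
    # With feed_dict: both placeholders receive a value.
    fed_value = sess.run(sum_ab, feed_dict={a: 3.0, b: 5.0})
    print(fed_value)

    # Without feed_dict: the placeholders have nothing to compute from.
    try:
        sess.run(sum_ab)
        missing_feed_failed = False
    except tf.errors.InvalidArgumentError:
        missing_feed_failed = True
    print(missing_feed_failed)
```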

Session errors:

Tensors (Tensor)

An n-dimensional array, with operations similar to numpy.ndarray

Creation:

# Basic
a = tf.constant(10)  # shape=()
b = tf.constant([12, 34, 45])  # shape=(3,)
c = tf.constant([[12, 34, 45],
                 [23, 45, 65]])  # shape=(2, 3)

# Tensors of zeros and ones
tf.zeros(shape=[3, 4])  # dtype=float32
tf.ones(shape=[3, 4])  # dtype=float32

# Random tensors
tf.random_normal(shape=[3, 4])
tf.random_normal(shape=[3, 4], mean=1.75, stddev=0.12)

# Others ……

Tensor transformations:

1. Changing the dtype

2. Updating the static shape

3. Updating the dynamic shape
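Items 2 and 3 each come with a rule: a static shape update may only fill in dimensions that are still unknown, and a reshape may change the rank but must keep the number of elements. The sketch below models both rules in plain Python. `merge_static_shape` and `can_reshape` are hypothetical helpers for illustration, `None` stands for an unknown dimension, and the special `-1` dimension accepted by tf.reshape is not modeled.

```python
from math import prod  # Python 3.8+


def merge_static_shape(current, new):
    """Model of the static-shape rule: known dimensions must agree, unknown ones get filled."""
    if len(current) != len(new):
        raise ValueError("rank mismatch: %r vs %r" % (current, new))
    merged = []
    for old_dim, new_dim in zip(current, new):
        if old_dim is not None and new_dim is not None and old_dim != new_dim:
            raise ValueError("incompatible shapes: %r vs %r" % (current, new))
        merged.append(new_dim if old_dim is None else old_dim)
    return merged


def can_reshape(shape, new_shape):
    """Model of the reshape rule: the element count must stay the same."""
    return prod(shape) == prod(new_shape)


print(merge_static_shape([None, None], [2, 3]))   # both dimensions were unknown
print(merge_static_shape([None, 10], [2, 10]))    # only the first dimension was unknown

try:
    merge_static_shape([3, 2], [2, 3])            # fully known shape cannot be changed
    fixed_shape_rejected = False
except ValueError:
    fixed_shape_rejected = True
print(fixed_shape_rejected)

print(can_reshape([2, 3], [3, 2, 1]))             # 6 elements either way
print(can_reshape([2, 3], [4, 2]))                # 6 elements vs 8
```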

# Changing the dtype
a = tf.constant([[1, 2, 3, 4], [5, 6, 7, 8]])
print(a)  # Tensor("Const:0", shape=(2, 4), dtype=int32)
res_a = tf.cast(a, dtype=tf.float32)  # type cast: does not change the original tensor's dtype; returns a new tensor
print(a)  # Tensor("Const:0", shape=(2, 4), dtype=int32)
print(res_a)  # Tensor("Cast:0", shape=(2, 4), dtype=float32)

# Updating the static shape (for placeholders whose shape is not yet fully determined)
a_p = tf.placeholder(dtype=tf.float32, shape=[None, None])
b_p = tf.placeholder(dtype=tf.float32, shape=[None, 10])
c_p = tf.placeholder(dtype=tf.float32, shape=[3, 2])
print(a_p)  # Tensor("Placeholder:0", shape=(?, ?), dtype=float32)
print(b_p)  # Tensor("Placeholder_1:0", shape=(?, 10), dtype=float32)
print(c_p)  # Tensor("Placeholder_2:0", shape=(3, 2), dtype=float32)
a_p.set_shape([2, 3])  # must be compatible with the existing shape; only the parts not yet determined can be updated
b_p.set_shape([2, 10])
print(a_p)  # Tensor("Placeholder:0", shape=(2, 3), dtype=float32)
print(b_p)  # Tensor("Placeholder_1:0", shape=(2, 10), dtype=float32)

# Updating the dynamic shape with tf.reshape(): the rank may change, but the number of elements may not
a_p_321 = tf.reshape(a_p, shape=[3, 2, 1])
print("a_p after reshape:", a_p_321)  # a_p after reshape: Tensor("Reshape:0", shape=(3, 2, 1), dtype=float32)

Tensor operations

Reduction: tf.reduce_all(x)

def math_reduce():
    x = tf.constant([[True, True],
                     [False, False]])
    print(x)
    a1 = tf.reduce_all(x)
    print(a1)
    a2 = tf.reduce_all(x, 0)  # axis 0: reduce down each column
    print(a2)
    a3 = tf.reduce_all(x, 1)  # axis 1: reduce across each row
    print(a3)

    with tf.Session() as sess:
        print(sess.run([x, a1, a2, a3]))

Tensor("Const:0", shape=(2, 2), dtype=bool)

Tensor("All:0", shape=(), dtype=bool)

Tensor("All_1:0", shape=(2,), dtype=bool)

Tensor("All_2:0", shape=(2,), dtype=bool)

[array([[ True, True],
       [False, False]]), False, array([False, False]), array([ True, False])]
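The three results can be checked by hand with Python's built-in `all`, which applies the same logical AND that tf.reduce_all uses. This is a plain-Python model of the axis semantics, not TensorFlow code, and the variable names are chosen for this sketch only.

```python
x = [[True, True],
     [False, False]]

# No axis: AND over every element.
reduce_all_everything = all(all(row) for row in x)

# Axis 0: AND down each column (zip(*x) yields the columns).
reduce_all_axis0 = [all(column) for column in zip(*x)]

# Axis 1: AND across each row.
reduce_all_axis1 = [all(row) for row in x]

print(reduce_all_everything)  # False
print(reduce_all_axis0)       # [False, False]
print(reduce_all_axis1)       # [True, False]
```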

Variable OP

- Persistent storage

- Value can be modified

- Can be marked as trainable

Creating and initializing variables

def variable_demo():
    # Define the variables
    a = tf.Variable(initial_value=50)
    b = tf.Variable(initial_value=40)
    c = tf.add(a, b)
    print("a:\n", a)  #
    print("b:\n", b)  #
    print("c:\n", c)  # Tensor("Add:0", shape=(), dtype=int32)

    # Initialize the variables
    init = tf.global_variables_initializer()

    # Open a session
    with tf.Session() as sess:
        sess.run(init)
        print(sess.run([a, b, c]))  # [50, 40, 90]

    return None

Defining a new namespace: tf.variable_scope("my_scope")

- Makes the structure clearer

with tf.variable_scope("my_scope"):
    a = tf.Variable(initial_value=50)
    b = tf.Variable(initial_value=40)
with tf.variable_scope("your_scope"):
    c = tf.add(a, b)
print("a:\n", a)  #
print("b:\n", b)  #
print("c:\n", c)  # Tensor("your_scope/Add:0", shape=(), dtype=int32)

Other basic APIs

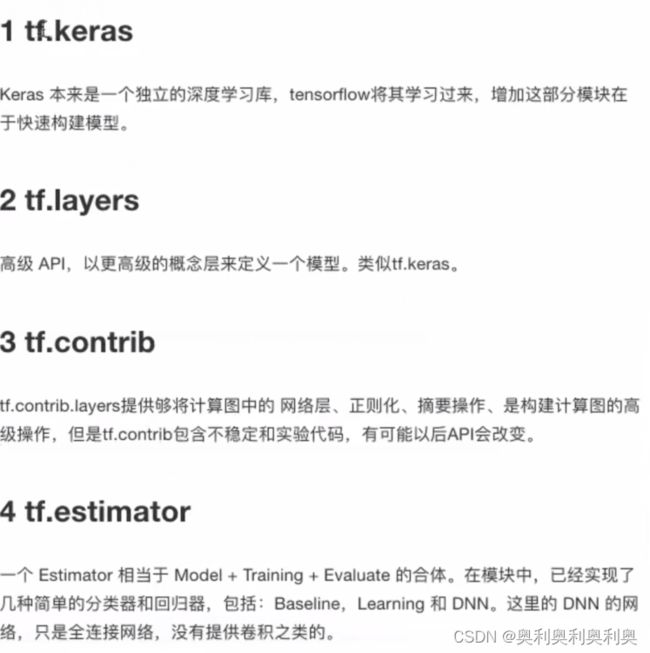

High-level APIs