Spark DataFrame Operations, SQL Interaction, and Related Functions

Notes on Spark DataFrame and Spark SQL Interaction Functions

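- I. Ways to create a DataFrame
  - 1. toDF
  - 2. createDataFrame
  - 3. Converting a list to a DataFrame
  - 4. Creating a DataFrame dynamically from a schema
  - 5. Creating a DataFrame by reading files
- II. Saving a DataFrame
  - 1. Saving a DataFrame to files
  - 2. Writing a DataFrame to a database
- III. DataFrame APIs
  - 1. Actions
  - 2. RDD-style operations
  - 3. Excel-style operations
- IV. DataFrame and SQL interaction
  - 1. Queries: select, selectExpr, where
  - 2. Joins: join, union, unionAll
  - 3. Grouping: groupBy, agg, pivot
  - 4. Window functions, explode functions, and complex-type functions
    - 4-1. Window functions
    - 4-2. Explode functions
    - 4-3. Complex-type functions
      - 4-3-1 Collection types
      - 4-3-2 array type
      - 4-3-3 struct type
      - 4-3-4 map type
  - 5. Building and parsing JSON data
  - 6. DataFrame and SQL interaction
    - 6-1 Creating temporary views
    - 6-2 Create, read, update, and delete operations on Hive tables
      - 6-2-1 Dropping a Hive table
      - 6-2-2 Creating a partitioned Hive table
      - 6-2-3 Dynamically writing data into a partitioned Hive table
      - 6-2-4 Writing to a static partition
      - 6-2-4 Writing to a mixed partition
      - 6-2-5 Dropping a partition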

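import findspark
findspark.init()  # locate the Spark installation before pyspark is imported

import pandas as pd
from pyspark.sql import SparkSession

# master("local[*]") sets spark.master; a config key named just "master" is not a Spark setting
spark = SparkSession.builder.appName("test1").master("local[*]").enableHiveSupport().getOrCreate()
sc = spark.sparkContext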

一、生成DF方式

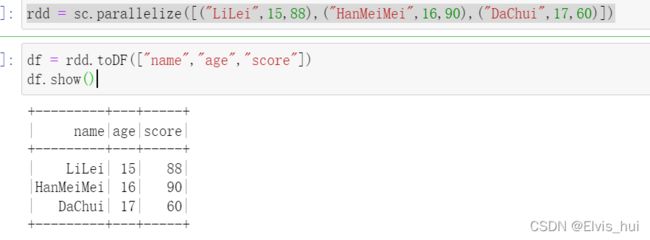

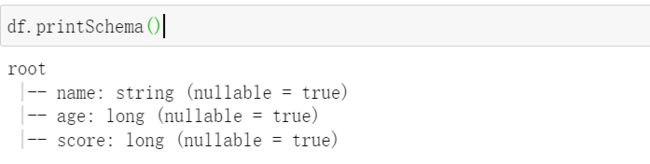

1.toDF

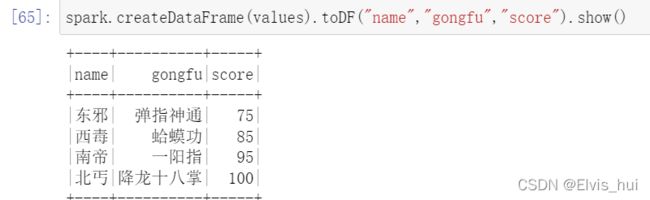

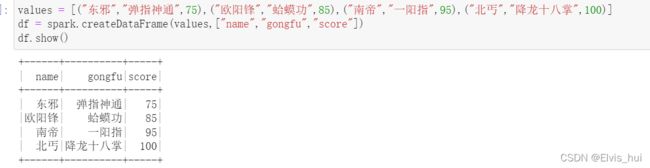

2.createDataFrame

3.list 转 DF
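
A minimal sketch of one way to turn a Python list into a DataFrame, assuming the list holds dictionaries that all share the same keys. `list_to_df` is a hypothetical helper, not part of PySpark; the only Spark call it relies on is `spark.createDataFrame(rows, column_names)`, which accepts a list of tuples plus a list of column names.

```python
def list_to_df(spark, records):
    """Turn a list of same-keyed dicts into a DataFrame (hypothetical helper)."""
    if not records:
        raise ValueError("records must not be empty")
    columns = list(records[0].keys())
    # One tuple per record, with values in a fixed column order
    rows = [tuple(rec[c] for c in columns) for rec in records]
    # createDataFrame accepts a list of tuples plus a list of column names
    return spark.createDataFrame(rows, columns)

# Usage, assuming `spark` is the active SparkSession created earlier:
# df = list_to_df(spark, [{"name": "LiLei", "age": 18}, {"name": "Lily", "age": 17}])
```

Passing the column order explicitly keeps the result independent of how each dictionary happens to be ordered.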

4. Creating a DataFrame dynamically from a schema
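
A minimal sketch of assembling a schema at run time, assuming the column names and types arrive as plain strings (for example from a config file). `build_schema_string` is a hypothetical helper; it produces the `name: type` string form that `createDataFrame` accepts for its `schema` argument, as an alternative to building a `StructType` by hand.

```python
def build_schema_string(fields):
    """Build a schema string such as 'name: string, age: int' from (name, type) pairs."""
    return ", ".join(f"{name}: {dtype}" for name, dtype in fields)

# The field list is illustrative; in practice it could be loaded at run time
fields = [("name", "string"), ("age", "int"), ("score", "double")]
schema = build_schema_string(fields)
print(schema)

# Usage, assuming `spark` is the active SparkSession:
# df = spark.createDataFrame([("LiLei", 18, 90.5)], schema)
```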

5. Creating a DataFrame by reading files

# Read JSON (json_path is a placeholder for the path to a JSON file)
df = spark.read.json(json_path)

# Read CSV
df = spark.read.option("header","true") \
.option("inferSchema","true") \
.option("delimiter", ",") \
.csv("data/iris.csv")

# Or, equivalently, with format(...).load(...)
# ("csv" is the built-in source; "com.databricks.spark.csv" is the legacy Spark 1.x package name)
df = spark.read.format("csv") \
.option("header","true") \
.option("inferSchema","true") \
.option("delimiter", ",") \
.load("data/iris.csv")

# Read a Parquet file
df = spark.read.parquet("data/users.parquet")

# Read Hive data
spark.sql("CREATE TABLE IF NOT EXISTS src (key INT, value STRING) USING hive")
spark.sql("LOAD DATA LOCAL INPATH 'data/kv1.txt' INTO TABLE src")
df = spark.sql("SELECT key, value FROM src WHERE key < 10 ORDER BY key")

# Read a MySQL table into a DataFrame
# (url must hold the JDBC connection string, e.g. "jdbc:mysql://node01:3306/test?useSSL=false&useUnicode=true")
df = spark.read.format("jdbc") \
.option("url", url) \
.option("dbtable", "runoob_tbl") \
.option("user", "root") \
.option("password", "0845") \
.load()

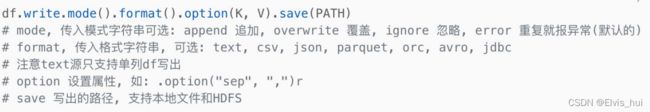

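II. Saving a DataFrame

1. Saving a DataFrame to files

# Save as CSV (the path is created as a directory of part files)
df.write.format("csv").option("header","true").save("data/people_write.csv")

# Convert to an RDD first, then save as text
df.rdd.saveAsTextFile("data/people_rdd.txt")

# Save as JSON
df.write.json("data/people.json")

# Save as Parquet: a compressed columnar format with a small storage footprint;
# it is Spark's default data source format and is typically the fastest to load
df.write.partitionBy("age").format("parquet").save("data/namesAndAges.parquet")
df.write.parquet("data/people_write.parquet")

# Save as a Hive table
df.write.bucketBy(42, "name").sortBy("age").saveAsTable("people_bucketed")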

2. Writing a DataFrame to a database

# Read from MySQL
df = spark.read.format("jdbc") \
.option("url","jdbc:mysql://node01:3306/test?useSSL=false&useUnicode=true") \
.option("dbtable","u_data") \
.option("user","root") \
.option("password","123456") \
.load()

# Data read over JDBC carries its own schema, so none has to be set; load() takes no arguments

# Write df to the database
df.write.mode("overwrite") \
.format("jdbc") \
.option("url","jdbc:mysql://node01:3306/test?useSSL=false&useUnicode=true&characterEncoding=utf8") \
.option("dbtable","u_data") \
.option("user","root") \
.option("password","123456") \
.save()

# In the JDBC connection string, useSSL=false is recommended so the connection succeeds without SSL
# In the JDBC connection string, useUnicode=true is recommended to avoid garbled characters in transit
# save() takes no arguments: there is no path, because the output goes to the database
# The dbtable option names the table to write to
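
A small sketch of assembling the JDBC connection string from its parts, so that flags such as `useSSL` and `useUnicode` are not retyped by hand for every read and write. `mysql_jdbc_url` is a hypothetical helper built on the standard library only; the host, port, and database values are the ones used in the snippets above.

```python
from urllib.parse import urlencode

def mysql_jdbc_url(host, port, database, **params):
    """Assemble a MySQL JDBC URL with optional query parameters (hypothetical helper)."""
    base = f"jdbc:mysql://{host}:{port}/{database}"
    # Keyword arguments keep their order, so the flags appear as written
    return f"{base}?{urlencode(params)}" if params else base

url = mysql_jdbc_url("node01", 3306, "test",
                     useSSL="false", useUnicode="true", characterEncoding="utf8")
print(url)
```

The resulting `url` can then be passed to `.option("url", url)` for both the read and the write.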

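# Write to Hive
df.write.mode("overwrite").saveAsTable("DB.Table","parquet")
# Arguments: database.table, then the storage format
# Calling saveAsTable is all that is needed, provided Spark on Hive is already configured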

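III. DataFrame APIs

1. Actions

DataFrame actions include show, count, collect, describe, take, head, and first.

| Action | Description |
|---|---|
| show | show(numRows: Int, truncate: Boolean); the second argument controls whether values longer than 20 characters are truncated |
| count | Returns the number of rows |
| collect | Returns all rows to the driver as a list of Row objects |
| first, take, head | Return the first row or the first n rows, similar to the pandas equivalents |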

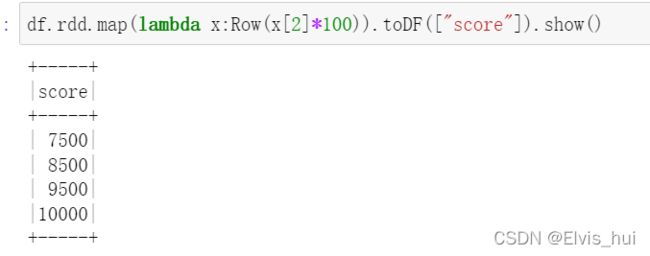

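2. RDD-style operations

DataFrame supports RDD-style operations such as distinct, cache, sample, foreach, intersect, and except. A DataFrame can be treated as an RDD whose elements are Row objects, and it can be converted to an RDD with df.rdd when needed.

from pyspark.sql import Row
from pyspark.sql.functions import col

# map
rdd = df.rdd.map(lambda x: Row(x[0].upper()))
rdd.toDF(["value"]).show()

# flatMap: convert to an RDD first
df_flat = df.rdd.flatMap(lambda x: x[0].split(" ")).map(lambda x: Row(x)).toDF(["value"])

# filter: endswith keeps strings that end with the given suffix
df_filter = df.rdd.filter(lambda s: s[0].endswith("Spark")).toDF(["value"])

# filter combined with a broadcast variable
broads = sc.broadcast(["Hello","World"])
df_filter_broad = df_flat.filter(~col("value").isin(broads.value))

# distinct
df_distinct = df_flat.distinct()

# cache, then release the cache with unpersist
df.cache()
df.unpersist()

# sample(withReplacement, fraction, seed)
dfsample = df.sample(False,0.6,0)

# intersect (rows in both) and exceptAll (rows of df not in df2, duplicates kept)
df.intersect(df2)
df.exceptAll(df2)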
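
The difference between the two set operations is easy to miss: `intersect` de-duplicates its result, while `exceptAll` keeps duplicates. The following is a plain-Python model of those semantics on lists of tuples, not Spark code; the function names and sample rows are illustrative, and Spark itself does not guarantee the output row order shown here.

```python
from collections import Counter

def intersect(rows_a, rows_b):
    """Rows present in both inputs, de-duplicated (like SQL INTERSECT)."""
    common = set(rows_a) & set(rows_b)
    seen, result = set(), []
    for row in rows_a:
        if row in common and row not in seen:
            seen.add(row)
            result.append(row)
    return result

def except_all(rows_a, rows_b):
    """Rows of rows_a minus rows_b, keeping duplicates (like SQL EXCEPT ALL)."""
    remaining = Counter(rows_b)
    result = []
    for row in rows_a:
        if remaining[row] > 0:
            remaining[row] -= 1  # each row in rows_b cancels one occurrence
        else:
            result.append(row)
    return result

a = [("Hello",), ("Hello",), ("Spark",)]
b = [("Hello",), ("World",)]
print(intersect(a, b))   # [('Hello',)]
print(except_all(a, b))  # [('Hello',), ('Spark',)]
```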

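3. Excel-style operations

Adding, dropping, and renaming columns, sorting, removing duplicate rows, and removing rows with missing values all work much like operations on an Excel sheet.

# Add a column
df.withColumn("birthyear",-df["age"]+2020)

# Reorder columns
df.select("name","age","birthyear","gender")

# Drop a column
df.drop("gender")

# Rename a column
df.withColumnRenamed("gender","sex")

# Sort with sort; ascending or descending order can be specified
df.sort(df["age"].desc())

# Sort with orderBy; ascending by default, and multiple columns are supported
df.orderBy(df["age"].desc(),df["gender"].desc())

# Drop rows that contain null or NaN values
df.na.drop()

# Fill missing values (a string value only fills string columns)
df.na.fill("female")

# Replace specific values
df.na.replace({"":"female","RuHua":"SiYu"})

# Deduplicate on all columns (the default)
df2 = df.unionAll(df)
df2.show()
df2.dropDuplicates()

# Deduplicate on a subset of columns
dfunique_part = df.dropDuplicates(["age"])

# Simple aggregation
df.agg({"name":"count","age":"max"})

# Summary statistics
df.describe()

# Values of age and gender whose frequency exceeds 0.5 (approximate; may include false positives)
df.stat.freqItems(("age","gender"),0.5)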

IV. DataFrame and SQL interaction

1. Queries: select, selectExpr, where

# Query with select
df.select("name").limit(2)
df.select("name",df["age"] + 1)
df.select("name",-df["age"]+2020).toDF("name","birth_year")  # 2020 minus age, with the column named birth_year

# Query with where, passing a SQL WHERE-clause expression
df.where("gender='male' and age>15")

# Query with filter
df.filter(df["age"]>16)
df.filter("gender ='male'")

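2. Joins: join, union, unionAll

# Join on a single column
# Note: the default inner join drops rows whose name has no match in the other table
df.join(dfscore.select("name","score"),"name")

# Join on multiple columns
dfjoin = df.join(dfscore,["name","gender"])

# Join on multiple columns with an explicit join type
# Supported types include "inner", "left", "right", "outer", "semi", "full", "leftanti", and "anti"
df.join(dfscore,["name","gender"],"right")

# Join with an explicit join condition
# In practice the same field may be named differently across tables; rename the column first, then join
dfmark = dfscore.withColumnRenamed("gender","sex")
df.join(dfmark,(df["name"] == dfmark["name"]) & (df["gender"]==dfmark["sex"]),"inner")

# union (keeps duplicate rows and matches columns by position)
df.union(df1)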
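
To make the join types listed above concrete, here is a plain-Python model of four of them ("inner", "left", "semi", "anti") on lists of dictionaries. It is a sketch of the semantics only, not Spark code; `join_rows` and the sample data are illustrative.

```python
def join_rows(left, right, key, how="inner"):
    """Plain-Python model of four join types on lists of dicts sharing one key column."""
    right_cols = [c for c in (right[0] if right else {}) if c != key]
    by_key = {}
    for r in right:
        by_key.setdefault(r[key], []).append(r)
    out = []
    for l in left:
        matches = by_key.get(l[key], [])
        if how == "inner":
            out.extend({**l, **m} for m in matches)
        elif how == "left":
            # Unmatched left rows are kept, with None in the right-hand columns
            out.extend({**l, **m} for m in matches)
            if not matches:
                out.append({**l, **{c: None for c in right_cols}})
        elif how == "semi":
            # Left rows that have at least one match; left columns only
            if matches:
                out.append(dict(l))
        elif how == "anti":
            # Left rows that have no match
            if not matches:
                out.append(dict(l))
        else:
            raise ValueError(f"unsupported join type: {how}")
    return out

people = [{"name": "LiLei", "age": 18}, {"name": "Lily", "age": 17}]
scores = [{"name": "LiLei", "score": 90}]
for how in ("inner", "left", "semi", "anti"):
    print(how, join_rows(people, scores, "name", how))
```

The "semi" and "anti" variants act as filters on the left table: neither adds columns from the right side.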

3. Grouping: groupBy, agg, pivot

# Grouping with groupBy
from pyspark.sql import functions as F
from pyspark.sql.functions import col, explode

df.groupBy("gender").max("age").show()

# Aggregation after grouping: groupBy, agg
dfagg = df.groupBy("gender").agg(F.mean("age").alias("mean_age"),
F.collect_list("name").alias("names"))

# The same with F.expr
dfagg = df.groupBy("gender").agg(F.expr("avg(age)"),F.expr("collect_list(name)"))

# Group by gender and age, collecting the names in each group into a list
df.groupBy("gender","age").agg(F.collect_list(col("name"))).show()

# Use explode to split a collected list back into multiple rows
df.select(df["name"],explode(df["myScore"])).toDF("name","myScore")

# Pivot after grouping: groupBy, pivot
dfstudent = spark.createDataFrame([("张学友",18,"male",1),("刘德华",16,"female",1),
("郭富城",17,"male",2),("黎明",20,"male",2)]).toDF("name","age","gender","class")
dfstudent.show()
dfstudent.groupBy("class").pivot("gender").max("age").show()
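
The pivot call above turns each distinct gender value into its own column and fills every cell with the maximum age for that class. A plain-Python model of that computation on the same four rows is sketched below; `pivot_max` is an illustrative helper, not a Spark API, and a combination with no rows ends up as `None`.

```python
def pivot_max(rows, group_key, pivot_key, value_key):
    """Model of groupBy(group_key).pivot(pivot_key).max(value_key) on a list of dicts."""
    pivot_values = sorted({r[pivot_key] for r in rows})
    table = {}
    for r in rows:
        # Each group starts with one empty cell per pivot value
        cell = table.setdefault(r[group_key], {v: None for v in pivot_values})
        current = cell[r[pivot_key]]
        cell[r[pivot_key]] = r[value_key] if current is None else max(current, r[value_key])
    return table

students = [
    {"name": "张学友", "age": 18, "gender": "male", "class": 1},
    {"name": "刘德华", "age": 16, "gender": "female", "class": 1},
    {"name": "郭富城", "age": 17, "gender": "male", "class": 2},
    {"name": "黎明", "age": 20, "gender": "male", "class": 2},
]
result = pivot_max(students, "class", "gender", "age")
print(result)
# {1: {'female': 16, 'male': 18}, 2: {'female': None, 'male': 20}}
```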

4. Window functions, explode functions, and complex-type functions

4-1. Window functions

df.selectExpr("name","score","class",
"row_number() over (partition by class order by score desc) as order")

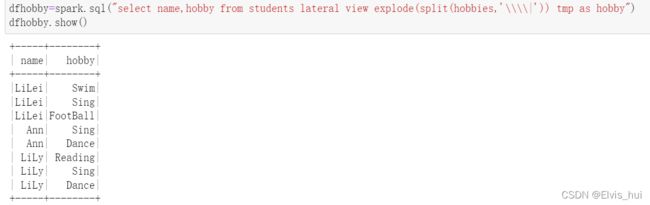
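
As a plain-Python model of what `row_number() over (partition by class order by score desc)` computes: rows are grouped by class, sorted by score from high to low within each group, and numbered from 1. This is a sketch of the semantics only; the helper name and the sample rows are illustrative.

```python
from itertools import groupby
from operator import itemgetter

def row_number_desc(rows, partition_key, order_key):
    """Number rows 1..n inside each partition, highest order_key first."""
    out = []
    # groupby needs its input sorted by the grouping key
    ordered = sorted(rows, key=itemgetter(partition_key))
    for _, group in groupby(ordered, key=itemgetter(partition_key)):
        ranked = sorted(group, key=itemgetter(order_key), reverse=True)
        for n, row in enumerate(ranked, start=1):
            out.append({**row, "order": n})
    return out

scores = [
    {"name": "a", "score": 80, "class": "c1"},
    {"name": "b", "score": 95, "class": "c1"},
    {"name": "c", "score": 70, "class": "c2"},
]
ranked = row_number_desc(scores, "class", "score")
for row in ranked:
    print(row)
```

Filtering the result on `order == 1` gives the top scorer of each class, which is a common reason for using `row_number` in the first place.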

4-2.爆炸函数

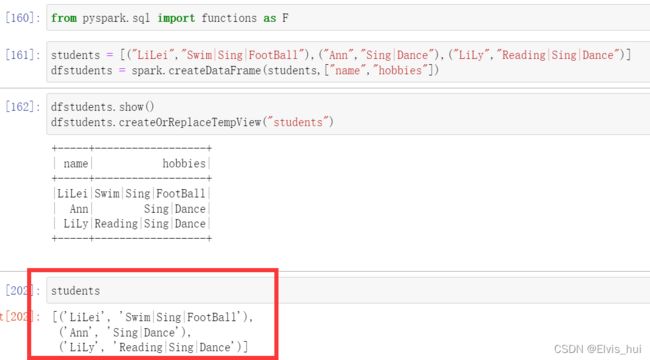

explode 一行转多行,通常搭配LATERAL VIEW使用

df1=spark.sql("select name,hobby from students LATERAL VIEW explode(split(hobbies,'\\\\|')) tmp as hobby") #注意特殊字符作为分隔符要加四个斜杠

df1.show()

#统计每种hobby有多少同学喜欢

df.groupBy("hobby").agg(F.expr("count(name) as cnt")).show()
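
A plain-Python model of the split, explode, and count steps may help here. After Python and the SQL string literal have each removed one level of escaping, the pattern that `split` receives is `\|`, which is what the sketch passes to `re.split`. The helper name and sample rows are illustrative, not Spark code.

```python
import re
from collections import Counter

def explode_hobbies(students, delimiter_regex=r"\|"):
    """One output row per (name, hobby), like LATERAL VIEW explode(split(...))."""
    return [(name, hobby)
            for name, hobbies in students
            for hobby in re.split(delimiter_regex, hobbies)]

students = [("LiLei", "swimming|chess"), ("Lily", "chess")]
rows = explode_hobbies(students)
# Count how many students like each hobby
counts = Counter(hobby for _, hobby in rows)
print(rows)
print(counts)
```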

4-3.复合函数

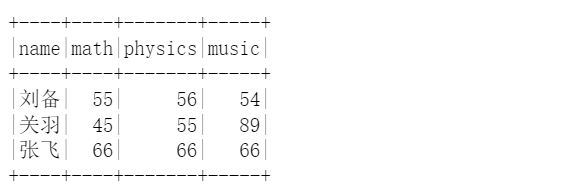

4-3-1 集合类型

#集合创建

import pyspark.sql.functions as F

students = [("刘备",55,56,54),("关羽",45,55,89),("张飞",66,66,66)]

df=spark.createDataFrame(students).toDF("name","math","physics","music")

df.show()

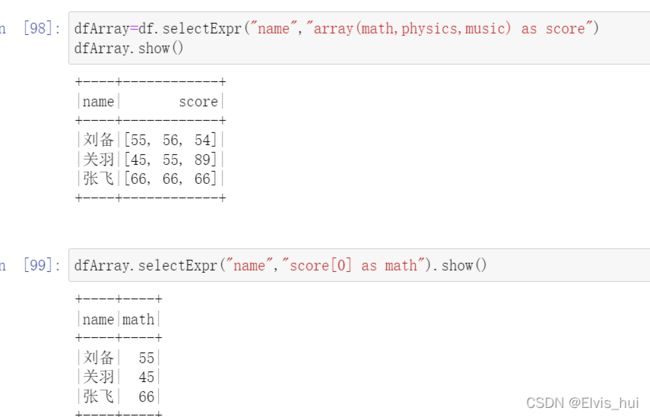

4-3-2 array类型

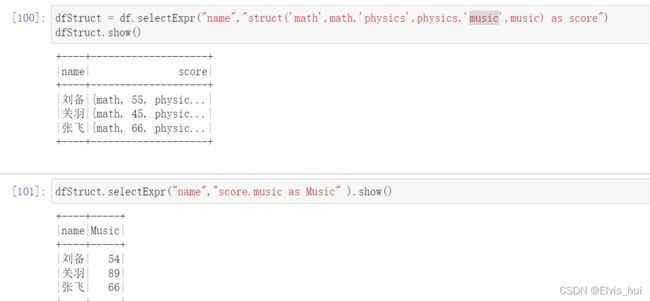

4-3-3 struct类型

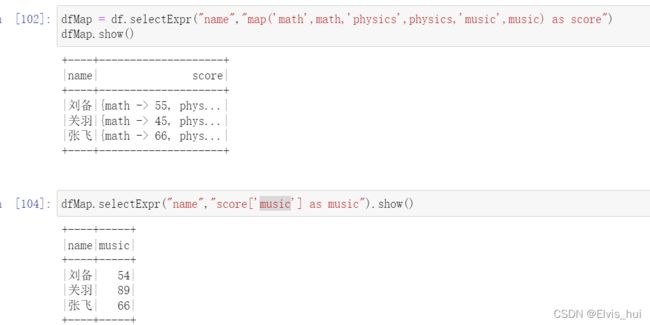

4-3-4 map类型

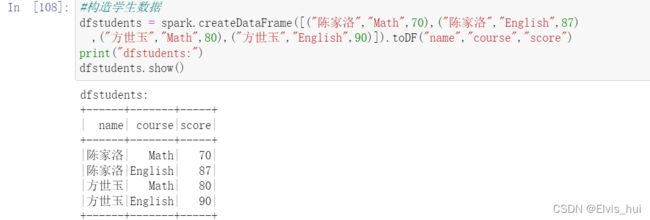

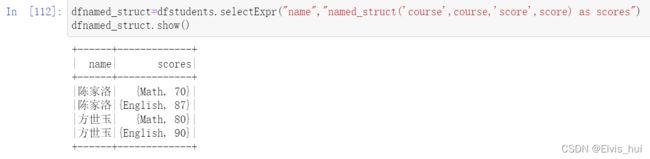

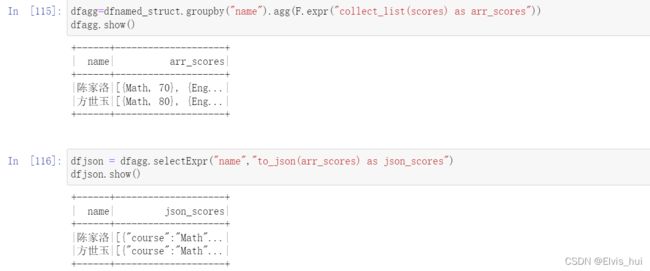

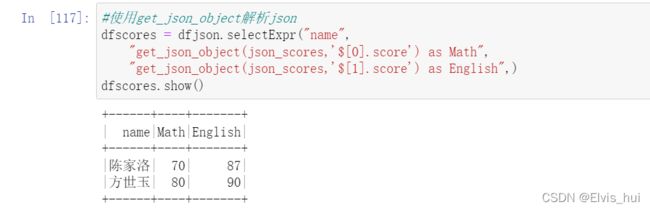

5. Building and parsing JSON data

# to_json (show() returns None, so keep the DataFrame in a variable and call show() separately)
dfjson = dfagg.selectExpr("name","to_json(arr_scores) as json_scores")
dfjson.show()

# get_json_object
dfscores = dfjson.selectExpr("name",
"get_json_object(json_scores,'$[0].score') as Math",
"get_json_object(json_scores,'$[1].score') as English",)

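The path `$[0].score` means "element 0 of the top-level array, then its `score` field". A plain-Python model using the standard `json` module is sketched below. It assumes `json_scores` holds an array of objects with a `score` field, as the paths above imply; the `subject` field and the helper name are illustrative. Spark returns the extracted value as a string, whereas this sketch returns the parsed Python value.

```python
import json

def get_json_value(json_text, index, field):
    """Model of get_json_object(json_text, '$[index].field') for an array of objects."""
    try:
        return json.loads(json_text)[index][field]
    except (ValueError, IndexError, KeyError, TypeError):
        # An invalid document or an unresolved path yields no value
        return None

json_scores = json.dumps([{"subject": "Math", "score": 90},
                          {"subject": "English", "score": 85}])
print(get_json_value(json_scores, 0, "score"))  # 90
print(get_json_value(json_scores, 1, "score"))  # 85
print(get_json_value(json_scores, 2, "score"))  # None
```
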
6.DF与SQL交互操作

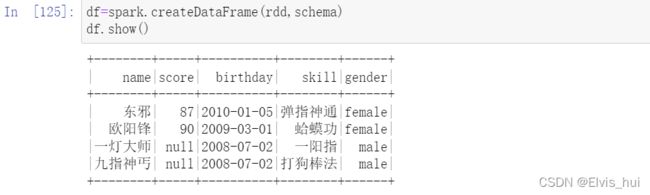

6-1 创建临时表视图

将DF表注册为临时表视图,是的生命周期与Sparksession相关联

因此可以进行sql的一切操作,对hive表进行包括增删改查

from pyspark.sql.types import *

from datetime import datetime

from pyspark.sql import Row

#造数据

schema = StructType([StructField("name", StringType(), nullable = False),

StructField("score", IntegerType(), nullable = True),

StructField("birthday", DateType(), nullable = True),

StructField("skill",StringType(), nullable = False),

StructField("gender",StringType(),nullable = False)])

rdd = sc.parallelize([Row("东邪",87,datetime(2010,1,5),"弹指神通","female"),

Row("欧阳锋",90,datetime(2009,3,1),"蛤蟆功","female"),

Row("一灯大师",None,datetime(2008,7,2),"一阳指","male"),

Row("九指神丐",None,datetime(2008,7,2),"打狗棒法","male"),])

#注册为临时表视图, 其生命周期和SparkSession相关联

df.createOrReplaceTempView("Four")

spark.sql("select * from Four where gender='male'").show()

#注册为全局临时表视图,其生命周期和整个Spark应用程序关联

df.createOrReplaceGlobalTempView("student")

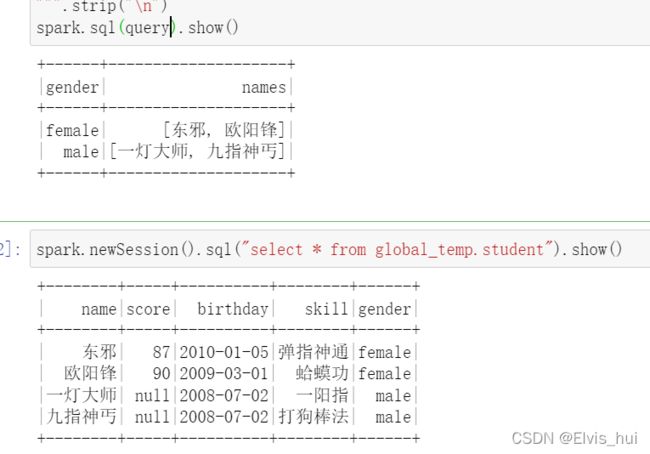

query = """

select t.gender

, collect_list(t.name) as names

from global_temp.student t

group by t.gender

""".strip("\n")

spark.sql(query).show()

#可以在新的Session中访问

spark.newSession().sql("select * from global_temp.student").show()

6-2 Create, read, update, and delete operations on Hive tables

6-2-1 Dropping a Hive table

query = "DROP TABLE IF EXISTS students"
spark.sql(query)

6-2-2 Creating a partitioned Hive table

# (Note: Chinese column names cannot be used as partition columns)
query = """CREATE TABLE IF NOT EXISTS `students`
(`name` STRING COMMENT 'name',
`age` INT COMMENT 'age'
)
PARTITIONED BY ( `class` STRING COMMENT 'class', `gender` STRING COMMENT 'gender')
""".replace("\n"," ")
spark.sql(query)

6-2-3 Dynamically writing data into a partitioned Hive table

# Note: this setting is needed before writing with dynamic partitions
spark.conf.set("hive.exec.dynamic.partition.mode", "nonstrict")

dfstudents = spark.createDataFrame([("LiLei",18,"class1","male"),
("HanMeimei",17,"class2","female"),
("DaChui",19,"class2","male"),
("Lily",17,"class1","female")]).toDF("name","age","class","gender")

# Write with dynamic partitions
dfstudents.write.mode("overwrite").format("hive")\
.partitionBy("class","gender").saveAsTable("students")

6-2-4 Writing to a static partition

# Write into a static partition
dfstudents = spark.createDataFrame([("Jim",18,"class3","male"),
("Tom",19,"class3","male")]).toDF("name","age","class","gender")
dfstudents.createOrReplaceTempView("dfclass3")

# INSERT INTO appends; INSERT OVERWRITE TABLE overwrites the partition
query = """
INSERT OVERWRITE TABLE `students`
PARTITION(class='class3',gender='male')
SELECT name,age from dfclass3
""".replace("\n"," ")
spark.sql(query)

6-2-4 Writing to a mixed partition

# Write into a mixed partition (class is static, gender is dynamic)
dfstudents = spark.createDataFrame([("David",18,"class4","male"),
("Amy",17,"class4","female"),
("Jerry",19,"class4","male"),
("Ann",17,"class4","female")]).toDF("name","age","class","gender")
dfstudents.createOrReplaceTempView("dfclass4")

query = """
INSERT OVERWRITE TABLE `students`
PARTITION(class='class4',gender)
SELECT name,age,gender from dfclass4
""".replace("\n"," ")
spark.sql(query)
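
The static and mixed cases differ only in the PARTITION clause and in whether the dynamic column is appended to the SELECT list. The following sketch builds both statements from the same inputs. `insert_overwrite_sql` is a hypothetical helper that only assembles a string; values are interpolated without escaping, so it is meant for trusted, hard-coded inputs like the ones in these notes.

```python
def insert_overwrite_sql(table, source_view, columns, static_parts=None, dynamic_parts=()):
    """Build an INSERT OVERWRITE statement for static, dynamic, or mixed partitions."""
    static_parts = static_parts or {}
    # Static partitions carry a literal value and come first; dynamic ones are named only
    spec = [f"{name}='{value}'" for name, value in static_parts.items()] + list(dynamic_parts)
    # Dynamic partition columns go last in the SELECT list
    select_cols = list(columns) + list(dynamic_parts)
    return (f"INSERT OVERWRITE TABLE `{table}` "
            f"PARTITION({','.join(spec)}) "
            f"SELECT {','.join(select_cols)} FROM {source_view}")

static_sql = insert_overwrite_sql("students", "dfclass3", ["name", "age"],
                                  {"class": "class3", "gender": "male"})
mixed_sql = insert_overwrite_sql("students", "dfclass4", ["name", "age"],
                                 {"class": "class4"}, ["gender"])
print(static_sql)
print(mixed_sql)

# Usage, assuming `spark` is the active SparkSession and the views exist:
# spark.sql(mixed_sql)
```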

6-2-5 Dropping a partition

query = """
ALTER TABLE `students` DROP IF EXISTS PARTITION(class='class3')
""".replace("\n"," ")
spark.sql(query)