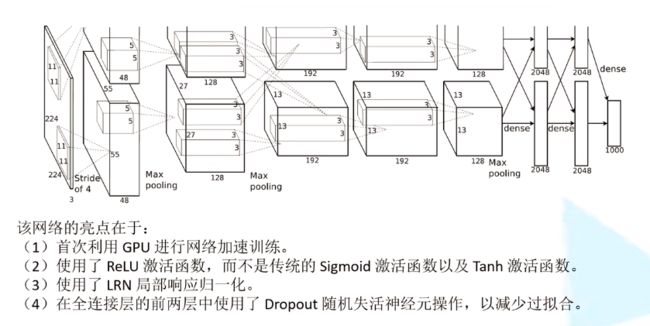

AlexNet网络结构学习

一、网络详解

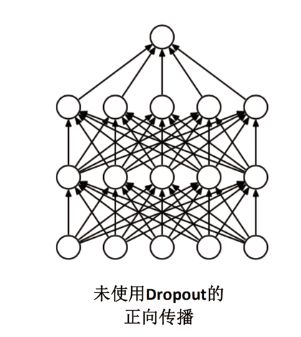

Dropout:

通过随机失活一部分神经元来减小网络训练参数,从而避免过拟合

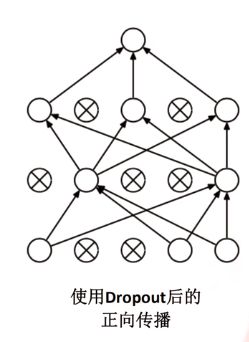

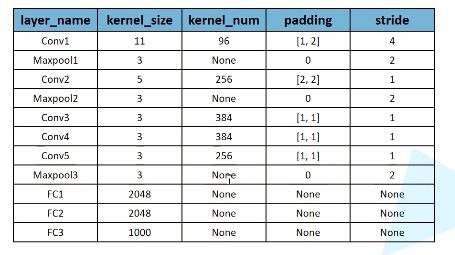

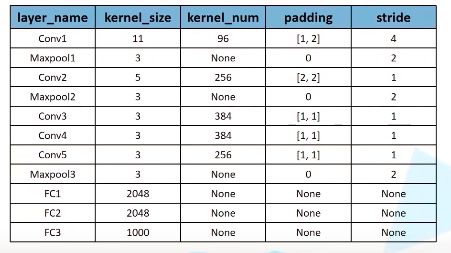

各连接层参数计算

二、网络复现

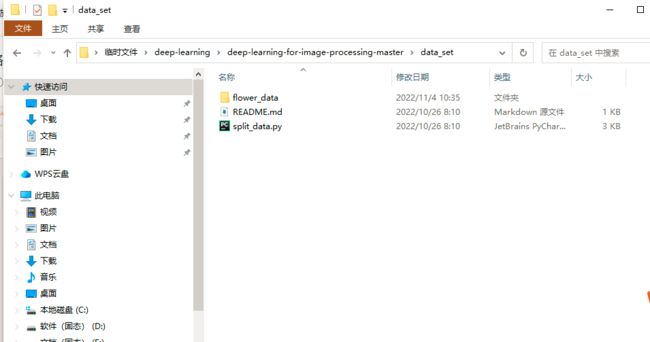

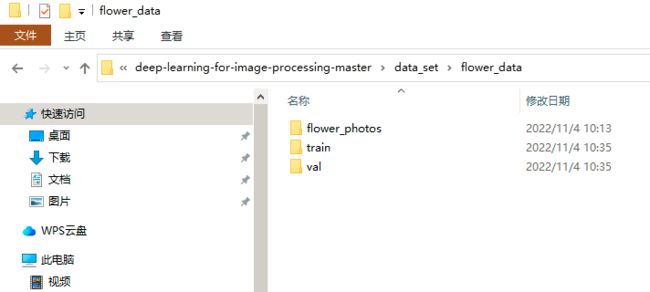

1.数据集分类

在界面按住shift+鼠标右键,点powershell,输入python split_data.py运行。

2.pytorch搭建Alexnet模型

model.py:

网络设置:

self.features = nn.Sequential(

nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2), # input[3, 224, 224] output[48, 55, 55]

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[48, 27, 27]

nn.Conv2d(48, 128, kernel_size=5, padding=2), # output[128, 27, 27]

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[128, 13, 13]

nn.Conv2d(128, 192, kernel_size=3, padding=1), # output[192, 13, 13]

nn.ReLU(inplace=True),

nn.Conv2d(192, 192, kernel_size=3, padding=1), # output[192, 13, 13]

nn.ReLU(inplace=True),

nn.Conv2d(192, 128, kernel_size=3, padding=1), # output[128, 13, 13]

nn.ReLU(inplace=True),

nn.MaxPool2d(kernel_size=3, stride=2), # output[128, 6, 6]

)nn.sequential():将激活函数和网络层封装,实际在forward里才调用激活函数

padding:padding=1:在特征矩阵上下左右各补一行一列0

padding=(1,2):在特征值上下补一行0,左右补两列0

nn.ZeroPad2d[(1,2,1,2)]:在特征值上、左补一行(列)0,右、下补两列(行)0

N算出来不为整数,自动舍弃部分数据

nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2)padding=2:特征矩阵上下左右各补两行列0,N不为整数,则舍弃右下各一列行0,达到nn.ZeroPad2d[(1,2,1,2)]的效果

self.classifier = nn.Sequential(

nn.Dropout(p=0.5),

nn.Linear(128 * 6 * 6, 2048),

nn.ReLU(inplace=True),

nn.Dropout(p=0.5),

nn.Linear(2048, 2048),

nn.ReLU(inplace=True),

nn.Linear(2048, num_classes),

)nn.Dropout定义随机失活率,num_classes为分类类别,在本实验中,对五类花进行分类,num_classes=5

if init_weights:

self._initialize_weights()初始化权重

def _initialize_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

if m.bias is not None:

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight, 0, 0.01)

nn.init.constant_(m.bias, 0)for循环遍历网络层,当为卷积层时,权重采用凯明初始化方法,若为全连接层,采用正态分布进行权重赋值,均值0,方差0.01。

pytorch里默认采用凯明权重初始化,可不加权重初始化部分

def forward(self, x):

x = self.features(x)

x = torch.flatten(x, start_dim=1)

x = self.classifier(x)

return xx为输入变量,经feature网络层,torch.flatten展平成一维向量,tensor类型为(batch,channel,height,weight),start_dim=1从channel开始将channel*height*weight三维展平,后输入全连接层。

train.py

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224),#随机裁剪224*224

transforms.RandomHorizontalFlip(),#水平方向随机翻转 默认p=0.5,一半翻,一半不翻

transforms.ToTensor(),#转换为Tensor数据类型

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]),#三个维度的均值、标准差

"val": transforms.Compose([transforms.Resize((224, 224)), # cannot 224, must (224, 224)

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}训练集和验证集不同的预处理方式

data_root = os.path.abspath(os.path.join(os.getcwd(), "../../..")) # get data root path

os.getcwd()获取当前文件目录

print(os.getcwd())

E:\pycharm\Pycharm_project\pycharm_modelos.path.join(os.getcwd(),''../..'') 返回当前路径的上上级目录

image_path = os.path.join(data_root, "data_set", "flower_data") 获取数据集路径

assert os.path.exists(image_path), "{} path does not exist.".format(image_path)假设路径存在,否则输出“...path does not exist”

train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),#ImageFolder加载数据集

transform=data_transform["train"])ImageFolder加载数据集

ImageFolder(root, transform=None, target_transform=None, loader=default_loader) root:在root指定的路径下寻找图片

transform:对PIL Image进行的转换操作,transform的输入是使用loader读取图片的返回对象

target_transform:对label的转换

loader:给定路径后如何读取图片,默认读取为RGB格式的PIL Image对象

原文链接:https://blog.csdn.net/weixin_39386156/article/details/102686909

DataLoader 数据加载器

dataloader = DataLoader(dataset, batch_size = 2, shuffle=True,collate_fn = mycollate)

dataset:传入数据

shuffle=True/False:是否打乱数据

flower_list = train_dataset.class_to_idx#获取分类对应的索引

#flower_list= {'daisy':0, 'dandelion':1, 'roses':2, 'sunflower':3, 'tulips':4}

# cla_dict ={0: 'daisy', 1: 'dandelion', 2: 'roses', 3: 'sunflowers', 4: 'tulips'}

cla_dict = dict((val, key) for key, val in flower_list.items())#

# write dict into json file

json_str = json.dumps(cla_dict, indent=4)#将python转换城JSON,indent=4为格式化输出,更美观

with open('class_indices.json', 'w') as json_file:

json_file.write(json_str)with open( '...', 'w' ) as f :文件存在则写内容,不存在则创建新文件

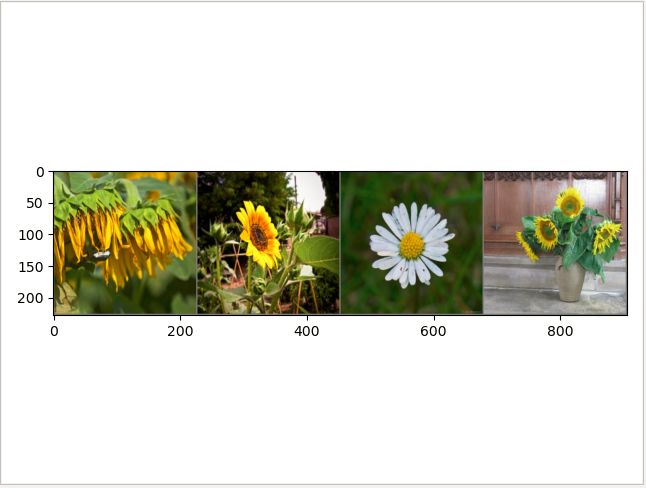

查看数据集:

def imshow(img):

img = img / 2 + 0.5 # unnormalize

npimg = img.numpy()

plt.imshow(np.transpose(npimg, (1, 2, 0)))

plt.show()

print(' '.join('%5s' % cla_dict[test_label[j].item()] for j in range(4)))

imshow(utils.make_grid(test_image))sunflowers sunflowers daisy sunflowers

# train

net.train()

...

# validate

net.eval()

...net.eval(),不启用 BatchNormalization 和 Dropout。此时pytorch会自动把BN和DropOut固定住,不会取平均,而是用训练好的值。

net.train() :启用 BatchNormalization 和 Dropout。 在模型测试阶段使用net.train() 让model变成训练模式,此时 dropout和batch normalization的操作在训练时起到防止网络过拟合的问题。

原文连接:https://blog.csdn.net/qq_46284579/article/details/120439049

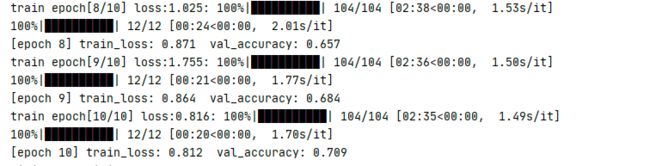

训练结果:

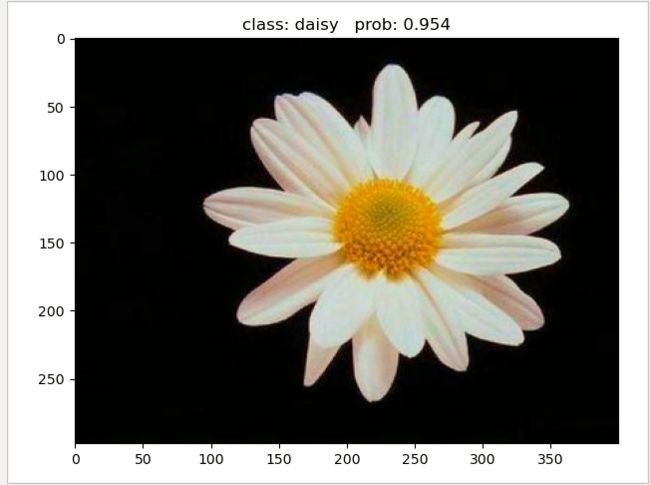

predict.py

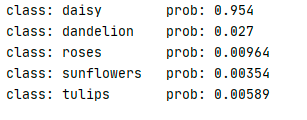

网上下载雏菊图片

运行结果: