Hung-yi Lee Machine Learning Lesson 2: Logistic Regression for Income Prediction


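- Dataset overview
- Loading and standardizing the dataset
- Defining the helper functions
- Training with cross-validation (a small experiment in choosing the penalty coefficient of the loss function)

- Some of the code here comes from Dr. Huang Haiguang's notes on Andrew Ng's machine learning course; thanks to him for such detailed code.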

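Dataset overview

The dataset comes from Kaggle and describes the income of US citizens. The goal of this task is to use multiple features to classify each sample's income into one of two categories (above USD 50k / below USD 50k). The downloaded dataset contains six files.

The first three are the raw data. This task uses the last three, which the teaching assistants have already cleaned from the raw data so that they can be used directly for modeling.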

Loading and standardizing the dataset

import numpy as np
import pandas as pd

X_train_fpath = '/Users/weiyubai/Desktop/学习资料/机器学习相关/李宏毅数据等多个文件/数据/hw2/data/X_train'
Y_train_fpath = '/Users/weiyubai/Desktop/学习资料/机器学习相关/李宏毅数据等多个文件/数据/hw2/data/Y_train'

# Skip the header row, drop the first column, and parse the remaining columns as floats.
with open(X_train_fpath) as f:
    next(f)
    X_train = np.array([line.strip('\n').split(',')[1:] for line in f], dtype=float)

# Skip the header row and keep only the label column.
with open(Y_train_fpath) as f:
    next(f)
    Y_train = np.array([line.strip('\n').split(',')[1] for line in f], dtype=float)

def normalize_feature(df):
    """Feature scaling: standardize each column; columns with zero standard deviation are only centered."""
    return df.apply(lambda column: (column - column.mean()) / (column.std() if column.std() else 1))

X_train_no = normalize_feature(pd.DataFrame(X_train))  # standardize
# Prepend a column of ones to the features to act as the intercept term.
X_train_no = np.concatenate((np.ones((len(X_train), 1)), X_train_no.values), axis=1)

Data loading and preprocessing are now complete. Inspecting the dataset shows that X_train_no is a 54256 × 511 NumPy array.
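As a quick sanity check of the standardization step, here is a minimal sketch on a tiny made-up DataFrame (the column names and values are invented for illustration and are not from the homework data). It reuses the same `normalize_feature` logic and shows that a constant column is left at zero instead of producing a division by zero. Note that pandas' `std()` uses the sample standard deviation (`ddof=1`) by default.

```python
import numpy as np
import pandas as pd

def normalize_feature(df):
    """Standardize each column; zero-variance columns are only centered."""
    return df.apply(
        lambda column: (column - column.mean()) / (column.std() if column.std() else 1)
    )

# Toy data, made up for illustration: the "flag" column is constant.
toy = pd.DataFrame({
    "age": [20.0, 30.0, 40.0],
    "hours": [10.0, 20.0, 60.0],
    "flag": [1.0, 1.0, 1.0],
})
toy_no = normalize_feature(toy)

# Prepend the intercept column, in the same way as for X_train_no.
toy_with_bias = np.concatenate((np.ones((len(toy), 1)), toy_no.values), axis=1)

print(toy_no)               # standardized columns; "flag" becomes all zeros
print(toy_with_bias.shape)  # one extra column for the intercept
```

Every non-constant column ends up with mean 0 and sample standard deviation 1, and the array gains one column for the intercept.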

Defining the helper functions

Next, define the functions needed during gradient descent.
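For reference, the functions defined below correspond to the standard formulas for L2-regularized logistic regression. The notation is introduced here and does not appear in the original post: $m$ is the number of samples, $n$ the number of non-intercept features, $h_\theta(x) = \sigma(\theta^{T}x)$, and $\lambda$ is the argument `l`. The code additionally clips probabilities at `1e-9` before taking the logarithm, which the formulas omit.

```latex
\begin{aligned}
\sigma(z) &= \frac{1}{1 + e^{-z}} \\
J(\theta) &= -\frac{1}{m}\sum_{i=1}^{m}\left[ y^{(i)} \log h_\theta(x^{(i)}) + \left(1 - y^{(i)}\right) \log\left(1 - h_\theta(x^{(i)})\right) \right] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^{2} \\
\frac{\partial J}{\partial \theta_0} &= \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_0^{(i)} \\
\frac{\partial J}{\partial \theta_j} &= \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)} + \frac{\lambda}{m}\theta_j, \qquad j = 1, \dots, n
\end{aligned}
```

The intercept $\theta_0$ is excluded from the penalty, which is why its derivative has no $\lambda$ term.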

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cross_entropy(X, y, theta):
    e = 1e-9  # lower bound on the probabilities so that log never receives 0
    p = sigmoid(X @ theta)
    return np.mean(-y * np.log(np.maximum(p, e)) - (1 - y) * np.log(np.maximum(1 - p, e)))

# Loss function with the penalty term added
def regularized_cost(X, y, theta, l=1):
    """theta_0 (the intercept) is not penalized."""
    theta_j1_to_n = theta[1:]
    regularized_term = (l / (2 * len(X))) * np.power(theta_j1_to_n, 2).sum()
    return cross_entropy(X, y, theta) + regularized_term

def gradient(X, y, theta):
    return (1 / len(X)) * (X.T @ (sigmoid(X @ theta) - y))

# Gradient with the penalty term added
def regularized_gradient(X, y, theta, l=1):
    """Again, theta_0 is left alone."""
    theta_j1_to_n = theta[1:]
    regularized_theta = (l / len(X)) * theta_j1_to_n
    # Prepending a 0 means no penalty is applied to theta_0.
    regularized_term = np.concatenate([np.array([0]), regularized_theta])
    return gradient(X, y, theta) + regularized_term

def predict(x, theta):
    prob = sigmoid(x @ theta)
    return (prob >= 0.5).astype(int)
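One way to gain confidence that `regularized_gradient` really is the derivative of `regularized_cost` is a finite-difference check. The sketch below is not part of the original post: it restates the functions compactly so that it runs on its own, uses random toy data, and introduces a hypothetical helper `numerical_gradient` purely for the check.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cross_entropy(X, y, theta):
    e = 1e-9
    p = sigmoid(X @ theta)
    return np.mean(-y * np.log(np.maximum(p, e)) - (1 - y) * np.log(np.maximum(1 - p, e)))

def regularized_cost(X, y, theta, l=1):
    return cross_entropy(X, y, theta) + (l / (2 * len(X))) * np.power(theta[1:], 2).sum()

def regularized_gradient(X, y, theta, l=1):
    grad = (1 / len(X)) * (X.T @ (sigmoid(X @ theta) - y))
    return grad + np.concatenate([np.array([0]), (l / len(X)) * theta[1:]])

def numerical_gradient(f, theta, h=1e-6):
    """Central finite differences (hypothetical helper, for checking only)."""
    grad = np.zeros_like(theta)
    for j in range(len(theta)):
        step = np.zeros_like(theta)
        step[j] = h
        grad[j] = (f(theta + step) - f(theta - step)) / (2 * h)
    return grad

# Random toy problem: 50 samples, an intercept column plus 3 features.
rng = np.random.default_rng(0)
X = np.concatenate((np.ones((50, 1)), rng.normal(size=(50, 3))), axis=1)
y = (rng.random(50) < 0.5).astype(float)
theta = rng.normal(scale=0.1, size=4)

analytic = regularized_gradient(X, y, theta, l=10)
numeric = numerical_gradient(lambda t: regularized_cost(X, y, t, l=10), theta)
max_diff = np.max(np.abs(analytic - numeric))
print(max_diff)  # should be tiny if gradient and cost agree
```

If the two gradients disagreed noticeably, the usual suspects would be a penalty accidentally applied to `theta[0]` or a mismatch between the `1 / (2m)` factor in the cost and the `1 / m` factor in the gradient.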

Training with cross-validation (a small experiment in choosing the penalty coefficient of the loss function)

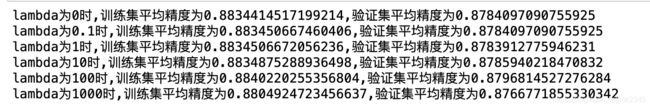

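This section implements the cross-validation model selection that Professor Hung-yi Lee described in one of the early lectures. The loss function with the penalty term is minimized for six different penalty coefficients, each evaluated with 3-fold cross-validation, and the mean accuracy on the training and validation sets is compared.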

from sklearn.model_selection import StratifiedKFold

# Split the data into cross-validation folds and store them
n_splits = 3
trainx_set = dict()
validx_set = dict()
trainy_set = dict()
validy_set = dict()

gkf = StratifiedKFold(n_splits=n_splits).split(X=X_train_no, y=Y_train)
for fold, (train_idx, valid_idx) in enumerate(gkf):
    trainx_set[fold] = X_train_no[train_idx]
    trainy_set[fold] = Y_train[train_idx]
    validx_set[fold] = X_train_no[valid_idx]
    validy_set[fold] = Y_train[valid_idx]

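The cross-validation folds are stored first and then reused directly inside the training loops, which makes mistakes less likely.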
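To illustrate why `StratifiedKFold` is a reasonable choice for an imbalanced label such as income class, here is a small sketch on made-up labels (30 negatives and 15 positives, not the real data). It shows that each validation fold keeps the overall positive rate and that every sample lands in exactly one validation fold.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Toy labels, made up for illustration: one third of the samples are positive.
y_toy = np.array([0] * 30 + [1] * 15)
X_toy = np.arange(len(y_toy)).reshape(-1, 1)

fold_pos_rates = []  # positive rate of each validation fold
seen = []            # indices that appeared in some validation fold
for fold, (train_idx, valid_idx) in enumerate(StratifiedKFold(n_splits=3).split(X_toy, y_toy)):
    fold_pos_rates.append(y_toy[valid_idx].mean())
    seen.extend(valid_idx.tolist())
    print(fold, len(train_idx), len(valid_idx), y_toy[valid_idx].mean())
```

With a plain `KFold` and no shuffling, the same sorted toy labels would give folds that are all negative or all positive, which is the situation stratification avoids.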

# Initialization
lambda_ = [0, 0.1, 1, 10, 100, 1000]
sum_valid_acc = {}
sum_train_acc = {}
sum_train_loss = {}
sum_valid_loss = {}

# Training
for l in lambda_:
    learning_rate = 0.1
    epochs = 1000
    train_loss = {}
    valid_loss = {}
    train_acc = {}
    valid_acc = {}
    for fold in range(n_splits):
        # Start each fold from the same initial parameters.
        theta = np.ones(X_train_no.shape[1])
        train_inputs = trainx_set[fold]
        train_outputs = trainy_set[fold]
        valid_inputs = validx_set[fold]
        valid_outputs = validy_set[fold]
        # One full training run: batch gradient descent on the penalized loss
        for epoch in range(epochs):
            gra = regularized_gradient(train_inputs, train_outputs, theta, l=l)
            theta = theta - learning_rate * gra
        # Accuracy = 1 - mean absolute difference between the 0/1 labels and predictions
        y_pred_train = predict(train_inputs, theta)
        train_acc[fold] = 1 - np.abs(train_outputs - y_pred_train).sum() / len(train_outputs)
        y_pred_valid = predict(valid_inputs, theta)
        valid_acc[fold] = 1 - np.abs(valid_outputs - y_pred_valid).sum() / len(valid_outputs)
        # Evaluate both losses with the final theta so that they are comparable.
        train_loss[fold] = cross_entropy(train_inputs, train_outputs, theta)
        valid_loss[fold] = cross_entropy(valid_inputs, valid_outputs, theta)
        print('Penalty {}: finished training fold {}'.format(l, fold + 1))
    sum_train_loss[l] = [train_loss[fold] for fold in train_loss]
    sum_valid_loss[l] = [valid_loss[fold] for fold in valid_loss]
    sum_valid_acc[l] = [valid_acc[fold] for fold in valid_acc]
    sum_train_acc[l] = [train_acc[fold] for fold in train_acc]
    print("Finished training the model with penalty {}".format(l))

Finally, look at the mean training and validation accuracy under 3-fold cross-validation for each of the six penalty coefficients.

for l in lambda_:
    print('lambda = {}: mean training accuracy {}, mean validation accuracy {}'.format(l, np.mean(sum_train_acc[l]), np.mean(sum_valid_acc[l])))

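That said, the results do not differ much across coefficients, so this is best treated as a small record of the experiment. Overall, validation accuracy is around 87% in every case.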
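If one did want to turn the comparison into an actual choice, a minimal sketch would be to pick the coefficient with the highest mean validation accuracy. The helper `best_lambda` is hypothetical and not from the original post, and the numbers below are placeholders chosen only to exercise the function; they are not results from the experiment above.

```python
import numpy as np

def best_lambda(valid_acc_by_lambda):
    """Return (lambda with the highest mean validation accuracy, all means).

    Ties are resolved in favor of the coefficient that appears first.
    """
    means = {l: float(np.mean(accs)) for l, accs in valid_acc_by_lambda.items()}
    return max(means, key=means.get), means

# Placeholder per-fold accuracies, NOT results from the experiment above.
placeholder = {
    0: [0.50, 0.60, 0.70],
    1: [0.70, 0.70, 0.70],
    10: [0.40, 0.50, 0.60],
}
chosen, means = best_lambda(placeholder)
print(chosen, means)
```

In the post's setting the same call would be `best_lambda(sum_valid_acc)`. When the means are as close as described here, the choice is within noise, and a smaller or simpler model is an equally defensible pick.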