GPU setup for RTX 30-series cards in Linux + Docker containers (tensorflow-gpu==1.15 to 2.x, TensorRT 7, CUDA, cuDNN), with a ResNet-50 model test

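Contents

- Introduction

- 1. Installing tensorflow-gpu==1.15

- Testing the GPU

- Testing a model: benchmarking the installed GPU with ResNet-50

- Verifying TensorRT

- 2. Installing tensorflow-gpu==2.x

- Installation log (appendix)

- References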

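Introduction

Purpose: in past development with tensorflow-gpu, upgrading CUDA or the NVIDIA driver often left the GPU unusable. Drawing on several references, this post shows how to get the GPU working under Ubuntu 18.04 + Docker with just two commands. There are of course many other ways to do this. The appeal of this TensorFlow 1.15 GPU install is that it bundles everything (including CUDA and cuDNN) into a single installation. If you want to understand the environment setup in more detail, you can instead create a conda environment with Python 3.6 and then install the matching CUDA and cuDNN versions yourself. These are just my notes; I hope they help.

Provided the NVIDIA driver is already installed on the host, create the Docker container with the NVIDIA environment passed in: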

-e NVIDIA_VISIBLE_DEVICES=all

For other approaches, see: https://blog.csdn.net/u011622208/article/details/109222643
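As a rough illustration of where the environment variable above goes, the following sketch assembles a complete `docker run` command line. Only `-e NVIDIA_VISIBLE_DEVICES=all` comes from this post. The image name, the container name, and the `--gpus all` flag are assumptions: `--gpus` needs Docker 19.03+ with the NVIDIA Container Toolkit, and older nvidia-docker2 setups typically use `--runtime=nvidia` instead.

```python
import shlex


def docker_run_command(image, name="tf-gpu", visible_devices="all"):
    """Build an argv list for `docker run` that exposes the host GPUs.

    `image` and `name` are placeholders chosen for this sketch; only the
    NVIDIA_VISIBLE_DEVICES variable is taken from the text above.
    """
    return [
        "docker", "run", "-it",
        "--name", name,
        "--gpus", "all",  # assumption: Docker 19.03+ with NVIDIA Container Toolkit
        "-e", "NVIDIA_VISIBLE_DEVICES={}".format(visible_devices),
        image,
        "/bin/bash",
    ]


if __name__ == "__main__":
    # Print a shell-ready command line instead of running it.
    cmd = docker_run_command("ubuntu:18.04")
    print(" ".join(shlex.quote(part) for part in cmd))
```

Printing the command rather than executing it makes it easy to review before pasting it into a terminal.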

1. Installing tensorflow-gpu==1.15

Install the GPU build of TF 1.15 with two commands:

pip install nvidia-pyindex

pip install nvidia-tensorflow

Everything that gets installed:

zipp, typing-extensions, six, numpy, importlib-metadata, dataclasses, cached-property, werkzeug, webencodings, tensorboard, protobuf, nvidia-dali-cuda110, nvidia-cudnn, nvidia-cuda-runtime,

nvidia-cuda-nvrtc, nvidia-cublas, markdown, h5py, grpcio, absl-py, wrapt, termcolor,

tensorflow-estimator, opt-einsum, nvidia-tensorrt, nvidia-tensorboard, nvidia-nccl,

nvidia-dali-nvtf-plugin, nvidia-cusparse, nvidia-cusolver, nvidia-curand, nvidia-cufft,

nvidia-cuda-nvcc, nvidia-cuda-cupti, keras-preprocessing, keras-applications,

google-pasta, gast, astor, nvidia-tensorflow

Testing the GPU

import tensorflow as tf

print(tf.__version__,tf.test.is_gpu_available())

Output:

(py36) root@ovo:/# python

Python 3.6.13 |Anaconda, Inc.| (default, Jun 4 2021, 14:25:59)

[GCC 7.5.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import tensorflow as tf

2022-07-26 15:56:13.313203: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudart.so.11.0

WARNING:tensorflow:Deprecation warnings have been disabled. Set TF_ENABLE_DEPRECATION_WARNINGS=1 to re-enable them.

>>> print(tf.__version__,tf.test.is_gpu_available())

2022-07-26 15:56:23.251414: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 2600000000 Hz

2022-07-26 15:56:23.252011: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x5619f369dd30 initialized for platform Host (this does not guarantee that XLA will be used). Devices:

2022-07-26 15:56:23.252024: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): Host, Default Version

2022-07-26 15:56:23.253610: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcuda.so.1

2022-07-26 15:56:23.346301: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:1082] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2022-07-26 15:56:23.346757: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x5619f347be20 initialized for platform CUDA (this does not guarantee that XLA will be used). Devices:

2022-07-26 15:56:23.346770: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): GeForce GTX 1650 Ti, Compute Capability 7.5

2022-07-26 15:56:23.346976: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:1082] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2022-07-26 15:56:23.362726: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1665] Found device 0 with properties:

name: GeForce GTX 1650 Ti major: 7 minor: 5 memoryClockRate(GHz): 1.485

pciBusID: 0000:01:00.0

2022-07-26 15:56:23.362843: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudart.so.11.0

2022-07-26 15:56:23.364564: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcublas.so.11

2022-07-26 15:56:23.365207: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcufft.so.10

2022-07-26 15:56:23.365465: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcurand.so.10

2022-07-26 15:56:23.367308: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcusolver.so.11

2022-07-26 15:56:23.367752: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcusparse.so.11

2022-07-26 15:56:23.367873: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudnn.so.8

2022-07-26 15:56:23.367983: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:1082] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2022-07-26 15:56:23.368307: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:1082] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2022-07-26 15:56:23.368558: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1793] Adding visible gpu devices: 0

2022-07-26 15:56:23.368579: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudart.so.11.0

2022-07-26 15:56:23.568710: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1206] Device interconnect StreamExecutor with strength 1 edge matrix:

2022-07-26 15:56:23.568734: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1212] 0

2022-07-26 15:56:23.568738: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1225] 0: N

2022-07-26 15:56:23.568988: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:1082] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2022-07-26 15:56:23.569407: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:1082] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2022-07-26 15:56:23.569775: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1351] Created TensorFlow device (/device:GPU:0 with 2429 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1650 Ti, pci bus id: 0000:01:00.0, compute capability: 7.5)

1.15.4 True
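The same check can be wrapped in a small script that also works on TensorFlow 2.x, where `tf.test.is_gpu_available` is deprecated. This is a minimal sketch; the helper name `gpu_available` is made up for illustration, and it assumes `tf.config.list_physical_devices` is present only on newer releases (TF 2.1+), falling back to the call used above otherwise.

```python
def gpu_available(tf):
    """Return True if the TensorFlow module `tf` reports at least one GPU.

    Prefers tf.config.list_physical_devices (newer TF 2.x releases) and
    falls back to tf.test.is_gpu_available, the call used for TF 1.15.
    """
    config = getattr(tf, "config", None)
    list_devices = getattr(config, "list_physical_devices", None)
    if list_devices is not None:
        return len(list_devices("GPU")) > 0
    return bool(tf.test.is_gpu_available())


if __name__ == "__main__":
    try:
        import tensorflow as tf
    except ImportError:
        print("TensorFlow is not installed in this environment")
    else:
        print(tf.__version__, gpu_available(tf))
```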

Testing a model: benchmarking the installed GPU with ResNet-50

Run in a terminal:

wget https://github.com/dbkinghorn/NGC-TF1-nvidia-examples/archive/main/NGC-TF1-nvidia-examples.tar.gz

tar xf NGC-TF1-nvidia-examples.tar.gz

cd NGC-TF1-nvidia-examples-main/cnn/

pip install horovod -i http://pypi.douban.com/simple/ --trusted-host=pypi.douban.com

Install output:

Created wheel for horovod: filename=horovod-0.25.0-cp36-cp36m-linux_x86_64.whl size=10106547 sha256=4ce1b42610c25cd51291d2d54fcb27dee8b77936183de64c38aece04848ee442

Stored in directory: /tmp/pip-ephem-wheel-cache-up8n2r2y/wheels/c8/9d/31/41018b5090938ef06b1365c52453ecd53dbc9aa97e3ac4ac99

Successfully built horovod

Installing collected packages: pycparser, pyyaml, psutil, cloudpickle, cffi, horovod

Successfully installed cffi-1.15.1 cloudpickle-2.1.0 horovod-0.25.0 psutil-5.9.1 pycparser-2.21 pyyaml-6.0

For a single NVIDIA GPU:

python resnet.py --layers=50 --batch_size=64 --precision=fp32

For multiple GPUs (-np 2 for 2 GPUs), make sure the library path for MPI is set (see step 3):

mpiexec --bind-to socket -np 2 python resnet.py --layers=50 --batch_size=128 --precision=fp32
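The two invocations differ only in the `mpiexec` prefix and the batch size, so they can be generated from one helper. This is a sketch only: the function name `benchmark_command` is hypothetical, and the flags are the ones shown in the commands above.

```python
def benchmark_command(num_gpus=1, batch_size=64, precision="fp32", layers=50):
    """Build the resnet.py benchmark argv for one or several GPUs."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    cmd = [
        "python", "resnet.py",
        "--layers={}".format(layers),
        "--batch_size={}".format(batch_size),
        "--precision={}".format(precision),
    ]
    if num_gpus > 1:
        # One MPI process per GPU, mirroring the mpiexec line above.
        cmd = ["mpiexec", "--bind-to", "socket", "-np", str(num_gpus)] + cmd
    return cmd


if __name__ == "__main__":
    print(" ".join(benchmark_command()))
    print(" ".join(benchmark_command(num_gpus=2, batch_size=128)))
```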

resnet.py code:

from __future__ import print_function
from builtins import range

import nvutils
import tensorflow as tf
import argparse

nvutils.init()

default_args = {
    'image_width' : 224,
    'image_height' : 224,
    'image_format' : 'channels_first',
    'distort_color' : False,
    'batch_size' : 256,
    'data_dir' : None,
    'log_dir' : None,
    'export_dir' : None,
    'precision' : 'fp16',
    'momentum' : 0.9,
    'learning_rate_init' : 2.0,
    'learning_rate_power' : 2.0,
    'weight_decay' : 1e-4,
    'loss_scale' : 128.0,
    'larc_eta' : 0.003,
    'larc_mode' : 'clip',
    'num_iter' : 90,
    'iter_unit' : 'epoch',
    'checkpoint_secs' : None,
    'display_every' : 10,
    'use_dali' : None,
}

formatter = argparse.ArgumentDefaultsHelpFormatter
parser = argparse.ArgumentParser(formatter_class=formatter)
parser.add_argument('--layers', default=50, type=int, required=True,
                    choices=[18, 34, 50, 101, 152],
                    help="""Number of resnet layers.""")
args, flags = nvutils.parse_cmdline(default_args, parser)

def resnet_bottleneck_v1(builder, inputs, depth, depth_bottleneck, stride,
                         basic=False):
    if builder.data_format == 'channels_first':
        num_inputs = inputs.get_shape().as_list()[1]
    else:
        num_inputs = inputs.get_shape().as_list()[-1]
    x = inputs
    with tf.name_scope('resnet_v1'):
        if depth == num_inputs:
            if stride == 1:
                shortcut = x
            else:
                shortcut = builder.max_pooling2d(x, 1, stride)
        else:
            shortcut_depth = depth_bottleneck if basic else depth
            shortcut = builder.conv2d_linear(x, shortcut_depth, 1, stride, 'SAME')
        if basic:
            x = builder.pad2d(x, 1)
            x = builder.conv2d(x, depth_bottleneck, 3, stride, 'VALID')
            x = builder.conv2d_linear(x, depth_bottleneck, 3, 1, 'SAME')
        else:
            x = builder.conv2d(x, depth_bottleneck, 1, stride, 'SAME')
            x = builder.conv2d(x, depth_bottleneck, 3, 1, 'SAME')
            x = builder.conv2d_linear(x, depth, 1, 1, 'SAME')
        x = tf.nn.relu(x + shortcut)
        return x

def inference_resnet_v1_impl(builder, inputs, layer_counts, basic=False):
    x = inputs
    x = builder.pad2d(x, 3)
    x = builder.conv2d(x, 64, 7, 2, 'VALID')
    x = builder.max_pooling2d(x, 3, 2, 'SAME')
    for i in range(layer_counts[0]):
        x = resnet_bottleneck_v1(builder, x, 256, 64, 1, basic)
    for i in range(layer_counts[1]):
        x = resnet_bottleneck_v1(builder, x, 512, 128, 2 if i==0 else 1, basic)
    for i in range(layer_counts[2]):
        x = resnet_bottleneck_v1(builder, x, 1024, 256, 2 if i==0 else 1, basic)
    for i in range(layer_counts[3]):
        x = resnet_bottleneck_v1(builder, x, 2048, 512, 2 if i==0 else 1, basic)
    return builder.spatial_average2d(x)

def resnet_v1(inputs, training=False):
    """Deep Residual Networks family of models
    https://arxiv.org/abs/1512.03385
    """
    builder = nvutils.LayerBuilder(tf.nn.relu, args['image_format'], training, use_batch_norm=True)
    if flags.layers == 18: return inference_resnet_v1_impl(builder, inputs, [2,2, 2,2], basic=True)
    elif flags.layers == 34: return inference_resnet_v1_impl(builder, inputs, [3,4, 6,3], basic=True)
    elif flags.layers == 50: return inference_resnet_v1_impl(builder, inputs, [3,4, 6,3])
    elif flags.layers == 101: return inference_resnet_v1_impl(builder, inputs, [3,4,23,3])
    elif flags.layers == 152: return inference_resnet_v1_impl(builder, inputs, [3,8,36,3])
    else: raise ValueError("Invalid layer count (%i); must be one of: 18,34,50,101,152" %
                           flags.layers)

if args['predict']:
    if args['log_dir'] is not None and args['data_dir'] is not None:
        nvutils.predict(resnet_v1, args)
else:
    nvutils.train(resnet_v1, args)
    if args['log_dir'] is not None and args['data_dir'] is not None:
        nvutils.validate(resnet_v1, args)
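The block-count lists passed to `inference_resnet_v1_impl` determine the nominal network depth. As a worked check (a sketch, with the helper name `resnet_depth` invented here), each basic block contributes 2 convolutions and each bottleneck block 3, and the stem convolution plus the final classifier layer add 2 more, following the usual ResNet layer-counting convention:

```python
def resnet_depth(layer_counts, basic=False):
    """Count weighted layers in a ResNet v1 built from the given block counts.

    Each basic block has 2 convolutions and each bottleneck block has 3;
    the stem convolution and the final classifier layer add 2 more.
    """
    convs_per_block = 2 if basic else 3
    return convs_per_block * sum(layer_counts) + 2


if __name__ == "__main__":
    # Same block counts as the branches of resnet_v1 in the script.
    print(resnet_depth([2, 2, 2, 2], basic=True))   # 18
    print(resnet_depth([3, 4, 6, 3], basic=True))   # 34
    print(resnet_depth([3, 4, 6, 3]))               # 50
    print(resnet_depth([3, 4, 23, 3]))              # 101
    print(resnet_depth([3, 8, 36, 3]))              # 152
```

This explains why ResNet-34 and ResNet-50 share the list `[3, 4, 6, 3]`: only the block type differs.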

Other notes:

For Python 3.6.13:

pip uninstall nvidia-index

pip install nvidia-pyindex

pip install nvidia-tensorflow

For Python 3.8:

pip install nvidia-tensorflow[horovod]

pip install nvidia-tensorboard==1.15

Verifying TensorRT

>>> import tensorrt

>>> tensorrt.__version__

'7.2.1.6'
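To check several packages at once without an interactive session, a small helper can import each module and report its `__version__`. This is a minimal sketch; `module_version` is a name made up for this example, and it assumes the packages expose a `__version__` attribute, as `tensorflow` and `tensorrt` do in the sessions shown above.

```python
import importlib


def module_version(name):
    """Return the module's __version__ string, or None if it cannot be imported."""
    try:
        module = importlib.import_module(name)
    except ImportError:
        return None
    # Some modules do not define __version__; report that explicitly.
    return getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    for name in ("tensorflow", "tensorrt"):
        print(name, module_version(name))
```

A result of `None` means the import itself failed, which usually points to a missing package or the wrong environment being active.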

2. Installing tensorflow-gpu==2.x

where x = 0, 1, 2, 3, 4, 5, 6, 7, ...

To list the available versions of a package with conda and pip:

conda search package-name

pip index versions package-name

Example: install cuda=11.3.1 and cudnn=8.2.1 with conda first, then install tensorflow-gpu=2.6.5.

Reference links are at the end of the article.

conda install cuda==11.3.1

conda install cudnn==8.2.1

pip install tensorflow-gpu==2.6.5 -i https://pypi.douban.com/simple
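After installing, it can help to compare the CUDA and cuDNN versions TensorFlow was built against with the ones installed through conda. The sketch below relies on `tf.sysconfig.get_build_info()`, which, as far as I know, is available from TF 2.3 and returns a dict with keys such as `is_cuda_build`, `cuda_version` and `cudnn_version`. The helper name `cuda_build_matches` is hypothetical, and comparing only major versions is a coarse heuristic, not a guarantee of compatibility.

```python
def cuda_build_matches(build_info, cuda_version, cudnn_version):
    """Compare TensorFlow's build-time CUDA/cuDNN major versions with installed ones."""
    def major(version):
        return str(version).split(".")[0]

    if not build_info.get("is_cuda_build", False):
        return False  # CPU-only build: no CUDA toolkit will make it use the GPU
    return (major(build_info.get("cuda_version", "")) == major(cuda_version)
            and major(build_info.get("cudnn_version", "")) == major(cudnn_version))


if __name__ == "__main__":
    try:
        import tensorflow as tf
        info = tf.sysconfig.get_build_info()  # assumption: TF 2.3 or newer
    except (ImportError, AttributeError):
        print("TensorFlow 2.3+ is not available in this environment")
    else:
        print(dict(info))
        # Versions from the conda example above.
        print(cuda_build_matches(info, "11.3.1", "8.2.1"))
```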

Installation log (appendix)

pip install nvidia-pyindex

Collecting nvidia-pyindex

Downloading nvidia-pyindex-1.0.9.tar.gz (10 kB)

Building wheels for collected packages: nvidia-pyindex

Building wheel for nvidia-pyindex (setup.py) ... done

Created wheel for nvidia-pyindex: filename=nvidia_pyindex-1.0.9-py3-none-any.whl size=8416 sha256=2706ce8622b87a6f2ad631f5bd4a1be52e8a9054e600da276146cf3fa369f838

Stored in directory: /root/.cache/pip/wheels/1a/79/65/9cb980b5f481843cd9896e1579abc1c1f608b5f9e60ca90e03

Successfully built nvidia-pyindex

Installing collected packages: nvidia-pyindex

Successfully installed nvidia-pyindex-1.0.9

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

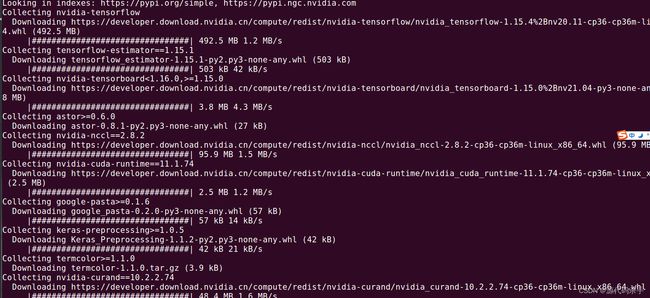

(py36) root@ovo:/# pip install nvidia-tensorflow

Looking in indexes: https://pypi.org/simple, https://pypi.ngc.nvidia.com

Collecting nvidia-tensorflow

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-tensorflow/nvidia_tensorflow-1.15.4%2Bnv20.11-cp36-cp36m-linux_x86_64.whl (492.5 MB)

|################################| 492.5 MB 1.2 MB/s

Collecting tensorflow-estimator==1.15.1

Downloading tensorflow_estimator-1.15.1-py2.py3-none-any.whl (503 kB)

|################################| 503 kB 42 kB/s

Collecting nvidia-tensorboard<1.16.0,>=1.15.0

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-tensorboard/nvidia_tensorboard-1.15.0%2Bnv21.04-py3-none-any.whl (3.8 MB)

|################################| 3.8 MB 4.3 MB/s

Collecting astor>=0.6.0

Downloading astor-0.8.1-py2.py3-none-any.whl (27 kB)

Collecting nvidia-nccl==2.8.2

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-nccl/nvidia_nccl-2.8.2-cp36-cp36m-linux_x86_64.whl (95.9 MB)

|################################| 95.9 MB 1.5 MB/s

Collecting nvidia-cuda-runtime==11.1.74

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-cuda-runtime/nvidia_cuda_runtime-11.1.74-cp36-cp36m-linux_x86_64.whl (2.5 MB)

|################################| 2.5 MB 1.2 MB/s

Collecting google-pasta>=0.1.6

Downloading google_pasta-0.2.0-py3-none-any.whl (57 kB)

|################################| 57 kB 14 kB/s

Collecting keras-preprocessing>=1.0.5

Downloading Keras_Preprocessing-1.1.2-py2.py3-none-any.whl (42 kB)

|################################| 42 kB 21 kB/s

Collecting termcolor>=1.1.0

Downloading termcolor-1.1.0.tar.gz (3.9 kB)

Collecting nvidia-curand==10.2.2.74

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-curand/nvidia_curand-10.2.2.74-cp36-cp36m-linux_x86_64.whl (48.4 MB)

|################################| 48.4 MB 1.6 MB/s

Collecting opt-einsum>=2.3.2

Downloading opt_einsum-3.3.0-py3-none-any.whl (65 kB)

|################################| 65 kB 21 kB/s

Collecting gast==0.2.2

Downloading gast-0.2.2.tar.gz (10 kB)

Collecting nvidia-cudnn==8.0.4.30

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-cudnn/nvidia_cudnn-8.0.4.30-cp36-cp36m-linux_x86_64.whl (847.5 MB)

|################################| 847.5 MB 5.3 MB/s

Collecting nvidia-cuda-cupti==11.1.69

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-cuda-cupti/nvidia_cuda_cupti-11.1.69-cp36-cp36m-linux_x86_64.whl (8.2 MB)

|################################| 8.2 MB 2.6 MB/s

Collecting keras-applications>=1.0.8

Downloading Keras_Applications-1.0.8-py3-none-any.whl (50 kB)

|################################| 50 kB 16 kB/s

Collecting six>=1.10.0

Downloading six-1.16.0-py2.py3-none-any.whl (11 kB)

Requirement already satisfied: wheel>=0.26 in /opt/conda/envs/py36/lib/python3.6/site-packages (from nvidia-tensorflow) (0.37.1)

Collecting grpcio>=1.8.6

Downloading grpcio-1.47.0-cp36-cp36m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (4.5 MB)

|################################| 4.5 MB 22 kB/s

Collecting numpy<1.19.0,>=1.16.0

Downloading numpy-1.18.5-cp36-cp36m-manylinux1_x86_64.whl (20.1 MB)

|################################| 20.1 MB 26 kB/s

WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='pypi.org', port=443): Read timed out. (read timeout=15)",)': /simple/nvidia-cufft/

Collecting nvidia-cufft==10.3.0.74

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-cufft/nvidia_cufft-10.3.0.74-cp36-cp36m-linux_x86_64.whl (168.4 MB)

|################################| 168.4 MB 2.4 MB/s

Collecting protobuf>=3.6.1

Downloading protobuf-3.19.4-cp36-cp36m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (1.1 MB)

|################################| 1.1 MB 13 kB/s

Collecting nvidia-dali-nvtf-plugin==0.27.0+nv20.11

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-dali-nvtf-plugin/nvidia_dali_nvtf_plugin-0.27.0%2Bnv20.11-cp36-cp36m-linux_x86_64.whl (65 kB)

|################################| 65 kB 3.0 MB/s

Collecting nvidia-cuda-nvcc==11.1.74

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-cuda-nvcc/nvidia_cuda_nvcc-11.1.74-cp36-cp36m-linux_x86_64.whl (11.7 MB)

|################################| 11.7 MB 1.3 MB/s

Collecting nvidia-tensorboard<1.16.0,>=1.15.0

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-tensorboard/nvidia_tensorboard-1.15.0%2Bnv20.11-py3-none-any.whl (3.8 MB)

|################################| 3.8 MB 3.1 MB/s

Collecting absl-py>=0.7.0

Downloading absl_py-1.2.0-py3-none-any.whl (123 kB)

|################################| 123 kB 24 kB/s

Collecting wrapt>=1.11.1

Downloading wrapt-1.14.1-cp36-cp36m-manylinux_2_5_x86_64.manylinux1_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl (74 kB)

|################################| 74 kB 28 kB/s

Collecting nvidia-cusparse==11.2.0.275

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-cusparse/nvidia_cusparse-11.2.0.275-cp36-cp36m-linux_x86_64.whl (159.5 MB)

|################################| 159.5 MB 4.8 MB/s

WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='pypi.ngc.nvidia.com', port=443): Read timed out. (read timeout=15)",)': /nvidia-cublas/

Collecting nvidia-cublas==11.2.1.74

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-cublas/nvidia_cublas-11.2.1.74-cp36-cp36m-linux_x86_64.whl (231.4 MB)

|################################| 231.4 MB 2.0 MB/s

Collecting nvidia-tensorrt==7.2.1.6

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-tensorrt/nvidia_tensorrt-7.2.1.6-cp36-none-linux_x86_64.whl (263.7 MB)

|################################| 263.7 MB 632 kB/s

Collecting nvidia-cusolver==11.0.0.74

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-cusolver/nvidia_cusolver-11.0.0.74-cp36-cp36m-linux_x86_64.whl (612.1 MB)

|################################| 612.1 MB 3.0 MB/s

Requirement already satisfied: setuptools in /opt/conda/envs/py36/lib/python3.6/site-packages (from nvidia-cublas==11.2.1.74->nvidia-tensorflow) (58.0.4)

WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='pypi.org', port=443): Read timed out. (read timeout=15)",)': /simple/nvidia-dali-cuda110/

Collecting nvidia-dali-cuda110==0.27.0

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-dali-cuda110/nvidia_dali_cuda110-0.27.0-1699648-py3-none-manylinux2014_x86_64.whl (390.2 MB)

|################################| 390.2 MB 1.9 MB/s

Collecting tensorboard@ https://pypi.ngc.nvidia.com/tensorboard/tensorboard-1.15.0-py2.py3-none-any.whl

Downloading https://pypi.ngc.nvidia.com/tensorboard/tensorboard-1.15.0-py2.py3-none-any.whl (1.6 kB)

Collecting webencodings

WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='files.pythonhosted.org', port=443): Read timed out. (read timeout=15)",)': /packages/f4/24/2a3e3df732393fed8b3ebf2ec078f05546de641fe1b667ee316ec1dcf3b7/webencodings-0.5.1-py2.py3-none-any.whl

Downloading webencodings-0.5.1-py2.py3-none-any.whl (11 kB)

Collecting markdown>=2.6.8

Downloading Markdown-3.3.7-py3-none-any.whl (97 kB)

|################################| 97 kB 126 kB/s

Collecting werkzeug>=0.11.15

Downloading Werkzeug-2.0.3-py3-none-any.whl (289 kB)

|################################| 289 kB 38 kB/s

Collecting nvidia-cuda-nvrtc<11.2,>=11.1

Downloading https://developer.download.nvidia.cn/compute/redist/nvidia-cuda-nvrtc/nvidia_cuda_nvrtc-11.1.105-py3-none-manylinux1_x86_64.whl (15.0 MB)

|################################| 15.0 MB 414 kB/s

Collecting h5py

Downloading h5py-3.1.0-cp36-cp36m-manylinux1_x86_64.whl (4.0 MB)

|################################| 4.0 MB 20 kB/s

Collecting importlib-metadata>=4.4

Downloading importlib_metadata-4.8.3-py3-none-any.whl (17 kB)

Collecting zipp>=0.5

Downloading zipp-3.6.0-py3-none-any.whl (5.3 kB)

Collecting typing-extensions>=3.6.4

Downloading typing_extensions-4.1.1-py3-none-any.whl (26 kB)

Collecting dataclasses

Downloading dataclasses-0.8-py3-none-any.whl (19 kB)

Collecting cached-property

Downloading cached_property-1.5.2-py2.py3-none-any.whl (7.6 kB)

Building wheels for collected packages: gast, termcolor

Building wheel for gast (setup.py) ... done

Created wheel for gast: filename=gast-0.2.2-py3-none-any.whl size=7554 sha256=dfe9a3c3a32f2900aa90723c7decbe010aa12fd65083072758fc741997ff5a3f

Stored in directory: /tmp/pip-ephem-wheel-cache-jun34h70/wheels/19/a7/b9/0740c7a3a7d1d348f04823339274b90de25fbcd217b2ee1fbe

Building wheel for termcolor (setup.py) ... done

Created wheel for termcolor: filename=termcolor-1.1.0-py3-none-any.whl size=4848 sha256=03d0696175d078bf5b49e52d4bc29a8edc4be696795444e1f43129f3b2bb31b0

Stored in directory: /tmp/pip-ephem-wheel-cache-jun34h70/wheels/93/2a/eb/e58dbcbc963549ee4f065ff80a59f274cc7210b6eab962acdc

Successfully built gast termcolor

Installing collected packages: zipp, typing-extensions, six, numpy, importlib-metadata, dataclasses, cached-property, werkzeug, webencodings, tensorboard, protobuf, nvidia-dali-cuda110, nvidia-cudnn, nvidia-cuda-runtime, nvidia-cuda-nvrtc, nvidia-cublas, markdown, h5py, grpcio, absl-py, wrapt, termcolor, tensorflow-estimator, opt-einsum, nvidia-tensorrt, nvidia-tensorboard, nvidia-nccl, nvidia-dali-nvtf-plugin, nvidia-cusparse, nvidia-cusolver, nvidia-curand, nvidia-cufft, nvidia-cuda-nvcc, nvidia-cuda-cupti, keras-preprocessing, keras-applications, google-pasta, gast, astor, nvidia-tensorflow

Successfully installed absl-py-1.2.0 astor-0.8.1 cached-property-1.5.2 dataclasses-0.8 gast-0.2.2 google-pasta-0.2.0 grpcio-1.47.0 h5py-3.1.0 importlib-metadata-4.8.3 keras-applications-1.0.8 keras-preprocessing-1.1.2 markdown-3.3.7 numpy-1.18.5 nvidia-cublas-11.2.1.74 nvidia-cuda-cupti-11.1.69 nvidia-cuda-nvcc-11.1.74 nvidia-cuda-nvrtc-11.1.105 nvidia-cuda-runtime-11.1.74 nvidia-cudnn-8.0.4.30 nvidia-cufft-10.3.0.74 nvidia-curand-10.2.2.74 nvidia-cusolver-11.0.0.74 nvidia-cusparse-11.2.0.275 nvidia-dali-cuda110-0.27.0 nvidia-dali-nvtf-plugin-0.27.0+nv20.11 nvidia-nccl-2.8.2 nvidia-tensorboard-1.15.0+nv20.11 nvidia-tensorflow-1.15.4+nv20.11 nvidia-tensorrt-7.2.1.6 opt-einsum-3.3.0 protobuf-3.19.4 six-1.16.0 tensorboard-1.15.0 tensorflow-estimator-1.15.1 termcolor-1.1.0 typing-extensions-4.1.1 webencodings-0.5.1 werkzeug-2.0.3 wrapt-1.14.1 zipp-3.6.0
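The final `Successfully installed` line is the quickest place to read off which CUDA, cuDNN and TensorRT versions the wheel pulled in. As a small sketch (the function name `parse_installed` is made up here), it can be split into a name-to-version mapping by cutting each entry at its last hyphen:

```python
def parse_installed(line):
    """Map package name -> version from a pip 'Successfully installed ...' line."""
    prefix = "Successfully installed "
    if not line.startswith(prefix):
        raise ValueError("not a 'Successfully installed' line")
    versions = {}
    for item in line[len(prefix):].split():
        # pip prints entries as <name>-<version>; names may contain hyphens.
        name, _, version = item.rpartition("-")
        versions[name] = version
    return versions


if __name__ == "__main__":
    sample = ("Successfully installed nvidia-cudnn-8.0.4.30 "
              "nvidia-cuda-runtime-11.1.74 nvidia-tensorrt-7.2.1.6 "
              "nvidia-tensorflow-1.15.4+nv20.11")
    for name, version in sorted(parse_installed(sample).items()):
        print(name, version)
```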

References

https://blog.csdn.net/zhaoyi40233relevant_index=4

https://blog.csdn.net/weixin_41631106/article/details/125918998?spm=1001.2014.3001.5506

https://blog.csdn.net/wu496963386/article/details/109583045?spm=1001.2014.3001.5506

https://blog.csdn.net/mygugu/article/details/123503334

Install from the command line, without downloading CUDA:

sudo apt install libnccl2=2.4.8-1+cuda10.0 libnccl-dev=2.4.8-1+cuda10.0

See: https://blog.csdn.net/mygugu/article/details/123503334

https://blog.csdn.net/TFATS/article/details/120026833

Horovod (from Uber) can greatly simplify multi-GPU training:

https://blog.csdn.net/qianshuqinghan/article/details/105312784

GPU in a virtual machine with an RTX 3070: https://blog.csdn.net/weixin_47123600/article/details/116995978

Tensorflow 1.15 + CUDA + cuDNN installation using Conda:https://stackoverflow.com/questions/64811841/tensorflow-1-15-cuda-cudnn-installation-using-conda

https://fmorenovr.medium.com/install-conda-and-set-up-a-tensorflow-1-15-cuda-10-0-environment-on-ubuntu-windows-2a18097e6a98

Installing TensorFlow 1.15 on NVIDIA RTX30 GPUs