2D TCN: complete PyTorch implementation and syntax notes

Implementing T-GCN in PyTorch

- Reference code

- Complete 2D TCN implementation

- Related syntax

- Tensor.contiguous()

- torch.transpose() vs. torch.permute()

- Basic model experiments

- 1. nn.Conv1d(in_channels, out_channels, kernel_size, padding)

- 2. nn.Conv2d(in_channels, out_channels, kernel_size, padding)

Reference code

TCN (Temporal Convolutional Network) was proposed by Shaojie Bai et al.:

https://arxiv.org/pdf/1803.01271.pdf

The original code is on GitHub: https://github.com/locuslab/TCN

Complete 2D TCN implementation

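```python
import torch
import torch.nn as nn
from torch.nn.utils import weight_norm


class delect_padding(nn.Module):
    def __init__(self, chomp_size):
        super(delect_padding, self).__init__()
        self.chomp_size = chomp_size

    def forward(self, x):
        """
        Just a cropping module: removes the extra padding at the end of the W dimension.
        """
        if self.chomp_size == 0:
            return x  # x[..., :-0] would return an empty tensor
        return x[:, :, :, :-self.chomp_size].contiguous()


class TCN(nn.Module):
    # Input:  (B, C1, H, W)
    # Output: (B, C2, H, W-1)
    # The output shape along W is controlled by padding: after the (1, kernel_size) conv and the
    # crop, W_out = W + padding - kernel_size + 1. I use kernel_size=3, padding=1, so W becomes W-1.
    def __init__(self, n_inputs, n_outputs, kernel_size, padding, dropout=0.2):
        """
        :param n_inputs: int, number of input channels
        :param n_outputs: int, number of output channels
        :param kernel_size: int, kernel size along W
        :param padding: int, padding along W (also the number of steps cropped off the end)
        :param dropout: float, dropout rate
        """
        super(TCN, self).__init__()
        conv = nn.Conv2d(n_inputs, n_outputs, (1, kernel_size), padding=(0, padding))
        # weight_norm recomputes conv.weight from weight_g / weight_v before every forward,
        # so initialize before wrapping; otherwise the init is overwritten
        self.init_weights(conv)
        self.conv1 = weight_norm(conv)
        self.delect_pad = delect_padding(padding)
        self.relu1 = nn.ReLU()
        self.dropout1 = nn.Dropout(dropout)
        self.net = nn.Sequential(self.conv1, self.delect_pad, self.relu1, self.dropout1)
        # There is no residual branch here, so this second ReLU is a no-op;
        # in the original TCN TemporalBlock it is applied to (out + residual)
        self.relu = nn.ReLU()

    def init_weights(self, conv):
        """
        Parameter initialization: conv weights ~ N(0, 0.01).
        """
        conv.weight.data.normal_(0, 0.01)

    def forward(self, x):
        out = self.net(x)
        return self.relu(out)
```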
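To check the shape comment in the class without building the full module, here is a minimal sketch that repeats only the conv + crop arithmetic. `conv_then_chomp` is a hypothetical helper (no weight_norm, ReLU or dropout, which do not change shapes), and the sizes B=8, C1=2, H=5, W=12 are arbitrary. Along W the result is W + padding - kernel_size + 1, so padding=1 gives W-1, while padding = kernel_size - 1 (the choice in the original locuslab TCN, with dilation 1) keeps W unchanged and makes the convolution causal.

```python
import torch
import torch.nn as nn


def conv_then_chomp(x, out_channels, kernel_size, padding):
    # hypothetical helper: (1, k) conv along W, then crop `padding` steps off the end of W
    conv = nn.Conv2d(x.shape[1], out_channels, (1, kernel_size), padding=(0, padding))
    y = conv(x)
    return y[:, :, :, :-padding] if padding > 0 else y


x = torch.randn(8, 2, 5, 12)  # (B, C1, H, W)
y_shrink = conv_then_chomp(x, 16, kernel_size=3, padding=1)  # W: 12 -> 12 -> 11
y_causal = conv_then_chomp(x, 16, kernel_size=3, padding=2)  # W: 12 -> 14 -> 12
print(y_shrink.shape)  # torch.Size([8, 16, 5, 11])
print(y_causal.shape)  # torch.Size([8, 16, 5, 12])
```

H is never touched because the kernel height is 1 and the H padding is 0.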

Related syntax

Tensor.contiguous()

Tensor.contiguous(memory_format=torch.contiguous_format)

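Returns a tensor with the same data laid out contiguously in memory (if the tensor is already contiguous, it is returned as-is). Typically used after transpose/permute and before view.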
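A small check of this, using an arbitrary 2x3 tensor: after `.t()` the result is a non-contiguous view, so `.view()` raises a `RuntimeError`, while `.contiguous().view()` works because it first copies the data into row-major order.

```python
import torch

x = torch.arange(6).reshape(2, 3)  # contiguous, strides (3, 1)
y = x.t()                          # transposed view: shape (3, 2), strides (1, 3)
print(y.is_contiguous())           # False

view_failed = False
try:
    y.view(6)                      # view needs a memory layout compatible with the new shape
except RuntimeError:
    view_failed = True
print(view_failed)                 # True

z = y.contiguous().view(6)         # copy into contiguous memory, then view works
print(z)                           # tensor([0, 3, 1, 4, 2, 5])
```

`y.reshape(6)` would also work here; it copies only when a view is impossible.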

torch.transpose() vs. torch.permute()

a=torch.tensor([[[[0,1,2],[2,3,4]],

[[1,1,1],[4,3,1]],

[[2,1,1],[2,2,2]],

[[1,3,1],[2,1,1]]]])#shape=(1,4,2,3)

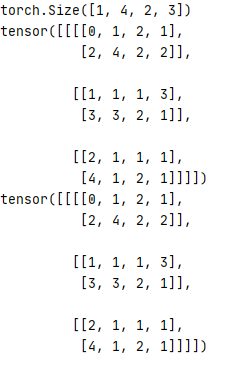

print(a.shape)

test_x_1=a.transpose(1,3)#shape=(1,3,2,4)

test_x_2 = a.permute(0,3,2,1) #shape=(1,3,2,4)

print(test_x_1)

print(test_x_2)

#好像...在这里没有区别
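A minimal sketch of where the two differ, using a random tensor with the same shape (1, 4, 2, 3): reordering (B, C, H, W) to (B, H, W, C) takes a single permute, but two chained transposes. The variable names are illustrative only.

```python
import torch

a = torch.randn(1, 4, 2, 3)  # (B, C, H, W)

# Case 1: a single swap of dims 1 and 3, so both calls agree
t1 = a.transpose(1, 3)
t2 = a.permute(0, 3, 2, 1)
print(torch.equal(t1, t2))  # True

# Case 2: (B, C, H, W) -> (B, H, W, C) is a cyclic reorder
p = a.permute(0, 2, 3, 1)                  # one call, shape (1, 2, 3, 4)
q = a.transpose(1, 2).transpose(2, 3)      # needs two swaps to get the same thing
print(p.shape, torch.equal(p, q))          # torch.Size([1, 2, 3, 4]) True

# Both are views on the same storage and not contiguous
print(p.data_ptr() == a.data_ptr(), p.is_contiguous())  # True False
```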

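Basic model experiments

1. nn.Conv1d(in_channels, out_channels, kernel_size, padding)

Input: [N, C1, H]

Output: [N, C2, H_out], where H_out = H + 2 * padding - kernel_size + 1 (stride 1, dilation 1)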

```python
import torch
import torch.nn as nn

# nn.Conv1d(in_channels, out_channels, kernel_size, padding)
test_x = torch.randn(16, 2, 6)  # (B, F1, T1)
text = nn.Conv1d(2, 16, 3, padding=0)
text_y = text(test_x)  # (16, 16, 4) = (B, F2, T2)
```

Plain 1D-CNN experiment: with kernel_size=3 and padding=0, the output loses 2 time steps (T1 = 6 becomes T2 = 4).
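To see how padding changes the output length, the sketch below runs the same Conv1d setup with padding 0, 1 and 2 and compares against the output-length formula from the nn.Conv1d documentation (`conv1d_out_len` is a hypothetical helper). It then shows the causal trick the TCN block relies on: pad kernel_size - 1 = 2 steps and crop the last 2, so each output step only sees current and past inputs.

```python
import torch
import torch.nn as nn


def conv1d_out_len(L, kernel_size, padding, stride=1, dilation=1):
    # output-length formula from the nn.Conv1d documentation
    return (L + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


x = torch.randn(16, 2, 6)  # (B, F1, T1)
lengths = {}
for p in (0, 1, 2):
    y = nn.Conv1d(2, 16, 3, padding=p)(x)
    assert y.shape[-1] == conv1d_out_len(6, 3, p)
    lengths[p] = y.shape[-1]
print(lengths)  # {0: 4, 1: 6, 2: 8}

# Causal variant: padding 2 on both ends, then crop the last 2 steps -> length stays 6
causal = nn.Conv1d(2, 16, 3, padding=2)
y_causal = causal(x)[:, :, :-2]
print(y_causal.shape)  # torch.Size([16, 16, 6])

# Changing only the last input step leaves all earlier outputs unchanged
x2 = x.clone()
x2[:, :, -1] += 1.0
y2 = causal(x2)[:, :, :-2]
print(torch.allclose(y_causal[:, :, :-1], y2[:, :, :-1]))  # True
```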

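2. nn.Conv2d(in_channels, out_channels, kernel_size, padding)

Input: [N, C1, H, W]

Output: [N, C2, H_out, W_out], where H_out = H + 2 * padding_H - kernel_H + 1 and W_out = W + 2 * padding_W - kernel_W + 1 (stride 1, dilation 1)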
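A sketch mirroring the Conv1d experiment in 2D, with arbitrary sizes: a 3x3 kernel without padding shrinks both H and W by 2, padding=1 keeps both, and the (1, 3) kernel used by the TCN class leaves H alone and only shrinks W.

```python
import torch
import torch.nn as nn

x = torch.randn(16, 2, 6, 6)  # (N, C1, H, W)

y_valid = nn.Conv2d(2, 16, 3, padding=0)(x)          # 3x3 kernel, no padding
y_same = nn.Conv2d(2, 16, 3, padding=1)(x)           # 3x3 kernel, padding 1 on every side
y_time = nn.Conv2d(2, 16, (1, 3), padding=(0, 0))(x) # (1, 3) kernel: only W shrinks
print(y_valid.shape)  # torch.Size([16, 16, 4, 4])
print(y_same.shape)   # torch.Size([16, 16, 6, 6])
print(y_time.shape)   # torch.Size([16, 16, 6, 4])
```

Treating H as a non-temporal axis (for example graph nodes in T-GCN) and W as time is what lets a (1, k) Conv2d act as a 1D temporal convolution applied to every row at once.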