```python
# _*_ coding: utf-8 _*_ #
# @Time :2022/7/20 14:55
# Input: a name; output: the country it belongs to
# dataset rows: (name, country/language)

# packages
import csv
import gzip

import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_padded_sequence
from torch.utils.data import Dataset, DataLoader
import numpy as np
import matplotlib.pyplot as plt

# prepare data
# name ----> characters ----> ASCII codes ----> padding (names differ in length; shorter ones are padded with 0)
# Usami ---> ['U','s','a','m','i'] ----> [85 115 97 109 105]


class NameDataset(Dataset):
    def __init__(self, is_train_set=True) -> None:
        filename = 'rnn_data/names_train.csv.gz' if is_train_set else 'rnn_data/names_test.csv.gz'
        with gzip.open(filename, 'rt') as f:
            reader = csv.reader(f)
            rows = list(reader)  # each row: (name, country)
        self.names = [row[0] for row in rows]
        self.len = len(self.names)  # number of samples
        self.countries = [row[1] for row in rows]
        self.country_list = list(sorted(set(self.countries)))  # e.g. ['chinese', 'jp', 'uk']
        self.country_dict = self.getCountryDict()  # country name -> index, e.g. chinese -> 0, jp -> 1, uk -> 2
        self.country_num = len(self.country_list)  # number of countries

    def __getitem__(self, index):
        # the index-th sample: the name and the index of its country
        return self.names[index], self.country_dict[self.countries[index]]

    def __len__(self):
        return self.len

    def getCountryDict(self):
        country_dict = dict()
        for index, country_name in enumerate(self.country_list, 0):
            country_dict[country_name] = index
        return country_dict

    def index2country(self, index):
        return self.country_list[index]

    def getCountriesNumber(self):
        return self.country_num
```
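`NameDataset` expects each CSV row to be `name,country`. As a minimal sketch, assuming a tiny hypothetical gzipped CSV written to a temporary directory (the names, countries and the file name `names_sample.csv.gz` are made up for illustration), the same reading and indexing steps turn rows into `(name, country_index)` pairs:

```python
import csv
import gzip
import os
import tempfile

# Hypothetical sample rows (name, country); not taken from the real dataset
rows = [['Usami', 'Japanese'], ['Zhang', 'Chinese'], ['Smith', 'English'], ['Sato', 'Japanese']]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'names_sample.csv.gz')
    # Write the rows as a gzip-compressed CSV, mirroring the names_*.csv.gz layout
    with gzip.open(path, 'wt', newline='') as f:
        csv.writer(f).writerows(rows)
    # Read them back the same way NameDataset.__init__ does
    with gzip.open(path, 'rt') as f:
        loaded = list(csv.reader(f))

names = [row[0] for row in loaded]
countries = [row[1] for row in loaded]
country_list = sorted(set(countries))                            # alphabetical order fixes the indices
country_dict = {name: i for i, name in enumerate(country_list)}  # country -> index
samples = [(n, country_dict[c]) for n, c in zip(names, countries)]
print(country_dict)  # {'Chinese': 0, 'English': 1, 'Japanese': 2}
print(samples)       # [('Usami', 2), ('Zhang', 0), ('Smith', 1), ('Sato', 2)]
```

Because the country list is sorted, the index assigned to each country depends only on the set of labels, not on row order.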

```python
# parameters
HIDDEN_SIZE = 100
BATCH_SIZE = 256
N_LAYER = 2
N_EPOCHS = 100
N_CHARS = 128  # size of the ASCII character set (embedding vocabulary)
Device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# training set
train_set = NameDataset(is_train_set=True)
train_dataloader = DataLoader(dataset=train_set, batch_size=BATCH_SIZE, shuffle=True)

# test set (order does not affect accuracy, so no shuffling is needed)
test_set = NameDataset(is_train_set=False)
test_dataloader = DataLoader(dataset=test_set, batch_size=BATCH_SIZE, shuffle=False)

N_country = train_set.getCountriesNumber()  # number of countries
```
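`make_tensor` receives whatever `DataLoader` yields for one batch. A minimal sketch with a hypothetical in-memory dataset (`ToyNames` and its samples are made up for illustration) shows that PyTorch's default collation hands back the names as a sequence of strings and the country indices as an `int64` tensor, which is why `make_tensor` converts the names itself:

```python
import torch
from torch.utils.data import Dataset, DataLoader


class ToyNames(Dataset):
    """Hypothetical stand-in for NameDataset: returns (name, country_index)."""

    def __init__(self):
        self.samples = [('Usami', 2), ('Zhang', 0), ('Smith', 1)]

    def __getitem__(self, index):
        return self.samples[index]

    def __len__(self):
        return len(self.samples)


loader = DataLoader(ToyNames(), batch_size=2, shuffle=False)
names, countries = next(iter(loader))
print(names)      # the two names, still plain str objects
print(countries)  # tensor([2, 0])
```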

```python
# design model
class RNNClassifier(nn.Module):
    def __init__(self, input_size, hidden_size, output_size, n_layers=1, bidirectional=True) -> None:
        super().__init__()
        self.hidden_size = hidden_size
        self.n_layers = n_layers
        self.n_directions = 2 if bidirectional else 1
        self.embedding = nn.Embedding(input_size, hidden_size)
        self.gru = nn.GRU(hidden_size, hidden_size, n_layers, bidirectional=bidirectional)
        self.fc = nn.Linear(hidden_size * self.n_directions, output_size)

    def _init_hidden(self, batch_size):
        # shape: (n_layers * n_directions) x batch x hidden_size
        hidden = torch.zeros(self.n_layers * self.n_directions, batch_size, self.hidden_size)
        return create_tensor(hidden)

    def forward(self, input, seq_len):
        # input shape: B x S -> S x B (nn.GRU defaults to batch_first=False)
        input = input.t()
        batch_size = input.size(1)
        hidden = self._init_hidden(batch_size)
        embedding = self.embedding(input)  # S x B x hidden_size
        # lengths must be a 1-D CPU tensor sorted in descending order (enforce_sorted=True by default)
        gru_input = pack_padded_sequence(embedding, seq_len.cpu())
        output, hidden = self.gru(gru_input, hidden)
        if self.n_directions == 2:
            # last layer: hidden[-2] is the forward direction, hidden[-1] the backward direction
            hidden_cat = torch.cat([hidden[-1], hidden[-2]], dim=1)
        else:
            hidden_cat = hidden[-1]
        fc_output = self.fc(hidden_cat)
        return fc_output
```
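To make the tensor shapes in `forward` concrete, here is a small shape check with made-up sizes (hidden size 8, 3 classes, a batch of three hand-written ASCII sequences). It rebuilds the same layers outside the class rather than importing `RNNClassifier`, so it is a sketch of the data flow, not the trained model:

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_padded_sequence

torch.manual_seed(0)
n_chars, hidden_size, n_layers, n_directions, n_classes = 128, 8, 2, 2, 3

embedding = nn.Embedding(n_chars, hidden_size)
gru = nn.GRU(hidden_size, hidden_size, n_layers, bidirectional=True)
fc = nn.Linear(hidden_size * n_directions, n_classes)

# Batch of 3 padded ASCII sequences (B x S), already sorted by length 5, 3, 2: "Usami", "Lee", "Li"
inputs = torch.tensor([[85, 115, 97, 109, 105],
                       [76, 101, 101, 0, 0],
                       [76, 105, 0, 0, 0]])
lengths = torch.tensor([5, 3, 2])

x = embedding(inputs.t())                  # S x B x hidden_size = 5 x 3 x 8
packed = pack_padded_sequence(x, lengths)  # the GRU never sees the padded positions
h0 = torch.zeros(n_layers * n_directions, 3, hidden_size)
_, hidden = gru(packed, h0)                # (n_layers * n_directions) x B x hidden_size

# Last layer: index -2 is the forward direction, -1 the backward direction
hidden_cat = torch.cat([hidden[-1], hidden[-2]], dim=1)  # B x (2 * hidden_size)
logits = fc(hidden_cat)                                   # B x n_classes
print(x.shape, hidden.shape, hidden_cat.shape, logits.shape)
print(packed.batch_sizes)  # tensor([3, 3, 2, 1, 1]): active sequences per time step
```

The `batch_sizes` of the packed sequence show why sorting by length matters: at each time step only the still-running sequences are processed, so each name's final hidden state comes from its last real character rather than from padding.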

```python
def name2List(name):
    # characters -> ASCII codes (assumes every character satisfies ord(c) < N_CHARS)
    arr = [ord(c) for c in name]
    return arr, len(arr)


def make_tensor(names, countries):
    sequences_and_lengths = [name2List(name) for name in names]
    name_sequences = [s1[0] for s1 in sequences_and_lengths]
    seq_lengths = torch.LongTensor([s1[1] for s1 in sequences_and_lengths])
    countries = countries.long()

    # build a zero-padded tensor of shape Batch x max SeqLen
    seq_tensor = torch.zeros(len(name_sequences), seq_lengths.max().item()).long()
    for idx, (seq, seq_len) in enumerate(zip(name_sequences, seq_lengths), 0):
        seq_tensor[idx, :seq_len] = torch.LongTensor(seq)

    # sort by length in descending order, as pack_padded_sequence expects by default
    seq_lengths, perm_idx = seq_lengths.sort(dim=0, descending=True)
    seq_tensor = seq_tensor[perm_idx]
    countries = countries[perm_idx]

    # seq_lengths stays on the CPU; inputs and targets are moved to Device
    return create_tensor(seq_tensor), seq_lengths, create_tensor(countries)


def create_tensor(tensor):
    return tensor.to(Device)
```
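A worked example of the padding and sorting in `make_tensor`, as a self-contained sketch: `pad_and_sort` is a hypothetical helper that repeats the same steps without the `.to(Device)` calls, applied to three sample names.

```python
import torch


def pad_and_sort(names, countries):
    """Hypothetical helper: the steps of make_tensor, minus the .to(Device) calls."""
    sequences = [[ord(c) for c in name] for name in names]  # characters -> ASCII codes
    seq_lengths = torch.LongTensor([len(s) for s in sequences])
    seq_tensor = torch.zeros(len(sequences), seq_lengths.max().item(), dtype=torch.long)
    for idx, seq in enumerate(sequences):
        seq_tensor[idx, :len(seq)] = torch.LongTensor(seq)  # right-pad with 0
    seq_lengths, perm_idx = seq_lengths.sort(dim=0, descending=True)
    # reorder names and labels with the same permutation so they stay aligned
    return seq_tensor[perm_idx], seq_lengths, countries[perm_idx]


seq_tensor, seq_lengths, countries = pad_and_sort(('Li', 'Usami', 'Lee'), torch.tensor([0, 2, 1]))
print(seq_tensor.tolist())   # [[85, 115, 97, 109, 105], [76, 101, 101, 0, 0], [76, 105, 0, 0, 0]]
print(seq_lengths.tolist())  # [5, 3, 2]
print(countries.tolist())    # [2, 1, 0]
```

The labels are permuted together with the sequences; forgetting that step would silently pair names with the wrong countries.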

```python
def trainModel():
    total_loss = 0
    for i, (names, countries) in enumerate(train_dataloader, 1):
        inputs, seq_lengths, target = make_tensor(names, countries)
        output = classifier(inputs, seq_lengths)
        loss = criterion(output, target)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        total_loss += loss.item()
    return total_loss  # sum of the batch losses over one epoch


def testModel():
    correct = 0
    total = len(test_set)
    with torch.no_grad():
        for i, (names, countries) in enumerate(test_dataloader, 1):
            inputs, seq_lengths, target = make_tensor(names, countries)
            output = classifier(inputs, seq_lengths)
            pred = output.max(dim=1, keepdim=True)[1]  # index of the highest score = predicted country
            correct += pred.eq(target.view_as(pred)).sum().item()
    return correct / total
```
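The accuracy bookkeeping in `testModel`, and the fact that `CrossEntropyLoss` takes the raw `fc` scores (no softmax layer in the model), can be checked on a handful of made-up logits. This is a sketch with hypothetical numbers, not output from the trained model:

```python
import torch

# Hypothetical logits for 4 names over 3 countries (rows = samples)
output = torch.tensor([[2.0, 0.1, -1.0],
                       [0.3, 0.2, 1.5],
                       [0.0, 3.0, 0.5],
                       [1.0, 0.9, 0.8]])
target = torch.tensor([0, 2, 2, 1])

pred = output.max(dim=1, keepdim=True)[1]             # argmax per row, shape 4 x 1
correct = pred.eq(target.view_as(pred)).sum().item()  # compare against targets reshaped to 4 x 1
accuracy = correct / len(target)
print(pred.view(-1).tolist(), correct, accuracy)      # [0, 2, 1, 0] 2 0.5

# CrossEntropyLoss applies log-softmax internally, so it equals the mean negative log-likelihood
loss = torch.nn.CrossEntropyLoss()(output, target)
manual = -torch.log_softmax(output, dim=1)[torch.arange(4), target].mean()
print(torch.allclose(loss, manual))                   # True
```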

if __name__ == '__main__':

classifier = RNNClassifier(N_CHARS, HIDDEN_SIZE, N_country, N_LAYER).to(Device) #gpu加速

#loss and optimizer

criterion = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(classifier.parameters(), lr=0.001)

print('training for {} epochs..'.format(N_EPOCHS))

acc_list = [] # 记录每一轮准确

for epoch in range(1, N_EPOCHS + 1):

trainModel()

acc = testModel()

acc_list.append(acc)

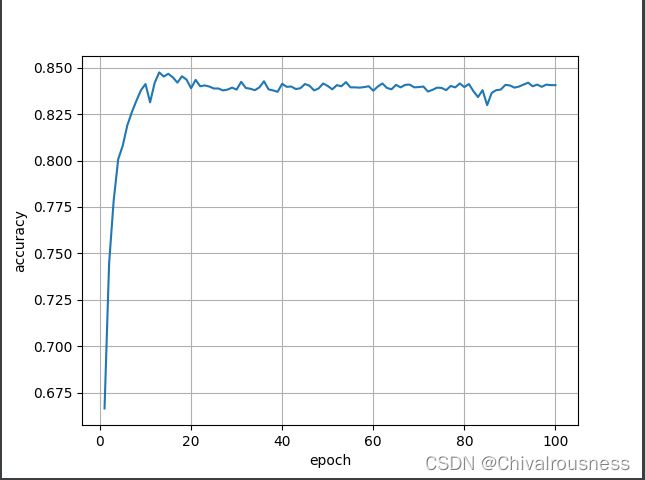

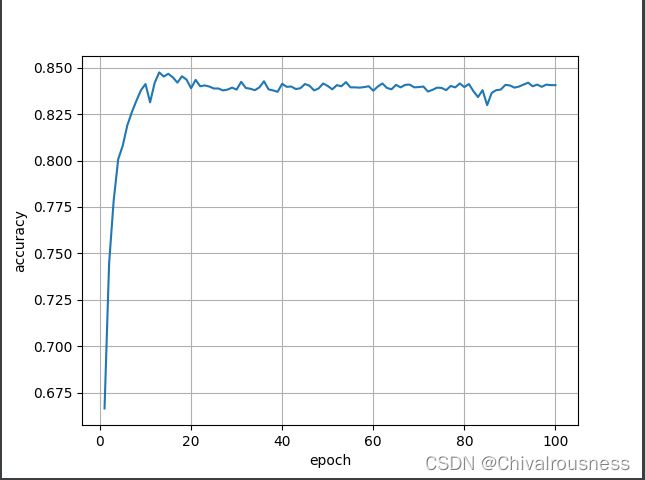

# 绘图

epoch = np.arange(1, len(acc_list) + 1, 1)

acc_list = np.array(acc_list)

plt.plot(epoch, acc_list)

plt.xlabel('epoch')

plt.ylabel('accuracy')

plt.grid()

plt.show()

数据集在刘老师代码课件里面

视频地址 《PyTorch深度学习实践》完结合集_哔哩哔哩_bilibili

结果数据图
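Once training is done, classifying a single name means pushing a batch of one through the same pipeline. A minimal sketch, assuming a model whose `forward(input, seq_len)` matches `RNNClassifier`; `predict_country` and the `DummyModel` used to exercise it are hypothetical stand-ins, not part of the original script:

```python
import torch
import torch.nn as nn


def predict_country(model, name, country_list, device='cpu'):
    """Hypothetical helper: classify one name with a model whose forward is (input, seq_len)."""
    codes = [ord(c) for c in name]                 # characters -> ASCII codes
    inputs = torch.LongTensor([codes]).to(device)  # shape 1 x S (a batch of one, no padding needed)
    seq_len = torch.LongTensor([len(codes)])       # lengths stay on the CPU
    model.eval()
    with torch.no_grad():
        logits = model(inputs, seq_len)            # shape 1 x n_countries
    return country_list[logits.argmax(dim=1).item()]


class DummyModel(nn.Module):
    """Hypothetical stand-in for a trained RNNClassifier: always favours the last country."""

    def __init__(self, n_countries):
        super().__init__()
        self.n_countries = n_countries

    def forward(self, input, seq_len):
        logits = torch.zeros(input.size(0), self.n_countries)
        logits[:, -1] = 1.0
        return logits


print(predict_country(DummyModel(3), 'Usami', ['Chinese', 'English', 'Japanese']))  # Japanese
```

With the real script, one would pass `classifier`, `train_set.country_list` and `Device` instead of the dummy arguments.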