【文本生成】评价指标:BERTScore

BERTScore

这是2020ICLR由Cornell University提出来的论文:BERTSCORE: EVALUATING TEXT GENERATION WITH BERT

主要是基于BERT预训练模型,使用contextual embedding来描述句子,计算两个句子之间的余弦相似度。

基于n-gram matching metric 的常见缺陷:

-

semantically-correct phrases are penalized because they differ from the surface form of the reference.

解决: In contrast to string matching (e.g., in BLEU) or matching heuristics (e.g., in METEOR), we compute similarity using contextualized token embeddings, which have been shown to be effective for paraphrase detection

-

n-gram models fail to capture distant dependencies and penalize semantically-critical ordering changes.

解决: contextualized embeddings are trained to effectively capture distant dependencies and ordering

实验结果:

(1)In machine translation, BERTSCORE shows stronger system-level and segment-level correlations

with human judgments than existing metrics on multiple common benchmarks.

(2)BERTSCORE is well-correlated with human annotators for image captioning, surpassing SPICE.

直接下载预训练模型,运用eval方式输入batch sentence,输出cls的embedding,对sentence_can和sentence_ref做相似度矩阵和idf计算,用greedy方式选取最大的相似度,乘以该词的idf,输出BERTScore

论文中还用了+1 smoothing和rescaling来微调BERTScore

结论:在机器翻译常用F_BERT作为指标;在评估英文生成时推荐使用 24-layer RoBERTa large model;多语言任务推荐multilingual BERT,中文自动选择的是"bert-base-chinese"。

代码实例:非常简单!

!pip install bert_score

from bert_score import score

# data

cands = ['我们都曾经年轻过']

refs = ['虽然我们都年少,但还是懂事的']

P, R, F1 = score(cands, refs, lang="zh", verbose=True)

print(f"System level F1 score: {F1.mean():.3f}")

# System level F1 score: 0.959

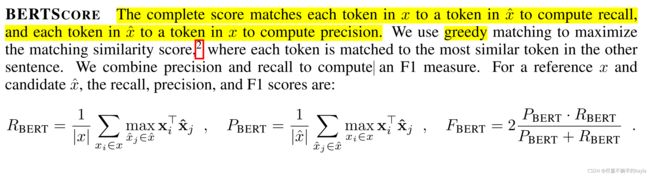

其中P,R,F1的计算:

def greedy_cos_idf(ref_embedding, ref_masks, ref_idf, hyp_embedding, hyp_masks, hyp_idf, all_layers=False):

"""

Compute greedy matching based on cosine similarity.

"""

……

batch_size = ref_embedding.size(0)

sim = torch.bmm(hyp_embedding, ref_embedding.transpose(1, 2))

masks = torch.bmm(hyp_masks.unsqueeze(2).float(), ref_masks.unsqueeze(1).float())

masks = masks.float().to(sim.device)

sim = sim * masks

word_precision = sim.max(dim=2)[0]

word_recall = sim.max(dim=1)[0]

hyp_idf.div_(hyp_idf.sum(dim=1, keepdim=True))

ref_idf.div_(ref_idf.sum(dim=1, keepdim=True))

precision_scale = hyp_idf.to(word_precision.device)

recall_scale = ref_idf.to(word_recall.device)

P = (word_precision * precision_scale).sum(dim=1)

R = (word_recall * recall_scale).sum(dim=1)

F = 2 * P * R / (P + R)

……

return P, R, F

如果需要相似度矩阵图形:

import matplotlib.pyplot as plt

font = {'family': 'SimHei', 'size':'10'}

plt.rc('font', **font)

from bert_score import plot_example

cand = cands[0]

ref = refs[0]

plot_example(cand, ref, lang="zh")

# bert以字划分中文,这里不显示中文是因为colab服务器上没有中文字体

github地址:https://github.com/Tiiiger/bert_score/blob/3227a18cb2a546978a00beb94bf138fd72fef8cf/bert_score/score.py