Handwritten Digit Recognition with a Bayes Classifier (Naive Bayes)

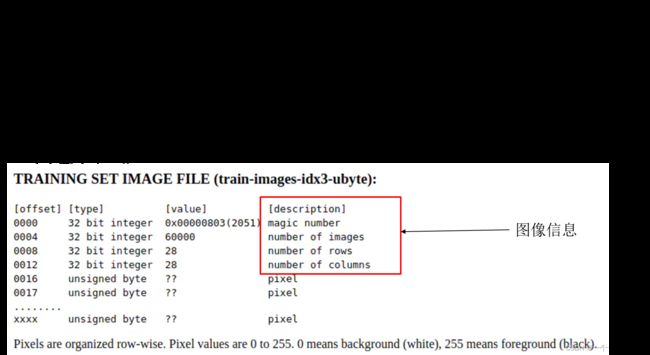

1. Getting to know the MNIST dataset
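MNIST contains 60,000 training and 10,000 test images of the handwritten digits 0 to 9. Each image is 28×28 grayscale pixels with values 0 to 255. The files use the IDX binary format: image files (`idx3-ubyte`) start with a 16-byte header and label files (`idx1-ubyte`) start with an 8-byte header, and every header field is a big-endian 32-bit integer. The minimal sketch below builds a tiny fake image file in memory and parses its header the same way the decoder functions in the code do. The fake data (two 2×2 "images") and the helper name `parse_idx3` are hypothetical and exist only for illustration.

```python
import struct

import numpy as np


def parse_idx3(data: bytes) -> np.ndarray:
    """Hypothetical helper: parse an in-memory idx3-ubyte buffer into (num, rows, cols)."""
    # Header: magic number, image count, rows, cols as big-endian unsigned 32-bit ints
    magic, num, rows, cols = struct.unpack('>4I', data[:16])
    if magic != 2051:  # 0x00000803 marks an unsigned-byte, 3-dimensional IDX file
        raise ValueError('not an idx3-ubyte image file: magic=%d' % magic)
    # Pixel bytes follow the header row by row, one unsigned byte per pixel
    pixels = np.frombuffer(data, dtype=np.uint8, offset=16)
    return pixels.reshape(num, rows, cols)


# Two fake 2x2 "images" (real MNIST images are 28x28)
fake = struct.pack('>4I', 2051, 2, 2, 2) + bytes([0, 255, 128, 0, 10, 20, 30, 40])
images = parse_idx3(fake)
print(images.shape)  # (2, 2, 2)
```

Label files work the same way, except that the magic number is 2049 and the header holds only the magic number and the label count.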

2. Naive Bayes classification

I won't go over the theory again here.
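For reference, here is a compact summary of what `bayesModelTrain` and `bayesClassifier` in the code compute. The notation is chosen for illustration: $x_k \in \{0,1\}$ is the $k$-th binarized pixel, $N$ is the number of training images, $N_c$ is the number of images in class $c$, and $n_{c,k}$ is the number of class-$c$ images whose pixel $k$ is 1.

$$
P(y=c) = \frac{N_c}{N}, \qquad
\hat p_{c,k} = P(x_k = 1 \mid y = c) = \frac{n_{c,k} + 1}{N_c + 2}
$$

$$
\hat y = \arg\max_{c \in \{0,\dots,9\}} \Big[ \log P(y=c) + \sum_{k} \big( x_k \log \hat p_{c,k} + (1 - x_k) \log (1 - \hat p_{c,k}) \big) \Big]
$$

The $+1$ and $+2$ terms are Laplace smoothing for a two-valued feature. They keep every $\hat p_{c,k}$ strictly between 0 and 1, so both logarithms are always defined. Dropping the $\log P(y=c)$ term gives the maximum-likelihood decision, which is what the code as posted actually computes.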

3. The code:

```python
import math
from collections import Counter
from struct import unpack

import numpy as np
from sklearn.decomposition import PCA  # only needed by the PCA variant below

# Configuration
config = {
    # Training images
    'train_images_idx3_ubyte_file_path': 'data/train-images.idx3-ubyte',
    # Training labels
    'train_labels_idx1_ubyte_file_path': 'data/train-labels.idx1-ubyte',
    # Test images
    'test_images_idx3_ubyte_file_path': 'data/t10k-images.idx3-ubyte',
    # Test labels
    'test_labels_idx1_ubyte_file_path': 'data/t10k-labels.idx1-ubyte',
    # Binarization threshold for feature extraction (pixel values are scaled to 0.0-1.0)
    'binarization_limit_value': 0.14,
    # Side length of the feature map after extraction; the pooling window is 28 // side_length
    'side_length': 14,
}
```

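```python
def decode_idx3_ubyte(path):
    '''
    Load an idx3-ubyte file (MNIST images) as an array of shape (num, rows, cols).

    To read the .gz archive directly without unpacking it, import gzip and use
    gzip.open(path, 'rb') in place of open(path, 'rb'); path is then the .gz file.
    '''
    print('loading %s' % path)  # progress message
    with open(path, 'rb') as f:
        # The first 16 bytes are the header: four big-endian 32-bit integers, namely
        # the magic number, the number of images, and the rows and columns per image.
        magic, num, rows, cols = unpack('>4I', f.read(16))
        print('magic:%d count:%d rows:%d cols:%d' % (magic, num, rows, cols))
        # The remaining bytes are the pixels, one unsigned byte each
        # (frombuffer on f.read() also works for gzip file objects).
        mnistImage = np.frombuffer(f.read(), dtype=np.uint8).reshape(num, rows, cols)
    print('done')
    # print('The decoded images:')
    # print(mnistImage)
    return mnistImage
```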

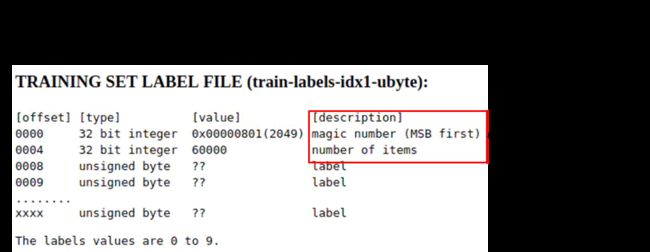

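```python
def decode_idx1_ubyte(path):
    '''
    Parse an idx1-ubyte file, i.e. an MNIST label file.
    '''
    print('loading %s' % path)
    with open(path, 'rb') as f:
        # The first 8 bytes are the header: two big-endian 32-bit integers,
        # the magic number and the number of labels.
        magic, num = unpack('>2I', f.read(8))
        print('magic:%d num:%d' % (magic, num))
        # Each remaining byte is one label (0-9)
        mnistLabel = np.frombuffer(f.read(), dtype=np.uint8)
    print('done')
    # print('The training labels:')
    # print(mnistLabel)
    return mnistLabel
```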
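The note in `decode_idx3_ubyte` points out that the `.gz` archives can be read without unpacking them first. The sketch below applies that idea to both file types. It assumes that a file name ends in `.gz` exactly when the file is gzip-compressed, and `read_idx` is a hypothetical helper name. It reads the whole file into memory and infers the number of dimensions from the fourth byte of the magic number (1 for labels, 3 for images), so one function covers both files.

```python
import gzip
import os
import struct
import tempfile

import numpy as np


def read_idx(path):
    """Hypothetical helper: read an unsigned-byte IDX file, gzip-compressed or not."""
    opener = gzip.open if path.endswith('.gz') else open
    with opener(path, 'rb') as f:
        data = f.read()
    magic, = struct.unpack('>I', data[:4])
    if magic >> 8 != 0x08:  # third byte 0x08 = unsigned byte data
        raise ValueError('unsupported IDX type: magic=%#x' % magic)
    ndim = magic & 0xFF  # fourth byte = number of dimensions
    dims = struct.unpack('>%dI' % ndim, data[4:4 + 4 * ndim])
    return np.frombuffer(data, dtype=np.uint8, offset=4 + 4 * ndim).reshape(dims)


# Write a fake gzip-compressed label file with three labels, then read it back
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'fake-labels-idx1-ubyte.gz')
    with gzip.open(path, 'wb') as f:
        f.write(struct.pack('>2I', 2049, 3) + bytes([7, 2, 1]))
    labels = read_idx(path)
print(labels)  # [7 2 1]
```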

```python
def normalizeImage(image):
    '''
    Scale pixel values from 0-255 to 0.0-1.0.
    '''
    res = image.astype(np.float32) / 255.0
    return res


def load_train_images(path=config['train_images_idx3_ubyte_file_path']):
    return normalizeImage(decode_idx3_ubyte(path))


def load_train_labels(path=config['train_labels_idx1_ubyte_file_path']):
    return decode_idx1_ubyte(path)


def load_test_images(path=config['test_images_idx3_ubyte_file_path']):
    return normalizeImage(decode_idx3_ubyte(path))


def load_test_labels(path=config['test_labels_idx1_ubyte_file_path']):
    return decode_idx1_ubyte(path)
```

```python
def oneImagesFeatureExtraction(image):
    '''
    Feature extraction for a single image: mean pooling followed by binarization.
    '''
    side = config['side_length']
    res = np.empty((side, side))
    num = 28 // side  # pooling window size (floor division); 2 when side_length is 14
    for i in range(side):
        for j in range(side):
            # Average each num x num block to reduce the resolution
            tempMean = image[num*i:num*(i+1), num*j:num*(j+1)].mean()
            # Binarize: 1 if the block is bright enough, otherwise 0
            res[i, j] = 1 if tempMean > config['binarization_limit_value'] else 0
    return res
```
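The double loop above visits the side_length × side_length blocks one at a time. When 28 is divisible by the side length (for example 14, which gives a window of 2), the same mean pooling and binarization can be done with a single reshape. The sketch below shows this. `mean_pool_binarize` is a hypothetical name, and the reshape does not work for side lengths such as 9 or 15 that do not divide 28. For those sizes the loop version reads only the top-left `side * (28 // side)` pixels.

```python
import numpy as np


def mean_pool_binarize(image, side=14, threshold=0.14):
    """Hypothetical vectorized equivalent of the mean-pooling loop (requires 28 % side == 0)."""
    num = image.shape[0] // side  # window size
    assert num * side == image.shape[0], 'side must divide the image size'
    # (28, 28) -> (side, num, side, num): axes 1 and 3 index the pixels inside a block
    blocks = image.reshape(side, num, side, num)
    means = blocks.mean(axis=(1, 3))  # one mean per block, shape (side, side)
    return (means > threshold).astype(np.float64)


# A 28x28 image with one bright 2x2 patch in the top-left corner
img = np.zeros((28, 28))
img[0:2, 0:2] = 1.0
features = mean_pool_binarize(img)
print(features.shape, features.sum())  # (14, 14) 1.0
```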

```python
def oneImagesFeatureExtraction1(image):
    '''
    Feature extraction for a single image: max pooling followed by binarization.
    '''
    side = config['side_length']
    res = np.empty((side, side))
    num = 28 // side  # pooling window size (floor division); 2 when side_length is 14
    for i in range(side):
        for j in range(side):
            # Take the maximum of each num x num block to reduce the resolution
            tempMax = image[num*i:num*(i+1), num*j:num*(j+1)].max()
            # Binarize: 1 if the brightest pixel of the block passes the threshold, otherwise 0
            res[i, j] = 1 if tempMax > config['binarization_limit_value'] else 0
    return res
```
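The two extractors differ only in the statistic taken over each block before thresholding. The toy comparison below uses the 0.14 threshold from `config`, and the block values are made up for illustration. Max pooling switches a block on as soon as any single pixel exceeds the threshold. Mean pooling switches it on only when the block's average exceeds the threshold. Because a block's maximum is always at least its mean, the max-pooling bit is never lower than the mean-pooling bit.

```python
import numpy as np


def pooled_bits(block, threshold=0.14):
    """Binarized output of mean pooling and of max pooling for one block."""
    mean_bit = int(block.mean() > threshold)  # as in oneImagesFeatureExtraction
    max_bit = int(block.max() > threshold)    # as in oneImagesFeatureExtraction1
    return mean_bit, max_bit


# Made-up 2x2 blocks, pixel values already scaled to 0.0-1.0
blocks = {
    'one bright pixel': np.array([[1.0, 0.0], [0.0, 0.0]]),  # mean 0.25
    'one faint pixel': np.array([[0.3, 0.0], [0.0, 0.0]]),   # mean 0.075
    'empty': np.zeros((2, 2)),
}
for name, block in blocks.items():
    print(name, pooled_bits(block))
```

Only the faint-pixel block separates the two methods: mean pooling turns it off and max pooling keeps it.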

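```python
def get_main_cahrticter(image):
    '''Alternative feature extraction: PCA on a single image, then binarization.'''
    side = config['side_length']
    # Fit PCA on this one 28x28 image, treating its 28 rows as samples and its
    # 28 columns as features; keeping side_length components gives shape (28, side_length)
    pca = PCA(n_components=side)
    pca.fit(image)
    answer = pca.transform(image)  # reduced data
    # print(answer)
    res = np.empty((side, side))
    num = 28 // side
    for i in range(side):
        for j in range(side):
            # Average every num consecutive rows of component j
            tempMean = answer[num*i:num*(i+1), j].mean()
            # Binarize: 1 above the threshold, otherwise 0
            res[i, j] = 1 if tempMean > config['binarization_limit_value'] else 0
    return res
```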
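In `get_main_cahrticter` the PCA is fitted on one image at a time. The 28 rows are treated as samples and the 28 columns as features, so each image gets its own set of principal axes. The numpy-only sketch below replaces scikit-learn's `PCA` with a plain SVD of the mean-centred data to show the shapes involved. `pca_transform` is a hypothetical name. A 28×28 image is projected to 28×14, and the variance of the projected columns does not increase from the first component to the last.

```python
import numpy as np


def pca_transform(x, n_components):
    """Hypothetical numpy-only PCA: rows of x are samples, columns are features."""
    centered = x - x.mean(axis=0)  # PCA works on mean-centred columns
    # Rows of vt are the principal axes, ordered by decreasing singular value
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]  # shape (n_components, n_features)
    return centered @ components.T, components  # projection: (n_samples, n_components)


rng = np.random.default_rng(0)
image = rng.random((28, 28))  # stand-in for one normalized MNIST image
projected, components = pca_transform(image, 14)
print(projected.shape)  # (28, 14)
```

Because the axes are recomputed for every image, column j of one image's projection need not correspond to column j of another's. If features that are comparable across images are needed, one option is to fit a single PCA on all training images flattened to 784 features.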

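```python
def featureExtraction(images, extractor=oneImagesFeatureExtraction):
    '''Convert every image into a binary feature map and stack them into one array.'''
    # np.empty(shape, dtype) allocates an uninitialized array; every slot is filled below
    res = np.empty((images.shape[0], config['side_length'], config['side_length']), dtype=np.float32)
    for i in range(images.shape[0]):
        # Reduce one image; pass extractor=oneImagesFeatureExtraction1 (max pooling)
        # or extractor=get_main_cahrticter (PCA) to try the other variants
        res[i] = extractor(images[i])
    return res
```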

```python
def bayesModelTrain(train_x, train_y):
    '''
    Train the naive Bayes classifier on binary pixel features.
    Returns the class priors, shape (10,), and P(pixel = 1 | class), shape (10, n_pixels).
    '''
    # Prior probabilities
    totalNum = train_x.shape[0]  # number of training samples
    classNumDic = Counter(train_y)  # number of samples in each class
    prioriP = np.array([classNumDic[i] / totalNum for i in range(10)])  # prior of each class

    # Class-conditional probabilities
    # Flatten (N, side, side) -> (N, side*side), e.g. (60000, 14, 14) -> (60000, 196),
    # without modifying the caller's array
    flat_x = train_x.reshape(train_x.shape[0], -1)
    posteriorNum = np.empty((10, flat_x.shape[1]))  # count of "on" pixels per class and position
    posteriorP = np.empty((10, flat_x.shape[1]))    # P(pixel = 1 | class)
    for i in range(10):
        # Sum the samples of class i column by column (axis=0)
        posteriorNum[i] = flat_x[train_y == i].sum(axis=0)
        # Laplace smoothing for a binary feature: (count + 1) / (class size + 2)
        posteriorP[i] = (posteriorNum[i] + 1) / (classNumDic[i] + 2)
    return prioriP, posteriorP
```
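To make the Laplace-smoothed estimates concrete, here is a tiny worked example with made-up data: three binary "images" of two pixels each, two in class 0 and one in class 1, with only two classes instead of ten. `train_bernoulli_nb` is a hypothetical, vectorized restatement of the counting done in `bayesModelTrain`, not the original function.

```python
import numpy as np


def train_bernoulli_nb(x, y, n_classes):
    """Hypothetical sketch: priors and Laplace-smoothed P(pixel = 1 | class)."""
    x = x.reshape(len(x), -1)  # flatten each sample
    class_counts = np.bincount(y, minlength=n_classes)  # N_c
    priors = class_counts / len(y)
    # on_counts[c, k] = number of class-c samples whose pixel k is 1
    on_counts = np.stack([x[y == c].sum(axis=0) for c in range(n_classes)])
    likelihood = (on_counts + 1) / (class_counts[:, None] + 2)
    return priors, likelihood


x = np.array([[1, 0],
              [1, 1],
              [0, 1]])
y = np.array([0, 0, 1])
priors, likelihood = train_bernoulli_nb(x, y, n_classes=2)
```

Class 0 has pixel 0 switched on in both of its two samples, so its estimate is (2+1)/(2+2) = 0.75 rather than 1.0. Pixel 1 is on once, which gives (1+1)/(2+2) = 0.5. Class 1 has a single sample, so its estimates are pulled toward 0.5: 1/3 and 2/3.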

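```python
def bayesClassifier(test_x, prioriP, posteriorP, use_prior=False):
    '''
    Classify one image with the naive Bayes model, working with log probabilities.
    use_prior=False gives the maximum-likelihood decision, use_prior=True the MAP decision.
    '''
    x = test_x.reshape(-1)  # flatten the feature map to one row of 0/1 pixels
    # Per class: sum over pixels of log(p) where the pixel is 1 and log(1 - p) where it is 0
    logLikelihood = np.where(x == 0, np.log(1 - posteriorP), np.log(posteriorP)).sum(axis=1)
    # Oddly, when reducing to 7x7, leaving out the log-prior term gave a higher accuracy,
    # so by default it is left out (maximum likelihood); use_prior=True adds it back (MAP)
    classP = logLikelihood + np.log(prioriP) if use_prior else logLikelihood
    return np.argmax(classP)  # index of the highest score = predicted digit
```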
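Because the features are 0/1, the per-pixel sum in `bayesClassifier` can also be computed for a whole batch of images at once with two matrix products, summing $x \log p + (1-x)\log(1-p)$ over the pixels. Logarithms turn the product of per-pixel probabilities into a sum, which avoids floating-point underflow when there are hundreds of pixels. The sketch below uses the hypothetical name `predict_batch` and takes an `(n_samples, n_pixels)` 0/1 matrix. Its `use_prior` flag switches between the maximum-likelihood rule and the MAP rule.

```python
import numpy as np


def predict_batch(x, priors, likelihood, use_prior=False):
    """Hypothetical batch classifier: x is (n_samples, n_pixels) with 0/1 entries."""
    log_p = np.log(likelihood)          # log P(pixel = 1 | class), shape (n_classes, n_pixels)
    log_not_p = np.log(1 - likelihood)  # log P(pixel = 0 | class)
    # scores[i, c] = sum_k x[i,k] log p[c,k] + (1 - x[i,k]) log(1 - p[c,k])
    scores = x @ log_p.T + (1 - x) @ log_not_p.T
    if use_prior:
        scores = scores + np.log(priors)  # MAP: add log P(class)
    return scores.argmax(axis=1)


# Toy model with two classes and two pixels (made-up numbers)
priors = np.array([2 / 3, 1 / 3])
likelihood = np.array([[0.75, 0.5],
                       [1 / 3, 2 / 3]])
x = np.array([[1, 0],
              [0, 1]])
print(predict_batch(x, priors, likelihood))  # [0 1]
```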

```python
def modelEvaluation(test_x, test_y, prioriP, posteriorP):
    '''
    Evaluate the naive Bayes classifier; returns the predictions and the accuracy.
    '''
    bayesClassifierRes = np.empty(test_x.shape[0])
    for i in range(test_x.shape[0]):
        bayesClassifierRes[i] = bayesClassifier(test_x[i], prioriP, posteriorP)
        # print('predicted: %d  actual: %d' % (bayesClassifierRes[i], test_y[i]))
    return bayesClassifierRes, (bayesClassifierRes == test_y).sum() / test_y.shape[0]
```
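The accuracy returned by `modelEvaluation` is a single number. To see which digits are confused with each other, the predictions it also returns can be tallied into a confusion matrix. The short numpy sketch below does this with a hypothetical helper, `confusion_matrix_np`. scikit-learn's `sklearn.metrics.confusion_matrix(y_true, y_pred)` does the same job. The labels and predictions in the example are made up.

```python
import numpy as np


def confusion_matrix_np(y_true, y_pred, n_classes=10):
    """Hypothetical helper: m[t, p] counts samples of true class t predicted as p."""
    m = np.zeros((n_classes, n_classes), dtype=np.int64)
    # np.add.at accumulates correctly even when the same (t, p) pair repeats
    np.add.at(m, (np.asarray(y_true, dtype=int), np.asarray(y_pred, dtype=int)), 1)
    return m


y_true = np.array([0, 1, 1, 2, 2, 2])
y_pred = np.array([0, 1, 7, 2, 2, 3])  # made-up predictions
m = confusion_matrix_np(y_true, y_pred)
overall_acc = m.diagonal().sum() / m.sum()
per_class_acc = m.diagonal() / m.sum(axis=1).clip(min=1)  # clip avoids 0/0 for absent classes
```

Off-diagonal entries such as `m[1, 7]` show which wrong digit a class was predicted as.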

```python
if __name__ == '__main__':
    print('Loading data')
    train_images = load_train_images()  # training images
    train_labels = load_train_labels()  # training labels
    test_images = load_test_images()    # test images
    test_labels = load_test_labels()    # test labels
    print('Data loaded')

    print('Extracting features')
    train_images_feature = featureExtraction(train_images)
    print('Feature extraction finished')

    print('Training started')
    prioriP, posteriorP = bayesModelTrain(train_images_feature, train_labels)
    print('Training finished')
    # print(prioriP)
    # print(posteriorP)

    print('Evaluation started')
    test_images_feature = featureExtraction(test_images)
    res, val = modelEvaluation(test_images_feature, test_labels, prioriP, posteriorP)
    print('Naive Bayes classifier accuracy: %.2f %%' % (val * 100))
    print('Evaluation finished')
```

4. Results:

| Feature extraction | Reduced size | Binarization threshold | Test accuracy |
| --- | --- | --- | --- |
| Mean pooling | 14×14 | 0.14 | 83.58% |
| Mean pooling | 28×28 | 0.14 | 84.64% |
| Mean pooling | 9×9 | 0.14 | 80.67% |
| Mean pooling | 15×15 | 0.14 | 63% |
| Max pooling | 14×14 | 0.14 | 83.06% |
| Max pooling | 28×28 | 0.14 | 84.64% |
| Max pooling | 9×9 | 0.14 | 78.79% |
| Max pooling | 10×10 | 0.14 | 79.85% |
| Max pooling | 15×15 | 0.14 | 63% |
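One detail of the pooling code helps in reading this table. In both `oneImagesFeatureExtraction` and `oneImagesFeatureExtraction1`, the window size is `num = 28 // side_length`, and the loops only visit `side_length * num` rows and columns. The plain-arithmetic sketch below (the helper name `coverage` is hypothetical) lists how much of the 28×28 image each tested size actually reads.

```python
def coverage(side_length, image_size=28):
    """Hypothetical helper: window size and how many pixel rows/columns the pooling loops read."""
    num = image_size // side_length  # window size, as in the feature-extraction functions
    return num, side_length * num


for side in (28, 15, 14, 10, 9):
    num, used = coverage(side)
    print('%2dx%-2d window %dx%d, uses the top-left %dx%d of 28x28' % (side, side, num, num, used, used))
```

At 15×15 the window is a single pixel, so only the top-left 15×15 corner of each image is used, which is consistent with the sharp drop to 63%. At 10×10 only the top-left 20×20 is used, and at 9×9 the last row and column are dropped. At 28×28 and 15×15 every block is one pixel, so mean and max pooling yield identical features, matching the identical accuracies in both halves of the table.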