FCN Paper Code Walkthrough (Part 2): Model Construction


- FCN

Constructor parameter: the number of classes (num_classes).

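import torch.nn as nn
from torchvision import models

# Backbone: ImageNet-pretrained VGG16 with batch norm (torchvision >= 0.13 API;
# older versions use models.vgg16_bn(pretrained=True)).
pretrained_net = models.vgg16_bn(weights=models.VGG16_BN_Weights.IMAGENET1K_V1)

class FCN(nn.Module):
    def __init__(self, num_classes):
        super().__init__()
        # Split VGG16-BN's feature extractor into 5 stages, cutting after each MaxPool2d.
        self.stage1 = pretrained_net.features[:7]     # block 1: (conv-bn-relu) x2 + pool = 7 layers, 1/2 size
        self.stage2 = pretrained_net.features[7:14]   # block 2: 1/4 size
        self.stage3 = pretrained_net.features[14:24]  # block 3: (conv-bn-relu) x3 + pool, 1/8 size
        self.stage4 = pretrained_net.features[24:34]  # block 4: 1/16 size
        self.stage5 = pretrained_net.features[34:]    # block 5: 1/32 size

        self.scores1 = nn.Conv2d(512, num_classes, 1)  # FCN-32s prediction map
        self.scores2 = nn.Conv2d(512, num_classes, 1)  # FCN-16s
        self.scores3 = nn.Conv2d(128, num_classes, 1)  # FCN-8s

        self.conv_trans1 = nn.Conv2d(512, 256, 1)          # transition 1x1 conv: 512 -> 256 channels
        self.conv_trans2 = nn.Conv2d(256, num_classes, 1)  # transition 1x1 conv: 256 -> num_classes

        # Transposed convs initialised to bilinear upsampling; bilinear_kernel (section 3)
        # must return a torch.Tensor of shape (in_channels, out_channels, k, k).
        self.upsample_8x = nn.ConvTranspose2d(num_classes, num_classes, 16, 8, 4, bias=False)  # 8x
        self.upsample_8x.weight.data = bilinear_kernel(num_classes, num_classes, 16)

        self.upsample_2x_1 = nn.ConvTranspose2d(512, 512, 4, 2, 1, bias=False)  # 2x
        self.upsample_2x_1.weight.data = bilinear_kernel(512, 512, 4)

        self.upsample_2x_2 = nn.ConvTranspose2d(256, 256, 4, 2, 1, bias=False)  # 2x
        self.upsample_2x_2.weight.data = bilinear_kernel(256, 256, 4)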
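The positional arguments of the ConvTranspose2d layers are (in_channels, out_channels, kernel_size, stride, padding). You can check that they give exact 2x and 8x upsampling with the output-size formula from the PyTorch `nn.ConvTranspose2d` documentation. This is a small sketch that assumes dilation=1 and output_padding=0. The helper name `transposed_out_size` is hypothetical and used only for illustration.

```python
def transposed_out_size(size_in, kernel_size, stride, padding,
                        dilation=1, output_padding=0):
    """Length of one spatial dimension after nn.ConvTranspose2d.

    Uses the output-shape formula from the PyTorch nn.ConvTranspose2d docs.
    """
    return ((size_in - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)


# upsample_2x_1 / upsample_2x_2: kernel_size=4, stride=2, padding=1
print(transposed_out_size(11, 4, 2, 1), transposed_out_size(15, 4, 2, 1))    # 22 30
# upsample_8x: kernel_size=16, stride=8, padding=4
print(transposed_out_size(44, 16, 8, 4), transposed_out_size(60, 16, 8, 4))  # 352 480
```

Choose kernel_size = 2 * stride and padding = stride // 2, with an even stride. The formula then simplifies to size_in * stride. This is why both settings in `__init__` enlarge the feature map by exactly their stride.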

Forward pass (forward)

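Taking VGG16 as an example, we load the model bundled with torchvision with pretrained=True to obtain its architecture. It is the batch-norm variant, vgg16_bn, as the BatchNorm2d layers in the printout show.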

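Printing the feature extractor makes the structure much easier to understand:

The printout below shows that layers 0-6 form the first block, and the rest follow the same pattern: each MaxPool2d layer closes one block.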

Sequential(
  (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (2): ReLU(inplace=True)
  (3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (5): ReLU(inplace=True)
  (6): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (7): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (8): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (9): ReLU(inplace=True)
  (10): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (11): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (12): ReLU(inplace=True)
  (13): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (14): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (15): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (16): ReLU(inplace=True)
  (17): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (18): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (19): ReLU(inplace=True)
  (20): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (21): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (22): ReLU(inplace=True)
  (23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (24): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (25): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (26): ReLU(inplace=True)
  (27): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (28): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (29): ReLU(inplace=True)
  (30): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (31): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (32): ReLU(inplace=True)
  (33): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (34): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (35): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (36): ReLU(inplace=True)
  (37): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (38): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (39): ReLU(inplace=True)
  (40): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (41): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (42): ReLU(inplace=True)
  (43): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
)

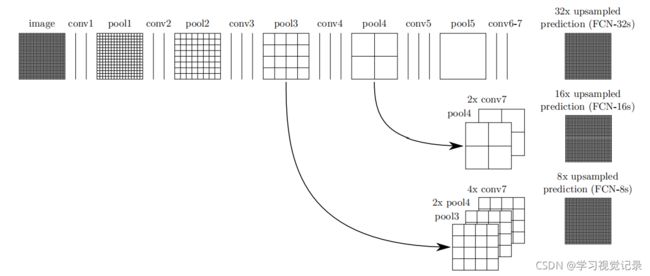
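You can derive the slice boundaries used in `__init__` ([:7], [7:14], [14:24], [24:34], [34:]) from the printout above. In vgg16_bn each 3x3 conv contributes three modules (Conv2d, BatchNorm2d, ReLU), and each "M" contributes one MaxPool2d. The sketch below rebuilds the layer list and cuts it after every pooling layer. `VGG16_CFG` is written out by hand here to mirror the VGG16 configuration, and `stage_slices` is a hypothetical helper for illustration.

```python
# VGG16 layer configuration: ints are conv output channels, "M" is a 2x2 max pool.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def stage_slices(cfg, with_bn=True):
    """Return the flat layer-type list and (start, end) pairs, one pair per stage."""
    layers = []
    for item in cfg:
        if item == "M":
            layers.append("MaxPool2d")
        else:
            layers += (["Conv2d", "BatchNorm2d", "ReLU"] if with_bn
                       else ["Conv2d", "ReLU"])
    slices, start = [], 0
    for idx, name in enumerate(layers):
        if name == "MaxPool2d":  # each pooling layer closes a stage
            slices.append((start, idx + 1))
            start = idx + 1
    return layers, slices


layers, slices = stage_slices(VGG16_CFG)
print(len(layers))  # 44
print(slices)       # [(0, 7), (7, 14), (14, 24), (24, 34), (34, 44)]
```

With with_bn=False (the plain vgg16), the same logic gives (0, 5), (5, 10), (10, 17), (17, 24), (24, 31). The hard-coded indices in `__init__` therefore only match the batch-norm variant.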

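Worked example of the shape changes:

Input: one RGB image of size [352 480 3] (H W C).

After each of the 5 stages: [176 240 64], [88 120 128], [44 60 256], [22 30 512], [11 15 512]. Each stage halves the height and width.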
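The shapes above follow from one rule. The 3x3 convs use padding=1 and keep H and W, and every stage ends with MaxPool2d(kernel_size=2, stride=2, ceil_mode=False), which halves H and W, rounding down. This minimal sketch traces (H, W, C) through the five stages. `encoder_shapes` is a hypothetical helper.

```python
def encoder_shapes(h, w, channels=(64, 128, 256, 512, 512)):
    """Track (H, W, C) after each VGG16 stage."""
    shapes = []
    for c in channels:
        # Convs keep H and W; the 2x2 / stride-2 max pool halves them (floor).
        h, w = h // 2, w // 2
        shapes.append((h, w, c))
    return shapes


print(encoder_shapes(352, 480))
# [(176, 240, 64), (88, 120, 128), (44, 60, 256), (22, 30, 512), (11, 15, 512)]
```

The example works cleanly because 352 = 32 * 11 and 480 = 32 * 15. If a side is not a multiple of 32, the rounding breaks the later additions. For example, with H = 350, s4 has height 21, but upsampling s5 (height 10) by 2 gives 20, so the two tensors cannot be added.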

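Upsampling and skip connections then restore the resolution.

Taking FCN-8s as an example, it fuses the outputs of pool3, pool4 and pool5 (s3, s4, s5):

add1 = upsample_2x(s5) + s4 ----> 22 30 512

add2 = upsample_2x(conv_trans1(add1)) + s3 ----> 44 60 256

output = upsample_8x(conv_trans2(add2)) ----> 352 480 num_classes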
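The fusion steps only work if the two tensors in each addition have identical shapes. The sketch below follows the (H, W, C) shapes through the same sequence of operations as `forward`, and raises an error when an addition would not line up. It assumes num_classes=12, matching the channel count in the code comments. `fcn_decoder_shapes` and its inner helpers are hypothetical names.

```python
def fcn_decoder_shapes(s3, s4, s5, num_classes=12):
    """Follow (H, W, C) shapes through the skip-connection path of forward()."""
    def upsample(shape, factor):  # transposed conv with k=2f, s=f, p=f/2
        h, w, c = shape
        return (h * factor, w * factor, c)

    def conv1x1(shape, out_c):  # a 1x1 conv changes only the channel count
        h, w, _ = shape
        return (h, w, out_c)

    def add(a, b):  # element-wise sum requires identical shapes
        if a != b:
            raise ValueError(f"cannot add {a} and {b}")
        return a

    add1 = add(upsample(s5, 2), s4)                  # upsample_2x_1(s5) + s4
    add2 = add(upsample(conv1x1(add1, 256), 2), s3)  # upsample_2x_2(conv_trans1(add1)) + s3
    out = upsample(conv1x1(add2, num_classes), 8)    # upsample_8x(conv_trans2(add2))
    return {"add1": add1, "add2": add2, "output": out}


print(fcn_decoder_shapes((44, 60, 256), (22, 30, 512), (11, 15, 512)))
# {'add1': (22, 30, 512), 'add2': (44, 60, 256), 'output': (352, 480, 12)}
```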

    def forward(self, x):                  # x: 352 480 3 (a N x 3 x 352 x 480 tensor in PyTorch)
        s1 = self.stage1(x)                # 176 240 64, half the input size
        s2 = self.stage2(s1)               # 88 120 128
        s3 = self.stage3(s2)               # 44 60 256
        s4 = self.stage4(s3)               # 22 30 512
        s5 = self.stage5(s4)               # 11 15 512

        scores1 = self.scores1(s5)         # FCN-32s scores; computed but not used in the output
        s5 = self.upsample_2x_1(s5)        # 22 30 512
        add1 = s5 + s4                     # skip connection: 22 30 512

        scores2 = self.scores2(add1)       # 22 30 12; also not used in the output
        add1 = self.conv_trans1(add1)      # transition conv, channels 512 -> 256
        add1 = self.upsample_2x_2(add1)    # enlarge to 44 60 256
        add2 = add1 + s3                   # skip connection: 44 60 256

        output = self.conv_trans2(add2)    # transition conv, channels 256 -> 12: 44 60 12
        output = self.upsample_8x(output)  # 352 480 12 (44*8, 60*8)
        return output
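The comments call conv_trans1 and conv_trans2 "transition" convolutions that only change the channel count. A 1x1 convolution is the same linear map over channels applied independently at every pixel, so H and W are untouched. The NumPy sketch below shows this for the 512 -> 256 step, in PyTorch's NCHW layout. The random arrays stand in for real feature maps and weights, and `conv1x1` is a hypothetical helper.

```python
import numpy as np


def conv1x1(x, weight, bias):
    """1x1 convolution on an NCHW array.

    weight has shape (C_out, C_in): nn.Conv2d's (C_out, C_in, 1, 1) weight with
    the two trailing 1x1 dimensions dropped.
    """
    # Mix channels at every (h, w) position independently.
    return np.einsum("oc,nchw->nohw", weight, x) + bias[None, :, None, None]


rng = np.random.default_rng(0)
x = rng.standard_normal((1, 512, 22, 30))  # stands in for add1 before conv_trans1
w = rng.standard_normal((256, 512))
b = rng.standard_normal(256)
y = conv1x1(x, w, b)
print(y.shape)  # (1, 256, 22, 30)
```

At any single pixel (i, j), y[0, :, i, j] equals w @ x[0, :, i, j] + b. This is why the layer can reduce 512 channels to 256, or to num_classes, without changing the spatial size.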


3. Parameter initialization

bilinear_kernel(in_channels, out_channels, kernel_size)

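This function builds the initial weight of a transposed convolution from its arguments, so that the layer starts out performing bilinear upsampling. For the bilinear interpolation formula, see: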

https://blog.csdn.net/weixin_40163266/article/details/113776345?ops_request_misc=&request_id=&biz_id=102&utm_term=%E5%8F%8C%E7%8E%B0%E8%B1%A1%E6%8F%92%E5%80%BC&utm_medium=distribute.pc_search_result.none-task-blog-2blogsobaiduweb~default-2-113776345.pc_v2_rank_blog_default&spm=1018.2226.3001.4450.
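The author's own `bilinear_kernel` is not shown here. The sketch below follows the common formulation of this helper, for example the one in the Dive into Deep Learning book, written in NumPy. The model code assigns the result to `.weight.data`, so in PyTorch you would wrap it with `torch.from_numpy`. The sketch also includes a single-channel transposed convolution (`transposed_conv2d_single`, a hypothetical helper) to show that the kernel really interpolates. A 2x upsample of the ramp 0, 1, 2 yields the midpoints 0.25, 0.75, 1.25, 1.75 in its interior.

```python
import numpy as np


def bilinear_kernel(in_channels, out_channels, kernel_size):
    """ConvTranspose2d weight (in, out, k, k) that upsamples each channel bilinearly."""
    factor = (kernel_size + 1) // 2
    center = factor - 1 if kernel_size % 2 == 1 else factor - 0.5
    pos = np.arange(kernel_size)
    filt_1d = 1 - np.abs(pos - center) / factor  # triangular (tent) profile
    filt = np.outer(filt_1d, filt_1d)            # separable 2-D bilinear filter
    weight = np.zeros((in_channels, out_channels, kernel_size, kernel_size),
                      dtype=np.float32)
    idx = np.arange(min(in_channels, out_channels))
    weight[idx, idx] = filt                      # channel i feeds only channel i
    return weight


def transposed_conv2d_single(x, kernel, stride, padding):
    """Single-channel transposed convolution (scatter-add form), no bias."""
    h, w = x.shape
    k = kernel.shape[0]
    full = np.zeros(((h - 1) * stride + k, (w - 1) * stride + k))
    for i in range(h):
        for j in range(w):
            full[i * stride:i * stride + k, j * stride:j * stride + k] += x[i, j] * kernel
    # Padding in a transposed conv crops `padding` pixels from each border.
    return full[padding:full.shape[0] - padding, padding:full.shape[1] - padding]


kernel = bilinear_kernel(1, 1, 4)[0, 0]       # same k=4 as upsample_2x_1 / upsample_2x_2
ramp = np.tile(np.arange(3.0), (3, 1))        # every row is [0, 1, 2]
up = transposed_conv2d_single(ramp, kernel, stride=2, padding=1)
print(up.shape)  # (6, 6)
print(up[2])     # interior columns are 0.25, 0.75, 1.25, 1.75
```

The border values are damped (for example, 1.5 at the last column instead of 2) because fewer input pixels overlap there. The same boundary effect applies to the PyTorch layers initialised this way.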
