数据挖掘笔记Ⅱ——数据清洗(房租预测)

缺失值分析及处理

- 缺失值出现的原因分析

- 采取合适的方式对缺失值进行填充

异常值分析及处理

-

根据测试集数据的分布处理训练集的数据分布

-

使用合适的方法找出异常值

-

对异常值进行处理

深度清洗

- 分析每一个communityName、city、region、plate的数据分布并对其进行数据清洗

0、调包加载数据

首先,我们要做的就是加载可能要用到的包。以及源数据的导入;

#coding:utf-8

#导入warnings包,利用过滤器来实现忽略警告语句。

import warnings

warnings.filterwarnings('ignore')

# GBDT

from sklearn.ensemble import GradientBoostingRegressor

# XGBoost

import xgboost as xgb

# LightGBM

import lightgbm as lgb

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns #Seaborn其实是在matplotlib的基础上进行了更高级的API封装

from sklearn.model_selection import KFold #用于交叉验证的包

from sklearn.metrics import r2_score # 用于评价回归模型 的R²

from sklearn.preprocessing import LabelEncoder # 实现标签(Label) 标准化

import pickle #将对象以文件的形式存放在磁盘上,对一个 Python 对象结构的二进制序列化和反序列化

import multiprocessing #基于进程的并行

from sklearn.preprocessing import StandardScaler #数据预处理 去均值和方差归一化。且是针对每一个特征维度来做

ss = StandardScaler()

# k折交叉切分 用法类似Kfold,但是他是分层采样,确保训练集,测试集中各类别样本的比例与原始数据集中相同

from sklearn.model_selection import StratifiedKFold

# 从线性模型里导包

from sklearn.linear_model import ElasticNet, Lasso, BayesianRidge, LassoLarsIC,LinearRegression,LogisticRegression

# 机器学习里的包 不知道具体怎么用

from sklearn.pipeline import make_pipeline

from sklearn.preprocessing import RobustScaler

from sklearn.model_selection import train_test_split

from sklearn.ensemble import IsolationForest

# 导入数据:测试集 + 训练集

data_train = pd.read_csv("F:\\数据建模\\team-learning-master\\数据竞赛(房租预测)\\数据集\\train_data.csv")

data_test = pd.read_csv("F:\\数据建模\\team-learning-master\\数据竞赛(房租预测)\\数据集\\test_a.csv")

# 加辅助列

data_train['Type'] = 'Train'

data_test['Type'] = 'Test'

# 合并数据集

data_all = pd.concat([data_train, data_test], ignore_index=True) #纵向合并 横向用merge

data_all.head()

| ID | Type | area | bankNum | buildYear | busStationNum | city | communityName | drugStoreNum | gymNum | ... | totalWorkers | tradeLandArea | tradeLandNum | tradeMeanPrice | tradeMoney | tradeNewMeanPrice | tradeNewNum | tradeSecNum | tradeTime | uv | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 100309852 | Train | 68.06 | 16 | 1953 | 36 | SH | XQ00051 | 12 | 15 | ... | 28248 | 0.0 | 0 | 47974.22551 | 2000.0 | 104573.48460 | 25 | 111 | 2018/11/28 | 284.0 |

| 1 | 100307942 | Train | 125.55 | 16 | 2007 | 184 | SH | XQ00130 | 27 | 5 | ... | 14823 | 0.0 | 0 | 40706.66775 | 2000.0 | 33807.53497 | 2 | 2 | 2018/12/16 | 22.0 |

| 2 | 100307764 | Train | 132.00 | 37 | 暂无信息 | 60 | SH | XQ00179 | 24 | 35 | ... | 77645 | 0.0 | 0 | 34384.35089 | 16000.0 | 109734.16040 | 11 | 555 | 2018/12/22 | 20.0 |

| 3 | 100306518 | Train | 57.00 | 47 | 暂无信息 | 364 | SH | XQ00313 | 83 | 30 | ... | 8750 | 108037.8 | 1 | 20529.55050 | 1600.0 | 30587.07058 | 58 | 260 | 2018/12/21 | 279.0 |

| 4 | 100305262 | Train | 129.00 | 10 | 暂无信息 | 141 | SH | XQ01257 | 21 | 5 | ... | 800 | 0.0 | 0 | 24386.36577 | 2900.0 | 51127.32846 | 34 | 38 | 2018/11/18 | 480.0 |

5 rows × 52 columns

1、缺失值处理

然后就是确实值得处理,先理清思路。什么是缺失值,已经在上篇文章中,阐述过了。这里按照实际情况处理缺失值;

主要思路分析

虽然这步骤是缺失值处理,但还会涉及到一些最最基础的数据处理。

- 缺失值处理

缺失值的处理手段大体可以分为:删除、填充、映射到高维(当做类别处理)。

根据任务一,直接找到的缺失值情况是pu和pv;但是,根据特征nunique分布的分析,可以发现rentType存在"–“的情况,这也算是一种缺失值。

此外,诸如rentType的"未知方式”;houseToward的"暂无数据"等,本质上也算是一种缺失值,但是对于这些缺失方式,我们可以把它当做是特殊的一类处理,而不需要去主动修改或填充值。

将rentType的"–“转换成"未知方式"类别;

pv/pu的缺失值用均值填充;

buildYear存在"暂无信息”,将其用众数填充。

-

转换object类型数据

这里直接采用LabelEncoder的方式编码,详细的编码方式请自行查阅相关资料学习。 -

时间字段的处理

buildYear由于存在"暂无信息",所以需要主动将其转换int类型;

tradeTime,将其分割成月和日。 -

删除无关字段

ID是唯一码,建模无用,所以直接删除;

city只有一个SH值,也直接删除;

tradeTime已经分割成月和日,删除原来字段

def preprocessingData(data):

# 填充缺失值

data['rentType'][data['rentType'] == '--'] = '未知方式' # 把rentType列 里 "--" 的值填充为 "未知方式"

# 转换object类型数据

columns = ['rentType','communityName','houseType', 'houseFloor', 'houseToward', 'houseDecoration', 'region', 'plate']

for feature in columns:

data[feature] = LabelEncoder().fit_transform(data[feature])

# LabelEncoder() 调用处理标签的包 对数据进行标准化,降维,归一化等操作(比如标准化~N(0,1)

# 将buildYear列转换为整型数据

buildYearmean = pd.DataFrame(data[data['buildYear'] != '暂无信息']['buildYear'].mode()) # mode() 众数函数

# 找出buildYear 列 值不等于"暂无信息" 的值得集合 中的众数

data.loc[data[data['buildYear'] == '暂无信息'].index, 'buildYear'] = buildYearmean.iloc[0, 0] # 用众数填补 暂无信息 的值

# loc:通过选取行(列)标签索引数据

# iloc:通过选取行(列)位置编号索引数据

# ix:既可以通过行(列)标签索引数据,也可以通过行(列)位置编号索引数据

data['buildYear'] = data['buildYear'].astype('int')# 将变量buildYear转换为int型

# 处理pv和uv的空值

data['pv'].fillna(data['pv'].mean(), inplace=True) # 均值填充缺失值

data['uv'].fillna(data['uv'].mean(), inplace=True) # 同上

data['pv'] = data['pv'].astype('int') # 将变量 转换为int型

data['uv'] = data['uv'].astype('int')

# 分割交易时间

def month(x):

month = int(x.split('/')[1]) # split() 通过指定分隔符对字符串进行切片

return month

def day(x):

day = int(x.split('/')[2])

return day

data['month'] = data['tradeTime'].apply(lambda x: month(x)) # 根据month()函数 切割tradeTime

data['day'] = data['tradeTime'].apply(lambda x: day(x)) # 根据day()函数 切割tradeTime

# 去掉部分特征

data.drop('city', axis=1, inplace=True) #drop函数默认删除行,列需要加axis = 1

data.drop('tradeTime', axis=1, inplace=True)

data.drop('ID', axis=1, inplace=True)

return data

# 凡是会对原数组作出修改并返回一个新数组的,往往都有一个 inplace可选参数。

# 如果手动设定为True(默认为False),那么原数组直接就被替换。

# 也就是说,采用inplace=True之后,原数组名对应的内存值直接改变;

data_train = preprocessingData(data_train)

这里一共处理了:

- 填充出租方式的的空值("–")

- 将类别变量标准化处理

- 将buildYear列转换为整型数据,用众数填补buildYear的缺失值

- 处理pv和uv的空值,用均值填补

- 将成交时间的日期切割

- 删除没有用处的"city,tradeTime,ID"

2、异常值处理

主要思路分析

- 这里主要针对area和tradeMoney两个维度处理。

- 针对tradeMoney,这里采用的是IsolationForest模型自动处理;

- 针对areahetotalFloor是主观+数据可视化的方式得到的结果。

1)什么是异常值

- 异常值是指样本中的个别值,其数值明显偏离其余的观测值。也被称为离群点;

- 如果数据服从正态分布,在3σ原则下,异常值被定义为一组测定值中与平均值的偏差超过3倍标准差的值。在正态分布的假设下,距离平均值3σ之外的值出现的概率为P(|x-μ|>3σ)≤0.003

2)检测租金的异常值,并删除

# clean data

def IF_drop(train):

IForest = IsolationForest(contamination=0.01) # 异常值的检测算法函数 contamination 参数是设定样本的异常值比例

IForest.fit(train["tradeMoney"].values.reshape(-1,1))

# fit() 对 tradeMoney 列的值用 reshape(-1,1)

y_pred = IForest.predict(train["tradeMoney"].values.reshape(-1,1))

drop_index = train.loc[y_pred==-1].index

print(drop_index)

train.drop(drop_index,inplace=True)

return train

data_train = IF_drop(data_train)

C:\ProgramData\Anaconda3\lib\site-packages\sklearn\ensemble\iforest.py:417: DeprecationWarning: threshold_ attribute is deprecated in 0.20 and will be removed in 0.22.

" be removed in 0.22.", DeprecationWarning)

Int64Index([ 62, 69, 128, 131, 246, 261, 266, 297, 308,

313,

...

39224, 39228, 39319, 39347, 39352, 39434, 39563, 41080, 41083,

41233],

dtype='int64', length=402)

这里删除了了租金的异常值,列出了索引,共402个。

3)删掉一些不合理的异常值

def dropData(train):

# 丢弃部分异常值

train = train[train.area <= 200] # 筛选房屋面积小于200

train = train[(train.tradeMoney <=16000) & (train.tradeMoney >=700)] # 筛选租金在700-16000的

train.drop(train[(train['totalFloor'] == 0)].index, inplace=True) # 删除楼层数为0的

return train

#数据集异常值处理

data_train = dropData(data_train)

这里从业务逻辑上出发,删除了不符合常理或出现较少的情况下的行记录

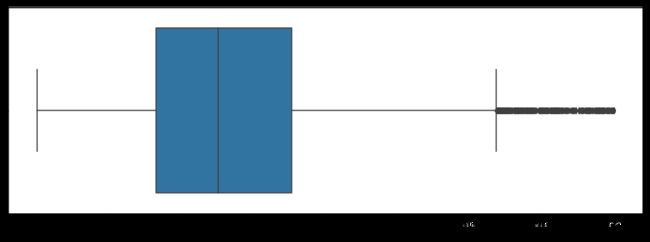

4)用箱线图识别异常值

- 箱型图提供了识别异常值的一个标准:异常值通常被定义为小于Q_{l} -1.5IQR 或大于Q_{u} +1.5IQR的值。Q_{l}称为下四分位数,表示全部观察值中有四分之一的数据取值比它下;Q_{u}称为上四分位数,表示全部观察值中有四分之一的数据取值比它大;IQR称为四分位数间距,是上四分位数和下四分位数之差,期间包含了全部观察值的一半。(规定:超过上四分位+1.5倍IQR距离,或者下四分位-1.5倍IQR距离的点为异常值)

# 这里想看一下处理后的训练集情况

# data_train.info()

# 处理异常值后再次查看面积和租金分布图

plt.figure(figsize=(15,5))

sns.boxplot(data_train.area)

plt.show()

plt.figure(figsize=(15,5))

sns.boxplot(data_train.tradeMoney)

plt.show()

- 虽然已经删除了房屋面积大于200的记录,但还是存在房屋面积在160-200之间的离群数据;

- 同理,9000-16000的离群数据也还是有很多

3、深度清洗

主要思路分析

- 针对每一个region的数据,对area和tradeMoney两个维度进行深度清洗。 采用主观+数据可视化的方式。

注:drop函数,index是找出满足条件行的索引,inplace参数为True是在原数据集上进行操作,且不返回处理后的结果

- If True, do operation inplace and return None

def cleanData(data):

data.drop(data[(data['region']=='RG00001') & (data['tradeMoney']<1000)&(data['area']>50)].index,inplace=True)

data.drop(data[(data['region']=='RG00001') & (data['tradeMoney']>25000)].index,inplace=True)

data.drop(data[(data['region']=='RG00001') & (data['area']>250)&(data['tradeMoney']<20000)].index,inplace=True)

data.drop(data[(data['region']=='RG00001') & (data['area']>400)&(data['tradeMoney']>50000)].index,inplace=True)

data.drop(data[(data['region']=='RG00001') & (data['area']>100)&(data['tradeMoney']<2000)].index,inplace=True)

data.drop(data[(data['region']=='RG00002') & (data['area']<100)&(data['tradeMoney']>60000)].index,inplace=True)

data.drop(data[(data['region']=='RG00003') & (data['area']<300)&(data['tradeMoney']>30000)].index,inplace=True)

data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']<500)&(data['area']<50)].index,inplace=True)

data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']<1500)&(data['area']>100)].index,inplace=True)

data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']<2000)&(data['area']>300)].index,inplace=True)

data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']>5000)&(data['area']<20)].index,inplace=True)

data.drop(data[(data['region']=='RG00003') & (data['area']>600)&(data['tradeMoney']>40000)].index,inplace=True)

data.drop(data[(data['region']=='RG00004') & (data['tradeMoney']<1000)&(data['area']>80)].index,inplace=True)

data.drop(data[(data['region']=='RG00006') & (data['tradeMoney']<200)].index,inplace=True)

data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']<2000)&(data['area']>180)].index,inplace=True)

data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']>50000)&(data['area']<200)].index,inplace=True)

data.drop(data[(data['region']=='RG00006') & (data['area']>200)&(data['tradeMoney']<2000)].index,inplace=True)

data.drop(data[(data['region']=='RG00007') & (data['area']>100)&(data['tradeMoney']<2500)].index,inplace=True)

data.drop(data[(data['region']=='RG00010') & (data['area']>200)&(data['tradeMoney']>25000)].index,inplace=True)

data.drop(data[(data['region']=='RG00010') & (data['area']>400)&(data['tradeMoney']<15000)].index,inplace=True)

data.drop(data[(data['region']=='RG00010') & (data['tradeMoney']<3000)&(data['area']>200)].index,inplace=True)

data.drop(data[(data['region']=='RG00010') & (data['tradeMoney']>7000)&(data['area']<75)].index,inplace=True)

data.drop(data[(data['region']=='RG00010') & (data['tradeMoney']>12500)&(data['area']<100)].index,inplace=True)

data.drop(data[(data['region']=='RG00004') & (data['area']>400)&(data['tradeMoney']>20000)].index,inplace=True)

data.drop(data[(data['region']=='RG00008') & (data['tradeMoney']<2000)&(data['area']>80)].index,inplace=True)

data.drop(data[(data['region']=='RG00009') & (data['tradeMoney']>40000)].index,inplace=True)

data.drop(data[(data['region']=='RG00009') & (data['area']>300)].index,inplace=True)

data.drop(data[(data['region']=='RG00009') & (data['area']>100)&(data['tradeMoney']<2000)].index,inplace=True)

data.drop(data[(data['region']=='RG00011') & (data['tradeMoney']<10000)&(data['area']>390)].index,inplace=True)

data.drop(data[(data['region']=='RG00012') & (data['area']>120)&(data['tradeMoney']<5000)].index,inplace=True)

data.drop(data[(data['region']=='RG00013') & (data['area']<100)&(data['tradeMoney']>40000)].index,inplace=True)

data.drop(data[(data['region']=='RG00013') & (data['area']>400)&(data['tradeMoney']>50000)].index,inplace=True)

data.drop(data[(data['region']=='RG00013') & (data['area']>80)&(data['tradeMoney']<2000)].index,inplace=True)

data.drop(data[(data['region']=='RG00014') & (data['area']>300)&(data['tradeMoney']>40000)].index,inplace=True)

data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<1300)&(data['area']>80)].index,inplace=True)

data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<8000)&(data['area']>200)].index,inplace=True)

data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<1000)&(data['area']>20)].index,inplace=True)

data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']>25000)&(data['area']>200)].index,inplace=True)

data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<20000)&(data['area']>250)].index,inplace=True)

data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']>30000)&(data['area']<100)].index,inplace=True)

data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']<50000)&(data['area']>600)].index,inplace=True)

data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']>50000)&(data['area']>350)].index,inplace=True)

data.drop(data[(data['region']=='RG00006') & (data['tradeMoney']>4000)&(data['area']<100)].index,inplace=True)

data.drop(data[(data['region']=='RG00006') & (data['tradeMoney']<600)&(data['area']>100)].index,inplace=True)

data.drop(data[(data['region']=='RG00006') & (data['area']>165)].index,inplace=True)

data.drop(data[(data['region']=='RG00012') & (data['tradeMoney']<800)&(data['area']<30)].index,inplace=True)

data.drop(data[(data['region']=='RG00007') & (data['tradeMoney']<1100)&(data['area']>50)].index,inplace=True)

data.drop(data[(data['region']=='RG00004') & (data['tradeMoney']>8000)&(data['area']<80)].index,inplace=True)

data.loc[(data['region']=='RG00002')&(data['area']>50)&(data['rentType']=='合租'),'rentType']='整租'

data.loc[(data['region']=='RG00014')&(data['rentType']=='合租')&(data['area']>60),'rentType']='整租'

data.drop(data[(data['region']=='RG00008')&(data['tradeMoney']>15000)&(data['area']<110)].index,inplace=True)

data.drop(data[(data['region']=='RG00008')&(data['tradeMoney']>20000)&(data['area']>110)].index,inplace=True)

data.drop(data[(data['region']=='RG00008')&(data['tradeMoney']<1500)&(data['area']<50)].index,inplace=True)

data.drop(data[(data['region']=='RG00008')&(data['rentType']=='合租')&(data['area']>50)].index,inplace=True)

data.drop(data[(data['region']=='RG00015') ].index,inplace=True)

data.reset_index(drop=True, inplace=True)

return data

data_train = cleanData(data_train)

4、结论

通过一些检测方法我们可以找到异常值,但所得结果并不是绝对正确的,具体情况还需自己根据业务的理解加以判断。同样,对于异常值如何处理,是该删除,修正,还是不处理也需结合实际情况考虑,没有固定的。