OpenCV 4.1 can't load a Python CNN model, and compiling the third-party libtensorflow_cc.so is full of pitfalls

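Two months ago a colleague trained a CNN model in Python. It had batch-norm and dropout layers and was saved as a .pb file. It turned out that OpenCV could not load it, or at least that is how it seemed. So I searched around and found that I could compile the third-party library libtensorflow_cc.so myself. Supposedly that would let me load any model trained in Python, whatever its layers and whether it is a .pb, a .meta or another format.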

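Back then I tried the tutorials by several experts, including "TensorFlow C++ dynamic library compilation" (Jianshu), "TensorFlow C++ environment setup" (Jianshu) and "Compiling and using the TensorFlow C dynamic link library" by handspeaker (cnblogs):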

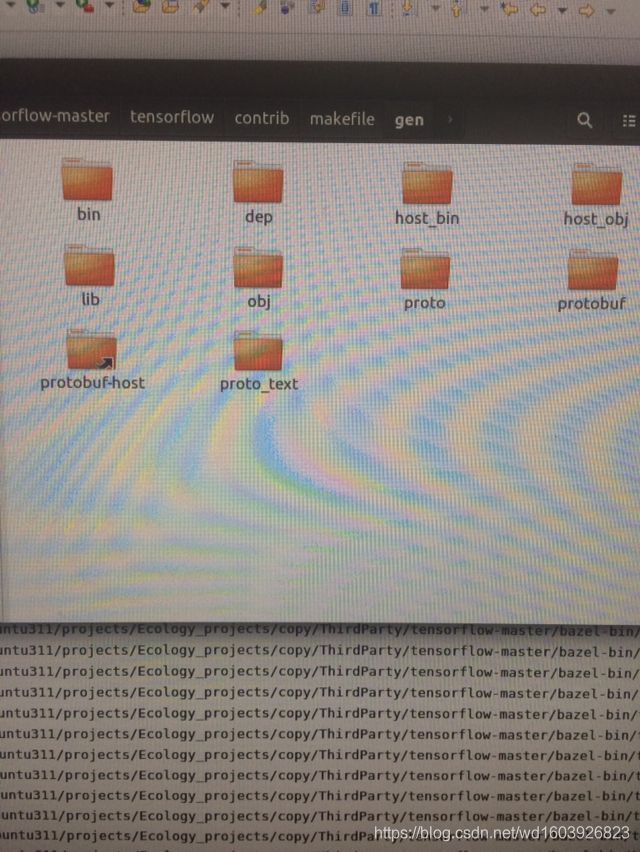

Following their tutorials, this is as far as I got: after an extremely long build, I finally had libtensorflow_cc.so. However,


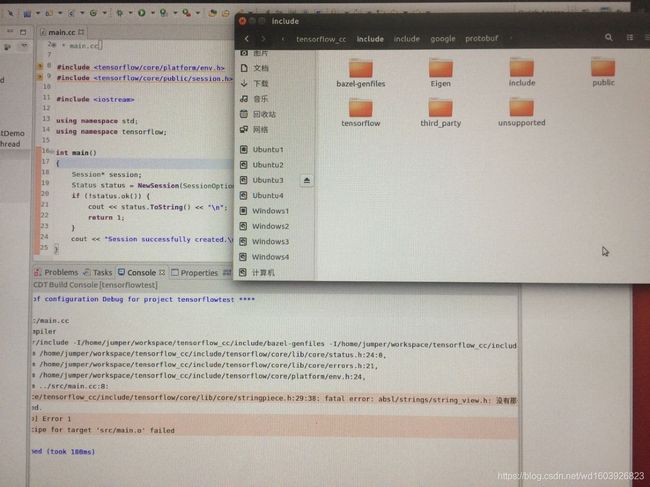

All of these folders were generated. But when I added libtensorflow_cc.so to the environment variables, I got an error:


At that point I had tried countless fixes and still couldn't get it working!

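Today I had to pick this up again. Instead of rebuilding everything, I reused the libtensorflow_cc.so I had built two months ago. I followed the second half of "Installing TensorFlow C++ from source on Linux" (dragonchow123's blog, CSDN), specifically section "5. Organizing the header files and .so libraries". This time the earlier "magic number" error no longer appeared!

When I ran the example, though, it kept saying libtensorflow_cc.so could not be found, even though I had configured the include and lib paths. I tried every method mentioned online again:

- adding the path to ld.so.conf and running ldconfig
- adding it to .bashrc or profile and running source
- setting LD_LIBRARY_PATH or LIBRARY_PATH
- copying the library directly into /lib or /usr/lib

The example still reported that libtensorflow_cc.so could not be found!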

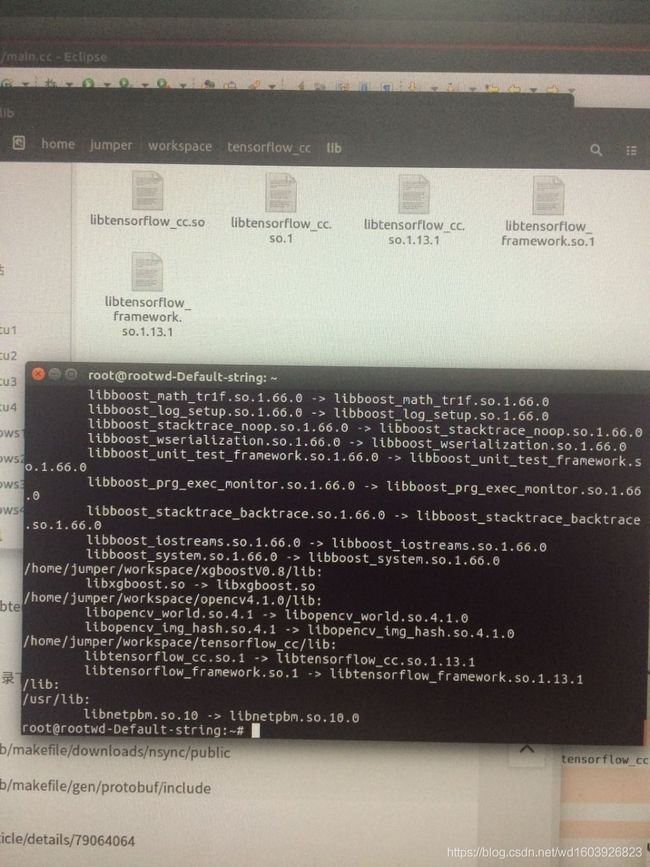

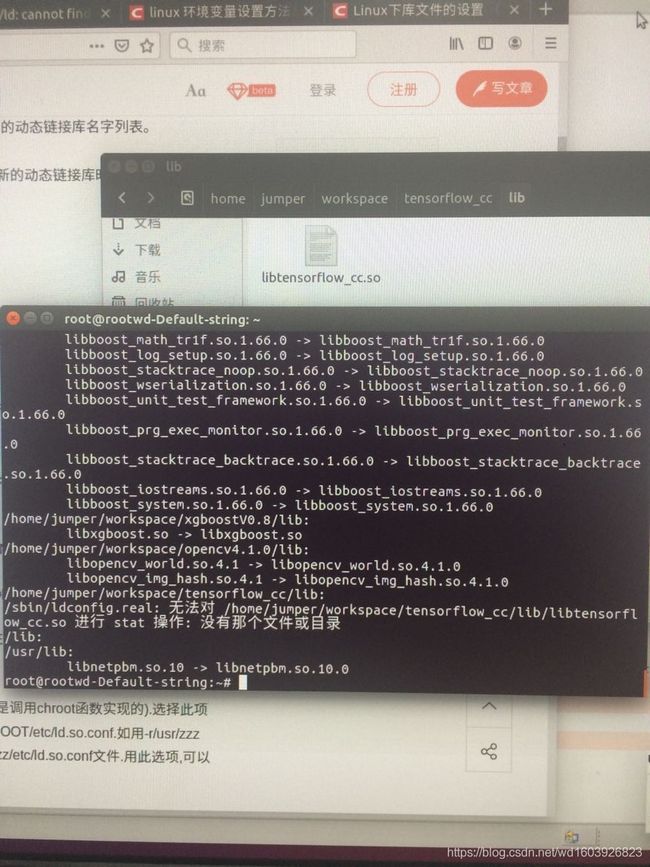

Running ldconfig -v directly printed the following:

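As you can see, the library is clearly in that folder, yet the error insists it doesn't exist! Infuriating! Naturally, gcc -ltensorflow_cc --verbose couldn't find the library either, and neither could the example at run time.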


I then tried many more of the online methods for adding the library to the environment variables, still with no effect!

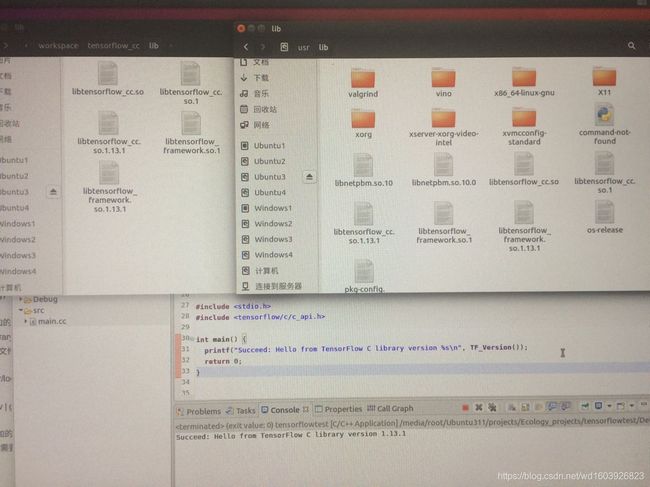

Later, just messing around, I copied all of these libraries into this folder, and the error actually disappeared, ha!

But gcc -ltensorflow_cc --verbose still couldn't find the library. So I tried copying all 5 libraries into /usr/lib and running ldconfig, and then it actually worked!

Look, the example finally prints the TensorFlow library's information correctly! After working overtime until 9 pm, I was in tears!

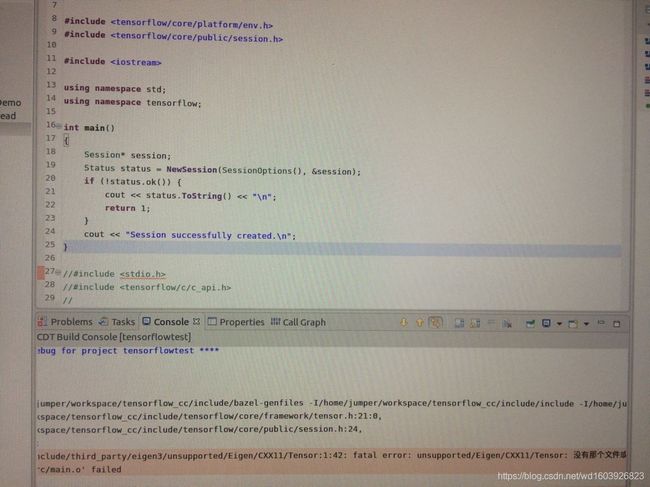

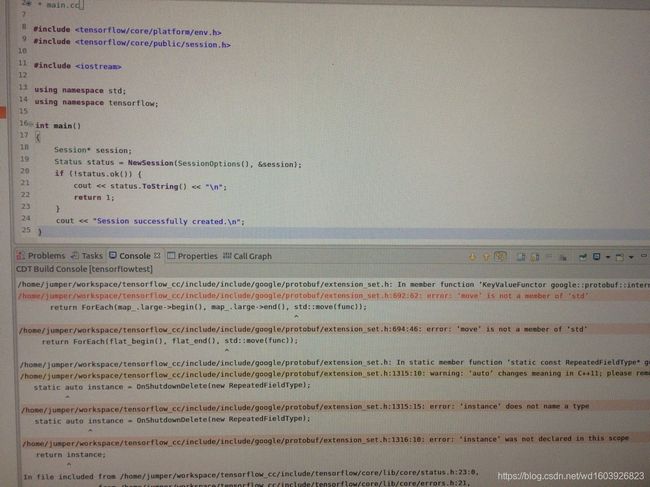

Today I tried another example:

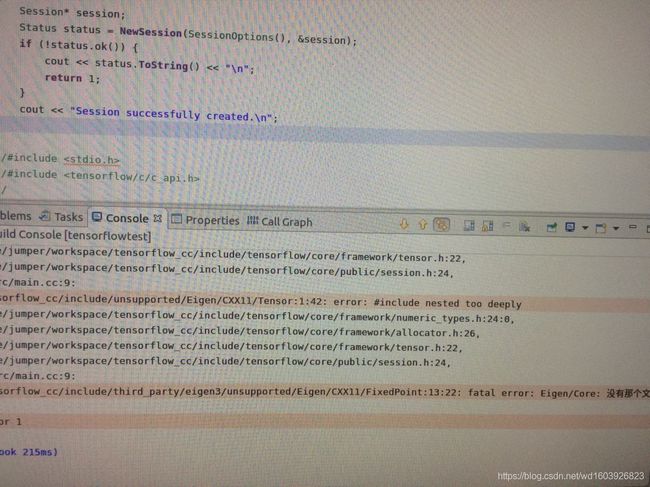

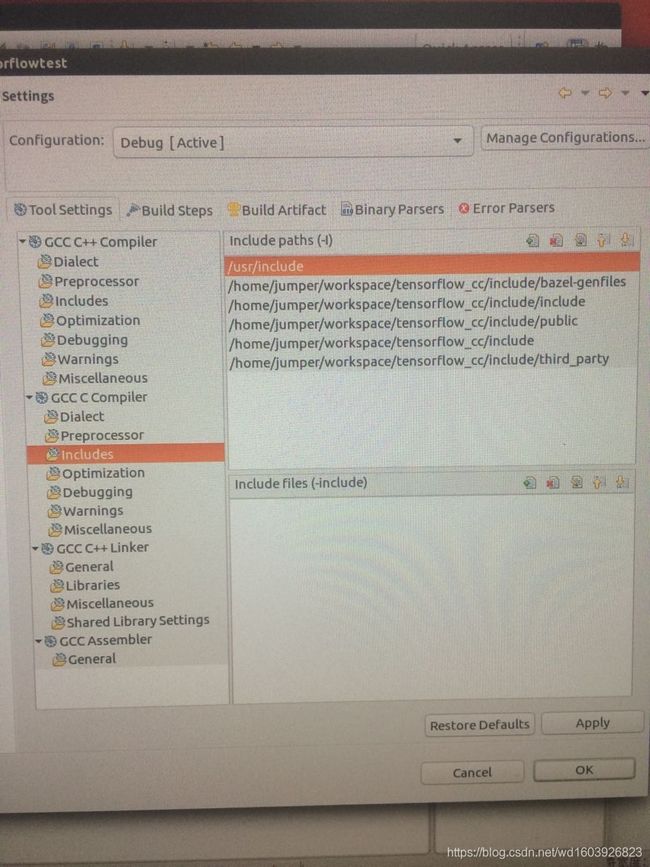

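So I worked through the errors it reported. I added -std=c++11, and I added the directories for the two missing headers to the include path:

- fatal error: absl/strings/string_view.h: No such file or directory
- CXX11/Tensor:1:42: fatal error: unsupported/Eigen/CXX11/Tensor: No such file or directory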

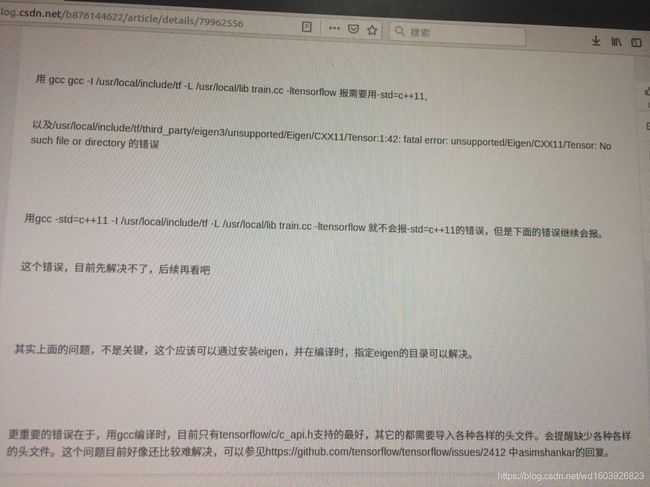

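And it still wouldn't end. I looked it up, and someone online said (see the last sentence of the screenshot) that c/c_api.h is the best-supported interface. Is that why yesterday's example worked?!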

```
google/protobuf/extension_set.h:692:62: error: ‘move’ is not a member of ‘std’
bazel-genfiles/tensorflow/core/lib/core/error_codes.pb.h:76:58: error: ‘<::’ cannot begin a template-argument list [-fpermissive]
absl/base/internal/throw_delegate.h:39:1: error: expected unqualified-id before ‘[’ token
absl/strings/string_view.h:146:9: error: expected nested-name-specifier before ‘traits_type’
```

These are the endless errors it reports now... Sigh, this thing is full of pitfalls.

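Fix for "eigen3/unsupported/Eigen/CXX11/Tensor:1:42: fatal error: unsupported/Eigen/CXX11/Tensor: No such file or directory": it turned out the Python on my system had no TensorFlow installed! So I installed the matching TensorFlow version into Python by following "Installing TensorFlow (CPU version) on Ubuntu" (Jianshu). Then I copied the unsupported folder from that Python TensorFlow package to where I had put the tensorflow_cc headers: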

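My earlier mistake was putting the unsupported folder from the third-party TensorFlow source tree here. That is wrong! It has to be the unsupported folder from the Python TensorFlow package. After that change, the earlier compile errors went away. As far as I can tell, the copy in the TensorFlow source tree is only a one-line forwarding header that includes the real Eigen header. That would explain why the error points at line 1 of Tensor.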

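Now only this error remains: "tensorflow/core/lib/core/stringpiece.h:29:38: fatal error: absl/strings/string_view.h: No such file or directory". My original fix was to drop some absl folder I found in the TensorFlow source tree into the include directory shown in the screenshot above, which was wrong! Some people say you have to put the folder tensorflow/contrib/makefile/downloads/absl/absl into include instead. When I tried that, I got even more errors. So annoying.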

Just now I followed this expert's method and built the proto and eigen folders under /tmp.

While building proto I hit a small error, a missing eigen3/gebp_neon.patch, but I ignored it.

Then I went on to build eigen.

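Next I deleted my old Eigen and unsupported folders and replaced them with the Eigen and unsupported folders from the freshly built proto and eigen under /tmp. I then added the include and lib directories of proto and eigen under /tmp to the project settings and to the environment variables.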

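As I mentioned before, the build that produced libtensorflow_cc.so somehow produced no libtensorflow_framework.so. It gave only libtensorflow_framework.so.1 and libtensorflow_framework.so.1.13.1. So I created libtensorflow_framework.so as a symbolic link and added it to the project. Then it actually compiled, as shown in the screenshot.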

But running it produced an error:

```
2019-06-25 20:09:37.474938: E tensorflow/core/common_runtime/session.cc:81] Not found: No session factory registered for the given session options: {target: "" config: } Registered factories are {}.
Not found: No session factory registered for the given session options: {target: "" config: } Registered factories are {}.
```

Anyway, I'm already happy that it compiles at all.

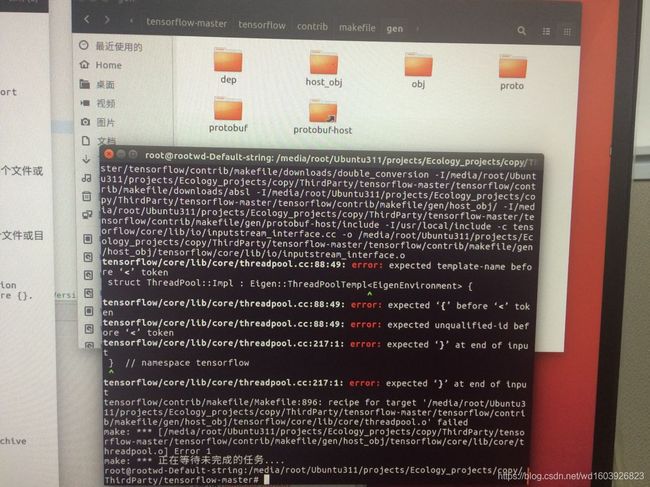

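I looked it up and found people saying this happens because the libtensorflow-core library isn't linked into the project. Someone on GitHub suggested -Wl,--allow-multiple-definition -Wl,--whole-archive, but it didn't help. Then I tried ./tensorflow/contrib/makefile/build_all_linux.sh, as suggested in "Building an online prediction service with the TensorFlow C++ API, part 1" (青松愉快's blog, CSDN) and elsewhere. It looked close to succeeding, but the build ultimately failed: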

with these errors:

```
./unsupported/Eigen/CXX11/Tensor:1:42: error: #include nested too deeply
tensorflow/core/lib/core/threadpool.cc:88:49: error: expected template-name before ‘<’ token
struct ThreadPool::Impl : Eigen::ThreadPoolTempl {
^
tensorflow/core/lib/core/threadpool.cc:88:49: error: expected ‘{’ before ‘<’ token
tensorflow/core/lib/core/threadpool.cc:88:49: error: expected unqualified-id before ‘<’ token
tensorflow/core/lib/core/threadpool.cc:217:1: error: expected ‘}’ at end of input
} // namespace tensorflow
^
tensorflow/core/lib/core/threadpool.cc:217:1: error: expected ‘}’ at end of input
```

On top of that, no libtensorflow_core library appeared under gen, so this is probably where the problem lies. I have searched for a long time and still haven't figured out what causes these errors.

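But then I read the explanation in "Training models with the TensorFlow C++ API" (Spockwang's Blog). It says you use either the shared library libtensorflow_cc.so or the static library libtensorflow_core.a. Since I already have libtensorflow_cc.so working, I don't actually need to build libtensorflow_core.a, right?

So should I fix the "not registered" error from the second example the way he describes? He writes: "If at run time you get errors saying some ops are not registered, add the corresponding op source files (under tensorflow/core/kernels/) to tf_op_files.txt and rebuild." I tried it. It still didn't work, because the session.cc in the error is not under core/kernels.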

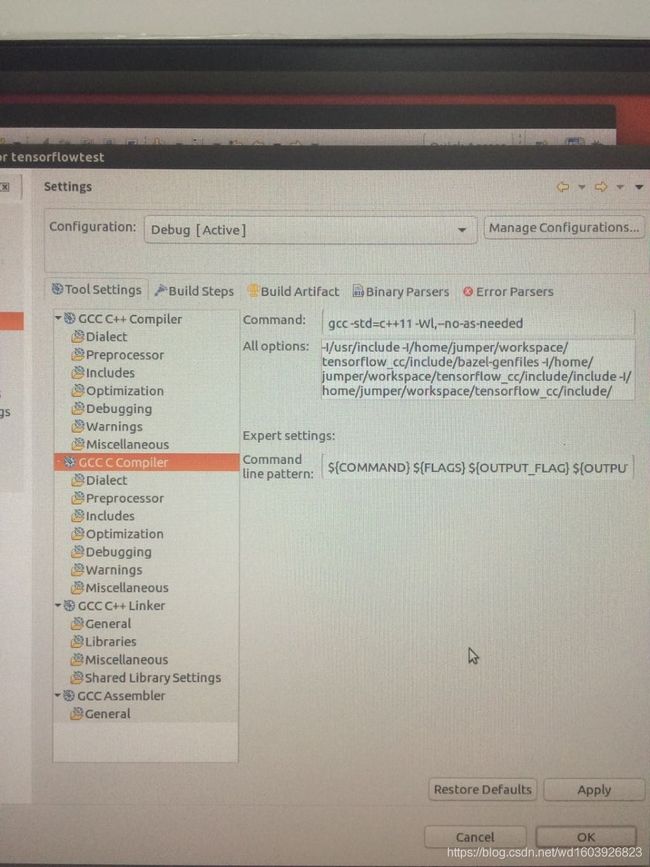

Today I tried another approach: -Wl,--no-as-needed

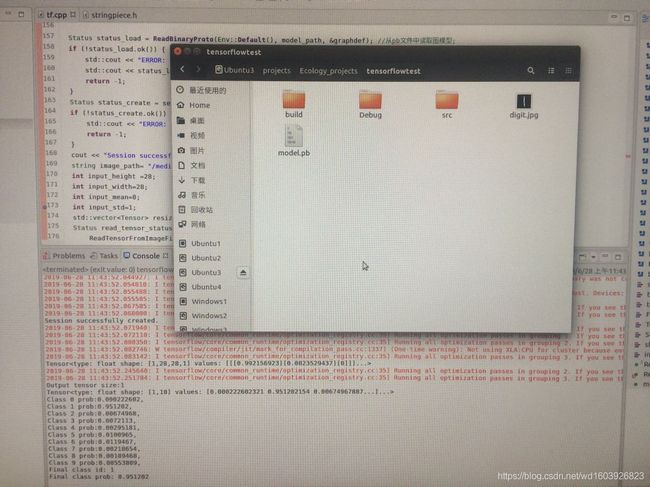

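It actually produced output! There are some warnings in red, but the output is still correct. These are my include and lib settings; below is my project configuration at this point: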

That's it for now, since the output is correct. So happy, aaaaah!

************************************************************************************************************

Fixing the red warnings from last time.

```
2019-06-27 14:24:40.050190: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA
2019-06-27 14:24:40.070870: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 3407965000 Hz
2019-06-27 14:24:40.071201: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x1ef7780 executing computations on platform Host. Devices:
2019-06-27 14:24:40.071216: I tensorflow/compiler/xla/service/service.cc:175] StreamExecutor device (0): ,
Session successfully created.
```

These are the warnings in question. I looked them up. The first line says my CPU supports instruction sets (SSE4.1, SSE4.2, AVX, AVX2, FMA) that this TensorFlow binary was not compiled to use. So there is room to upgrade: a TensorFlow build that uses them could reportedly run up to three times faster. So happy!

```cpp
#include <vector>

#include "tensorflow/cc/client/client_session.h"
#include "tensorflow/cc/ops/standard_ops.h"
#include "tensorflow/core/framework/tensor.h"

int main() {
  using namespace tensorflow;
  using namespace tensorflow::ops;
  Scope root = Scope::NewRootScope();
  // Matrix A = [3 2; -1 0]
  auto A = Const(root, { {3.f, 2.f}, {-1.f, 0.f}});
  // Vector b = [3 5]
  auto b = Const(root, { {3.f, 5.f}});
  // v = Ab^T
  auto v = MatMul(root.WithOpName("v"), A, b, MatMul::TransposeB(true));
  std::vector<Tensor> outputs;
  ClientSession session(root);
  // Run and fetch v
  TF_CHECK_OK(session.Run({v}, &outputs));
  // Expect outputs[0] == [19; -3]
  LOG(INFO) << outputs[0].matrix<float>();
  return 0;
}
```

I ran this example, and it correctly prints 19 and -3:

```
2019-06-27 14:58:54.463627: I tensorflow/core/platform/cpu_feature_guard.cc:142] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA
2019-06-27 14:58:54.480724: I tensorflow/core/platform/profile_utils/cpu_utils.cc:94] CPU Frequency: 3407935000 Hz
2019-06-27 14:58:54.481503: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x2b1d6d0 executing computations on platform Host. Devices:
2019-06-27 14:58:54.481545: I tensorflow/compiler/xla/service/service.cc:175] StreamExecutor device (0): ,
2019-06-27 14:58:54.484842: I tensorflow/core/common_runtime/optimization_registry.cc:35] Running all optimization passes in grouping 0. If you see this a lot, you might be extending the graph too many times (which means you modify the graph many times before execution). Try reducing graph modifications or using SavedModel to avoid any graph modification
2019-06-27 14:58:54.487872: I tensorflow/core/common_runtime/optimization_registry.cc:35] Running all optimization passes in grouping 1. If you see this a lot, you might be extending the graph too many times (which means you modify the graph many times before execution). Try reducing graph modifications or using SavedModel to avoid any graph modification
2019-06-27 14:58:54.491739: I tensorflow/core/common_runtime/optimization_registry.cc:35] Running all optimization passes in grouping 2. If you see this a lot, you might be extending the graph too many times (which means you modify the graph many times before execution). Try reducing graph modifications or using SavedModel to avoid any graph modification
2019-06-27 14:58:54.493086: W tensorflow/compiler/jit/mark_for_compilation_pass.cc:1337] (One-time warning): Not using XLA:CPU for cluster because envvar TF_XLA_FLAGS=--tf_xla_cpu_global_jit was not set. If you want XLA:CPU, either set that envvar, or use experimental_jit_scope to enable XLA:CPU. To confirm that XLA is active, pass --vmodule=xla_compilation_cache=1 (as a proper command-line flag, not via TF_XLA_FLAGS) or set the envvar XLA_FLAGS=--xla_hlo_profile.
2019-06-27 14:58:54.493679: I tensorflow/core/common_runtime/optimization_registry.cc:35] Running all optimization passes in grouping 3. If you see this a lot, you might be extending the graph too many times (which means you modify the graph many times before execution). Try reducing graph modifications or using SavedModel to avoid any graph modification
2019-06-27 14:58:54.499085: I ../src/main.cc:27] 19
-3
```

There are still warnings, but I'll ignore them for now.

Great, it's usable now.

*************************************************************************************************

Testing an example from the web that loads a model: "Deep learning deployment: running forward inference from C++" (visionshop's blog, CSDN)

But it failed to compile:

```
../src/tf.cpp:55:37: error: ‘using StringPiece = class absl::string_view {aka class absl::string_view}’ has no member named ‘ToString’
output->scalar()() = data.ToString();
^
../src/tf.cpp: In function ‘tensorflow::Status ReadTensorFromImageFile(const string&, int, int, float, float, std::vector*)’:
../src/tf.cpp:86:42: error: ‘using StringPiece = class absl::string_view {aka class absl::string_view}’ has no member named ‘ends_with’
if (tensorflow::StringPiece(file_name).ends_with(".png")) {
^
../src/tf.cpp:89:49: error: ‘using StringPiece = class absl::string_view {aka class absl::string_view}’ has no member named ‘ends_with’
} else if (tensorflow::StringPiece(file_name).ends_with(".gif")) {
^
../src/tf.cpp:94:49: error: ‘using StringPiece = class absl::string_view {aka class absl::string_view}’ has no member named ‘ends_with’
} else if (tensorflow::StringPiece(file_name).ends_with(".bmp")) {
^
../src/tf.cpp: In function ‘int main(int, char**)’:
../src/tf.cpp:189:7: warning: unused variable ‘ndim2’ [-Wunused-variable]
int ndim2 = t.shape().dims(); // Get the dimension of the tensor
^
make: *** [src/tf.o] Error 1
src/subdir.mk:32: recipe for target 'src/tf.o' failed
```

I searched for the cause for a long time without success. When I looked at the string_view source, it really has none of the member functions in the errors. So maybe I'm using the wrong version, or these functions have been deprecated.

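Today I finally solved it. The example above was modeled on tensorflow/main.cc at master · tensorflow/tensorflow · GitHub. Upstream has since updated that file, but many older Chinese blog posts still use the old version, which is why it broke for me.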

So I followed the upstream version and commented out the old, broken code:

```cpp
/*
 * test tensorflow_cc c++ successfully
 * load mnist.pb model successfully
 * 2019.6.28
 * wang dan
 * reference: https://github.com/tensorflow/tensorflow/blob/master/tensorflow/examples/label_image
 */
#include <iostream>
#include <memory>
#include <string>
#include <utility>
#include <vector>

#include "tensorflow/cc/ops/const_op.h"
#include "tensorflow/cc/ops/image_ops.h"
#include "tensorflow/cc/ops/standard_ops.h"
#include "tensorflow/core/framework/graph.pb.h"
#include "tensorflow/core/framework/tensor.h"
#include "tensorflow/core/graph/default_device.h"
#include "tensorflow/core/graph/graph_def_builder.h"
#include "tensorflow/core/lib/core/errors.h"
#include "tensorflow/core/lib/core/stringpiece.h"
#include "tensorflow/core/lib/core/threadpool.h"
#include "tensorflow/core/lib/io/path.h"
#include "tensorflow/core/lib/strings/str_util.h"  // str_util::EndsWith
#include "tensorflow/core/lib/strings/stringprintf.h"
#include "tensorflow/core/platform/env.h"
#include "tensorflow/core/platform/init_main.h"
#include "tensorflow/core/platform/logging.h"
#include "tensorflow/core/platform/types.h"
#include "tensorflow/core/public/session.h"
#include "tensorflow/core/util/command_line_flags.h"

using namespace std;
using namespace tensorflow;
using namespace tensorflow::ops;
using tensorflow::Flag;
using tensorflow::Tensor;
using tensorflow::Status;
using tensorflow::string;
using tensorflow::int32;

// Reads the whole file into a scalar string tensor.
static Status ReadEntireFile(tensorflow::Env* env, const string& filename,
                             Tensor* output) {
  tensorflow::uint64 file_size = 0;
  TF_RETURN_IF_ERROR(env->GetFileSize(filename, &file_size));

  string contents;
  contents.resize(file_size);

  std::unique_ptr<tensorflow::RandomAccessFile> file;
  TF_RETURN_IF_ERROR(env->NewRandomAccessFile(filename, &file));

  tensorflow::StringPiece data;
  TF_RETURN_IF_ERROR(file->Read(0, file_size, &data, &(contents)[0]));
  if (data.size() != file_size) {
    return tensorflow::errors::DataLoss("Truncated read of '", filename,
                                        "' expected ", file_size, " got ",
                                        data.size());
  }
  // Old API, no longer compiles:
  // output->scalar<string>()() = data.ToString();
  output->scalar<string>()() = string(data);
  return Status::OK();
}

// Decodes an image file and scales it to [0, 1] as a float tensor of shape
// [1, height, width, 1]. No resize is done, so input_height, input_width,
// input_mean and input_std are currently unused.
Status ReadTensorFromImageFile(const string& file_name, const int input_height,
                               const int input_width, const float input_mean,
                               const float input_std,
                               std::vector<Tensor>* out_tensors) {
  auto root = tensorflow::Scope::NewRootScope();
  using namespace ::tensorflow::ops;

  string input_name = "file_reader";
  string output_name = "normalized";

  // read file_name into a tensor named input
  Tensor input(tensorflow::DT_STRING, tensorflow::TensorShape());
  TF_RETURN_IF_ERROR(
      ReadEntireFile(tensorflow::Env::Default(), file_name, &input));

  // use a placeholder to read input data
  auto file_reader =
      Placeholder(root.WithOpName("input"), tensorflow::DataType::DT_STRING);
  std::vector<std::pair<string, tensorflow::Tensor>> inputs = {
      {"input", input},
  };

  // Now try to figure out what kind of file it is and decode it.
  const int wanted_channels = 1;
  // Old version (StringPiece::ends_with no longer exists):
  // tensorflow::Output image_reader;
  // if (tensorflow::StringPiece(file_name).ends_with(".png")) {
  //   image_reader = DecodePng(root.WithOpName("png_reader"), file_reader,
  //                            DecodePng::Channels(wanted_channels));
  // } else if (tensorflow::StringPiece(file_name).ends_with(".gif")) {
  //   // gif decoder returns 4-D tensor, remove the first dim
  //   image_reader =
  //       Squeeze(root.WithOpName("squeeze_first_dim"),
  //               DecodeGif(root.WithOpName("gif_reader"), file_reader));
  // } else if (tensorflow::StringPiece(file_name).ends_with(".bmp")) {
  //   image_reader = DecodeBmp(root.WithOpName("bmp_reader"), file_reader);
  // } else {
  //   // Assume if it's neither a PNG nor a GIF then it must be a JPEG.
  //   image_reader = DecodeJpeg(root.WithOpName("jpeg_reader"), file_reader,
  //                             DecodeJpeg::Channels(wanted_channels));
  // }
  tensorflow::Output image_reader;
  if (tensorflow::str_util::EndsWith(file_name, ".png")) {
    image_reader = DecodePng(root.WithOpName("png_reader"), file_reader,
                             DecodePng::Channels(wanted_channels));
  } else if (tensorflow::str_util::EndsWith(file_name, ".gif")) {
    // gif decoder returns 4-D tensor, remove the first dim
    image_reader =
        Squeeze(root.WithOpName("squeeze_first_dim"),
                DecodeGif(root.WithOpName("gif_reader"), file_reader));
  } else if (tensorflow::str_util::EndsWith(file_name, ".bmp")) {
    image_reader = DecodeBmp(root.WithOpName("bmp_reader"), file_reader);
  } else {
    // Assume if it's neither a PNG nor a GIF then it must be a JPEG.
    image_reader = DecodeJpeg(root.WithOpName("jpeg_reader"), file_reader,
                              DecodeJpeg::Channels(wanted_channels));
  }

  // Now cast the image data to float so we can do normal math on it.
  auto float_caster =
      Cast(root.WithOpName("float_caster"), image_reader, tensorflow::DT_FLOAT);
  // Add a batch dimension: [H, W, C] -> [1, H, W, C].
  auto dims_expander = ExpandDims(root.WithOpName("expand"), float_caster, 0);
  // Scale pixel values from [0, 255] to [0, 1].
  float input_max = 255;
  Div(root.WithOpName("div"), dims_expander, input_max);

  // Build the preprocessing graph and run it in its own session.
  tensorflow::GraphDef graph;
  TF_RETURN_IF_ERROR(root.ToGraphDef(&graph));

  std::unique_ptr<tensorflow::Session> session(
      tensorflow::NewSession(tensorflow::SessionOptions()));
  TF_RETURN_IF_ERROR(session->Create(graph));
  TF_RETURN_IF_ERROR(session->Run(inputs, {"div"}, {}, out_tensors));
  return Status::OK();
}

int main() {
  // Create a new session.
  Session* session = nullptr;
  Status status = NewSession(SessionOptions(), &session);
  if (!status.ok()) {
    std::cout << "ERROR: Creating session failed..." << status.ToString() << std::endl;
    return -1;
  }

  // Read the graph definition from the .pb file.
  string model_path = "model.pb";
  GraphDef graphdef;  // Graph Definition for current model
  Status status_load = ReadBinaryProto(Env::Default(), model_path, &graphdef);
  if (!status_load.ok()) {
    std::cout << "ERROR: Loading model failed..." << model_path << std::endl;
    std::cout << status_load.ToString() << "\n";
    return -1;
  }

  // Import the model into the session.
  Status status_create = session->Create(graphdef);
  if (!status_create.ok()) {
    std::cout << "ERROR: Creating graph in session failed..." << status_create.ToString() << std::endl;
    return -1;
  }
  cout << "Session successfully created." << endl;

  string image_path = "/media/root/Ubuntu311/projects/Ecology_projects/tensorflowtest/digit.jpg";
  int input_height = 28;
  int input_width = 28;
  int input_mean = 0;
  int input_std = 1;
  std::vector<Tensor> resized_tensors;
  Status read_tensor_status =
      ReadTensorFromImageFile(image_path, input_height, input_width, input_mean,
                              input_std, &resized_tensors);
  if (!read_tensor_status.ok()) {
    LOG(ERROR) << read_tensor_status;
    cout << "resizing error" << endl;
    return -1;
  }
  const Tensor& resized_tensor = resized_tensors[0];

  // Feed the image to the "inputs" node and fetch the "softmax" node
  // (node names depend on how the model was exported).
  std::vector<Tensor> outputs;
  string output_node = "softmax";
  Status status_run = session->Run({{"inputs", resized_tensor}}, {output_node}, {}, &outputs);
  if (!status_run.ok()) {
    std::cout << "ERROR: RUN failed..." << std::endl;
    std::cout << status_run.ToString() << "\n";
    return -1;
  }

  // Fetch output value
  std::cout << "Output tensor size:" << outputs.size() << std::endl;
  for (std::size_t i = 0; i < outputs.size(); i++) {
    std::cout << outputs[i].DebugString() << std::endl;
  }

  Tensor t = outputs[0];                   // Fetch the first tensor
  auto tmap = t.tensor<float, 2>();        // Tensor Shape: [batch_size, target_class_num]
  int output_dim = t.shape().dim_size(1);  // Get the target_class_num from 1st dimension

  // Argmax: Get Final Prediction Label and Probability
  int output_class_id = -1;
  double output_prob = 0.0;
  for (int j = 0; j < output_dim; j++) {
    std::cout << "Class " << j << " prob:" << tmap(0, j) << "," << std::endl;
    if (tmap(0, j) >= output_prob) {
      output_class_id = j;
      output_prob = tmap(0, j);
    }
  }

  std::cout << "Final class id: " << output_class_id << std::endl;
  std::cout << "Final class prob: " << output_prob << std::endl;
  return 0;
}
```

That does it.

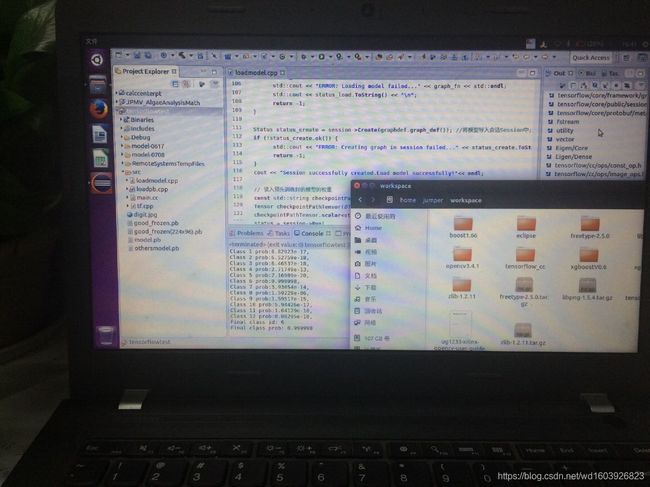

It now prints the test result correctly.

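Today I copied this library to another laptop, created a new test project, and the test passed. So from now on there's no need to rebuild; just add this library to the project directly.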

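I wanted to upload the compiled shared library so everyone could download it directly instead of building it yourselves. But the file exceeds CSDN's size limit, so I can't. Sorry.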

Here's an unedited photo of my dog, haha