A Simple GCN in TensorFlow 2.0 (Using the Cora Dataset)

Without further ado, here is the code.

The code in this post has two parts: GCN_layer, which defines the custom GCN layer, and train, the training script.

First, the custom GCN layer, GCN_layer.py:

```python
import tensorflow as tf


class my_GCN_layer(tf.keras.layers.Layer):
    """One graph convolution: sigmoid(support @ inputs @ W)."""

    def __init__(self, fea_dim, out_dim):
        super().__init__()
        self.fea_dim = fea_dim  # input feature dimension
        self.out_dim = out_dim  # output feature dimension

    def build(self, input_shape):
        # Trainable weight matrix W of shape [fea_dim, out_dim].
        # Random (Glorot) initialization instead of zeros: with all-zero weights
        # every hidden unit stays identical and the symmetry is never broken.
        self.wei = self.add_weight(name='wei',
                                   shape=[self.fea_dim, self.out_dim],
                                   initializer='glorot_uniform',
                                   trainable=True)

    def call(self, inputs, support):
        inputs = tf.cast(inputs, dtype=tf.float32)    # node feature matrix H
        support = tf.cast(support, dtype=tf.float32)  # normalized adjacency matrix
        H_t = tf.matmul(support, inputs)              # aggregate neighbour features
        output = tf.matmul(H_t, self.wei)             # linear transform by W
        return tf.sigmoid(output)
```

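The code itself is not complicated; with basic familiarity with TensorFlow syntax it is easy to read. It follows the typical workflow for a custom layer in TensorFlow 2.0. Note that the class must first subclass the Keras Layer class (tf.keras.layers.Layer). The constructor records the matrix dimensions, the build method then creates the layer's weights, i.e. its trainable parameters, and finally the call method defines the layer's forward pass.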

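Here the tensor support is the normalized adjacency matrix of the input graph. It comes from the derivation of the GCN formula, which I won't repeat here; for a concise derivation I recommend this blog post: https://www.cnblogs.com/denny402/p/10917820.html.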

The inputs tensor above is the feature matrix of the graph, with one row per node.
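To make the shapes concrete, here is a minimal NumPy sketch of what `call` computes, sigmoid(support · inputs · W). It is not the TensorFlow layer itself: the helper name `gcn_layer_forward`, the toy sizes (4 nodes, 3 input features, 2 output features) and the random weights are assumptions for illustration, and the identity matrix stands in for a real support.

```python
import numpy as np


def gcn_layer_forward(inputs, support, wei):
    """Hypothetical NumPy mirror of my_GCN_layer.call: sigmoid(support @ inputs @ wei)."""
    H_t = support @ inputs                  # [N, N] @ [N, F] -> [N, F]: aggregate neighbour features
    output = H_t @ wei                      # [N, F] @ [F, out] -> [N, out]: linear transform
    return 1.0 / (1.0 + np.exp(-output))    # element-wise sigmoid


rng = np.random.default_rng(0)
num_nodes, fea_dim, out_dim = 4, 3, 2          # toy sizes (assumed)
inputs = rng.random((num_nodes, fea_dim))      # feature matrix H, one row per node
support = np.eye(num_nodes)                    # placeholder support: each node only sees itself
wei = rng.standard_normal((fea_dim, out_dim))  # weight matrix W

out = gcn_layer_forward(inputs, support, wei)
print(out.shape)
```

Each row of the output is one node's new representation; stacking two such layers, as train.py does, lets information travel two hops through the graph.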

Next comes train.py:

```python
import tensorflow as tf
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

from GCN_layer import my_GCN_layer as Con

num_epoch = 10000
learning_rate = 1e-3

path1 = 'cora.content'  # change to your local path of cora.content
path2 = 'cora.cites'    # change to your local path of cora.cites


class PCAtest():
    """Plain PCA: projects data onto its k principal components (used for plotting)."""

    def __init__(self, k):
        self.k = k

    def get_res(self, data):
        mean_vector = np.mean(data, axis=0)
        standdata = data - mean_vector                 # center every column
        cov_mat = np.cov(standdata, rowvar=False)      # covariance between columns
        fValue, fVector = np.linalg.eigh(cov_mat)      # eigh: cov_mat is symmetric
        fValueTopN = np.argsort(-fValue)[:self.k]      # indices of the k largest eigenvalues
        vectorMat = fVector[:, fValueTopN]
        return np.dot(standdata, vectorMat)


class data_loader:
    def __init__(self, path1, path2):
        self.path1 = path1
        self.path2 = path2
        # cora.content: paper id, 1433 word features, class label (tab-separated)
        self.raw_data = pd.read_csv(self.path1, sep='\t', header=None)
        num = self.raw_data.shape[0]
        # map each paper id to its row index (avoids shadowing the builtin `map`)
        id2idx = dict(zip(self.raw_data[0], self.raw_data.index))
        self.features = self.raw_data.iloc[:, 1:-1]
        # one-hot labels; dtype=float so the loss receives numbers, not booleans
        self.labels = pd.get_dummies(self.raw_data[1434], dtype=float)

        # cora.cites: pairs of (cited paper id, citing paper id)
        raw_data_cites = pd.read_csv(self.path2, sep='\t', header=None)
        self.matrix = np.zeros((num, num))
        for i, j in zip(raw_data_cites[0], raw_data_cites[1]):
            x, y = id2idx[i], id2idx[j]
            self.matrix[x][y] = self.matrix[y][x] = 1  # treat citations as undirected edges
        np.fill_diagonal(self.matrix, 1)               # self-loops: A~ = A + I

        tem_d = np.sum(self.matrix, axis=1)            # degree of each node, including itself
        self.d = np.zeros((num, num))
        for i in range(len(tem_d)):
            self.d[i][i] = 1.0 / np.sqrt(tem_d[i])     # D~^(-1/2)
        self.support = tf.matmul(tf.matmul(self.d, self.matrix), self.d)

    def get_data(self):
        return np.array(self.features), np.array(self.labels), np.array(self.support)


class GCN(tf.keras.Model):
    def __init__(self, fea_dim, out_dim, hidden_dim=2048):
        super().__init__()
        self.fea_dim = fea_dim
        self.out_dim = out_dim
        self.con1 = Con(self.fea_dim, hidden_dim)      # graph convolution 1
        self.con2 = Con(hidden_dim, self.out_dim)      # graph convolution 2
        # per-node softmax over the classes
        self.den1 = tf.keras.layers.Dense(self.out_dim, activation='softmax')

    def call(self, inputs, support):
        hidden1 = self.con1(inputs, support)
        hidden2 = self.con2(hidden1, support)
        return self.den1(hidden2)


dataloader = data_loader(path1, path2)
X, Y, support = dataloader.get_data()

model = GCN(X.shape[-1], Y.shape[-1])
check_point = tf.train.Checkpoint(myAwesomeModel=model)
optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)

for i in range(num_epoch):
    with tf.GradientTape() as tape:
        y_pred = model(X, support)
        loss = tf.keras.losses.categorical_crossentropy(Y, y_pred)
        loss = tf.reduce_mean(loss)
    print("epoch:", i + 1, " || loss:", float(loss))
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))

    if (i + 1) % 50 == 0:
        path = check_point.save('./save/GCN_model.ckpt')
        print('Saving in:', path)

# after the last epoch: project the predictions to 2-D with PCA and plot them
pca = PCAtest(2)
res = pca.get_res(y_pred.numpy())
plt.scatter(res[:, 0], res[:, 1], color='g', marker='o')
plt.title('test')
plt.show()
```

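This part of the code isn't very complicated either. It basically follows the TensorFlow 2.0 workflow for building a network with the Keras API: the GCN model stacks the GCN_layer defined earlier into a multi-layer structure, so I won't go into it further. Note that before running the code you need to change path1 and path2 to the paths of your local copy of the Cora dataset.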
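As a framework-free illustration of the data flow through the GCN model (con1, then con2, then the softmax Dense layer den1) and of the mean categorical cross-entropy used as the loss, here is a NumPy sketch. The helper names (`sigmoid`, `softmax`, `gcn_forward`), the toy sizes, the random weights and labels, and the identity placeholder for support are all assumptions; it is not a replacement for train.py.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def gcn_forward(X, support, W1, W2, W3, b3):
    """Hypothetical mirror of GCN.call: con1 -> con2 -> Dense(softmax)."""
    hidden1 = sigmoid(support @ X @ W1)        # con1: [N, fea_dim] -> [N, hidden]
    hidden2 = sigmoid(support @ hidden1 @ W2)  # con2: [N, hidden] -> [N, n_cls]
    return softmax(hidden2 @ W3 + b3)          # den1: per-node class probabilities


rng = np.random.default_rng(0)
N, fea_dim, hidden, n_cls = 5, 6, 4, 3        # toy sizes (assumed)
X = rng.random((N, fea_dim))
support = np.eye(N)                           # placeholder for the normalized adjacency
W1 = rng.standard_normal((fea_dim, hidden))
W2 = rng.standard_normal((hidden, n_cls))
W3 = rng.standard_normal((n_cls, n_cls))
b3 = np.zeros(n_cls)
Y = np.eye(n_cls)[rng.integers(0, n_cls, size=N)]   # random one-hot labels

y_pred = gcn_forward(X, support, W1, W2, W3, b3)
loss = -np.mean(np.sum(Y * np.log(y_pred), axis=1))  # mean categorical cross-entropy
print(y_pred.shape, loss)
```

Because the softmax is applied row by row, every node gets its own probability distribution over the classes, which is what the node-level cross-entropy expects.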

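The most involved part of the code above is the data_loader class. It determines how the whole dataset is fed into the model and computes the normalized adjacency matrix (the tensor support mentioned above), so it deserves some discussion.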
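The core of what data_loader does with the two files, mapping paper ids to row indices, one-hot encoding the labels, and building an undirected adjacency matrix from the citation pairs, can be sketched on a toy example. Everything below is invented for illustration: the three-paper miniatures of cora.content and cora.cites, their ids and 2-word features, and the helper name `load_toy_cora`. The real cora.content has 1433 feature columns, and plain string parsing replaces pandas here.

```python
import numpy as np

# Made-up miniature of cora.content: paper id, word features..., label (tab-separated)
TOY_CONTENT = ("101\t0\t1\tNeural_Networks\n"
               "102\t1\t0\tRule_Learning\n"
               "103\t1\t1\tNeural_Networks\n")
# Made-up miniature of cora.cites: cited paper id, citing paper id
TOY_CITES = "101\t102\n103\t101\n"


def load_toy_cora(content_text, cites_text):
    """Hypothetical helper mirroring data_loader on in-memory strings."""
    rows = [line.split("\t") for line in content_text.strip().split("\n")]
    id2idx = {row[0]: idx for idx, row in enumerate(rows)}    # paper id -> row index
    features = np.array([[float(v) for v in row[1:-1]] for row in rows])
    classes = sorted({row[-1] for row in rows})                # sorted, like pd.get_dummies columns
    labels = np.array([[1.0 if row[-1] == c else 0.0 for c in classes] for row in rows])

    num = len(rows)
    matrix = np.zeros((num, num))
    for line in cites_text.strip().split("\n"):
        i, j = line.split("\t")
        x, y = id2idx[i], id2idx[j]
        matrix[x][y] = matrix[y][x] = 1                        # undirected edge
    return features, labels, matrix


features, labels, matrix = load_toy_cora(TOY_CONTENT, TOY_CITES)
print(matrix)
```

The id-to-index dictionary is what lets the citation file, which refers to papers by id, be turned into positions in a dense N x N matrix.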

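The Python code for reading the Cora dataset follows the blog post by 大奸猫 (https://blog.csdn.net/yeziand01/article/details/93374216), which helped this work a lot; many thanks. That post already explains clearly how to read the whole Cora dataset, so I won't repeat it here. Instead, I'll focus on the normalization of the adjacency matrix, i.e. this part of the code: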

```python
tem_d = np.sum(self.matrix, axis=1)          # degree of each node (row sums)
self.d = np.zeros((num, num))
for i in range(len(tem_d)):
    self.d[i][i] = 1.0/np.sqrt(tem_d[i])      # diagonal entry: degree^(-1/2)
self.support = tf.matmul(tf.matmul(self.d, self.matrix), self.d)
```

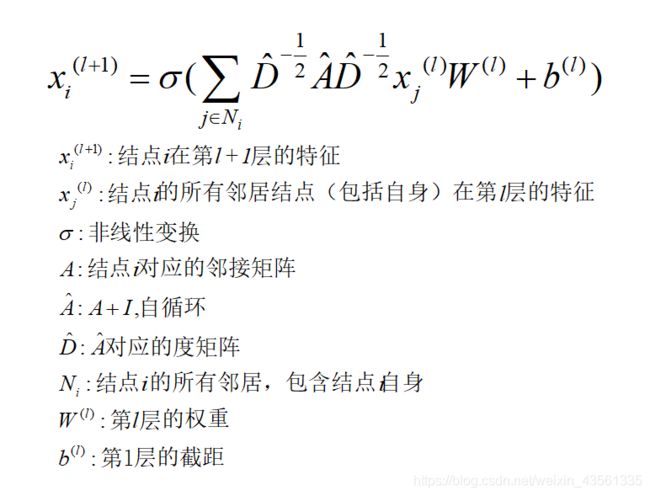

According to the GCN derivation:

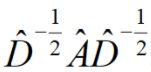

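That is, the middle term $\tilde{D}^{-\frac{1}{2}}\tilde{A}\tilde{D}^{-\frac{1}{2}}$ is the support we need to compute, where $\tilde{A} = A + I$ is the adjacency matrix with self-loops and $\tilde{D}$ is its diagonal degree matrix.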
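For readers who cannot see image 2, here is the standard GCN propagation rule (Kipf & Welling) written out, together with the element-wise form of support that the code computes. The symbols $H^{(l)}$ (node features at layer $l$), $W^{(l)}$ (layer weights) and $\sigma$ (activation, a sigmoid in this post's code) are the usual notation and are assumed to match the image.

```latex
H^{(l+1)} = \sigma\left(\tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}} H^{(l)} W^{(l)}\right),
\qquad \tilde{A} = A + I_N,
\qquad \tilde{D}_{ii} = \sum_j \tilde{A}_{ij}

\text{support}_{ij}
= \left(\tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}}\right)_{ij}
= \frac{\tilde{A}_{ij}}{\sqrt{\tilde{D}_{ii}\,\tilde{D}_{jj}}}
```

In the code, support @ inputs @ self.wei corresponds to $\tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}} H^{(l)} W^{(l)}$, and tf.sigmoid plays the role of $\sigma$.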

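Back to the code: tem_d holds each node's degree computed from the adjacency matrix, counting the node itself, which requires the adjacency matrix to contain self-loops (Ã = A + I). Filling the diagonal of an all-zero matrix with each node's degree raised to the power -1/2 then gives D^(-1/2) (I'm not yet familiar with writing formulas in Markdown, so please bear with me). The adjacency matrix was already built above (self.matrix), so two matrix multiplications yield support.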
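As a quick check of the degree computation and the two matrix products, the NumPy sketch below builds support for a made-up 3-node path graph 0-1-2 (an assumed example, not Cora). It fills D^(-1/2) with the same loop as data_loader, then compares the result with a vectorized np.diag version and with the element-wise formula.

```python
import numpy as np

# Toy undirected path graph 0 - 1 - 2 (assumed example, not Cora)
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
A_tilde = A + np.eye(3)                  # add self-loops so each degree counts the node itself

tem_d = np.sum(A_tilde, axis=1)          # degrees including self
d = np.zeros((3, 3))
for i in range(len(tem_d)):
    d[i][i] = 1.0 / np.sqrt(tem_d[i])    # D^(-1/2), filled exactly as in data_loader
support = d @ A_tilde @ d                # same as the two tf.matmul calls

# Vectorized equivalent: build the diagonal matrix directly
d_vec = np.diag(tem_d ** -0.5)
assert np.allclose(support, d_vec @ A_tilde @ d_vec)

# Element-wise view: support[i, j] = A~[i, j] / sqrt(d_i * d_j)
assert np.allclose(support, A_tilde / np.sqrt(np.outer(tem_d, tem_d)))
print(np.round(support, 3))
```

Here the degrees are 2, 3 and 2, so for example support[0, 1] = 1/√(2·3) = 1/√6 ≈ 0.408, and the middle node's self-weight is 1/3. The np.diag form avoids the Python loop, which matters little for 2708 nodes but reads more directly as "diagonal matrix of degree^(-1/2)".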

That concludes the walkthrough of the code. I have only just started learning about GCNs and TensorFlow 2.0, so the code is quite rough; please bear with me.