【Randaugment】《Randaugment:Practical automated data augmentation with a reduced search space》

CVPRW-2020

Contents

- 1 Background and Motivation

- 2 Related Work

- 3 Advantages / Contributions

- 4 Method

- 4.1 Systematic failures of a separate proxy task

- 4.2 Automated data augmentation without a proxy task

- 5 Experiments

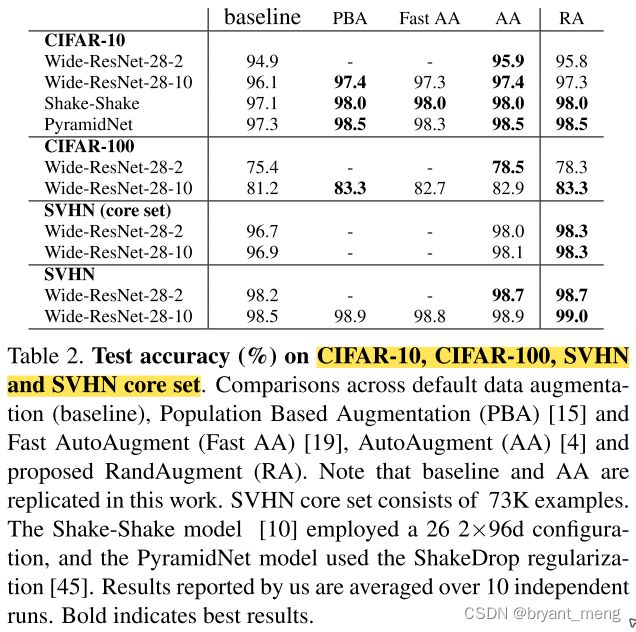

- 5.1 CIFAR-10 and SVHN

- 5.2 Image classification with ImageNet dataset

- 5.3 Object detection with COCO dataset

- 5.4 Investigating the dependence on the included transformations

- 5.5 Learning the probabilities for selecting image transformations

- 6 Appendix

- 6.1 Magnitude methods

- 6.2 Optimizing individual transformation magnitudes

- 7 Conclusion(own)

1 Background and Motivation

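The benefits of data augmentation need no elaboration. However, manually designed policies (hand-configured probabilities and parameters) make it difficult to scale to new applications.

Automating the design of augmentation policies has therefore become the new trend.

Current automated augmentation methods require a separate (the magnitude of each operation is tuned individually) and expensive search phase, so they are slow and resource-hungry. In practice the search can only be run on a smaller proxy task (for example CIFAR or a reduced ImageNet), and the result is then transferred to the large dataset (for example ImageNet).

It was not clear whether the optimal hyperparameters found on the proxy task are also optimal for the actual task.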

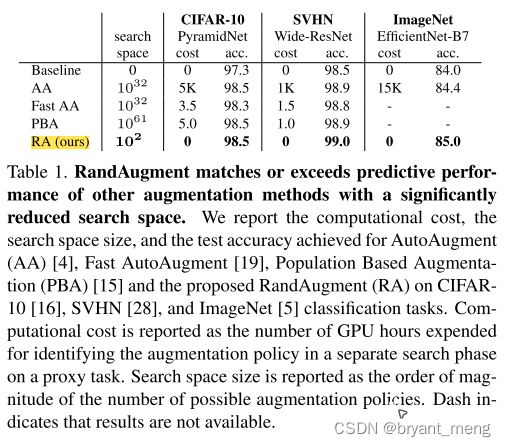

In this paper the authors propose RandAugment, which optimizes all of the operations jointly with a single distortion magnitude (all augmentations move in lockstep) while setting the probability of each operation to uniform. This shrinks the search space of automated augmentation dramatically and achieves results comparable to AutoAugment (see 【AutoAugment】《AutoAugment:Learning Augmentation Policies from Data》).

2 Related Work

- individual operations to augment data

- optimal strategies for combining different operations

- learning data augmentation strategies from data

3 Advantages / Contributions

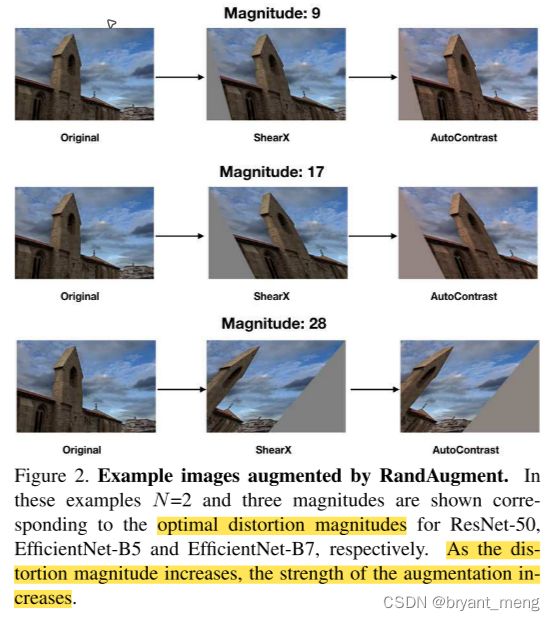

Experiments show that the optimal strength of a data augmentation distortion depends on the model size and the training set size.

This indicates that a separate optimization of an augmentation policy on a smaller proxy task may be sub-optimal for learning and transferring augmentation policies.

The authors propose RandAugment, in which all augmentation operations share the same magnitude. This greatly reduces the search space, and the results are still good.

4 Method

4.1 Systematic failures of a separate proxy task

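Figure 1a shows that larger networks demand larger data distortions for regularization.

Figure 1b shows that a fixed distortion magnitude (dashed line) for all architectures is clearly sub-optimal.

Figure 1c shows that the optimal distortion magnitude is larger for models that are trained on larger datasets.

This conclusion runs somewhat against intuition. One would expect a small dataset, having few samples, to need a larger distortion magnitude to enrich its samples (smaller datasets require stronger regularization).

The authors' explanation is that aggressive data augmentation leads to a low signal-to-noise ratio in small datasets (the noise introduced by overly strong augmentation may outweigh what the augmentation itself contributes).

The optimal augmentation strength grows with both dataset size and network size, which indicates that a small proxy task may provide a sub-optimal indicator of performance on a larger task.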

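The authors then tie the magnitudes of all augmentation operations together (controlled by a single variable, i.e., unified optimization). This shrinks the search space dramatically, so the search can be run directly on the large dataset.

Figure 1 suggests that merely searching for a shared distortion magnitude $M$ across all transformations may provide sufficient gains that exceed learned optimization methods using proxy tasks.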

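P.S.: My feeling is that all one can really claim is that searching directly on the large dataset is better than searching on a small dataset and transferring the result. Earlier methods had search spaces too large to search on a large dataset in reasonable time. Forcing all operations to share a single magnitude variable is surely not optimal, but it can in principle give better results than using proxy tasks, and it is fast enough to be practical. The contribution of this figure, and arguably one of the contributions of the paper, is that it shows quite directly that using a proxy task is not a good idea.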

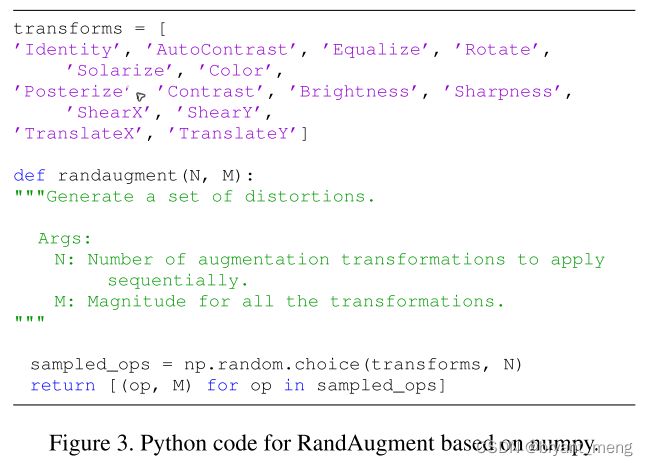

4.2 Automated data augmentation without a proxy task

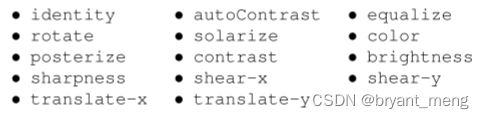

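There are two parameters to search, $N$ and $M$:

- $N$ is the number of augmentation operations applied at a time. $N$ operations are drawn at random from the $K = 14$ available operations, so RandAugment may express $K^N$ potential policies.

- $M$ is the distortion magnitude of the operations, i.e., the augmentation level.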
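The sampling step and the $K^N$ count can be sketched in a few lines of Python. This is a minimal sketch under two assumptions: the labels `op_0` ... `op_13` are placeholders standing in for the real image transforms, and operations are drawn uniformly with replacement, which is what makes the number of ordered policies exactly $K^N$.

```python
import random

# Placeholder labels for the K = 14 operations (hypothetical names;
# real code would hold callables that transform an image).
K = 14
OPS = [f"op_{i}" for i in range(K)]


def sample_policy(n, m, rng=random):
    """Draw n operations uniformly with replacement; all share magnitude m."""
    return [(rng.choice(OPS), m) for _ in range(n)]


def num_policies(k, n):
    """Number of ordered length-n sequences over k operations."""
    return k ** n


policy = sample_policy(n=2, m=9)
print(policy)                 # two (operation, magnitude) pairs
print(num_policies(K, 2))     # 196
```

With $N = 2$ there are only 196 operation sequences, and the magnitude adds a single extra integer on top, which is why the search remains cheap.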

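The 14 augmentation operations are: identity, autoContrast, equalize, rotate, solarize, color, posterize, contrast, brightness, sharpness, shear-x, shear-y, translate-x, translate-y.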

5 Experiments

Datasets

- CIFAR-10

- CIFAR-100

- SVHN

- ImageNet

- COCO

5.1 CIFAR-10 and SVHN

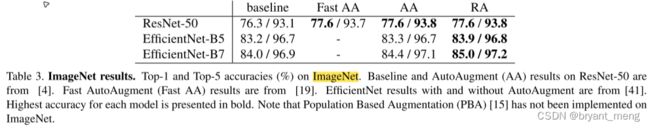

5.2 Image classification with ImageNet dataset

AutoAugment (AA) was searched on a reduced ImageNet (120 randomly chosen classes, 6000 samples).

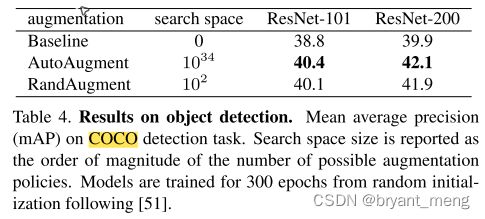

5.3 Object detection with COCO dataset

AutoAugment leveraged additional, specialized transformations not afforded to RandAugment in order to augment the localized bounding box of an image.

That may also be part of the reason the paper appeared at a workshop.

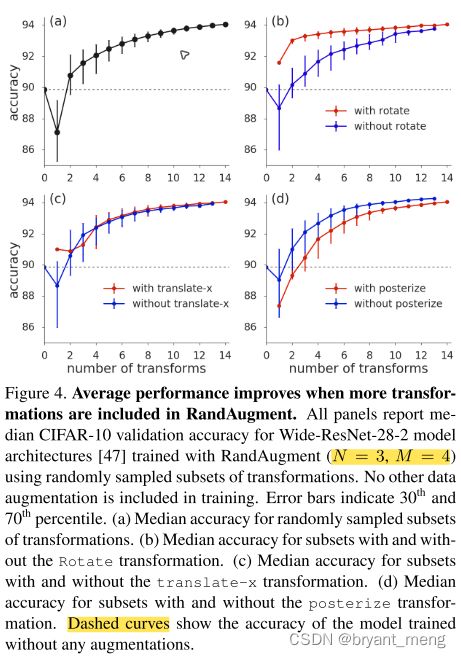

5.4 Investigating the dependence on the included transformations

The horizontal axis should be $K$. Oddly, it is not clear which of the 14 transformations were picked for each experiment. At random?

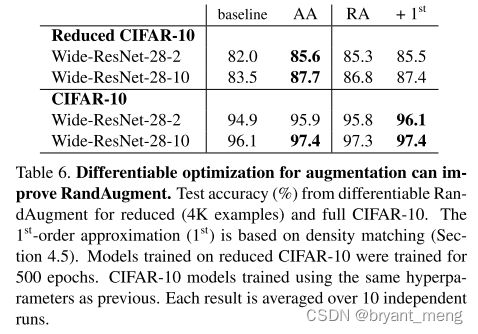

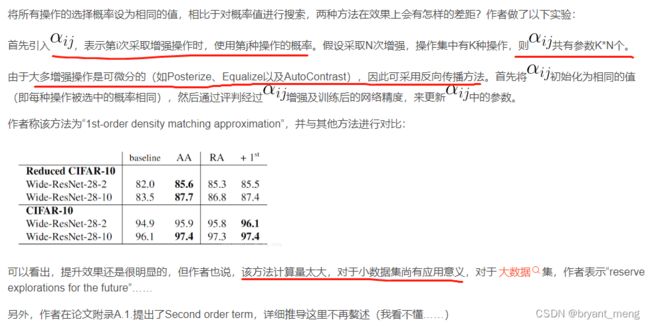

5.5 Learning the probabilities for selecting image transformations

For an interpretation of this part, see the post 论文笔记:RandAugment.

6 Appendix

6.1 Magnitude methods

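The four methods perform about the same. The authors chose Constant Magnitude because this strategy includes only a single hyper-parameter, and they employ it for the rest of the work.

To be honest, I did not understand the differences among the four, haha.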
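One possible reading of the four schedules is that each is a different way of choosing the magnitude at a given point in training. The sketch below is an interpretation, not the authors' code: the schedule names follow the appendix as best recalled, the parameters `lo`, `hi` and `progress` (fraction of training completed, in [0, 1]) are assumptions introduced here, and the exact interpolation used in the paper may differ.

```python
import random


def constant_magnitude(m, progress):
    """Same magnitude for the whole of training (the option the paper keeps)."""
    return m


def random_magnitude(lo, hi, progress, rng=random):
    """Fresh uniform draw in [lo, hi] each time; ignores training progress."""
    return rng.uniform(lo, hi)


def linearly_increasing_magnitude(lo, hi, progress):
    """Interpolate from lo at the start of training to hi at the end."""
    return lo + (hi - lo) * progress


def random_magnitude_increasing_upper_bound(lo, hi, progress, rng=random):
    """Uniform draw in [lo, upper], where upper grows linearly from lo to hi."""
    upper = lo + (hi - lo) * progress
    return rng.uniform(lo, upper)
```

Under this reading, only the constant schedule has a single number to tune; the other three need at least a lower and an upper value, which matches the reason given for choosing Constant Magnitude.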

6.2 Optimizing individual transformation magnitudes

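This figure is quite informative: the gap between the squares and the diamonds is small. In other words, the difference between a single $M$ shared by all operations and an individually optimized $M$ for each operation is not that pronounced.

This suggests that tying all magnitudes together into a single value $M$ is not greatly hurting the model performance.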

7 Conclusion(own)

The code below comes from the post [CVPR2020]RandAugment:搜索空间减小的基于NAS方法的数据增强策略.

Usage

from torchvision.transforms import transforms
from augmentations import RandAugment

# _CIFAR_MEAN, _CIFAR_STD, N and M are not defined in this snippet;
# the caller must supply them.
transform_train = transforms.Compose([
    transforms.RandomCrop(32, padding=4),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize(_CIFAR_MEAN, _CIFAR_STD),
])

# Add RandAugment with N, M (hyperparameters) as the first transform,
# so that it runs on the PIL image before ToTensor.
transform_train.transforms.insert(0, RandAugment(N, M))
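The snippet above leaves `N` and `M` to the caller. Because only two integers remain to be tuned, a plain grid search is feasible. The sketch below assumes a hypothetical caller-supplied callback `evaluate(n, m)` that would train a model with `RandAugment(n, m)` and return a validation score; the candidate grids and the toy scoring function are made up for illustration and are not the paper's settings.

```python
from itertools import product


def grid_search(evaluate, n_values, m_values):
    """Return (best_n, best_m, best_score) over the Cartesian grid.

    `evaluate` is a hypothetical callback: evaluate(n, m) -> score,
    where higher is better. In practice it would run a full training job.
    """
    best = None
    for n, m in product(n_values, m_values):
        score = evaluate(n, m)
        if best is None or score > best[2]:
            best = (n, m, score)
    return best


def toy_evaluate(n, m):
    """Toy stand-in for a training run, peaked at n=2, m=9 (made up)."""
    return -((n - 2) ** 2) - ((m - 9) / 10) ** 2


best_n, best_m, best_score = grid_search(
    toy_evaluate, n_values=[1, 2, 3], m_values=[3, 6, 9, 12]
)
print(best_n, best_m)
```

The grid here has 3 x 4 = 12 points, so the cost is 12 training runs on the target task itself rather than a separate search on a proxy task.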

Implementation

# augmentations.py
import random

import numpy as np
import PIL
import PIL.Image
import PIL.ImageDraw
import PIL.ImageEnhance
import PIL.ImageOps
import torch

# Each geometric op flips the sign of v with probability 0.5,
# so the caller only passes a non-negative strength.

def ShearX(img, v):  # [-0.3, 0.3]
    assert -0.3 <= v <= 0.3
    if random.random() > 0.5:
        v = -v
    return img.transform(img.size, PIL.Image.AFFINE, (1, v, 0, 0, 1, 0))

def ShearY(img, v):  # [-0.3, 0.3]
    assert -0.3 <= v <= 0.3
    if random.random() > 0.5:
        v = -v
    return img.transform(img.size, PIL.Image.AFFINE, (1, 0, 0, v, 1, 0))

def TranslateX(img, v):  # [-150, 150] => percentage: [-0.45, 0.45]
    assert -0.45 <= v <= 0.45
    if random.random() > 0.5:
        v = -v
    v = v * img.size[0]  # fraction of image width -> pixels
    return img.transform(img.size, PIL.Image.AFFINE, (1, 0, v, 0, 1, 0))

def TranslateXabs(img, v):  # v is an absolute shift in pixels
    assert 0 <= v
    if random.random() > 0.5:
        v = -v
    return img.transform(img.size, PIL.Image.AFFINE, (1, 0, v, 0, 1, 0))

def TranslateY(img, v):  # [-150, 150] => percentage: [-0.45, 0.45]
    assert -0.45 <= v <= 0.45
    if random.random() > 0.5:
        v = -v
    v = v * img.size[1]  # fraction of image height -> pixels
    return img.transform(img.size, PIL.Image.AFFINE, (1, 0, 0, 0, 1, v))

def TranslateYabs(img, v):  # v is an absolute shift in pixels
    assert 0 <= v
    if random.random() > 0.5:
        v = -v
    return img.transform(img.size, PIL.Image.AFFINE, (1, 0, 0, 0, 1, v))

def Rotate(img, v):  # [-30, 30] degrees
    assert -30 <= v <= 30
    if random.random() > 0.5:
        v = -v
    return img.rotate(v)

def AutoContrast(img, _):
    return PIL.ImageOps.autocontrast(img)

def Invert(img, _):
    return PIL.ImageOps.invert(img)

def Equalize(img, _):
    return PIL.ImageOps.equalize(img)

def Flip(img, _):  # not from the paper
    return PIL.ImageOps.mirror(img)

def Solarize(img, v):  # [0, 256]; pixels above threshold v are inverted
    assert 0 <= v <= 256
    return PIL.ImageOps.solarize(img, v)

def SolarizeAdd(img, addition=0, threshold=128):
    # np.int was removed from NumPy; the builtin int is equivalent here.
    img_np = np.array(img).astype(int)
    img_np = img_np + addition
    img_np = np.clip(img_np, 0, 255)
    img_np = img_np.astype(np.uint8)
    img = PIL.Image.fromarray(img_np)
    return PIL.ImageOps.solarize(img, threshold)

def Posterize(img, v):  # [4, 8]
    v = int(v)
    v = max(1, v)  # keep at least 1 bit per channel
    return PIL.ImageOps.posterize(img, v)

def Contrast(img, v):  # [0.1, 1.9]
    assert 0.1 <= v <= 1.9
    return PIL.ImageEnhance.Contrast(img).enhance(v)

def Color(img, v):  # [0.1, 1.9]
    assert 0.1 <= v <= 1.9
    return PIL.ImageEnhance.Color(img).enhance(v)

def Brightness(img, v):  # [0.1, 1.9]
    assert 0.1 <= v <= 1.9
    return PIL.ImageEnhance.Brightness(img).enhance(v)

def Sharpness(img, v):  # [0.1, 1.9]
    assert 0.1 <= v <= 1.9
    return PIL.ImageEnhance.Sharpness(img).enhance(v)

def Cutout(img, v):  # [0, 60] => percentage: [0, 0.2]
    assert 0.0 <= v <= 0.2
    if v <= 0.:
        return img
    v = v * img.size[0]
    return CutoutAbs(img, v)

def CutoutAbs(img, v):  # [0, 60] => percentage: [0, 0.2]
    # assert 0 <= v <= 20
    if v < 0:
        return img
    w, h = img.size
    x0 = np.random.uniform(w)
    y0 = np.random.uniform(h)
    x0 = int(max(0, x0 - v / 2.))
    y0 = int(max(0, y0 - v / 2.))
    x1 = min(w, x0 + v)
    y1 = min(h, y0 + v)
    xy = (x0, y0, x1, y1)
    color = (125, 123, 114)
    # color = (0, 0, 0)
    img = img.copy()
    PIL.ImageDraw.Draw(img).rectangle(xy, color)
    return img

def SamplePairing(imgs):  # [0, 0.4]
    def f(img1, v):
        i = np.random.choice(len(imgs))
        img2 = PIL.Image.fromarray(imgs[i])
        return PIL.Image.blend(img1, img2, v)
    return f

def Identity(img, v):
    return img

def augment_list():  # 16 operations and their ranges
    # https://github.com/tensorflow/tpu/blob/8462d083dd89489a79e3200bcc8d4063bf362186/models/official/efficientnet/autoaugment.py#L505
    # Each entry is (op, minval, maxval). Note that this list differs from
    # the 14 operations above: it adds Invert, SolarizeAdd and CutoutAbs,
    # and leaves out Identity.
    l = [
        (AutoContrast, 0, 1),
        (Equalize, 0, 1),
        (Invert, 0, 1),
        (Rotate, 0, 30),
        (Posterize, 0, 4),
        (Solarize, 0, 256),
        (SolarizeAdd, 0, 110),
        (Color, 0.1, 1.9),
        (Contrast, 0.1, 1.9),
        (Brightness, 0.1, 1.9),
        (Sharpness, 0.1, 1.9),
        (ShearX, 0., 0.3),
        (ShearY, 0., 0.3),
        (CutoutAbs, 0, 40),
        (TranslateXabs, 0., 100),
        (TranslateYabs, 0., 100),
    ]
    return l

class Lighting(object):
    """Lighting noise(AlexNet - style PCA - based noise)"""

    def __init__(self, alphastd, eigval, eigvec):
        self.alphastd = alphastd
        self.eigval = torch.Tensor(eigval)
        self.eigvec = torch.Tensor(eigvec)

    def __call__(self, img):
        if self.alphastd == 0:
            return img
        alpha = img.new().resize_(3).normal_(0, self.alphastd)
        rgb = self.eigvec.type_as(img).clone() \
            .mul(alpha.view(1, 3).expand(3, 3)) \
            .mul(self.eigval.view(1, 3).expand(3, 3)) \
            .sum(1).squeeze()
        return img.add(rgb.view(3, 1, 1).expand_as(img))

class CutoutDefault(object):
    """
    Reference : https://github.com/quark0/darts/blob/master/cnn/utils.py
    """

    def __init__(self, length):
        self.length = length

    def __call__(self, img):
        h, w = img.size(1), img.size(2)
        mask = np.ones((h, w), np.float32)
        y = np.random.randint(h)
        x = np.random.randint(w)
        y1 = np.clip(y - self.length // 2, 0, h)
        y2 = np.clip(y + self.length // 2, 0, h)
        x1 = np.clip(x - self.length // 2, 0, w)
        x2 = np.clip(x + self.length // 2, 0, w)
        mask[y1: y2, x1: x2] = 0.
        mask = torch.from_numpy(mask)
        mask = mask.expand_as(img)
        img *= mask
        return img

class RandAugment:
    def __init__(self, n, m):
        self.n = n
        self.m = m  # [0, 30]
        self.augment_list = augment_list()

    def __call__(self, img):
        # Sample n operations uniformly, with replacement.
        ops = random.choices(self.augment_list, k=self.n)
        for op, minval, maxval in ops:
            # The range [minval, maxval] is divided into 30 levels; m picks one.
            val = (float(self.m) / 30) * float(maxval - minval) + minval
            img = op(img, val)
        return img
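To make the magnitude mapping concrete: the class above turns the shared level `m` (0 to 30) into a per-operation value by linear interpolation between `minval` and `maxval`. The helper below is a standalone restatement of that one line, with the name `level_to_value` introduced here purely for illustration, applied to a few ranges copied from `augment_list()`.

```python
def level_to_value(m, minval, maxval, max_level=30):
    """Same linear interpolation as in RandAugment.__call__ above."""
    return (float(m) / max_level) * float(maxval - minval) + minval


# Ranges copied from augment_list(); m = 15 is the midpoint of [0, 30].
rotate_mid = level_to_value(15, 0, 30)       # degrees
color_mid = level_to_value(15, 0.1, 1.9)     # enhancement factor
color_low = level_to_value(0, 0.1, 1.9)
solarize_max = level_to_value(30, 0, 256)    # threshold

print(rotate_mid, color_mid, color_low, solarize_max)
```

One thing this makes visible: for the PIL enhancement operations a factor of 1.0 leaves the image unchanged, so with the range [0.1, 1.9] the mildest setting is around `m = 15` rather than `m = 0`. Likewise, `Solarize` inverts pixels above the threshold, so `m = 30` (threshold 256) leaves the image untouched. In this implementation a larger `m` therefore does not mean a stronger distortion for every operation.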