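- Prototype pollution

江湖没什么好的

xss

Prototype pollution is a security vulnerability affecting JavaScript applications: an attacker manipulates an object's prototype chain to inject malicious properties into a base object such as `Object.prototype`, which then affects the behavior of the whole application. A detailed analysis follows. Core principle, JavaScript's prototype chain mechanism: every object has an implicit prototype `__proto__` (also accessible through `Object.getPrototypeOf()`) that points to the prototype object of its constructor. Accessing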

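- Python first assignment

1. Technical interview questions (1) What is the difference between TCP and UDP? Answer: 1. TCP is a connection-oriented protocol, whereas UDP is connectionless. 2. TCP transmission is reliable, whereas UDP is "best effort". 3. TCP provides flow control; UDP does not. 4. TCP segments data; UDP does not. 5. TCP is slower and uses more resources; UDP is faster and uses fewer. Their use cases also differ: TCP suits scenarios that need high reliability and can tolerate lower efficiency, while UDP trades reliability for efficiency. (2)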

- python

www_hhhhhhh

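python, java, interview

1. Technical interview questions (1) Explain the concepts of processes, threads, and daemons in Linux, and how to manage them. Answer: Process: the basic unit of resource allocation in the operating system. It has its own address space and process control block, and processes are isolated from one another. For example, opening a terminal window starts a bash process. Thread: the basic unit of scheduling in the operating system. It belongs to a process and shares that process's resources, but has its own thread control block and stack. Switching threads costs far less than switching processes. For example, within a single process of a web server, multiple threads can simultaneously handle different client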

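- Python lambda expressions: use cases and limitations of anonymous functions

梦幻南瓜

python, server, linux

Contents: 1. Overview of lambda expressions 1.1 Basic syntax of lambda expressions 1.2 A simple example 2. Core characteristics of lambda expressions 2.1 Anonymity 2.2 Conciseness 2.3 Immediacy 2.4 Functional programming features 3. Use cases for lambda expressions 3.1 As arguments to higher-order functions 3.2 Simple data transformations 3.3 Conditional filtering 3.4 Callback functions in GUI programming 3.5 Data processing with Pandas 4. Limitations of lambda expressions 4.1 Only a single expression allowed 4.2 No statements 4.3 No docstrings 4.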

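- [python]

www_hhhhhhh

python, interview, career and development

1. Technical interview questions (1) What is the difference between TCP and UDP? Answer: TCP (Transmission Control Protocol) and UDP (User Datagram Protocol) are two common transport-layer protocols that differ mainly in how they handle connections and reliability. TCP is connection-oriented: a connection must be established before data is sent, a three-way handshake ensures the connection is reliable, and acknowledgement, retransmission, and ordering mechanisms guarantee that data arrives complete and in order. It suits scenarios with high reliability requirements, such as web browsing and file transfer. UDP is connectionless: data can be sent without establishing a connection, and reliable delivery is not guaranteed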
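The connection-oriented versus connectionless distinction can be seen directly in the socket API. The following is a minimal sketch using only Python's standard `socket` module on the loopback interface; the helper names `udp_roundtrip` and `tcp_roundtrip` are illustrative and do not come from the original article.

```python
import socket

HOST = "127.0.0.1"  # loopback only; port 0 asks the OS for any free port


def udp_roundtrip(payload: bytes) -> bytes:
    """Send one datagram without establishing a connection first."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver, \
            socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        receiver.bind((HOST, 0))
        receiver.settimeout(2.0)  # UDP gives no delivery guarantee, so never wait forever
        sender.sendto(payload, receiver.getsockname())  # no handshake beforehand
        data, _addr = receiver.recvfrom(4096)
        return data


def tcp_roundtrip(payload: bytes) -> bytes:
    """Establish a connection (three-way handshake) and then send a byte stream."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind((HOST, 0))
        listener.listen(1)
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
            client.settimeout(2.0)
            client.connect(listener.getsockname())  # the handshake happens here
            conn, _addr = listener.accept()
            with conn:
                conn.settimeout(2.0)
                client.sendall(payload)
                # TCP is a byte stream, so read until every expected byte has arrived
                chunks = []
                remaining = len(payload)
                while remaining > 0:
                    chunk = conn.recv(remaining)
                    if not chunk:
                        break
                    chunks.append(chunk)
                    remaining -= len(chunk)
                return b"".join(chunks)
```

Note that the UDP sender never calls `connect` or `accept`; the TCP path cannot send anything until the connection exists.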

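- Python function return values

1. Definition of return values, with examples. 2. Return values versus print: print only writes to the console, whereas return makes the expression after `return` the function's output, which can be captured in a variable and used further. 3. Saving a function's return value: if a function returns data and you want to use that data, you need to save it.

    # define the function
    def add2num(a, b):
        return a + b

    # call the function and save its return value
    result =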

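- How to return a value from a Python function

A Python function uses the `return` statement to hand back a return value, which can be assigned to another variable for further use. Every function has a return value: if there is no `return` statement, the function implicitly executes `return None`. A function may contain several `return` statements, but only one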
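A short sketch of both points: a function with no `return` yields `None`, and in a function with several `return` statements only one of them runs per call. The function names are made up for illustration.

```python
def no_return():
    pass  # no return statement, so the call evaluates to None


def classify(n):
    # Several return statements, but a single call executes exactly one of them
    if n < 0:
        return "negative"
    if n == 0:
        return "zero"
    return "positive"


# The return value can be stored in a variable and reused later
result = classify(5)
```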

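- Python Planet Diary - Day 8: Function Basics

Code_流苏

Python Planet Diary, python, functions, def keyword, function parameters, return values

Introduction: Previous post: Python Planet Diary - Day 7: Dictionaries and Sets. Quote: "The road ahead is long and far; I shall search high and low." (Qu Yuan, Li Sao) Author: Code_流苏 (CSDN), a coder who loves classical poetry and programming. Contents: I. Defining and calling functions 1. What is a function? 2. How to define a function: the `def` keyword 3. Ways to call a function II. Parameters and return values 1. Types of function parameters 2. How to pass arguments 3. Return values and the `return` statement III. Local and global variables 1. The concept of variable scope 2. Local vari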

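- Huawei OD Coding Test 2025 Paper C - Xiao Ming's Lucky Number (C++ & Python & JAVA & JS & GO)

无限码力

Huawei OD, Huawei OD Coding Test 2025 Paper C, Huawei OD 2025 Paper C, Huawei OD exam 2025 Paper C

Xiao Ming's Lucky Number. Huawei OD coding test question, 2025 Paper C, 100-point question type. Problem description: Xiao Ming is playing a game with the following rules. Before the game starts, Xiao Ming stands at the origin of a coordinate axis (coordinate 0). He is given a list of instructions and a lucky number. Each instruction is an integer, and Xiao Ming moves forward or backward the specified number of steps. Forward means moving in the positive direction of the axis; backward means moving in the negative direction. The lucky number is an integer; if some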

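- Python function return values

落花雨时

Python basics

    # The return value is the result a function gives back after it runs
    # Use return to specify a function's return value
    # The return value can be used directly or received in a variable
    def sum(*nums):
        # define a variable to hold the result
        result = 0
        # iterate over the tuple and accumulate its numbers
        for n in nums:
            result += n
        print(result)
    # sum(123,456,789)
    # whatever value follows return is what the function returns
    # r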

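- Flutter state management: ValueNotifier (3)

Contents: Preface I. Overview of ValueNotifier II. How ValueNotifier is implemented 1. Class definition 1. Class definition 2. Key fields 3. Key methods 1. Constructor 2. getter: value 3. setter: value 4. toString 2. Mechanism inherited from ChangeNotifier 3. The ValueListenable interface III. Using ValueNotifier 1. Automatically listening for changes to a single value 2. Manual listening 3. Combining with Provider IV. V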

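- Archive: Python web scraping and Web learning materials

1. Learning Python web scraping. Learning Python web scraping is a good choice: it lets you collect data from the web efficiently. Below is a structured learning path with suggestions. 1. Build a solid foundation. First master the Python basics, which are a prerequisite for scraping, for example: basic syntax such as variables, data types, conditionals, and loops; operations on common data structures such as lists and dictionaries; using functions, modules, and packages; reading and writing files. Recommended resources include the book 《Python编程:从入门到实践》 (Python Crash Course) or sites such as Codecademy, LeetCo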

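- Python web scraping from basics to practice (3): operating on web pages

荼蘼

web scraping

I. Getting and manipulating page elements 1. Getting specific elements on a page. `tag_name`: the element's tag name. `text`: the element's text content. `click()`: clicks the element. `submit()`: submits the form. `send_keys()`: simulates typing input. `size`: the element's dimensions. (In Selenium's Python bindings, `tag_name`, `text`, and `size` are properties and are read without parentheses.) More operations can be found in `webdriver\remote\webelement.py` inside the selenium library folder. 2. Entering text in an element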

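- Huawei OD Coding Test 2025 Paper B - Weekend Hiking (C++ & Python & JAVA & JS & GO)

无限码力

Huawei OD coding test practice notes, Huawei OD, Huawei OD 2025 Paper B, Huawei OD exam 2025 Paper B, Huawei OD Coding Test 2025 Paper B, Huawei OD Coding Test

Weekend Hiking. Huawei OD coding test question, 2025 Paper B, 200-point question type. Problem description: Xiao Ming plans to go hiking at the weekend for exercise. 0 represents flat ground and mountain heights are represented by 1 to 9. On each move, the height Xiao Ming climbs or descends can differ by at most k, and he can move only one cell up, down, left, or right at a time. He starts from the top-left corner (0,0). Input description: the first line contains m n k (space-separated), representing an m*n two-dimensional mountain map, where k is the height Xiao Ming can climb or descend each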

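- Developing a chip circuit design app with Python, C++, and Go

Geeker-2025

python, c++, golang

# Chip circuit design app: combined Python/C++/Go development plan

## System architecture design

```mermaid
graph TD
A[Web frontend] --> B(Python design UI)
B --> C(Go API gateway)
C --> D[C++ core engine]
D --> E[Hardware acceleration]
F[Database] --> C
G[EDA toolchain] --> D
H[Cloud services] --> C
```

## Technology stack responsibilities

| Technology | Application area | Advantages |
|------|----------|------|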

- Red team testing: proxy and man-in-the-middle attack tools

小浪崇礼

BetterCAP - Modular, portable and easily extensible MITM framework. Ettercap - Comprehensive, mature suite for machine-in-the-middle attacks. Habu - Python utility implementing a variety of network attacks, such as ARP poisoning, D

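- Using pyside6 (1): windows, signals, and slots

I. Overview. Having spent many years developing projects with C++ and the Qt framework, the author is fluent in Qt. Qt has great strengths in UI design and cross-platform use, and UI design is precisely Python's weak spot, so pyside6 is used to make Python and Qt complement each other. 1.1 Installing pyside6. Update pip: `pip install --upgrade pip`. Then run the following on the command line: `pip install pyside6 -i https://pyp`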

- python: reading and writing MySQL (working with a MySQL database)

    import pymysql
    import pandas as pd
    import time

    only_time = time.localtime(time.time())
    time_now = time.strftime('%Y-%m-%d %H:%M:%S', only_time)
    dt = time.strftime('%Y%m%d', only_time)
    t = time.time()
    tt = int(t)
    parentId = ''
    sta

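- Reading and writing MySQL with Python

cavin_2017

Learning Python

So far my database connections mainly serve two purposes: 1. querying with SQL; 2. writing DataFrame data to a MySQL database with the pandas package. 1. Querying with SQL: querying a MySQL table usually takes three steps: 1. connect to the database, 2. run the query, 3. close the connection. When many queries are needed, wrapping the query in a function and passing the SQL as a parameter saves a lot of effort.

    ## define the database connection function
    def my_db(host, user, passwd, db, sql, po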

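- MySQL study notes 7.22

woshishui68892

Notes on things I want to remember while learning MySQL. Date calculations: MySQL provides several functions for performing calculations on dates, for example to compute ages or extract parts of dates. To determine how old each of your pets is, use the `TIMESTAMPDIFF()` function. Its arguments are the unit in which the result should be expressed and the two dates whose difference is taken. The following query shows, for each pet, the birth date, the current date, and the age in years. An alias (`age`) is used to make the final output column label more meaningful. `SELECT name, birth, CU`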
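For readers who want to check the arithmetic outside the database, here is a rough Python analogue of taking a difference in whole years between a birth date and the current date. This is only a sketch of the idea, not MySQL code; the function name `age_in_years` and the sample dates are hypothetical.

```python
from datetime import date


def age_in_years(birth: date, today: date) -> int:
    """Number of complete years elapsed between birth and today."""
    years = today.year - birth.year
    # If this year's birthday has not been reached yet, the last year is incomplete
    if (today.month, today.day) < (birth.month, birth.day):
        years -= 1
    return years


# Hypothetical pet born on 1993-02-04, evaluated on two different days
age_before_birthday = age_in_years(date(1993, 2, 4), date(2024, 2, 3))
age_on_birthday = age_in_years(date(1993, 2, 4), date(2024, 2, 4))
```

Comparing `(month, day)` tuples avoids dividing a day count by 365, which drifts across leap years.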

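- Logical functions

汤汤grace

Check-in day 13. Today's topic is logical functions. They look simple but hold countless possibilities: which to choose, how to use them, and what to pair with IF to be simpler and more efficient all take skill, and you have to think hard to work it out. The logical values TRUE and FALSE are easy to understand, one true and one false, and we often meet them in exams, but in Excel they can take many forms. For example, `TRUE*1` returns 1 in a cell, while `FALSE*1` returns 0. Now for the functions related to logical values. AND: all conditions are T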

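- python+playwright study notes 91: getting, saving, and deleting cookies

上海-悠悠

playwright, python

Preface: playwright can read the cookies cached by the browser, save them locally, and load cookies from a local file. Getting cookies: print the cookies before and after logging in and compare them to check whether they were obtained successfully.

    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.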

- Python: accessing a page with a cookie obtained after login

尖叫的太阳

    import requests

    url = "https://kyfw.12306.cn/otn/view/index.html"
    # cookie for the home page https://kyfw.12306.cn/otn/view/index.html
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64)', 'Cookie': 'JSESSIONID=3330D

- Getting cookie values with Python requests

dianqianwei8752

python, c/c++

    import requests

    # url and key are placeholders to be supplied by the caller
    res = requests.get(url)
    cookies = requests.utils.dict_from_cookiejar(res.cookies)
    print(cookies[key])

Reposted from: https://www.cnblogs.com/VseYoung/p/python_cookies.html

- Ways to connect to the Dameng (DM) database from Python

water bucket

python, databases, pandas

1. Calling JDBC through jaydebeapi

    import pandas as pd
    import jaydebeapi

    if __name__ == '__main__':
        url = 'jdbc:dm://{IP}:{PORT}/{database}'
        username = '{username}'
        password = '{password}'
        jclassname = 'dm.jdbc.driver.DmDriver'
        jarFile = '{DmJdb

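- Computer networking

哪里不会点哪里.

networking, computer networks, servers

Contents: I. Structure and functions of the OSI and TCP/IP layers II. Three-way handshake and four-way teardown 1. Three-way handshake 2. Why three handshakes are needed 3. The second handshake already returns an ACK, so why return a SYN as well 4. Four-way teardown III. How TCP ensures reliable transmission IV. Status codes V. Cookie and Session VI. HTTP 1.0 and HTTP 1.1 VII. URI and URL VIII. HTTP and HTTPS. I. Structure and functions of the OSI and TCP/IP layers. Application layer: the task of the application layer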

- Alibaba Cloud Tianchi: study notes (7.22)

2301_81822737

deep learning

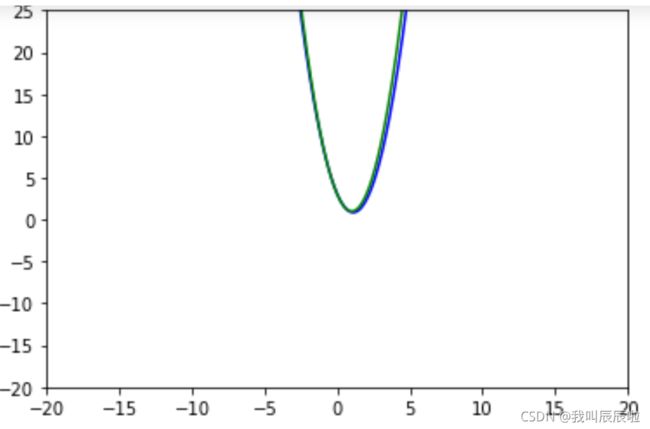

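A first look at the concepts. I. Loss function: a loss function measures the difference between the model's predictions and the true values; the model's parameters are optimized by minimizing this difference. The choice of loss function directly affects training and final performance. II. One-hot encoding: one-hot encoding uses an N-bit state register to encode N states. Each state has its own register bit, and at any time exactly one bit is active (1, with the rest 0). Specifically, each category of a categorical variable is assigned its own binary bit, and this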
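The N-bit description of one-hot encoding can be made concrete with a few lines of plain Python. This is a minimal sketch with no library dependencies; the helper names `one_hot` and `encode_labels` and the sample labels are illustrative assumptions.

```python
def one_hot(index, num_states):
    """Return a list of num_states bits in which only position index is 1."""
    if not 0 <= index < num_states:
        raise ValueError("index must be in range(num_states)")
    return [1 if i == index else 0 for i in range(num_states)]


def encode_labels(labels):
    """Give each distinct label its own bit position, then encode every label."""
    categories = sorted(set(labels))
    position = {category: i for i, category in enumerate(categories)}
    vectors = [one_hot(position[label], len(categories)) for label in labels]
    return categories, vectors


categories, vectors = encode_labels(["cat", "dog", "cat"])
```

Each encoded vector contains exactly one 1, matching the "only one bit active at a time" rule.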

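- Batch-downloading every link on a web page in one go with Python

Zhy_Tech

python, frontend, programming languages

I needed to download a dataset in which every image corresponds to one link on a web page, as shown in the figure below. I first tried Xunlei (Thunder), but it can download only 30 links at a time. With Python I managed to download the whole batch in one go.

    import os
    import requests
    from bs4 import BeautifulSoup

    # URL of the target page
    url = "https://"  # replace with the actual page URL
    # folder to save the downloaded files into
    # use a raw string
    download_fo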

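- A first look at greedy algorithms: making a given amount with the fewest banknotes

是小V呀

C++, greedy algorithm, algorithms, c++, python

Python implementation:

    # for any amount, find the minimum number of banknotes
    n = int(input("money:"))  # read the amount
    bills = [100, 50, 20, 10, 5, 2, 1]  # banknote denominations
    total = 0
    for b in bills:
        count = n // b  # integer-divide by the denomination to get the number of notes
        if count > 0:
            print(f"{b} notes: {count}")
        n -= count * b  # update the remaining amount
        total += count  # accumulate the number of notes
    print(f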
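The same greedy idea can be packaged as a function that returns its result instead of reading from `input`, which makes it easier to test. This is a sketch under the assumption that the denominations are the ones listed in the snippet; the function name `min_bills` is hypothetical. Greedy selection gives the minimum for this particular set of denominations, but not for arbitrary ones.

```python
def min_bills(amount, bills=(100, 50, 20, 10, 5, 2, 1)):
    """Greedily take as many of the largest denomination as fit, then move on."""
    if amount < 0:
        raise ValueError("amount must be non-negative")
    counts = {}
    total = 0
    for b in bills:  # denominations must be listed from largest to smallest
        count, amount = divmod(amount, b)  # notes used, remaining amount
        if count:
            counts[b] = count
            total += count
    return total, counts


total, counts = min_bills(188)
```

For 188 the function picks one note of each denomination, seven notes in total.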

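- Linux system programming (6): thread synchronization and mutual exclusion

小仇学长

Linux, linux, threads, mutex, semaphore

Contents: Preamble: why synchronization mechanisms are needed, and the concept. I. Mutexes 1. Definition 2. Common mutex operations 3. Related functions (1) Header file (2) Creating a mutex (3) Destroying a mutex (4) Locking (5) Unlocking 4. Usage example II. Condition variables 1. Related functions (1) Creating a condition variable (2) Destroying a condition variable (3) Waiting on a condition variable (4) Signaling a condition variable (making the condition true) 2. Usage notes 3. Usage example III. Semaphores 1. Categories 2. Semaphore operations 3. Related functions 4. Usage example IV. Atomic operations (kernel level) 1. Advantages 2. Common atom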

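- Common dataset error codes and explanations for the web reporting tool FineReport

老A不折腾

web reporting, finereport, code, visualization tools

When building reports with finereport, many people panic if the preview fails. There is no need: once you understand the error codes and what they mean, you can fix the problem quickly. Here I share finereport's dataset error codes and their explanations; if anything is inaccurate, corrections are welcome.

NS-war-remote=Error code\:1117 Compressed deployment does not support remote design

NS_LayerReport_MultiDs=Error code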

- Java's WeakReference and WeakHashMap

bylijinnan

java, weak references

First, let's look at WeakReference

An example of a weak reference from the wiki:

public class ReferenceTest {

public static void main(String[] args) throws InterruptedException {

WeakReference r = new Wea

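- Linux (hostname): mapping hostnames to IP addresses

eksliang

linux, hostname

I. What is a hostname

Whether on a LAN or on the Internet, every host has an IP address that distinguishes it from other hosts; the IP address is, in effect, the host's street number. But IP addresses are hard to remember, hence domain names. Domain names exist only on the public Internet; each domain name maps to one IP address, though one IP address can map to multiple domain names. Domain names look like linuxsir.org;

What is a hostname used for?

Answer: on a LAN, every machine has a host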

- Common Oracle tips

18289753290

Common Oracle tips ① Copy table structure and data: create table temp_clientloginUser as select distinct userid from tbusrtloginlog ② Copy data only, if the table structures are the same: insert into mytable select *

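- com.mchange.v2.resourcepool.TimeoutException when using the c3p0 database connection pool

酷的飞上天空

exception

A production environment uses the c3p0 connection pool and serves API requests to external callers. Recently, as load increased, the backend Tomcat logs have frequently shown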

com.mchange.v2.resourcepool.TimeoutException: A client timed out while waiting to acquire a resource from com.mchange.v2.resourcepool.BasicResou

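- How an IT systems analyst can learn big data

蓝儿唯美

big data

I am an IT systems analyst working on a big data project. What do you need to know before diving into such a project? The best way to learn big data is to start by understanding how information systems work, especially databases and infrastructure. Before starting you also need to know the big data tools, such as Cloudera, Hadoop, Spark, Hive, Pig, Flume, Sqoop, and Mesos. Systems analysts need to understand how to organize, manage, and protect data. There are dozens of data management products on the market. Your big data database may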

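- Learning Spring: an introduction

a-john

spring

Spring is an open-source framework created to address the complexity of enterprise application development. Spring uses plain JavaBeans to do what previously only EJB could do. Spring is not limited to server-side development, however: in terms of simplicity, testability, and loose coupling, any Java application can benefit from it. Its main features are dependency injection, AOP, persistence, transactions, Spring MVC, and Acegi Security

To reduce the complexity of Java development,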

- XML file for custom colors

aijuans

xml

<?xml version="1.0" encoding="utf-8"?> <resources> <color name="white">#FFFFFF</color> <color name="black">#000000</color> &

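- What does operations actually do?

aoyouzi

What does operations actually do?

Source: 夏叔叔 (WeChat ID: woshixiashushu). I have not written anything for a long time. Recently, with the launch of the 爱狗团 product and a steady stream of interviews, I keep being asked one question. Q: What does operations at 爱狗团 mainly do? A: Get users excited together with us. Why is it about getting users to play along? What exactly is operations, and what does it actually do? Let us first answer a simpler question: what do internet companies evaluate operations on? Taking 爱狗团 as an example, the vast majority of mobile internet companies evaluate their operations department on three areas: user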

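- Object-oriented JS: classes and objects

百合不是茶

js, object-oriented, functions, creating classes and objects

I have been working with JS for a few months, but its object-oriented concepts were still fuzzy to me. JS is an object-oriented language, but unlike Java it traditionally had no `class` construct (the `class` syntax arrived only with ES2015), so it is not a strictly object-oriented language. In Java web development JS is as important as Java, and the mainstream format for returning web data is now JSON, whose syntax resembles the way JS creates classes and properties

Below are some techniques for creating classes and objects in JS

I: Classes and obj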

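- web.xml: configuring resource administered objects with resource-env-ref

bijian1013

java, web.xml, servlet

The resource-env-ref element declares a servlet's reference to an administered object associated with a resource in the servlet's environment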

<resource-env-ref>

<resource-env-ref-name>resource name</resource-env-ref-name>

<resource-env-ref-type>resource class returned when the resource is looked up

- Create a composite component with a custom namespace

sunjing

https://weblogs.java.net/blog/mriem/archive/2013/11/22/jsf-tip-45-create-composite-component-custom-namespace

When you developed a composite component the namespace you would be seeing would

- [MongoDB study notes 12] Mongo replica set server roles: Arbiter

bit1129

mongodb

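I. Why replica sets include the Arbiter role. To answer this, we need to look at both the conditions under which a replica set stays alive and the characteristics of the Arbiter server. What is an Arbiter? An arbiter does not have a copy of data set and cannot become a primary. Replica sets may have arbiters to add a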

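- JavaScript development notes

白糖_

JavaScript

Getting elements inside an iframe

We usually access elements inside an iframe with `window.frames["frameId"].document.getElementById("divId").innerHTML`. This works in IE, Safari, and Chrome, but not in Firefox. jQuery's `contents` method in fact provides access to if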

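- Chrome: alert stops working after the browser has been open for a while

bozch

Web, chrome, alert not working

While developing today, I suddenly found that alert would no longer pop up in Chrome, which was very strange.

I tried other browsers and they all worked fine.

At first I assumed some Chrome mechanism was responsible, so I tried all kinds of code to make the alert appear. It still did not show.

If this happened to a customer and no prompt appeared, the experience would be disastrous. With no other ideas left, I closed the browser and restarted it.

That fixed it, which is also very strange. Could it be that Chro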

- Beauty of Programming: scheduling meetings efficiently, graph coloring, greedy algorithm

bylijinnan

Beauty of Programming

import java.util.ArrayList;

import java.util.Collections;

import java.util.List;

import java.util.Random;

public class GraphColoringProblem {

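/** Beauty of Programming: scheduling meetings efficiently, graph coloring problem, greedy algorithm

* Suppose many classrooms are to be used for a set of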

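- Machine learning concepts and development tools

chenbowen00

algorithms, matlab, machine learning

Basic concepts:

Machine learning (ML) is an interdisciplinary field drawing on probability theory, statistics, approximation theory, convex analysis, algorithmic complexity theory, and more. It studies how computers can simulate or implement human learning behavior in order to acquire new knowledge or skills and reorganize existing knowledge structures to keep improving their own performance.

It is the core of artificial intelligence and the fundamental way to make computers intelligent. Its applications span every area of AI, and it relies mainly on induction and synthesis rather than deduction.

Development tools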

M

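- [Space economics] On the possibility of establishing permanent settlements in space

comsci

economics

As everyone knows, real estate on Earth is rather expensive, and land deeds often change their wording at the will of a new government........

So, before the Earth Parliament has the power to exercise law and authority in space, our friendly alliance of the outer solar system could consider building fairly large settlements at certain gravitational balance points of the Earth-Moon system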

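- Certificate error in Oracle 11g Database Control

daizj

oracle, certificate error, oracle 11G installation

Certificate error in Oracle 11g Database Control

After installing Oracle 11g on Windows 7 and opening Database Control, the EM management page opens with a certificate error; clicking "Continue to this website" still leaves you on the certificate error page

Solution:

This is caused by update KB2661254, which restricts the use of certificates with RSA keys shorter than 1024 bits. See Microsoft's official announcement for details: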

- Java I/O: deleting files by extension with FilenameFilter

游其是你

FilenameFilter

In Java, you can filter files by implementing the FilenameFilter interface and overriding its accept(File dir, String name) method.

This example shows how to list all ".txt" files under the path "c:\\folder" and delete them.

- C arrays: a simple introduction, simple sorting examples for one-dimensional arrays, and a simple two-dimensional array example

dcj3sjt126com

c, array

# include <stdio.h>

int main(void)

{

int a[5] = {1, 2, 3, 4, 5};

//a is the array's name, 5 is the number of elements, and the five elements are written a[0], a[1]...a[4]

int i;

for (i=0; i<5; ++i)

printf("%d\n",

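- PRIMARY, INDEX, and UNIQUE belong to one category. PRIMARY: primary key, unique and not null. INDEX: ordinary index. UNIQUE: unique index

dcj3sjt126com

primary

PRIMARY, INDEX, and UNIQUE belong to one category. PRIMARY is the primary key: unique and not null. INDEX is an ordinary index. UNIQUE is a unique index: duplicates are not allowed. FULLTEXT is a full-text index, used to search for text within an article. For example, suppose you are building a membership card system for a shopping mall. The system has a member table with the following columns: member ID INT, member name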

- Java collection helper classes: Collections and Arrays

shuizhaosi888

Collections, Arrays, HashCode

Arrays, Collections

1) Converting between arrays and collections

public static <T> List<T> asList(T... a) {

return new ArrayList<>(a);

}

a)Arrays.asL

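- Spring Security (10): logout

234390216

logout, Spring Security, log out, logout-url, LogoutFilter

To implement logout we define a logout element under the http element; Spring Security then automatically adds LogoutFilter, the filter that handles logout, to the FilterChain. When the http element's auto-config attribute is set to true, logout is configured automatically, and the default logout URL is "/j_spring_secu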

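- Learning frontend through source code: Backbone, part 3: Model

逐行分析JS源代码

backbone, source code analysis, js, learning

Backbone analysis, part 3: Model

Overview: Model provides data storage, keeping data as JSON in the Model's attributes,

but its key feature is a powerful, easy-to-use set of methods for saving, reading, deleting, and modifying data, with corresponding events fired in the different operations;

for example, every modification or addition triggers change, which is very useful for updating views when the data model changes, and it is also linked with collection.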

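- SpringMVC source code summary (7): HttpMessageConverter in mvc:annotation-driven

乒乓狂魔

springMVC

This article mainly covers the whole registration process for HttpMessageConverter, including custom HttpMessageConverters, and then looks at some HttpMessageConverters in detail.

Introduction to the HttpMessageConverter interface: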

public interface HttpMessageConverter<T> {

/**

* Indicate

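- Distributed systems fundamentals and algorithm theory

bluky999

algorithms, zookeeper, distributed systems, consistent hashing, paxos

Distributed systems fundamentals and algorithm theory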

BY

[email protected]

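Permanent link to this article: http://nodex.iteye.com/blog/2103218

In the era of big data, whether you work on storage, search, data analysis, or the product or service itself, serving internet and mobile internet users means you can no longer avoid distributed environments. Here the author collects introductions to some distributed-systems fundamentals and algorithm theory, and while rounding out my own knowledge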

- Android Studio .gitignore, and fixing a .gitignore that has no effect

bell0901

android, gitignore

A collection of .gitignore templates on GitHub, with all kinds of .gitignore files: https://github.com/github/gitignore

The .gitignore file I use for Android Studio projects; to GitHub's android.gitignore I added

# OSX files // on macOS .DS_Store

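- 10 steps to becoming a senior programmer

tomcat_oracle

programming

What

A software engineer's career passes through several stages: junior, intermediate, and finally senior. This article describes 10 steps that help you become a senior software engineer.

Why

Earn more! Your salary rises as your skills improve

Advance your career. Once you are a senior software engineer, you can move toward roles such as architect, team lead, or CTO

Take on bigger challenges. As you grow, your influence grows too.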

- Installing MongoDB on Linux

xtuhcy

mongodb, linux

I. Check the Linux version:

lsb_release -a

LSB Version: :base-4.0-amd64:base-4.0-noarch:core-4.0-amd64:core-4.0-noarch:graphics-4.0-amd64:graphics-4.0-noarch:printing-4.0-amd64:printing-4.0-noa