卷积神经网络

Tensorflow——卷积神经网络

-

- 卷积计算(Convolutional)

-

- TF描述卷积层

- 批标准化层(Batch Normalization,BN)

-

- TF描述批标准化层

- 池化层(Pooling)

-

- TF描述池化层

- 舍弃(Dropout)

-

- TF描述舍弃

- 卷积神经网络

-

- 基础卷积神经网络

- LeNet卷积神经网络 (1998)

- AlexNet卷积神经网络 (2012)

- VGGNet卷积神经网络 (2014)

- InceptionNet (2014)

- ResNet(2015)

观看【北京大学】TensorFlow2.0视频 笔记

https://www.bilibili.com/video/BV1B7411L7Qt?p=25

卷积计算(Convolutional)

送入网络的特征数很多时,随着隐藏层层数的增加,网络规模过大,待优化参数过多,很容易使模型过拟合,为了减少带训练参数,在实际训练时先对原始训练进行特征提取,把提取出来的特征送给网络。卷积计算是一种有效的特征提取方法

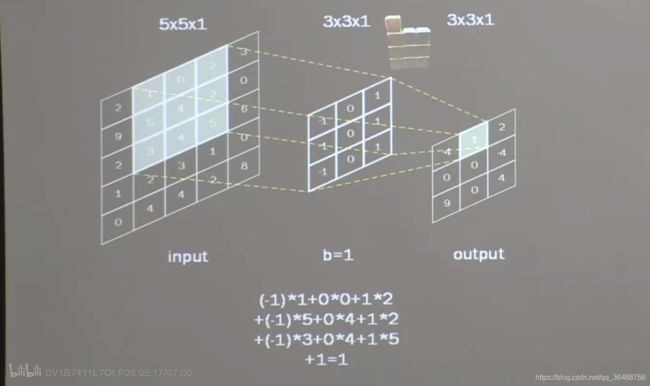

一般会用一个正方体的卷积核,按指定步长在输入特征图上滑动,遍历输入特征图里的每一个像素点,每滑动一个步长,卷积核会与输入特征图部分像素点重合,重合区域对应元素相乘求和再加上偏置项得到输出特征图的一个像素点

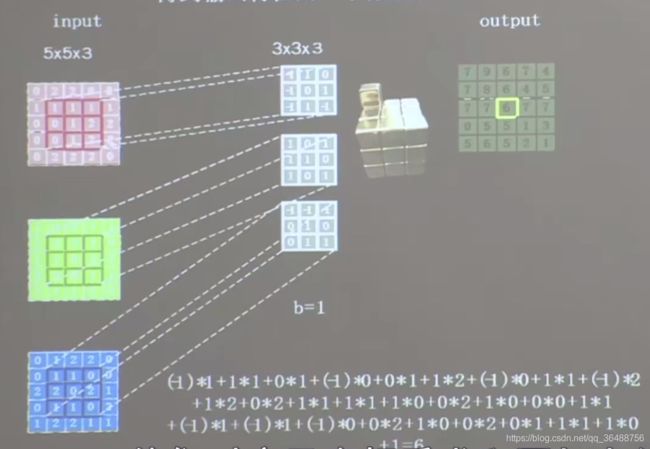

输入特征图的深度决定了当前卷积核的深度

当前卷积核的个数决定了当前输出特征图的深度

下图为大小为5 * 5 * 1输入特征图 与大小为3 * 3 * 1的单通道卷积核的卷积计算,滑动步长为1

感受野(Receptive Field):卷积神经网络各输出特征图中的每个像素点,在原始输入图片上映射的区域大小

该输出的每个像素点的感受野(Receptive Field)为3 * 3

下图为深度3的大小为553输入特征图 与深度为3的大小为333的三通道卷积核的卷积计算,滑动步长为1

全零填充Padding:为使经卷积计算后输出的特征图大小不变在输入特征图周围填入0

TF描述卷积层

tf.keras.layers.Conv2D(

filters=卷积核个数,

kernel_size=卷积核大小,

strides=滑动步长,

padding="same"(使用全零填充)or "valid"(不使用,默认),

activation="relu" or "sigmoid" or "tanh" or "softmax", #有标准化时不写

input_shape=(,,) #输入特征图维度,可省略

)

批标准化层(Batch Normalization,BN)

神经网络对0附近的数更敏感,但是随着网络层数的增加,特征数据会出现偏离0均值的情况,标准化可以使数据符合以0为均值,1为标准差的标准正态分布,把偏移的特征数据重新拉回到0附近,批标准化对一个Batch的数据做标准化处理,常用在卷积操作和激活操作之间

简单的特征数据标准化使特征数据完全满足标准正态分布,集中砸激活函数中心的线性区域,使激活函数丧失了非线性特征,因此在BN操作中为每个卷积核引入了两个可训练参数,缩放因子gamma和偏移因子beta,这两个参数会与其他训练参数一同被训练优化,使标准正态分布后的特征数据通过缩放因子和偏移因子优化特征数据分布的宽窄和偏移量,保证网络的非线性表达力

TF描述批标准化层

tf.keras.layers.BatchNormalization()

池化层(Pooling)

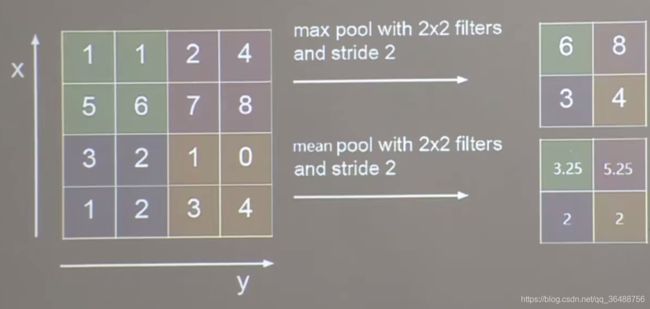

池化层用于减少特征数据量

池化的方法有:

最大池化:提取图片纹理 (下图右上)

均值池化:保留背景特征 (下图右下)

TF描述池化层

#最大池化层

tf.keras.layers.MaxPool2D(

pool_size=池化层尺寸,

strides=池化步长,

padding="same"(使用全零填充)or "valid"(不使用,默认)

)

#均值池化层

tf.keras.layers.AveragePooling2D(

pool_size=池化层尺寸,

strides=池化步长,

padding="same"(使用全零填充)or "valid"(不使用,默认)

)

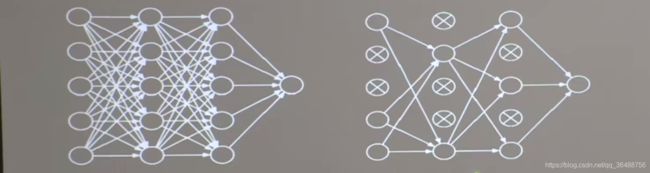

舍弃(Dropout)

为了缓解神经网络过拟合,在神经网络训练过程中,常把隐藏层的部分神经元按照一定比例从神经网络中临时舍弃,在使用神经网络时再把所有神经元恢复到神经网络中

TF描述舍弃

tf.keras.layers.Dropout(舍弃的概率)

卷积神经网络

卷积神经网络的主要模块就是CBAPD

Convolutional,BN,Activation,Pooling,Dropout

基础卷积神经网络

import tensorflow as tf

import os

import numpy as np

from matplotlib import pyplot as plt

from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense

from tensorflow.keras import Model

#让输出不会显示省略号

np.set_printoptions(threshold=np.inf)

#加载数据集

cifar10 = tf.keras.datasets.cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

class Baseline(Model):

def __init__(self):

super(Baseline, self).__init__()

self.c1 = Conv2D(filters=6, kernel_size=(5, 5), padding='same') # 卷积层

self.b1 = BatchNormalization() # BN层

self.a1 = Activation('relu') # 激活层

self.p1 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same') # 池化层

self.d1 = Dropout(0.2) # dropout层

self.flatten = Flatten()

self.f1 = Dense(128, activation='relu')

self.d2 = Dropout(0.2)

self.f2 = Dense(10, activation='softmax')

def call(self, x):

x = self.c1(x)

x = self.b1(x)

x = self.a1(x)

x = self.p1(x)

x = self.d1(x)

x = self.flatten(x)

x = self.f1(x)

x = self.d2(x)

y = self.f2(x)

return y

model = Baseline()

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=['sparse_categorical_accuracy'])

#端点续训文件保存

checkpoint_save_path = "./checkpoint/Baseline.ckpt"

if os.path.exists(checkpoint_save_path + '.index'):

print('-------------load the model-----------------')

model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,

save_weights_only=True,

save_best_only=True)

history = model.fit(x_train, y_train, batch_size=32, epochs=5, validation_data=(x_test, y_test), validation_freq=1,

callbacks=[cp_callback]) #使用回调函数实现端点续训

model.summary()

# print(model.trainable_variables)

file = open('./weights.txt', 'w')

for v in model.trainable_variables:

file.write(str(v.name) + '\n')

file.write(str(v.shape) + '\n')

file.write(str(v.numpy()) + '\n')

file.close()

############################################### show ###############################################

# 显示训练集和验证集的acc和loss曲线

acc = history.history['sparse_categorical_accuracy']

val_acc = history.history['val_sparse_categorical_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.title('Training and Validation Accuracy')

plt.legend()

plt.subplot(1, 2, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.title('Training and Validation Loss')

plt.legend()

plt.show()

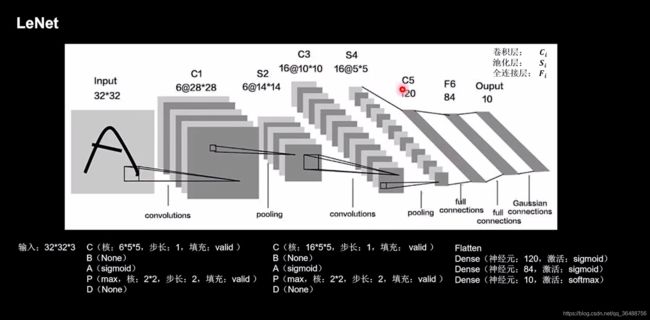

LeNet卷积神经网络 (1998)

LeNet卷积神经网络是LeCun于1998年提出,通过空间卷积核共享减少网络的参数

在统计神经网络层数时一般只统计卷积计算层和全连接层,其余操作可以认为是卷积计算层的附属

LeNet一共有五层网络:两个卷积层和两个sigmoid全连层和一个softmax全连层

class LeNet5(Model):

def __init__(self):

super(LeNet5, self).__init__()

self.c1 = Conv2D(filters=6, kernel_size=(5, 5),

activation='sigmoid')

self.p1 = MaxPool2D(pool_size=(2, 2), strides=2)

self.c2 = Conv2D(filters=16, kernel_size=(5, 5),

activation='sigmoid')

self.p2 = MaxPool2D(pool_size=(2, 2), strides=2)

self.flatten = Flatten()

self.f1 = Dense(120, activation='sigmoid')

self.f2 = Dense(84, activation='sigmoid')

self.f3 = Dense(10, activation='softmax')

def call(self, x):

x = self.c1(x)

x = self.p1(x)

x = self.c2(x)

x = self.p2(x)

x = self.flatten(x)

x = self.f1(x)

x = self.f2(x)

y = self.f3(x)

return y

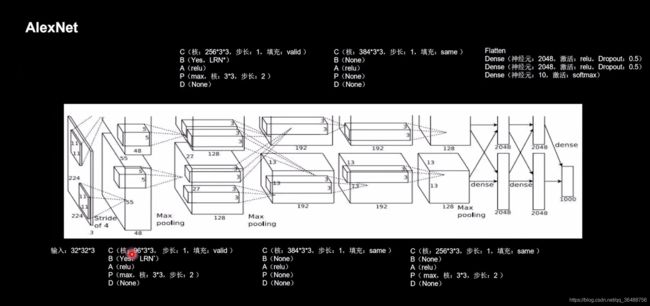

AlexNet卷积神经网络 (2012)

AlexNet于2012年提出,使用relu提升训练速度,使用Dropout缓解过拟合

class AlexNet8(Model):

def __init__(self):

super(AlexNet8, self).__init__()

self.c1 = Conv2D(filters=96, kernel_size=(3, 3))

self.b1 = BatchNormalization()

self.a1 = Activation('relu')

self.p1 = MaxPool2D(pool_size=(3, 3), strides=2)

self.c2 = Conv2D(filters=256, kernel_size=(3, 3))

self.b2 = BatchNormalization()

self.a2 = Activation('relu')

self.p2 = MaxPool2D(pool_size=(3, 3), strides=2)

self.c3 = Conv2D(filters=384, kernel_size=(3, 3), padding='same',

activation='relu')

self.c4 = Conv2D(filters=384, kernel_size=(3, 3), padding='same',

activation='relu')

self.c5 = Conv2D(filters=256, kernel_size=(3, 3), padding='same',

activation='relu')

self.p3 = MaxPool2D(pool_size=(3, 3), strides=2)

self.flatten = Flatten()

self.f1 = Dense(2048, activation='relu')

self.d1 = Dropout(0.5)

self.f2 = Dense(2048, activation='relu')

self.d2 = Dropout(0.5)

self.f3 = Dense(10, activation='softmax')

def call(self, x):

x = self.c1(x)

x = self.b1(x)

x = self.a1(x)

x = self.p1(x)

x = self.c2(x)

x = self.b2(x)

x = self.a2(x)

x = self.p2(x)

x = self.c3(x)

x = self.c4(x)

x = self.c5(x)

x = self.p3(x)

x = self.flatten(x)

x = self.f1(x)

x = self.d1(x)

x = self.f2(x)

x = self.d2(x)

y = self.f3(x)

return y

VGGNet卷积神经网络 (2014)

VGGNet于2014年提出,使用小尺寸卷积核,减少了参数同时提高了识别准确率,网络结构规整,适合硬件加速

class VGG16(Model):

def __init__(self):

super(VGG16, self).__init__()

self.c1 = Conv2D(filters=64, kernel_size=(3, 3), padding='same') # 卷积层1

self.b1 = BatchNormalization() # BN层1

self.a1 = Activation('relu') # 激活层1

self.c2 = Conv2D(filters=64, kernel_size=(3, 3), padding='same', )

self.b2 = BatchNormalization() # BN层1

self.a2 = Activation('relu') # 激活层1

self.p1 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.d1 = Dropout(0.2) # dropout层

self.c3 = Conv2D(filters=128, kernel_size=(3, 3), padding='same')

self.b3 = BatchNormalization() # BN层1

self.a3 = Activation('relu') # 激活层1

self.c4 = Conv2D(filters=128, kernel_size=(3, 3), padding='same')

self.b4 = BatchNormalization() # BN层1

self.a4 = Activation('relu') # 激活层1

self.p2 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.d2 = Dropout(0.2) # dropout层

self.c5 = Conv2D(filters=256, kernel_size=(3, 3), padding='same')

self.b5 = BatchNormalization() # BN层1

self.a5 = Activation('relu') # 激活层1

self.c6 = Conv2D(filters=256, kernel_size=(3, 3), padding='same')

self.b6 = BatchNormalization() # BN层1

self.a6 = Activation('relu') # 激活层1

self.c7 = Conv2D(filters=256, kernel_size=(3, 3), padding='same')

self.b7 = BatchNormalization()

self.a7 = Activation('relu')

self.p3 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.d3 = Dropout(0.2)

self.c8 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b8 = BatchNormalization() # BN层1

self.a8 = Activation('relu') # 激活层1

self.c9 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b9 = BatchNormalization() # BN层1

self.a9 = Activation('relu') # 激活层1

self.c10 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b10 = BatchNormalization()

self.a10 = Activation('relu')

self.p4 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.d4 = Dropout(0.2)

self.c11 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b11 = BatchNormalization() # BN层1

self.a11 = Activation('relu') # 激活层1

self.c12 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b12 = BatchNormalization() # BN层1

self.a12 = Activation('relu') # 激活层1

self.c13 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')

self.b13 = BatchNormalization()

self.a13 = Activation('relu')

self.p5 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')

self.d5 = Dropout(0.2)

self.flatten = Flatten()

self.f1 = Dense(512, activation='relu')

self.d6 = Dropout(0.2)

self.f2 = Dense(512, activation='relu')

self.d7 = Dropout(0.2)

self.f3 = Dense(10, activation='softmax')

def call(self, x):

x = self.c1(x)

x = self.b1(x)

x = self.a1(x)

x = self.c2(x)

x = self.b2(x)

x = self.a2(x)

x = self.p1(x)

x = self.d1(x)

x = self.c3(x)

x = self.b3(x)

x = self.a3(x)

x = self.c4(x)

x = self.b4(x)

x = self.a4(x)

x = self.p2(x)

x = self.d2(x)

x = self.c5(x)

x = self.b5(x)

x = self.a5(x)

x = self.c6(x)

x = self.b6(x)

x = self.a6(x)

x = self.c7(x)

x = self.b7(x)

x = self.a7(x)

x = self.p3(x)

x = self.d3(x)

x = self.c8(x)

x = self.b8(x)

x = self.a8(x)

x = self.c9(x)

x = self.b9(x)

x = self.a9(x)

x = self.c10(x)

x = self.b10(x)

x = self.a10(x)

x = self.p4(x)

x = self.d4(x)

x = self.c11(x)

x = self.b11(x)

x = self.a11(x)

x = self.c12(x)

x = self.b12(x)

x = self.a12(x)

x = self.c13(x)

x = self.b13(x)

x = self.a13(x)

x = self.p5(x)

x = self.d5(x)

x = self.flatten(x)

x = self.f1(x)

x = self.d6(x)

x = self.f2(x)

x = self.d7(x)

y = self.f3(x)

return y

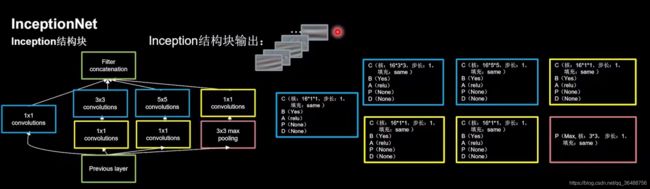

InceptionNet (2014)

于2014年提出,引入inception结构块,在同一层网络内使用不同尺寸的卷积核,提升了模型感知力,使用了批标准化,缓解了梯度消失

inception结构块:在同一层网络中使用了多个尺寸的卷积核,可以提取不同尺寸的特征,通过1 * 1卷积核作用到输入特征图的每个像素点,通过设定少于输入特征图深度的1 * 1 卷积核个数,减少了输出特征图的深度,起到了降维的作用,减少了参数量和计算量

inception结构块包括四个分支:如下图所示,由下至上有四个路径

class ConvBNRelu(Model):

def __init__(self, ch, kernelsz=3, strides=1, padding='same'):

super(ConvBNRelu, self).__init__()

self.model = tf.keras.models.Sequential([

Conv2D(ch, kernelsz, strides=strides, padding=padding),

BatchNormalization(),

Activation('relu')

])

def call(self, x):

x = self.model(x, training=False) #在training=False时,BN通过整个训练集计算均值、方差去做批归一化,training=True时,通过当前batch的均值、方差去做批归一化。推理时 training=False效果好

return x

class InceptionBlk(Model):

def __init__(self, ch, strides=1):

super(InceptionBlk, self).__init__()

self.ch = ch

self.strides = strides

self.c1 = ConvBNRelu(ch, kernelsz=1, strides=strides)

self.c2_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)

self.c2_2 = ConvBNRelu(ch, kernelsz=3, strides=1)

self.c3_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)

self.c3_2 = ConvBNRelu(ch, kernelsz=5, strides=1)

self.p4_1 = MaxPool2D(3, strides=1, padding='same')

self.c4_2 = ConvBNRelu(ch, kernelsz=1, strides=strides)

def call(self, x):

x1 = self.c1(x)

x2_1 = self.c2_1(x)

x2_2 = self.c2_2(x2_1)

x3_1 = self.c3_1(x)

x3_2 = self.c3_2(x3_1)

x4_1 = self.p4_1(x)

x4_2 = self.c4_2(x4_1)

# concat along axis=channel

x = tf.concat([x1, x2_2, x3_2, x4_2], axis=3)

return x

class Inception10(Model):

def __init__(self, num_blocks, num_classes, init_ch=16, **kwargs):

super(Inception10, self).__init__(**kwargs)

self.in_channels = init_ch

self.out_channels = init_ch

self.num_blocks = num_blocks

self.init_ch = init_ch

self.c1 = ConvBNRelu(init_ch)

self.blocks = tf.keras.models.Sequential()

for block_id in range(num_blocks):

for layer_id in range(2):

if layer_id == 0:

block = InceptionBlk(self.out_channels, strides=2)

else:

block = InceptionBlk(self.out_channels, strides=1)

self.blocks.add(block)

# enlarger out_channels per block

self.out_channels *= 2

self.p1 = GlobalAveragePooling2D()

self.f1 = Dense(num_classes, activation='softmax')

def call(self, x):

x = self.c1(x)

x = self.blocks(x)

x = self.p1(x)

y = self.f1(x)

return y

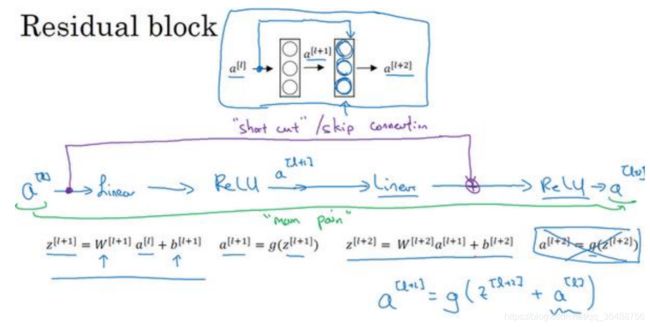

ResNet(2015)

于2015年提出,提出了层间残差跳连,引入了前方信息,缓解梯度消失和梯度爆炸,有效缓解神经网络模型堆叠导致的退化,使神经网络可以向更深层级发展。

跳跃连接(skip connection)可以从某一层网络获取激活然后迅速反馈给另外一层

class ResnetBlock(Model):

def __init__(self, filters, strides=1, residual_path=False):

super(ResnetBlock, self).__init__()

self.filters = filters

self.strides = strides

self.residual_path = residual_path

self.c1 = Conv2D(filters, (3, 3), strides=strides, padding='same', use_bias=False)

self.b1 = BatchNormalization()

self.a1 = Activation('relu')

self.c2 = Conv2D(filters, (3, 3), strides=1, padding='same', use_bias=False)

self.b2 = BatchNormalization()

# residual_path为True时,对输入进行下采样,即用1x1的卷积核做卷积操作,保证x能和F(x)维度相同,顺利相加

if residual_path:

self.down_c1 = Conv2D(filters, (1, 1), strides=strides, padding='same', use_bias=False)

self.down_b1 = BatchNormalization()

self.a2 = Activation('relu')

def call(self, inputs):

residual = inputs # residual等于输入值本身,即residual=x

# 将输入通过卷积、BN层、激活层,计算F(x)

x = self.c1(inputs)

x = self.b1(x)

x = self.a1(x)

x = self.c2(x)

y = self.b2(x)

if self.residual_path:

residual = self.down_c1(inputs)

residual = self.down_b1(residual)

out = self.a2(y + residual) # 最后输出的是两部分的和,即F(x)+x或F(x)+Wx,再过激活函数

return out

class ResNet18(Model):

def __init__(self, block_list, initial_filters=64): # block_list表示每个block有几个卷积层

super(ResNet18, self).__init__()

self.num_blocks = len(block_list) # 共有几个block

self.block_list = block_list

self.out_filters = initial_filters

self.c1 = Conv2D(self.out_filters, (3, 3), strides=1, padding='same', use_bias=False)

self.b1 = BatchNormalization()

self.a1 = Activation('relu')

self.blocks = tf.keras.models.Sequential()

# 构建ResNet网络结构

for block_id in range(len(block_list)): # 第几个resnet block

for layer_id in range(block_list[block_id]): # 第几个卷积层

if block_id != 0 and layer_id == 0: # 对除第一个block以外的每个block的输入进行下采样

block = ResnetBlock(self.out_filters, strides=2, residual_path=True)

else:

block = ResnetBlock(self.out_filters, residual_path=False)

self.blocks.add(block) # 将构建好的block加入resnet

self.out_filters *= 2 # 下一个block的卷积核数是上一个block的2倍

self.p1 = tf.keras.layers.GlobalAveragePooling2D()

self.f1 = tf.keras.layers.Dense(10, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

def call(self, inputs):

x = self.c1(inputs)

x = self.b1(x)

x = self.a1(x)

x = self.blocks(x)

x = self.p1(x)

y = self.f1(x)

return y