FastMVSNet reading notes

1. Dataset preprocessing script

The test split is handled by the class DTU_Test_Set(Dataset):

Scan ids to read

    test_set = [1, 4, 9, 10, 11, 12, 13, 15, 23, 24, 29, 32, 33, 34, 48, 49, 62, 75, 77,
                110, 114, 118]
    test_lighting_set = [3]

Reading the data parameters

Note first that the network parameters are defined in ./FastMVSNet/configs/dtu.yaml.

The dataset constructor is:

    def __init__(self, root_dir, dataset_name,
                 num_view=3,
                 height=1152, width=1600,
                 num_virtual_plane=128,
                 interval_scale=1.6,
                 base_image_size=64,
                 depth_folder=""):

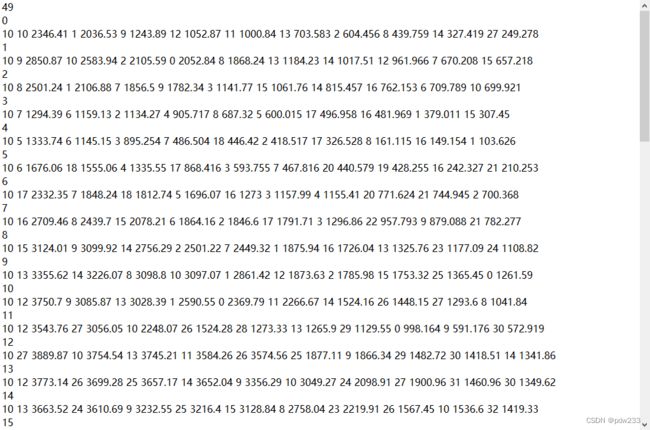

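Reading pair.txt

pair.txt starts with the total number of views. Then, for each reference image, it lists the reference image id, the number of associated source images, and for each source image its id and matching score.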

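    # class attribute: location of pair.txt relative to the dataset root
    cluster_file_path = "Cameras/pair.txt"

    # in __init__: read the whole file as a flat list of whitespace-separated tokens
    self.cluster_file_path = osp.join(root_dir, self.cluster_file_path)
    self.cluster_list = open(self.cluster_file_path).read().split()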
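To make the token layout concrete, here is a minimal sketch of a parser for the flat list produced by read().split(). The function name parse_pair_tokens and the two-image sample are made up for illustration. As far as I know, the DTU pair files list 10 sources per reference, which would explain a fixed stride of 2 + 2 * 10 = 22 tokens per entry in the repository code.

```python
def parse_pair_tokens(tokens):
    """Group the flat token list from pair.txt into one entry per reference image."""
    num_images = int(tokens[0])          # first token: total number of views
    entries = []
    pos = 1
    for _ in range(num_images):
        ref_id = int(tokens[pos])        # reference image id
        num_src = int(tokens[pos + 1])   # number of associated source images
        pos += 2
        sources = []
        for _ in range(num_src):
            # each source contributes two tokens: its id and its matching score
            sources.append((int(tokens[pos]), float(tokens[pos + 1])))
            pos += 2
        entries.append({"ref": ref_id, "sources": sources})
    return entries


# Hypothetical file with two images and two sources each.
sample = """2
0
2 1 2036.53 5 1210.10
1
2 0 2036.53 3 988.25
"""
pairs = parse_pair_tokens(sample.split())
print(pairs[0])
```

Unlike the fixed-stride indexing, this version reads the source count of every entry, so it would also cope with files that list a different number of sources.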

Reading the image paths and the camera intrinsics/extrinsics

    image_folder = osp.join(self.root_dir, "Eval/Rectified/scan{}".format(ind))
    cam_folder = osp.join(self.root_dir, "Cameras")

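Selecting the reference image

    for p in range(0, int(self.cluster_list[0])):  # process every image

This reads the first token of pair.txt, which is the number of images. Each image needs its own depth map, so the code loops over all of them and treats each in turn as the reference.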

    for p in range(0, int(self.cluster_list[0])):
        view_image_paths = []
        view_cam_paths = []
        # each entry spans 22 tokens, so the reference id sits at 22 * p + 1
        ref_index = int(self.cluster_list[22 * p + 1])
        # + 1 as in the source-view code, assuming image file names are 1-based
        ref_image_path = osp.join(image_folder, "rect_{:03d}_{}_r5000.png".format(ref_index + 1, lighting_ind))
        ref_cam_path = osp.join(cam_folder, "{:08d}_cam.txt".format(ref_index))
        view_image_paths.append(ref_image_path)
        view_cam_paths.append(ref_cam_path)

Selecting the source images

    for p in range(0, int(self.cluster_list[0])):
        # per-sample containers
        paths = {}
        view_image_paths = []
        view_cam_paths = []

        # each entry spans 22 tokens, so the reference id sits at 22 * p + 1
        ref_index = int(self.cluster_list[22 * p + 1])
        # + 1 as for the source views below, assuming image file names are 1-based
        ref_image_path = osp.join(image_folder, "rect_{:03d}_{}_r5000.png".format(ref_index + 1, lighting_ind))
        ref_cam_path = osp.join(cam_folder, "{:08d}_cam.txt".format(ref_index))
        view_image_paths.append(ref_image_path)
        view_cam_paths.append(ref_cam_path)

        # source image paths: ids sit at every second token after the source count
        for view in range(self.num_view - 1):
            view_index = int(self.cluster_list[22 * p + 2 * view + 3])
            view_image_path = osp.join(image_folder, "rect_{:03d}_{}_r5000.png".format(view_index + 1, lighting_ind))
            view_cam_path = osp.join(cam_folder, "{:08d}_cam.txt".format(view_index))
            view_image_paths.append(view_image_path)
            view_cam_paths.append(view_cam_path)

        paths["view_image_paths"] = view_image_paths
        paths["view_cam_paths"] = view_cam_paths

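Here num_view is the number of views per sample: one reference image plus num_view - 1 source images, as the range(self.num_view - 1) loop shows. The sources are generally the entries with the highest scores in pair.txt. The paper uses 5 by default, while the constructor above defaults to 3.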
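The index arithmetic can be checked in isolation. The sketch below rebuilds the token offsets used above on a synthetic token list. The helper names view_indices, image_name, cam_name and make_entry are hypothetical, and the 1-based image numbering is an assumption taken from the view_index + 1 in the source-view code.

```python
def view_indices(tokens, p, num_view, stride=22):
    """Return [ref_id, src_id, ...] for the p-th entry of a flat pair.txt token list."""
    base = stride * p + 1                 # + 1 skips the leading image count
    ref_index = int(tokens[base])
    # tokens[base + 1] is the source count; source ids follow at every second token,
    # which matches 22 * p + 2 * view + 3 in the dataset code
    sources = [int(tokens[base + 2 + 2 * v]) for v in range(num_view - 1)]
    return [ref_index] + sources


def image_name(view_index, lighting_ind):
    # Assumption: image files are numbered from 1, pair.txt ids from 0.
    return "rect_{:03d}_{}_r5000.png".format(view_index + 1, lighting_ind)


def cam_name(view_index):
    return "{:08d}_cam.txt".format(view_index)


def make_entry(ref, src_ids):
    """Build one synthetic pair.txt entry with dummy scores."""
    out = [str(ref), str(len(src_ids))]
    for rank, s in enumerate(src_ids):
        out += [str(s), str(1000.0 - rank)]
    return out


# Two synthetic entries with 10 sources each, i.e. 22 tokens per entry.
tokens = ["2"] + make_entry(0, list(range(10, 20))) + make_entry(1, list(range(20, 30)))

ids = view_indices(tokens, p=1, num_view=3)
print(ids)                                 # [1, 20, 21]
print([image_name(i, 3) for i in ids])
print([cam_name(i) for i in ids])
```

With num_view=3 only the first two sources of each entry are used, so the remaining eight are simply skipped.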

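2. FastMVSNet network body (model.py)

Feature extraction network

The structure of this network is shown below. The final feature map (conv2) is 1/4 × 1/4 the size of the input image, because conv1 and conv2 each start with a stride-2 convolution.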

    class ImageConv(nn.Module):
        def __init__(self, base_channels, in_channels=3):
            super(ImageConv, self).__init__()
            self.base_channels = base_channels
            self.out_channels = 8 * base_channels

            # Conv2d without the nn. prefix is presumably the repository's own wrapper.
            # conv0: full resolution
            self.conv0 = nn.Sequential(
                Conv2d(in_channels, base_channels, 3, 1, padding=1),
                Conv2d(base_channels, base_channels, 3, 1, padding=1),
            )

            # conv1: stride 2, so 1/2 resolution
            self.conv1 = nn.Sequential(
                Conv2d(base_channels, base_channels * 2, 5, stride=2, padding=2),
                Conv2d(base_channels * 2, base_channels * 2, 3, 1, padding=1),
                Conv2d(base_channels * 2, base_channels * 2, 3, 1, padding=1),
            )

            # conv2: stride 2 again, so 1/4 resolution
            self.conv2 = nn.Sequential(
                Conv2d(base_channels * 2, base_channels * 4, 5, stride=2, padding=2),
                Conv2d(base_channels * 4, base_channels * 4, 3, 1, padding=1),
                nn.Conv2d(base_channels * 4, base_channels * 4, 3, padding=1, bias=False)
            )

        def forward(self, imgs):
            # return the feature map of every stage, keyed by stage name
            out_dict = {}

            conv0 = self.conv0(imgs)
            out_dict["conv0"] = conv0
            conv1 = self.conv1(conv0)
            out_dict["conv1"] = conv1
            conv2 = self.conv2(conv1)
            out_dict["conv2"] = conv2

            return out_dict
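The 1/4 scale can be verified with the standard convolution output-size formula, floor((n + 2 * padding - kernel) / stride) + 1. The sketch below applies it to the kernel, stride and padding values listed in ImageConv. It assumes the Conv2d wrapper passes those values straight through to a regular convolution with dilation 1; the names conv_out, LAYERS and trace are only for illustration.

```python
def conv_out(n, kernel, stride, padding):
    """Output length of a convolution along one axis (dilation 1)."""
    return (n + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) per layer, copied from the ImageConv listing
LAYERS = {
    "conv0": [(3, 1, 1), (3, 1, 1)],
    "conv1": [(5, 2, 2), (3, 1, 1), (3, 1, 1)],
    "conv2": [(5, 2, 2), (3, 1, 1), (3, 1, 1)],
}


def trace(height, width):
    """Return the spatial size after each stage of the feature network."""
    sizes = {}
    for name in ["conv0", "conv1", "conv2"]:
        for kernel, stride, padding in LAYERS[name]:
            height = conv_out(height, kernel, stride, padding)
            width = conv_out(width, kernel, stride, padding)
        sizes[name] = (height, width)
    return sizes


print(trace(1152, 1600))
# {'conv0': (1152, 1600), 'conv1': (576, 800), 'conv2': (288, 400)}
```

For the default 1152 x 1600 input, conv2 therefore comes out at 288 x 400, which is exactly a quarter of each side.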

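Depth propagation module

This step propagates the sparse high-resolution depth map into a dense depth map. The result is still a coarse depth map that has not yet been refined.

The code is as follows (example):

    self.feature_grad_fetcher = FeatureGradFetcher()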
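As a rough illustration of the sparse-to-dense idea, the sketch below first fills the dense grid by nearest-neighbour upsampling and then replaces every depth by a normalized weighted sum over its k x k neighbourhood. This is my reading of the propagation step, not the repository's implementation. In particular the uniform weights are a stand-in: the network is described as predicting the weights from image features. The names nearest_upsample, propagate and uniform are hypothetical.

```python
def nearest_upsample(depth, factor=2):
    """Repeat every sparse depth sample factor x factor times."""
    out = []
    for row in depth:
        wide = [d for d in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def propagate(depth, weights_fn, k=3):
    """Replace each depth by a normalized weighted sum over its k x k neighbourhood."""
    h, w = len(depth), len(depth[0])
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, norm = 0.0, 0.0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:   # skip neighbours outside the map
                        wgt = weights_fn(y, x, dy, dx)
                        total += wgt * depth[yy][xx]
                        norm += wgt
            out[y][x] = total / norm
    return out


def uniform(y, x, dy, dx):
    """Stand-in for learned weights (an assumption): plain averaging."""
    return 1.0


sparse = [[1.0, 3.0],
          [1.0, 3.0]]
dense = propagate(nearest_upsample(sparse), uniform)
for row in dense:
    print([round(d, 3) for d in row])
```

With uniform weights the depth edge between the two columns is simply blurred; weights that depend on the image content could instead keep such edges sharp.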

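Regularization network

    self.coarse_vol_conv = VolumeConv(img_base_channels * 4, vol_base_channels)

Although the feature maps obtained earlier are 1/4 × 1/4 of the image size, the line ref_feature = ref_feature[:, :, ::2, ::2].contiguous() subsamples them to 1/8 × 1/8, so the cost volume has size W/8 × H/8 × D × F.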
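The effect of the ::2 slicing on the cost volume size can be worked out with a few lines of arithmetic. The sketch below is only a shape calculation. The values num_planes=48 and feat_channels=32 are hypothetical examples, the image size is assumed to be divisible by 4, and the (F, D, H, W) ordering follows the channel-first layout that 3D convolutions in PyTorch expect.

```python
def subsampled(n, step=2):
    """Length of an axis of size n after slicing it with [::step]."""
    return len(range(0, n, step))


def cost_volume_shape(height, width, num_planes, feat_channels):
    """Shape (F, D, H/8, W/8) of the cost volume, batch axis omitted."""
    feat_h, feat_w = height // 4, width // 4        # conv2 output of the feature network
    return (feat_channels, num_planes, subsampled(feat_h), subsampled(feat_w))


print(cost_volume_shape(1152, 1600, num_planes=48, feat_channels=32))
# (32, 48, 144, 200)
```

Compared with building the volume at 1/4 scale, halving both spatial axes cuts the number of voxels by a factor of four.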

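The main novelty of this step is in the 3D CNN: it upsamples with transposed convolutions (deconvolutions) and adds the encoder features to the decoder features at each matching scale, which yields a depth map at 1/8 × 1/8 scale. This differs from the low-resolution result of Point-MVSNet.

The regularization 3D CNN is: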

    class VolumeConv(nn.Module):
        def __init__(self, in_channels, base_channels):
            super(VolumeConv, self).__init__()
            self.in_channels = in_channels
            self.out_channels = base_channels * 8
            self.base_channels = base_channels

            # encoder: three stride-2 convolutions, each halving D, H and W
            self.conv1_0 = Conv3d(in_channels, base_channels * 2, 3, stride=2, padding=1)
            self.conv2_0 = Conv3d(base_channels * 2, base_channels * 4, 3, stride=2, padding=1)
            self.conv3_0 = Conv3d(base_channels * 4, base_channels * 8, 3, stride=2, padding=1)

            # stride-1 convolutions applied at each scale
            self.conv0_1 = Conv3d(in_channels, base_channels, 3, 1, padding=1)
            self.conv1_1 = Conv3d(base_channels * 2, base_channels * 2, 3, 1, padding=1)
            self.conv2_1 = Conv3d(base_channels * 4, base_channels * 4, 3, 1, padding=1)
            self.conv3_1 = Conv3d(base_channels * 8, base_channels * 8, 3, 1, padding=1)

            # decoder: three stride-2 transposed convolutions, each doubling D, H and W
            self.conv4_0 = Deconv3d(base_channels * 8, base_channels * 4, 3, 2, padding=1, output_padding=1)
            self.conv5_0 = Deconv3d(base_channels * 4, base_channels * 2, 3, 2, padding=1, output_padding=1)
            self.conv6_0 = Deconv3d(base_channels * 2, base_channels, 3, 2, padding=1, output_padding=1)

            # final projection to a single channel
            self.conv6_2 = nn.Conv3d(base_channels, 1, 3, padding=1, bias=False)

        def forward(self, x):
            conv0_1 = self.conv0_1(x)

            conv1_0 = self.conv1_0(x)
            conv2_0 = self.conv2_0(conv1_0)
            conv3_0 = self.conv3_0(conv2_0)

            conv1_1 = self.conv1_1(conv1_0)
            conv2_1 = self.conv2_1(conv2_0)
            conv3_1 = self.conv3_1(conv3_0)

            # skip connections: add the encoder feature of the same scale before each step
            conv4_0 = self.conv4_0(conv3_1)
            conv5_0 = self.conv5_0(conv4_0 + conv2_1)
            conv6_0 = self.conv6_0(conv5_0 + conv1_1)

            conv6_2 = self.conv6_2(conv6_0 + conv0_1)

            return conv6_2
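The skip additions in forward only work if every decoder output has the same size as the encoder feature it is added to. The sketch below follows one axis through the network using the standard output-size formulas for a convolution and a transposed convolution with dilation 1. It assumes that Conv3d and Deconv3d forward their kernel, stride, padding and output_padding arguments unchanged to the underlying PyTorch layers; the helper names are hypothetical.

```python
def conv3d_out(n, kernel=3, stride=2, padding=1):
    """Output length of a convolution along one axis (dilation 1)."""
    return (n + 2 * padding - kernel) // stride + 1


def deconv3d_out(n, kernel=3, stride=2, padding=1, output_padding=1):
    """Output length of a transposed convolution along one axis (dilation 1)."""
    return (n - 1) * stride - 2 * padding + kernel + output_padding


def trace_axis(n):
    """Follow one axis through VolumeConv; raise if a skip addition would mismatch."""
    d1 = conv3d_out(n)       # conv1_0
    d2 = conv3d_out(d1)      # conv2_0
    d3 = conv3d_out(d2)      # conv3_0
    u2 = deconv3d_out(d3)    # conv4_0, added to conv2_1
    u1 = deconv3d_out(u2)    # conv5_0, added to conv1_1
    u0 = deconv3d_out(u1)    # conv6_0, added to conv0_1
    if (u2, u1, u0) != (d2, d1, n):
        raise ValueError("axis of length {} breaks the skip connections".format(n))
    return [n, d1, d2, d3, u2, u1, u0]


print(trace_axis(48))    # [48, 24, 12, 6, 12, 24, 48]
print(trace_axis(144))   # [144, 72, 36, 18, 36, 72, 144]
```

Under these assumptions a length that is not a multiple of 8, for example 50, fails the check, so the depth, height and width of the cost volume should each be divisible by 8.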

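Gauss-Newton refinement

The depth map is refined with a Gauss-Newton step. Feature pyramids (conv1 and conv2) are first extracted for every view: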

    if isGN:
        feature_pyramids = {}
        chosen_conv = ["conv1", "conv2"]
        for conv in chosen_conv:
            feature_pyramids[conv] = []
        for i in range(num_view):
            curr_img = img_list[:, i, :, :, :]
            curr_feature_pyramid = self.flow_img_conv(curr_img)
            for conv in chosen_conv:
                feature_pyramids[conv].append(curr_feature_pyramid[conv])

        for conv in chosen_conv:
            feature_pyramids[conv] = torch.stack(feature_pyramids[conv], dim=1)

        if isTest:
            for conv in chosen_conv:
                feature_pyramids[conv] = torch.detach(feature_pyramids[conv])
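For reference, one Gauss-Newton update for a single scalar depth d has the form d_new = d - (J^T r) / (J^T J), where r stacks the residuals and J their derivatives with respect to d. The sketch below shows only this update rule on a toy problem. In FastMVSNet the residuals would be feature differences between the source views and the reference view; here they are replaced by made-up linear residuals, and all function names are hypothetical.

```python
def gauss_newton_step(depth, residual_fn, jacobian_fn):
    """One Gauss-Newton update for a scalar depth.

    residual_fn(depth) returns the list of residuals r_k.
    jacobian_fn(depth) returns the list of derivatives d r_k / d depth.
    """
    r = residual_fn(depth)
    j = jacobian_fn(depth)
    jtj = sum(jk * jk for jk in j)                  # J^T J, a scalar here
    jtr = sum(jk * rk for jk, rk in zip(j, r))      # J^T r
    return depth - jtr / jtj


# Toy residuals r_k(d) = a_k * d - b_k, which all vanish at d = 2.
A = [1.0, 2.0]
B = [2.0, 4.0]


def residuals(d):
    return [a * d - b for a, b in zip(A, B)]


def jacobian(d):
    return list(A)


refined = gauss_newton_step(0.5, residuals, jacobian)
print(refined)   # 2.0
```

Because the toy residuals are linear in d, a single step lands on the least-squares solution; with real feature residuals the step is only a local approximation.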