deeplearning.35 Keras Getting-Started Tutorial and Residual Networks

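Keras Tutorial and Residual Networks

- Introduction to Keras
- Smile recognition with Keras
  - Import the required libraries
  - Problem description
  - Load the dataset
  - Build the model in Keras
  - Summary
- Residual networks
  - Import the required libraries
  - Problems with very deep networks
  - Build a residual network
  - Identity block
  - Convolutional block
  - Build a 50-layer residual network
  - Load the dataset
  - Train the model
  - Evaluate the model
  - Test the model
  - Summary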

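Introduction to Keras

Keras is a high-level neural-network API (programming framework) written in Python that can run on top of several lower-level frameworks, including TensorFlow and CNTK. Keras was developed so that deep-learning engineers can build and experiment with different models very quickly. Just as TensorFlow is a higher-level framework than Python, Keras is an even higher-level framework, and being able to go from idea to result with as little delay as possible is key to finding good models. However, Keras is more restrictive than the lower-level frameworks, so some very complex models that can be implemented in TensorFlow are harder to implement in Keras. That said, Keras works well for many common models.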

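Smile recognition with Keras

Download the dataset and the necessary files (download link). In PyCharm, create a new Python file, for example happy.py, and put the dataset and helper files in the same directory. Avoid naming the file keras.py: the script would then shadow the keras package, and `from keras import layers` would try to import the script itself.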

Import the required libraries.

```python
import numpy as np
from keras import layers
from keras.layers import Input, Dense, Activation, ZeroPadding2D, BatchNormalization, Flatten, Conv2D
from keras.layers import AveragePooling2D, MaxPooling2D, Dropout, GlobalMaxPooling2D, GlobalAveragePooling2D
from keras.models import Model
from keras.preprocessing import image
from keras.utils import layer_utils
from keras.utils.data_utils import get_file
from keras.applications.imagenet_utils import preprocess_input
import pydot
from IPython.display import SVG
from keras.utils.vis_utils import model_to_dot
from keras.utils.vis_utils import plot_model
import kt_utils
import keras.backend as K
K.set_image_data_format('channels_last')
import matplotlib.pyplot as plt
from matplotlib.pyplot import imshow
```

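Problem description

The task is to build a model that decides whether the person in an image is smiling. This is a binary classification problem, which is why the models below end in a single sigmoid unit and are trained with binary cross-entropy.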

Load the dataset

```python
# Load the dataset
X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = kt_utils.load_dataset()

# Normalize image vectors
X_train = X_train_orig / 255.
X_test = X_test_orig / 255.

# Reshape: transpose the labels so that each row is one example
Y_train = Y_train_orig.T
Y_test = Y_test_orig.T
```

Build the model in Keras

The following is an example of how to build a model in Keras; it is not used in the code of this exercise.

```python
def model(input_shape):
    """
    Model outline
    """
    # Define the input placeholder as a tensor with shape input_shape
    X_input = Input(input_shape)

    # Zero padding: pad the border of X_input with zeros
    X = ZeroPadding2D((3, 3))(X_input)

    # Apply a CONV -> BN -> RELU block to X
    X = Conv2D(32, (7, 7), strides=(1, 1), name='conv0')(X)
    X = BatchNormalization(axis=3, name='bn0')(X)
    X = Activation('relu')(X)

    # Max pooling layer
    X = MaxPooling2D((2, 2), name='max_pool')(X)

    # Flatten the tensor into a vector + fully connected layer
    X = Flatten()(X)
    X = Dense(1, activation='sigmoid', name='fc')(X)

    # Create the model: a Keras model instance that we can train and test
    model = Model(inputs=X_input, outputs=X, name='HappyModel')

    return model
```

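Note: Keras uses variable names differently from what we did before with NumPy and TensorFlow. Instead of creating and assigning a new variable at every step of forward propagation (X, Z1, A1, Z2, A2, and so on) for the computations of the different layers, in Keras we overwrite X with each new value and do not keep each layer's result, because only the latest value is needed. The only exception is X_input, which we keep separate because it is the input data and is needed in the final step that creates the model.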

Next, define the HappyModel() model:

```python
def HappyModel(input_shape):
    """
    Implement a model that detects smiles

    Arguments:
    input_shape - shape of the input data

    Returns:
    model - the Keras model that was created
    """
    # You can follow the outline above
    X_input = Input(input_shape)

    # Zero padding: pad the border of X_input with zeros
    X = ZeroPadding2D((3, 3))(X_input)

    # Apply a CONV -> BN -> RELU block to X
    X = Conv2D(32, (7, 7), strides=(1, 1), name='conv0')(X)
    X = BatchNormalization(axis=3, name='bn0')(X)
    X = Activation('relu')(X)

    # Max pooling layer
    X = MaxPooling2D((2, 2), name='max_pool')(X)

    # Flatten the tensor into a vector + fully connected layer
    X = Flatten()(X)
    X = Dense(1, activation='sigmoid', name='fc')(X)

    # Create the model: a Keras model instance that we can train and test
    model = Model(inputs=X_input, outputs=X, name='HappyModel')

    return model
```
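To see what each layer of HappyModel does to the tensor shape, here is a small pure-Python sketch that applies the standard output-size formula for "valid" convolution and pooling. It assumes a 64×64×3 input image (an assumption of this sketch; the real size comes from X_train.shape[1:]) and the Keras defaults of padding "valid" and MaxPooling2D strides equal to the pool size.

```python
def conv_out(n, f, s=1, p=0):
    """Output length along one spatial axis for a 'valid' conv/pool after padding p."""
    return (n + 2 * p - f) // s + 1


def happy_model_shapes(h=64, w=64, c=3):
    """Trace (height, width, channels) through the HappyModel layers."""
    shapes = [("input", (h, w, c))]
    # ZeroPadding2D((3, 3)): adds 3 pixels on each side of height and width
    h, w = h + 6, w + 6
    shapes.append(("zero_pad", (h, w, c)))
    # Conv2D(32, (7, 7), strides=(1, 1)) with the default 'valid' padding
    h, w, c = conv_out(h, 7), conv_out(w, 7), 32
    shapes.append(("conv0", (h, w, c)))
    # BatchNormalization and ReLU do not change the shape
    # MaxPooling2D((2, 2)): the stride defaults to the pool size
    h, w = conv_out(h, 2, s=2), conv_out(w, 2, s=2)
    shapes.append(("max_pool", (h, w, c)))
    # Flatten: one long vector per example
    shapes.append(("flatten", (h * w * c,)))
    # Dense(1, activation='sigmoid'): a single probability
    shapes.append(("fc", (1,)))
    return shapes


for name, shape in happy_model_shapes():
    print(name, shape)
```

Under that assumption the (3, 3) zero padding exactly compensates for the 7×7 kernel, and the Dense layer receives 32 × 32 × 32 = 32,768 features.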

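You have now built a function that describes the model. To train and test this model, Keras takes four steps:

- Create the model by calling the function above
- Compile the model by calling model.compile(optimizer = "…", loss = "…", metrics = ["accuracy"])
- Train the model by calling model.fit(x = …, y = …, epochs = …, batch_size = …)
- Test the model by calling model.evaluate(x = …, y = …)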

```python
# Create a model instance
happy_model = HappyModel(X_train.shape[1:])

# Compile the model
happy_model.compile("adam", "binary_crossentropy", metrics=['accuracy'])

# Train the model
# Note: this takes about 6-10 minutes.
happy_model.fit(X_train, Y_train, epochs=40, batch_size=50)

# Evaluate the model
preds = happy_model.evaluate(X_test, Y_test, batch_size=32, verbose=1, sample_weight=None)
print("Loss = " + str(preds[0]))
print("Accuracy = " + str(preds[1]))
```
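evaluate returns the loss followed by the metrics passed to compile, so preds[0] is the binary cross-entropy and preds[1] is the accuracy. The pure-Python sketch below shows what those two numbers measure for a few made-up predictions. Keras also clips probabilities away from 0 and 1 before taking the log; the EPS constant here is a hypothetical stand-in for that clipping.

```python
import math

EPS = 1e-7  # hypothetical clipping constant that keeps log() away from 0


def binary_crossentropy(y_true, y_pred):
    """Mean binary cross-entropy over all examples."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, EPS), 1 - EPS)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of examples whose thresholded prediction matches the label."""
    hits = sum(int((p > threshold) == bool(t)) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


# Made-up labels (1 = smiling) and sigmoid outputs for four images
labels = [1, 0, 1, 0]
probs = [0.9, 0.2, 0.4, 0.1]
print("loss =", binary_crossentropy(labels, probs))
print("accuracy =", binary_accuracy(labels, probs))
```

The third image is misclassified (0.4 falls below the 0.5 threshold), so the accuracy is 0.75, and its confident wrong answer contributes most of the loss.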

Summary

```python
import numpy as np
from keras import layers
from keras.layers import Input, Dense, Activation, ZeroPadding2D, BatchNormalization, Flatten, Conv2D
from keras.layers import AveragePooling2D, MaxPooling2D, Dropout, GlobalMaxPooling2D, GlobalAveragePooling2D
from keras.models import Model
from keras.preprocessing import image
from keras.utils import layer_utils
from keras.utils.data_utils import get_file
from keras.applications.imagenet_utils import preprocess_input
import pydot
from IPython.display import SVG
from keras.utils.vis_utils import model_to_dot
from keras.utils.vis_utils import plot_model
import kt_utils
import keras.backend as K
K.set_image_data_format('channels_last')
import matplotlib.pyplot as plt
from matplotlib.pyplot import imshow

# Load the dataset
X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = kt_utils.load_dataset()

# Normalize image vectors
X_train = X_train_orig / 255.
X_test = X_test_orig / 255.

# Reshape: transpose the labels so that each row is one example
Y_train = Y_train_orig.T
Y_test = Y_test_orig.T


# Define the model
def HappyModel(input_shape):
    """
    Implement a model that detects smiles

    Arguments:
    input_shape - shape of the input data

    Returns:
    model - the Keras model that was created
    """
    # You can follow the outline above
    X_input = Input(input_shape)

    # Zero padding: pad the border of X_input with zeros
    X = ZeroPadding2D((3, 3))(X_input)

    # Apply a CONV -> BN -> RELU block to X
    X = Conv2D(32, (7, 7), strides=(1, 1), name='conv0')(X)
    X = BatchNormalization(axis=3, name='bn0')(X)
    X = Activation('relu')(X)

    # Max pooling layer
    X = MaxPooling2D((2, 2), name='max_pool')(X)

    # Flatten the tensor into a vector + fully connected layer
    X = Flatten()(X)
    X = Dense(1, activation='sigmoid', name='fc')(X)

    # Create the model: a Keras model instance that we can train and test
    model = Model(inputs=X_input, outputs=X, name='HappyModel')

    return model


# Create a model instance
happy_model = HappyModel(X_train.shape[1:])

# Compile the model
happy_model.compile("adam", "binary_crossentropy", metrics=['accuracy'])

# Train the model
happy_model.fit(X_train, Y_train, epochs=40, batch_size=50)

# Evaluate the model
preds = happy_model.evaluate(X_test, Y_test, batch_size=32, verbose=1, sample_weight=None)
print("Loss = " + str(preds[0]))
print("Accuracy = " + str(preds[1]))
```

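Residual networks

In PyCharm, create a new file named cancha.py. The related materials are available from the download link. The code below uses the TensorFlow 1.x API (tf.Session, tf.placeholder, tf.reset_default_graph) together with the standalone Keras 2.x package, so it needs those versions to run.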

Import the required libraries

```python
import numpy as np
import tensorflow as tf
from keras import layers
from keras.layers import Input, Add, Dense, Activation, ZeroPadding2D, BatchNormalization, Flatten, Conv2D, AveragePooling2D, MaxPooling2D, GlobalMaxPooling2D
from keras.models import Model, load_model
from keras.preprocessing import image
from keras.utils import layer_utils
from keras.utils.data_utils import get_file
from keras.applications.imagenet_utils import preprocess_input
from keras.utils.vis_utils import model_to_dot
from keras.utils.vis_utils import plot_model
from keras.initializers import glorot_uniform
import pydot
from IPython.display import SVG
import imageio
from matplotlib.pyplot import imshow
import keras.backend as K
K.set_image_data_format('channels_last')
K.set_learning_phase(1)
import resnets_utils
from PIL import Image
import matplotlib.pyplot as plt  # plt is used to display images
```

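Problems with very deep networks

In recent years, convolutional neural networks have become deeper and deeper, growing from just a few layers (e.g. AlexNet) to more than a hundred layers. The main benefit of a very deep network is that it can represent very complex functions. It can also learn features at many different levels of abstraction, from edges (in the lower layers) to very complex features (in the deeper layers). However, a deeper network does not always help. A huge barrier to training one is vanishing gradients: in very deep networks the gradient signal often goes to zero quickly, which makes gradient descent extremely slow. More specifically, during backpropagation, as you go from the last layer back to the first, you multiply by the weight matrix at every step, so the gradient can shrink exponentially fast to zero (or, in rare cases, grow exponentially fast and "explode").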
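The "multiply by a weight matrix at every step" argument can be made concrete with scalars: if each of L layers scales the backward signal by the same factor w, the gradient reaching the first layer is w^L. This toy sketch (one scalar per layer and no activations, an intentional simplification) shows how quickly that factor vanishes for |w| < 1 and explodes for |w| > 1.

```python
def backprop_scale(w, depth):
    """Gradient factor reaching layer 1 when every layer multiplies by w."""
    grad = 1.0
    for _ in range(depth):
        grad *= w  # chain rule: one multiplication per layer
    return grad


for depth in (5, 20, 50):
    print(depth, backprop_scale(0.5, depth), backprop_scale(1.5, depth))
```

At depth 50 a factor of 0.5 per layer leaves a gradient below 1e-15, while 1.5 per layer inflates it beyond 1e8.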

Build a residual network

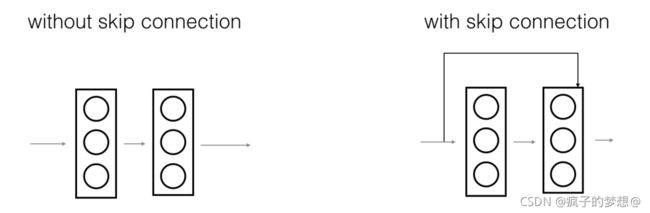

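In a residual network, a "shortcut" (or "skip connection") lets the gradient be backpropagated directly to earlier layers. The left image shows the "main path" through the network; the right image adds a shortcut to the main path. By stacking these ResNet blocks on top of each other, you can build a very deep network.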
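The same scalar toy model (an illustration, not how Keras computes gradients) shows why the shortcut helps. A residual block computes y = x + F(x), so its local derivative is 1 + F'(x): even when the learned branch F contributes almost nothing, the factor stays close to 1 instead of close to 0. Here each layer's branch is assumed, hypothetically, to be F(x) = w·x with a small w.

```python
def chain_gradient(local_grads):
    """Multiply per-layer local derivatives, as backpropagation does."""
    g = 1.0
    for d in local_grads:
        g *= d
    return g


depth, w = 50, 0.01  # hypothetical: 50 layers with a small branch weight
plain = chain_gradient([w] * depth)         # y = w * x      -> dy/dx = w
residual = chain_gradient([1 + w] * depth)  # y = x + w * x  -> dy/dx = 1 + w
print("plain network:   ", plain)
print("residual network:", residual)
```

The plain chain ends up around 1e-100, while the residual chain stays near 1.64, so the earliest layers still receive a usable gradient.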

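Identity block

The identity block is the standard block used in ResNets. It corresponds to the case where the input activation (say $a^{[l]}$) has the same dimensions as the output activation at the end of the block. In the figure, the curved path on top is the shortcut and the straight path below is the main path. Each step contains a CONV2D and a ReLU, and to speed up training each step also normalizes the data with BatchNorm. Don't be put off by this: Keras already implements these layers, and calling BatchNorm takes a single line of code.

First component of the main path:

- The first CONV2D has F1 filters of shape (1,1) and a stride of (1,1). Its padding is "valid" and its name should be conv_name_base + '2a'. Use 0 as the seed for the random initialization.
- The first BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2a'.
- Then apply the ReLU activation function.

Second component of the main path:

- The second CONV2D has F2 filters of shape (f,f) and a stride of (1,1). Its padding is "same" and its name should be conv_name_base + '2b'. Use 0 as the seed for the random initialization.
- The second BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2b'.
- Then apply the ReLU activation function.

Third component of the main path:

- The third CONV2D has F3 filters of shape (1,1) and a stride of (1,1). Its padding is "valid" and its name should be conv_name_base + '2c'. Use 0 as the seed for the random initialization.
- The third BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2c'. Note that there is no ReLU activation in this component.

Final step:

- Add the shortcut and the output of the main path together.
- Then apply the ReLU activation function.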
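The identity block only works when the main path ends with the same shape it started with, because Add requires identical shapes. This pure-Python sketch traces (height, width, channels) through the three convolutions listed above and checks that; the input shape and filter counts in the example call are illustrative.

```python
def conv_shape(shape, filters, f, s, padding):
    """Output (H, W, C) of a Conv2D with an f x f kernel and stride s."""
    h, w, _ = shape
    if padding == "same":
        h, w = -(-h // s), -(-w // s)  # ceil(h / s)
    else:  # "valid"
        h, w = (h - f) // s + 1, (w - f) // s + 1
    return (h, w, filters)


def identity_block_shape(shape, f, filters):
    """Trace the identity block's main path and check it matches the shortcut."""
    F1, F2, F3 = filters
    x = conv_shape(shape, F1, 1, 1, "valid")  # 2a: 1x1 conv
    x = conv_shape(x, F2, f, 1, "same")       # 2b: f x f conv, size preserved
    x = conv_shape(x, F3, 1, 1, "valid")      # 2c: 1x1 conv to F3 channels
    if x != shape:
        raise ValueError(f"Add() needs equal shapes, got {x} vs {shape}")
    return x


print(identity_block_shape((15, 15, 256), f=3, filters=[64, 64, 256]))
```

This is why F3 must equal the number of input channels in an identity block; when it does not, the convolutional block is needed instead.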

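Next we implement the identity block of the residual network. Be sure to consult the following entries of the (Chinese) Keras documentation:

- Implementing Conv2D: see the Conv2D documentation
- Implementing BatchNorm: see the BatchNormalization documentation
- Implementing the activation: use Activation('relu')(X)
- Adding the value passed through the shortcut: see the Add documentation

Define the identity block function. Here the main path has three components.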

```python
# Define the identity block function
def identity_block(X, f, filters, stage, block):
    """
    Implement the identity block shown in Figure 3

    Arguments:
    X - input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    f - integer, the window size of the middle CONV layer of the main path
    filters - list of integers, the number of filters in each CONV layer of the main path
    stage - integer, used together with block to name the layers according to their position
    block - string, used together with stage to name the layers according to their position

    Returns:
    X - output of the identity block, a tensor of shape (n_H, n_W, n_C)
    """
    # Naming convention
    conv_name_base = "res" + str(stage) + block + "_branch"
    bn_name_base = "bn" + str(stage) + block + "_branch"

    # Retrieve the filters
    F1, F2, F3 = filters

    # Save the input value; it is added back to the main path through the shortcut
    X_shortcut = X

    # First component of the main path
    ## Convolution
    X = Conv2D(filters=F1, kernel_size=(1, 1), strides=(1, 1), padding="valid",
               name=conv_name_base + "2a", kernel_initializer=glorot_uniform(seed=0))(X)
    ## Batch normalization
    X = BatchNormalization(axis=3, name=bn_name_base + "2a")(X)
    ## ReLU activation
    X = Activation("relu")(X)

    # Second component of the main path
    ## Convolution
    X = Conv2D(filters=F2, kernel_size=(f, f), strides=(1, 1), padding="same",
               name=conv_name_base + "2b", kernel_initializer=glorot_uniform(seed=0))(X)
    ## Batch normalization
    X = BatchNormalization(axis=3, name=bn_name_base + "2b")(X)
    ## ReLU activation
    X = Activation("relu")(X)

    # Third component of the main path
    ## Convolution
    X = Conv2D(filters=F3, kernel_size=(1, 1), strides=(1, 1), padding="valid",
               name=conv_name_base + "2c", kernel_initializer=glorot_uniform(seed=0))(X)
    ## Batch normalization
    X = BatchNormalization(axis=3, name=bn_name_base + "2c")(X)
    ## No ReLU activation here

    # Final step:
    ## Add the shortcut value to the main path
    X = Add()([X, X_shortcut])
    ## ReLU activation
    X = Activation("relu")(X)

    return X
```

```python
# Quick test
tf.reset_default_graph()

with tf.Session() as test:  # the with statement closes the session on exit
    np.random.seed(1)
    A_prev = tf.placeholder("float", [3, 4, 4, 6])
    X = np.random.randn(3, 4, 4, 6)
    A = identity_block(A_prev, f=2, filters=[2, 4, 6], stage=1, block="a")
    test.run(tf.global_variables_initializer())
    out = test.run([A], feed_dict={A_prev: X, K.learning_phase(): 0})
    print("out = " + str(out[0][1][1][0]))
```

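Convolutional block

The convolutional block is the other type of residual block in a ResNet. It is used when the input and output dimensions don't match. It differs from the identity block above in that the shortcut path contains a CONV2D layer.

The CONV2D layer in the shortcut path resizes the input x to a different dimension, so that the dimensions match in the final addition with the main path. For example, to halve the height and width of the activations, you can use a 1x1 convolution with a stride of 2. The CONV2D layer on the shortcut path does not use any non-linear activation function: its main role is just to apply a (learned) linear function that reduces the dimensions of the input, so that they match in the later addition step.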
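The halving follows from the usual output-size formula for a convolution with kernel size $f$, padding $p$ and stride $s$ applied to an input of size $n$. With a 1×1 kernel, no padding ("valid") and a stride of 2:

$$
n_{\text{out}} = \left\lfloor \frac{n + 2p - f}{s} \right\rfloor + 1
\quad\Longrightarrow\quad
n_{\text{out}} = \left\lfloor \frac{n - 1}{2} \right\rfloor + 1 = \left\lceil \frac{n}{2} \right\rceil
$$

For example, $n = 64$ gives $32$ and an odd size such as $n = 15$ gives $8$.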

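First component of the main path:

- The first CONV2D has F1 filters of shape (1,1) and a stride of (s,s). Its padding is "valid" and its name should be conv_name_base + '2a'.
- The first BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2a'.
- Then apply the ReLU activation function.

Second component of the main path:

- The second CONV2D has F2 filters of shape (f,f) and a stride of (1,1). Its padding is "same" and its name should be conv_name_base + '2b'.
- The second BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2b'.
- Then apply the ReLU activation function.

Third component of the main path:

- The third CONV2D has F3 filters of shape (1,1) and a stride of (1,1). Its padding is "valid" and its name should be conv_name_base + '2c'.
- The third BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2c'. Note that there is no ReLU activation in this component.

Shortcut path:

- The CONV2D has F3 filters of shape (1,1) and a stride of (s,s). Its padding is "valid" and its name should be conv_name_base + '1'.
- The BatchNorm normalizes the channels axis. Its name should be bn_name_base + '1'.

Final step:

- Add the shortcut path and the main path together.
- Then apply the ReLU activation function.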
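Both paths of the convolutional block must still agree at the Add step. This pure-Python sketch traces the main path and the shortcut with the output-size formula; the (15, 15, 256) input and the stage-3-style filter counts in the example call are illustrative assumptions.

```python
def conv_shape(shape, filters, f, s, padding):
    """Output (H, W, C) of a Conv2D with an f x f kernel and stride s."""
    h, w, _ = shape
    if padding == "same":
        h, w = -(-h // s), -(-w // s)  # ceil(h / s)
    else:  # "valid"
        h, w = (h - f) // s + 1, (w - f) // s + 1
    return (h, w, filters)


def convolutional_block_shape(shape, f, filters, s=2):
    """Trace both paths of the convolutional block; return the Add output shape."""
    F1, F2, F3 = filters
    main = conv_shape(shape, F1, 1, s, "valid")   # 2a: 1x1 conv, stride s
    main = conv_shape(main, F2, f, 1, "same")     # 2b: f x f conv, size kept
    main = conv_shape(main, F3, 1, 1, "valid")    # 2c: 1x1 conv to F3 channels
    short = conv_shape(shape, F3, 1, s, "valid")  # shortcut '1': 1x1 conv, stride s
    if main != short:
        raise ValueError(f"Add() needs equal shapes, got {main} vs {short}")
    return main


print(convolutional_block_shape((15, 15, 256), f=3, filters=[128, 128, 512], s=2))
```

Because the shortcut uses the same stride s and the same F3 as the main path, the two shapes always match, whatever the input channel count.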

Define the convolutional block function

```python
# Define the convolutional block function
def convolutional_block(X, f, filters, stage, block, s=2):
    """
    Implement the convolutional block shown in Figure 5

    Arguments:
    X - input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    f - integer, the window size of the middle CONV layer of the main path
    filters - list of integers, the number of filters in each CONV layer of the main path
    stage - integer, used together with block to name the layers according to their position
    block - string, used together with stage to name the layers according to their position
    s - integer, the stride to use

    Returns:
    X - output of the convolutional block, a tensor of shape (n_H, n_W, n_C)
    """
    # Naming convention
    conv_name_base = "res" + str(stage) + block + "_branch"
    bn_name_base = "bn" + str(stage) + block + "_branch"

    # Retrieve the number of filters
    F1, F2, F3 = filters

    # Save the input value
    X_shortcut = X

    # Main path
    ## First component of the main path
    X = Conv2D(filters=F1, kernel_size=(1, 1), strides=(s, s), padding="valid",
               name=conv_name_base + "2a", kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + "2a")(X)
    X = Activation("relu")(X)

    ## Second component of the main path
    X = Conv2D(filters=F2, kernel_size=(f, f), strides=(1, 1), padding="same",
               name=conv_name_base + "2b", kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + "2b")(X)
    X = Activation("relu")(X)

    ## Third component of the main path (no ReLU)
    X = Conv2D(filters=F3, kernel_size=(1, 1), strides=(1, 1), padding="valid",
               name=conv_name_base + "2c", kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + "2c")(X)

    # Shortcut path
    X_shortcut = Conv2D(filters=F3, kernel_size=(1, 1), strides=(s, s), padding="valid",
                        name=conv_name_base + "1", kernel_initializer=glorot_uniform(seed=0))(X_shortcut)
    X_shortcut = BatchNormalization(axis=3, name=bn_name_base + "1")(X_shortcut)

    # Final step
    X = Add()([X, X_shortcut])
    X = Activation("relu")(X)

    return X
```

```python
# Quick test
tf.reset_default_graph()

with tf.Session() as test:  # the with statement closes the session on exit
    np.random.seed(1)
    A_prev = tf.placeholder("float", [3, 4, 4, 6])
    X = np.random.randn(3, 4, 4, 6)
    A = convolutional_block(A_prev, f=2, filters=[2, 4, 6], stage=1, block="a")
    test.run(tf.global_variables_initializer())
    out = test.run([A], feed_dict={A_prev: X, K.learning_phase(): 0})
    print("out = " + str(out[0][1][1][0]))
```

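Build a 50-layer residual network

We have now built all the residual blocks we need. The ResNet-50 architecture diagram lays out the details of the network: in it, "ID BLOCK" stands for a standard identity block, and "ID BLOCK x3" means three identity blocks stacked together.

The detailed structure of this ResNet-50 model is:

- Zero-pad the input with a pad of (3,3).
- Stage 1:
  - The 2D convolution has 64 filters of shape (7,7) and uses a stride of (2,2). Its name is "conv1".
  - BatchNorm is applied to the channels axis of the input, followed by a ReLU activation.
  - MaxPooling uses a (3,3) window and a (2,2) stride.
- Stage 2:
  - The convolutional block uses three sets of filters of size [64, 64, 256], "f" is 3, "s" is 1 and the block is "a".
  - The 2 identity blocks use three sets of filters of size [64, 64, 256], "f" is 3 and the blocks are "b" and "c".
- Stage 3:
  - The convolutional block uses three sets of filters of size [128, 128, 512], "f" is 3, "s" is 2 and the block is "a".
  - The 3 identity blocks use three sets of filters of size [128, 128, 512], "f" is 3 and the blocks are "b", "c" and "d".
- Stage 4:
  - The convolutional block uses three sets of filters of size [256, 256, 1024], "f" is 3, "s" is 2 and the block is "a".
  - The 5 identity blocks use three sets of filters of size [256, 256, 1024], "f" is 3 and the blocks are "b", "c", "d", "e" and "f".
- Stage 5:
  - The convolutional block uses three sets of filters of size [512, 512, 2048], "f" is 3, "s" is 2 and the block is "a".
  - The 2 identity blocks use three sets of filters of size [512, 512, 2048], "f" is 3 and the blocks are "b" and "c".
- The 2D average pooling uses a window of shape (2,2) and its name is "avg_pool".
- The Flatten layer has no hyperparameters or name.
- The fully connected (Dense) layer reduces its input to the number of classes using a softmax activation. Its name is 'fc' + str(classes).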
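As a sanity check on the structure above, this pure-Python sketch walks a 64×64 input (the image size used in this exercise) through the stages with the output-size formula, and counts the layers that carry weights: the stem convolution, 3 convolutions in each of the 3 + 4 + 6 + 3 = 16 residual blocks, and the final Dense layer. Following the usual convention, the 1×1 shortcut convolutions are not counted.

```python
def out_len(n, f, s, padding="valid"):
    """Output length along one spatial axis of a conv/pool layer."""
    if padding == "same":
        return -(-n // s)  # ceil(n / s)
    return (n - f) // s + 1


def resnet50_trace(size=64):
    """Return the spatial size after each stage and the count of weighted layers."""
    trace = {}
    n = size + 2 * 3              # ZeroPadding2D((3, 3))
    n = out_len(n, 7, 2)          # conv1: 7x7, stride 2, 'valid'
    n = out_len(n, 3, 2)          # MaxPooling2D (3, 3), stride 2
    trace["stage1"] = n
    # (stride of the convolutional block, number of identity blocks) per stage
    stages = {"stage2": (1, 2), "stage3": (2, 3), "stage4": (2, 5), "stage5": (2, 2)}
    blocks = 0
    for name, (s, n_id) in stages.items():
        n = out_len(n, 1, s)      # first 1x1 conv (and the shortcut) use stride s
        blocks += 1 + n_id        # one convolutional block + its identity blocks
        trace[name] = n
    n = out_len(n, 2, 2, "same")  # AveragePooling2D (2, 2), padding 'same'
    trace["avg_pool"] = n
    layers = 1 + 3 * blocks + 1   # conv1 + 3 convs per block + Dense
    return trace, layers


trace, layers = resnet50_trace()
print(trace)  # height (= width) after each step
print("weighted layers:", layers)
```

The spatial size goes 64 → 15 → 15 → 8 → 4 → 2 → 1, so the Flatten layer sees 1 × 1 × 2048 = 2048 values, and the weighted-layer count comes out at exactly 50.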

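To implement this 50-layer residual network, look up how the following work in the (Chinese) Keras documentation:

- Average pooling layer: AveragePooling2D
- Conv2D
- BatchNorm: BatchNormalization
- Zero padding: ZeroPadding2D
- Max pooling layer: MaxPooling2D
- Fully connected layer: Dense
- Adding the value passed through the shortcut: Add

Define the 50-layer residual network.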

```python
# Define the 50-layer residual network
def ResNet50(input_shape=(64, 64, 3), classes=6):
    """
    Implement ResNet50
    CONV2D -> BATCHNORM -> RELU -> MAXPOOL -> CONVBLOCK -> IDBLOCK*2 -> CONVBLOCK -> IDBLOCK*3
    -> CONVBLOCK -> IDBLOCK*5 -> CONVBLOCK -> IDBLOCK*2 -> AVGPOOL -> TOPLAYER

    Arguments:
    input_shape - shape of the images in the dataset
    classes - integer, number of classes

    Returns:
    model - a Keras model
    """
    # Define the input as a tensor
    X_input = Input(input_shape)

    # Zero padding
    X = ZeroPadding2D((3, 3))(X_input)

    # Stage 1
    X = Conv2D(filters=64, kernel_size=(7, 7), strides=(2, 2), name="conv1",
               kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name="bn_conv1")(X)
    X = Activation("relu")(X)
    X = MaxPooling2D(pool_size=(3, 3), strides=(2, 2))(X)

    # Stage 2
    X = convolutional_block(X, f=3, filters=[64, 64, 256], stage=2, block="a", s=1)
    X = identity_block(X, f=3, filters=[64, 64, 256], stage=2, block="b")
    X = identity_block(X, f=3, filters=[64, 64, 256], stage=2, block="c")

    # Stage 3
    X = convolutional_block(X, f=3, filters=[128, 128, 512], stage=3, block="a", s=2)
    X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block="b")
    X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block="c")
    X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block="d")

    # Stage 4
    X = convolutional_block(X, f=3, filters=[256, 256, 1024], stage=4, block="a", s=2)
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block="b")
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block="c")
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block="d")
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block="e")
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block="f")

    # Stage 5
    X = convolutional_block(X, f=3, filters=[512, 512, 2048], stage=5, block="a", s=2)
    X = identity_block(X, f=3, filters=[512, 512, 2048], stage=5, block="b")
    X = identity_block(X, f=3, filters=[512, 512, 2048], stage=5, block="c")

    # Average pooling layer
    X = AveragePooling2D(pool_size=(2, 2), padding="same", name="avg_pool")(X)

    # Output layer
    X = Flatten()(X)
    X = Dense(classes, activation="softmax", name="fc" + str(classes),
              kernel_initializer=glorot_uniform(seed=0))(X)

    # Create the model
    model = Model(inputs=X_input, outputs=X, name="ResNet50")

    return model
```

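Run the following code to build the model graph.

```python
model = ResNet50(input_shape=(64, 64, 3), classes=6)
```

Before training the model, you need to configure the learning process by compiling it.

```python
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```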

Load the dataset

```python
X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = resnets_utils.load_dataset()

# Normalize image vectors
X_train = X_train_orig / 255.
X_test = X_test_orig / 255.

# Convert training and test labels to one hot matrices
Y_train = resnets_utils.convert_to_one_hot(Y_train_orig, 6).T
Y_test = resnets_utils.convert_to_one_hot(Y_test_orig, 6).T

print("number of training examples = " + str(X_train.shape[0]))
print("number of test examples = " + str(X_test.shape[0]))
print("X_train shape: " + str(X_train.shape))
print("Y_train shape: " + str(Y_train.shape))
print("X_test shape: " + str(X_test.shape))
print("Y_test shape: " + str(Y_test.shape))
```
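resnets_utils.convert_to_one_hot ships with the exercise files and its code is not shown here. Judging by the .T that follows it, it returns a (C, m) one-hot matrix that is then transposed to (m, C), so that each row lines up with the 6-way softmax output. The helper below, one_hot_rows, is a hypothetical pure-Python equivalent of that combined step, for illustration only.

```python
def one_hot_rows(labels, num_classes):
    """Hypothetical helper: map integer labels to one-hot rows, shape (m, C)."""
    rows = []
    for label in labels:
        if not 0 <= label < num_classes:
            raise ValueError(f"label {label} out of range")
        row = [0.0] * num_classes
        row[label] = 1.0  # a single 1 at the label's index
        rows.append(row)
    return rows


print(one_hot_rows([2, 0, 5], 6))
```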

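Train the model

Run the following code to train the model for 2 epochs with a batch size of 32. On a CPU, each epoch takes about 5 minutes.

```python
model.fit(X_train, Y_train, epochs=2, batch_size=32)
```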

Evaluate the model

```python
preds = model.evaluate(X_test, Y_test)
print("Loss = " + str(preds[0]))
print("Accuracy = " + str(preds[1]))
```
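With a softmax output and one-hot labels, the two numbers printed here are the categorical cross-entropy (preds[0]) and the accuracy (preds[1]), where a prediction counts as correct when its most probable class is the true class. A pure-Python sketch with made-up probabilities for two test images:

```python
import math


def categorical_crossentropy(y_true, y_pred):
    """Mean over examples of -sum(t * log(p)) for one-hot labels t."""
    total = 0.0
    for t_row, p_row in zip(y_true, y_pred):
        total += -sum(t * math.log(p) for t, p in zip(t_row, p_row) if t > 0)
    return total / len(y_true)


def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])


def categorical_accuracy(y_true, y_pred):
    """Share of examples whose highest-probability class equals the label."""
    hits = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


# Made-up one-hot labels and softmax outputs for 6 classes
y_true = [[0, 0, 1, 0, 0, 0], [1, 0, 0, 0, 0, 0]]
y_pred = [[0.05, 0.05, 0.7, 0.1, 0.05, 0.05], [0.2, 0.5, 0.1, 0.1, 0.05, 0.05]]
print("loss =", categorical_crossentropy(y_true, y_pred))
print("accuracy =", categorical_accuracy(y_true, y_pred))
```

Only the probability assigned to the true class enters the loss, which is why the second example (0.2 on the true class) dominates it.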

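If you like, you can also train the ResNet for more epochs. Performance improves noticeably at around 20 epochs, but training on a CPU will then take more than an hour. A GPU speeds up training considerably.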

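Test the model

To test the model on your own picture, append the following code:

```python
# Test on your own picture
img_path = 'my_image.jpg'
my_image = image.load_img(img_path, target_size=(64, 64))
my_image = image.img_to_array(my_image)
my_image = np.expand_dims(my_image, axis=0)
# Scale the pixels the same way as the training data (X / 255.).
# imagenet_utils.preprocess_input would apply ImageNet mean subtraction instead,
# which does not match how this model was trained.
my_image = my_image / 255.
print("my_image.shape = " + str(my_image.shape))
print("class prediction vector [p(0), p(1), p(2), p(3), p(4), p(5)] = ")
print(model.predict(my_image))

my_image = imageio.imread(img_path)
plt.imshow(my_image)
plt.show()  # needed to open the figure window when running as a script
```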

Summary

The complete code of cancha.py:

```python
import numpy as np
import tensorflow as tf
from keras import layers
from keras.layers import Input, Add, Dense, Activation, ZeroPadding2D, BatchNormalization, Flatten, Conv2D, AveragePooling2D, MaxPooling2D, GlobalMaxPooling2D
from keras.models import Model, load_model
from keras.preprocessing import image
from keras.utils import layer_utils
from keras.utils.data_utils import get_file
from keras.applications.imagenet_utils import preprocess_input
from keras.utils.vis_utils import model_to_dot
from keras.utils.vis_utils import plot_model
from keras.initializers import glorot_uniform
import pydot
from IPython.display import SVG
import imageio
from matplotlib.pyplot import imshow
import keras.backend as K
K.set_image_data_format('channels_last')
K.set_learning_phase(1)
import resnets_utils
from PIL import Image
import matplotlib.pyplot as plt  # plt is used to display images


# Define the identity block function
def identity_block(X, f, filters, stage, block):
    """
    Implement the identity block shown in Figure 3

    Arguments:
    X - input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    f - integer, the window size of the middle CONV layer of the main path
    filters - list of integers, the number of filters in each CONV layer of the main path
    stage - integer, used together with block to name the layers according to their position
    block - string, used together with stage to name the layers according to their position

    Returns:
    X - output of the identity block, a tensor of shape (n_H, n_W, n_C)
    """
    # Naming convention
    conv_name_base = "res" + str(stage) + block + "_branch"
    bn_name_base = "bn" + str(stage) + block + "_branch"

    # Retrieve the filters
    F1, F2, F3 = filters

    # Save the input value; it is added back to the main path through the shortcut
    X_shortcut = X

    # First component of the main path
    ## Convolution
    X = Conv2D(filters=F1, kernel_size=(1, 1), strides=(1, 1), padding="valid",
               name=conv_name_base + "2a", kernel_initializer=glorot_uniform(seed=0))(X)
    ## Batch normalization
    X = BatchNormalization(axis=3, name=bn_name_base + "2a")(X)
    ## ReLU activation
    X = Activation("relu")(X)

    # Second component of the main path
    ## Convolution
    X = Conv2D(filters=F2, kernel_size=(f, f), strides=(1, 1), padding="same",
               name=conv_name_base + "2b", kernel_initializer=glorot_uniform(seed=0))(X)
    ## Batch normalization
    X = BatchNormalization(axis=3, name=bn_name_base + "2b")(X)
    ## ReLU activation
    X = Activation("relu")(X)

    # Third component of the main path
    ## Convolution
    X = Conv2D(filters=F3, kernel_size=(1, 1), strides=(1, 1), padding="valid",
               name=conv_name_base + "2c", kernel_initializer=glorot_uniform(seed=0))(X)
    ## Batch normalization
    X = BatchNormalization(axis=3, name=bn_name_base + "2c")(X)
    ## No ReLU activation here

    # Final step:
    ## Add the shortcut value to the main path
    X = Add()([X, X_shortcut])
    ## ReLU activation
    X = Activation("relu")(X)

    return X


# # Quick test
# tf.reset_default_graph()
# with tf.Session() as test:
#     np.random.seed(1)
#     A_prev = tf.placeholder("float", [3, 4, 4, 6])
#     X = np.random.randn(3, 4, 4, 6)
#     A = identity_block(A_prev, f=2, filters=[2, 4, 6], stage=1, block="a")
#     test.run(tf.global_variables_initializer())
#     out = test.run([A], feed_dict={A_prev: X, K.learning_phase(): 0})
#     print("out = " + str(out[0][1][1][0]))


# Define the convolutional block function
def convolutional_block(X, f, filters, stage, block, s=2):
    """
    Implement the convolutional block shown in Figure 5

    Arguments:
    X - input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    f - integer, the window size of the middle CONV layer of the main path
    filters - list of integers, the number of filters in each CONV layer of the main path
    stage - integer, used together with block to name the layers according to their position
    block - string, used together with stage to name the layers according to their position
    s - integer, the stride to use

    Returns:
    X - output of the convolutional block, a tensor of shape (n_H, n_W, n_C)
    """
    # Naming convention
    conv_name_base = "res" + str(stage) + block + "_branch"
    bn_name_base = "bn" + str(stage) + block + "_branch"

    # Retrieve the number of filters
    F1, F2, F3 = filters

    # Save the input value
    X_shortcut = X

    # Main path
    ## First component of the main path
    X = Conv2D(filters=F1, kernel_size=(1, 1), strides=(s, s), padding="valid",
               name=conv_name_base + "2a", kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + "2a")(X)
    X = Activation("relu")(X)

    ## Second component of the main path
    X = Conv2D(filters=F2, kernel_size=(f, f), strides=(1, 1), padding="same",
               name=conv_name_base + "2b", kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + "2b")(X)
    X = Activation("relu")(X)

    ## Third component of the main path (no ReLU)
    X = Conv2D(filters=F3, kernel_size=(1, 1), strides=(1, 1), padding="valid",
               name=conv_name_base + "2c", kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name=bn_name_base + "2c")(X)

    # Shortcut path
    X_shortcut = Conv2D(filters=F3, kernel_size=(1, 1), strides=(s, s), padding="valid",
                        name=conv_name_base + "1", kernel_initializer=glorot_uniform(seed=0))(X_shortcut)
    X_shortcut = BatchNormalization(axis=3, name=bn_name_base + "1")(X_shortcut)

    # Final step
    X = Add()([X, X_shortcut])
    X = Activation("relu")(X)

    return X


# # Quick test
# tf.reset_default_graph()
# with tf.Session() as test:
#     np.random.seed(1)
#     A_prev = tf.placeholder("float", [3, 4, 4, 6])
#     X = np.random.randn(3, 4, 4, 6)
#     A = convolutional_block(A_prev, f=2, filters=[2, 4, 6], stage=1, block="a")
#     test.run(tf.global_variables_initializer())
#     out = test.run([A], feed_dict={A_prev: X, K.learning_phase(): 0})
#     print("out = " + str(out[0][1][1][0]))


# Define the 50-layer residual network
def ResNet50(input_shape=(64, 64, 3), classes=6):
    """
    Implement ResNet50
    CONV2D -> BATCHNORM -> RELU -> MAXPOOL -> CONVBLOCK -> IDBLOCK*2 -> CONVBLOCK -> IDBLOCK*3
    -> CONVBLOCK -> IDBLOCK*5 -> CONVBLOCK -> IDBLOCK*2 -> AVGPOOL -> TOPLAYER

    Arguments:
    input_shape - shape of the images in the dataset
    classes - integer, number of classes

    Returns:
    model - a Keras model
    """
    # Define the input as a tensor
    X_input = Input(input_shape)

    # Zero padding
    X = ZeroPadding2D((3, 3))(X_input)

    # Stage 1
    X = Conv2D(filters=64, kernel_size=(7, 7), strides=(2, 2), name="conv1",
               kernel_initializer=glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis=3, name="bn_conv1")(X)
    X = Activation("relu")(X)
    X = MaxPooling2D(pool_size=(3, 3), strides=(2, 2))(X)

    # Stage 2
    X = convolutional_block(X, f=3, filters=[64, 64, 256], stage=2, block="a", s=1)
    X = identity_block(X, f=3, filters=[64, 64, 256], stage=2, block="b")
    X = identity_block(X, f=3, filters=[64, 64, 256], stage=2, block="c")

    # Stage 3
    X = convolutional_block(X, f=3, filters=[128, 128, 512], stage=3, block="a", s=2)
    X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block="b")
    X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block="c")
    X = identity_block(X, f=3, filters=[128, 128, 512], stage=3, block="d")

    # Stage 4
    X = convolutional_block(X, f=3, filters=[256, 256, 1024], stage=4, block="a", s=2)
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block="b")
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block="c")
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block="d")
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block="e")
    X = identity_block(X, f=3, filters=[256, 256, 1024], stage=4, block="f")

    # Stage 5
    X = convolutional_block(X, f=3, filters=[512, 512, 2048], stage=5, block="a", s=2)
    X = identity_block(X, f=3, filters=[512, 512, 2048], stage=5, block="b")
    X = identity_block(X, f=3, filters=[512, 512, 2048], stage=5, block="c")

    # Average pooling layer
    X = AveragePooling2D(pool_size=(2, 2), padding="same", name="avg_pool")(X)

    # Output layer
    X = Flatten()(X)
    X = Dense(classes, activation="softmax", name="fc" + str(classes),
              kernel_initializer=glorot_uniform(seed=0))(X)

    # Create the model
    model = Model(inputs=X_input, outputs=X, name="ResNet50")

    return model


# Build the model graph
model = ResNet50(input_shape=(64, 64, 3), classes=6)

# Compile the model to configure the learning process
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Load the dataset
X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = resnets_utils.load_dataset()

# Normalize image vectors
X_train = X_train_orig / 255.
X_test = X_test_orig / 255.

# Convert training and test labels to one hot matrices
Y_train = resnets_utils.convert_to_one_hot(Y_train_orig, 6).T
Y_test = resnets_utils.convert_to_one_hot(Y_test_orig, 6).T

print("number of training examples = " + str(X_train.shape[0]))
print("number of test examples = " + str(X_test.shape[0]))
print("X_train shape: " + str(X_train.shape))
print("Y_train shape: " + str(Y_train.shape))
print("X_test shape: " + str(X_test.shape))
print("Y_test shape: " + str(Y_test.shape))

# Train the model
model.fit(X_train, Y_train, epochs=2, batch_size=32)

# Evaluate the model
preds = model.evaluate(X_test, Y_test)
print("Loss = " + str(preds[0]))
print("Accuracy = " + str(preds[1]))

# Test on your own picture
img_path = 'my_image.jpg'
my_image = image.load_img(img_path, target_size=(64, 64))
my_image = image.img_to_array(my_image)
my_image = np.expand_dims(my_image, axis=0)
# Scale the pixels the same way as the training data (X / 255.)
my_image = my_image / 255.
print("my_image.shape = " + str(my_image.shape))
print("class prediction vector [p(0), p(1), p(2), p(3), p(4), p(5)] = ")
print(model.predict(my_image))

my_image = imageio.imread(img_path)
plt.imshow(my_image)
plt.show()  # needed to open the figure window when running as a script
```