论文学习——StyleGan原文精读

原论文地址:https://arxiv.org/pdf/1812.04948.pdf

原论文及翻译,参考研究,能力有限,不一定每句话都准确,欢迎讨论

A Style-Based Generator Architecture for Generative Adversarial Networks

生成对抗网络中一种基于样式的生成器结构

目录

A Style-Based Generator Architecture for Generative Adversarial Networks

生成对抗网络中一种基于样式的生成器结构

Abstract摘 要

1.Introduction介 绍

2.Style-based generator基于样式的生成器

2.1.Quality of generated images生成图像的质量

2.2.Prior art现有技术

3.Properties of the style-based generator基于样式的生成器的属性

3.1.Style mixing样式混合

3.2.Stochastic variation随机变化

3.3.Separation of global effects from stochasticity 从随机性中分离全局效应

4.Disentanglement studies解缠研究

4.1.Perceptual path length感知路径长度

4.2.Linear separability线性可分性

5.Conclusion结论

6.Acknowledgements致谢

A. The FFHQ dataset FFHQ 数据集

B. Truncation trick in W ,W 中的截断技巧

C. Hyperparameters and training details超参数和训练细节

D. Training convergence训练融合

E. Other datasets其他数据集

References参考文献

说明:文中[...]中的数字表示论文作者在此处引用的文献对应的标号,可在原论文中查找相关文献说明。

Abstract摘 要

We propose an alternative generator architecture for generative adversarial networks, borrowing from style transfer literature. The new architecture leads to an automatically learned, unsupervised separation of high-level attributes (e.g., pose and identity when trained on human

faces) and stochastic variation in the generated images (e.g., freckles, hair), and it enables intuitive, scale-specific control of the synthesis. The new generator improves the state-of-the-art in terms of traditional distribution quality metrics, leads to demonstrably better interpolation properties, and also better disentangles the latent factors of variation. To quantify interpolation quality and disentanglement, we propose two new, automated methods that are applicable to any generator architecture. Finally, we introduce a new, highly varied and high-quality dataset of human faces.

我们提出了一种用于生成对抗网络的新生成器体系结构,借鉴了样式转移的相关文献。新的体系结构导致自动学习地、无监督地分离高级属性(例如,在人脸上训练时的姿势和身份)和生成的图像中的随机变化(例如,雀斑,头发),并且能够直观地、按特定尺度地控制合成。新的生成器在传统的生成质量指标方面提高了最新技术水平值,可以显著提高插值特性,并且可以更好地解决变异的潜在因素。为了量化插值质量和分解,我们提出了两种适用于任何生成器架构的新的自动化方法。最后,我们介绍了一个新的,高度多样化和高质量的人脸数据集。

1.Introduction介 绍

The resolution and quality of images produced by generative methods — especially generative adversarial networks (GAN) [21] — have seen rapid improvement recently [29, 43, 5]. Yet the generators continue to operate as black boxes, and despite recent efforts [3], the understanding of various aspects of the image synthesis process, e.g., the origin of stochastic features, is still lacking. The properties of the latent space are also poorly understood, and the commonly demonstrated latent space interpolations [12, 50, 35] provide no quantitative way to compare different generators against each other.

由生成方法产生的图像的分辨率和质量 - 尤其是生成对抗性网络(GAN)[21] - 近来已经迅速改善[29,43,5]。然而,生成器继续作为黑匣子运行,尽管最近的努力理解它的过程[3],但仍然缺乏对图像合成过程的各个方面的理解,例如随机特征的组织。潜在空间的性质也很难理解,并且通常展示的潜在空间插值[12,50,35]没有提供将不同生成器相互比较的定量方法。

Motivated by style transfer literature [26], we re-design the generator architecture in a way that exposes novel ways to control the image synthesis process. Our generator starts from a learned constant input and adjusts the “style” of the image at each convolution layer based on the latent code, therefore directly controlling the strength of image features at different scales. Combined with noise injected directly into the network, this architectural change leads to automatic, unsupervised separation of high-level attributes (e.g., pose, identity) from stochastic variation (e.g., freckles, hair) in the generated images, and enables intuitive scale-specific mixing and interpolation operations. We do not modify the discriminator or the loss function in any way, and our work is thus orthogonal to the ongoing discussion about GAN loss functions, regularization, and hyperparameters [23, 43, 5, 38, 42, 34].

在样式转移文献[26]的推动下,我们以一种揭示控制图像合成过程的新方法的方式重新设计了生成器结构。我们的生成器从学习常量输入开始,并基于潜码调整每个卷积层图像的“样式”,因此直接控制不同尺度的图像特征的强度。结合直接注入网络的噪声,这种架构变化导致高级属性的自动,无监督解缠(例如,姿势,身份)来自所生成图像中的随机变化(例如,雀斑,头发),并且能够进行直观的尺度特定混合和插值操作。我们不以任何方式修改鉴别器或损失函数,因此我们的工作与正在进行的关于 GAN 损失函数,正则化和超参数的讨论是互为独立的[23,43,5,38,42,34] 。

Our generator embeds the input latent code into an intermediate latent space, which has a profound effect on how the factors of variation are represented in the network. The input latent space must follow the probability density of the training data, and we argue that this leads to some degree of unavoidable entanglement. Our intermediate latent space is free from that restriction and is therefore allowed to be disentangled. As previous methods for estimating the degree of latent space disentanglement are not directly applicable in our case, we propose two new automated metrics — perceptual path length and linear separability — for quantifying these aspects of the generator. Using these metrics, we show that compared to a traditional generator architecture, our generator admits a more linear, less entangled representation of different factors of variation.

我们的生成器将输入的潜在编码嵌入到中间的潜在空间中,这对于如何在网络中表示噪音因子具有深远的影响。输入潜在空间必须遵循训练数据的概率密度,并且我们认为这会导致某种程度的不可避免的纠缠。我们的中间潜在空间不受限制,因此可以解开。由于以前估算潜在空间解缠的方法并不直接适用于我们的情况,我们提出了两个新的自动度量- 感知路径长度和线性可分性 - 用于量化生成器的这些方面。使用这些指标,我们表明,与传统的生成器架构相比,我们的生成器允许更多线性,更少纠缠的不同变化因素的表示。

Finally, we present a new dataset of human faces (Flickr-Faces-HQ, FFHQ) that offers much higher quality and covers considerably wider variation than existing high-resolution datasets (Appendix A). We have made this dataset publicly available, along with our source code and pre-trained networks.1 The accompanying video can be found under the same link.

最后,我们提出了一个新的人脸数据集(Flickr-FacesHQ,FFHQ),它提供了更高的质量,涵盖了比现有高分辨率数据集更广泛的变化(附录 A)。我们已将此数据集与我们的源代码和预先训练的网络一起公开提供,见底部链接1,随附的视频可在同一链接下找到。

(附件1:https://github.com/NVlabs/stylegan)

2.Style-based generator基于样式的生成器

Traditionally the latent code is provided to the generator through an input layer, i.e., the first layer of a feedforward network (Figure 1a). We depart from this design by omitting the input layer altogether and starting from a learned constant instead (Figure 1b, right). Given a latent code z in the input latent space Z, a non-linear mapping network f : Z → W first produces w ∈ W (Figure 1b, left). For simplicity, we set the dimensionality of both

传统上,潜在编码通过输入层,即前馈网络的第一层(图 1a)提供给生成器。 我们通过省略输入层并从学习的常量开始而偏离此设计(图 1b,右)。 给定输入潜在空间Z 中的潜在代码 z,非线性映射网络(mapping network)首先产生 w ∈ W(图 1b,左)。 为简单起见,我们设置了两者的维度

Figure 1. While a traditional generator [29] feeds the latent code though the input layer only, we first map the input to an intermediate latent space W, which then controls the generator through adaptive instance normalization (AdaIN) at each convolution layer. Gaussian noise is added after each convolution, before evaluating the nonlinearity. Here “A” stands for a learned affine transform, and “B” applies learned per-channel scaling factors to the noise input. The mapping network f consists of 8 layers and the synthesis network g consists of 18 layers— two for each resolution (4^2 − 1024^2 ). The output of the last layer is converted to RGB using a separate 1 × 1 convolution, similar to Karras et al. [29]. Our generator has a total of 26.2M trainable parameters, compared to 23.1M in the traditional generator.

图 1.虽然传统的生成器[29]仅通过输入层提供潜在编码,但我们首先将输入映射到中间潜在空间 W,然后在每一个卷积层通过自适应实例规范化(AdaIN)控制生成器。 在评估非线性之前,在每次卷积之后添加高斯噪声。 这里“A”代表学习的仿射变换,“B”将学习的每通道缩放因子应用于噪声输入。 映射网络 f 由 8 个层组成,合成网络 g 由 18 层组成 - 每个分辨率两层(分辨率从

)。使用单独的 1×1 卷积将最后一层的输出转换为 RGB,类似于Karras 等人[29]。 我们的生成器共有 2620 万可训练的参数,而传统生成器的参数量为 2310 万。

paces to 512, and the mapping f is implemented using an 8-layer MLP, a decision we will analyze in Section 4.1. Learned affine transformations then specialize w to styles y = (ys, yb) that control adaptive instance normalization (AdaIN) [26, 16, 20, 15] operations after each convolution layer of the synthesis network g. The AdaIN operation is defined as

空间为512,映射f使用8层MLP实现,我们将在 4.1 节中进行分析。然后,学习的仿射变换将w专门化为样式

,其控制在合成网络g的每个卷积层之后的自适应实例归一化(AdaIN)[26,16,20,15]运算。AdaIN操作定义为

where each feature map xi is normalized separately, and then scaled and biased using the corresponding scalar components from style y. Thus the dimensionality of y is twice the number of feature maps on that layer.

其中每个特征映射 xi 分别标准化,然后使用样式 y 中相应的标量组件进行缩放和偏置。因此,y 的维度是该层上的特征映射的数量的两倍。

Comparing our approach to style transfer, we compute the spatially invariant style y from vector w instead of an example image. We choose to reuse the word “style” for y because similar network architectures are already used for feedforward style transfer [26], unsupervised image-toimage translation [27], and domain mixtures [22]. Compared to more general feature transforms [36, 55], AdaIN is particularly well suited for our purposes due to its efficiency and compact representation.

比较我们的样式转换方法,我们从矢量 w 而不是示例图像计算空间不变的样式 y。 我们选择为 y 使用“style”这个词,因为类似的网络架构已经用于前馈式传输[26],无监督的图像到图像转换[27]和域混合[22]。 与更一般的特征变换相比[36,55],由于其效率和紧凑的表现,AdaIN 特别适合我们的目的。

Table 1. Fr´echet inception distance (FID) for various generator designs (lower is better). In this paper we calculate the FIDs using 50,000 images drawn randomly from the training set, and report the lowest distance encountered over the course of training.

表 1.各种生成器设计的 Frechet'起始距离(FID)(越低越好)。在本文中,我们使用从训练集中随机抽取的 50,000 张图像来计算FID,并报告在训练过程中遇到的最低距离。

Finally, we provide our generator with a direct means to generate stochastic detail by introducing explicit noise inputs. These are single-channel images consisting of uncorrelated Gaussian noise, and we feed a dedicated noise image to each layer of the synthesis network. The noise image is broadcasted to all feature maps using learned perfeature scaling factors and then added to the output of the corresponding convolution, as illustrated in Figure 1b. The implications of adding the noise inputs are discussed in Sections 3.2 and 3.3.

最后,我们通过引入显式噪声输入为我们的生成器提供直接的方法来生成随机细节。 这些是由不相关的高斯噪声组成的单通道图像,并且我们将专用噪声图像馈送到合成网络的每一层。使用学习的每个特征缩放因子将噪声图像广播到所有特征图,然后将其添加到相应卷积的输出中,如图 1b 所示。添加噪声输入的含义在第 3.2 和 3.3 节中讨论。

2.1.Quality of generated images生成图像的质量

Before studying the properties of our generator, we demonstrate experimentally that the redesign does not compromise image quality but, in fact, improves it considerably. Table 1 gives Fr´echet inception distances (FID) [24] for various generator architectures in CELEBA-HQ [29] and our new FFHQ dataset (Appendix A). Results for other datasets are given in Appendix E. Our baseline configuration (A) is the Progressive GAN setup of Karras et al. [29], from which we inherit the networks and all hyperparameters except where stated otherwise. We first switch to an improved baseline (B) by using bilinear up/downsampling operations [62], longer training, and tuned hyperparameters. A detailed description of training setups and hyperparameters is included in Appendix C. We then improve this new baseline further by adding the mapping network and AdaIN operations (C), and make a surprising observation that the network no longer benefits from feeding the latent code into the first convolution layer. We therefore simplify the architecture by removing the traditional input layer and starting the image synthesis from a learned 4 × 4 × 512 constant tensor (D). We find it quite remarkable that the synthesis network is able to produce meaningful results even though it receives input only through the styles that control the AdaIN operations.

在研究我们的生成器的特性之前,我们通过实验证明重新设计不能保证图像质量,但事实上,它可以大大改善它。表1给出了CELEBA-HQ [29]和我们新的FFHQ数据集(附录A)中各种生成器架构的 Frechet'起始距离(FID)[24]。其他数据集的结果在附录 E 中给出。我们的基线配置(A)是 Karras 等人的 Progressive GAN 设置 [29],我们默认继承了网络和所有超参数除非另有说明。我们首先通过使用双线性上/下采样操作[62],更长的训练和调整的超参数来切换到改进的基线(B)。附录 C 中包含了对训练设置和超参数的详细描述。然后我们通过添加映射网络和AdaIN 操作(C)进一步改进了这个新的基线,并且惊讶地观察到网络不再从将潜在编码馈送到第一个卷积层以获益。因此,我们通过移除传统的输入层并从学习的 4 × 4 × 512恒定张量(D)开始图像合成来简化架构。我们发现,即使只通过控制 AdaIN 操作的样式接收输入,合成网络也能够产生有意义的结果。

Finally, we introduce the noise inputs (E) that improve the results further, as well as novel mixing regularization (F) that decorrelates neighboring styles and enables more finegrained control over the generated imagery (Section 3.1).

最后,我们介绍了进一步改善结果的噪声输入(E),以及对相邻样式进行去相关的新型混合正则化(F),并对生成的图像进行更细粒度的控制(第 3.1 节)。

We evaluate our methods using two different loss functions: for CELEBA-HQ we rely on WGAN-GP [23],

我们使用两种不同的损失函数来评估我们的方法:对于CELEBA-HQ,我们依赖于 WGAN-GP [23],

Figure 2. Uncurated set of images produced by our style-based generator (config F) with the FFHQ dataset. Here we used a variation of the truncation trick [40, 5, 32] with ψ = 0.7 for resolutions 4^2 − 32^2 . Please see the accompanying video for more results.

图 2.由基于样式的生成器(config F)和 FFHQ 数据集生成的未经验证的图像集。 在这里,我们使用截断技巧[40,5,32]的变化,对于分辨率

,使用ψ=0.7。请参阅随附的视频以获得更多结果。

while FFHQ uses WGAN-GP for configuration A and nonsaturating loss [21] with R1 regularization [42, 49, 13] for configurations B–F. We found these choices to give the best results. Our contributions do not modify the loss function.

而 FFHQ 使用 WGAN-GP 进行配置 A 和非饱和损耗[21],其中 R1 正则化[42,49,13]用于配置 B-F。我们发现这些选择可以产生最佳效果。我们的贡献不需要修改损失函数。

We observe that the style-based generator (E) improves FIDs quite significantly over the traditional generator (B), almost 20%, corroborating the large-scale ImageNet measurements made in parallel work [6, 5]. Figure 2 shows an uncurated set of novel images generated from the FFHQ dataset using our generator. As confirmed by the FIDs, the average quality is high, and even accessories such as eyeglasses and hats get successfully synthesized. For this figure, we avoided sampling from the extreme regions of W using the so-called truncation trick [40, 5, 32] — Appendix B details how the trick can be performed in W instead of Z. Note that our generator allows applying the truncation selectively to low resolutions only, so that highresolution details are not affected.

我们观察到基于样式的生成器(E)相比传统生成器(B)显著提高了FID,几乎达到了 20%,证实了并行工作中的大规模 ImageNet 测量[6,5]。图2显示了使用我们的生成器从 FFHQ 数据集生成的一组未经验证的新图像。正如 FID所证实的那样,平均质量很高,甚至眼镜和帽子等配件也能成功合成。对于这个图,我们使用所谓的截断技巧避免了从W 的极端区域采样[40,5,32] - 附录 B 详述了如何在 W 而不是 Z 中执行该技巧。注意我们的生成器允许应用仅选择性地截断为低分辨率,以便不影响高分辨率细节。

All FIDs in this paper are computed without the truncation trick, and we only use it for illustrative purposes in Figure 2 and the video. All images are generated in 1024^2 resolution.

本文中的所有 FID 都是在没有截断技巧的情况下计算出来的,我们在图 2 和视频中仅将其用于说明目的。所有图像均以

分辨率生成。

2.2.Prior art现有技术

Much of the work on GAN architectures has focused on improving the discriminator by, e.g., using multiple discriminators [17, 45], multiresolution discrimination [58, 53], or self-attention [61]. The work on generator side has mostly focused on the exact distribution in the input latent

space [5] or shaping the input latent space via Gaussian mixture models [4], clustering [46], or encouraging convexity [50].

关于 GAN 架构的大部分工作都集中在通过例如使用多个鉴别器[17,15],多分辨率判别[58,53]或自注意力机制[61]来改进鉴别器。 生成器侧的工作主要集中在输入潜在空间的精确分布[5]或通过高斯混合模型[4],聚类[46]或鼓励凸性[50]对输入潜在空间进行整形。

Recent conditional generators feed the class identifier through a separate embedding network to a large number of layers in the generator [44], while the latent is still provided though the input layer. A few authors have considered feeding parts of the latent code to multiple generator layers [9, 5]. In parallel work, Chen et al. [6] “self modulate” the generator using AdaINs, similarly to our work, but do not consider an intermediate latent space or noise inputs.

最近的条件生成器通过单独的嵌入网络将类标识符提供给生成器[44]中的大量层,而潜在的仍然通过输入层提供。一些作者已经考虑将潜在编码的一部分馈送到多个生成器层[9,5]。 在平行工作中,陈等人[6]使用 AdaIN“自我调制”生成器,与我们的工作类似,但不考虑中间潜在空间或噪声输入。

3.Properties of the style-based generator基于样式的生成器的属性

Our generator architecture makes it possible to control the image synthesis via scale-specific modifications to the styles. We can view the mapping network and affine transformations as a way to draw samples for each style from a learned distribution, and the synthesis network as a way to generate a novel image based on a collection of styles. The effects of each style are localized in the network, i.e., modifying a specific subset of the styles can be expected to affect only certain aspects of the image.

我们的生成器架构可以通过对样式的特定尺度修改来控制图像合成。我们可以将映射网络和仿射变换视为从学习的分布中为每种样式绘制样本的方式,并且将合成网络视为基于样式集合生成新颖图像的方式。每种样式的效果都在网络中定位,即:可以预期修改样式的特定子集仅影响图像的某些方面。

To see the reason for this localization, let us consider how the AdaIN operation (Eq. 1) first normalizes each channel to zero mean and unit variance, and only then applies scales and biases based on the style. The new per-channel statistics, as dictated by the style, modify the relative importance of features for the subsequent convolution operation, but they do not depend on the original statistics because of the normalization. Thus each style controls only one convolution before being overridden by the next AdaIN operation.

为了了解这种定位的原因,让我们考虑 AdaIN 操作(方程 1)如何首先将每个通道归一化为零均值和单位方差,然后才应用基于样式的尺度和偏差。根据样式的要求,新的每通道统计数据修改了后续卷积操作的特征的相对重要性,但由于规范化,它们不依赖于原始统计数据。因此,每个样式在被下一个 AdaIN 操作覆盖之前仅控制一个循环。

3.1.Style mixing样式混合

To further encourage the styles to localize, we employ mixing regularization, where a given percentage of images are generated using two random latent codes instead of one during training. When generating such an image, we simply switch from one latent code to another — an operation we refer to as style mixing— at a randomly selected point in the synthesis network. To be specific, we run two latent codes z1, z2 through the mapping network, and have the corresponding w1, w2 control the styles so that w1 applies before the crossover point and w2 after it. This regularization technique prevents the network from assuming that adjacent styles are correlated.

为了进一步鼓励样式进行本地化,我们采用混合正则化,其中使用两个随机潜码而不是训练期间的一个潜在编码生成给定百分比的图像。 当生成这样的图像时,我们简单地从一个潜在编码切换到另一个 - 我们称之为样式混合的操作 -在合成网络中随机选择的点。 具体来说,我们运行两个潜码 z1, z2 通过映射网络,并具有相应的 w1,w2 控制样式,以便 w1 在交叉点之前应用,w2 在交叉点之后应用。 这种正则化技术可以防止网络假设相邻的样式是相关的。

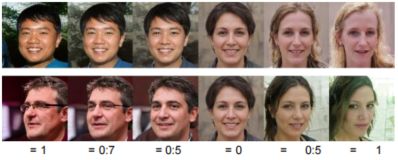

Figure 3. Visualizing the effect of styles in the generator by having the styles produced by one latent code (source) override a subset of the styles of another one (destination). Overriding the styles of layers corresponding to coarse spatial resolutions (4^2 – 8^2 ), high-level aspects such as pose, general hair style, face shape, and eyeglasses get copied from the source, while all colors (eyes, hair, lighting) and finer facial features of the destination are retained. If we instead copy the styles of middle layers (16^2 – 32^2 ), we inherit smaller scale facial features, hair style, eyes open/closed from the source, while the pose, general face shape, and eyeglasses from the destination are preserved. Finally, copying the styles corresponding to fine resolutions (64^2 – 1024^2) brings mainly the color scheme and microstructure from the source.

图 3.通过使一个潜在编码(源)生成的样式覆盖另一个(目标)的样式的子集,可视化生成器中样式的效果。 覆盖与粗糙空间分辨率相对应的图层样式(

),从源头复制高级属性,如姿势,通用发型,面部形状和眼镜,同时所有颜色(眼睛,头发,灯光)和保留目标的更精细的面部特征。 如果我们改为复制中间层(

)的样式,我们会继承较小比例的面部特征,发型,从源头打开/关闭的眼睛,同时保留来自目标的姿势,一般面部形状和眼镜。 最后,复制与精细分辨率(

)相对应的样式主要带来来自源的颜色方案和微结构。

Table 2. FIDs in FFHQ for networks trained by enabling the mixing regularization for different percentage of training examples. Here we stress test the trained networks by randomizing 1 . . . 4

latents and the crossover points between them. Mixing regularization improves the tolerance to these adverse operations signifi- cantly. Labels E and F refer to the configurations in Table 1.

表2.通过对不同百分比的训练样例进行混合正则化训练的网络的FFHQ 中的 FID。在这里,我们通过随机化1…4潜码和它们之间的交叉点来对受过训练的网络进行压力测试。混合正则化可显著提高对这些不良操作的耐受性。标签E和F参见表1中的配置。

Figure 4. Examples of stochastic variation. (a) Two generated images. (b) Zoom-in with different realizations of input noise. While the overall appearance is almost identical, individual hairs are placed very differently. (c) Standard deviation of each pixel over 100 different realizations, highlighting which parts of the images are affected by the noise. The main areas are the hair, silhouettes, and parts of background, but there is also interesting stochastic variation in the eye reflections. Global aspects such as identity and pose are unaffected by stochastic variation.

图 4.随机变化的例子。(a)两个生成的图像。(b)放大输入噪声的不同实现。虽然整体外观几乎相同,但个别毛发的放置方式却截然不同。(c)100 个不同实现中每个像素的标准偏差,突出显示图像的哪些部分受到噪声的影响。主要区域是头发,轮廓和背景的一部分,但眼睛反射也有有趣的随机变化。 身份和姿势等全局方面不受随机变化的影响。

Table 2 shows how enabling mixing regularization during training improves the localization considerably, indicated by improved FIDs in scenarios where multiple latents are mixed at test time. Figure 3 presents examples of images synthesized by mixing two latent codes at various scales. We can see that each subset of styles controls meaningful high-level attributes of the image.

表 2 显示了在训练期间启用混合正则化如何显著改善定位,通过在测试时混合多个潜码的情况下改进的 FID 来表示。图3 给出了通过混合不同尺度的两个潜码合成的图像的例子。我们可以看到每个样式子集控制图像的有意义的高级属性。

3.2.Stochastic variation随机变化

There are many aspects in human portraits that can be regarded as stochastic, such as the exact placement of hairs, stubble, freckles, or skin pores. Any of these can be randomized without affecting our perception of the image as long as they follow the correct distribution.

人类肖像中有许多方面可以被视为随机的,例如毛发,茬,雀斑或皮肤毛孔的确切位置。只要它们遵循正确的分布,任何这些都可以在不影响我们对图像的感知的情况下进行随机化。

Let us consider how a traditional generator implements stochastic variation. Given that the only input to the network is through the input layer, the network needs to invent a way to generate spatially-varying pseudorandom numbers from earlier activations whenever they are needed. This consumes network capacity and hiding the periodicity of generated signal is difficult — and not always successful, as evidenced by commonly seen repetitive patterns in generated images. Our architecture sidesteps these issues altogether by adding per-pixel noise after each convolution.

让我们考虑一下传统生成器如何实现随机变化。鉴于网络的唯一输入是通过输入层,网络需要发明一种方法来生成空间变化的伪随机数从需要时的早期激活开始。这消耗了网络容量并且隐藏了生成信号的周期性是困难的 - 并且并不总是成功的,正如生成的图像中常见的重复模式所证明的那样。我们的架构通过在每次卷积后添加每像素噪声来回避这些问题。

Figure 5. Effect of noise inputs at different layers of our generator. (a) Noise is applied to all layers. (b) No noise. (c) Noise in fine layers only (642 – 10242 ). (d) Noise in coarse layers only (42 – 322 ). We can see that the artificial omission of noise leads to featureless “painterly” look. Coarse noise causes large-scale curling of hair and appearance of larger background features, while the fine noise brings out the finer curls of hair, finer background detail, and skin pores.

图 5.在我们的生成器的不同层的噪声输入的影响。(a)噪音适用于所有层。( b )没噪音 。( c )仅有精细层的噪音(

)。(d)仅粗糙层的噪音(

)。我们可以看到,人为地忽略噪音会导致无意义的“绘画”外观。粗糙的噪音会导致头发大规模卷曲和出现更大的背景特征,而细微的噪音则会带来更精细的头发卷曲,更细致的背景细节和皮肤毛孔。

Figure 4 shows stochastic realizations of the same underlying image, produced using our generator with different noise realizations. We can see that the noise affects only the stochastic aspects, leaving the overall composition and high-level aspects such as identity intact. Figure 5 further illustrates the effect of applying stochastic variation to different subsets of layers. Since these effects are best seen in animation, please consult the accompanying video for a demonstration of how changing the noise input of one layer leads to stochastic variation at a matching scale.

图 4 显示了使用具有不同噪声实现的生成器产生的相同非图像的随机实现。我们可以看到,噪声仅影响随机方面,使整体构成和身份等高级属性完整无缺。图5进一步说明了将随机变化应用于不同的子集设置的效果。由于这些效果最好在动画中看到,请参阅随附的视频,以了解如何更改一层的噪声输入导致匹配比例的随机变化。

We find it interesting that the effect of noise appears tightly localized in the network. We hypothesize that at any point in the generator, there is pressure to introduce new content as soon as possible, and the easiest way for our network to create stochastic variation is to rely on the noise provided. A fresh set of noise is available for every layer, and thus there is no incentive to generate the stochastic effects from earlier activations, leading to a localized effect.

我们发现有趣的是,噪声的影响似乎在网络中紧密地定位。我们假设在生成器的任何一点,都有压力尽快引入新的内容,而我们的网络创建随机变化的最简单方法是依靠提供的噪声。每一层都有一组新的噪声,因此没有动力从早期的激活中产生随机效应,从而产生局部效应。

Figure 6. Illustrative example with two factors of variation (image features, e.g., masculinity and hair length). (a) An example training set where some combination (e.g., long haired males) is virtually non-existent. (b) This forces the mapping from Z to image features to become curved so that the forbidden combination disappears in Z to prevent the sampling of invalid combinations. (c) However, the learned mapping from Z to W is able to “undo” much of the warping.

图 6.具有两个变异因素的示例性示例(图像特征,例如,男性气质和头发长度)。(a)一个示例训练集,其中一些组合(例如,长发男性)几乎不存在。(b)这会强制从 Z 到图像特征的映射变为弯曲,以便禁止组合在 Z 中消失,以防止对无效组合进行采样。(c)然而,从 Z 到 W 的学习映射能够“消除”大部分翘曲。

3.3.Separation of global effects from stochasticity 从随机性中分离全局效应

The previous sections as well as the accompanying video demonstrate that while changes to the style have global effects (changing pose, identity, etc.), the noise affects only inconsequential stochastic variation (differently combed hair, beard, etc.). This observation is in line with style transfer literature, where it has been established that spatially invariant statistics (Gram matrix, channel-wise mean, variance, etc.) reliably encode the style of an image [19, 37] while spatially varying features encode a specific instance.

前面的部分以及随附的视频表明,虽然样式的变化具有全局效果(改变姿势,身份等),但噪声仅影响无关紧要的随机变化(不同梳理的头发,胡须等)。这种观察符合样式转换文献,其中已经确定空间不变统计量(格式矩阵,通道平均值,变量等)可靠地编码图像的样式[19,37]。空间变化的特征编码特定的实例。

In our style-based generator, the style affects the entire image because complete feature maps are scaled and biased with the same values. Therefore, global effects such as pose, lighting, or background style can be controlled coherently. Meanwhile, the noise is added independently to

each pixel and is thus ideally suited for controlling stochastic variation. If the network tried to control, e.g., pose using the noise, that would lead to spatially inconsistent decisions that would then be penalized by the discriminator. Thus the network learns to use the global and local channels appropriately, without explicit guidance.

在我们基于样式的生成器中,样式会影响整个图像,因为完整的要素图将使用相同的值进行缩放和双向化。因此,可以同时控制姿势,灯光或背景样式等全局效果。同时,噪声被独立地添加到每个像素,因此理想地适合于控制随机变化。如果网络试图控制例如使用噪声的姿势,那将导致空间不一致的决定,然后由鉴别器惩罚。因此,网络学会在没有明确指导的情况下,适当地使用全局和局部渠道。

4.Disentanglement studies解缠研究

There are various definitions for disentanglement [52, 48, 2, 7, 18], but a common goal is a latent space that consists of linear subspaces, each of which controls one factor of variation. However, the sampling probability of each combination of factors in Z needs to match the corresponding density in the training data. As illustrated in Figure 6, this precludes the factors from being fully disentangled with typical datasets and input latent distributions.2

(附件:2The few artificial datasets designed for disentanglement studies (e.g., [41, 18]) tabulate all combinations of predetermined factors of variation with uniform frequency, thus hiding the problem.)

解缠有各种定义[52,48,2,7,18],但共同的目标是由线性子空间构成的潜在空间,每个空间控制一个变异因子。 然而,Z 中每个因子组合的采样概率需要与训练数据中的对应密度相匹配。 如图 6 所示,这排除了与典型数据集和输入潜在分布完全解开的因素。

(附件:2 设计用于解缠研究的少数人工数据集(例如,[41,18])将预定变异因子的所有组合制成具有均匀频率,从而隐藏了该问题。)

A major benefit of our generator architecture is that the intermediate latent space W does not have to support sampling according to any fixed distribution; its sampling density is induced by the learned piecewise continuous mapping f(z). This mapping can be adapted to “unwarp” W so that the factors of variation become more linear. We posit that there is pressure for the generator to do so, as it should be easier to generate realistic images based on a disentangled representation than based on an entangled representation. As such, we expect the training to yield a less entangled W in an unsupervised setting, i.e., when the factors of variation are not known in advance [10, 33, 47, 8, 25, 30, 7].

我们的生成器架构的一个主要好处是中间潜在空间 W 不必根据任何固定分布支持采样;它的采样密度是由学习的分段连续映射 f(z)引起的。该映射可以适于“解除”W,以使变化因子变得更加线性。我们认为生成器有这样的压力,因为基于解开的表示而不是基于纠缠的表示来生成逼真的图像应该更容易。因此,我们期望训练在无人监督的环境中,即当事先不知道变异因素时产生较少陷入的 W [10,33,47,8,25,30,7]。

Unfortunately the metrics recently proposed for quantifying disentanglement [25, 30, 7, 18] require an encoder network that maps input images to latent codes. These metrics are ill-suited for our purposes since our baseline GAN lacks such an encoder. While it is possible to add an extra network for this purpose [8, 11, 14], we want to avoid investing effort into a component that is not a part of the actual solution. To this end, we describe two new ways of quantifying disentanglement, neither of which requires an encoder or known factors of variation, and are therefore computable for any image dataset and generator.

不幸的是,最近提出的用于量化解缠的指标[25,30,7,18]需要编码器网络将输入图像映射到潜码。由于我们的基线GAN缺少这样的编码器,因此这些方法不适合我们的目的。虽然可以为此目的添加额外的网络[8,11,14],但我们希望避免将工作投入到不属于实际解决方案的组件中。为此,我们描述了量化非解缠结的两种新方法,这两种方法都不需要编码器或已知的变化因子,因此可以计算任何图像数据集和生成器。

4.1.Perceptual path length感知路径长度

As noted by Laine [35], interpolation of latent-space vectors may yield surprisingly non-linear changes in the image. For example, features that are absent in either endpoint may appear in the middle of a linear interpolation path. This is a sign that the latent space is entangled and the factors of variation are not properly separated. To quantify this effect, we can measure how drastic changes the image undergoes as we perform interpolation in the latent space. Intuitively, a less curved latent space should result in perceptually smoother transition than a highly curved latent space.

正如 Laine [35]所指出的,潜在空间矢量的插值可能会在图像中产生令人惊讶的非线性变化。例如,任一端点中不存在的特征可能出现在线性插值路径的中间。这表明潜在的空间被纠缠,并且变化的因素没有被恰当地分开。为了量化这种效果,我们可以测量在潜在空间中执行插值时图像的剧烈变化。直观地说,较小弯曲的潜在空间应该比高度弯曲的潜在空间更容易过渡。

As a basis for our metric, we use a perceptually-based pairwise image distance [63] that is calculated as a weighted difference between two VGG16 [56] embeddings, where the weights are fit so that the metric agrees with human perceptual similarity judgments. If we subdivide a latent space interpolation path into linear segments, we can define the total perceptual length of this segmented path as the sum of perceptual differences over each segment, as reported by the image distance metric. A natural definition for the perceptual path length would be the limit of this sum under infinitely fine subdivision, but in practice we approximate it using a small subdivision epsilon ![]() . The average perceptual path length in latent space Z, over all possible endpoints, is therefore

. The average perceptual path length in latent space Z, over all possible endpoints, is therefore

where z1, z2~ P(z), t ~ U(0, 1), G is the generator (i.e., go f for style-based networks), and d(·, ·) evaluates the perceptual distance between the resulting images. Here slerp denotes the spherical interpolation operation [54] that is the most appropriate way of interpolating in our normalized input latent space [59]. To concentrate on the facial features instead of background, we crop the generated images to contain only the face prior to evaluating the pairwise image metric. As the metric d happens to be quadratic in nature [63], we divide by![]() instead of

instead of![]() in order to cancel out the unwanted dependence on subdivision granularity. We compute the expectation value by taking 100,000 samples.

in order to cancel out the unwanted dependence on subdivision granularity. We compute the expectation value by taking 100,000 samples.

作为我们度量的基础,我们使用基于感知的成对图像距离[63],其被计算为两个 VGG16 [56]嵌入之间的加权差异,其中权重是适合的,使得度量与人类的视觉相似性判断一致。如果我们将潜在空间插值路径细分为线性段,我们可以将该分段路径的总感知长度定义为每个段上的感知差异的总和,如图像距离度量所报告的。在无限精细细分下,这个总和的自然定义将是这个和的极限,但在实践中我们使用一个小的细分小量

来近似它。潜在空间 Z 中的平均感知路径长度,超过所有可能的终点,因此

其中

G 是生成器(即

用于基于样式的网络),d(.,.)评估的是结果图像之间的每个视距。 这里 slerp 表示球面插值运算[54],它是我们归一化输入潜在空间中最合适的插值方式[59]。 为了专注于面部特征而不是背景,我们在评估成对图像度量之前裁剪生成的图像以仅包含面部。 由于度量 d 恰好是自然二次[63],我们除以

而不是

为了抵消对细分粒度的不必要依赖。 我们通过获取 100,000 个样本来计算期望值。

Table 3. Perceptual path lengths and separability scores for various generator architectures in FFHQ (lower is better). We perform the measurements in Z for the traditional network, and in W for stylebased ones. Making the network resistant to style mixing appears to distort the intermediate latent space W somewhat. We hypothesize that mixing makes it more difficult for W to efficiently encode factors of variation that span multiple scales.

表 3. FFHQ 中各种生成器架构的感知路径长度和可分性得分(越低越好)。我们在 Z 中为传统网络执行测量,在 W 中执行基于样式的测量。使网络抵抗样式混合似乎有点扭曲中间潜在空间 W。我们假设混合使得 W 更难以有效地编码跨越多个尺度的变化因子。

Computing the average perceptual path length in W is carried out in a similar fashion:

计算 W 中的平均感知路径长度以类似的方式执行:

where the only difference is that interpolation happens in W space. Because vectors in W are not normalized in any fashion, we use linear interpolation (lerp).

唯一的区别是插值发生在 W 空间中。因为 W 中的向量没有以任何方式标准化,我们使用线性插值(lerp)。

Table 3 shows that this full-path length is substantially shorter for our style-based generator with noise inputs, indicating that W is perceptually more linear than Z. Yet, this measurement is in fact slightly biased in favor of the input latent space Z. If W is indeed a disentangled and “flattened” mapping of Z, it may contain regions that are not on the input manifold— and are thus badly reconstructed by the generator — even between points that are mapped from the input manifold, whereas the input latent space Z has no such regions by definition. It is therefore to be expected that if we restrict our measure to path endpoints, i.e., t ∈ {0, 1}, we should obtain a smaller lW while lZ is not affected. This is indeed what we observe in Table 3.

表 3 显示,对于具有噪声输入的基于样式的生成器,该全路径长度明显更短,这表明 W 在感知上比 Z 更线性。然而,这种测量实际上略微偏向于输入潜在空间 Z。如果 W确实是 Z 的解缠和“平坦”映射,它可能包含不在输入流形上的区域 - 因此由生成器较差重建 - 甚至在从输入流形映射的点之间,而输入潜在空间 Z 根据定义没有这样的区域。因此,如果我们将度量限制在路径端点,即

,则结果应该是可期望的,我们应该获得更小的

而不受

影响。这确实是我们在表 3 中观察到的。

Table 4 shows how path lengths are affected by the mapping network. We see that both traditional and style-based generators benefit from having a mapping network, and additional depth generally improves the perceptual path length as well as FIDs. It is interesting that while lW improves in the traditional generator, lZ becomes considerably worse, illustrating our claim that the input latent space can indeed be arbitrarily entangled in GANs.

表 4 显示了映射网络如何影响路径长度。我们看到传统和基于样式的生成器都受益于映射网络,并且附加深度通常会改善感知路径长度以及 FID。有趣的是,虽然

有所改善在传统的生成器里,

却变得更加糟糕,说明我们的主张输入潜在空间确实可以任意纠缠在 GAN 中。

Table 4. The effect of a mapping network in FFHQ. The number in method name indicates the depth of the mapping network. We see that FID, separability, and path length all benefit from having a mapping network, and this holds for both style-based and traditional generator architectures. Furthermore, a deeper mapping network generally performs better than a shallow one.

表 4. FFHQ 中映射网络的影响。 方法名称中的数字表示映射网络的深度。 我们看到 FID,可分离性和路径长度都可以从映射网络中受益,这适用于基于样式和传统的生成器体系结构。 此外,更深的映射网络通常比浅层映射网络表现更好。

4.2.Linear separability线性可分性

If a latent space is sufficiently disentangled, it should be possible to find direction vectors that consistently correspond to individual factors of variation. We propose another metric that quantifies this effect by measuring how well the latent-space points can be separated into two distinct sets via a linear hyperplane, so that each set corresponds to a specific binary attribute of the image.

如果潜在空间被充分解开,则应该可以找到始终与各个变化因素相对应的方向向量。我们提出了另一个度量,通过测量潜在空间点通过线性超平面分成两个不同的集合的程度来量化这种效应,以便每个集合对应于图像的特定二进制属性。

In order to label the generated images, we train auxiliary classification networks for a number of binary attributes, e.g., to distinguish male and female faces. In our tests, the classifiers had the same architecture as the discriminator we use (i.e., same as in [29]), and were trained using the CelebA-HQ dataset that retains the 40 attributes available in the original CelebA dataset. To measure the separability of one attribute, we generate 200,000 images with z ∼ P(z) and classify them using the auxiliary classification network. We then sort the samples according to classifier confidence and remove the least confident half, yielding 100,000 labeled latent-space vectors.

为了标记所生成的图像,我们为许多二进制属性训练辅助分类网络,例如,以区分男性和女性面部。在我们的测试中,分类器具有与我们使用的判别器相同的架构(即,与[29]中相同),并且使用 CelebA-HQ 数据集进行训练,该数据集保留原始 CelebA 数据集中可用的 40 个属性。为了测量一个属性的可分离性,我们用 z ~ P(z) 生成 200,000个图像,并使用辅助分类网络对它们进行分类。然后,我们根据分类器置信度对样本进行排序,并移除最不自信的一半,产生 100,000 个标记的潜在空间向量。

For each attribute, we fit a linear SVM to predict the label based on the latent-space point —z for traditional and w for style-based — and classify the points by this plane. To measure how well the hyperplane is able to separate the points into correct groups, we compute the conditional entropy H(Y |X) where X are the classes predicted by the SVM and Y are the classes determined by the pre-trained classifier. The conditional entropy thus tells how much additional information is required to determine the true class of a sample, assuming that we know on which side of the hyperplane it lies. The intuition is that if the associated factor of variation, or combination thereof, has inconsistent latent space directions, it will be more difficult to separate the sample points by a hyperplane, yielding high conditional entropy. A low value indicates easy separability and thus more consistent latent space direction for the particular factor or set of factors that the attribute corresponds to.

对于每个属性,我们拟合线性 SVM 来预测基于潜在空间点的标签 - 传统的 z 和基于样式的 w - 并且通过该平面对点进行分类。为了测量超平面能够将点分成正确的组的程度,我们计算条件对数 H(Y | X),其中 X 是由 SVM 预测的类,Y 是由预训练的分类器确定的类。因此,条件熵告诉我们确定样本的真实类需要多少附加信息,假设我们知道它所在的超平面的哪一侧。直觉是,如果相关的变异因子或其组合具有不一致的拉伸空间方向,则通过超平面分离样本点将更加困难,从而产生高条件熵。低值表示易于分离,因此对于特定因素或属性对应的一组因子,更一致的潜在空间方向。

We calculate the final separability score as![]() , where i enumerates the 40 attributes. Similar to the inception score [51], the exponentiation brings the values from logarithmic to linear domain so that they are easier to compare.

, where i enumerates the 40 attributes. Similar to the inception score [51], the exponentiation brings the values from logarithmic to linear domain so that they are easier to compare.

我们将最终的可分性得分计算为

,其中我列举了 40 个属性。与初始得分[51]类似,取幂使得从对数到线性域的值更容易比较。

Tables 3 and 4 show that W is consistently better separable than Z, suggesting a less entangled representation. Furthermore, increasing the depth of the mapping network improves both image quality and separability in W, which is in line with the hypothesis that the synthesis network inherently favors a disentangled input representation. Interestingly, adding a mapping network in front of a traditional generator results in severe loss of separability in Z but improves the situation in the intermediate latent space W, and the FID improves as well. This shows that even the traditional generator architecture performs better when we introduce an intermediate latent space that does not have to follow the distribution of the training data.

表 3 和表 4 显示 W 一直比 Z 更好,这表明纠缠的表示更少。此外,增加映射网络的深度改善了 W 中的图像质量和可分离性,这符合合成网络当然有利于解缠的输入表示的假

设。有趣的是,在传统生成器前面添加一个映射网络会导致Z 中可分离性的严重损失,但是会证明中间潜在空间 W 中的情况,并且 FID 也会改善。这表明,当我们引入一个不必

跟随训练数据分布的中间潜在空间时,即使是传统的生成器架构也能表现得更好。

5.Conclusion结论

Based on both our results and parallel work by Chen et al. [6], it is becoming clear that the traditional GAN generator architecture is in every way inferior to a style-based design. This is true in terms of established quality metrics, and we further believe that our investigations to the separation of high-level attributes and stochastic effects, as well as the linearity of the intermediate latent space will prove fruitful in improving the understanding and controllability of GAN synthesis.

基于我们的结果和 Chen 等人的并行工作[6],越来越清楚的是,传统的 GAN 生成器架构在各方面都不如基于样式的设计。在已建立的质量指标方面也是如此,我们进一步认为,我们对高级属性和随机效应分离的研究以及中间潜在空间的线性将在提高 GAN 理论的理解和可控性方面取得丰硕成果。

We note that our average path length metric could easily be used as a regularizer during training, and perhaps some variant of the linear separability metric could act as one, too. In general, we expect that methods for directly shaping the intermediate latent space during training will provide interesting avenues for future work.

我们注意到,我们的平均路径长度度量可以很容易地用作训练期间的正则化器,也许线性可分性度量的一些变体也可以作为一个变量。总的来说,我们希望在训练期间直接塑造中间潜码空间的方法将为未来的工作提供有趣的途径。

6.Acknowledgements致谢

We thank Jaakko Lehtinen, David Luebke, and Tuomas Kynk¨a¨anniemi for in-depth discussions and helpful comments; Janne Hellsten, Tero Kuosmanen, and Pekka J¨anis for compute infrastructure and help with the code release.

A. The FFHQ dataset FFHQ 数据集

We have collected a new dataset of human faces, FlickrFaces-HQ (FFHQ), consisting of 70,000 high-quality images at 10242 resolution (Figure 7). The dataset includes vastly more variation than CelebA-HQ [29] in terms of age, ethnicity and image background, and also has much better coverage of accessories such as eyeglasses, sunglasses, hats, etc. The images were crawled from Flickr (thus inheriting all the biases of that website) and automatically aligned and cropped. Only images under permissive licenses were collected. Various automatic filters were used to prune the set, and finally Mechanical Turk allowed us to remove the occasional statues, paintings, or photos of photos. We have made the dataset publicly available at

我们收集了一个新的人脸数据集, Flickr-Faces-HQ(FFHQ),由 分辨率的 70,000 个高质量图像组成(图 7)。 该数据集在年龄,种族和图像背景方面包含了比CelebA-HQ [29]更多的变化,并且还有更多的配件覆盖范围,如眼镜,太阳镜,帽子等。图像是从 Flickr 爬行的( 从而加入了该网站的所有偏见)并自动对齐和裁剪。 只收集了许可证下的图像。 各种自动过滤器用于修剪套装,最后 Mechanical Turk 允许我们删除偶然的雕像,绘画或照片。 我们已经公布了数据集:

https://github.com/NVlabs/ffhq-dataset

B. Truncation trick in W ,W 中的截断技巧

If we consider the distribution of training data, it is clear that areas of low density are poorly represented and thus likely to be difficult for the generator to learn. This is a significant open problem in all generative modeling techniques. However, it is known that drawing latent vectors from a truncated [40, 5] or otherwise shrunk [32] sampling space tends to improve average image quality, although some amount of variation is lost.

如果我们考虑训练数据的分布,很明显低密度区域的代表性很差,因此生成器很难学习。这是所有生成建模技术中一个重要的开放性问题。然而,众所周知,从截断的[40,5]或以其他方式缩小的[32]采样空间中绘制潜在向量倾向于改善平均图像质量,尽管丢失了一些量的变化。

We can follow a similar strategy. To begin, we compute the center of mass of W as ¯w = Ez∼P (z)[f(z)]. In case of FFHQ this point represents a sort of an average face (Figure 8, ψ = 0). We can then scale the deviation of a given w from the center as w0 = ¯w + ψ(w − ¯w), where ψ < 1. While Brock et al. [5] observe that only a subset of networks is amenable to such truncation even when orthogonal regularization is used, truncation in W space seems to work reliably even without changes to the loss function.

我们可以遵循类似的策略。首先,我们计算 W 的质心为

,在 FFHQ 的情况下,这一点代表一种平均面 (图 8,ψ = 0)。然后我们可以将给定 w 与中心的偏差缩放为

,其中ψ < 1。而 Brock 等人[5]观察到,即使使用正交正则化,只有一部分网络能够适应这种截断,即使不改变损失函数,W 空间中的截断似乎也能可靠地工作。

C. Hyperparameters and training details超参数和训练细节

We build upon the official TensorFlow [1] implementation of Progressive GANs by Karras et al. [29], from which we inherit most of the training details.3 This original setup corresponds to configuration A in Table 1. In particular, we use the same discriminator architecture, resolutiondependent minibatch sizes, Adam [31] hyperparameters, and exponential moving average of the generator. We enable mirror augmentation for CelebA-HQ and FFHQ, but disable it for LSUN. Our training time is approximately one week on an NVIDIA DGX-1 with 8 Tesla V100 GPUs.

我们建立在 Karras 等人的正式 TensorFlow [1]实施渐进式 GAN 的基础上[29],我们继承了大部分的训练细节(见页底 3)这个原始设置对应于表 1 中的配置 A。特别是,我们使用相同的鉴别器结构,分辨率相关的小批量大小,Adam [31]超参数,和生成器的指数移动平均值。我们为 CelebA-HQ 和 FFHQ启用了镜像扩充,但是为 LSUN 禁用了它。使用 8 个 Tesla V100 GPU 的 NVIDIA DGX-1 训练时间约为一周。

Figure 8. The effect of truncation trick as a function of style scale ψ. When we fade ψ → 0, all faces converge to the “mean” face of FFHQ. This face is similar for all trained networks, and the interpolation towards it never seems to cause artifacts. By applying negative scaling to styles, we get the corresponding opposite or “anti-face”. It is interesting that various high-level attributes often flip between the opposites, including viewpoint, glasses, age, coloring, hair length, and often gender.

图 8.截断技巧作为样式比例函数ψ的影响。 当我们褪色ψ → 0,所有面孔都汇聚到 FFHQ 的“均值”面。 对于所有受过训练的网络来说,这个面部是相似的,并且对它的插入似乎永远不会导致伪影。通过对样式应用负缩放,我们得到相应的相反或“反面”。 有趣的是,在对立面之间翻转的各种高级属性,包括视点,眼镜,年龄,着色,头发长度和性别。

(附件3:3https://github.com/tkarras/progressive growing of gans)

For our improved baseline (B in Table 1), we make several modifications to improve the overall result quality. We replace the nearest-neighbor up/downsampling in both networks with bilinear sampling, which we implement by lowpass filtering the activations with a separable 2nd order binomial filter after each upsampling layer and before each downsampling layer [62]. We implement progressive growing the same way as Karras et al. [29], but we start from 8^2 images instead of 4^2 . For the FFHQ dataset, we switch from WGAN-GP to the non-saturating loss [21] with R1 regularization [42] using γ = 10. With R1 we found that the FID scores keep decreasing for considerably longer than with WGAN-GP, and we thus increase the training time from 12M to 25M images. We use the same learning rates as Karras et al. [29] for FFHQ, but we found that setting the learning rate to 0.002 instead of 0.003 for 512^2 and 1024^2 leads to better stability with CelebA-HQ.

对于我们改进的基线(表 1 中的 B),我们进行了几项修改以提高整体结果质量。我们用双线性采样替换两个网络中的最近邻/上/下采样,我们通过在每个上采样层之后和每个下采样层之前用可分离的二阶二次滤波器对激活进行低通滤波来实现[62]。我们以与 Karras 等人相同的方式实施渐进式增长[29],但我们从

的图像而不是

的图像开始。对于 FFHQ 数据集,我们使用γ = 10 从 R1 正则化[42]切换到WGAN-GP 到非饱和损失[21]。我们发现了 R1 与 WGANGP 相比,FID 分数持续下降的时间要长得多,因此我们将训练时间从 12M 增加到 25M。我们使用与 Karras 等人相同的学习率 [29]对于 FFHQ,但我们发现将学习率设置为0.002 而不是 0.003(对于

和

)可以使 CelebAHQ 获得更好的稳定性。

For our style-based generator (F in Table 1), we use leaky ReLU [39] with α = 0.2 and equalized learning rate [29] for all layers. We use the same feature map counts in our convolution layers as Karras et al. [29]. Our mapping network consists of 8 fully-connected layers, and the dimensionality of all input and output activations— including z and w — is 512. We found that increasing the depth of the mapping network tends to make the training unstable with high learning rates. We thus reduce the learning rate by two orders of magnitude for the mapping network, i.e., λ0 = 0.01 ·λ. We initialize all weights of the convolutional, fully-connected, and affine transform layers using N (0, 1). The biases and noise scaling factors are initialized to zero, except for the biases associated with ys that we initialize to one.

对于我们基于样式的生成器(表 1 中的 F),我们使用Leaky ReLU [39]和α = 0.2 以及所有层的均衡学习率[29]。我们在卷积层中使用与 Karras 等人相同的特征映射计数[29]。我们的映射网络由 8 个完全连接层组成,所有输入和输出激活的维度 - 包括 z 和 w - 是 512。我们发现在高学习率下增加映射网络的深度往往会使训练不稳定。因此,我们将映射网络的学习速率降低了两个数量级,即

。我们使用 N(0,1)初始化卷积,完全连接和仿射变换层的所有权重。偏差和噪声缩放因子初始化为零,除了与我们初始化为 1 的 y 相关的偏差。

Figure 9. FID and perceptual path length metrics over the course of training in our configurations B and F using the FFHQ dataset. Horizontal axis denotes the number of training images seen by the discriminator. The dashed vertical line at 8.4M images marks the point when training has progressed to full 1024^2 resolution. On the right, we show only one curve for the traditional generator’s path length measurements, because there is no discernible difference between full-path and endpoint sampling in Z (Section 4.1).

图 9.使用 FFHQ 数据集在我们的配置 B 和 F 中训练过程中的 FID 和感知路径长度指标。横轴表示鉴别器所看到的训练图像的数量。 8.4M 张图像的虚线垂直线标志着训练进展到完全

分辨率时的点。 在右边,我们只显示传统生成器路径长度测量的一条曲线,因为 Z 中的全路径和端点采样之间没有明显的差异(4.1 节)。

The classifiers used by our separability metric (Section 4.2) have the same architecture as our discriminator except that minibatch standard deviation [29] is disabled. We use the learning rate of 10^−3 , minibatch size of 8, Adam optimizer, and training length of 150,000 images. The classifiers are trained independently of generators, and the same 40 classifiers, one for each CelebA attribute, are used for measuring the separability metric for all generators. We will release the pre-trained classifier networks so that our measurements can be reproduced.

我们的可分性度量(第 4.2 节)使用的分类器与我们的鉴别器具有相同的体系结构,即禁用了小批量标准偏差[29]。我们使用

的学习率,8 的小批量大小,Adam 优化器,以及 150,000 张图像的训练长度。 分类器独立于生成器进行训练,并且相同的 40 个分类器(每个 CelebA 属性一个)用于测量所有生成器的可分性度量。 我们将发布预训练的分类器网络,以便我们的测量可以再现。

We do not use batch normalization [28], spectral normalization [43], attention mechanisms [61], dropout [57], or pixelwise feature vector normalization [29] in our networks.

我们的网络中不使用批量归一化[28],谱正则化[43],注意力机制[61],Dropout[57]或像素特征向量归一化[29]。

D. Training convergence训练融合

Figure 9 shows how the FID and perceptual path length metrics evolve during the training of our configurations B and F with the FFHQ dataset. With R1 regularization active in both configurations, FID continues to slowly decrease as the training progresses, motivating our choice to increase the training time from 12M images to 25M images. Even when the training has reached the full 1024^2 resolution, the slowly rising path lengths indicate that the improvements in FID come at the cost of a more entangled representation. Considering future work, it is an interesting question whether this is unavoidable, or if it were possible to encourage shorter path lengths without compromising the convergence of FID.

图 9 显示了在使用 FFHQ 数据集训练配置 B 和 F 期间FID 和感知路径长度度量如何演变。 R1 正则化在两种配置中都有效,随着训练的进行,FID 继续缓慢下降,激励我们选择将训练时间从 12M 图像增加到 25M 图像。 即使训练达到了

分辨率,缓慢上升的路径长度也表明 FID 的改进是以更纠缠的表示为代价的。 考虑到未来的工作,这是一个有趣的问题,这是否是不可避免的,或者是否有可能在不影响 FID 收敛的情况下鼓励更短的路径长度。

E. Other datasets其他数据集

Figures 10, 11, and 12 show an uncurated set of results for LSUN [60] BEDROOM, CARS, and CATS, respectively. In these images we used the truncation trick from Appendix B with ψ = 0.7 for resolutions 4^2 − 32^2 . The accompanying video provides results for style mixing and stochastic variation tests. As can be seen therein, in case of BEDROOM the coarse styles basically control the viewpoint of the camera, middle styles select the particular furniture, and fine styles deal with colors and smaller details of materials. In CARS the effects are roughly similar. Stochastic variation affects primarily the fabrics in BEDROOM, backgrounds and headlamps in CARS, and fur, background, and interestingly, the positioning of paws in CATS. Somewhat surprisingly the wheels of a car never seem to rotate based on stochastic inputs.

图 10,11 和 12 分别显示了 LSUN [60] BEDROOM,CARS 和 CATS 的一组未经验证的结果。在这些图像中,我们使用附录 B 中的截断技巧,对于分辨率

,使用ψ= 0.7。附带的视频提供了样式混合和随机变化测试的结果。从中可以看出,在 BEDROOM 的情况下,粗糙的样式基本上控制了相机的视点,中间样式选择特定的家具,而精细的样式处理颜色和材料的较小细节。在 CARS 中,效果大致相似。随机变化主要影响卧室中的织物,CARS 中的背景和前照灯,以及毛皮,背景,以及有趣的是,爪子在 CATS 中的定位。有点令人惊讶的是,汽车的车轮似乎永远不会根据随机输入进行旋转。

These datasets were trained using the same setup as FFHQ for the duration of 70M images for BEDROOM and CATS, and 46M for CARS. We suspect that the results for BEDROOM are starting to approach the limits of the training data, as in many images the most objectionable issues are the severe compression artifacts that have been inherited from the low-quality training data. CARS has much higher quality training data that also allows higher spatial resolution (512 × 384 instead of 2562 ), and CATS continues to be a difficult dataset due to the high intrinsic variation in poses, zoom levels, and backgrounds.

这些数据集使用与 FFHQ 相同的设置进行训练,持续时间为卧室和 CATS 的 70M 图像,以及 CARS 的 46M 图像。我们怀疑 BEDROOM 的结果开始接近训练数据的极限,因为在许多图像中,最令人反感的问题是从低质量训练数据继承的严重压缩伪像。 CARS 有高得多的质量训练数据也允许更高的空间分辨率(512 × 384 而不是

),并且由于姿势,缩放级别和背景的高内在变化,CATS 仍然是一个困难的数据集。

References参考文献

略

详见原文地址:

https://arxiv.org/pdf/1812.04948.pdf