【机器学习实战】KNN算法 python代码实现

[机器学习实战]KNN算法 Python

P31页代码

from numpy import *

import operator

def createDataSet():

group = array([[1.0, 1.1], [1.0, 1.0], [0, 0], [0, 0.1]])

labels = ['A', 'A', 'B', 'B']

return group, labels

执行截图

执行命令

python

import kNN

group, labels = kNN.createDataSet()

group

labels

2.1.2 实施kNN算法

https://blog.csdn.net/niuwei22007/article/details/49703719#:~:text=%23%20index%20%3D%20sortedDistIndicies%20%5Bi%5D%E6%98%AF%E7%AC%ACi%E4%B8%AA%E6%9C%80%E7%9B%B8%E8%BF%91%E7%9A%84%E6%A0%B7%E6%9C%AC%E4%B8%8B%E6%A0%87%20%23%20voteIlabel%20%3D,%5BsortedDistIndicies%20%5Bi%5D%5D%20%23%20classCount.get%20%28voteIlabel%2C%200%29%E8%BF%94%E5%9B%9EvoteIlabel%E7%9A%84%E5%80%BC%EF%BC%8C%E5%A6%82%E6%9E%9C%E4%B8%8D%E5%AD%98%E5%9C%A8%EF%BC%8C%E5%88%99%E8%BF%94%E5%9B%9E0%20%23%20%E7%84%B6%E5%90%8E%E5%B0%86%E7%A5%A8%E6%95%B0%E5%A2%9E1

from numpy import *

import operator

def createDataSet():

group = array([[1.0, 1.1], [1.0, 1.0], [0, 0], [0, 0.1]])

labels = ['A', 'A', 'B', 'B']

return group, labels

def classify0(inX, dataSet, labels, k):

"""

:param inX:是输入的测试样本,是一个[x, y]样式的

:param dataSet:是训练样本集

:param labels:是训练样本标签

:param k: 选择距离最近的k个点 是top k最相近的

:return:

"""

dataSetSize = dataSet.shape[0] # 获取数据集的行数 shape【1】获取列数

diffMat = tile(inX, (dataSetSize, 1)) - dataSet # 把inX生成 (dataSize行,1列)的数组 并求与dataSize的差值

sqDiffMat = diffMat ** 2 # 差值求平方

sqDistances = sqDiffMat.sum(axis=1) # 矩阵的每一行向量相加

distances = sqDistances ** 0.5 # 向量和开方

sortedDistIndicies = distances.argsort() # argsort函数返回的是数组值从小到大的索引值 按照升序进行快速排序,返回的是原数组的下标

# 存放最终的分类结果及相应的结果投票数

classCount = {}

# 投票过程,就是统计前k个最近的样本所属类别包含的样本个数

for i in range(k):

# index = sortedDistIndicies[i]是第i个最相近的样本下标

# voteIlabel = labels[index]是样本index对应的分类结果('A' or 'B')

voteIlabel = labels[sortedDistIndicies[i]]

# classCount.get(voteIlabel, 0)返回voteIlabel的值,如果不存在,则返回0

# 然后将票数增1

classCount[voteIlabel] = classCount.get(voteIlabel, 0) + 1

# 把分类结果进行排序,然后返回得票数最多的分类结果

sortedClassCount = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)

# classCount.iteritems()将classCount字典分解为元组列表,

# operator.itemgetter(1)按照第二个元素的次序对元组进行排序,reverse=True是逆序,即按照从大到小的顺序排列

return sortedClassCount[0][0]

执行截图

执行命令

kNN.classify0([0,0],group,labels,3)

2.2 示例:使用 k-近邻算法改进约会网站的配对效果

实现代码

def file2matrix(filename):

"""

:param filename: 文件名

:return:

returnMat - 特征矩阵

classLabelVector - 分类Label向量

"""

fr = open(filename) # 打开文件

arrayOLines = fr.readlines() # 读取所有文件

numberOfLines = len(arrayOLines) # 获取文件长度

returnMat = zeros((numberOfLines, 3)) # 设定几行几类的0矩阵

classLabelVector = []

index = 0

for line in arrayOLines:

line = line.strip() # s.strip(rm),当rm空时,默认删除空白符(包括'\n','\r','\t',' ')

listFromLines = line.split('\t')

# 根据文本中标记的喜欢的程度进行分类,1代表不喜欢,2代表魅力一般,3代表极具魅力

# 对于datingTestSet2.txt 最后的标签是已经经过处理的 标签已经改为了1, 2, 3

# 将数据前三列提取出来,存放到returnMat的NumPy矩阵中,也就是特征矩阵

returnMat[index, :] = listFromLines[0: 3]

if listFromLines[-1] == 'didntLike':

classLabelVector.append(1)

elif listFromLines[-1] == 'smallDoses':

classLabelVector.append(2)

elif listFromLines[-1] == 'largeDoses':

classLabelVector.append(3)

# classLabelVector.append(int(listFromLines - 1))

index += 1

return returnMat, classLabelVector

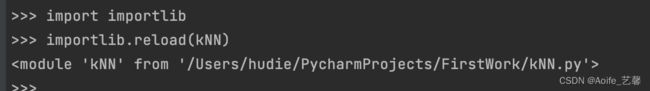

执行截图

执行命令

import importlib

importlib.reload(kNN)

datingDataMat, datingLabels = kNN.file2matrix('datingTestSet.txt')

datingDataMat

datingLabels[0:20]

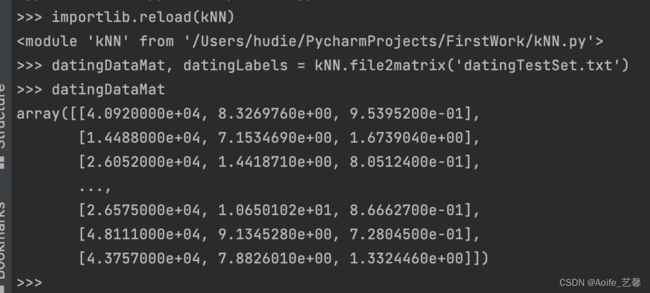

2.2.2 分析数据:使用 Matplotlib 创建散点图

执行命令代码

if __name__ == "__main__":

group, labels = createDataSet()

classify0([0, 0], group, labels, 3)

datingDataMat, datingLabels = file2matrix('datingTestSet.txt')

import matplotlib

import matplotlib.pyplot as plt

matplotlib.use('TkAgg') # 必须显式指明matplotlib的后端

fig = plt.figure()

ax = fig.add_subplot(111)

ax.scatter(datingDataMat[:, 1], datingDataMat[:, 2])

plt.show()

执行命令

import matplotlib

import matplotlib.pyplot as plt

fig = plt.figure()

ax = fig.add_subplot(111)

ax.scatter(datingDataMat[:,1], datingDataMat[:,2])

plt.show()

执行截图

执行命令代码

if __name__ == "__main__":

group, labels = createDataSet()

classify0([0, 0], group, labels, 3)

datingDataMat, datingLabels = file2matrix('datingTestSet.txt')

import matplotlib

import matplotlib.pyplot as plt

matplotlib.use('TkAgg') # 必须显式指明matplotlib的后端

fig = plt.figure()

ax = fig.add_subplot(111)

# ax.scatter(datingDataMat[:, 1], datingDataMat[:, 2])

ax.scatter(datingDataMat[:, 1], datingDataMat[:, 2], 15.0 * array(datingLabels) ,15.0 * array(datingLabels))

plt.show()

执行截图

归一化处理

执行代码

group, labels = createDataSet()

classify0([0, 0], group, labels, 3)

datingDataMat, datingLabels = file2matrix('datingTestSet.txt')

normDataSet, ranges, minVals = autoNorm(datingDataMat)

print(normDataSet)

print("----------------------------")

print(ranges)

print("----------------------------")

print(minVals)

# 执行代码

if __name__ == "__main__":

group, labels = createDataSet()

classify0([0, 0], group, labels, 3)

datingDataMat, datingLabels = file2matrix('datingTestSet.txt')

normDataSet, ranges, minVals = autoNorm(datingDataMat)

print(normDataSet)

print("----------------------------")

print(ranges)

print("----------------------------")

print(minVals)

执行截图

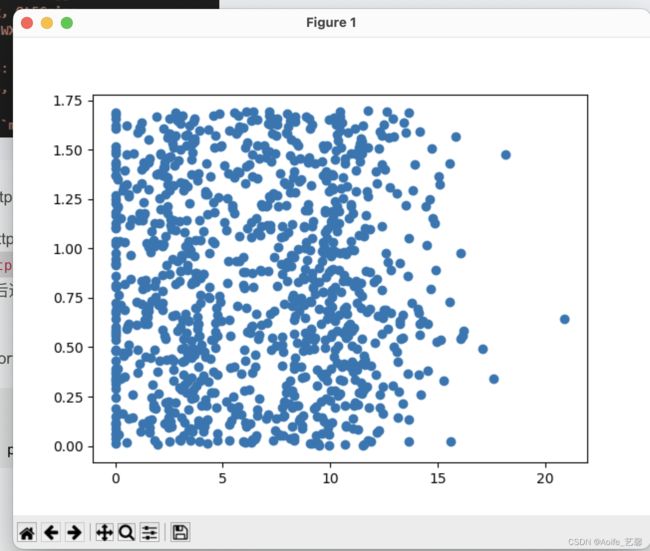

测试算法:作为完整程序验证分类器

执行代码

def datingClassTest():

hoRatio = 0.01

datingDataMat, datingLabels = file2matrix('datingTestSet.txt')

normMat, ranges, minVals = autoNorm(datingDataMat)

m = normDataSet.shape[0]

numTestVecs = int(m * hoRatio)

errorCount = 0.0

for i in range(numTestVecs):

classifierResult = classify0(normMat[i, :], normMat[numTestVecs:m, :], \

datingLabels[numTestVecs: m], 3)

print("分类器解析得:%d 正确为: %d" % (classifierResult, datingLabels[i]))

if (classifierResult != datingLabels[i]):

errorCount += 1.0

print("错误率 %f" % (errorCount / float(numTestVecs)))

# 执行代码

group, labels = createDataSet()

classify0([0, 0], group, labels, 3)

datingDataMat, datingLabels = file2matrix('datingTestSet.txt')

normDataSet, ranges, minVals = autoNorm(datingDataMat)

datingClassTest()

执行截图

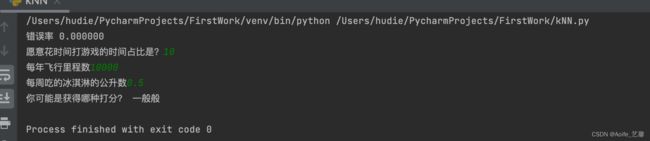

程序清单2-5 约会网站预测函数

执行代码

def classifyPerson():

resultList = ['一点也不喜欢', '一般般', '很感兴趣']

percentTats = float(input( \

"愿意花时间打游戏的时间占比是?"))

ffMiles = float(input("每年飞行里程数"))

iceCream = float(input("每周吃的冰淇淋的公升数"))

datingDataMat, datingLabels = file2matrix('datingTestSet.txt')

normMat, ranges, minVals = autoNorm(datingDataMat)

inArr = array([ffMiles, percentTats, iceCream])

classifierResult = classify0((inArr - minVals) / ranges, normMat, datingLabels, 3)

print("你可能是获得哪种打分?", resultList[classifierResult - 1])

# 执行代码

if __name__ == "__main__":

group, labels = createDataSet()

classify0([0, 0], group, labels, 3)

datingDataMat, datingLabels = file2matrix('datingTestSet.txt')

normDataSet, ranges, minVals = autoNorm(datingDataMat)

datingClassTest()

classifyPerson()

执行结果截图

执行手写识别系统

函数代码

def img2vector(filename):

returnVect = zeros((1, 1024))

fr = open(filename)

for i in range(32):

lineStr = fr.readline()

for j in range(32):

returnVect[0, 32 * i + j] = int(lineStr[j])

return returnVect

执行代码

group, labels = createDataSet()

classify0([0, 0], group, labels, 3)

testVector = img2vector('testDigits/0_13.txt')

print(testVector[0, 0:31])

print(testVector[0, 32:63])

执行截图

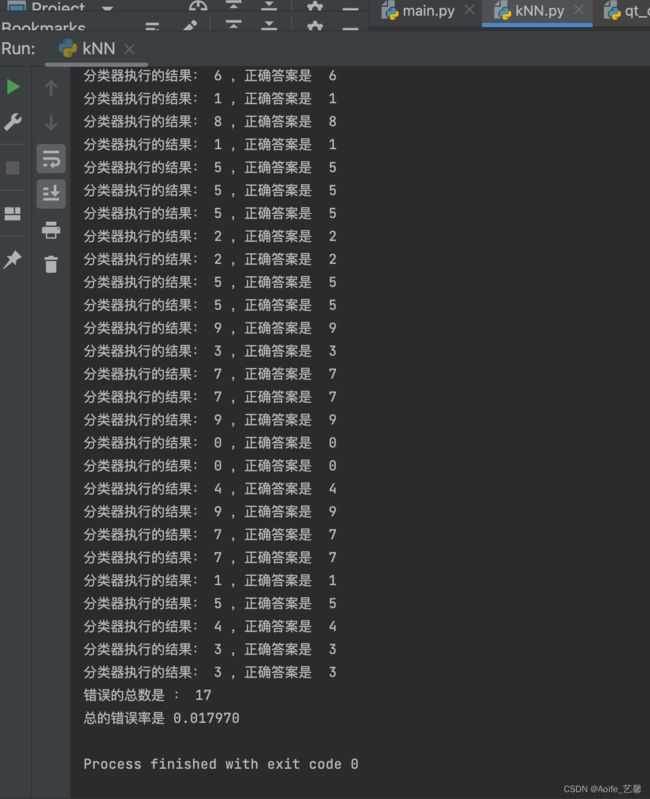

使用K近邻算法识别手写数字

执行代码

def handwritingClassTest():

hwLabels = []

trainingFileList = listdir('trainingDigits')

m = len(trainingFileList)

trainingMat = zeros((m, 1024))

for i in range(m):

fileNameStr = trainingFileList[i]

fileStr = fileNameStr.split('.')[0]

classNumStr = int(fileStr.split('_')[0])

hwLabels.append(classNumStr)

trainingMat[i, :] = img2vector('trainingDigits/%s' % (fileNameStr))

testFileList = listdir('testDigits')

errorCount = 0.0

mTest = len(testFileList)

for i in range(mTest):

fileNameStr = testFileList[i]

fileStr = fileNameStr.split('.')[0]

classNumStr = int(fileStr.split('_')[0])

vectorUnderTest = img2vector('trainingDigits/%s' % (fileNameStr))

classifierResult = classify0(vectorUnderTest, trainingMat, hwLabels, 5)

print("分类器执行的结果: %s ,正确答案是 %s " % (classifierResult, classNumStr))

if(classifierResult != classNumStr):

errorCount += 1.0

print("错误的总数是 : %d" % (errorCount))

print("总的错误率是 %f" % (errorCount / float(mTest)))

执行代码调用

handwritingClassTest()

执行结果截图

https://cdn.jsdelivr.net/gh/user/repo@version/file

https://cdn.jsdelivr.net/gh/hudiework/img

bid=A4-0lFjCD3I; gr_user_id=a3d3fc3d-8c94-413b-9a67-29970d4bc172; __gads=ID=9b650bed12d46173-224ad6e93fd60073:T=1662603166:RT=1662603166:S=ALNI_MZWO3aV0mpoOiBgmtHEk889_OsEXw; _ga=GA1.1.347996523.1662603166; _ga_RXNMP372GL=GS1.1.1662626127.1.1.1662626167.20.0.0; douban-fav-remind=1; viewed="30332976_26417896"; ll="118123"; dbcl2="148288304:rjulBCFYct0"; ck=Pzjq; _pk_ref.100001.4cf6=["","",1664352054,"https://open.weixin.qq.com/"]; _pk_ses.100001.4cf6=*; __utma=30149280.347996523.1662603166.1664194955.1664352054.6; __utmb=30149280.0.10.1664352054; __utmc=30149280; __utmz=30149280.1664352054.6.6.utmcsr=open.weixin.qq.com|utmccn=(referral)|utmcmd=referral|utmcct=/; __utma=223695111.347996523.1662603166.1664194956.1664352054.2; __utmb=223695111.0.10.1664352054; __utmc=223695111; __utmz=223695111.1664352054.2.2.utmcsr=open.weixin.qq.com|utmccn=(referral)|utmcmd=referral|utmcct=/; push_noty_num=0; push_doumail_num=0; __gpi=UID=00000993488750fc:T=1662603166:RT=1664352146:S=ALNI_MavDF8AYmPstOI4vsGc2dGLWY-b3A; _vwo_uuid_v2=DCE02D464D392AEAE108394912A092925|6023f68d9637ae7c331f184199955148; _pk_id.100001.4cf6=9221a804633b8bfd.1664194956.2.1664352419.1664195697.