Building ResNet50 in PyTorch to classify 120 dog breeds

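This project comes from a Kaggle competition.

Project overview:

Who's a good dog? Who likes ear scratches? Well, it seems those fancy deep neural networks don't have all the answers. However, maybe they can answer the question we all ask when meeting a four-legged stranger: what kind of good pup is that?

In this playground competition, you are given a strictly canine subset of ImageNet in order to practice fine-grained image classification. How well can you tell your Norfolk Terriers from your Norwich Terriers?

Dataset overview:

You are given a training set and a test set of dog images. Each image has a filename that is its unique id. The dataset covers 120 dog breeds. The goal of the competition is to build a classifier that can determine a dog's breed from a single photo. The list of breeds is as follows: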

affenpinscher

afghan_hound

african_hunting_dog

airedale

american_staffordshire_terrier

appenzeller

australian_terrier

basenji

basset

beagle

bedlington_terrier

bernese_mountain_dog

black-and-tan_coonhound

blenheim_spaniel

bloodhound

bluetick

border_collie

border_terrier

borzoi

boston_bull

bouvier_des_flandres

boxer

brabancon_griffon

briard

brittany_spaniel

bull_mastiff

cairn

cardigan

chesapeake_bay_retriever

chihuahua

chow

clumber

cocker_spaniel

collie

curly-coated_retriever

dandie_dinmont

dhole

dingo

doberman

english_foxhound

english_setter

english_springer

entlebucher

eskimo_dog

flat-coated_retriever

french_bulldog

german_shepherd

german_short-haired_pointer

giant_schnauzer

golden_retriever

gordon_setter

great_dane

great_pyrenees

greater_swiss_mountain_dog

groenendael

ibizan_hound

irish_setter

irish_terrier

irish_water_spaniel

irish_wolfhound

italian_greyhound

japanese_spaniel

keeshond

kelpie

kerry_blue_terrier

komondor

kuvasz

labrador_retriever

lakeland_terrier

leonberg

lhasa

malamute

malinois

maltese_dog

mexican_hairless

miniature_pinscher

miniature_poodle

miniature_schnauzer

newfoundland

norfolk_terrier

norwegian_elkhound

norwich_terrier

old_english_sheepdog

otterhound

papillon

pekinese

pembroke

pomeranian

pug

redbone

rhodesian_ridgeback

rottweiler

saint_bernard

saluki

samoyed

schipperke

scotch_terrier

scottish_deerhound

sealyham_terrier

shetland_sheepdog

shih-tzu

siberian_husky

silky_terrier

soft-coated_wheaten_terrier

staffordshire_bullterrier

standard_poodle

standard_schnauzer

sussex_spaniel

tibetan_mastiff

tibetan_terrier

toy_poodle

toy_terrier

vizsla

walker_hound

weimaraner

welsh_springer_spaniel

west_highland_white_terrier

whippet

wire-haired_fox_terrier

yorkshire_terrier

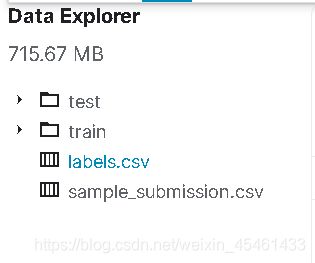

File structure

As shown:

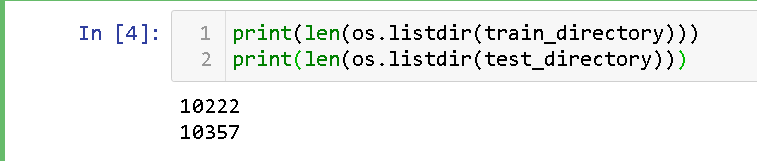

The train folder contains 10222 training images.

The test folder contains 10357 test images.

As shown:

The labels.csv file maps each image in the train folder to its label.

As shown:

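Preparing the dataset

For many beginners this step is a constant headache. There are so many ways to load data that it is hard to know which one to learn, and reading about all of them just makes your head spin. My suggestion is to learn these four:

pandas (Kaggle),

lxml (object detection),

PIL (images),

opencv (images and video).

Between them they cover almost everything you will need.

For this project, since I am using PyTorch, I read the data by subclassing torch.utils.data.Dataset (you must override the __getitem__ and __len__ methods). Here is the code: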

from torch.utils.data import Dataset
from PIL import Image


class MyData(Dataset):
    def __init__(self, txt_path, transform=None):
        super(MyData, self).__init__()
        self.txt_path = txt_path
        self.transform = transform
        self.imgs = []  # (image path, label) pairs
        print("Reading file {0}".format(txt_path))
        with open(txt_path, 'r') as f:
            for line in f:
                fields = line.strip().split()
                if len(fields) < 2:
                    continue  # skip blank or malformed lines
                self.imgs.append((fields[0], fields[1]))
        print("Finished reading, {0} entries".format(len(self.imgs)))

    def __getitem__(self, item):
        img_path, label = self.imgs[item]
        img = Image.open(img_path).convert("RGB")
        if self.transform is not None:
            img = self.transform(img)
        label = int(label)
        return img, label

    def __len__(self):
        return len(self.imgs)
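MyData reads a plain-text index in which every line holds an image path and an integer label separated by whitespace. The post does not show how train.txt and test.txt were produced, so the following is only a sketch of one possible way to build them from labels.csv. It assumes that labels.csv has the columns id and breed and that images are stored as <id>.jpg; the helper name build_index, the 90/10 split and the fixed seed are hypothetical choices, not the author's.

```python
import csv
import os
import random


def build_index(labels_csv, image_dir, train_txt, val_txt, val_ratio=0.1, seed=0):
    """Write "<image path> <label index>" lines for MyData (hypothetical helper)."""
    with open(labels_csv, newline="") as f:
        rows = list(csv.DictReader(f))  # assumed columns: id, breed

    # Map breed names to integer labels in alphabetical order.
    breeds = sorted({row["breed"] for row in rows})
    breed_to_idx = {breed: i for i, breed in enumerate(breeds)}

    # Shuffle reproducibly, then hold out a fraction for validation.
    random.Random(seed).shuffle(rows)
    n_val = int(len(rows) * val_ratio)
    splits = {val_txt: rows[:n_val], train_txt: rows[n_val:]}

    for path, subset in splits.items():
        with open(path, "w") as out:
            for row in subset:
                img_path = os.path.join(image_dir, row["id"] + ".jpg")
                out.write("{0} {1}\n".format(img_path, breed_to_idx[row["breed"]]))
    return breed_to_idx
```

A call such as build_index("./data/labels.csv", "./data/train", "./data/train.txt", "./data/test.txt") would produce the two files the training script reads, with those paths again being assumptions. Because MyData splits each line on whitespace, image paths must not contain spaces.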

Training

import torch
from torchvision import transforms
from torchvision import models
from torch.utils.data import DataLoader
from torch import optim
import time
import os
import visdom
from mydata import MyData
from Parctice_resnet import get_Resnet50

GPU = torch.cuda.is_available()
if GPU:
    print("Training on GPU.......")
else:
    print("Training on CPU.......")
device = torch.device("cuda:0" if GPU else "cpu")
print("Device in use: {0}".format(device))

# Create the visdom windows
vz = visdom.Visdom(use_incoming_socket=True, port=58504)
vz.line([0.], [0.], win="train_loss", opts={
    "title": "train_loss"
})
vz.line([0.], [0.], win="val_loss", opts={
    "title": "val_loss"
})
vz.line([0.], [0.], win="val_acc", opts={
    "title": "val_acc"
})

# Training hyperparameters
train_parameters = {
    "learning_rate": 0.1,
    "epoch": 120,
    "batch_size": 32
}

# Training data augmentation
train_transform = transforms.Compose([
    # # Random crop
    # transforms.RandomCrop(300),
    # Random crop covering 80-100% of the image, resized to 256x256
    transforms.RandomResizedCrop(size=256, scale=(0.8, 1)),
    # Center crop
    transforms.CenterCrop(224),
    # Horizontal flip
    transforms.RandomHorizontalFlip(0.5),
    # Vertical flip
    transforms.RandomVerticalFlip(0.5),
    # Rotate by a random angle of up to 45 degrees
    transforms.RandomRotation(45),
    # Convert to Tensor
    transforms.ToTensor(),
    # Normalization
    # transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
])

# Validation data preprocessing
val_transform = transforms.Compose([
    # Center crop
    transforms.CenterCrop(224),
    # Convert to Tensor
    transforms.ToTensor(),
    # Normalization
    # transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
])

# Load the data
train_db = MyData("./data/train.txt", transform=train_transform)
val_db = MyData("./data/test.txt", transform=val_transform)
train_db_loader = DataLoader(train_db, shuffle=True, batch_size=train_parameters["batch_size"])
# Validation results do not depend on sample order, so shuffling is not needed
val_db_loader = DataLoader(val_db, shuffle=False, batch_size=train_parameters["batch_size"])

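# Build the model
# model = get_Resnet50()
# pretrained=True loads ImageNet weights (newer torchvision releases use the weights= argument instead)
model = models.resnet50(pretrained=True)
# Freeze the pretrained layers
for param in model.parameters():
    param.requires_grad = False
# Replace the last layer; the new layer is trainable by default
in_future = model.fc.in_features
model.fc = torch.nn.Sequential(torch.nn.Linear(in_future, 120), torch.nn.LogSoftmax(dim=1))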

# Optimizer, learning-rate schedule and loss
model.to(device)
optimizer = optim.SGD(model.parameters(), lr=train_parameters["learning_rate"])
schedule = optim.lr_scheduler.MultiStepLR(optimizer, milestones=[5, 30, 70], gamma=0.1, last_epoch=-1)  # decay the learning rate
loss_function = torch.nn.NLLLoss()

# Checkpoint
start_epoch = 0
check_point_path = "./checkpoint/best.pth"
save_path = check_point_path
if os.path.exists(check_point_path):
    print("Checkpoint found, resuming......")
    checkpoint = torch.load(check_point_path, map_location=device)
    start_epoch = checkpoint["start_epoch"]
    model.load_state_dict(checkpoint["weight"])
    # Restore state dicts rather than a pickled optimizer object, so the
    # optimizer keeps pointing at this model's parameters
    optimizer.load_state_dict(checkpoint["optimizer"])
    schedule.load_state_dict(checkpoint["schedule"])
    print("Checkpoint loaded")
else:
    print("No checkpoint found, training from scratch")
    if not os.path.exists("./checkpoint/"):
        print("Creating checkpoint folder at {0}".format("./checkpoint/"))
        os.mkdir("./checkpoint/")

# Start training
print("Start training..................")
best_acc = 0
best_loss = 0
for epoch in range(start_epoch, train_parameters["epoch"]):
    # After 70 epochs, start training all layers
    if epoch == 70:
        for param in model.parameters():
            param.requires_grad = True
    model.train()
    start_time = time.time()
    train_loss_total = 0.0
    for step, (x, y) in enumerate(train_db_loader):
        x = x.to(device)
        y = y.to(device)
        pred = model(x)
        train_loss = loss_function(pred, y)
        optimizer.zero_grad()
        train_loss.backward()
        optimizer.step()
        # .item() stores a plain float instead of holding on to the autograd graph
        train_loss_total += train_loss.item()
    avg_loss = train_loss_total / (step + 1)
    current_lr = optimizer.param_groups[0]["lr"]
    print("epoch:{0} train_loss:{1:.2f} lr:{2}".format(epoch, avg_loss, current_lr))
    vz.line([avg_loss], [epoch], win="train_loss", update="append")

    # Validation
    model.eval()
    with torch.no_grad():
        val_loss_total = 0.0
        val_correct_total = 0
        for val_step, (val_x, val_y) in enumerate(val_db_loader):
            val_x = val_x.to(device)
            val_y = val_y.to(device)
            pred = model(val_x)
            val_loss = loss_function(pred, val_y)
            val_loss_total += val_loss.item()
            val_correct_total += torch.eq(torch.argmax(pred, dim=-1), val_y).sum().item()
        avg_val_loss = val_loss_total / (val_step + 1)
        # Accuracy in percent over all validation samples
        avg_val_correct = 100 * val_correct_total / len(val_db)
    vz.line([avg_val_loss], [epoch], win="val_loss", update="append")
    vz.line([avg_val_correct], [epoch], win="val_acc", update="append")
    print("epoch:{0} val_loss:{1:.2f} acc:{2:.2f}".format(epoch, avg_val_loss, avg_val_correct))

    # Step the schedule once per epoch, after the optimizer updates
    schedule.step()
    end_time = time.time()
    print("epoch_time_cost: {0}s".format(end_time - start_time))

    if avg_val_correct > best_acc:
        checkpoint = {
            "weight": model.state_dict(),
            "start_epoch": epoch + 1,  # resume from the next epoch
            "optimizer": optimizer.state_dict(),
            "schedule": schedule.state_dict()
        }
        best_acc = avg_val_correct
        best_loss = avg_loss
        torch.save(checkpoint, save_path)

print("Training finished......")
print("best_acc:{0:.2f} best_loss:{1:.2f}".format(best_acc, best_loss))
print("Checkpoint saved to: {0}".format(save_path))
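The model ends in LogSoftmax and the criterion is NLLLoss. That pairing computes the same quantity as torch.nn.CrossEntropyLoss applied to raw logits, which the small check below illustrates on random numbers (the tensors are made-up stand-ins for a batch of four predictions over 120 classes).

```python
import torch

torch.manual_seed(0)
logits = torch.randn(4, 120)              # fake raw scores for a batch of 4
targets = torch.tensor([3, 17, 42, 119])  # fake class indices

# The combination used in the post: LogSoftmax in the model, NLLLoss as the criterion.
log_probs = torch.nn.LogSoftmax(dim=1)(logits)
nll = torch.nn.NLLLoss()(log_probs, targets)

# CrossEntropyLoss applies log-softmax internally, so it takes raw logits.
ce = torch.nn.CrossEntropyLoss()(logits, targets)

print(torch.allclose(nll, ce))
```

Either formulation works. Keeping LogSoftmax inside the model has the side effect that calling exp() on the model output gives class probabilities directly.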

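Why call PyTorch's built-in ResNet50 model directly?

The dataset Kaggle provides for this task is small, and ResNet50 is a very deep network. My explanation is that during backpropagation the gradient shrinks toward zero after a few layers, so the early layers are barely trained and the network cannot learn well. That is why I use transfer learning. A model you write yourself has no pretrained parameters, so it does not meet the precondition for transfer learning. I therefore call the ResNet50 model that ships with PyTorch, which comes with weights pretrained on ImageNet and can be used as is. If you are interested, try writing a ResNet50 yourself.

Here is the ResNet50 model I wrote myself.

If you train it from scratch, you will find that the loss barely converges and the accuracy hardly changes.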

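How I trained this model

Training hyperparameters

# Training hyperparameters
train_parameters = {
    "learning_rate": 0.1,
    "epoch": 120,
    "batch_size": 32
}

Load the pretrained model by passing pretrained=True

model = models.resnet50(pretrained=True)

For the first 70 epochs, every layer except the last one is frozen

The learning rate changes as follows (in epochs):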

0~5: 0.1

5~30: 0.01

30~70: 0.001

# Freeze the earlier layers
for param in model.parameters():
    param.requires_grad = False
# Replace the last layer
in_future = model.fc.in_features
model.fc = torch.nn.Sequential(torch.nn.Linear(in_future, 120), torch.nn.LogSoftmax(dim=1))

For epochs 70~120 the earlier layers are unfrozen and the learning rate is lowered to 0.0001

# After 70 epochs, start training all layers
if epoch == 70:
    for param in model.parameters():
        param.requires_grad = True
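The learning rates listed in this section follow from a base rate of 0.1, milestones at epochs 5, 30 and 70, and a decay factor of 0.1. As a rough illustration, the step schedule can be written in closed form in plain Python. The helper name lr_at_epoch is hypothetical, and the sketch assumes the scheduler is stepped once at the end of every epoch.

```python
from bisect import bisect_right


def lr_at_epoch(epoch, base_lr=0.1, milestones=(5, 30, 70), gamma=0.1):
    """Learning rate used during `epoch` (0-based) under a step schedule."""
    # Each milestone that has been reached multiplies the rate by gamma once.
    return base_lr * gamma ** bisect_right(milestones, epoch)


for epoch in (0, 4, 5, 29, 30, 69, 70, 119):
    print(epoch, "{0:.4f}".format(lr_at_epoch(epoch)))
```

Epochs 0 to 4 run at 0.1, epochs 5 to 29 at 0.01, epochs 30 to 69 at 0.001, and everything from epoch 70 onward at 0.0001, so the unfreezing at epoch 70 coincides with the last drop in learning rate.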

Training curves

To be updated tomorrow