MXNet-图像分类(gluon版本)【附源码】

文章目录

- 前言

- 图像的发展史及意义

- 一、图像分类模型构建

-

- 1.LeNet的实现(1994年)

- 2.AlexNet的实现(2012年)

- 3.VGG的实现(2014年)

- 4.GoogleNet的实现(2014年)

- 5.ResNet的实现(2015年)

- 6.MobileNet_V1的实现(2017年)

- 7.MobileNet_V2的实现(2019年)

- 8.MobileNet_V3的实现(2019年)

- 9.DenseNet的实现(2017年)

- 10.ResNest的实现(2020年)

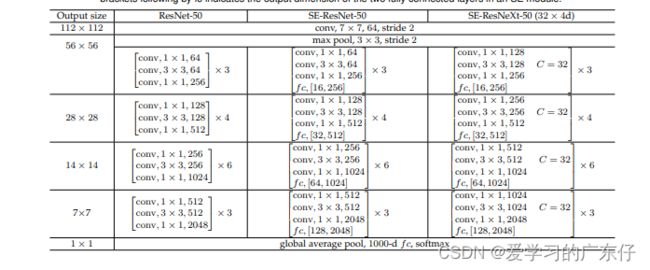

- 11.ResNext的实现(2016年)

- 12.SeNet的实现(2017年)

- 13.Xception的实现

- 14.InceptionV3的实现

- 15.SqueezeNet的实现

- 16.NinNet的实现

- 17.NasNet的实现

- 18.HrNet的实现

- 19.DLA的实现

- 二、数据集的准备

-

- 1.数据集描述

- 2.数据集准备

- 三、模型训练/预测

-

- 1.基础信息配置

- 2.数据加载器

- 3.模型构建

- 4.模型训练

- 5.模型预测

- 四、模型主入口

前言

本文基于mxnet的gluon实现图像分类,本文基于多个网络结构实现图像分类

图像的发展史及意义

在神经网络和深度学习领域,Yann LeCun可以说是元老级人物。他于1998年在 IEEE 上发表了一篇42页的长文,文中首次提出卷积-池化-全连接的神经网络结构,由LeCun提出的七层网络命名LeNet5,因而也为他赢得了卷积神经网络之父的美誉。CNN在近几年的发展历程中,从经典的LeNet5网络到最近号称最好的图像分类网络EfficientNet,大量学者不断的做出了努力和创新。

在后面的一些图像算法过程中,图像分类的网络结构起到了举足轻重的作用,他们都依赖于分类网络做主干特征提取网络,有一定的程度体现出特征提取能力。

一、图像分类模型构建

本文基于mxnet实现图像分类的网络结构,下边对各个网络结构进行介绍,后续只需要稍作修改即可完成网络结构的替换

1.LeNet的实现(1994年)

论文地址:https://ieeexplore.ieee.org/document/726791

网络结构:

![]()

LeNet由Yann Lecun 提出,是一种经典的卷积神经网络,是现代卷积神经网络的起源之一。Yann将该网络用于邮局的邮政的邮政编码识别,有着良好的学习和识别能力。LeNet又称LeNet-5,具有一个输入层,两个卷积层,两个池化层,3个全连接层(其中最后一个全连接层为输出层)。

LeNet-5是一种经典的卷积神经网络结构,于1998年投入实际使用中。该网络最早应用于手写体字符识别应用中。普遍认为,卷积神经网络的出现开始于LeCun 等提出的LeNet 网络,可以说LeCun 等是CNN 的缔造者,而LeNet-5 则是LeCun 等创造的CNN 经典之作。

LeNet5 一共由7 层组成,分别是C1、C3、C5 卷积层,S2、S4 降采样层(降采样层又称池化层),F6 为一个全连接层,输出是一个高斯连接层,该层使用softmax 函数对输出图像进行分类。为了对应模型输入结构,将MNIST 中的28* 28 的图像扩展为32* 32 像素大小。下面对每一层进行详细介绍。C1 卷积层由6 个大小为5* 5 的不同类型的卷积核组成,卷积核的步长为1,没有零填充,卷积后得到6 个28* 28 像素大小的特征图;S2 为最大池化层,池化区域大小为2* 2,步长为2,经过S2 池化后得到6 个14* 14 像素大小的特征图;C3 卷积层由16 个大小为5* 5 的不同卷积核组成,卷积核的步长为1,没有零填充,卷积后得到16 个10* 10 像素大小的特征图;S4 最大池化层,池化区域大小为2* 2,步长为2,经过S2 池化后得到16 个5* 5 像素大小的特征图;C5 卷积层由120 个大小为5* 5 的不同卷积核组成,卷积核的步长为1,没有零填充,卷积后得到120 个1* 1 像素大小的特征图;将120 个1* 1 像素大小的特征图拼接起来作为F6 的输入,F6 为一个由84 个神经元组成的全连接隐藏层,激活函数使用sigmoid 函数;最后一层输出层是一个由10 个神经元组成的softmax 高斯连接层,可以用来做分类任务。

代码实现(gluon):

class _LeNet(nn.HybridBlock):

def __init__(self, classes=1000, **kwargs):

super(_LeNet, self).__init__(**kwargs)

with self.name_scope():

self.features = nn.HybridSequential(prefix='')

with self.features.name_scope():

self.features.add(nn.Conv2D(6, kernel_size=5))

self.features.add(nn.BatchNorm())

self.features.add(nn.Activation('sigmoid'))

self.features.add(nn.MaxPool2D(pool_size=2, strides=2))

self.features.add(nn.Conv2D(16, kernel_size=5))

self.features.add(nn.BatchNorm())

self.features.add(nn.Activation('sigmoid'))

self.features.add(nn.MaxPool2D(pool_size=2, strides=2))

self.features.add(nn.Dense(120))

self.features.add(nn.BatchNorm())

self.features.add(nn.Activation('sigmoid'))

self.features.add(nn.Dense(84))

self.features.add(nn.BatchNorm())

self.features.add(nn.Activation('sigmoid'))

self.features.add()

self.features.add()

self.features.add()

self.features.add()

self.output = nn.Dense(classes)

def hybrid_forward(self, F, x):

x = self.features(x)

x = self.output(x)

return x

def get_lenet(class_num):

return _LeNet(classes=class_num)

2.AlexNet的实现(2012年)

论文地址:https://proceedings.neurips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

网络结构:

![]()

AlexNet是2012年ImageNet竞赛冠军获得者Hinton和他的学生Alex Krizhevsky设计的。也是在那年之后,更多的更深的神经网络被提出,比如优秀的vgg,GoogLeNet。 这对于传统的机器学习分类算法而言,已经相当的出色。

AlexNet中包含了几个比较新的技术点,也首次在CNN中成功应用了ReLU、Dropout和LRN等Trick。同时AlexNet也使用了GPU进行运算加速。

AlexNet将LeNet的思想发扬光大,把CNN的基本原理应用到了很深很宽的网络中。AlexNet主要使用到的新技术点如下:

- 成功使用ReLU作为CNN的激活函数,并验证其效果在较深的网络超过了Sigmoid,成功解决了Sigmoid在网络较深时的梯度弥散问题。虽然ReLU激活函数在很久之前就被提出了,但是直到AlexNet的出现才将其发扬光大。

- 训练时使用Dropout随机忽略一部分神经元,以避免模型过拟合。Dropout虽有单独的论文论述,但是AlexNet将其实用化,通过实践证实了它的效果。在AlexNet中主要是最后几个全连接层使用了Dropout。

- 在CNN中使用重叠的最大池化。此前CNN中普遍使用平均池化,AlexNet全部使用最大池化,避免平均池化的模糊化效果。并且AlexNet中提出让步长比池化核的尺寸小,这样池化层的输出之间会有重叠和覆盖,提升了特征的丰富性。

- 提出了LRN层,对局部神经元的活动创建竞争机制,使得其中响应比较大的值变得相对更大,并抑制其他反馈较小的神经元,增强了模型的泛化能力。

- 使用CUDA加速深度卷积网络的训练,利用GPU强大的并行计算能力,处理神经网络训练时大量的矩阵运算。AlexNet使用了两块GTX 580 GPU进行训练,单个GTX 580只有3GB显存,这限制了可训练的网络的最大规模。因此作者将AlexNet分布在两个GPU上,在每个GPU的显存中储存一半的神经元的参数。因为GPU之间通信方便,可以互相访问显存,而不需要通过主机内存,所以同时使用多块GPU也是非常高效的。同时,AlexNet的设计让GPU之间的通信只在网络的某些层进行,控制了通信的性能损耗。

- 数据增强,随机地从256256的原始图像中截取224224大小的区域(以及水平翻转的镜像),相当于增加了2*(256-224)^2=2048倍的数据量。如果没有数据增强,仅靠原始的数据量,参数众多的CNN会陷入过拟合中,使用了数据增强后可以大大减轻过拟合,提升泛化能力。进行预测时,则是取图片的四个角加中间共5个位置,并进行左右翻转,一共获得10张图片,对他们进行预测并对10次结果求均值。同时,AlexNet论文中提到了会对图像的RGB数据进行PCA处理,并对主成分做一个标准差为0.1的高斯扰动,增加一些噪声,这个Trick可以让错误率再下降1%。

代码实现(gluon):

class _AlexNet(nn.HybridBlock):

def __init__(self, classes=1000,alpha=4, **kwargs):

super(AlexNet, self).__init__(**kwargs)

with self.name_scope():

self.features = nn.HybridSequential(prefix='')

with self.features.name_scope():

self.features.add(nn.Conv2D(96//alpha, kernel_size=11, strides=4, activation='relu'))

self.features.add(nn.MaxPool2D(pool_size=3, strides=2))

self.features.add(nn.Conv2D(256//alpha, kernel_size=5, padding=2, activation='relu'))

self.features.add(nn.MaxPool2D(pool_size=3, strides=2))

self.features.add(nn.Conv2D(384//alpha, kernel_size=3, padding=1, activation='relu'))

self.features.add(nn.Conv2D(384//alpha, kernel_size=3, padding=1, activation='relu'))

self.features.add(nn.Conv2D(256//alpha, kernel_size=3, padding=1, activation='relu'))

self.features.add(nn.MaxPool2D(pool_size=3, strides=2))

self.features.add(nn.Flatten())

self.features.add(nn.Dense(4096//alpha, activation='relu'))

self.features.add(nn.Dropout(0.5))

self.features.add(nn.Dense(4096//alpha, activation='relu'))

self.features.add(nn.Dropout(0.5))

self.output = nn.Dense(classes)

def hybrid_forward(self, F, x):

x = self.features(x)

x = self.output(x)

return x

def get_alexnet(class_num):

net = _AlexNet(classes=class_num)

return net

3.VGG的实现(2014年)

论文地址:https://arxiv.org/pdf/1409.1556.pdf

网络结构:

![]()

![]()

VGG模型是2014年ILSVRC竞赛的第二名,第一名是GoogLeNet。但是VGG模型在多个迁移学习任务中的表现要优于googLeNet。而且,从图像中提取CNN特征,VGG模型是首选算法。它的缺点在于,参数量有140M之多,需要更大的存储空间。但是这个模型很有研究价值。

模型的名称——“VGG”代表了牛津大学的Oxford Visual Geometry Group,该小组隶属于1985年成立的Robotics Research Group,该Group研究范围包括了机器学习到移动机器人。下面是一段来自网络对同年GoogLeNet和VGG的描述:

VGG的特点:

- 小卷积核。作者将卷积核全部替换为3x3(极少用了1x1);

- 小池化核。相比AlexNet的3x3的池化核,VGG全部为2x2的池化核;

- 层数更深特征图更宽。基于前两点外,由于卷积核专注于扩大通道数、池化专注于缩小宽和高,使得模型架构上更深更宽的同时,计算量的增加放缓;

- 全连接转卷积。网络测试阶段将训练阶段的三个全连接替换为三个卷积,测试重用训练时的参数,使得测试得到的全卷积网络因为没有全连接的限制,因而可以接收任意宽或高为的输入。

代码实现(gluon):

class _VGG(nn.HybridBlock):

def __init__(self, layers, filters, classes=1000, batch_norm=False, **kwargs):

super(_VGG, self).__init__(**kwargs)

assert len(layers) == len(filters)

with self.name_scope():

self.features = self._make_features(layers, filters, batch_norm)

self.features.add(nn.Dense(4096, activation='relu', weight_initializer='normal', bias_initializer='zeros'))

self.features.add(nn.Dropout(rate=0.5))

self.features.add(nn.Dense(4096, activation='relu', weight_initializer='normal', bias_initializer='zeros'))

self.features.add(nn.Dropout(rate=0.5))

self.output = nn.Dense(classes, weight_initializer='normal', bias_initializer='zeros')

def _make_features(self, layers, filters, batch_norm):

featurizer = nn.HybridSequential(prefix='')

for i, num in enumerate(layers):

for _ in range(num):

featurizer.add(nn.Conv2D(filters[i], kernel_size=3, padding=1, weight_initializer=Xavier(rnd_type='gaussian', factor_type='out', magnitude=2), bias_initializer='zeros'))

if batch_norm:

featurizer.add(nn.BatchNorm())

featurizer.add(nn.Activation('relu'))

featurizer.add(nn.MaxPool2D(strides=2))

return featurizer

def hybrid_forward(self, F, x):

x = self.features(x)

x = self.output(x)

return x

_vgg_spec = {11: ([1, 1, 2, 2, 2], [64, 128, 256, 512, 512]),

13: ([2, 2, 2, 2, 2], [64, 128, 256, 512, 512]),

16: ([2, 2, 3, 3, 3], [64, 128, 256, 512, 512]),

19: ([2, 2, 4, 4, 4], [64, 128, 256, 512, 512])}

def get_vgg(num_layers,class_num,bn = False, **kwargs):

if bn:

kwargs['batch_norm'] = True

layers, filters = _vgg_spec[num_layers]

net = _VGG(layers, filters,classes = class_num, **kwargs)

return net

4.GoogleNet的实现(2014年)

论文地址:https://arxiv.org/pdf/1409.4842.pdf

网络结构:

![]()

GoogLeNet是2014年Christian Szegedy提出的一种全新的深度学习结构,在这之前的AlexNet、VGG等结构都是通过增大网络的深度(层数)来获得更好的训练效果,但层数的增加会带来很多负作用,比如overfit、梯度消失、梯度爆炸等。inception的提出则从另一种角度来提升训练结果:能更高效的利用计算资源,在相同的计算量下能提取到更多的特征,从而提升训练结果。

inception模块的基本机构如图1,整个inception结构就是由多个这样的inception模块串联起来的。inception结构的主要贡献有两个:一是使用1x1的卷积来进行升降维;二是在多个尺寸上同时进行卷积再聚合。![]()

1x1卷积

作用1:在相同尺寸的感受野中叠加更多的卷积,能提取到更丰富的特征。这个观点来自于Network in Network,图1里三个1x1卷积都起到了该作用。

![]()

图2左侧是是传统的卷积层结构(线性卷积),在一个尺度上只有一次卷积;图2右图是Network in Network结构(NIN结构),先进行一次普通的卷积(比如3x3),紧跟再进行一次1x1的卷积,对于某个像素点来说1x1卷积等效于该像素点在所有特征上进行一次全连接的计算,所以图2右侧图的1x1卷积画成了全连接层的形式,需要注意的是NIN结构中无论是第一个3x3卷积还是新增的1x1卷积,后面都紧跟着激活函数(比如relu)。将两个卷积串联,就能组合出更多的非线性特征。举个例子,假设第1个3x3卷积+激活函数近似于f1(x)=ax2+bx+c,第二个1x1卷积+激活函数近似于f2(x)=mx2+nx+q,那f1(x)和f2(f1(x))比哪个非线性更强,更能模拟非线性的特征?答案是显而易见的。NIN的结构和传统的神经网络中多层的结构有些类似,后者的多层是跨越了不同尺寸的感受野(通过层与层中间加pool层),从而在更高尺度上提取出特征;NIN结构是在同一个尺度上的多层(中间没有pool层),从而在相同的感受野范围能提取更强的非线性。

作用2:使用1x1卷积进行降维,降低了计算复杂度。图2中间3x3卷积和5x5卷积前的1x1卷积都起到了这个作用。当某个卷积层输入的特征数较多,对这个输入进行卷积运算将产生巨大的计算量;如果对输入先进行降维,减少特征数后再做卷积计算量就会显著减少。图3是优化前后两种方案的乘法次数比较,同样是输入一组有192个特征、32x32大小,输出256组特征的数据,图3第一张图直接用3x3卷积实现,需要192x256x3x3x32x32=452984832次乘法;图3第二张图先用1x1的卷积降到96个特征,再用3x3卷积恢复出256组特征,需要192x96x1x1x32x32+96x256x3x3x32x32=245366784次乘法,使用1x1卷积降维的方法节省了一半的计算量。有人会问,用1x1卷积降到96个特征后特征数不就减少了么,会影响最后训练的效果么?答案是否定的,只要最后输出的特征数不变(256组),中间的降维类似于压缩的效果,并不影响最终训练的结果。

![]()

多个尺寸上进行卷积再聚合

图2可以看到对输入做了4个分支,分别用不同尺寸的filter进行卷积或池化,最后再在特征维度上拼接到一起。这种全新的结构有什么好处呢?Szegedy从多个角度进行了解释:

解释1:在直观感觉上在多个尺度上同时进行卷积,能提取到不同尺度的特征。特征更为丰富也意味着最后分类判断时更加准确。

解释2:利用稀疏矩阵分解成密集矩阵计算的原理来加快收敛速度。举个例子图4左侧是个稀疏矩阵(很多元素都为0,不均匀分布在矩阵中),和一个2x2的矩阵进行卷积,需要对稀疏矩阵中的每一个元素进行计算;如果像图4右图那样把稀疏矩阵分解成2个子密集矩阵,再和2x2矩阵进行卷积,稀疏矩阵中0较多的区域就可以不用计算,计算量就大大降低。这个原理应用到inception上就是要在特征维度上进行分解!传统的卷积层的输入数据只和一种尺度(比如3x3)的卷积核进行卷积,输出固定维度(比如256个特征)的数据,所有256个输出特征基本上是均匀分布在3x3尺度范围上,这可以理解成输出了一个稀疏分布的特征集;而inception模块在多个尺度上提取特征(比如1x1,3x3,5x5),输出的256个特征就不再是均匀分布,而是相关性强的特征聚集在一起(比如1x1的的96个特征聚集在一起,3x3的96个特征聚集在一起,5x5的64个特征聚集在一起),这可以理解成多个密集分布的子特征集。这样的特征集中因为相关性较强的特征聚集在了一起,不相关的非关键特征就被弱化,同样是输出256个特征,inception方法输出的特征“冗余”的信息较少。用这样的“纯”的特征集层层传递最后作为反向计算的输入,自然收敛的速度更快。

![]()

解释3:Hebbin赫布原理。Hebbin原理是神经科学上的一个理论,解释了在学习的过程中脑中的神经元所发生的变化,用一句话概括就是fire togethter, wire together。赫布认为“两个神经元或者神经元系统,如果总是同时兴奋,就会形成一种‘组合’,其中一个神经元的兴奋会促进另一个的兴奋”。比如狗看到肉会流口水,反复刺激后,脑中识别肉的神经元会和掌管唾液分泌的神经元会相互促进,“缠绕”在一起,以后再看到肉就会更快流出口水。用在inception结构中就是要把相关性强的特征汇聚到一起。这有点类似上面的解释2,把1x1,3x3,5x5的特征分开。因为训练收敛的最终目的就是要提取出独立的特征,所以预先把相关性强的特征汇聚,就能起到加速收敛的作用。

在inception模块中有一个分支使用了max pooling,作者认为pooling也能起到提取特征的作用,所以也加入模块中。注意这个pooling的stride=1,pooling后没有减少数据的尺寸。

代码实现(gluon):

def _make_basic_conv(in_channels, channels, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

out = nn.HybridSequential(prefix='')

out.add(nn.Conv2D(in_channels=in_channels, channels=channels, use_bias=False, **kwargs))

out.add(norm_layer(in_channels=channels, epsilon=0.001, **({} if norm_kwargs is None else norm_kwargs)))

out.add(nn.Activation('relu'))

return out

def _make_branch(use_pool, norm_layer, norm_kwargs, *conv_settings):

out = nn.HybridSequential(prefix='')

if use_pool == 'avg':

out.add(nn.AvgPool2D(pool_size=3, strides=1, padding=1))

elif use_pool == 'max':

out.add(nn.MaxPool2D(pool_size=3, strides=1, padding=1))

setting_names = ['in_channels', 'channels', 'kernel_size', 'strides', 'padding']

for setting in conv_settings:

kwargs = {}

for i, value in enumerate(setting):

if value is not None:

if setting_names[i] == 'in_channels':

in_channels = value

elif setting_names[i] == 'channels':

channels = value

else:

kwargs[setting_names[i]] = value

out.add(_make_basic_conv(in_channels, channels, norm_layer, norm_kwargs, **kwargs))

return out

def _make_Mixed_3a(in_channels, pool_features, prefix, norm_layer, norm_kwargs):

out = HybridConcurrent(axis=1, prefix=prefix)

with out.name_scope():

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 64, 1, None, None)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 96, 1, None, None), (96, 128, 3, None, 1)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 16, 1, None, None), (16, 32, 3, None, 1)))

out.add(_make_branch('max', norm_layer, norm_kwargs, (in_channels, pool_features, 1, None, None)))

return out

def _make_Mixed_3b(in_channels, pool_features, prefix, norm_layer, norm_kwargs):

out = HybridConcurrent(axis=1, prefix=prefix)

with out.name_scope():

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 128, 1, None, None)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 128, 1, None, None), (128, 192, 3, None, 1)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 32, 1, None, None), (32, 96, 3, None, 1)))

out.add(_make_branch('max', norm_layer, norm_kwargs, (in_channels, pool_features, 1, None, None)))

return out

def _make_Mixed_4a(in_channels, pool_features, prefix, norm_layer, norm_kwargs):

out = HybridConcurrent(axis=1, prefix=prefix)

with out.name_scope():

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 192, 1, None, None)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 96, 1, None, None), (96, 208, 3, None, 1)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 16, 1, None, None), (16, 48, 3, None, 1)))

out.add(_make_branch('max', norm_layer, norm_kwargs, (in_channels, pool_features, 1, None, None)))

return out

def _make_Mixed_4b(in_channels, pool_features, prefix, norm_layer, norm_kwargs):

out = HybridConcurrent(axis=1, prefix=prefix)

with out.name_scope():

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 160, 1, None, None)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 112, 1, None, None), (112, 224, 3, None, 1)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 24, 1, None, None), (24, 64, 3, None, 1)))

out.add(_make_branch('max', norm_layer, norm_kwargs, (in_channels, pool_features, 1, None, None)))

return out

def _make_Mixed_4c(in_channels, pool_features, prefix, norm_layer, norm_kwargs):

out = HybridConcurrent(axis=1, prefix=prefix)

with out.name_scope():

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 128, 1, None, None)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 128, 1, None, None), (128, 256, 3, None, 1)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 24, 1, None, None), (24, 64, 3, None, 1)))

out.add(_make_branch('max', norm_layer, norm_kwargs, (in_channels, pool_features, 1, None, None)))

return out

def _make_Mixed_4d(in_channels, pool_features, prefix, norm_layer, norm_kwargs):

out = HybridConcurrent(axis=1, prefix=prefix)

with out.name_scope():

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 112, 1, None, None)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 144, 1, None, None), (144, 288, 3, None, 1)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 32, 1, None, None), (32, 64, 3, None, 1)))

out.add(_make_branch('max', norm_layer, norm_kwargs, (in_channels, pool_features, 1, None, None)))

return out

def _make_Mixed_4e(in_channels, pool_features, prefix, norm_layer, norm_kwargs):

out = HybridConcurrent(axis=1, prefix=prefix)

with out.name_scope():

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 256, 1, None, None)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 160, 1, None, None), (160, 320, 3, None, 1)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 32, 1, None, None), (32, 128, 3, None, 1)))

out.add(_make_branch('max', norm_layer, norm_kwargs, (in_channels, pool_features, 1, None, None)))

return out

def _make_Mixed_5a(in_channels, pool_features, prefix, norm_layer, norm_kwargs):

out = HybridConcurrent(axis=1, prefix=prefix)

with out.name_scope():

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 256, 1, None, None)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 160, 1, None, None), (160, 320, 3, None, 1)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 32, 1, None, None), (32, 128, 3, None, 1)))

out.add(_make_branch('max', norm_layer, norm_kwargs, (in_channels, pool_features, 1, None, None)))

return out

def _make_Mixed_5b(in_channels, pool_features, prefix, norm_layer, norm_kwargs):

out = HybridConcurrent(axis=1, prefix=prefix)

with out.name_scope():

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 384, 1, None, None)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 192, 1, None, None), (192, 384, 3, None, 1)))

out.add(_make_branch(None, norm_layer, norm_kwargs, (in_channels, 48, 1, None, None), (48, 128, 3, None, 1)))

out.add(_make_branch('max', norm_layer, norm_kwargs, (in_channels, pool_features, 1, None, None)))

return out

def _make_aux(in_channels, classes, norm_layer, norm_kwargs):

out = nn.HybridSequential(prefix='')

out.add(nn.AvgPool2D(pool_size=5, strides=3))

out.add(_make_basic_conv(in_channels=in_channels, channels=128, kernel_size=1, norm_layer=norm_layer, norm_kwargs=norm_kwargs))

out.add(nn.Flatten())

out.add(nn.Dense(units=1024, in_units=2048))

out.add(nn.Activation('relu'))

out.add(nn.Dropout(0.7))

out.add(nn.Dense(units=classes, in_units=1024))

return out

class _GoogLeNet(nn.HybridBlock):

def __init__(self, classes=1000, norm_layer=nn.BatchNorm, dropout_ratio=0.4, aux_logits=False,norm_kwargs=None, partial_bn=False, **kwargs):

super(_GoogLeNet, self).__init__(**kwargs)

self.dropout_ratio = dropout_ratio

self.aux_logits = aux_logits

with self.name_scope():

self.conv1 = _make_basic_conv(in_channels=3, channels=64, kernel_size=7,

strides=2, padding=3,

norm_layer=norm_layer, norm_kwargs=norm_kwargs)

self.maxpool1 = nn.MaxPool2D(pool_size=3, strides=2, ceil_mode=True)

if partial_bn:

if norm_kwargs is not None:

norm_kwargs['use_global_stats'] = True

else:

norm_kwargs = {}

norm_kwargs['use_global_stats'] = True

self.conv2 = _make_basic_conv(in_channels=64, channels=64, kernel_size=1,

norm_layer=norm_layer, norm_kwargs=norm_kwargs)

self.conv3 = _make_basic_conv(in_channels=64, channels=192,

kernel_size=3, padding=1,

norm_layer=norm_layer, norm_kwargs=norm_kwargs)

self.maxpool2 = nn.MaxPool2D(pool_size=3, strides=2, ceil_mode=True)

self.inception3a = _make_Mixed_3a(192, 32, 'Mixed_3a_', norm_layer, norm_kwargs)

self.inception3b = _make_Mixed_3b(256, 64, 'Mixed_3b_', norm_layer, norm_kwargs)

self.maxpool3 = nn.MaxPool2D(pool_size=3, strides=2, ceil_mode=True)

self.inception4a = _make_Mixed_4a(480, 64, 'Mixed_4a_', norm_layer, norm_kwargs)

self.inception4b = _make_Mixed_4b(512, 64, 'Mixed_4b_', norm_layer, norm_kwargs)

self.inception4c = _make_Mixed_4c(512, 64, 'Mixed_4c_', norm_layer, norm_kwargs)

self.inception4d = _make_Mixed_4d(512, 64, 'Mixed_4d_', norm_layer, norm_kwargs)

self.inception4e = _make_Mixed_4e(528, 128, 'Mixed_4e_', norm_layer, norm_kwargs)

self.maxpool4 = nn.MaxPool2D(pool_size=2, strides=2)

self.inception5a = _make_Mixed_5a(832, 128, 'Mixed_5a_', norm_layer, norm_kwargs)

self.inception5b = _make_Mixed_5b(832, 128, 'Mixed_5b_', norm_layer, norm_kwargs)

if self.aux_logits:

self.aux1 = _make_aux(512, classes, norm_layer, norm_kwargs)

self.aux2 = _make_aux(528, classes, norm_layer, norm_kwargs)

self.head = nn.HybridSequential(prefix='')

self.avgpool = nn.AvgPool2D(pool_size=7)

self.dropout = nn.Dropout(self.dropout_ratio)

self.output = nn.Dense(units=classes, in_units=1024)

self.head.add(self.avgpool)

self.head.add(self.dropout)

self.head.add(self.output)

def hybrid_forward(self, F, x):

x = self.conv1(x)

x = self.maxpool1(x)

x = self.conv2(x)

x = self.conv3(x)

x = self.maxpool2(x)

x = self.inception3a(x)

x = self.inception3b(x)

x = self.maxpool3(x)

x = self.inception4a(x)

if self.aux_logits:

aux1 = self.aux1(x)

x = self.inception4b(x)

x = self.inception4c(x)

x = self.inception4d(x)

if self.aux_logits:

aux2 = self.aux2(x)

x = self.inception4e(x)

x = self.maxpool4(x)

x = self.inception5a(x)

x = self.inception5b(x)

x = self.head(x)

if self.aux_logits:

return (x, aux2, aux1)

return x

def get_googlenet(class_num=1000, dropout_ratio=0.4, aux_logits=False,partial_bn=False, **kwargs):

net = _GoogLeNet(classes=class_num, partial_bn=partial_bn, dropout_ratio=dropout_ratio, aux_logits=aux_logits, **kwargs)

return net

5.ResNet的实现(2015年)

论文地址:https://arxiv.org/pdf/1512.03385.pdf

网络结构(VGG vs ResNet):

![]()

![]()

ResNet的发明者是何恺明(Kaiming He)、张翔宇(Xiangyu Zhang)、任少卿(Shaoqing Ren)和孙剑(Jiangxi Sun)。

在2015年的ImageNet大规模视觉识别竞赛(ImageNet Large Scale Visual Recognition Challenge, ILSVRC)中获得了图像分类和物体识别的优胜。 残差网络的特点是容易优化,并且能够通过增加相当的深度来提高准确率。其内部的残差块使用了跳跃连接,缓解了在深度神经网络中增加深度带来的梯度消失问题。

代码实现(gluon):

def _conv3x3(channels, stride, in_channels):

return nn.Conv2D(channels, kernel_size=3, strides=stride, padding=1, use_bias=False, in_channels=in_channels)

class _BasicBlockV1(nn.HybridBlock):

def __init__(self, channels, stride, downsample=False, in_channels=0, last_gamma=False, use_se=False, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_BasicBlockV1, self).__init__(**kwargs)

self.body = nn.HybridSequential(prefix='')

self.body.add(_conv3x3(channels, stride, in_channels))

self.body.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.body.add(nn.Activation('relu'))

self.body.add(_conv3x3(channels, 1, channels))

if not last_gamma:

self.body.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

else:

self.body.add(norm_layer(gamma_initializer='zeros', **({} if norm_kwargs is None else norm_kwargs)))

if use_se:

self.se = nn.HybridSequential(prefix='')

self.se.add(nn.Dense(channels // 16, use_bias=False))

self.se.add(nn.Activation('relu'))

self.se.add(nn.Dense(channels, use_bias=False))

self.se.add(nn.Activation('sigmoid'))

else:

self.se = None

if downsample:

self.downsample = nn.HybridSequential(prefix='')

self.downsample.add(nn.Conv2D(channels, kernel_size=1, strides=stride, use_bias=False, in_channels=in_channels))

self.downsample.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

else:

self.downsample = None

def hybrid_forward(self, F, x):

residual = x

x = self.body(x)

if self.se:

w = F.contrib.AdaptiveAvgPooling2D(x, output_size=1)

w = self.se(w)

x = F.broadcast_mul(x, w.expand_dims(axis=2).expand_dims(axis=2))

if self.downsample:

residual = self.downsample(residual)

x = F.Activation(residual+x, act_type='relu')

return x

class _BottleneckV1(nn.HybridBlock):

def __init__(self, channels, stride, downsample=False, in_channels=0, last_gamma=False, use_se=False, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_BottleneckV1, self).__init__(**kwargs)

self.body = nn.HybridSequential(prefix='')

self.body.add(nn.Conv2D(channels//4, kernel_size=1, strides=stride))

self.body.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.body.add(nn.Activation('relu'))

self.body.add(_conv3x3(channels//4, 1, channels//4))

self.body.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.body.add(nn.Activation('relu'))

self.body.add(nn.Conv2D(channels, kernel_size=1, strides=1))

if use_se:

self.se = nn.HybridSequential(prefix='')

self.se.add(nn.Dense(channels // 16, use_bias=False))

self.se.add(nn.Activation('relu'))

self.se.add(nn.Dense(channels, use_bias=False))

self.se.add(nn.Activation('sigmoid'))

else:

self.se = None

if not last_gamma:

self.body.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

else:

self.body.add(norm_layer(gamma_initializer='zeros', **({} if norm_kwargs is None else norm_kwargs)))

if downsample:

self.downsample = nn.HybridSequential(prefix='')

self.downsample.add(nn.Conv2D(channels, kernel_size=1, strides=stride, use_bias=False, in_channels=in_channels))

self.downsample.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

else:

self.downsample = None

def hybrid_forward(self, F, x):

residual = x

x = self.body(x)

if self.se:

w = F.contrib.AdaptiveAvgPooling2D(x, output_size=1)

w = self.se(w)

x = F.broadcast_mul(x, w.expand_dims(axis=2).expand_dims(axis=2))

if self.downsample:

residual = self.downsample(residual)

x = F.Activation(x + residual, act_type='relu')

return x

class _BasicBlockV2(nn.HybridBlock):

def __init__(self, channels, stride, downsample=False, in_channels=0, last_gamma=False, use_se=False, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_BasicBlockV2, self).__init__(**kwargs)

self.bn1 = norm_layer(**({} if norm_kwargs is None else norm_kwargs))

self.conv1 = _conv3x3(channels, stride, in_channels)

if not last_gamma:

self.bn2 = norm_layer(**({} if norm_kwargs is None else norm_kwargs))

else:

self.bn2 = norm_layer(gamma_initializer='zeros', **({} if norm_kwargs is None else norm_kwargs))

self.conv2 = _conv3x3(channels, 1, channels)

if use_se:

self.se = nn.HybridSequential(prefix='')

self.se.add(nn.Dense(channels // 16, use_bias=False))

self.se.add(nn.Activation('relu'))

self.se.add(nn.Dense(channels, use_bias=False))

self.se.add(nn.Activation('sigmoid'))

else:

self.se = None

if downsample:

self.downsample = nn.Conv2D(channels, 1, stride, use_bias=False, in_channels=in_channels)

else:

self.downsample = None

def hybrid_forward(self, F, x):

residual = x

x = self.bn1(x)

x = F.Activation(x, act_type='relu')

if self.downsample:

residual = self.downsample(x)

x = self.conv1(x)

x = self.bn2(x)

x = F.Activation(x, act_type='relu')

x = self.conv2(x)

if self.se:

w = F.contrib.AdaptiveAvgPooling2D(x, output_size=1)

w = self.se(w)

x = F.broadcast_mul(x, w.expand_dims(axis=2).expand_dims(axis=2))

return x + residual

class _BottleneckV2(nn.HybridBlock):

def __init__(self, channels, stride, downsample=False, in_channels=0, last_gamma=False, use_se=False, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_BottleneckV2, self).__init__(**kwargs)

self.bn1 = norm_layer(**({} if norm_kwargs is None else norm_kwargs))

self.conv1 = nn.Conv2D(channels//4, kernel_size=1, strides=1, use_bias=False)

self.bn2 = norm_layer(**({} if norm_kwargs is None else norm_kwargs))

self.conv2 = _conv3x3(channels//4, stride, channels//4)

if not last_gamma:

self.bn3 = norm_layer(**({} if norm_kwargs is None else norm_kwargs))

else:

self.bn3 = norm_layer(gamma_initializer='zeros', **({} if norm_kwargs is None else norm_kwargs))

self.conv3 = nn.Conv2D(channels, kernel_size=1, strides=1, use_bias=False)

if use_se:

self.se = nn.HybridSequential(prefix='')

self.se.add(nn.Dense(channels // 16, use_bias=False))

self.se.add(nn.Activation('relu'))

self.se.add(nn.Dense(channels, use_bias=False))

self.se.add(nn.Activation('sigmoid'))

else:

self.se = None

if downsample:

self.downsample = nn.Conv2D(channels, 1, stride, use_bias=False, in_channels=in_channels)

else:

self.downsample = None

def hybrid_forward(self, F, x):

residual = x

x = self.bn1(x)

x = F.Activation(x, act_type='relu')

if self.downsample:

residual = self.downsample(x)

x = self.conv1(x)

x = self.bn2(x)

x = F.Activation(x, act_type='relu')

x = self.conv2(x)

x = self.bn3(x)

x = F.Activation(x, act_type='relu')

x = self.conv3(x)

if self.se:

w = F.contrib.AdaptiveAvgPooling2D(x, output_size=1)

w = self.se(w)

x = F.broadcast_mul(x, w.expand_dims(axis=2).expand_dims(axis=2))

return x + residual

class _ResNetV1(nn.HybridBlock):

def __init__(self, block, layers, channels, classes=1000, thumbnail=False, last_gamma=False, use_se=False, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_ResNetV1, self).__init__(**kwargs)

assert len(layers) == len(channels) - 1

with self.name_scope():

self.features = nn.HybridSequential(prefix='')

if thumbnail:

self.features.add(_conv3x3(channels[0], 1, 0))

else:

self.features.add(nn.Conv2D(channels[0], 7, 2, 3, use_bias=False))

self.features.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.features.add(nn.Activation('relu'))

self.features.add(nn.MaxPool2D(3, 2, 1))

for i, num_layer in enumerate(layers):

stride = 1 if i == 0 else 2

self.features.add(self._make_layer(block, num_layer, channels[i+1], stride, i+1, in_channels=channels[i], last_gamma=last_gamma, use_se=use_se, norm_layer=norm_layer, norm_kwargs=norm_kwargs))

self.features.add(nn.GlobalAvgPool2D())

self.output = nn.Dense(classes, in_units=channels[-1])

def _make_layer(self, block, layers, channels, stride, stage_index, in_channels=0, last_gamma=False, use_se=False, norm_layer=nn.BatchNorm, norm_kwargs=None):

layer = nn.HybridSequential(prefix='stage%d_'%stage_index)

with layer.name_scope():

layer.add(block(channels, stride, channels != in_channels, in_channels=in_channels, last_gamma=last_gamma, use_se=use_se, prefix='', norm_layer=norm_layer, norm_kwargs=norm_kwargs))

for _ in range(layers-1):

layer.add(block(channels, 1, False, in_channels=channels, last_gamma=last_gamma, use_se=use_se, prefix='', norm_layer=norm_layer, norm_kwargs=norm_kwargs))

return layer

def hybrid_forward(self, F, x):

x = self.features(x)

x = self.output(x)

return x

class _ResNetV2(nn.HybridBlock):

def __init__(self, block, layers, channels, classes=1000, thumbnail=False, last_gamma=False, use_se=False, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_ResNetV2, self).__init__(**kwargs)

assert len(layers) == len(channels) - 1

with self.name_scope():

self.features = nn.HybridSequential(prefix='')

self.features.add(norm_layer(scale=False, center=False, **({} if norm_kwargs is None else norm_kwargs)))

if thumbnail:

self.features.add(_conv3x3(channels[0], 1, 0))

else:

self.features.add(nn.Conv2D(channels[0], 7, 2, 3, use_bias=False))

self.features.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.features.add(nn.Activation('relu'))

self.features.add(nn.MaxPool2D(3, 2, 1))

in_channels = channels[0]

for i, num_layer in enumerate(layers):

stride = 1 if i == 0 else 2

self.features.add(self._make_layer(block, num_layer, channels[i+1], stride, i+1, in_channels=in_channels, last_gamma=last_gamma, use_se=use_se, norm_layer=norm_layer, norm_kwargs=norm_kwargs))

in_channels = channels[i+1]

self.features.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.features.add(nn.Activation('relu'))

self.features.add(nn.GlobalAvgPool2D())

self.features.add(nn.Flatten())

self.output = nn.Dense(classes, in_units=in_channels)

def _make_layer(self, block, layers, channels, stride, stage_index, in_channels=0,

last_gamma=False, use_se=False, norm_layer=nn.BatchNorm, norm_kwargs=None):

layer = nn.HybridSequential(prefix='stage%d_'%stage_index)

with layer.name_scope():

layer.add(block(channels, stride, channels != in_channels, in_channels=in_channels, last_gamma=last_gamma, use_se=use_se, prefix='', norm_layer=norm_layer, norm_kwargs=norm_kwargs))

for _ in range(layers-1):

layer.add(block(channels, 1, False, in_channels=channels, last_gamma=last_gamma, use_se=use_se, prefix='', norm_layer=norm_layer, norm_kwargs=norm_kwargs))

return layer

def hybrid_forward(self, F, x):

x = self.features(x)

x = self.output(x)

return x

_resnet_spec = {18: ('basic_block', [2, 2, 2, 2], [64, 64, 128, 256, 512]),

34: ('basic_block', [3, 4, 6, 3], [64, 64, 128, 256, 512]),

50: ('bottle_neck', [3, 4, 6, 3], [64, 256, 512, 1024, 2048]),

101: ('bottle_neck', [3, 4, 23, 3], [64, 256, 512, 1024, 2048]),

152: ('bottle_neck', [3, 8, 36, 3], [64, 256, 512, 1024, 2048])}

_resnet_net_versions = [_ResNetV1, _ResNetV2]

_resnet_block_versions = [{'basic_block': _BasicBlockV1, 'bottle_neck': _BottleneckV1}, {'basic_block': _BasicBlockV2, 'bottle_neck': _BottleneckV2}]

def get_resnet(version, num_layers,class_num,use_se=False):

block_type, layers, channels = _resnet_spec[num_layers]

resnet_class = _resnet_net_versions[version-1]

block_class = _resnet_block_versions[version-1][block_type]

net = resnet_class(block_class, layers, channels,use_se=use_se, classes=class_num)

return net

6.MobileNet_V1的实现(2017年)

论文地址:https://arxiv.org/pdf/1704.04861.pdf

网络结构:

![]()

MobileNet V1是由google2016年提出,2017年发布的文章。其主要创新点在于深度可分离卷积,而整个网络实际上也是深度可分离模块的堆叠。

深度可分离卷积被证明是轻量化网络的有效设计,深度可分离卷积由逐深度卷积(Depthwise)和逐点卷积(Pointwise)构成。

对比于标准卷积,逐深度卷积将卷积核拆分成为单通道形式,在不改变输入特征图像的深度的情况下,对每一通道进行卷积操作,这样就得到了和输入特征图通道数一致的输出特征图。

逐点卷积就是1×1卷积。主要作用就是对特征图进行升维和降维。

代码实现(gluon):

class _ReLU6(nn.HybridBlock):

def __init__(self, **kwargs):

super(_ReLU6, self).__init__(**kwargs)

def hybrid_forward(self, F, x):

return F.clip(x, 0, 6, name="relu6")

def _add_conv(out, channels=1, kernel=1, stride=1, pad=0, num_group=1, active=True, relu6=False, norm_layer=nn.BatchNorm, norm_kwargs=None):

out.add(nn.Conv2D(channels, kernel, stride, pad, groups=num_group, use_bias=False))

out.add(norm_layer(scale=True, **({} if norm_kwargs is None else norm_kwargs)))

if active:

out.add(_ReLU6() if relu6 else nn.Activation('relu'))

def _add_conv_dw(out, dw_channels, channels, stride, relu6=False, norm_layer=nn.BatchNorm, norm_kwargs=None):

_add_conv(out, channels=dw_channels, kernel=3, stride=stride,

pad=1, num_group=dw_channels, relu6=relu6,

norm_layer=norm_layer, norm_kwargs=norm_kwargs)

_add_conv(out, channels=channels, relu6=relu6,

norm_layer=norm_layer, norm_kwargs=norm_kwargs)

class _LinearBottleneck(nn.HybridBlock):

def __init__(self, in_channels, channels, t, stride, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_LinearBottleneck, self).__init__(**kwargs)

self.use_shortcut = stride == 1 and in_channels == channels

with self.name_scope():

self.out = nn.HybridSequential()

if t != 1:

_add_conv(self.out, in_channels * t, relu6=True, norm_layer=norm_layer, norm_kwargs=norm_kwargs)

_add_conv(self.out, in_channels * t, kernel=3, stride=stride, pad=1, num_group=in_channels * t, relu6=True, norm_layer=norm_layer, norm_kwargs=norm_kwargs)

_add_conv(self.out, channels, active=False, relu6=True, norm_layer=norm_layer, norm_kwargs=norm_kwargs)

def hybrid_forward(self, F, x):

out = self.out(x)

if self.use_shortcut:

out = F.elemwise_add(out, x)

return out

class _MobileNetV1(nn.HybridBlock):

def __init__(self, multiplier=1.0, classes=1000, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_MobileNetV1, self).__init__(**kwargs)

with self.name_scope():

self.features = nn.HybridSequential(prefix='')

with self.features.name_scope():

_add_conv(self.features, channels=int(32 * multiplier), kernel=3, pad=1, stride=2, norm_layer=norm_layer, norm_kwargs=norm_kwargs)

dw_channels = [int(x * multiplier) for x in [32, 64] + [128] * 2 + [256] * 2 + [512] * 6 + [1024]]

channels = [int(x * multiplier) for x in [64] + [128] * 2 + [256] * 2 + [512] * 6 + [1024] * 2]

strides = [1, 2] * 3 + [1] * 5 + [2, 1]

for dwc, c, s in zip(dw_channels, channels, strides):

_add_conv_dw(self.features, dw_channels=dwc, channels=c, stride=s, norm_layer=norm_layer, norm_kwargs=norm_kwargs)

self.features.add(nn.GlobalAvgPool2D())

self.features.add(nn.Flatten())

self.output = nn.Dense(classes)

def hybrid_forward(self, F, x):

x = self.features(x)

x = self.output(x)

return x

def get_mobilenet_v1(multiplier,class_num, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

net = _MobileNetV1(multiplier,classes = class_num, norm_layer=norm_layer, norm_kwargs=norm_kwargs, **kwargs)

return net

7.MobileNet_V2的实现(2019年)

论文地址:https://arxiv.org/pdf/1801.04381.pdf

网络结构:

![]()

![]()

mobilenetV1的缺点:

- V1结构过于简单,没有复用图像特征,即没有concat/eltwise+ 等操作进行特征融合,而后续的一系列的ResNet, DenseNet等结构已经证明复用图像特征的有效性。

- 在处理低维数据(比如逐深度的卷积)时,relu函数会造成信息的丢失。

- DW 卷积由于本身的计算特性决定它自己没有改变通道数的能力,上一层给它多少通道,它就只能输出多少通道。所以如果上一层给的通道数本身很少的话,DW 也只能很委屈的在低维空间提特征,因此效果不够好。

V2使用了跟V1类似的深度可分离结构,不同之处也正对应着V1中逐深度卷积的缺点改进:

- V2 去掉了第二个 PW 的激活函数改为线性激活。

- V2 在 DW 卷积之前新加了一个 PW 卷积。

代码实现(gluon):

class _ReLU6(nn.HybridBlock):

def __init__(self, **kwargs):

super(_ReLU6, self).__init__(**kwargs)

def hybrid_forward(self, F, x):

return F.clip(x, 0, 6, name="relu6")

def _add_conv(out, channels=1, kernel=1, stride=1, pad=0, num_group=1, active=True, relu6=False, norm_layer=nn.BatchNorm, norm_kwargs=None):

out.add(nn.Conv2D(channels, kernel, stride, pad, groups=num_group, use_bias=False))

out.add(norm_layer(scale=True, **({} if norm_kwargs is None else norm_kwargs)))

if active:

out.add(_ReLU6() if relu6 else nn.Activation('relu'))

def _add_conv_dw(out, dw_channels, channels, stride, relu6=False, norm_layer=nn.BatchNorm, norm_kwargs=None):

_add_conv(out, channels=dw_channels, kernel=3, stride=stride,

pad=1, num_group=dw_channels, relu6=relu6,

norm_layer=norm_layer, norm_kwargs=norm_kwargs)

_add_conv(out, channels=channels, relu6=relu6,

norm_layer=norm_layer, norm_kwargs=norm_kwargs)

class _LinearBottleneck(nn.HybridBlock):

def __init__(self, in_channels, channels, t, stride, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_LinearBottleneck, self).__init__(**kwargs)

self.use_shortcut = stride == 1 and in_channels == channels

with self.name_scope():

self.out = nn.HybridSequential()

if t != 1:

_add_conv(self.out, in_channels * t, relu6=True, norm_layer=norm_layer, norm_kwargs=norm_kwargs)

_add_conv(self.out, in_channels * t, kernel=3, stride=stride, pad=1, num_group=in_channels * t, relu6=True, norm_layer=norm_layer, norm_kwargs=norm_kwargs)

_add_conv(self.out, channels, active=False, relu6=True, norm_layer=norm_layer, norm_kwargs=norm_kwargs)

def hybrid_forward(self, F, x):

out = self.out(x)

if self.use_shortcut:

out = F.elemwise_add(out, x)

return out

class _MobileNetV2(nn.HybridBlock):

def __init__(self, multiplier=1.0, classes=1000, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_MobileNetV2, self).__init__(**kwargs)

with self.name_scope():

self.features = nn.HybridSequential(prefix='features_')

with self.features.name_scope():

_add_conv(self.features, int(32 * multiplier), kernel=3, stride=2, pad=1, relu6=True, norm_layer=norm_layer, norm_kwargs=norm_kwargs)

in_channels_group = [int(x * multiplier) for x in [32] + [16] + [24] * 2 + [32] * 3 + [64] * 4 + [96] * 3 + [160] * 3]

channels_group = [int(x * multiplier) for x in [16] + [24] * 2 + [32] * 3 + [64] * 4 + [96] * 3 + [160] * 3 + [320]]

ts = [1] + [6] * 16

strides = [1, 2] * 2 + [1, 1, 2] + [1] * 6 + [2] + [1] * 3

for in_c, c, t, s in zip(in_channels_group, channels_group, ts, strides):

self.features.add(_LinearBottleneck(in_channels=in_c, channels=c, t=t, stride=s, norm_layer=norm_layer, norm_kwargs=norm_kwargs))

last_channels = int(1280 * multiplier) if multiplier > 1.0 else 1280

_add_conv(self.features, last_channels, relu6=True, norm_layer=norm_layer, norm_kwargs=norm_kwargs)

self.features.add(nn.GlobalAvgPool2D())

self.output = nn.HybridSequential(prefix='output_')

with self.output.name_scope():

self.output.add(nn.Conv2D(classes, 1, use_bias=False, prefix='pred_'), nn.Flatten())

def hybrid_forward(self, F, x):

x = self.features(x)

x = self.output(x)

return x

def get_mobilenet_v2(multiplier,class_num, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

net = _MobileNetV2(multiplier,classes = class_num, norm_layer=norm_layer, norm_kwargs=norm_kwargs, **kwargs)

return net

8.MobileNet_V3的实现(2019年)

论文地址:https://arxiv.org/pdf/1905.02244.pdf

网络结构:

根据MobileNetV3论文总结,网络存在以下3点需要大家注意的:

- 更新了Block(bneck),在v3版本中原论文称之为bneck,在v2版倒残差结构上进行了简单的改动。

- 使用了NAS(Neural Architecture Search)搜索参数

- 重新设计了耗时结构:作者使用NAS搜索之后得到的网络,接下来对网络每一层的推理时间进行分析,针对某些耗时的层结构做了进一步的优化

可以看出V3版本的Large 1.0(V3-Large 1.0)的Top-1是75.2,对于V2 1.0 它的Top-1是72,相当于提升了3.2%

在推理速度方面也有一定的提升,(V3-Large 1.0)在P-1手机上推理时间为51ms,而V2是64ms,很明显V3比V2,不仅准确率更高了,而且速度更快了

V3-Small版本的Top-1是 67.4,而V2 0.35 (0.35表示卷积核的倍率因子)的Top-1只有60.8,准确率提升了6.6%

代码实现(gluon):

class _ReLU6(nn.HybridBlock):

def __init__(self, **kwargs):

super(_ReLU6, self).__init__(**kwargs)

def hybrid_forward(self, F, x):

return F.clip(x, 0, 6, name="relu6")

class _HardSigmoid(nn.HybridBlock):

def __init__(self, **kwargs):

super(_HardSigmoid, self).__init__(**kwargs)

self.act = _ReLU6()

def hybrid_forward(self, F, x):

return self.act(x + 3.) / 6.

class _HardSwish(nn.HybridBlock):

def __init__(self, **kwargs):

super(_HardSwish, self).__init__(**kwargs)

self.act = _HardSigmoid()

def hybrid_forward(self, F, x):

return x * self.act(x)

def _make_divisible(x, divisible_by=8):

return int(np.ceil(x * 1. / divisible_by) * divisible_by)

class _Activation_mobilenetv3(nn.HybridBlock):

def __init__(self, act_func, **kwargs):

super(_Activation_mobilenetv3, self).__init__(**kwargs)

if act_func == "relu":

self.act = nn.Activation('relu')

elif act_func == "relu6":

self.act = _ReLU6()

elif act_func == "hard_sigmoid":

self.act = _HardSigmoid()

elif act_func == "swish":

self.act = nn.Swish()

elif act_func == "hard_swish":

self.act = _HardSwish()

elif act_func == "leaky":

self.act = nn.LeakyReLU(alpha=0.375)

else:

raise NotImplementedError

def hybrid_forward(self, F, x):

return self.act(x)

class _SE(nn.HybridBlock):

def __init__(self, num_out, ratio=4, act_func=("relu", "hard_sigmoid"), use_bn=False, prefix='', **kwargs):

super(_SE, self).__init__(**kwargs)

self.use_bn = use_bn

num_mid = _make_divisible(num_out // ratio)

self.pool = nn.GlobalAvgPool2D()

self.conv1 = nn.Conv2D(channels=num_mid, kernel_size=1, use_bias=True, prefix=('%s_fc1_' % prefix))

self.act1 = _Activation_mobilenetv3(act_func[0])

self.conv2 = nn.Conv2D(channels=num_out, kernel_size=1, use_bias=True, prefix=('%s_fc2_' % prefix))

self.act2 = _Activation_mobilenetv3(act_func[1])

def hybrid_forward(self, F, x):

out = self.pool(x)

out = self.conv1(out)

out = self.act1(out)

out = self.conv2(out)

out = self.act2(out)

return F.broadcast_mul(x, out)

class _Unit(nn.HybridBlock):

def __init__(self, num_out, kernel_size=1, strides=1, pad=0, num_groups=1, use_act=True, act_type="relu", prefix='', norm_layer=nn.BatchNorm, **kwargs):

super(_Unit, self).__init__(**kwargs)

self.use_act = use_act

self.conv = nn.Conv2D(channels=num_out, kernel_size=kernel_size, strides=strides, padding=pad, groups=num_groups, use_bias=False, prefix='%s-conv2d_'%prefix)

self.bn = norm_layer(prefix='%s-batchnorm_'%prefix)

if use_act is True:

self.act = _Activation_mobilenetv3(act_type)

def hybrid_forward(self, F, x):

out = self.conv(x)

out = self.bn(out)

if self.use_act:

out = self.act(out)

return out

class _ResUnit(nn.HybridBlock):

def __init__(self, num_in, num_mid, num_out, kernel_size, act_type="relu", use_se=False, strides=1, prefix='', norm_layer=nn.BatchNorm, **kwargs):

super(_ResUnit, self).__init__(**kwargs)

with self.name_scope():

self.use_se = use_se

self.first_conv = (num_out != num_mid)

self.use_short_cut_conv = True

if self.first_conv:

self.expand = _Unit(num_mid, kernel_size=1, strides=1, pad=0, act_type=act_type, prefix='%s-exp'%prefix, norm_layer=norm_layer)

self.conv1 = _Unit(num_mid, kernel_size=kernel_size, strides=strides, pad=self._get_pad(kernel_size), act_type=act_type, num_groups=num_mid, prefix='%s-depthwise'%prefix, norm_layer=norm_layer)

if use_se:

self.se = _SE(num_mid, prefix='%s-se'%prefix)

self.conv2 = _Unit(num_out, kernel_size=1, strides=1, pad=0, act_type=act_type, use_act=False, prefix='%s-linear'%prefix, norm_layer=norm_layer)

if num_in != num_out or strides != 1:

self.use_short_cut_conv = False

def hybrid_forward(self, F, x):

out = self.expand(x) if self.first_conv else x

out = self.conv1(out)

if self.use_se:

out = self.se(out)

out = self.conv2(out)

if self.use_short_cut_conv:

return x + out

else:

return out

def _get_pad(self, kernel_size):

if kernel_size == 1:

return 0

elif kernel_size == 3:

return 1

elif kernel_size == 5:

return 2

elif kernel_size == 7:

return 3

else:

raise NotImplementedError

class _MobileNetV3(nn.HybridBlock):

def __init__(self, cfg, cls_ch_squeeze, cls_ch_expand, multiplier=1., classes=1000, norm_kwargs=None, last_gamma=False, final_drop=0., use_global_stats=False, name_prefix='', norm_layer=nn.BatchNorm):

super(_MobileNetV3, self).__init__(prefix=name_prefix)

norm_kwargs = norm_kwargs if norm_kwargs is not None else {}

if use_global_stats:

norm_kwargs['use_global_stats'] = True

# initialize residual networks

k = multiplier

self.last_gamma = last_gamma

self.norm_kwargs = norm_kwargs

self.inplanes = 16

with self.name_scope():

self.features = nn.HybridSequential(prefix='')

self.features.add(nn.Conv2D(channels=_make_divisible(k*self.inplanes), kernel_size=3, padding=1, strides=2, use_bias=False, prefix='first-3x3-conv-conv2d_'))

self.features.add(norm_layer(prefix='first-3x3-conv-batchnorm_'))

self.features.add(_HardSwish())

i = 0

for layer_cfg in cfg:

layer = self._make_layer(kernel_size=layer_cfg[0], exp_ch=_make_divisible(k * layer_cfg[1]), out_channel=_make_divisible(k * layer_cfg[2]), use_se=layer_cfg[3], act_func=layer_cfg[4], stride=layer_cfg[5], prefix='seq-%d'%i,)

self.features.add(layer)

i += 1

self.features.add(nn.Conv2D(channels= _make_divisible(k*cls_ch_squeeze), kernel_size=1, padding=0, strides=1, use_bias=False, prefix='last-1x1-conv1-conv2d_'))

self.features.add(norm_layer(prefix='last-1x1-conv1-batchnorm_', **({} if norm_kwargs is None else norm_kwargs)))

self.features.add(_HardSwish())

self.features.add(nn.GlobalAvgPool2D())

self.features.add(nn.Conv2D(channels=cls_ch_expand, kernel_size=1, padding=0, strides=1, use_bias=False, prefix='last-1x1-conv2-conv2d_'))

self.features.add(_HardSwish())

if final_drop > 0:

self.features.add(nn.Dropout(final_drop))

self.output = nn.HybridSequential(prefix='output_')

with self.output.name_scope():

self.output.add(

nn.Conv2D(in_channels=cls_ch_expand, channels=classes, kernel_size=1, prefix='fc_'),

nn.Flatten())

def _make_layer(self, kernel_size, exp_ch, out_channel, use_se, act_func, stride=1, prefix=''):

mid_planes = exp_ch

out_planes = out_channel

layer = _ResUnit(self.inplanes, mid_planes, out_planes, kernel_size, act_func, strides=stride, use_se=use_se, prefix=prefix)

self.inplanes = out_planes

return layer

def hybrid_forward(self, F, x):

x = self.features(x)

x = self.output(x)

return x

def get_mobilenet_v3(version, multiplier=1.,class_num=1000, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

if version == "large":

cfg = [

# k, exp, c, se, nl, s,

[3, 16, 16, False, 'relu', 1],

[3, 64, 24, False, 'relu', 2],

[3, 72, 24, False, 'relu', 1],

[5, 72, 40, True, 'relu', 2],

[5, 120, 40, True, 'relu', 1],

[5, 120, 40, True, 'relu', 1],

[3, 240, 80, False, 'hard_swish', 2],

[3, 200, 80, False, 'hard_swish', 1],

[3, 184, 80, False, 'hard_swish', 1],

[3, 184, 80, False, 'hard_swish', 1],

[3, 480, 112, True, 'hard_swish', 1],

[3, 672, 112, True, 'hard_swish', 1],

[5, 672, 160, True, 'hard_swish', 2],

[5, 960, 160, True, 'hard_swish', 1],

[5, 960, 160, True, 'hard_swish', 1],

]

cls_ch_squeeze = 960

cls_ch_expand = 1280

elif version == "small":

cfg = [

# k, exp, c, se, nl, s,

[3, 16, 16, True, 'relu', 2],

[3, 72, 24, False, 'relu', 2],

[3, 88, 24, False, 'relu', 1],

[5, 96, 40, True, 'hard_swish', 2],

[5, 240, 40, True, 'hard_swish', 1],

[5, 240, 40, True, 'hard_swish', 1],

[5, 120, 48, True, 'hard_swish', 1],

[5, 144, 48, True, 'hard_swish', 1],

[5, 288, 96, True, 'hard_swish', 2],

[5, 576, 96, True, 'hard_swish', 1],

[5, 576, 96, True, 'hard_swish', 1],

]

cls_ch_squeeze = 576

cls_ch_expand = 1280

else:

raise NotImplementedError

net = _MobileNetV3(cfg, cls_ch_squeeze, cls_ch_expand,classes=class_num, multiplier=multiplier, final_drop=0.2, norm_layer=norm_layer, **kwargs)

return net

9.DenseNet的实现(2017年)

论文地址:https://arxiv.org/pdf/1608.06993.pdf

网络结构:

经典网络DenseNet(Dense Convolutional Network)由Gao Huang等人于2017年提出,论文名为:《Densely Connected Convolutional Networks》,论文见:https://arxiv.org/pdf/1608.06993.pdf

DenseNet以前馈的方式(feed-forward fashion)将每个层与其它层连接起来。在传统卷积神经网络中,对于L层的网络具有L个连接,而在DenseNet中,会有L(L+1)/2个连接。每一层的输入来自前面所有层的输出。

DenseNet网络:

- 减轻梯度消失(vanishing-gradient)。

- 加强feature传递。

- 鼓励特征重用(encourage feature reuse)。

- 较少的参数数量。

代码实现(gluon):

def _make_dense_block(num_layers, bn_size, growth_rate, dropout, stage_index, norm_layer, norm_kwargs):

out = nn.HybridSequential(prefix='stage%d_'%stage_index)

with out.name_scope():

for _ in range(num_layers):

out.add(_make_dense_layer(growth_rate, bn_size, dropout, norm_layer, norm_kwargs))

return out

def _make_dense_layer(growth_rate, bn_size, dropout, norm_layer, norm_kwargs):

new_features = nn.HybridSequential(prefix='')

new_features.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

new_features.add(nn.Activation('relu'))

new_features.add(nn.Conv2D(bn_size * growth_rate, kernel_size=1, use_bias=False))

new_features.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

new_features.add(nn.Activation('relu'))

new_features.add(nn.Conv2D(growth_rate, kernel_size=3, padding=1, use_bias=False))

if dropout:

new_features.add(nn.Dropout(dropout))

out = HybridConcurrent(axis=1, prefix='')

out.add(Identity())

out.add(new_features)

return out

def _make_transition(num_output_features, norm_layer, norm_kwargs):

out = nn.HybridSequential(prefix='')

out.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

out.add(nn.Activation('relu'))

out.add(nn.Conv2D(num_output_features, kernel_size=1, use_bias=False))

out.add(nn.AvgPool2D(pool_size=2, strides=2))

return out

class _DenseNet(nn.HybridBlock):

def __init__(self, num_init_features, growth_rate, block_config, bn_size=4, dropout=0, classes=1000, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_DenseNet, self).__init__(**kwargs)

with self.name_scope():

self.features = nn.HybridSequential(prefix='')

self.features.add(nn.Conv2D(num_init_features, kernel_size=7, strides=2, padding=3, use_bias=False))

self.features.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.features.add(nn.Activation('relu'))

self.features.add(nn.MaxPool2D(pool_size=3, strides=2, padding=1))

# Add dense blocks

num_features = num_init_features

for i, num_layers in enumerate(block_config):

self.features.add(_make_dense_block(num_layers, bn_size, growth_rate, dropout, i+1, norm_layer, norm_kwargs))

num_features = num_features + num_layers * growth_rate

if i != len(block_config) - 1:

self.features.add(_make_transition(num_features // 2, norm_layer, norm_kwargs))

num_features = num_features // 2

self.features.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.features.add(nn.Activation('relu'))

self.features.add(nn.AvgPool2D(pool_size=7))

self.features.add(nn.Flatten())

self.output = nn.Dense(classes)

def hybrid_forward(self, F, x):

x = self.features(x)

x = self.output(x)

return x

_densenet_spec = {121: (64, 32, [6, 12, 24, 16]),

161: (96, 48, [6, 12, 36, 24]),

169: (64, 32, [6, 12, 32, 32]),

201: (64, 32, [6, 12, 48, 32])}

def get_densenet(num_layers, class_num, **kwargs):

num_init_features, growth_rate, block_config = _densenet_spec[num_layers]

net = _DenseNet(num_init_features, growth_rate, block_config,classes=class_num, **kwargs)

return net

10.ResNest的实现(2020年)

论文地址:https://arxiv.org/pdf/2004.08955.pdf

网络结构:

ResNeSt在图像分类上中ImageNet数据集上超越了其前辈ResNet、ResNeXt、SENet以及EfficientNet。使用ResNeSt-50为基本骨架的Faster-RCNN比使用ResNet-50的mAP要高出3.08%。使用ResNeSt-50为基本骨架的DeeplabV3比使用ResNet-50的mIOU要高出3.02%。涨点效果非常明显。

- 提出了split-attention blocks构造的ResNeSt,与现有的ResNet变体相比,不需要增加额外的计算量。而且ResNeSt可以作为其它任务的骨架。

- 图像分类和迁移学习应用的大规模基准。 利用ResNeSt主干的模型能够在几个任务上达到最先进的性能,即:图像分类,对象检测,实例分割和语义分割。 与通过神经架构搜索生成的最新CNN模型[55]相比,所提出的ResNeSt性能优于所有现有ResNet变体,并且具有相同的计算效率,甚至可以实现更好的速度精度折衷。单个Cascade-RCNN [3]使用ResNeSt-101主干的模型在MS-COCO实例分割上实现了48.3%的box mAP和41.56%的mask mAP。 单个DeepLabV3 [7]模型同样使用ResNeSt-101主干,在ADE20K场景分析验证集上的mIoU达到46.9%,比以前的最佳结果高出1%mIoU以上。

代码实现(gluon):

class _ResNeSt(nn.HybridBlock):

def __init__(self, block, layers, cardinality=1, bottleneck_width=64, classes=1000, dilated=False, dilation=1, norm_layer=nn.BatchNorm, norm_kwargs=None, last_gamma=False, deep_stem=False, stem_width=32, avg_down=False, final_drop=0.0, use_global_stats=False, name_prefix='', dropblock_prob=0.0, input_size=224, use_splat=False, radix=2, avd=False, avd_first=False, split_drop_ratio=0):

self.cardinality = cardinality

self.bottleneck_width = bottleneck_width

self.inplanes = stem_width * 2 if deep_stem else 64

self.radix = radix

self.split_drop_ratio = split_drop_ratio

self.avd_first = avd_first

super(_ResNeSt, self).__init__(prefix=name_prefix)

norm_kwargs = norm_kwargs if norm_kwargs is not None else {}

if use_global_stats:

norm_kwargs['use_global_stats'] = True

self.norm_kwargs = norm_kwargs

with self.name_scope():

if not deep_stem:

self.conv1 = nn.Conv2D(channels=64, kernel_size=7, strides=2, padding=3, use_bias=False, in_channels=3)

else:

self.conv1 = nn.HybridSequential(prefix='conv1')

self.conv1.add(nn.Conv2D(channels=stem_width, kernel_size=3, strides=2, padding=1, use_bias=False, in_channels=3))

self.conv1.add(norm_layer(in_channels=stem_width, **norm_kwargs))

self.conv1.add(nn.Activation('relu'))

self.conv1.add(nn.Conv2D(channels=stem_width, kernel_size=3, strides=1, padding=1, use_bias=False, in_channels=stem_width))

self.conv1.add(norm_layer(in_channels=stem_width, **norm_kwargs))

self.conv1.add(nn.Activation('relu'))

self.conv1.add(nn.Conv2D(channels=stem_width * 2, kernel_size=3, strides=1, padding=1, use_bias=False, in_channels=stem_width))

input_size = _update_input_size(input_size, 2)

self.bn1 = norm_layer(in_channels=64 if not deep_stem else stem_width * 2, **norm_kwargs)

self.relu = nn.Activation('relu')

self.maxpool = nn.MaxPool2D(pool_size=3, strides=2, padding=1)

input_size = _update_input_size(input_size, 2)

self.layer1 = self._make_layer(1, block, 64, layers[0], avg_down=avg_down, norm_layer=norm_layer, last_gamma=last_gamma, use_splat=use_splat, avd=avd)

self.layer2 = self._make_layer(2, block, 128, layers[1], strides=2, avg_down=avg_down, norm_layer=norm_layer, last_gamma=last_gamma, use_splat=use_splat, avd=avd)

input_size = _update_input_size(input_size, 2)

if dilated or dilation == 4:

self.layer3 = self._make_layer(3, block, 256, layers[2], strides=1, dilation=2, avg_down=avg_down, norm_layer=norm_layer, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd)

self.layer4 = self._make_layer(4, block, 512, layers[3], strides=1, dilation=4, pre_dilation=2, avg_down=avg_down, norm_layer=norm_layer, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd)

elif dilation == 3:

self.layer3 = self._make_layer(3, block, 256, layers[2], strides=1, dilation=2, avg_down=avg_down, norm_layer=norm_layer, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd)

self.layer4 = self._make_layer(4, block, 512, layers[3], strides=2, dilation=2, pre_dilation=2, avg_down=avg_down, norm_layer=norm_layer, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd)

elif dilation == 2:

self.layer3 = self._make_layer(3, block, 256, layers[2], strides=2, avg_down=avg_down, norm_layer=norm_layer, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd)

self.layer4 = self._make_layer(4, block, 512, layers[3], strides=1, dilation=2, avg_down=avg_down, norm_layer=norm_layer, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd)

else:

self.layer3 = self._make_layer(3, block, 256, layers[2], strides=2, avg_down=avg_down, norm_layer=norm_layer, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd)

input_size = _update_input_size(input_size, 2)

self.layer4 = self._make_layer(4, block, 512, layers[3], strides=2, avg_down=avg_down, norm_layer=norm_layer, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd)

input_size = _update_input_size(input_size, 2)

self.avgpool = nn.GlobalAvgPool2D()

self.flat = nn.Flatten()

self.drop = None

if final_drop > 0.0:

self.drop = nn.Dropout(final_drop)

self.fc = nn.Dense(in_units=512 * block.expansion, units=classes)

def _make_layer(self, stage_index, block, planes, blocks, strides=1, dilation=1, pre_dilation=1, avg_down=False, norm_layer=None, last_gamma=False, dropblock_prob=0, input_size=224, use_splat=False, avd=False):

downsample = None

if strides != 1 or self.inplanes != planes * block.expansion:

downsample = nn.HybridSequential(prefix='down%d_' % stage_index)

with downsample.name_scope():

if avg_down:

if pre_dilation == 1:

downsample.add(nn.AvgPool2D(pool_size=strides, strides=strides, ceil_mode=True, count_include_pad=False))

elif strides == 1:

downsample.add(nn.AvgPool2D(pool_size=1, strides=1, ceil_mode=True, count_include_pad=False))

else:

downsample.add(

nn.AvgPool2D(pool_size=pre_dilation * strides, strides=strides, padding=1, ceil_mode=True, count_include_pad=False))

downsample.add(nn.Conv2D(channels=planes * block.expansion, kernel_size=1, strides=1, use_bias=False, in_channels=self.inplanes))

downsample.add(norm_layer(in_channels=planes * block.expansion, **self.norm_kwargs))

else:

downsample.add(nn.Conv2D(channels=planes * block.expansion, kernel_size=1, strides=strides, use_bias=False, in_channels=self.inplanes))

downsample.add(norm_layer(in_channels=planes * block.expansion, **self.norm_kwargs))

layers = nn.HybridSequential(prefix='layers%d_' % stage_index)

with layers.name_scope():

if dilation in (1, 2):

layers.add(block(planes, cardinality=self.cardinality, bottleneck_width=self.bottleneck_width, strides=strides, dilation=pre_dilation, downsample=downsample, previous_dilation=dilation, norm_layer=norm_layer, norm_kwargs=self.norm_kwargs, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd, avd_first=self.avd_first, radix=self.radix, in_channels=self.inplanes, split_drop_ratio=self.split_drop_ratio))

elif dilation == 4:

layers.add(block(planes, cardinality=self.cardinality, bottleneck_width=self.bottleneck_width, strides=strides, dilation=pre_dilation, downsample=downsample, previous_dilation=dilation, norm_layer=norm_layer, norm_kwargs=self.norm_kwargs, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd, avd_first=self.avd_first, radix=self.radix, in_channels=self.inplanes, split_drop_ratio=self.split_drop_ratio))

else:

raise RuntimeError("=> unknown dilation size: {}".format(dilation))

input_size = _update_input_size(input_size, strides)

self.inplanes = planes * block.expansion

for i in range(1, blocks):

layers.add(block(planes, cardinality=self.cardinality, bottleneck_width=self.bottleneck_width, dilation=dilation, previous_dilation=dilation, norm_layer=norm_layer, norm_kwargs=self.norm_kwargs, last_gamma=last_gamma, dropblock_prob=dropblock_prob, input_size=input_size, use_splat=use_splat, avd=avd, avd_first=self.avd_first, radix=self.radix, in_channels=self.inplanes, split_drop_ratio=self.split_drop_ratio))

return layers

def hybrid_forward(self, F, x):

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = self.avgpool(x)

x = self.flat(x)

if self.drop is not None:

x = self.drop(x)

x = self.fc(x)

return x

class _DropBlock(nn.HybridBlock):

def __init__(self, drop_prob, block_size, c, h, w):

super(_DropBlock, self).__init__()

self.drop_prob = drop_prob

self.block_size = block_size

self.c, self.h, self.w = c, h, w

self.numel = c * h * w

pad_h = max((block_size - 1), 0)

pad_w = max((block_size - 1), 0)

self.padding = (pad_h//2, pad_h-pad_h//2, pad_w//2, pad_w-pad_w//2)

self.dtype = 'float32'

def hybrid_forward(self, F, x):

if not mx.autograd.is_training() or self.drop_prob <= 0:

return x

gamma = self.drop_prob * (self.h * self.w) / (self.block_size ** 2) / ((self.w - self.block_size + 1) * (self.h - self.block_size + 1))

# generate mask

mask = F.random.uniform(0, 1, shape=(1, self.c, self.h, self.w), dtype=self.dtype) < gamma

mask = F.Pooling(mask, pool_type='max',

kernel=(self.block_size, self.block_size), pad=self.padding)

mask = 1 - mask

y = F.broadcast_mul(F.broadcast_mul(x, mask), (1.0 * self.numel / mask.sum(axis=0, exclude=True).expand_dims(1).expand_dims(1).expand_dims(1)))

return y

def cast(self, dtype):

super(_DropBlock, self).cast(dtype)

self.dtype = dtype

def __repr__(self):

reprstr = self.__class__.__name__ + '(' +'drop_prob: {}, block_size{}'.format(self.drop_prob, self.block_size) +')'

return reprstr

def _update_input_size(input_size, stride):

sh, sw = (stride, stride) if isinstance(stride, int) else stride

ih, iw = (input_size, input_size) if isinstance(input_size, int) else input_size

oh, ow = math.ceil(ih / sh), math.ceil(iw / sw)

input_size = (oh, ow)

return input_size

class _SplitAttentionConv(nn.HybridBlock):

def __init__(self, channels, kernel_size, strides=(1, 1), padding=(0, 0), dilation=(1, 1), groups=1, radix=2, in_channels=None, r=2, norm_layer=nn.BatchNorm, norm_kwargs=None, drop_ratio=0, *args, **kwargs):

super(_SplitAttentionConv, self).__init__()

norm_kwargs = norm_kwargs if norm_kwargs is not None else {}

inter_channels = max(in_channels*radix//2//r, 32)

self.radix = radix

self.cardinality = groups

self.conv = Conv2D(channels*radix, kernel_size, strides, padding, dilation,

groups=groups*radix, *args, in_channels=in_channels, **kwargs)

self.use_bn = norm_layer is not None

if self.use_bn:

self.bn = norm_layer(in_channels=channels*radix, **norm_kwargs)

self.relu = nn.Activation('relu')

self.fc1 = Conv2D(inter_channels, 1, in_channels=channels, groups=self.cardinality)

if self.use_bn:

self.bn1 = norm_layer(in_channels=inter_channels, **norm_kwargs)

self.relu1 = nn.Activation('relu')

if drop_ratio > 0:

self.drop = nn.Dropout(drop_ratio)

else:

self.drop = None

self.fc2 = Conv2D(channels*radix, 1, in_channels=inter_channels, groups=self.cardinality)

self.channels = channels

def hybrid_forward(self, F, x):

x = self.conv(x)

if self.use_bn:

x = self.bn(x)

x = self.relu(x)

if self.radix > 1:

splited = F.reshape(x.expand_dims(1), (0, self.radix, self.channels, 0, 0))

gap = F.sum(splited, axis=1)

else:

gap = x

gap = F.contrib.AdaptiveAvgPooling2D(gap, 1)

gap = self.fc1(gap)

if self.use_bn:

gap = self.bn1(gap)

atten = self.relu1(gap)

if self.drop:

atten = self.drop(atten)

atten = self.fc2(atten).reshape((0, self.cardinality, self.radix, -1)).swapaxes(1, 2)

if self.radix > 1:

atten = F.softmax(atten, axis=1).reshape((0, self.radix, -1, 1, 1))

else:

atten = F.sigmoid(atten).reshape((0, -1, 1, 1))

if self.radix > 1:

outs = F.broadcast_mul(atten, splited)

out = F.sum(outs, axis=1)

else:

out = F.broadcast_mul(atten, x)

return out

class _Bottleneck(nn.HybridBlock):

expansion = 4

def __init__(self, channels, cardinality=1, bottleneck_width=64, strides=1, dilation=1, downsample=None, previous_dilation=1, norm_layer=None, norm_kwargs=None, last_gamma=False, dropblock_prob=0, input_size=None, use_splat=False, radix=2, avd=False, avd_first=False, in_channels=None, split_drop_ratio=0, **kwargs):

super(_Bottleneck, self).__init__()

group_width = int(channels * (bottleneck_width / 64.)) * cardinality

norm_kwargs = norm_kwargs if norm_kwargs is not None else {}

self.dropblock_prob = dropblock_prob

self.use_splat = use_splat

self.avd = avd and (strides > 1 or previous_dilation != dilation)

self.avd_first = avd_first

if self.dropblock_prob > 0:

self.dropblock1 = _DropBlock(dropblock_prob, 3, group_width, *input_size)

if self.avd:

if avd_first:

input_size = _update_input_size(input_size, strides)

self.dropblock2 = _DropBlock(dropblock_prob, 3, group_width, *input_size)

if not avd_first:

input_size = _update_input_size(input_size, strides)

else:

input_size = _update_input_size(input_size, strides)

self.dropblock2 = _DropBlock(dropblock_prob, 3, group_width, *input_size)

self.dropblock3 = _DropBlock(dropblock_prob, 3, channels * 4, *input_size)

self.conv1 = nn.Conv2D(channels=group_width, kernel_size=1, use_bias=False, in_channels=in_channels)

self.bn1 = norm_layer(in_channels=group_width, **norm_kwargs)

self.relu1 = nn.Activation('relu')

if self.use_splat:

self.conv2 = _SplitAttentionConv(channels=group_width, kernel_size=3,

strides=1 if self.avd else strides,

padding=dilation, dilation=dilation, groups=cardinality, use_bias=False, in_channels=group_width, norm_layer=norm_layer, norm_kwargs=norm_kwargs, radix=radix, drop_ratio=split_drop_ratio, **kwargs)

else:

self.conv2 = nn.Conv2D(channels=group_width, kernel_size=3,

strides=1 if self.avd else strides,

padding=dilation, dilation=dilation, groups=cardinality,

use_bias=False, in_channels=group_width, **kwargs)

self.bn2 = norm_layer(in_channels=group_width, **norm_kwargs)

self.relu2 = nn.Activation('relu')

self.conv3 = nn.Conv2D(channels=channels * 4, kernel_size=1, use_bias=False,

in_channels=group_width)

if not last_gamma:

self.bn3 = norm_layer(in_channels=channels * 4, **norm_kwargs)

else:

self.bn3 = norm_layer(in_channels=channels * 4, gamma_initializer='zeros',

**norm_kwargs)

if self.avd:

self.avd_layer = nn.AvgPool2D(3, strides, padding=1)

self.relu3 = nn.Activation('relu')

self.downsample = downsample

self.dilation = dilation

self.strides = strides

def hybrid_forward(self, F, x):

residual = x

out = self.conv1(x)

out = self.bn1(out)

if self.dropblock_prob > 0:

out = self.dropblock1(out)

out = self.relu1(out)

if self.avd and self.avd_first:

out = self.avd_layer(out)

if self.use_splat:

out = self.conv2(out)

if self.dropblock_prob > 0:

out = self.dropblock2(out)

else:

out = self.conv2(out)

out = self.bn2(out)

if self.dropblock_prob > 0:

out = self.dropblock2(out)

out = self.relu2(out)

if self.avd and not self.avd_first:

out = self.avd_layer(out)

out = self.conv3(out)

out = self.bn3(out)

if self.downsample is not None:

residual = self.downsample(x)

if self.dropblock_prob > 0:

out = self.dropblock3(out)

out = out + residual

out = self.relu3(out)

return out

def get_resnest(num_layers,class_num,image_size):

if num_layers==14:

net = _ResNeSt(_Bottleneck, [1, 1, 1, 1],

radix=2, cardinality=1, bottleneck_width=64,

deep_stem=True, avg_down=True,

avd=True, avd_first=False,

use_splat=True, dropblock_prob=0.0,

name_prefix='resnest_14',classes=class_num,input_size=image_size)

elif num_layers == 26:

net = _ResNeSt(_Bottleneck, [2, 2, 2, 2],

radix=2, cardinality=1, bottleneck_width=64,

deep_stem=True, avg_down=True,

avd=True, avd_first=False,

use_splat=True, dropblock_prob=0.1,

name_prefix='resnest_26', classes=class_num,input_size=image_size)

elif num_layers == 50:

net = _ResNeSt(_Bottleneck, [3, 4, 6, 3],

radix=2, cardinality=1, bottleneck_width=64,

deep_stem=True, avg_down=True,

avd=True, avd_first=False,

use_splat=True, dropblock_prob=0.1,

name_prefix='resnest_50', classes=class_num,input_size=image_size)

elif num_layers == 101:

net = _ResNeSt(_Bottleneck, [3, 4, 23, 3],

radix=2, cardinality=1, bottleneck_width=64,

deep_stem=True, avg_down=True, stem_width=64,

avd=True, avd_first=False, use_splat=True, dropblock_prob=0.1,

name_prefix='resnest_101', classes=class_num,input_size=image_size)

elif num_layers == 200:

net = _ResNeSt(_Bottleneck, [3, 24, 36, 3], deep_stem=True, avg_down=True, stem_width=64,

avd=True, use_splat=True, dropblock_prob=0.1, final_drop=0.2,

name_prefix='resnest_200', classes=class_num,input_size=image_size)

elif num_layers == 269:

net = _ResNeSt(_Bottleneck, [3, 30, 48, 8], deep_stem=True, avg_down=True, stem_width=64,

avd=True, use_splat=True, dropblock_prob=0.1, final_drop=0.2,

name_prefix='resnest_', classes=class_num,input_size=image_size)

else:

net = None

return net

11.ResNext的实现(2016年)

论文地址:https://arxiv.org/pdf/1611.05431.pdf

网络结构:

![]()

![]()

ResNeXt对ResNet进行了改进,采用了多分支的策略,在论文中作者提出了三种等价的模型结构,最后的ResNeXt用了C的结构来构建我们的ResNeXt、这里面和我们的Inception是不同的,在Inception中,每一部分的拓扑结构是不同的,比如一部分是1x1卷积,3x3卷积还有5x5卷积,而我们ResNeXt是用相同的拓扑结构,并在保持参数量的情况下提高了准确率。

代码实现(gluon):

class _Block_resnext(nn.HybridBlock):

def __init__(self, channels, cardinality, bottleneck_width, stride, downsample=False, last_gamma=False, use_se=False, avg_down=False, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_Block_resnext, self).__init__(**kwargs)

D = int(math.floor(channels * (bottleneck_width / 64)))

group_width = cardinality * D

self.body = nn.HybridSequential(prefix='')

self.body.add(nn.Conv2D(group_width, kernel_size=1, use_bias=False))

self.body.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.body.add(nn.Activation('relu'))

self.body.add(nn.Conv2D(group_width, kernel_size=3, strides=stride, padding=1, groups=cardinality, use_bias=False))

self.body.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.body.add(nn.Activation('relu'))

self.body.add(nn.Conv2D(channels * 4, kernel_size=1, use_bias=False))

if last_gamma:

self.body.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

else:

self.body.add(norm_layer(gamma_initializer='zeros', **({} if norm_kwargs is None else norm_kwargs)))

if use_se:

self.se = nn.HybridSequential(prefix='')

self.se.add(nn.Conv2D(channels // 4, kernel_size=1, padding=0))

self.se.add(nn.Activation('relu'))

self.se.add(nn.Conv2D(channels * 4, kernel_size=1, padding=0))

self.se.add(nn.Activation('sigmoid'))

else:

self.se = None

if downsample:

self.downsample = nn.HybridSequential(prefix='')

if avg_down:

self.downsample.add(nn.AvgPool2D(pool_size=stride, strides=stride, ceil_mode=True, count_include_pad=False))

self.downsample.add(nn.Conv2D(channels=channels * 4, kernel_size=1, strides=1, use_bias=False))

else:

self.downsample.add(nn.Conv2D(channels * 4, kernel_size=1, strides=stride, use_bias=False))

self.downsample.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

else:

self.downsample = None

def hybrid_forward(self, F, x):

residual = x

x = self.body(x)

if self.se:

w = F.contrib.AdaptiveAvgPooling2D(x, output_size=1)

w = self.se(w)

x = F.broadcast_mul(x, w)

if self.downsample:

residual = self.downsample(residual)

x = F.Activation(x + residual, act_type='relu')

return x

class _ResNext(nn.HybridBlock):

def __init__(self, layers, cardinality, bottleneck_width, classes=1000, last_gamma=False, use_se=False, deep_stem=False, avg_down=False, stem_width=64, norm_layer=nn.BatchNorm, norm_kwargs=None, **kwargs):

super(_ResNext, self).__init__(**kwargs)

self.cardinality = cardinality

self.bottleneck_width = bottleneck_width

channels = 64

with self.name_scope():

self.features = nn.HybridSequential(prefix='')

if not deep_stem:

self.features.add(nn.Conv2D(channels=64, kernel_size=7, strides=2, padding=3, use_bias=False))

else:

self.features.add(nn.Conv2D(channels=stem_width, kernel_size=3, strides=2, padding=1, use_bias=False))

self.features.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.features.add(nn.Activation('relu'))

self.features.add(nn.Conv2D(channels=stem_width, kernel_size=3, strides=1, padding=1, use_bias=False))

self.features.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.features.add(nn.Activation('relu'))

self.features.add(nn.Conv2D(channels=stem_width * 2, kernel_size=3, strides=1, padding=1, use_bias=False))

self.features.add(norm_layer(**({} if norm_kwargs is None else norm_kwargs)))

self.features.add(nn.Activation('relu'))

self.features.add(nn.MaxPool2D(3, 2, 1))

for i, num_layer in enumerate(layers):

stride = 1 if i == 0 else 2

self.features.add(self._make_layer(channels, num_layer, stride, last_gamma, use_se, False if i == 0 else avg_down, i + 1, norm_layer=norm_layer, norm_kwargs=norm_kwargs))

channels *= 2

self.features.add(nn.GlobalAvgPool2D())

self.output = nn.Dense(classes)

def _make_layer(self, channels, num_layers, stride, last_gamma, use_se, avg_down, stage_index, norm_layer=nn.BatchNorm, norm_kwargs=None):

layer = nn.HybridSequential(prefix='stage%d_' % stage_index)

with layer.name_scope():

layer.add(_Block_resnext(channels, self.cardinality, self.bottleneck_width, stride, True, last_gamma=last_gamma, use_se=use_se, avg_down=avg_down, prefix='', norm_layer=norm_layer, norm_kwargs=norm_kwargs))

for _ in range(num_layers - 1):

layer.add(_Block_resnext(channels, self.cardinality, self.bottleneck_width, 1, False, last_gamma=last_gamma, use_se=use_se, prefix='', norm_layer=norm_layer, norm_kwargs=norm_kwargs))