TypeError: __init__() takes 1 positional argument but 2 were given

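I read a lot of blog posts about this error. In nearly all of them, the cause was an object that had never been instantiated. If you have not instantiated your object, or are not sure whether you have, see the following post: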

PyTorch error "TypeError: init() takes 1 positional argument but 2 were given": causes and solutions

Another common cause is a mismatch between the number of arguments passed and the number the function accepts.

Error caused by a mismatched number of arguments

My problem, however, was neither of these. Here is my original code:

import torch
import torch.utils.data as data_utils
import torchvision.datasets as dataset
import torchvision.transforms as transforms

# data
# Download the handwritten digit dataset
train_data = dataset.MNIST(root="mnist",
                           train=True,
                           transform=transforms.ToTensor,
                           download=True
                           )

test_data = dataset.MNIST(root="mnist",
                          train=False,  # use the test split
                          transform=transforms.ToTensor,
                          download=False  # do not download the dataset again
                          )

# batch size
# Make sure you understand what batch size means and why train_loader and test_loader are used
# A DataLoader handles reading the data repeatedly, thousands of times over
train_loader = data_utils.DataLoader(
    dataset=train_data,
    batch_size=64,
    shuffle=True,  # shuffle the data
)

test_loader = data_utils.DataLoader(
    dataset=test_data,
    batch_size=64,
    shuffle=True,  # shuffle the data
)

# Network definition:

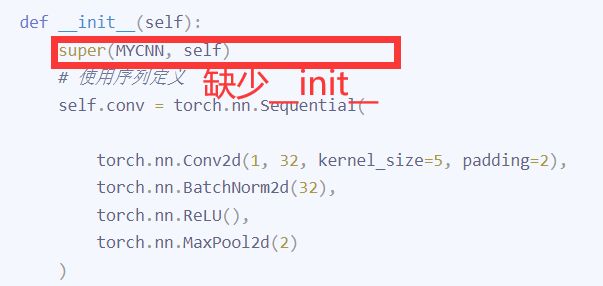

class MYCNN(torch.nn.Module):
    def __init__(self):
        super(MYCNN, self)
        # Define the convolution block with Sequential
        self.conv = torch.nn.Sequential(
            torch.nn.Conv2d(1, 32, kernel_size=5, padding=2),
            torch.nn.BatchNorm2d(32),
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(2)
        )
        # The input size here is the size after the convolution block.
        # The input image is 28 x 28, but pooling reduces it to 14 x 14
        # 32 is the number of channels
        # 10 is the number of output classes
        self.fc = torch.nn.Linear(14 * 14 * 32, 10)

    def forward(self, x):
        # print("TAG01:", x.shape)
        out = self.conv(x)
        # In PyTorch, tensors are laid out as N x C x H x W
        # Each sample needs to be flattened into a vector
        # print("TAG02:", out.shape)
        out = out.view(out.size()[0], -1)
        # print("TAG03:", out.shape)
        out = self.fc(out)
        # print("TAG04:", out.shape)
        return out

# Instantiate the network
cnn = MYCNN()
print("Network structure:", cnn)
cnn = cnn.cuda()
batch_size = 64

# loss
loss_func = torch.nn.CrossEntropyLoss()

# optimizer
optimizer = torch.optim.Adam(cnn.parameters(), lr=0.01)

# training: read and train on the data in mini-batches
for epoch in range(10):  # 10 passes over the whole dataset
    for i, (images, labels) in enumerate(train_loader):
        images = images.cuda()  # move images to the GPU
        labels = labels.cuda()
        outputs = cnn(images)
        # compute the loss
        loss = loss_func(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        print("epoch is {}, iteration is {}/{}, loss is {}".format(epoch + 1, i, len(train_data) // batch_size, loss.item()))

    # eval/test
    loss_test = 0
    accuracy = 0
    for i, (images, labels) in enumerate(test_loader):
        images = images.cuda()  # move images to the GPU
        labels = labels.cuda()
        outputs = cnn(images)
        # compute the loss
        # outputs = batchsize * cls_num
        loss_test += loss_func(outputs, labels)
        # pick the digit with the highest score
        _, pred = outputs.max(dim=1)
        # print("predicted digits", pred)
        # accumulate correct predictions over all batches, not just the last one
        accuracy += (pred == labels).sum().item()
    accuracy = accuracy / len(test_data)
    loss_test = loss_test / (len(test_data) // 64)
    print("epoch is {}, accuracy is {}, loss is {}".format(
        epoch + 1, accuracy, loss_test.item()
    ))

# Save the model to a file
torch.save(cnn, "model_by_myself.pkl")
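As a side check on the network above, the 14 * 14 * 32 passed to torch.nn.Linear can be worked out by hand. The sketch below is only an illustration: conv_output_size and pool_output_size are hypothetical helper names, not PyTorch functions, and they assume the defaults used in the code (stride 1 and dilation 1 for the convolution, pooling stride equal to the pooling kernel size).

```python
def conv_output_size(size, kernel_size, padding=0, stride=1):
    # Hypothetical helper: output side length of a convolution with dilation 1.
    return (size + 2 * padding - kernel_size) // stride + 1


def pool_output_size(size, kernel_size, stride=None):
    # Hypothetical helper: output side length of a pooling layer without padding.
    # The stride is assumed to default to the kernel size.
    stride = kernel_size if stride is None else stride
    return (size - kernel_size) // stride + 1


side_after_conv = conv_output_size(28, kernel_size=5, padding=2)  # padding=2 keeps 28
side_after_pool = pool_output_size(side_after_conv, kernel_size=2)  # halves it to 14
channels = 32

in_features = side_after_pool * side_after_pool * channels
print(side_after_conv, side_after_pool, in_features)
```

If the kernel size, padding, or pooling were changed, the first argument of the Linear layer would have to change to match.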

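After comparing my code against many other files, I finally found the problem in three places: transforms.ToTensor was written without parentheses in both MNIST calls, so the class itself was passed instead of an instance, and super(MYCNN, self) was never followed by .__init__().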
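To see why writing transform=transforms.ToTensor without parentheses can produce exactly this message, here is a minimal sketch in plain Python. ToTensorLike and apply_transform are hypothetical stand-ins, not torchvision code. The sketch assumes that the dataset calls transform(img) on each sample and that the transform class defines an __init__ that takes only self.

```python
class ToTensorLike:
    """Hypothetical stand-in for a transform class such as transforms.ToTensor."""

    def __init__(self):
        # Accepts no arguments besides self.
        pass

    def __call__(self, pic):
        # A real transform would convert the image; here it is returned unchanged.
        return pic


def apply_transform(transform, pic):
    # Assumed dataset behavior: call the transform on each sample.
    return transform(pic)


# Correct: an instance is passed, so transform(pic) runs __call__.
result_ok = apply_transform(ToTensorLike(), "image")

# Wrong: the class is passed, so transform(pic) becomes ToTensorLike("image"),
# which hands "image" to __init__ as an unexpected second argument.
try:
    apply_transform(ToTensorLike, "image")
    error_message = ""
except TypeError as exc:
    error_message = str(exc)

print(result_ok)
print(error_message)
```

The object was never instantiated by the caller, so the first call to the "transform" is what tries to instantiate it, with the image as an extra argument.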

The corrected code:

# Download the handwritten digit dataset
train_data = dataset.MNIST(root="mnist",
                           train=True,
                           transform=transforms.ToTensor(),
                           download=True
                           )

test_data = dataset.MNIST(root="mnist",
                          train=False,  # use the test split
                          transform=transforms.ToTensor(),
                          download=False  # do not download the dataset again
                          )

# Network definition:
class MYCNN(torch.nn.Module):
    def __init__(self):
        super(MYCNN, self).__init__()
        # Define the convolution block with Sequential
        self.conv = torch.nn.Sequential(
            torch.nn.Conv2d(1, 32, kernel_size=5, padding=2),
            torch.nn.BatchNorm2d(32),
            torch.nn.ReLU(),
            torch.nn.MaxPool2d(2)
        )
        # The input size here is the size after the convolution block.
        # The input image is 28 x 28, but pooling reduces it to 14 x 14
        # 32 is the number of channels
        # 10 is the number of output classes
        self.fc = torch.nn.Linear(14 * 14 * 32, 10)

    def forward(self, x):
        # print("TAG01:", x.shape)
        out = self.conv(x)
        # In PyTorch, tensors are laid out as N x C x H x W
        # Each sample needs to be flattened into a vector
        # print("TAG02:", out.shape)
        out = out.view(out.size()[0], -1)
        # print("TAG03:", out.shape)
        out = self.fc(out)
        # print("TAG04:", out.shape)
        return out
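The third fix, super(MYCNN, self).__init__(), matters because super(MYCNN, self) on its own only builds a proxy object and throws it away, so the parent initializer never runs. The sketch below shows the effect in plain Python. BaseModule, Broken, and Fixed are hypothetical names, and BaseModule is a simplified stand-in, not the real torch.nn.Module implementation.

```python
class BaseModule:
    """Hypothetical, simplified stand-in for a base class like torch.nn.Module."""

    def __init__(self):
        # The base initializer creates the registry that layers are stored in.
        self._modules = {}

    def add_module(self, name, module):
        if "_modules" not in self.__dict__:
            raise AttributeError("cannot add module before BaseModule.__init__() call")
        self._modules[name] = module


class Broken(BaseModule):
    def __init__(self):
        super(Broken, self)  # creates a proxy and discards it; nothing is initialized
        self.add_module("fc", "linear layer")


class Fixed(BaseModule):
    def __init__(self):
        super(Fixed, self).__init__()  # actually runs BaseModule.__init__
        self.add_module("fc", "linear layer")


try:
    Broken()
    broken_error = ""
except AttributeError as exc:
    broken_error = str(exc)

fixed = Fixed()
print(broken_error)
print(fixed._modules)
```

With the real torch.nn.Module, I would expect a similar AttributeError at the first layer assignment rather than the TypeError in the title, but that behavior is not shown in this post and is an assumption here.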