Machine Learning: Logistic Regression

文章目录

-

- 逻辑回归

-

- 1. 线性逻辑回归

-

- 1.1. 理论基础

- 1.2. 逻辑回归模拟步骤

-

- 1.2.1. 数据集

- 1.2.2. 模拟逻辑回归类模块

- 1.2.3. 测试模块

- 1.2.4. 数据预处理模块

- 1.2.5. Sigmoid函数模块

- 1.3. 效果展示

-

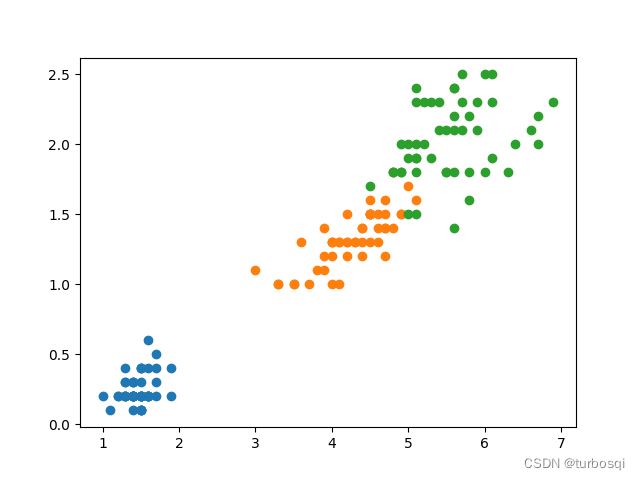

- 1.3.1. 数据集分布

- 1.3.2. 损失函数

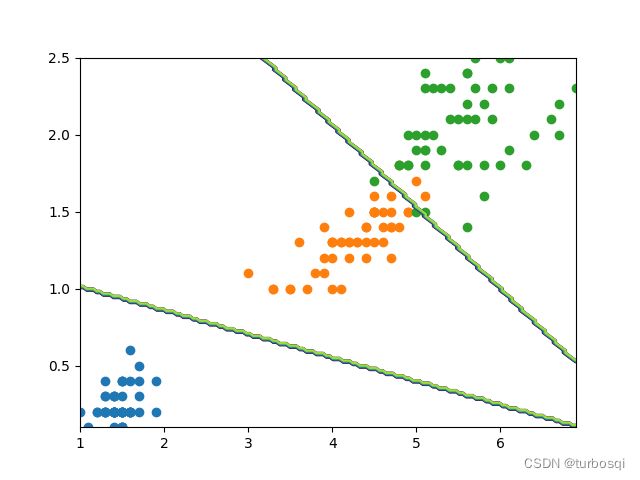

- 1.3.3. 测试结果

- 2. 非线性逻辑回归

-

- 2.1. 模拟实现

- 2.2. 效果展示

-

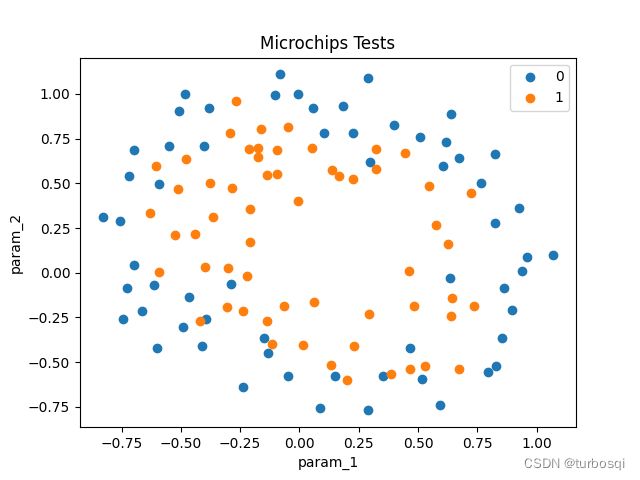

- 2.2.1. 原始数据集

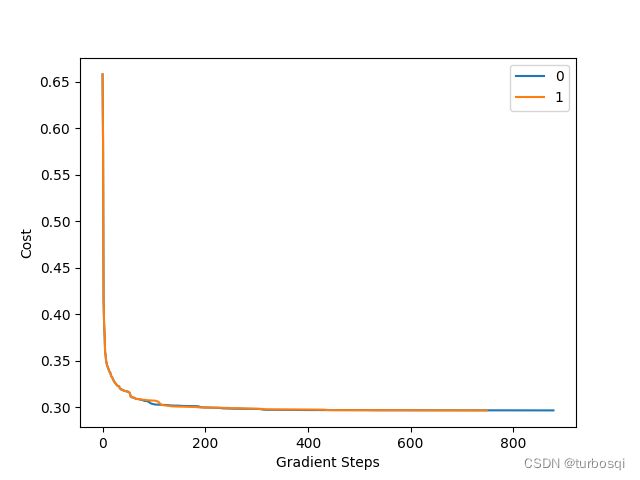

- 2.2.2. 损失函数

- 2.2.3. 预测结果

- 3. 逻辑回归问题

-

- 3.1. 二分类的实现

-

- 3.1.1. 导包操作

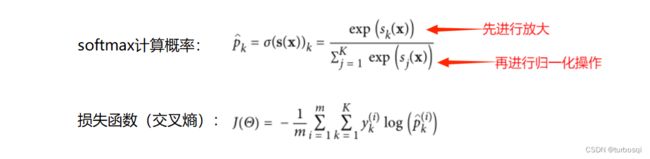

- 3.1.2. 值到概率的转换

- 3.1.3. 加载sklearn内置数据集

- 3.1.4. 进行训练

- 3.1.5. 画图展示

- 3.1.6. 边界绘制

- 3.2. 多分类的实现

-

- 3.2.1. 获取数据

- 3.2.2. 进行训练

- 3.2.3. 进行预测

- 3.2.4. 绘制图形

Logistic Regression

1. Linear Logistic Regression

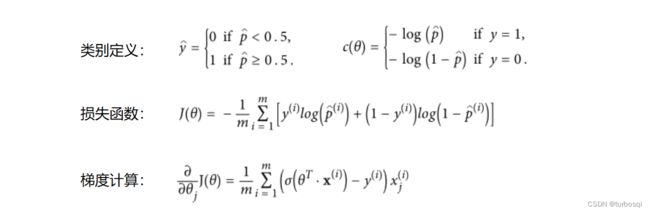

1.1. Theoretical Basis
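As a hedged summary of the theory, the class in section 1.2.2 appears to implement the standard binary logistic regression formulas below. The notation is chosen here for illustration and is not taken from the article: $m$ is the number of samples, $x^{(i)}$ is a feature row with a leading 1, $y^{(i)} \in \{0, 1\}$ is its label, and $\theta$ is the parameter vector. The three lines correspond to the `hypothesis`, `cost_function`, and `gradient_step` methods respectively.

```latex
h_\theta(x) = \sigma(\theta^{T} x) = \frac{1}{1 + e^{-\theta^{T} x}}

J(\theta) = -\frac{1}{m} \sum_{i=1}^{m}
  \left[ y^{(i)} \log h_\theta(x^{(i)})
       + (1 - y^{(i)}) \log\left(1 - h_\theta(x^{(i)})\right) \right]

\frac{\partial J(\theta)}{\partial \theta_j}
  = \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}
```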

1.2. Steps for Simulating Logistic Regression

1.2.1. Dataset

(iris.csv)

sepal_length,sepal_width,petal_length,petal_width,class

5.1,3.5,1.4,0.2,SETOSA

4.9,3.0,1.4,0.2,SETOSA

4.7,3.2,1.3,0.2,SETOSA

4.6,3.1,1.5,0.2,SETOSA

5.0,3.6,1.4,0.2,SETOSA

5.4,3.9,1.7,0.4,SETOSA

4.6,3.4,1.4,0.3,SETOSA

5.0,3.4,1.5,0.2,SETOSA

4.4,2.9,1.4,0.2,SETOSA

4.9,3.1,1.5,0.1,SETOSA

5.4,3.7,1.5,0.2,SETOSA

4.8,3.4,1.6,0.2,SETOSA

4.8,3.0,1.4,0.1,SETOSA

4.3,3.0,1.1,0.1,SETOSA

5.8,4.0,1.2,0.2,SETOSA

5.7,4.4,1.5,0.4,SETOSA

5.4,3.9,1.3,0.4,SETOSA

5.1,3.5,1.4,0.3,SETOSA

5.7,3.8,1.7,0.3,SETOSA

5.1,3.8,1.5,0.3,SETOSA

5.4,3.4,1.7,0.2,SETOSA

5.1,3.7,1.5,0.4,SETOSA

4.6,3.6,1.0,0.2,SETOSA

5.1,3.3,1.7,0.5,SETOSA

4.8,3.4,1.9,0.2,SETOSA

5.0,3.0,1.6,0.2,SETOSA

5.0,3.4,1.6,0.4,SETOSA

5.2,3.5,1.5,0.2,SETOSA

5.2,3.4,1.4,0.2,SETOSA

4.7,3.2,1.6,0.2,SETOSA

4.8,3.1,1.6,0.2,SETOSA

5.4,3.4,1.5,0.4,SETOSA

5.2,4.1,1.5,0.1,SETOSA

5.5,4.2,1.4,0.2,SETOSA

4.9,3.1,1.5,0.1,SETOSA

5.0,3.2,1.2,0.2,SETOSA

5.5,3.5,1.3,0.2,SETOSA

4.9,3.1,1.5,0.1,SETOSA

4.4,3.0,1.3,0.2,SETOSA

5.1,3.4,1.5,0.2,SETOSA

5.0,3.5,1.3,0.3,SETOSA

4.5,2.3,1.3,0.3,SETOSA

4.4,3.2,1.3,0.2,SETOSA

5.0,3.5,1.6,0.6,SETOSA

5.1,3.8,1.9,0.4,SETOSA

4.8,3.0,1.4,0.3,SETOSA

5.1,3.8,1.6,0.2,SETOSA

4.6,3.2,1.4,0.2,SETOSA

5.3,3.7,1.5,0.2,SETOSA

5.0,3.3,1.4,0.2,SETOSA

7.0,3.2,4.7,1.4,VERSICOLOR

6.4,3.2,4.5,1.5,VERSICOLOR

6.9,3.1,4.9,1.5,VERSICOLOR

5.5,2.3,4.0,1.3,VERSICOLOR

6.5,2.8,4.6,1.5,VERSICOLOR

5.7,2.8,4.5,1.3,VERSICOLOR

6.3,3.3,4.7,1.6,VERSICOLOR

4.9,2.4,3.3,1.0,VERSICOLOR

6.6,2.9,4.6,1.3,VERSICOLOR

5.2,2.7,3.9,1.4,VERSICOLOR

5.0,2.0,3.5,1.0,VERSICOLOR

5.9,3.0,4.2,1.5,VERSICOLOR

6.0,2.2,4.0,1.0,VERSICOLOR

6.1,2.9,4.7,1.4,VERSICOLOR

5.6,2.9,3.6,1.3,VERSICOLOR

6.7,3.1,4.4,1.4,VERSICOLOR

5.6,3.0,4.5,1.5,VERSICOLOR

5.8,2.7,4.1,1.0,VERSICOLOR

6.2,2.2,4.5,1.5,VERSICOLOR

5.6,2.5,3.9,1.1,VERSICOLOR

5.9,3.2,4.8,1.8,VERSICOLOR

6.1,2.8,4.0,1.3,VERSICOLOR

6.3,2.5,4.9,1.5,VERSICOLOR

6.1,2.8,4.7,1.2,VERSICOLOR

6.4,2.9,4.3,1.3,VERSICOLOR

6.6,3.0,4.4,1.4,VERSICOLOR

6.8,2.8,4.8,1.4,VERSICOLOR

6.7,3.0,5.0,1.7,VERSICOLOR

6.0,2.9,4.5,1.5,VERSICOLOR

5.7,2.6,3.5,1.0,VERSICOLOR

5.5,2.4,3.8,1.1,VERSICOLOR

5.5,2.4,3.7,1.0,VERSICOLOR

5.8,2.7,3.9,1.2,VERSICOLOR

6.0,2.7,5.1,1.6,VERSICOLOR

5.4,3.0,4.5,1.5,VERSICOLOR

6.0,3.4,4.5,1.6,VERSICOLOR

6.7,3.1,4.7,1.5,VERSICOLOR

6.3,2.3,4.4,1.3,VERSICOLOR

5.6,3.0,4.1,1.3,VERSICOLOR

5.5,2.5,4.0,1.3,VERSICOLOR

5.5,2.6,4.4,1.2,VERSICOLOR

6.1,3.0,4.6,1.4,VERSICOLOR

5.8,2.6,4.0,1.2,VERSICOLOR

5.0,2.3,3.3,1.0,VERSICOLOR

5.6,2.7,4.2,1.3,VERSICOLOR

5.7,3.0,4.2,1.2,VERSICOLOR

5.7,2.9,4.2,1.3,VERSICOLOR

6.2,2.9,4.3,1.3,VERSICOLOR

5.1,2.5,3.0,1.1,VERSICOLOR

5.7,2.8,4.1,1.3,VERSICOLOR

6.3,3.3,6.0,2.5,VIRGINICA

5.8,2.7,5.1,1.9,VIRGINICA

7.1,3.0,5.9,2.1,VIRGINICA

6.3,2.9,5.6,1.8,VIRGINICA

6.5,3.0,5.8,2.2,VIRGINICA

7.6,3.0,6.6,2.1,VIRGINICA

4.9,2.5,4.5,1.7,VIRGINICA

7.3,2.9,6.3,1.8,VIRGINICA

6.7,2.5,5.8,1.8,VIRGINICA

7.2,3.6,6.1,2.5,VIRGINICA

6.5,3.2,5.1,2.0,VIRGINICA

6.4,2.7,5.3,1.9,VIRGINICA

6.8,3.0,5.5,2.1,VIRGINICA

5.7,2.5,5.0,2.0,VIRGINICA

5.8,2.8,5.1,2.4,VIRGINICA

6.4,3.2,5.3,2.3,VIRGINICA

6.5,3.0,5.5,1.8,VIRGINICA

7.7,3.8,6.7,2.2,VIRGINICA

7.7,2.6,6.9,2.3,VIRGINICA

6.0,2.2,5.0,1.5,VIRGINICA

6.9,3.2,5.7,2.3,VIRGINICA

5.6,2.8,4.9,2.0,VIRGINICA

7.7,2.8,6.7,2.0,VIRGINICA

6.3,2.7,4.9,1.8,VIRGINICA

6.7,3.3,5.7,2.1,VIRGINICA

7.2,3.2,6.0,1.8,VIRGINICA

6.2,2.8,4.8,1.8,VIRGINICA

6.1,3.0,4.9,1.8,VIRGINICA

6.4,2.8,5.6,2.1,VIRGINICA

7.2,3.0,5.8,1.6,VIRGINICA

7.4,2.8,6.1,1.9,VIRGINICA

7.9,3.8,6.4,2.0,VIRGINICA

6.4,2.8,5.6,2.2,VIRGINICA

6.3,2.8,5.1,1.5,VIRGINICA

6.1,2.6,5.6,1.4,VIRGINICA

7.7,3.0,6.1,2.3,VIRGINICA

6.3,3.4,5.6,2.4,VIRGINICA

6.4,3.1,5.5,1.8,VIRGINICA

6.0,3.0,4.8,1.8,VIRGINICA

6.9,3.1,5.4,2.1,VIRGINICA

6.7,3.1,5.6,2.4,VIRGINICA

6.9,3.1,5.1,2.3,VIRGINICA

5.8,2.7,5.1,1.9,VIRGINICA

6.8,3.2,5.9,2.3,VIRGINICA

6.7,3.3,5.7,2.5,VIRGINICA

6.7,3.0,5.2,2.3,VIRGINICA

6.3,2.5,5.0,1.9,VIRGINICA

6.5,3.0,5.2,2.0,VIRGINICA

6.2,3.4,5.4,2.3,VIRGINICA

5.9,3.0,5.1,1.8,VIRGINICA

1.2.2. Simulated Logistic Regression Class Module

(logist_regression.py)

import numpy as np
from scipy.optimize import minimize

from utils.features import prepare_for_training
from utils.hypothesis import sigmoid


class LogisticRegression:
    def __init__(self, data, labels, polynomial_degree=0, sinusoid_degree=0, normalize_data=False):
        # Preprocess the data; among other things this prepends a column of ones (bias term).
        # Normalization defaults to off: predict() re-runs prepare_for_training on its own
        # input, so the statistics would otherwise come from the prediction data.
        (
            data_processed,
            features_mean,
            features_deviation,
        ) = prepare_for_training(data, polynomial_degree, sinusoid_degree, normalize_data)

        self.data = data_processed
        self.labels = labels
        # Distinct class labels
        self.unique_labels = np.unique(labels)
        # Mean and standard deviation (both 0 when normalization is off)
        self.features_mean = features_mean
        self.features_deviation = features_deviation
        self.polynomial_degree = polynomial_degree
        self.sinusoid_degree = sinusoid_degree
        # Whether to normalize the features
        self.normalize_data = normalize_data

        # Number of features after preprocessing
        num_features = self.data.shape[1]
        # Number of classes
        num_unique_labels = self.unique_labels.shape[0]
        # One row per class, one column per feature
        # (3x3 for the iris example: three classes, two features plus the bias column)
        self.theta = np.zeros((num_unique_labels, num_features))

    def train(self, max_iterations=1000):
        """Train one binary classifier per class (one-vs-rest)."""
        # Cost history of each classifier
        cost_histories = []
        num_features = self.data.shape[1]
        for label_index, unique_label in enumerate(self.unique_labels):
            # Initial theta for the current class
            current_initial_theta = np.copy(self.theta[label_index].reshape(num_features, 1))
            # 1.0 where the sample belongs to the current class, 0.0 otherwise
            current_labels = (self.labels == unique_label).astype(float)
            # Optimize theta for the current class
            (current_theta, cost_history) = LogisticRegression.gradient_descent(
                self.data, current_labels, current_initial_theta, max_iterations
            )
            self.theta[label_index] = current_theta.T
            cost_histories.append(cost_history)
        return self.theta, cost_histories

    @staticmethod
    def gradient_descent(data, labels, current_initial_theta, max_iterations):
        cost_history = []
        num_features = data.shape[1]
        result = minimize(
            # Objective: the cost for the current theta
            lambda current_theta: LogisticRegression.cost_function(
                data, labels, current_theta.reshape(num_features, 1)
            ),
            # Initial parameters (minimize expects a 1-D array)
            current_initial_theta.flatten(),
            # Optimization method: conjugate gradient
            method='CG',
            # Gradient of the cost
            jac=lambda current_theta: LogisticRegression.gradient_step(
                data, labels, current_theta.reshape(num_features, 1)
            ),
            # Record the cost after each iteration
            callback=lambda current_theta: cost_history.append(
                LogisticRegression.cost_function(data, labels, current_theta.reshape(num_features, 1))
            ),
            # Maximum number of iterations
            options={'maxiter': max_iterations},
        )
        if not result.success:
            raise AttributeError('Cannot minimize cost function: ' + result.message)
        optimized_theta = result.x.reshape(num_features, 1)
        return optimized_theta, cost_history

    @staticmethod
    def cost_function(data, labels, theta):
        # Total number of samples
        num_examples = data.shape[0]
        # Predicted probabilities
        predictions = LogisticRegression.hypothesis(data, theta)
        # Cost contributed by samples that belong to the current class
        y_is_set_cost = np.dot(labels[labels == 1].T, np.log(predictions[labels == 1]))
        # Cost contributed by samples that do not belong to it
        y_is_not_set_cost = np.dot(1 - labels[labels == 0].T, np.log(1 - predictions[labels == 0]))
        # Average cross-entropy cost
        cost = (-1 / num_examples) * (y_is_set_cost + y_is_not_set_cost)
        return cost

    @staticmethod
    def hypothesis(data, theta):
        predictions = sigmoid(np.dot(data, theta))
        return predictions

    @staticmethod
    def gradient_step(data, labels, theta):
        num_examples = labels.shape[0]
        predictions = LogisticRegression.hypothesis(data, theta)
        label_diff = predictions - labels
        gradients = (1 / num_examples) * np.dot(data.T, label_diff)
        # Flatten into a 1-D vector, as minimize expects
        return gradients.T.flatten()

    def predict(self, data):
        # Number of samples to predict
        num_examples = data.shape[0]
        data_processed = prepare_for_training(
            data, self.polynomial_degree, self.sinusoid_degree, self.normalize_data
        )[0]
        # One probability column per class
        prob = LogisticRegression.hypothesis(data_processed, self.theta.T)
        # Pick the class with the highest probability
        max_prob_index = np.argmax(prob, axis=1)
        class_prediction = np.empty(max_prob_index.shape, dtype=object)
        for index, label in enumerate(self.unique_labels):
            class_prediction[max_prob_index == index] = label
        return class_prediction.reshape((num_examples, 1))

1.2.3. Test Module

(logistic_regression_with_linear_boundary.py)

import numpy as np
import matplotlib.pyplot as plt
import pandas as pd

# Import the simulated logistic regression class
from logist_regression import LogisticRegression

data = pd.read_csv('../data/iris.csv')

# Iris classes
iris_types = ['SETOSA', 'VERSICOLOR', 'VIRGINICA']

# Train on two of the iris features
x1_axis = 'petal_length'
x2_axis = 'petal_width'

# Scatter plot of the raw data
for iris_type in iris_types:
    plt.scatter(
        data[x1_axis][data['class'] == iris_type],
        data[x2_axis][data['class'] == iris_type],
        label=iris_type
    )
plt.legend()
plt.show()

# Total number of samples
num_examples = data.shape[0]

# Take the 'petal_length' and 'petal_width' columns as a 150x2 array
x_train = data[[x1_axis, x2_axis]].values.reshape((num_examples, 2))

# Take the 'class' column as a 150x1 array
y_train = data['class'].values.reshape((num_examples, 1))

# Maximum number of iterations
max_iterations = 1000
polynomial_degree = 0
sinusoid_degree = 0

logistic_regression = LogisticRegression(x_train, y_train, polynomial_degree, sinusoid_degree)

# Train; returns the full theta matrix and the cost history of each classifier
thetas, cost_histories = logistic_regression.train(max_iterations)
labels = logistic_regression.unique_labels

# Plot the cost histories
plt.plot(range(len(cost_histories[0])), cost_histories[0], label=labels[0])
plt.plot(range(len(cost_histories[1])), cost_histories[1], label=labels[1])
plt.plot(range(len(cost_histories[2])), cost_histories[2], label=labels[2])
plt.legend()
plt.show()

# Predict on the training set and report the accuracy in percent
y_train_predict = logistic_regression.predict(x_train)
precision = np.sum(y_train_predict == y_train) / y_train.shape[0] * 100
print("precision:", precision)

# Ranges of the two features
x_min = np.min(x_train[:, 0])
x_max = np.max(x_train[:, 0])
y_min = np.min(x_train[:, 1])
y_max = np.max(x_train[:, 1])

sample = 150

# linspace arguments: start, stop, number of values
X = np.linspace(x_min, x_max, sample)
Y = np.linspace(y_min, y_max, sample)

# Three 150x150 zero matrices, one per class
SETOSA = np.zeros((sample, sample))
VERSICOLOR = np.zeros((sample, sample))
VIRGINICA = np.zeros((sample, sample))

# Predict the class of every grid point.
# plt.contour(X, Y, Z) expects Z[row, column] = Z[y_index, x_index].
for x_index, x in enumerate(X):
    for y_index, y in enumerate(Y):
        point = np.array([[x, y]])
        prediction = logistic_regression.predict(point)[0][0]
        if prediction == "SETOSA":
            SETOSA[y_index][x_index] = 1
        elif prediction == "VERSICOLOR":
            VERSICOLOR[y_index][x_index] = 1
        elif prediction == "VIRGINICA":
            VIRGINICA[y_index][x_index] = 1

# Plot the samples together with the class boundaries
for iris_type in iris_types:
    plt.scatter(
        x_train[(y_train == iris_type).flatten(), 0],
        x_train[(y_train == iris_type).flatten(), 1],
        label=iris_type
    )

plt.contour(X, Y, SETOSA)
plt.contour(X, Y, VERSICOLOR)
plt.contour(X, Y, VIRGINICA)
plt.legend()
plt.show()

1.2.4. Data Preprocessing Module

(prepare_for_training.py)

"""Prepares the dataset for training"""

import numpy as np

from .normalize import normalize
from .generate_sinusoids import generate_sinusoids
from .generate_polynomials import generate_polynomials


def prepare_for_training(data, polynomial_degree=0, sinusoid_degree=0, normalize_data=True):
    # Total number of samples
    num_examples = data.shape[0]

    data_processed = np.copy(data)

    # Normalization (optional)
    features_mean = 0
    features_deviation = 0
    data_normalized = data_processed
    if normalize_data:
        (
            data_normalized,
            features_mean,
            features_deviation
        ) = normalize(data_processed)

        data_processed = data_normalized

    # Sinusoidal feature transform
    if sinusoid_degree > 0:
        sinusoids = generate_sinusoids(data_normalized, sinusoid_degree)
        data_processed = np.concatenate((data_processed, sinusoids), axis=1)

    # Polynomial feature transform
    if polynomial_degree > 0:
        polynomials = generate_polynomials(data_normalized, polynomial_degree, normalize_data)
        data_processed = np.concatenate((data_processed, polynomials), axis=1)

    # Prepend a column of ones (bias term)
    data_processed = np.hstack((np.ones((num_examples, 1)), data_processed))

    return data_processed, features_mean, features_deviation

1.2.5. Sigmoid Function Module

(sigmoid.py)

import numpy as np


def sigmoid(matrix):
    """Applies sigmoid function to NumPy matrix"""
    return 1 / (1 + np.exp(-matrix))
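The one-line sigmoid above evaluates `exp(-x)`, which can overflow for inputs that are very negative. As a minimal sketch (not part of the article's code, and written for scalars with only the standard library), a numerically safer variant picks the formula according to the sign of the input. The name `stable_sigmoid` is hypothetical.

```python
import math


def stable_sigmoid(z: float) -> float:
    """Scalar sigmoid that avoids overflow in exp for large |z|."""
    if z >= 0:
        # exp(-z) is at most 1 here, so it cannot overflow
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, exp(z) is at most 1, so this form cannot overflow either
    ez = math.exp(z)
    return ez / (1.0 + ez)


values = [stable_sigmoid(z) for z in (-1000.0, -2.0, 0.0, 2.0, 1000.0)]
print(values)
```

The two branches are algebraically identical; only the direction of the exponent changes, so the outputs stay in the range 0 to 1 for any finite input.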

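1.3. Results

1.3.1. Dataset Distribution

1.3.2. Loss Function

Conclusion: the loss decreases as the optimization proceeds; the results for SETOSA and VIRGINICA are comparatively good.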
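To make the loss values in this plot easier to interpret, here is a small standard-library sketch of the binary cross-entropy that the `cost_function` method computes. The function name `log_loss` and the sample probabilities are illustrative assumptions, not values from the article's run.

```python
import math


def log_loss(labels, probabilities):
    """Mean binary cross-entropy; labels are 0/1, probabilities lie in (0, 1)."""
    total = 0.0
    for y, p in zip(labels, probabilities):
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(labels)


# Confident, mostly correct predictions give a low loss
confident = log_loss([1, 0, 1], [0.9, 0.1, 0.8])
# Predicting 0.5 everywhere gives exactly log(2)
uncertain = log_loss([1, 0, 1], [0.5, 0.5, 0.5])
print(confident, uncertain)
```

A classifier whose curve settles well below log(2) (about 0.693) is doing better than guessing 0.5 for every sample.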

1.3.3. Test Results

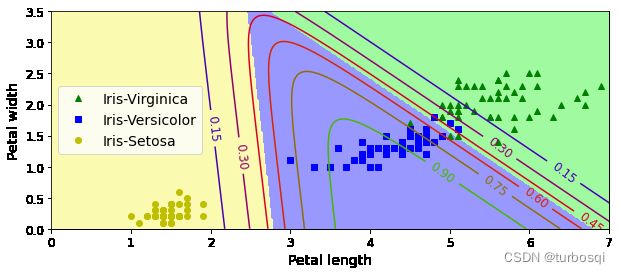

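Conclusion: the above is a three-class task on the iris dataset. Logistic regression is inherently a binary classifier, so three binary classifiers (one per class, each separating that class from the rest) are combined here to solve the three-class problem. The horizontal axis is petal length and the vertical axis is petal width; the three colors represent the three iris species. The left plot shows the original dataset and the right plot shows the multi-class result (four lines).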
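The "three binary classifiers" idea can be sketched without any library. The helper names below (`one_vs_rest_labels`, `pick_class`) and the scores are hypothetical and only illustrate the label conversion done in `train` and the arg-max selection done in `predict`.

```python
def one_vs_rest_labels(labels, positive_class):
    """1.0 for samples of positive_class, 0.0 for everything else."""
    return [1.0 if label == positive_class else 0.0 for label in labels]


def pick_class(class_names, scores):
    """Return the class whose binary classifier gave the highest score."""
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return class_names[best_index]


classes = ['SETOSA', 'VERSICOLOR', 'VIRGINICA']
labels = ['SETOSA', 'VIRGINICA', 'VERSICOLOR', 'SETOSA']

# One binary target vector per class, as used to train each classifier
binary_targets = {name: one_vs_rest_labels(labels, name) for name in classes}

# Hypothetical scores of the three binary classifiers for a single sample
predicted = pick_class(classes, [0.10, 0.35, 0.80])
print(binary_targets, predicted)
```

Each classifier only answers "this class or not"; the final label is whichever classifier is most confident.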

2. Nonlinear Logistic Regression

2.1. Simulated Implementation

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

from logist_regression import LogisticRegression

data = pd.read_csv('../data/microchips-tests.csv')

# Class labels
validities = [0, 1]

# Choose two features
x_axis = 'param_1'
y_axis = 'param_2'

# Scatter plot of the raw data
for validity in validities:
    plt.scatter(
        data[x_axis][data['validity'] == validity],
        data[y_axis][data['validity'] == validity],
        label=validity
    )

plt.xlabel(x_axis)
plt.ylabel(y_axis)
plt.title('Microchips Tests')
plt.legend()
plt.show()

num_examples = data.shape[0]
x_train = data[[x_axis, y_axis]].values.reshape((num_examples, 2))
y_train = data['validity'].values.reshape((num_examples, 1))

# Training parameters
max_iterations = 100000
regularization_param = 0  # not used by the LogisticRegression class above
polynomial_degree = 5
sinusoid_degree = 0

# Logistic regression
logistic_regression = LogisticRegression(x_train, y_train, polynomial_degree, sinusoid_degree)

# Train
(thetas, costs) = logistic_regression.train(max_iterations)

# Column names for the theta values (not used further below)
columns = ['Theta ' + str(theta_index) for theta_index in range(thetas.shape[1])]

# Training results: cost history of each classifier
labels = logistic_regression.unique_labels

plt.plot(range(len(costs[0])), costs[0], label=labels[0])
plt.plot(range(len(costs[1])), costs[1], label=labels[1])

plt.xlabel('Gradient Steps')
plt.ylabel('Cost')
plt.legend()
plt.show()

# Predict on the training set
y_train_predictions = logistic_regression.predict(x_train)

# Accuracy in percent
precision = np.sum(y_train_predictions == y_train) / y_train.shape[0] * 100

print('Training Precision: {:5.4f}%'.format(precision))

num_examples = x_train.shape[0]
samples = 150
x_min = np.min(x_train[:, 0])
x_max = np.max(x_train[:, 0])

y_min = np.min(x_train[:, 1])
y_max = np.max(x_train[:, 1])

X = np.linspace(x_min, x_max, samples)
Y = np.linspace(y_min, y_max, samples)
Z = np.zeros((samples, samples))

# Predict every grid point.
# plt.contour(X, Y, Z) expects Z[row, column] = Z[y_index, x_index].
for x_index, x in enumerate(X):
    for y_index, y in enumerate(Y):
        point = np.array([[x, y]])
        Z[y_index][x_index] = logistic_regression.predict(point)[0][0]

positives = (y_train == 1).flatten()
negatives = (y_train == 0).flatten()

plt.scatter(x_train[negatives, 0], x_train[negatives, 1], label='0')
plt.scatter(x_train[positives, 0], x_train[positives, 1], label='1')

plt.contour(X, Y, Z)

plt.xlabel('param_1')
plt.ylabel('param_2')
plt.title('Microchips Tests')
plt.legend()

plt.show()

2.2. Results

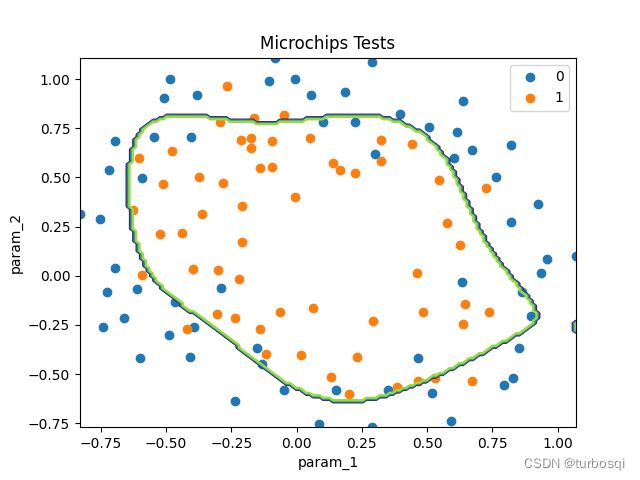

2.2.1. Original Dataset

2.2.2. Loss Function

Conclusion: the loss drops markedly as the number of iterations increases.

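2.2.3. Prediction Results

Conclusion: this example uses the microchip test dataset; the horizontal and vertical axes are two chip features. The left plot shows the original dataset and the right plot shows the predictions. The feature transform is polynomial_degree=5 (a nonlinear mapping) and the iteration limit is 100000, which gives a good prediction result.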
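The nonlinear boundary comes from the extra polynomial features, not from the model itself, which stays linear in its parameters. The `generate_polynomials` helper is not shown in the article, so the sketch below is only an assumption about what such a transform typically looks like for two features: every monomial of total degree 1 up to `degree`. The name `polynomial_terms` is hypothetical and the real helper may differ.

```python
def polynomial_terms(x1, x2, degree):
    """All monomials x1**(i - j) * x2**j for 1 <= i <= degree and 0 <= j <= i."""
    terms = []
    for i in range(1, degree + 1):
        for j in range(i + 1):
            terms.append((x1 ** (i - j)) * (x2 ** j))
    return terms


# Degree 2 yields: x1, x2, x1^2, x1*x2, x2^2
features = polynomial_terms(2.0, 3.0, 2)
print(features)

# Under this scheme, degree 5 expands two inputs into 20 terms
print(len(polynomial_terms(1.0, 1.0, 5)))
```

A linear boundary in this expanded feature space corresponds to a curved boundary in the original two-dimensional plot.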

3. Logistic Regression Problems

3.1. Binary Classification

3.1.1. Imports

import numpy as np
import os

# Jupyter magic: render plots inline in the notebook
%matplotlib inline
import matplotlib
import matplotlib.pyplot as plt

plt.rcParams['axes.labelsize'] = 14
plt.rcParams['xtick.labelsize'] = 12
plt.rcParams['ytick.labelsize'] = 12

import warnings
warnings.filterwarnings('ignore')

np.random.seed(42)

3.1.2. Converting Values to Probabilities

The sigmoid function converts a raw value into a probability: it maps any real number into the range 0 to 1, and classification is then done by comparing the resulting probabilities.

Code for the sigmoid function:

t = np.linspace(-10, 10, 100)
sig = 1 / (1 + np.exp(-t))

plt.figure(figsize=(9, 3))
plt.plot([-10, 10], [0, 0], "k-")
plt.plot([-10, 10], [0.5, 0.5], "k:")
plt.plot([-10, 10], [1, 1], "k:")
plt.plot([0, 0], [-1.1, 1.1], "k-")
plt.plot(t, sig, "b-", linewidth=2, label=r"$\sigma(t) = \frac{1}{1 + e^{-t}}$")
plt.xlabel("t")
plt.legend(loc="upper left", fontsize=20)
plt.axis([-10, 10, -0.1, 1.1])
plt.title('Figure 4-21. Logistic function')
plt.show()

The iris dataset:

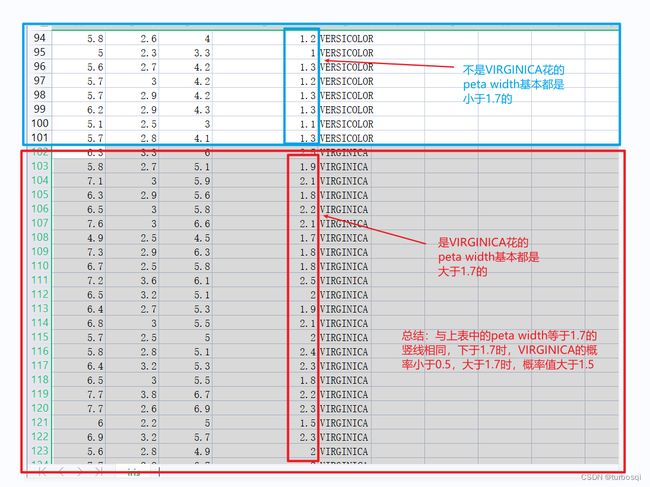

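3.1.3. Loading the Built-in sklearn Dataset

# The datasets module ships with the sklearn package
from sklearn import datasets

iris = datasets.load_iris()
list(iris.keys())

For a conventional (binary) logistic regression the labels must be transformed: 1 for samples of the current class, 0 for all other classes.

# Use a single feature: petal width (column 3)
X = iris['data'][:, 3:]

# Mark Virginica (target 2) as 1 and everything else as 0
y = (iris['target'] == 2).astype(int)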

3.1.4. Training

from sklearn.linear_model import LogisticRegression

log_res = LogisticRegression()
log_res.fit(X, y)

# Generate 1000 values between 0 and 3 and reshape them into a single column
X_new = np.linspace(0, 3, 1000).reshape(-1, 1)

# Predict the class probabilities
y_proba = log_res.predict_proba(X_new)

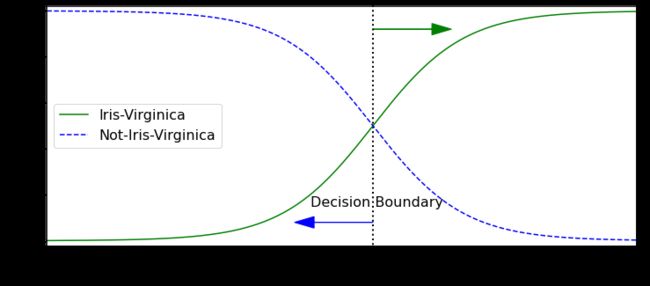

3.1.5. Plotting

As the value of the input feature changes, the predicted probability changes with it.

# As the petal width increases, the probability of the flower being Virginica increases
plt.figure(figsize=(12, 5))

# First grid value at which the Virginica probability reaches 0.5 (as a scalar)
decision_boundary = X_new[y_proba[:, 1] >= 0.5][0][0]

plt.plot([decision_boundary, decision_boundary], [-1, 2], 'k:', linewidth=2)
plt.plot(X_new, y_proba[:, 1], 'g-', label='Iris-Virginica')
plt.plot(X_new, y_proba[:, 0], 'b--', label='Not-Iris-Virginica')

plt.arrow(decision_boundary, 0.08, -0.3, 0, head_width=0.05, head_length=0.1, fc='b', ec='b')
plt.arrow(decision_boundary, 0.92, 0.3, 0, head_width=0.05, head_length=0.1, fc='g', ec='g')

plt.text(decision_boundary + 0.02, 0.15, 'Decision Boundary', fontsize=16, color='k', ha='center')

plt.xlabel('Petal width (cm)', fontsize=16)
plt.ylabel('y_proba', fontsize=16)
plt.axis([0, 3, -0.02, 1.02])
plt.legend(loc='center left', fontsize=16)
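The boundary in this plot is located by scanning the grid for the first probability of at least 0.5. For a one-feature model it can also be computed directly: the sigmoid equals 0.5 exactly where its argument is zero, so the boundary is at x = -bias / weight. The sketch below uses only the standard library with hypothetical parameter values; with scikit-learn the fitted values are available as the `coef_` and `intercept_` attributes of the model.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def boundary(weight, bias):
    """Input value at which sigmoid(weight * x + bias) equals 0.5."""
    return -bias / weight


# Hypothetical parameters, not the values fitted in the article
weight, bias = 4.0, -6.4

x_boundary = boundary(weight, bias)
probability_at_boundary = sigmoid(weight * x_boundary + bias)
print(x_boundary, probability_at_boundary)
```

Inputs above the boundary get a probability above 0.5 when the weight is positive, which matches the direction of the green arrow in the figure.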

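3.1.6. Drawing the Decision Boundary

- Build the coordinate data over a reasonable range, chosen according to the inputs actually used for training

# The grid below has two coordinates, so the model is first refit on two features
# (petal length and petal width); the classifier from 3.1.4 only saw petal width.
X = iris['data'][:, [2, 3]]
y = (iris['target'] == 2).astype(int)
log_res = LogisticRegression()
log_res.fit(X, y)

# Keep the range reasonably tight
# Checkerboard layout: the grid is the test data and must cover every point
x0, x1 = np.meshgrid(np.linspace(2.9, 7, 500).reshape(-1, 1), np.linspace(0.8, 2.7, 200).reshape(-1, 1))

- Combine the coordinates to obtain every test input point

X_new = np.c_[x0.ravel(), x1.ravel()]
# X_new.shape is (100000, 2)

- Predict to obtain the probability at every point

y_proba = log_res.predict_proba(X_new)

- Draw contour lines to show the decision boundary

plt.figure(figsize=(12, 8))
plt.plot(X[y == 0, 0], X[y == 0, 1], 'bs')
plt.plot(X[y == 1, 0], X[y == 1, 1], 'g^')

# Work with the green (Virginica) class:
# take its probability and reshape it to the grid
zz = y_proba[:, 1].reshape(x0.shape)
contour = plt.contour(x0, x1, zz, cmap=plt.cm.brg)
plt.clabel(contour, inline=1)
plt.axis([2.9, 7, 0.8, 2.7])
plt.text(3.2, 1.5, 'NOT Virginica', fontsize=16, color='b')
plt.text(6.2, 2.3, 'Virginica', fontsize=16, color='g')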

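3.2. Multiclass Classification

3.2.1. Getting the Data

# Petal length and petal width, with all three classes as targets
X = iris['data'][:, [2, 3]]
y = iris['target']

3.2.2. Training

# multi_class selects the multinomial (softmax) formulation; the lbfgs solver supports it.
# Recent scikit-learn releases deprecate this parameter.
softmax_reg = LogisticRegression(multi_class='multinomial', solver='lbfgs')

# Train
softmax_reg.fit(X, y)

3.2.3. Prediction

# Predict the class probabilities for petal length 5 and petal width 2
softmax_reg.predict_proba([[5, 2]])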
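In the multinomial setting, the probabilities returned for a sample come from a softmax over one raw score per class. As a minimal standard-library sketch (the scores are made up and the function is illustrative, not scikit-learn's internal code):

```python
import math


def softmax(scores):
    """Turn a list of raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for the three iris classes
probabilities = softmax([1.0, 2.0, 3.0])
predicted_class = probabilities.index(max(probabilities))
print(probabilities, predicted_class)
```

Unlike the one-vs-rest approach in section 1, all classes are normalized together here, so the probabilities of one sample always add up to 1.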

3.2.4. Plotting

from matplotlib.colors import ListedColormap

# Grid of test points covering the feature range
x0, x1 = np.meshgrid(
    np.linspace(0, 8, 500).reshape(-1, 1),
    np.linspace(0, 3.5, 200).reshape(-1, 1),
)
X_new = np.c_[x0.ravel(), x1.ravel()]

# Class probabilities and predicted class for every grid point
y_proba = softmax_reg.predict_proba(X_new)
y_predict = softmax_reg.predict(X_new)

# Probability of class 1 (Versicolor) and predicted class, reshaped to the grid
zz1 = y_proba[:, 1].reshape(x0.shape)
zz = y_predict.reshape(x0.shape)

plt.figure(figsize=(10, 4))
plt.plot(X[y == 2, 0], X[y == 2, 1], "g^", label="Iris-Virginica")
plt.plot(X[y == 1, 0], X[y == 1, 1], "bs", label="Iris-Versicolor")
plt.plot(X[y == 0, 0], X[y == 0, 1], "yo", label="Iris-Setosa")

custom_cmap = ListedColormap(['#fafab0', '#9898ff', '#a0faa0'])

# Filled regions show the predicted class; contour lines show the Versicolor probability
plt.contourf(x0, x1, zz, cmap=custom_cmap)
contour = plt.contour(x0, x1, zz1, cmap=plt.cm.brg)
plt.clabel(contour, inline=1, fontsize=12)
plt.xlabel("Petal length", fontsize=14)
plt.ylabel("Petal width", fontsize=14)
plt.legend(loc="center left", fontsize=14)
plt.axis([0, 7, 0, 3.5])
plt.show()