PyTorch: A Roundup of Commonly Used Functions

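- 1. Image preprocessing functions
  - 1.1 torchvision.datasets.ImageFolder()
- 2. Parameter optimization functions
  - 2.1 torch.optim.lr_scheduler.StepLR()
  - 2.2 optimizer.param_groups
- 3. Saving and loading models
  - 3.1 Saving models and model parameters with torch.save()
  - 3.2 Loading models with torch.load()
  - 3.3 Loading model parameters with model.load_state_dict()
    - 3.3.1 model.state_dict() and optimizer.state_dict()
  - 3.4 Summary: saving and loading model parameters
    - 3.4.1 Saving all model parameters
    - 3.4.2 Loading model parameters
    - 3.4.3 Saving a training checkpoint
    - 3.4.4 Loading a training checkpoint
    - 3.4.5 Saving/loading multiple models in one file
    - 3.4.6 Loading parameters from a different model
    - 3.4.7 Saving and loading across devices
      - 3.4.7.1 Save on GPU, load on CPU
      - 3.4.7.2 Save on GPU, load on GPU
      - 3.4.7.3 Save on CPU, load on GPU
- 4. Other functions
  - 4.1 torch.randperm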

1. Image preprocessing functions

1.1 torchvision.datasets.ImageFolder()

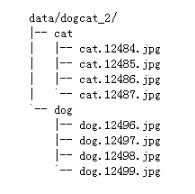

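- ImageFolder assumes that the images are organized into folders, one folder per class: each folder holds the images of a single class, and the folder name is the class name.

ImageFolder(root, transform=None, target_transform=None, loader=default_loader)

"""
Parameters:
1) root: root directory where the images are stored;
2) transform: a transformation applied to each PIL Image; it takes the original image as input and returns the transformed image;
3) target_transform: a transformation applied to the class label; it takes the target as input and returns the transformed target. If omitted, the target is left unchanged and is the class index 0, 1, 2, ...;
4) loader: how an image is loaded from its path; the default loader is usually sufficient.

Attributes of the resulting dataset:
self.classes: list of class names;
self.class_to_idx: dict mapping each class name to its index, matching the untransformed targets;
self.imgs: list of (image path, class index) tuples.
"""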

from torchvision import transforms
from torchvision.datasets import ImageFolder

transform = transforms.Compose([
    transforms.RandomResizedCrop(224),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.4, 0.4, 0.4], std=[0.2, 0.2, 0.2]),
])
dataset = ImageFolder('data1/dogcat_2/', transform=transform)

# In deep learning, image tensors are usually stored as C x H x W (channels x height x width)
print(dataset.classes)       # class names, taken from the sub-folder names
print(dataset.class_to_idx)  # classes are assigned the indices 0, 1, ... in order
print(dataset.imgs)          # path and class index of every image found in the folders

'''
Sample output (apparently from a Windows run with './data/train' as the root):
['cat', 'dog']
{'cat': 0, 'dog': 1}
[('./data/train\\cat\\cat.12484.jpg', 0),
 ('./data/train\\cat\\cat.12485.jpg', 0),
 ('./data/train\\cat\\cat.12486.jpg', 0),
 ('./data/train\\cat\\cat.12487.jpg', 0),
 ('./data/train\\dog\\dog.12496.jpg', 1),
 ('./data/train\\dog\\dog.12497.jpg', 1),
 ('./data/train\\dog\\dog.12498.jpg', 1),
 ('./data/train\\dog\\dog.12499.jpg', 1)]
'''

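2. Parameter optimization functions

2.1 torch.optim.lr_scheduler.StepLR()

- Learning-rate decay: every step_size epochs, the learning rate is multiplied by gamma. With step_size=7 and gamma=0.1, the learning rate drops to 1/10 of its previous value every 7 epochs.

from torch import optim

# params_to_update: the iterable of parameters to optimize, defined elsewhere
optimizer_ft = optim.Adam(params_to_update, lr=1e-2)
# Decay the learning rate to 1/10 of its value every 7 epochs
scheduler = optim.lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)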
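The decay schedule can be checked by recording the learning rate at each epoch. The sketch below is an illustration only: it swaps in SGD and a single dummy parameter (both assumptions, not part of the original snippet) so that it runs without a model.

```python
import torch
from torch import optim

# Hypothetical single parameter, present only so the optimizer has something to manage.
weight = torch.zeros(1, requires_grad=True)
optimizer = optim.SGD([weight], lr=1e-2)
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=7, gamma=0.1)

lr_per_epoch = []
for epoch in range(15):
    lr_per_epoch.append(optimizer.param_groups[0]["lr"])  # rate in effect during this epoch
    # ... forward pass and loss.backward() would go here ...
    optimizer.step()   # update the parameters first
    scheduler.step()   # then advance the schedule, once per epoch

for epoch, lr in enumerate(lr_per_epoch):
    print(f"epoch {epoch:2d}: lr = {lr:.0e}")
```

Epochs 0 to 6 run at the initial rate, epochs 7 to 13 at one tenth of it, and epoch 14 at one hundredth. Calling `scheduler.step()` after `optimizer.step()` is the order PyTorch expects.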

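2.2 optimizer.param_groups

- optimizer.param_groups is a list containing one dict per parameter group. When the optimizer is built from a single list of parameters, as in the example that follows, the list has length 1.

1) optimizer.param_groups[0] is a dict holding the group's parameters and hyperparameters. For Adam in the run shown here it has 7 keys: ['amsgrad', 'params', 'lr', 'betas', 'weight_decay', 'eps', 'maximize']. The exact set of keys depends on the optimizer and on the PyTorch version.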

import torch
import torch.optim as optim

w1 = torch.randn(3, 3)
w1.requires_grad = True
optimizer = optim.Adam([w1])
print(optimizer.param_groups)
print(next(iter(optimizer.param_groups))['lr'])

# Output
"""
[{'params': [tensor([[ 1.0950,  0.2128,  0.1464],
                     [ 0.0240, -0.4230, -0.3268],
                     [ 0.4877, -0.2145,  0.5996]], requires_grad=True)],
  'lr': 0.001,
  'betas': (0.9, 0.999),
  'eps': 1e-08,
  'weight_decay': 0,
  'amsgrad': False,
  'maximize': False}]
0.001
"""
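When an optimizer is constructed with several parameter groups, param_groups holds one dict per group, and each group's hyperparameters can be read or changed in place. A minimal sketch, assuming two hypothetical weight tensors that stand in for a backbone and a head:

```python
import torch
import torch.optim as optim

# Two hypothetical parameter tensors standing in for a "backbone" and a "head".
backbone_w = torch.randn(3, 3, requires_grad=True)
head_w = torch.randn(3, 1, requires_grad=True)

optimizer = optim.Adam([
    {"params": [backbone_w], "lr": 1e-4},  # per-group override
    {"params": [head_w]},                  # falls back to the default lr given next
], lr=1e-3)

print(len(optimizer.param_groups))                # one dict per group
print([g["lr"] for g in optimizer.param_groups])

# Manually halve every learning rate, a hand-rolled alternative to a scheduler.
for group in optimizer.param_groups:
    group["lr"] *= 0.5

print([g["lr"] for g in optimizer.param_groups])
```

Editing `group["lr"]` takes effect on the next `optimizer.step()`; this is essentially what the built-in schedulers do internally.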

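3. Saving and loading models

- By convention, saved PyTorch models use the file extension .pt or .pth.

3.1 Saving models and model parameters with torch.save()

- torch.save: saves a serialized object to disk. It uses Python's pickle module for serialization. Models, tensors, and dictionaries can all be saved with this function.

"""
torch.save(obj, f, pickle_module=pickle, pickle_protocol=2)

Parameters:
obj: the object to save
f: a file-like object (must implement write and flush) or a string containing a file name
pickle_module: the module used for pickling metadata and objects
pickle_protocol: can be specified to override the default pickle protocol
"""

# Save the entire model
torch.save(model, 'save.pt')

# Save only the trained weights
torch.save(model.state_dict(), 'save.pt')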

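3.2 Loading models with torch.load()

- torch.load: loads an object saved with torch.save(). It uses Python's unpickling facilities to deserialize the pickled object, with special handling for tensor storages: they are first deserialized on the CPU and then moved to the device they were saved from. If that fails (for example, because the current system does not have that device), an exception is raised; the map_location argument can remap storages to a different device. Users can extend this mechanism with their own location tags and deserialization methods via register_package.

"""
torch.load(f, map_location=None, pickle_module=pickle)

Parameters:
f: a file-like object (must implement read, readline, tell, and seek) or a string containing a file name
map_location: a function, torch.device, string, or dict specifying how to remap storage locations
pickle_module: the module used for unpickling metadata and objects (must match the pickle_module used to serialize the file)
"""

import io
import torch

# Load the saved object
torch.load('tensors.pt')
# Load all tensors onto the CPU
torch.load('tensors.pt', map_location=torch.device('cpu'))
# Load all tensors onto the CPU, using a function
torch.load('tensors.pt', map_location=lambda storage, loc: storage)
# Load all tensors onto GPU 1 (device index 1, i.e. the second GPU)
torch.load('tensors.pt', map_location=lambda storage, loc: storage.cuda(1))
# Map tensors from GPU 1 to GPU 0
torch.load('tensors.pt', map_location={'cuda:1': 'cuda:0'})
# Load a tensor from an io.BytesIO object (the file must be opened in binary mode)
with open('tensor.pt', 'rb') as f:
    buffer = io.BytesIO(f.read())
torch.load(buffer)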
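Because torch.save() and torch.load() accept any file-like object, a full round trip can be tried without touching the disk. The sketch below uses an in-memory buffer; the dictionary contents are made up for illustration.

```python
import io

import torch

# Hypothetical payload: a dict of tensors.
tensors = {
    "weights": torch.arange(6, dtype=torch.float32).reshape(2, 3),
    "step": torch.tensor(42),
}

buffer = io.BytesIO()
torch.save(tensors, buffer)  # serialize into memory instead of a file
buffer.seek(0)               # rewind before reading back

restored = torch.load(buffer, map_location=torch.device("cpu"))

print(restored["weights"].device)
print(torch.equal(restored["weights"], tensors["weights"]))
```

One caveat worth knowing: recent PyTorch releases (2.6 and later) default `torch.load` to `weights_only=True`, which is enough for tensors and state_dicts like the one above. Loading a fully pickled model object, as opposed to its state_dict, then requires `weights_only=False` and should only be done with files from a trusted source.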

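3.3 Loading model parameters with model.load_state_dict()

- model.load_state_dict: loads model parameters from a state dictionary (state_dict). It copies the parameters and buffers in state_dict into the model and its submodules.

model.load_state_dict(state_dict, strict=True)

"""
state_dict: a dict containing parameters and persistent buffers
strict: optional bool; whether to strictly require that the keys in state_dict match the keys returned by model.state_dict()
"""

# Save the weights as a state_dict (not the whole model) so they can be passed to load_state_dict()
torch.save(model.state_dict(), 'save.pt')
# model.load_state_dict() copies the loaded weights into the model's own weights
model.load_state_dict(torch.load("save.pt"))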

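3.3.1 model.state_dict() and optimizer.state_dict()

- A state_dict is a Python dictionary that maps each layer to its learnable parameters (such as weights and biases); the parameters themselves can also be obtained with model.parameters(). Only layers with learnable parameters (such as convolutional and linear layers) and registered buffers (such as BatchNorm's running_mean) have entries in the state_dict; layers without parameters, such as pooling layers, do not. Optimizer objects (torch.optim) also have a state_dict, which contains the optimizer's state and the hyperparameters in use.

- model.state_dict()

"""
model.state_dict()
Returns a dictionary containing the model's state: its parameters (weights and biases) and persistent buffers (e.g. running averages).
Only layers with learnable parameters or registered buffers have entries in the model's state_dict.
"""

model.state_dict().keys()
# Output (e.g. for a model made of a single layer with a weight and a bias)
# ['bias', 'weight']

- torch.optim.Optimizer.state_dict()

"""
torch.optim.Optimizer.state_dict()
Returns a dictionary containing the optimizer's state. It has two keys:
state: a dict holding the current optimization state; its content differs between optimizers.
param_groups: a list of dicts, one per parameter group, holding each group's hyperparameters and parameter indices.
"""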

# Author: Liuxin
# Time: 2022/5/18
import torch
import torch.nn as nn
import torch.nn.functional as F  # needed for F.relu in forward()
import torch.optim as optim

# Define the model
class TheModelClass(nn.Module):
    def __init__(self):
        super(TheModelClass, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

# Initialize the model
model = TheModelClass()

# Initialize the optimizer
optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

# Print the model's state_dict
print("Model's state_dict:")
for param_tensor in model.state_dict():
    print(param_tensor, "\t", model.state_dict()[param_tensor].size())
print(model.state_dict().keys())

# Print the optimizer's state_dict
print("Optimizer's state_dict:")
for var_name in optimizer.state_dict():
    print(var_name, "\t", optimizer.state_dict()[var_name])

# Output

Model's state_dict:
conv1.weight     torch.Size([6, 3, 5, 5])
conv1.bias       torch.Size([6])
conv2.weight     torch.Size([16, 6, 5, 5])
conv2.bias       torch.Size([16])
fc1.weight       torch.Size([120, 400])
fc1.bias         torch.Size([120])
fc2.weight       torch.Size([84, 120])
fc2.bias         torch.Size([84])
fc3.weight       torch.Size([10, 84])
fc3.bias         torch.Size([10])
odict_keys(['conv1.weight', 'conv1.bias', 'conv2.weight', 'conv2.bias', 'fc1.weight', 'fc1.bias', 'fc2.weight', 'fc2.bias', 'fc3.weight', 'fc3.bias'])
Optimizer's state_dict:
state    {}
param_groups     [{'lr': 0.001, 'momentum': 0.9, 'dampening': 0, 'weight_decay': 0, 'nesterov': False, 'maximize': False, 'params': [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]}]
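The distinction between learnable parameters, registered buffers, and parameter-free layers is easy to inspect directly. A small sketch, assuming a hypothetical four-layer network:

```python
import torch.nn as nn

# Hypothetical toy network: conv -> batch norm -> ReLU -> max pooling.
net = nn.Sequential(
    nn.Conv2d(3, 4, kernel_size=3),
    nn.BatchNorm2d(4),
    nn.ReLU(),
    nn.MaxPool2d(2),
)

param_names = [name for name, _ in net.named_parameters()]  # learnable tensors
buffer_names = [name for name, _ in net.named_buffers()]    # non-learnable state
state_keys = list(net.state_dict().keys())                  # parameters + persistent buffers

print("parameters:", param_names)
print("buffers:   ", buffer_names)
print("state_dict:", state_keys)
```

The convolution (index 0) and batch-norm layer (index 1) contribute entries, the batch-norm running statistics appear as buffers, and the ReLU and pooling layers (indices 2 and 3) contribute nothing.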

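3.4 Summary: saving and loading model parameters

3.4.1 Saving all model parameters

torch.save(model.state_dict(), PATH)

3.4.2 Loading model parameters

# PATH was written earlier, after training, with torch.save(model.state_dict(), PATH)
model = TheModelClass(*args, **kwargs)
model.load_state_dict(torch.load(PATH))
model.eval()

- To preserve a trained model, only its learned parameters need to be saved. Saving the state_dict with torch.save() makes the model easy to restore later, so this is the recommended way to save a model.

- After loading the parameters, call model.eval() before inference to put dropout and batch normalization layers into evaluation mode; otherwise predictions will differ from one call to the next.

- Note that load_state_dict() takes a dictionary object, not a file path, so the saved state_dict must first be deserialized with torch.load() and then passed to load_state_dict().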
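The effect of model.eval() on dropout can be observed by running the same input through a network twice in each mode. This is a sketch with a hypothetical three-layer network, not a model from the article:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
net = nn.Sequential(nn.Linear(8, 8), nn.Dropout(p=0.5), nn.Linear(8, 2))
x = torch.randn(4, 8)

net.train()                 # dropout active: random units are zeroed on each call
with torch.no_grad():
    train_a, train_b = net(x), net(x)

net.eval()                  # dropout disabled: the forward pass is deterministic
with torch.no_grad():
    eval_a, eval_b = net(x), net(x)

print("train-mode outputs identical:", torch.equal(train_a, train_b))
print("eval-mode outputs identical: ", torch.equal(eval_a, eval_b))
```

In evaluation mode the two outputs always match; in training mode they generally do not, because each call samples a fresh dropout mask.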

3.4.3 Saving a training checkpoint

torch.save({
    'epoch': epoch,
    'model_state_dict': model.state_dict(),
    'optimizer_state_dict': optimizer.state_dict(),
    'loss': loss,
    ...
}, PATH)

3.4.4 Loading a training checkpoint

model = TheModelClass(*args, **kwargs)
optimizer = TheOptimizerClass(*args, **kwargs)

# PATH points to a checkpoint written earlier as in 3.4.3
checkpoint = torch.load(PATH)
model.load_state_dict(checkpoint['model_state_dict'])
optimizer.load_state_dict(checkpoint['optimizer_state_dict'])
epoch = checkpoint['epoch']
loss = checkpoint['loss']

model.eval()   # for inference
# - or -
model.train()  # to resume training
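Putting 3.4.3 and 3.4.4 together, a save-and-resume cycle might look like the sketch below. The linear model, the random data, the helper functions, and the file name are all hypothetical stand-ins chosen to keep the example self-contained.

```python
import os
import tempfile

import torch
import torch.nn as nn
import torch.optim as optim

def make_model_and_optimizer():
    model = nn.Linear(4, 1)  # hypothetical stand-in for the real model
    optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)
    return model, optimizer

def train_one_epoch(model, optimizer, inputs, targets):
    optimizer.zero_grad()
    loss = nn.functional.mse_loss(model(inputs), targets)
    loss.backward()
    optimizer.step()
    return loss.item()

torch.manual_seed(0)
inputs, targets = torch.randn(16, 4), torch.randn(16, 1)

# First run: train for 3 epochs, then write a checkpoint.
model, optimizer = make_model_and_optimizer()
for epoch in range(3):
    loss = train_one_epoch(model, optimizer, inputs, targets)

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "checkpoint.pt")  # hypothetical file name
    torch.save({
        "epoch": epoch,
        "model_state_dict": model.state_dict(),
        "optimizer_state_dict": optimizer.state_dict(),
        "loss": loss,
    }, path)
    checkpoint = torch.load(path)

# Later run: rebuild the objects, restore their state, continue from the next epoch.
resumed_model, resumed_optimizer = make_model_and_optimizer()
resumed_model.load_state_dict(checkpoint["model_state_dict"])
resumed_optimizer.load_state_dict(checkpoint["optimizer_state_dict"])
start_epoch = checkpoint["epoch"] + 1

resumed_model.train()
for epoch in range(start_epoch, 5):
    loss = train_one_epoch(resumed_model, resumed_optimizer, inputs, targets)
    print(f"epoch {epoch}: loss = {loss:.4f}")
```

Restoring the optimizer state matters here because SGD with momentum keeps a per-parameter momentum buffer; without it, the resumed run would restart from zero momentum.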

3.4.5 Saving/loading multiple models in one file

- Save

torch.save({
    'modelA_state_dict': modelA.state_dict(),
    'modelB_state_dict': modelB.state_dict(),
    'optimizerA_state_dict': optimizerA.state_dict(),
    'optimizerB_state_dict': optimizerB.state_dict(),
    ...
}, PATH)

- Load

modelA = TheModelAClass(*args, **kwargs)
modelB = TheModelBClass(*args, **kwargs)
optimizerA = TheOptimizerAClass(*args, **kwargs)
optimizerB = TheOptimizerBClass(*args, **kwargs)

checkpoint = torch.load(PATH)
modelA.load_state_dict(checkpoint['modelA_state_dict'])
modelB.load_state_dict(checkpoint['modelB_state_dict'])
optimizerA.load_state_dict(checkpoint['optimizerA_state_dict'])
optimizerB.load_state_dict(checkpoint['optimizerB_state_dict'])

modelA.eval()   # for inference
modelB.eval()
# - or -
modelA.train()  # to resume training
modelB.train()

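3.4.6 Loading parameters from a different model

- Save

torch.save(modelA.state_dict(), PATH)

- Load

modelB = TheModelBClass(*args, **kwargs)
# strict=False ignores keys that do not match between the two models
modelB.load_state_dict(torch.load(PATH), strict=False)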
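With strict=False, load_state_dict() returns an object that reports which keys were skipped, which is useful for checking that only the intended layers were left untouched. A minimal sketch with two hypothetical model classes that share one layer:

```python
import torch
import torch.nn as nn

class ModelA(nn.Module):  # hypothetical source model
    def __init__(self):
        super().__init__()
        self.features = nn.Linear(8, 4)
        self.classifier = nn.Linear(4, 10)

class ModelB(nn.Module):  # hypothetical target: same features, different head
    def __init__(self):
        super().__init__()
        self.features = nn.Linear(8, 4)
        self.head = nn.Linear(4, 2)

model_a, model_b = ModelA(), ModelB()

# strict=False skips keys that do not match instead of raising an error.
result = model_b.load_state_dict(model_a.state_dict(), strict=False)

print("missing keys:   ", result.missing_keys)     # expected by model_b, absent from the state_dict
print("unexpected keys:", result.unexpected_keys)  # present in the state_dict, unknown to model_b
print("shared layer copied:", torch.equal(model_a.features.weight, model_b.features.weight))
```

Note that strict=False only tolerates missing or extra keys. A key that exists in both models but with a different tensor shape still raises an error.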

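3.4.7 Saving and loading across devices

3.4.7.1 Save on GPU, load on CPU

- When loading a model on the CPU that was trained on a GPU, pass map_location=torch.device('cpu') to torch.load(). map_location then dynamically remaps the tensors' underlying storage to the CPU.

# Save
torch.save(model.state_dict(), PATH)

# Load
device = torch.device('cpu')
model = TheModelClass(*args, **kwargs)
model.load_state_dict(torch.load(PATH, map_location=device))

# The code above works as written only if the model was trained on a single GPU.
# If the model was trained on multiple GPUs (wrapped in DataParallel), loading it
# on the CPU produces an error similar to:
# KeyError: 'unexpected key "module.conv1.weight" in state_dict'
# The reason is that, when a model is trained and saved with multiple GPUs, every
# parameter name carries a `module.` prefix. The prefix can be stripped from the
# keys at load time:

# Checkpoint originally saved from a DataParallel model
state_dict = torch.load('myfile.pth.tar', map_location=torch.device('cpu'))

# Build a new OrderedDict whose keys do not contain `module.`
from collections import OrderedDict
new_state_dict = OrderedDict()
for k, v in state_dict.items():
    name = k[7:] if k.startswith('module.') else k  # strip the leading `module.`
    new_state_dict[name] = v

# Load the parameters
model.load_state_dict(new_state_dict)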
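The prefix-stripping step can be tried without a multi-GPU machine by simulating the prefixed keys. In the sketch below, the `module.`-prefixed checkpoint is built by hand and `strip_module_prefix` is a hypothetical helper, not a PyTorch function.

```python
from collections import OrderedDict

import torch
import torch.nn as nn

model = nn.Linear(4, 2)  # hypothetical stand-in for the real network

# Simulate a checkpoint written from a DataParallel wrapper: every key gains "module.".
wrapped_state = OrderedDict(
    (f"module.{k}", v.clone()) for k, v in model.state_dict().items()
)

def strip_module_prefix(state_dict, prefix="module."):
    """Return a copy of state_dict with `prefix` removed from keys that start with it."""
    return OrderedDict(
        (k[len(prefix):] if k.startswith(prefix) else k, v)
        for k, v in state_dict.items()
    )

fresh_model = nn.Linear(4, 2)
fresh_model.load_state_dict(strip_module_prefix(wrapped_state))  # strict loading succeeds

print(list(wrapped_state.keys()))
print(list(fresh_model.state_dict().keys()))
```

An alternative that avoids the problem at the source is to save `model.module.state_dict()` instead of `model.state_dict()` when the model is wrapped in `nn.DataParallel`, since the wrapped network is exposed as the `module` attribute.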

3.4.7.2 Save on GPU, load on GPU

- To load a GPU-trained model onto a GPU, simply call model.to(torch.device('cuda')) to convert the initialized model into a CUDA model. Also make sure to call .to(torch.device('cuda')) on every input fed to the model. Note that my_tensor.to(device) returns a copy of my_tensor on the GPU and does not overwrite the original, so the tensor must be reassigned manually: my_tensor = my_tensor.to(torch.device('cuda')).

# Save
torch.save(model.state_dict(), PATH)

# Load
device = torch.device("cuda")
model = TheModelClass(*args, **kwargs)
model.load_state_dict(torch.load(PATH, map_location="cuda:0"))  # choose the GPU you want to use
model.to(device)

3.4.7.3 Save on CPU, load on GPU

- To load a model on a GPU that was trained and saved on the CPU, set the map_location argument of torch.load() to cuda:device_id. This loads the model onto the given device. Next, call model.to(torch.device('cuda')) to convert the model's parameter tensors to CUDA tensors. Finally, call .to(torch.device('cuda')) on all model inputs so that the data is on the same device as the model.

Note: my_tensor.to(device) returns a new copy of my_tensor on the GPU and does not overwrite my_tensor. Reassign it manually with my_tensor = my_tensor.to(torch.device('cuda')).

# Save
torch.save(model.state_dict(), PATH)

# Load
device = torch.device("cuda")
model = TheModelClass(*args, **kwargs)
model.load_state_dict(torch.load(PATH, map_location="cuda:0"))  # choose whatever GPU device number you want
model.to(device)
# Make sure to call input = input.to(device) on any input tensors that you feed to the model
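The three cross-device cases can be folded into one device-agnostic pattern: pick the device at run time and pass it as map_location. The following is a sketch under that assumption; the linear model and the file name are hypothetical, and the code also runs on a CPU-only machine.

```python
import os
import tempfile

import torch
import torch.nn as nn

# Use the GPU when one is visible, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(4, 2)  # hypothetical stand-in for the real model

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "weights.pt")  # hypothetical file name
    torch.save(model.state_dict(), path)
    state_dict = torch.load(path, map_location=device)  # remap storages to `device`

loaded = nn.Linear(4, 2)
loaded.load_state_dict(state_dict)
loaded.to(device)  # nn.Module.to() moves the module's parameters in place
loaded.eval()

batch = torch.randn(3, 4)
batch = batch.to(device)  # Tensor.to() returns a new tensor, so reassign it
with torch.no_grad():
    output = loaded(batch)

print(output.device, output.shape)
```

The asymmetry in the last two `.to()` calls is the point made in the notes: the module is moved in place, while a tensor has to be reassigned.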

Reference: https://zhuanlan.zhihu.com/p/505487325

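4. Other functions

4.1 torch.randperm

- torch.randperm(n): returns a random permutation of the integers from 0 to n-1 (both inclusive). The name is short for "random permutation".

torch.randperm(10)
# Output (varies from run to run):
# tensor([2, 3, 6, 7, 8, 9, 1, 5, 0, 4])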
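A common use of torch.randperm is shuffling sample indices, for example to split a dataset. The sketch below assumes a toy 10-sample dataset and an 80/20 split, both chosen only for illustration.

```python
import torch

generator = torch.Generator().manual_seed(0)  # fixed seed so the split is reproducible
num_samples = 10
perm = torch.randperm(num_samples, generator=generator)

# Hypothetical 80/20 train/validation split over the shuffled indices.
num_train = int(0.8 * num_samples)
train_idx, val_idx = perm[:num_train], perm[num_train:]

data = torch.arange(num_samples) * 10  # toy dataset: sample i has the value 10 * i
print(perm)
print(data[train_idx], data[val_idx])
```

Because the permutation contains every index exactly once, the two index sets are disjoint and together cover the whole dataset.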