决策树ID3算法手动实现

1. ID3算法

决策树中每一个非叶结点对应着一个非类别属性,树枝代表这个属性的值。一个叶结点代表从树根到叶结点之间的路径对应的记录所属的类别属性值。

每一个非叶结点都将与属性中具有最大信息量的非类别属性相关联。

采用信息增益来选择能够最好地将样本分类的属性。

信息增益基于信息论中熵的概念。ID3总是选择具有最高信息增益(或最大熵压缩)的属性作为当前结点的测试属性。该属性使得对结果划分中的样本分类所需的信息量最小,并反映划分的最小随机性或“不纯性”。

2. 数据

iris是鸢尾植物,这里存储了其萼片和花瓣的长宽,共4个属性,鸢尾植物分三类。所以该数据集一共包含4个特征变量,1个类别变量。共有150个样本,鸢尾有三个亚属,分别是山鸢尾(Iris-setosa),变色鸢尾(Iris-versicolor)和维吉尼亚鸢尾(Iris-virginica)。

也就是说我们的数据集里每个样本含有四个属性,并且我们的任务是个三分类问题。三个类别分别为:Iris Setosa(山鸢尾),Iris Versicolour(杂色鸢尾),Iris Virginica(维吉尼亚鸢尾)。

例如:5.1, 3.5, 1.4, 0.2, Iris-setosa

其中 5.1,3.5,1.4,0.2 代表当前样本的四个属性的取值, Iris-setosa 代表当前样本的类别。

3. 实验思路

首先,我们先规定数据集dataset的格式为二维列表,第一维代表数据集中的样本,第二维代表样本的具体取值。

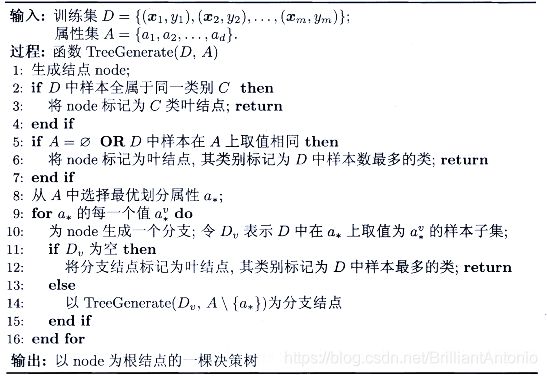

按照伪代码所示,我们编写代码,我们先定义几个函数:

3.1 计算信息熵

给定一个数据集(子集),该函数用于计算其对应于标签类别的信息熵

# calculate the information entropy of the dataset

def CalculateEntropy(dataset):

scale = len(dataset)

dictionary = {}

for data in dataset:

key = data[-1]

if key in dictionary:

dictionary[key] += 1

else:

dictionary[key] = 1

entropy = 0.0

for key in dictionary.keys():

p = dictionary[key] / scale

entropy -= p * log(p, 2)

return entropy

3.2 划分数据集

给定一个数据集(子集),将该数据集根据传入的属性值划分为大于属性值和小于属性值的两个部分。

请注意:我们的数据集上属性的取值是连续的,我们需要将其离散化,为简化问题,我们仅考虑两个分支划分,即:分为大于属性值和小于属性值两部分

# split dataset into two parts: less than or equal to value, and greater value

def SplitDataset(dataset, axis, value):

smaller = []

greater = []

for data in dataset:

if data[axis] <= value:

smaller.append(data)

else:

greater.append(data)

return smaller, greater

3.3 去除属性

给定一个数据集(子集),该函返回去除了某个属性的数据子集,返回的数据子集将被用于进一步划分,生成决策子树

# return the dataset removed feature[axis]

def RemoveFeature(dataset, axis):

subset = []

for data in dataset:

data.pop(axis)

subset.append(data)

return subset

3.4 选择合适的划分属性

给定一个数据集(子集),该函数采用分治的思想,对于每个属性依次求出其“最好”(使得信息增益最大)的划分点,但后全局比较并从中选择出最好的划分点。不过,我们可以将递归转化为循环进行显式的求解。考虑到函数的效率,我们的函数最终返回:最好的属性对应的轴bestSplitAxis,对应的取值bestSplitValue,划分后得到的两个分支数据子集bestSplitSmaller、bestSplitSmaller,对应的信息增益maxInformationGain

# choose the best split value leading to the max information gain of feature[axis]

def ChooseBestSplitValue(dataset):

maxInformationGain = 0.0

bestSplitAxis = -1

bestSplitValue = -1

bestSplitSmaller = None

bestSplitGreater = None

# compute

baseEntropy = CalculateEntropy(dataset)

for axis in range(len(dataset[0])-1):

feature = []

category = []

for data in dataset:

feature.append(data[axis])

category.append(data[-1])

uniqueValue = list(set(feature))

uniqueValue.sort()

candidate = [round((uniqueValue[i] + uniqueValue[i+1]) / 2.0, 2) for i in range(len(uniqueValue)-1)]

for value in candidate:

smaller, greater = SplitDataset(dataset, axis, value)

newEntropy = len(smaller) / len(dataset) * CalculateEntropy(smaller)

+ len(greater) / len(dataset) * CalculateEntropy(greater)

informationGain = baseEntropy - newEntropy

if informationGain > maxInformationGain:

bestSplitAxis = axis

bestSplitValue = value

bestSplitSmaller = smaller

bestSplitGreater = greater

maxInformationGain = informationGain

return bestSplitAxis, bestSplitValue, bestSplitSmaller, bestSplitGreater, maxInformationGain

3.5 分类

给定一个数据集(子集),该函数返回其中类别数最多的类别标签

# classify the dataset according to the major category

def Classify(dataset):

dictionary = {}

for data in dataset:

key = data[-1]

if key in dictionary:

dictionary[key] += 1

else:

dictionary[key] = 1

category = sorted(dictionary.items(), key=lambda x:x[1], reverse=True)

return category[0][0]

3.6 创建决策树

给定一个数据集(子集),该函数用于递归的创建决策树:

- 样本的类别都相同时,直接返回该类别

- 没有属性可供选择时,返回大多数类

- 否则则选择可以使信息增益达到最大的划分

注意:这里我们设置了阈值threshold当全局“最好”划分的信息增益小于阈值时,我们返回大多数类,以此达到预剪枝的效果

def CreateTree(dataset, head, threshold):

category = [data[-1] for data in dataset]

# the same category

if category.count(category[0]) == len(category):

return category[0]

# no feature left

if len(dataset[0]) == 1:

return Classify(dataset)

# split data

axis, value, smaller, greater, informationGain = ChooseBestSplitValue(dataset)

# not meet conditions

if axis == -1 or informationGain < threshold:

return Classify(dataset)

feature = head[axis]

head.pop(axis)

smaller = RemoveFeature(smaller, axis, value)

greater = RemoveFeature(greater, axis, value)

tree = {feature: {}}

tree[feature]['<= '+ str(value)] = CreateTree(smaller, deepcopy(head), threshold)

tree[feature]['> '+ str(value)] = CreateTree(greater, deepcopy(head), threshold)

return tree

4. 实验结果

我们加载数据集,设置阈值为0.00,代码运行我们的代码:

rawData = pd.read_csv('Iris.txt', names=['feature1', 'feature2', 'feature3', 'category'])()

threshold = 0.00

dataset = rawData.values.tolist()

head = rawData.columns.tolist()[:-1]

CreateTree(dataset, head, threshold)

可以看到如下结果:

Infomation Gain: 0.9182958340544894

Infomation Gain: 0.6901603707546748

Infomation Gain: 0.01953330524150132

{'feature1': {'<= 3.05': 'Iris-versicolor', '> 3.05': 'Iris-versicolor'}}

Infomation Gain: 0.03811321893076022

{'feature1': {'<= 3.15': 'Iris-virginica', '> 3.15': 'Iris-virginica'}}

{'feature3': {'<= 1.75': {'feature1': {'<= 3.05': 'Iris-versicolor', '> 3.05': 'Iris-versicolor'}}, '> 1.75': {'feature1': {'<= 3.15': 'Iris-virginica', '> 3.15': 'Iris-virginica'}}}}

{'feature2': {'<= 2.45': 'Iris-setosa', '> 2.45': {'feature3': {'<= 1.75': {'feature1': {'<= 3.05': 'Iris-versicolor', '> 3.05': 'Iris-versicolor'}}, '> 1.75': {'feature1': {'<= 3.15': 'Iris-virginica', '> 3.15': 'Iris-virginica'}}}}}}

Decision Tree: {'feature2': {'<= 2.45': 'Iris-setosa', '> 2.45': {'feature3': {'<= 1.75': {'feature1': {'<= 3.05': 'Iris-versicolor', '> 3.05': 'Iris-versicolor'}}, '> 1.75': {'feature1': {'<= 3.15': 'Iris-virginica', '> 3.15': 'Iris-virginica'}}}}}}

我们注意到,其中的3.05 和3.15划分后两个子树都包含在同一类中,观察其对应的信息增益,我们发现其值很小,分别为0.01953330524150132和0.03811321893076022。

此时我们可以进行调整阈值0.05,进行预剪枝处理:

rawData = pd.read_csv('Iris.txt', names=['feature1', 'feature2', 'feature3', 'category'])()

threshold = 0.05

dataset = rawData.values.tolist()

head = rawData.columns.tolist()[:-1]

CreateTree(dataset, head, threshold)

最终结果如下:

Infomation Gain: 0.9182958340544894

Infomation Gain: 0.6901603707546748

Infomation Gain: 0.01953330524150132

Infomation Gain: 0.03811321893076022

{'feature3': {'<= 1.75': 'Iris-versicolor', '> 1.75': 'Iris-virginica'}}

{'feature2': {'<= 2.45': 'Iris-setosa', '> 2.45': {'feature3': {'<= 1.75': 'Iris-versicolor', '> 1.75': 'Iris-virginica'}}}}

Decision Tree: {'feature2': {'<= 2.45': 'Iris-setosa', '> 2.45': {'feature3': {'<= 1.75': 'Iris-versicolor', '> 1.75': 'Iris-virginica'}}}}

我们可以清楚的看到,预剪枝后的决策树具有更加良好的结构。

本文为作者原创,转载请注明来源:https://blog.csdn.net/BrilliantAntonio/article/details/116885516?spm=1001.2014.3001.5501