Unable to find a valid cuDNN algorithm to run convolution (solution included)

A few days ago I hit the following error while running YOLO code on a cloud server:

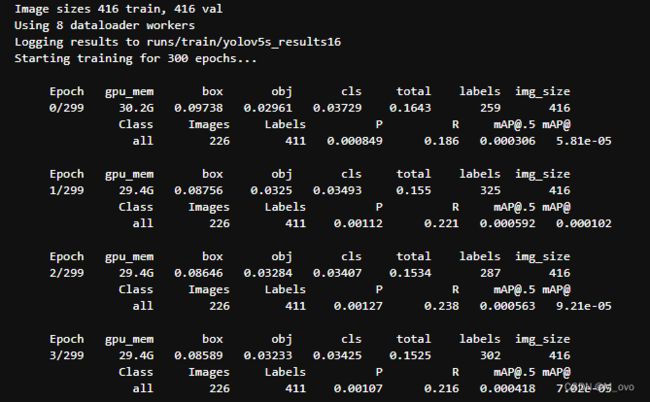

    autoanchor: Analyzing anchors... anchors/target = 5.04, Best Possible Recall (BPR) = 1.0000
    Image sizes 416 train, 416 val
    Using 4 dataloader workers
    Logging results to runs/train/yolov5s_results15
    Starting training for 300 epochs...
    Epoch gpu_mem box obj cls total labels img_size
    0%| | 0/10 [00:00
        main(opt)
      File "train.py", line 558, in main
        train(opt.hyp, opt, device)
      File "train.py", line 337, in train
        pred = model(imgs) # forward
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "/root/yolov5/models/yolo.py", line 123, in forward
        return self.forward_once(x, profile, visualize) # single-scale inference, train
      File "/root/yolov5/models/yolo.py", line 154, in forward_once
        x = m(x) # run
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "/root/yolov5/models/common.py", line 128, in forward
        return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/container.py", line 141, in forward
        input = module(input)
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "/root/yolov5/models/common.py", line 94, in forward
        return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "/root/yolov5/models/common.py", line 42, in forward
        return self.act(self.bn(self.conv(x)))
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/conv.py", line 447, in forward
        return self._conv_forward(input, self.weight, self.bias)
      File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/conv.py", line 444, in _conv_forward
        self.padding, self.dilation, self.groups)
    RuntimeError: Unable to find a valid cuDNN algorithm to run convolution

At first I tried all sorts of fixes, including updating Conda and reinstalling PyTorch. None of them helped.

It turned out that the batch size was too large, so the GPU ran out of memory. The GPU on the server is configured as follows:

    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 510.47.03    Driver Version: 510.47.03    CUDA Version: 11.6     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  Tesla V100-SXM2...  On   | 00000000:00:0E.0 Off |                    0 |
    | N/A   45C    P0    42W / 300W |      0MiB / 32768MiB |      0%      Default |
    |                               |                      |                  N/A |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                                  |
    |  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
    |        ID   ID                                                   Usage      |
    |=============================================================================|
    |  No running processes found                                                 |
    +-----------------------------------------------------------------------------+

It seems I overestimated what 32 GB of GPU memory can handle. After lowering the batch size from 256 back to 128, training ran normally, with GPU memory almost fully used.
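The fix here boils down to backing off the batch size until one training step fits in GPU memory. Below is a minimal sketch of that idea in plain Python. The function `find_working_batch_size`, the `OOM_MARKERS` tuple, and the `run_one_step` callable are hypothetical names made up for illustration; they are not part of YOLOv5 or PyTorch. The assumption is that a failed step raises a `RuntimeError` whose message matches one of the markers, as in the traceback in this post.

```python
# Error fragments treated as "probably out of GPU memory". This list is an
# assumption based on the failure described in this post, not an official one.
OOM_MARKERS = (
    "out of memory",
    "Unable to find a valid cuDNN algorithm",
)


def find_working_batch_size(run_one_step, start=256, minimum=1):
    """Halve the batch size until run_one_step(batch_size) succeeds.

    run_one_step is a hypothetical callable that runs a single
    forward/backward pass and raises RuntimeError if the batch does not fit.
    """
    minimum = max(1, minimum)  # guard against looping forever at size 0
    batch_size = start
    while batch_size >= minimum:
        try:
            run_one_step(batch_size)
            return batch_size
        except RuntimeError as err:
            if not any(marker in str(err) for marker in OOM_MARKERS):
                raise  # unrelated error: do not hide it
            batch_size //= 2  # memory-related failure: try a smaller batch
    raise RuntimeError("no batch size >= %d fits in GPU memory" % minimum)
```

With a real PyTorch training loop, it is probably worth calling `torch.cuda.empty_cache()` between attempts so that memory cached by the failed step is released before retrying.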

With that, the problem was solved.

Troubleshooting checklist: reduce the batch size, check the code for bugs that make memory usage blow up, and lower the `num_workers` value.
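To see whether memory really is the bottleneck, or whether usage keeps growing because of a bug, one option is to poll `nvidia-smi` while training runs. The sketch below assumes `nvidia-smi` is on the `PATH` and that it reports plain integer MiB values. The helper names `parse_memory_csv` and `gpu_memory_usage` are hypothetical.

```python
import subprocess

# nvidia-smi query that prints one "used, total" line (in MiB) per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_csv(text):
    """Parse lines such as '31744, 32768' into (used_mib, total_mib) tuples."""
    rows = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        used, total = (int(field.strip()) for field in line.split(","))
        rows.append((used, total))
    return rows


def gpu_memory_usage():
    """Run nvidia-smi and return one (used_mib, total_mib) tuple per GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_memory_csv(result.stdout)


if __name__ == "__main__":
    for index, (used, total) in enumerate(gpu_memory_usage()):
        print("GPU %d: %d / %d MiB (%.0f%%)" % (index, used, total, 100 * used / total))
```

Running this every few seconds during the first epoch should show whether usage settles just under the card's limit, as it did here at a batch size of 128, or keeps climbing.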